At first, I resisted operating ZooKeeper. I had always known about it but had never actually run it myself. After reading the official documentation and experimenting for a while, all I can say is: "that's all there is to it~".

This article draws very little on other people's blog posts. It relies on the official ZooKeeper documentation and on the ZooKeeper tutorial in the official Kubernetes documentation: Running ZooKeeper, A Distributed System Coordinator.

I then upgraded start-zookeeper.sh to configure a newer ZooKeeper version and wrote zookeeper.yaml to deploy a ZooKeeper cluster on k8s. On second thought, deploying only to a single k8s cluster did not seem stable enough, so I also produced an ECS version. That is how zookeeper-ECS.sh came into being.

A simple deployment really is that easy, but you still have to understand the operations work that follows:

- If you don't know ZooKeeper's election strategy, do you know the special meaning and purpose of myid?

- If you don't know how clients connect, you can't design the cluster's domain names: do you need multiple domain names, do you need an SLB, and how do you handle network connectivity at this layer?

- If you don't set up monitoring and metrics, you won't know when the cluster goes down, and you will end up taking the blame!

- If you don't understand the reconfig feature in newer versions, you won't know that cluster members can be added and removed without downtime.

- ...

Ultimate operations: understanding ZooKeeper's election strategy

- The zxid (transaction ID) takes precedence: the server with the larger zxid wins. When zxids are equal, the server with the larger myid wins.

- I won't go into more detail. This comes from reading other people's blogs; I have not read the source code, but it matches what I see in practice. (When three nodes are initialized in sequence, the second one to start, myid=2, becomes the leader, because two of the three nodes already form a quorum and 2 is the larger myid of the two.)

- This raises a question for an architecture with two primary data centers plus a third data center: should the third data center get the smallest or the largest myid?

- If the third data center has the smallest myid, the leader will not land in the third data center. When one of the primary data centers fails, the leader may need to be re-elected;

- If the third data center has the largest myid, the leader will usually reside in the third data center. When a primary data center fails, no leader re-election is needed (unless it is the third data center itself that fails).
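The ordering described above can be sketched in a few lines of bash. This is only an illustration of the comparison rule as stated in this article (larger zxid first, then larger myid); the function names `vote_wins` and `pick_leader` are made up for this example and are not part of ZooKeeper, and the real election also involves an election epoch and a quorum of votes, which this sketch ignores.

```shell
#!/usr/bin/env bash
# vote_wins NEW_ZXID NEW_MYID CUR_ZXID CUR_MYID
# Succeeds (exit status 0) when the new vote beats the current one:
# a larger zxid wins, and on a zxid tie the larger myid wins.
vote_wins() {
  local new_zxid=$1 new_myid=$2 cur_zxid=$3 cur_myid=$4
  if (( new_zxid != cur_zxid )); then
    (( new_zxid > cur_zxid ))
    return   # returns the status of the comparison above
  fi
  (( new_myid > cur_myid ))
}

# pick_leader MYID:ZXID...
# Prints the myid that wins among the servers currently taking part.
pick_leader() {
  local best_id="" best_zxid="" pair id zxid
  for pair in "$@"; do
    id=${pair%%:*}
    zxid=${pair##*:}
    if [[ -z $best_id ]] || vote_wins "$zxid" "$id" "$best_zxid" "$best_id"; then
      best_id=$id
      best_zxid=$zxid
    fi
  done
  echo "$best_id"
}
```

For example, `pick_leader 1:0 2:0` prints 2, which matches the sequential start-up case: once nodes 1 and 2 are up with an empty log, they form a quorum and the larger myid wins.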

Ultimate operations: knowing how clients connect

org.apache.zookeeper.client.StaticHostProvider

// Shuffle the configured ZK server address list. The order given by the caller does not matter; it is randomized so that clients spread across the servers.
private List<InetSocketAddress> shuffle(Collection<InetSocketAddress> serverAddresses) {
    List<InetSocketAddress> tmpList = new ArrayList<>(serverAddresses.size());
    tmpList.addAll(serverAddresses);
    Collections.shuffle(tmpList, sourceOfRandomness);
    return tmpList;
}

// Resolve the host name of the selected ZK address. A host name can resolve to several IPs; after obtaining the IP list, shuffle it and take the first entry.
private InetSocketAddress resolve(InetSocketAddress address) {
    try {
        String curHostString = address.getHostString();
        List<InetAddress> resolvedAddresses = new ArrayList<>(Arrays.asList(this.resolver.getAllByName(curHostString)));
        if (resolvedAddresses.isEmpty()) {
            return address;
        }
        Collections.shuffle(resolvedAddresses);
        return new InetSocketAddress(resolvedAddresses.get(0), address.getPort());
    } catch (UnknownHostException e) {
        LOG.error("Unable to resolve address: {}", address.toString(), e);
        return address;
    }
}

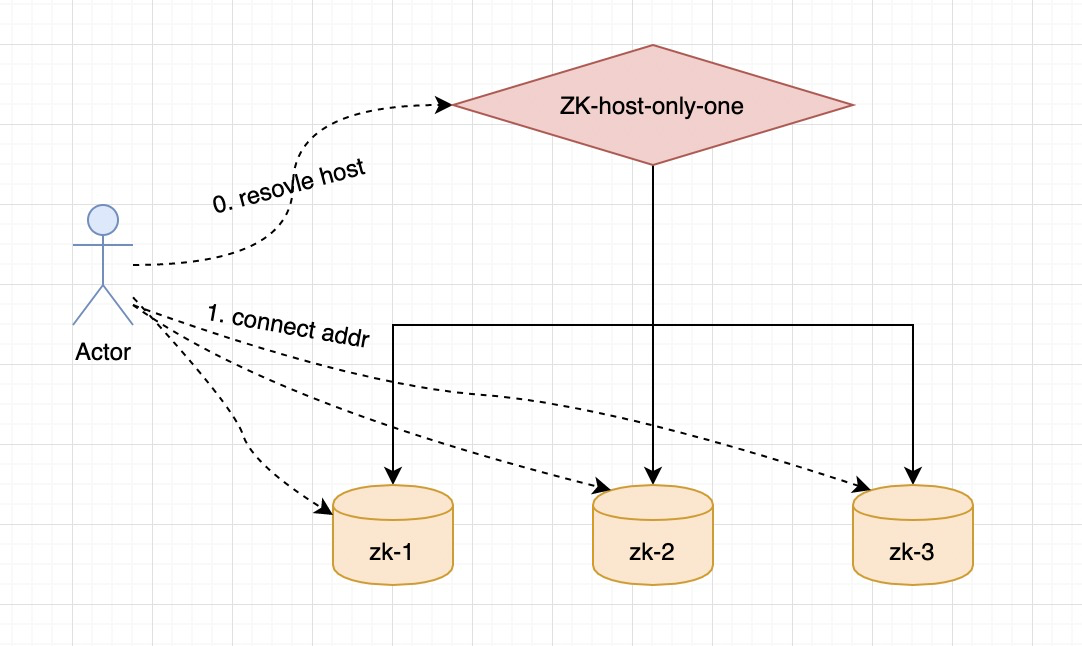

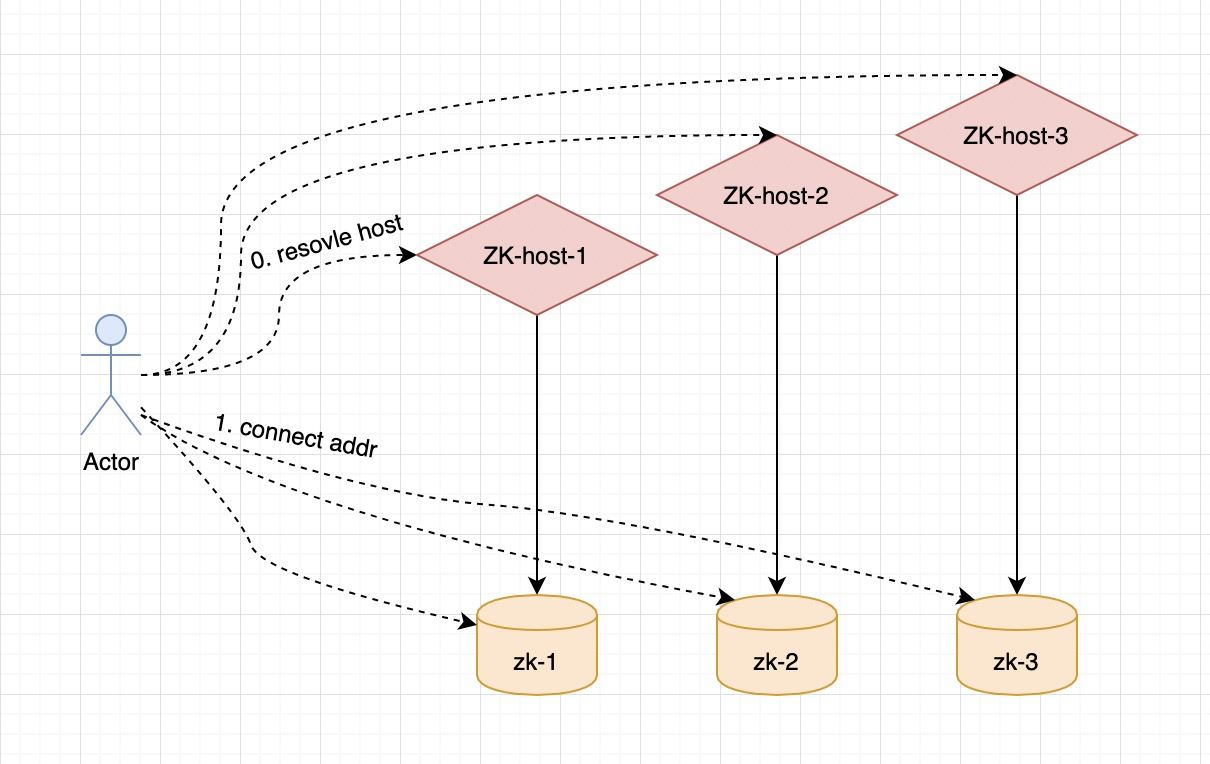

To summarize the address selection above: when clients reach the ZooKeeper cluster directly over the internal network, no SLB is needed in between; connect directly. Whether to use one domain name or several is covered in the next section.
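To get a feel for the "shuffle, then take the first" selection, here is a rough bash sketch. The helper names `pick_one` and `resolve_all` are hypothetical, `getent` is assumed to be available (glibc systems), and this only mimics the behaviour of the Java client; it is not how the client is implemented.

```shell
#!/usr/bin/env bash
# pick_one LIST
# Prints one random entry of a comma-separated list, which has the same
# effect as shuffling the list and taking the first element.
pick_one() {
  local IFS=','
  local -a items
  read -r -a items <<< "$1"
  (( ${#items[@]} > 0 )) || return 1
  echo "${items[RANDOM % ${#items[@]}]}"
}

# resolve_all HOSTNAME
# Prints every IPv4 address the host name resolves to, one per line.
resolve_all() {
  getent ahostsv4 "$1" | awk '{print $1}' | sort -u
}
```

Running `resolve_all` against the cluster's domain name shows which IPs a client may end up on, and `pick_one "10.1.2.200,10.1.3.78,10.1.4.111"` prints one of the three addresses at random.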

ZooKeeper cluster domain names

- Option 1 (recommended): use a single domain name that resolves to the IPs of all ZK nodes in the cluster. (When scaling out or in, only add or remove IPs on the DNS record.)

- Option 2: give each ZK node in the cluster its own domain name. (When scaling out or in, domain names must be added or removed, and the address list maintained by every client must be changed!)

- Of course, if you need traffic to converge per data center under special circumstances, consider that separately~
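The difference between the two options shows up in the connection string that clients have to carry. A small sketch follows; `connect_string` is a hypothetical helper, and the `*.example.internal` host names are placeholders, not names used anywhere in this setup.

```shell
#!/usr/bin/env bash
# connect_string PORT HOST...
# Joins the hosts into the "host1:port,host2:port" form that ZooKeeper
# clients accept as a connection string.
connect_string() {
  local port=$1
  shift
  local out="" host
  for host in "$@"; do
    out+="${out:+,}${host}:${port}"
  done
  echo "$out"
}

# Option 1: one domain name; membership changes happen in DNS only.
connect_string 2181 zk.example.internal

# Option 2: one domain name per node; every client config lists them all.
connect_string 2181 zk-1.example.internal zk-2.example.internal zk-3.example.internal
```

With option 1 the string never changes when nodes come and go; with option 2 every membership change means touching this string on all clients.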

Ultimate operations: monitoring + metrics

- Alert when disk usage reaches 80%

- Alert when port 2181 is down

- Prometheus scraping: port 7000, path /metrics
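As a rough starting point, the three checks above could be probed by hand with something like the following. All function names are made up for this sketch; it assumes `df`, `timeout` and `curl` are installed, that the metrics provider listens on port 7000 as configured in this article, and that the AdminServer is reachable on port 8080 as in the probes of zookeeper.yaml. A real setup would feed these into whatever alerting system you already run.

```shell
#!/usr/bin/env bash
# usage_exceeds USED_PERCENT THRESHOLD
# Succeeds when disk usage is at or above the threshold.
usage_exceeds() {
  (( $1 >= $2 ))
}

# disk_used_percent PATH
# Prints the used percentage (integer) of the filesystem holding PATH.
disk_used_percent() {
  df -P "$1" | awk 'NR==2 { sub(/%/, "", $5); print $5 }'
}

# port_open HOST PORT
# Succeeds when a TCP connection can be opened within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# admin_ruok HOST
# Queries the AdminServer "ruok" command over HTTP.
admin_ruok() {
  curl -fsS "http://$1:8080/commands/ruok"
}

# scrape_metrics HOST
# Fetches the Prometheus text output of the metrics provider.
scrape_metrics() {
  curl -fsS "http://$1:7000/metrics"
}
```

A cron job could then run, for example, `usage_exceeds "$(disk_used_percent /var/lib/zookeeper)" 80 && echo "disk alarm"` and `port_open 127.0.0.1 2181 || echo "2181 down"`.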

Ultimate operations: reconfig, adding and removing cluster members without downtime

- zk-Reconfig: a particularly good fit for the ECS scenario; scaling out and in is nothing to fear anymore~

- [Example] Remove server.1

[zk: localhost:2181(CONNECTED) 8] reconfig help
reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]
[zk: localhost:2181(CONNECTED) 9] reconfig -remove 1
Committed new configuration:
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
version=100000003
[zk: localhost:2181(CONNECTED) 10] config
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
version=100000003
[zk: localhost:2181(CONNECTED) 11] quit
[root@zookeeper-1 conf]# ll
total 32
-rw-r--r-- 1 1000 1000 535 Apr 8 2021 configuration.xsl
-rw-r--r-- 1 root root 264 Nov 9 06:43 dynamicConfigFile.cfg.dynamic
-rw-r--r-- 1 root root 84 Nov 9 06:43 java.env
-rw-r--r-- 1 1000 1000 3452 Nov 9 06:43 log4j.properties
-rw-r--r-- 1 root root 553 Nov 9 07:59 zoo.cfg
-rw-r--r-- 1 root root 323 Nov 9 06:43 zoo.cfg.dynamic.100000000
-rw-r--r-- 1 root root 215 Nov 9 07:59 zoo.cfg.dynamic.100000003
-rw-r--r-- 1 1000 1000 1148 Apr 8 2021 zoo_sample.cfg
[root@zookeeper-1 conf]# cat dynamicConfigFile.cfg.dynamic
server.1=zookeeper-0.zookeeper-hs.default.svc.cluster.local:2888:3888;2181
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888;2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888;2181
[root@zookeeper-1 conf]# cat zoo.cfg.dynamic.100000000
server.1=zookeeper-0.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
[root@zookeeper-1 conf]# cat zoo.cfg.dynamic.100000003
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181

- [Example] Add server.1 back

[zk: localhost:2181(CONNECTED) 0] config
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
version=100000003
[zk: localhost:2181(CONNECTED) 2] reconfig -add server.1=zookeeper-0.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
Committed new configuration:
server.1=zookeeper-0.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
version=100000008
[zk: localhost:2181(CONNECTED) 3] config
server.1=zookeeper-0.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.2=zookeeper-1.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
server.3=zookeeper-2.zookeeper-hs.default.svc.cluster.local:2888:3888:participant;0.0.0.0:2181
version=100000008
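One thing worth keeping in mind while removing members: a majority of the ensemble must stay up, so a two-member ensemble tolerates no failure at all. The arithmetic, plus a small helper that lists the member ids found in a dynamic config file, might look like this. The function names are invented for this sketch and the file format is assumed to be the `server.N=...` lines shown in the examples above.

```shell
#!/usr/bin/env bash
# quorum_size N
# Prints the number of members needed for a majority of N.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N
# Prints how many of N members may fail while a majority remains.
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# member_ids FILE
# Prints the ids of all "server.N=" lines in a dynamic config file.
member_ids() {
  sed -n 's/^server\.\([0-9][0-9]*\)=.*/\1/p' "$1"
}
```

For the examples above: with three members the quorum is 2 and one failure is tolerated; after `reconfig -remove 1` the quorum is still 2 but no failure is tolerated, so it makes sense to add a member back soon.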

Appendix

zookeeper-ECS.sh

#!/bin/bash

##

## Server list, e.g. 10.1.2.200,10.1.3.78,10.1.4.111. For a deployment across three data centers, use a 2+2+1 layout. It is recommended to list the single node of the third data center first, so that its myid=1.

## The script uses bash arrays, so run it with bash: `bash zookeeper-ECS.sh [server-list]`

##

USER=`whoami`

CUR_HOST_IP=`hostname -i`

CUR_PWD=`pwd`

ZK_VERSION="3.6.3"

ZK_TAR_GZ="apache-zookeeper-${ZK_VERSION}-bin.tar.gz"

ZK_PACKAGE_DIR="apache-zookeeper-${ZK_VERSION}-bin"

MYID="1"

SERVER_PORT="2888"

ELECTION_PORT="3888"

CLIENT_PORT="2181"

SERVERS=${1:-${CUR_HOST_IP}}

if [ ! -f ${ZK_TAR_GZ} ]; then

wget --no-check-certificate https://dlcdn.apache.org/zookeeper/zookeeper-${ZK_VERSION}/${ZK_TAR_GZ}

fi

if [ ! -f ${ZK_TAR_GZ} ]; then

echo "error: has no ${ZK_TAR_GZ}"

exit 1;

fi

tar -zxvf ${ZK_TAR_GZ}

ZOO_CFG="${ZK_PACKAGE_DIR}/conf/zoo.cfg"

ZOO_CFG_DYNAMIC="${ZK_PACKAGE_DIR}/conf/dynamicConfigFile.cfg.dynamic"

ZOO_JAVA_ENV="${ZK_PACKAGE_DIR}/conf/java.env"

function print_zoo_cfg() {

echo """

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=${CUR_PWD}/zkdata

dataLogDir=${CUR_PWD}/zkdata_log

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

maxClientCnxns=2000

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

autopurge.purgeInterval=1

#audit.enable=true

## Metrics Providers @see https://prometheus.io Metrics Exporter

metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

metricsProvider.httpPort=7000

metricsProvider.exportJvmInfo=true

## Reconfig Enabled @see https://zookeeper.apache.org/doc/current/zookeeperReconfig.html

reconfigEnabled=true

dynamicConfigFile=${CUR_PWD}/${ZOO_CFG_DYNAMIC}

skipACL=yes

""" > ${ZOO_CFG}

}

## server list

function print_servers_to_zoo_cfg_dynamic() {

# Store old delimiters first

OLD_IFS="$IFS"

# Setting the Configurations separator

IFS=","

SERVERS_ARR=(${SERVERS})

SERVERS_ARR_LEN=${#SERVERS_ARR[@]}

# Restore original separator

IFS="$OLD_IFS"

echo "## Cluster member list. The role is optional and can be participant or observer (participant by default)." > ${ZOO_CFG_DYNAMIC}

for (( i=0; i < ${SERVERS_ARR_LEN}; i++ ))

do

## Judge ip equality and obtain myid

if [ "${SERVERS_ARR[${i}]}" == "${CUR_HOST_IP}" ]; then

MYID=$((i+1))

fi

echo "server.$((i+1))=${SERVERS_ARR[${i}]}:$SERVER_PORT:$ELECTION_PORT;$CLIENT_PORT" >> ${ZOO_CFG_DYNAMIC}

done

}

## ZK_SERVER_HEAP

function print_zoo_java_env() {

ZK_SERVER_HEAP_MEM=1000

pyhmem=`free -m |grep "Mem:" |awk '{print $2}'`

if [[ ${pyhmem} -gt 3666 ]]; then

ZK_SERVER_HEAP_MEM=$((1024 * 3))

elif [[ ${pyhmem} -gt 1888 ]]; then

ZK_SERVER_HEAP_MEM=$((1024 * 3/2))

fi

echo """

### The default value is 1000. The value is the -Xmx size in MB; do not append a unit yourself, i.e. 1000 becomes -Xmx1000m

ZK_SERVER_HEAP=${ZK_SERVER_HEAP_MEM}

### Turn off JMX

JMXDISABLE=true

""" > ${ZOO_JAVA_ENV}

}

function create_zoo_data_dir() {

mkdir -p ${CUR_PWD}/zkdata ${CUR_PWD}/zkdata_log

echo ${MYID} > "${CUR_PWD}/zkdata/myid"

}

print_zoo_cfg && print_servers_to_zoo_cfg_dynamic && print_zoo_java_env && create_zoo_data_dir && sh ${ZK_PACKAGE_DIR}/bin/zkServer.sh start
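The myid assignment in the script above boils down to "position of this host's IP in the server list, counted from 1". Isolated as a function it might look like the sketch below. `myid_for_ip` is a name invented for this example; unlike the script, which silently keeps myid=1 when the IP is not in the list, this sketch reports failure in that case.

```shell
#!/usr/bin/env bash
# myid_for_ip SERVER_LIST IP
# Prints the 1-based position of IP in the comma-separated SERVER_LIST.
# Fails (exit status 1) when the IP is not part of the list.
myid_for_ip() {
  local IFS=','
  local -a servers
  read -r -a servers <<< "$1"
  local i
  for i in "${!servers[@]}"; do
    if [[ ${servers[i]} == "$2" ]]; then
      echo $(( i + 1 ))
      return 0
    fi
  done
  return 1
}
```

With the example list from the script header, `myid_for_ip "10.1.2.200,10.1.3.78,10.1.4.111" 10.1.3.78` prints 2. The same list, in the same order, has to be passed on every node, otherwise the nodes disagree about who is who.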

zookeeper.yaml

### @see https://kubernetes.io/zh/docs/tutorials/stateful-application/zookeeper/ (section on creating a ZooKeeper ensemble)
#### 1. Rename zk -> zookeeper
#### 2. Change the start-zookeeper command
#### 3. Change podManagementPolicy: OrderedReady -> Parallel
#### 4. Use the custom image cherish/zookeeper

apiVersion: v1
kind: Service
metadata:
  name: zookeeper-hs
  labels:
    app: zookeeper
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zookeeper

---

apiVersion: v1
kind: Service
metadata:
  name: zookeeper-cs
  labels:
    app: zookeeper
spec:
  ports:
  - port: 2181
    name: client
  selector:
    app: zookeeper

---

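## policy/v1beta1 was removed in Kubernetes 1.25; on Kubernetes 1.21 and later use policy/v1 instead.
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: zookeeper-pdb
spec:
  selector:
    matchLabels:
      app: zookeeper
  maxUnavailable: 1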

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zookeeper
spec:
  selector:
    matchLabels:
      app: zookeeper
  serviceName: zookeeper-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel ## Parallel creates all three pods at once. With OrderedReady, one pod is created first and each following pod is created only after the previous one is ready.
  template:
    metadata:
      labels:
        app: zookeeper
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                    - zookeeper
              topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: Always
        image: "cherish/zookeeper:3.6.3-20211121.154529" ## Custom image
        resources:
          requests:
            memory: "2Gi"
            cpu: "2"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "/opt/zookeeper/bin/start-zookeeper.sh \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data_log \
          --log_dir=/var/log/zookeeper \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=1536 \
          --max_client_cnxns=2000 \
          --snap_retain_count=3 \
          --purge_interval=3 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "curl 127.0.0.1:8080/commands/ruok"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "curl 127.0.0.1:8080/commands/ruok"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: zkdatadir
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: zkdatadir
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 20Gi

## ----- The no-PVC variant has been dropped from this commit for now. Running without a PVC is suitable only as an example; a production environment still has to provide one.
#         volumeMounts:
#         - name: zkdatadir
#           mountPath: /var/lib/zookeeper
#       securityContext:
#         runAsUser: 1000
#         fsGroup: 1000
#   volumeClaimTemplates:
#   - metadata:
#       name: zkdatadir
#     spec:
#       accessModes: [ "ReadWriteOnce" ]
#       resources:
#         requests:
#           storage: 20Gi
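With the headless service zookeeper-hs, each pod of the StatefulSet gets a stable DNS name, and these are the names that show up in the server.N lines of the reconfig examples. A sketch of how the name is composed follows; `pod_fqdn` is a hypothetical helper, and the `cluster.local` suffix is an assumption taken from the output shown earlier (your cluster domain may differ).

```shell
#!/usr/bin/env bash
# pod_fqdn STATEFULSET ORDINAL SERVICE [NAMESPACE]
# Prints the DNS name a StatefulSet pod gets behind a headless service.
# The cluster domain "cluster.local" is assumed here.
pod_fqdn() {
  local set_name=$1 ordinal=$2 service=$3 namespace=${4:-default}
  echo "${set_name}-${ordinal}.${service}.${namespace}.svc.cluster.local"
}

# Names of the three replicas defined in zookeeper.yaml:
for ordinal in 0 1 2; do
  pod_fqdn zookeeper "$ordinal" zookeeper-hs
done
```

Clients inside the cluster would normally go through the zookeeper-cs service on port 2181 instead; the per-pod names matter for the quorum and election ports.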

start-zookeeper.sh

#!/usr/bin/env bash

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

#

# Usage: start-zookeeper [OPTIONS]
# Starts a ZooKeeper server based on the supplied options.
#     --servers              The number of servers in the ensemble. The default
#                            value is 1.
#     --data_dir             The directory where the ZooKeeper process will store its
#                            snapshots. The default is /var/lib/zookeeper/data.
#     --data_log_dir         The directory where the ZooKeeper process will store its
#                            write ahead log. The default is
#                            /var/lib/zookeeper/data_log.
#     --log_dir              The directory where the ZooKeeper process will store its
#                            log. The default is /var/log/zookeeper.
#     --conf_dir             The directory where the ZooKeeper process will store its
#                            configuration. The default is /opt/zookeeper/conf.
#     --client_port          The port on which the ZooKeeper process will listen for
#                            client requests. The default is 2181.
#     --election_port        The port on which the ZooKeeper process will perform
#                            leader election. The default is 3888.
#     --server_port          The port on which the ZooKeeper process will listen for
#                            requests from other servers in the ensemble. The
#                            default is 2888.
#     --tick_time            The length of a ZooKeeper tick in ms. The default is
#                            2000.
#     --init_limit           The number of ticks that the initial synchronization
#                            phase can take. The default is 10.
#     --sync_limit           The number of ticks that can pass between sending a
#                            request and getting an acknowledgement. The default is 5.
#     --jvm_props            Extra JVM options. Must not contain the Xmx and Xms
#                            parameters. Not set by default.
#     --heap                 The maximum amount of heap to use, in MB and without a
#                            unit, e.g. --heap=2048. The default is 2048.
#     --max_client_cnxns     The maximum number of client connections that the
#                            ZooKeeper process will accept simultaneously. The
#                            default is 60.
#     --snap_retain_count    The maximum number of snapshots the ZooKeeper process
#                            will retain if purge_interval is greater than 0. The
#                            default is 3.
#     --purge_interval       The number of hours the ZooKeeper process will wait
#                            between purging its old snapshots. If set to 0 old
#                            snapshots will never be purged. The default is 1.
#     --max_session_timeout  The maximum time in milliseconds for a client session
#                            timeout. The default value is 20 * tick time.
#     --min_session_timeout  The minimum time in milliseconds for a client session
#                            timeout. The default value is 2 * tick time.
#     --log_level            The log level for the ZooKeeper server. Either FATAL,
#                            ERROR, WARN, INFO, DEBUG. The default is INFO.
#     --audit_enable         Value for audit.enable. The default is false.
#     --metrics_port         Value for metricsProvider.httpPort. The default is 7000.

USER=`whoami`

HOST=`hostname -s`

DOMAIN=`hostname -d`

LOG_LEVEL=INFO

DATA_DIR="/var/lib/zookeeper/data"

DATA_LOG_DIR="/var/lib/zookeeper/data_log"

LOG_DIR="/var/log/zookeeper"

CONF_DIR="/opt/zookeeper/conf"

CLIENT_PORT=2181

SERVER_PORT=2888

ELECTION_PORT=3888

TICK_TIME=2000

INIT_LIMIT=10

SYNC_LIMIT=5

JVM_OPTS=

HEAP=2048

MAX_CLIENT_CNXNS=60

SNAP_RETAIN_COUNT=3

PURGE_INTERVAL=1

SERVERS=1

ADMINSERVER_ENABLED=true

AUDIT_ENABLE=false

METRICS_PORT=7000

JMX_DISABLE=true

function print_usage() {

echo "\

Usage: start-zookeeper [OPTIONS]
Starts a ZooKeeper server based on the supplied options.
    --servers              The number of servers in the ensemble. The default
                           value is 1.
    --data_dir             The directory where the ZooKeeper process will store its
                           snapshots. The default is /var/lib/zookeeper/data.
    --data_log_dir         The directory where the ZooKeeper process will store its
                           write ahead log. The default is /var/lib/zookeeper/data_log.
    --log_dir              The directory where the ZooKeeper process will store its log.
                           The default is /var/log/zookeeper.
    --conf_dir             The directory where the ZooKeeper process will store its
                           configuration. The default is /opt/zookeeper/conf.
    --client_port          The port on which the ZooKeeper process will listen for
                           client requests. The default is 2181.
    --election_port        The port on which the ZooKeeper process will perform
                           leader election. The default is 3888.
    --server_port          The port on which the ZooKeeper process will listen for
                           requests from other servers in the ensemble. The
                           default is 2888.
    --tick_time            The length of a ZooKeeper tick in ms. The default is
                           2000.
    --init_limit           The number of ticks that the initial synchronization
                           phase can take. The default is 10.
    --sync_limit           The number of ticks that can pass between sending a
                           request and getting an acknowledgement. The default is 5.
    --jvm_props            Extra JVM options. Must not contain the Xmx and Xms
                           parameters, e.g. -XX:+UseG1GC -XX:MaxGCPauseMillis=200.
                           Not set by default.
    --heap                 The maximum amount of heap to use, in MB and without a
                           unit, e.g. --heap=2048. The default is 2048.
    --max_client_cnxns     The maximum number of client connections that the
                           ZooKeeper process will accept simultaneously. The
                           default is 60.
    --snap_retain_count    The maximum number of snapshots the ZooKeeper process
                           will retain if purge_interval is greater than 0. The
                           default is 3.
    --purge_interval       The number of hours the ZooKeeper process will wait
                           between purging its old snapshots. If set to 0 old
                           snapshots will never be purged. The default is 1.
    --max_session_timeout  The maximum time in milliseconds for a client session
                           timeout. The default value is 20 * tick time.
    --min_session_timeout  The minimum time in milliseconds for a client session
                           timeout. The default value is 2 * tick time.
    --log_level            The log level for the ZooKeeper server. Either FATAL,
                           ERROR, WARN, INFO, DEBUG. The default is INFO.
    --audit_enable         Value for audit.enable. The default is false.
    --metrics_port         Value for metricsProvider.httpPort. The default is 7000.

"

}

function create_data_dirs() {

if [ ! -d $DATA_DIR ]; then

mkdir -p $DATA_DIR

chown -R $USER:$USER $DATA_DIR

fi

if [ ! -d $DATA_LOG_DIR ]; then

mkdir -p $DATA_LOG_DIR

chown -R $USER:$USER $DATA_LOG_DIR

fi

if [ ! -d $LOG_DIR ]; then

mkdir -p $LOG_DIR

chown -R $USER:$USER $LOG_DIR

fi

if [ ! -f $ID_FILE ] && [ $SERVERS -gt 1 ]; then

echo $MY_ID >> $ID_FILE

fi

}

function print_servers() {

for (( i=1; i<=$SERVERS; i++ ))

do

echo "server.$i=$NAME-$((i-1)).$DOMAIN:$SERVER_PORT:$ELECTION_PORT;$CLIENT_PORT"

done

}

function create_config() {

rm -f $CONFIG_FILE

echo "# This file was autogenerated DO NOT EDIT" >> $CONFIG_FILE

echo "clientPort=$CLIENT_PORT" >> $CONFIG_FILE

echo "dataDir=$DATA_DIR" >> $CONFIG_FILE

echo "dataLogDir=$DATA_LOG_DIR" >> $CONFIG_FILE

echo "tickTime=$TICK_TIME" >> $CONFIG_FILE

echo "initLimit=$INIT_LIMIT" >> $CONFIG_FILE

echo "syncLimit=$SYNC_LIMIT" >> $CONFIG_FILE

echo "maxClientCnxns=$MAX_CLIENT_CNXNS" >> $CONFIG_FILE

echo "minSessionTimeout=$MIN_SESSION_TIMEOUT" >> $CONFIG_FILE

echo "maxSessionTimeout=$MAX_SESSION_TIMEOUT" >> $CONFIG_FILE

echo "autopurge.snapRetainCount=$SNAP_RETAIN_COUNT" >> $CONFIG_FILE

echo "autopurge.purgeInterval=$PURGE_INTERVAL" >> $CONFIG_FILE

echo "admin.enableServer=$ADMINSERVER_ENABLED" >> $CONFIG_FILE

echo "audit.enable=${AUDIT_ENABLE}" >> $CONFIG_FILE

echo "## Metrics Providers @see https://prometheus.io Metrics Exporter" >> $CONFIG_FILE

echo "metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider" >> $CONFIG_FILE

echo "metricsProvider.exportJvmInfo=true" >> $CONFIG_FILE

echo "metricsProvider.httpPort=${METRICS_PORT}" >> $CONFIG_FILE

echo "## Reconfig Enabled @see https://zookeeper.apache.org/doc/current/zookeeperReconfig.html" >> $CONFIG_FILE

echo "reconfigEnabled=true" >> $CONFIG_FILE

echo "dynamicConfigFile=${DINAMIC_CONF_FILE}" >> $CONFIG_FILE

echo "skipACL=yes" >> $CONFIG_FILE

if [ $SERVERS -gt 1 ]; then

print_servers >> $DINAMIC_CONF_FILE

fi

cat $CONFIG_FILE >&2

cat $DINAMIC_CONF_FILE >&2

}

function create_jvm_props() {

rm -f $JAVA_ENV_FILE

echo "ZOO_LOG_DIR=$LOG_DIR" >> $JAVA_ENV_FILE

echo "SERVER_JVMFLAGS=${JVM_OPTS}" >> $JAVA_ENV_FILE

echo "ZK_SERVER_HEAP=${HEAP}" >> $JAVA_ENV_FILE

echo "JMXDISABLE=${JMX_DISABLE}" >> $JAVA_ENV_FILE

}

function change_log_props() {

sed -i "s!zookeeper\.log\.dir=\.!zookeeper\.log\.dir=${LOG_DIR}!g" $LOGGER_PROPS_FILE

}

optspec=":hv-:"

while getopts "$optspec" optchar; do

case "${optchar}" in

-)

case "${OPTARG}" in

servers=*)

SERVERS=${OPTARG##*=}

;;

data_dir=*)

DATA_DIR=${OPTARG##*=}

;;

data_log_dir=*)

DATA_LOG_DIR=${OPTARG##*=}

;;

log_dir=*)

LOG_DIR=${OPTARG##*=}

;;

conf_dir=*)

CONF_DIR=${OPTARG##*=}

;;

client_port=*)

CLIENT_PORT=${OPTARG##*=}

;;

election_port=*)

ELECTION_PORT=${OPTARG##*=}

;;

server_port=*)

SERVER_PORT=${OPTARG##*=}

;;

tick_time=*)

TICK_TIME=${OPTARG##*=}

;;

init_limit=*)

INIT_LIMIT=${OPTARG##*=}

;;

sync_limit=*)

SYNC_LIMIT=${OPTARG##*=}

;;

jvm_props=*)

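JVM_OPTS=${OPTARG#*=} # strip only up to the first "=", since JVM options such as -XX:MaxGCPauseMillis=200 contain "=" themselves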

;;

heap=*)

HEAP=${OPTARG##*=}

;;

max_client_cnxns=*)

MAX_CLIENT_CNXNS=${OPTARG##*=}

;;

snap_retain_count=*)

SNAP_RETAIN_COUNT=${OPTARG##*=}

;;

purge_interval=*)

PURGE_INTERVAL=${OPTARG##*=}

;;

max_session_timeout=*)

MAX_SESSION_TIMEOUT=${OPTARG##*=}

;;

min_session_timeout=*)

MIN_SESSION_TIMEOUT=${OPTARG##*=}

;;

log_level=*)

LOG_LEVEL=${OPTARG##*=}

;;

audit_enable=*)

AUDIT_ENABLE=${OPTARG##*=}

;;

metrics_port=*)

METRICS_PORT=${OPTARG##*=}

;;

*)

echo "Unknown option --${OPTARG}" >&2

exit 1

;;

esac;;

h)

print_usage

exit

;;

v)

echo "Parsing option: '-${optchar}'" >&2

;;

*)

if [ "$OPTERR" != 1 ] || [ "${optspec:0:1}" = ":" ]; then

echo "Non-option argument: '-${OPTARG}'" >&2

fi

;;

esac

done

MIN_SESSION_TIMEOUT=${MIN_SESSION_TIMEOUT:-$((TICK_TIME*2))}

MAX_SESSION_TIMEOUT=${MAX_SESSION_TIMEOUT:-$((TICK_TIME*20))}

ID_FILE="$DATA_DIR/myid"

CONFIG_FILE="$CONF_DIR/zoo.cfg"

DINAMIC_CONF_FILE="$CONF_DIR/dynamicConfigFile.cfg.dynamic"

LOGGER_PROPS_FILE="$CONF_DIR/log4j.properties"

JAVA_ENV_FILE="$CONF_DIR/java.env"

if [[ $HOST =~ (.*)-([0-9]+)$ ]]; then

NAME=${BASH_REMATCH[1]}

ORD=${BASH_REMATCH[2]}

else

echo "Failed to parse name and ordinal of Pod"

exit 1

fi

MY_ID=$((ORD+1))

create_config && create_jvm_props && change_log_props && create_data_dirs && exec ./bin/zkServer.sh start-foreground
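The key trick in start-zookeeper.sh is deriving myid from the pod's host name: StatefulSet ordinals start at 0, myid has to be at least 1, so the script adds one. Pulled out into a function, that step might look like the sketch below. `myid_from_hostname` is a name chosen for this example; the `10#` prefix is an addition of this sketch that forces base-10 so an ordinal with a leading zero is not read as octal.

```shell
#!/usr/bin/env bash
# myid_from_hostname HOSTNAME
# Prints ordinal+1 for host names of the form <name>-<ordinal>.
# Fails (exit status 1) when the host name does not end in -<number>.
myid_from_hostname() {
  if [[ $1 =~ ^(.*)-([0-9]+)$ ]]; then
    echo $(( 10#${BASH_REMATCH[2]} + 1 ))
  else
    return 1
  fi
}
```

So `myid_from_hostname zookeeper-0` prints 1 and `myid_from_hostname zookeeper-2` prints 3, which lines up with server.1 to server.3 in the generated dynamic config. It also explains why the script refuses to start on a host that is not named in the `<name>-<ordinal>` style.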

Dockerfile

FROM apaas/edas:latest

MAINTAINER Cherish "785427346@qq.com"

# set environment

ENV CLIENT_PORT=2181 \

SERVER_PORT=2888 \

ELECTION_PORT=3888 \

BASE_DIR="/opt/zookeeper"

WORKDIR ${BASE_DIR}

# add zookeeper-?-bin.tar.gz file, override by --build-arg ZK_VERSION=3.6.3

ARG ZK_VERSION=3.6.3

RUN wget --no-check-certificate "https://dlcdn.apache.org/zookeeper/zookeeper-${ZK_VERSION}/apache-zookeeper-${ZK_VERSION}-bin.tar.gz" \

&& tar -zxf apache-zookeeper-${ZK_VERSION}-bin.tar.gz --strip-components 1 -C ./ \

&& rm -rf apache-zookeeper-${ZK_VERSION}-bin.tar.gz

ADD ./start-zookeeper.sh ${BASE_DIR}/bin/start-zookeeper.sh

RUN chmod +x ${BASE_DIR}/bin/start-zookeeper.sh

EXPOSE ${CLIENT_PORT} ${SERVER_PORT} ${ELECTION_PORT}

# examples START_ZOOKEEPER_COMMAMDS=--servers=3 --data_dir=/var/lib/zookeeper/data --data_log_dir=/var/lib/zookeeper/data/data_log --conf_dir=/opt/zookeeper/conf --client_port=2181 --election_port=3888 --server_port=2888 --tick_time=2000 --init_limit=10 --sync_limit=5 --heap=1535 --max_client_cnxns=1000 --snap_retain_count=3 --purge_interval=12 --min_session_timeout=4000 --max_session_timeout=40000 --log_level=INFO

ENTRYPOINT ${BASE_DIR}/bin/start-zookeeper.sh ${START_ZOOKEEPER_COMMAMDS}