Learning notes and development of JUC

- Java JUC

- 1 Introduction to Java JUC

- 2 volatile keyword - memory visibility

- 3 atomic variables and CAS algorithm

- 4 ConcurrentHashMap

- 5 countdownlatch

- 6 implement Callable interface

- 7 -Lock synchronization lock

- 8 Condition control thread communication

- 9 threads alternating in order

- 10 ReadWriteLock

- 11 thread 8 lock

- 12 thread pool

- 12.1 introduction to line pool

- 12.2 architecture of thread pool

- 12.3 tool class: Executors

- 12.4 thread scheduling

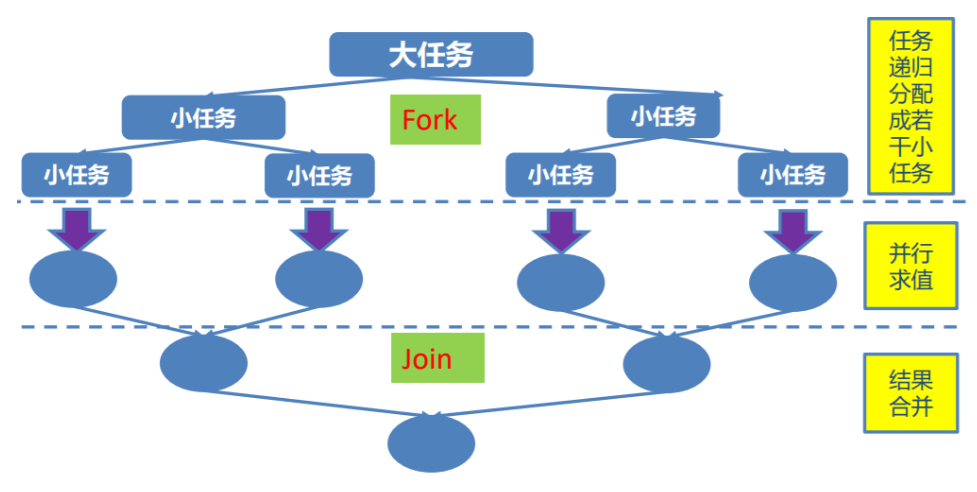

- 13 ForkJoinPool branch / merge framework work theft

Java JUC

1 Introduction to Java JUC

Java 5.0 introduced the java.util.concurrent package (JUC), which adds utility classes that are very commonly used in concurrent programming. It defines thread-like custom subsystems, including thread pools, asynchronous I/O, and a lightweight task framework. It provides tunable, flexible thread pools, as well as Collection implementations designed for multithreaded contexts.

2 volatile keyword - memory visibility

2.1 memory visibility

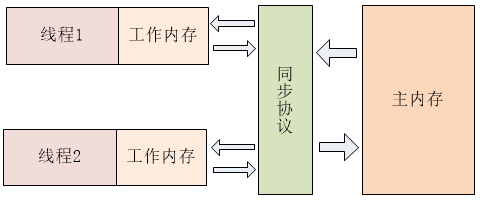

The Java Memory Model stipulates that variables shared by multiple threads are stored in main memory. Each thread has its own independent working memory and can access only its own working memory, not that of other threads. Working memory holds a copy of the shared variables from main memory. To operate on a shared variable, a thread operates only on the copy in its working memory and synchronizes the result back to main memory afterwards. The model is roughly as shown in the figure above.

The model stipulates that: 1) all operations of a thread on shared variables must be carried out in its own working memory, and the thread cannot read or write main memory directly; 2) threads cannot directly access variables in each other's working memory, and variable values are passed between threads through main memory. As a consequence, a thread's modification of a shared variable may not be written back to main memory immediately, or a thread may not refresh the latest value of the shared variable into its working memory immediately, so the value the thread uses may be stale. This is the memory visibility problem.

Memory visibility means that when one thread is using an object's state while another thread is modifying that state, the modification made by one thread must be visible to the other threads.

A visibility error occurs when the read and the write execute in different threads: there is no guarantee that the reading thread sees the value written by another thread in a timely manner, and sometimes it never sees it at all.

public class TestVolatile {
    public static void main(String[] args) {
        ThreadDemo td = new ThreadDemo();
        new Thread(td).start();

        // Without volatile, the main thread may never observe flag == true.
        while (true) {
            if (td.isFlag()) {
                System.out.println("------------------");
                break;
            }
        }
    }
}

class ThreadDemo implements Runnable {
    private boolean flag = false;

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
        }
        flag = true;
        System.out.println("flag=" + isFlag());
    }

    public boolean isFlag() {
        return flag;
    }

    public void setFlag(boolean flag) {
        this.flag = flag;
    }
}

// Output:
// flag=true

2.2 volatile keyword

Java provides a weaker synchronization mechanism, the volatile variable, to ensure that updates to a variable are propagated to other threads. When a shared variable is declared volatile, a thread that modifies it immediately flushes the new value back to main memory and invalidates the copies cached by other threads, so those threads re-read the value from main memory the next time they read it (compare cache coherence). A read of a volatile variable therefore always returns the most recently written value.

volatile prevents certain JVM optimizations (instruction reordering) around the variable, so accessing it is somewhat slower than accessing an ordinary variable.

// If the field is declared as:
private volatile boolean flag = false;

// Output:
// flag=true
// ------------------

The main functions of the volatile keyword are:

- Ensuring memory visibility of the variable

- Preventing instruction reordering around the variable

volatile can be regarded as a lightweight lock, but it differs from a lock:

- It is not mutually exclusive: several threads can access the variable at the same time

- It cannot guarantee the atomicity of operations on the variable's state

3 atomic variables and CAS algorithm

3.1 atomic variables

3.1.1 atomicity of i++

import java.util.concurrent.atomic.AtomicInteger;

public class TestAtomicDemo {
    public static void main(String[] args) {
        AtomicDemo ad = new AtomicDemo();
        for (int i = 0; i < 10; i++) {
            new Thread(ad).start();
        }
    }
}

class AtomicDemo implements Runnable {
    // private volatile int serialNumber = 0;
    private AtomicInteger serialNumber = new AtomicInteger(0);

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
        }
        System.out.print(getSerialNumber() + " ");
    }

    public int getSerialNumber() {
        return serialNumber.getAndIncrement();
    }
}

// Sample output (no value is repeated):
// 1 3 2 0 4 6 5 7 8 9

// If changed to:
class AtomicDemo implements Runnable {
    private volatile int serialNumber = 0;
    // private AtomicInteger serialNumber = new AtomicInteger(0);

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
        }
        System.out.print(getSerialNumber() + " ");
    }

    public int getSerialNumber() {
        return serialNumber++; // read, add, write: not atomic even though the field is volatile
        // return serialNumber.getAndIncrement();
    }
}

// Sample output (0 appears twice):
// 0 4 3 2 1 0 5 6 7 8

3.1.2 atomic variables

What is the simplest and most effective way to implement a global auto-incrementing id? The java.util.concurrent.atomic package defines atomic variable classes for several common types. These atomic variables provide a lock-free way to achieve thread safety.

The classes in this package provide the same memory semantics as volatile variables, together with methods such as boolean compareAndSet(expectedValue, updateValue).

Achieving thread safety without locks may seem inconceivable. It works because these classes rely on the CPU's compare-and-swap instruction; with this hardware support, no lock is needed.

Core method: boolean compareAndSet(expectedValue, updateValue)

- Atomic variable classes are named AtomicXxx; for example, the AtomicInteger class represents an int variable.

- Scalar atomic variable classes: AtomicInteger, AtomicLong, and AtomicBoolean support atomic operations on the primitive types int, long, and boolean, respectively. AtomicReference handles reference types when a reference variable needs to be updated atomically.

- Atomic array classes: AtomicIntegerArray, AtomicLongArray, and AtomicReferenceArray represent arrays of int, long, and reference types whose elements can be updated atomically.
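The following is a minimal sketch of how compareAndSet and the atomic classes listed above might be used. The class name AtomicCasDemo and its helper methods are illustrative assumptions, not part of the original notes; only the java.util.concurrent.atomic calls are real API.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

// Hypothetical demo class; the names are chosen for illustration only.
class AtomicCasDemo {

    // Doubles the value atomically with a CAS retry loop:
    // read the current value, compute the new one, retry if another thread interfered.
    static int doubleAtomically(AtomicInteger value) {
        for (;;) {
            int current = value.get();
            int next = current * 2;
            if (value.compareAndSet(current, next)) {
                return next;
            }
        }
    }

    // Increments one shared counter from several threads and returns the final value.
    static int countWithThreads(int threads, int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.getAndIncrement();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        AtomicInteger value = new AtomicInteger(5);
        System.out.println(value.compareAndSet(5, 6)); // true: the value was 5
        System.out.println(value.compareAndSet(5, 7)); // false: the value is now 6
        System.out.println(doubleAtomically(value));   // 12

        AtomicIntegerArray slots = new AtomicIntegerArray(3);
        slots.incrementAndGet(1);                      // atomic update of element 1
        System.out.println(slots.get(1));              // 1

        System.out.println(countWithThreads(4, 1000)); // 4000: no lost updates
    }
}
```

The retry loop in doubleAtomically is the general pattern for any read-modify-write that the atomic classes do not offer as a single method.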

3.2 CAS algorithm

- Compare And Swap (Compare And Exchange) / spinning / spin lock / lock-free

- CAS is hardware support for concurrency: a special instruction designed for multiprocessor operation and used to manage concurrent access to shared data.

- CAS is a lock-free, non-blocking algorithm.

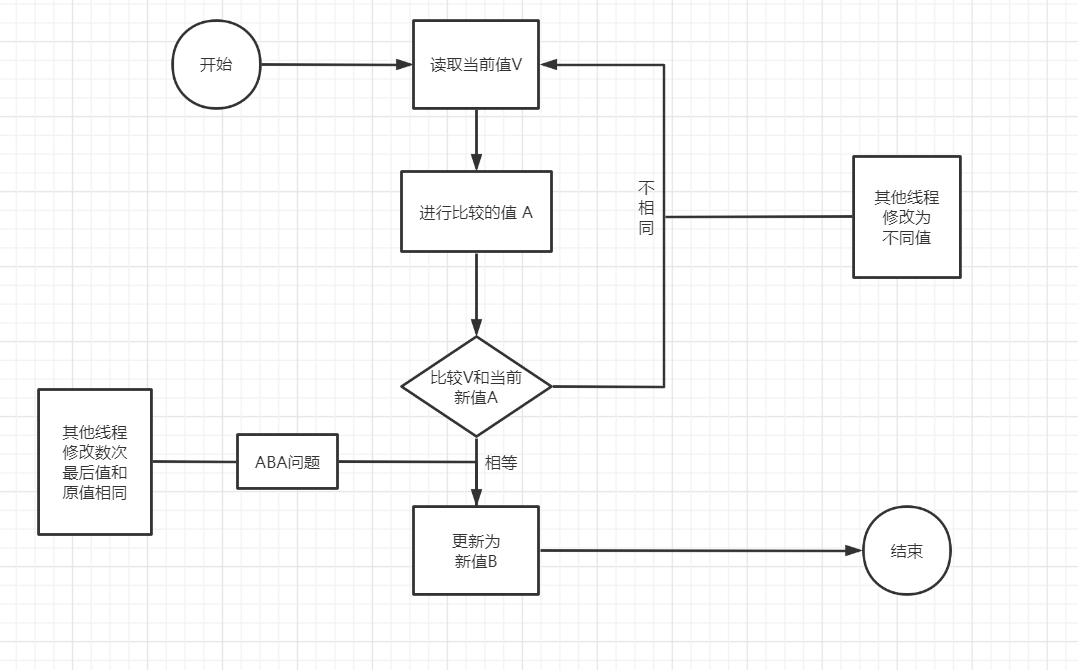

CAS contains three operands:

- Memory value V to read and write

- Value A for comparison

- New value B to be written

If and only if the value of V equals A, CAS atomically updates V to the new value B; otherwise it performs no operation.
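The semantics of the three operands can be sketched with a simulated CAS. This is only an illustration: the class SimulatedCas is hypothetical, and it uses synchronized to stand in for the atomicity that real hardware provides with a single instruction.

```java
// Hypothetical class illustrating CAS semantics; not how the JDK implements it.
class SimulatedCas {
    private int value; // the memory value V

    SimulatedCas(int initial) {
        this.value = initial;
    }

    synchronized int get() {
        return value;
    }

    // expected is A, newValue is B.
    // synchronized stands in for the atomicity of the hardware instruction.
    synchronized boolean compareAndSet(int expected, int newValue) {
        if (value == expected) {
            value = newValue; // V == A: write B
            return true;
        }
        return false;         // V != A: do nothing
    }

    public static void main(String[] args) {
        SimulatedCas v = new SimulatedCas(10);
        System.out.println(v.compareAndSet(10, 11)); // true: V was 10
        System.out.println(v.compareAndSet(10, 12)); // false: V is 11, not 10
        System.out.println(v.get());                 // 11
    }
}
```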

3.2.1 ABA problems

CAS can suffer from the ABA problem. Thread 1 is about to use CAS to change the value of a variable from A to B. Before it does, thread 2 changes the value from A to C and then from C back to A. When thread 1 executes its CAS, it finds that the value is still A, so the CAS succeeds. In fact, the situation is no longer the same as it was at the beginning, so although the CAS succeeds, there may be hidden problems.

Solution: attach a version number to the value (AtomicStampedReference). Simple values of primitive types usually do not need a version number.
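A minimal sketch of the version-number approach with AtomicStampedReference follows. The class AbaStampDemo and its method are illustrative assumptions; for determinism, the two "threads" are simulated sequentially on a single thread.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical demo; the interleaving of two threads is simulated sequentially.
class AbaStampDemo {

    // Returns whether a CAS that uses a stamp read before an A -> B -> A change succeeds.
    static boolean staleCasSucceeds() {
        String a = "A";
        String b = "B";
        AtomicStampedReference<String> ref = new AtomicStampedReference<>(a, 0);

        // "Thread 1" reads the current stamp (0) and is then delayed.
        int staleStamp = ref.getStamp();

        // "Thread 2" changes A -> B -> A, incrementing the stamp on every change.
        ref.compareAndSet(a, b, ref.getStamp(), ref.getStamp() + 1);
        ref.compareAndSet(b, a, ref.getStamp(), ref.getStamp() + 1);

        // "Thread 1" resumes: the reference is A again, but the stamp is now 2, not 0.
        return ref.compareAndSet(a, b, staleStamp, staleStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println(staleCasSucceeds()); // false: the stamp exposes the ABA change
    }
}
```

Note that AtomicStampedReference compares references with ==, so the same object must be passed as the expected reference.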

3.2.2 implementation of CAS in JAVA

Unsafe

Unsafe class: a very obvious difference between Java and C/C++ is that Java code cannot operate on memory directly. This is not entirely true, however, because Unsafe can do it.

Application of Unsafe in AtomicInteger:

class AtomicDemo implements Runnable{ private AtomicInteger serialNumber = new AtomicInteger(0); @Override public void run() { try { Thread.sleep(200); } catch (InterruptedException e) { } System.out.print(getSerialNumber()+" "); } public int getSerialNumber(){ return serialNumber.incrementAndGet(); } }

public final int incrementAndGet() { for (;;) { int current = get(); int next = current + 1; if (compareAndSet(current, next)) return next; } } public final boolean compareAndSet(int expect, int update) { return unsafe.compareAndSwapInt(this, valueOffset, expect, update); }

Unsafe:

public final native boolean compareAndSwapInt(Object var1, long var2, int var4, int var5);

application:

package com.mashibing.jol; import sun.misc.Unsafe; import java.lang.reflect.Field; public class T02_TestUnsafe { int i = 0; private static T02_TestUnsafe t = new T02_TestUnsafe(); public static void main(String[] args) throws Exception { //Unsafe unsafe = Unsafe.getUnsafe(); Field unsafeField = Unsafe.class.getDeclaredFields()[0]; unsafeField.setAccessible(true); Unsafe unsafe = (Unsafe) unsafeField.get(null); Field f = T02_TestUnsafe.class.getDeclaredField("i"); long offset = unsafe.objectFieldOffset(f); System.out.println(offset); boolean success = unsafe.compareAndSwapInt(t, offset, 0, 1); System.out.println(success); System.out.println(t.i); //unsafe.compareAndSwapInt() } }

jdk8u: unsafe.cpp:

cmpxchg = compare and exchange

UNSAFE_ENTRY(jboolean, Unsafe_CompareAndSwapInt(JNIEnv *env, jobject unsafe, jobject obj, jlong offset, jint e, jint x))

UnsafeWrapper("Unsafe_CompareAndSwapInt");

oop p = JNIHandles::resolve(obj);

jint* addr = (jint *) index_oop_from_field_offset_long(p, offset);

return (jint)(Atomic::cmpxchg(x, addr, e)) == e;

UNSAFE_END

jdk8u: atomic_linux_x86.inline.hpp

is_MP = Multi Processor

inline jint Atomic::cmpxchg (jint exchange_value, volatile jint* dest, jint compare_value) {

int mp = os::is_MP();

__asm__ volatile (LOCK_IF_MP(%4) "cmpxchgl %1,(%3)"

: "=a" (exchange_value)

: "r" (exchange_value), "a" (compare_value), "r" (dest), "r" (mp)

: "cc", "memory");

return exchange_value;

}

jdk8u: os.hpp is_MP()

static inline bool is_MP() {

// During bootstrap if _processor_count is not yet initialized

// we claim to be MP as that is safest. If any platform has a

// stub generator that might be triggered in this phase and for

// which being declared MP when in fact not, is a problem - then

// the bootstrap routine for the stub generator needs to check

// the processor count directly and leave the bootstrap routine

// in place until called after initialization has ocurred.

return (_processor_count != 1) || AssumeMP;

}

jdk8u: atomic_linux_x86.inline.hpp

#define LOCK_IF_MP(mp) "cmp $0, " #mp "; je 1f; lock; 1: "

Final implementation:

cmpxchg = CAS, modifies the variable's value

lock cmpxchg // remember this instruction

On a multiprocessor, cmpxchg alone does not guarantee atomicity; the lock prefix does (while cmpxchg executes, other CPUs are not allowed to modify the value at that address).

Hardware:

While the following instruction executes, the lock prefix locks a north bridge signal (an electrical signal)

(without locking the bus)

In Java, CAS is implemented through lock cmpxchg

The implementation of volatile and synchronized is also related to this instruction

3.3 difference between atomicity and visibility

4 ConcurrentHashMap

ConcurrentHashMap is a thread-safe hash table added in Java 5. For multithreaded use it sits between HashMap and Hashtable: it replaces Hashtable's single exclusive lock with a "lock striping" (segmented lock) mechanism to improve performance.

4.1 lock granularity

Reducing lock granularity means narrowing the scope of what is locked, which lowers the chance of lock conflicts and improves the concurrency of the system. It is an effective way to weaken lock contention between threads. The typical application of this technique is the implementation of ConcurrentHashMap. For HashMap, the two most important methods are get and put. Locking the whole HashMap gives a thread-safe object, but the lock granularity is too coarse. The number of segments is also known as the concurrency level of the ConcurrentHashMap.

4.2 lock section

Internally, ConcurrentHashMap is subdivided into several small hash maps called segments. By default, a ConcurrentHashMap is divided into 16 segments, which is the concurrency level of its locks. (This describes the implementation up to JDK 7; since JDK 8 the class no longer locks segments and instead uses CAS together with synchronized on individual hash bins.)

To add a new entry to a ConcurrentHashMap, the whole map is not locked. Instead, the segment that should hold the entry is determined from the hash code, that segment is locked, and the put operation is completed. In a multithreaded environment, if several threads perform put at the same time, they run truly in parallel as long as the entries are not stored in the same segment.
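As a rough illustration of concurrent updates without locking the whole map, the sketch below has several threads update a shared ConcurrentHashMap through its atomic merge method. The class ConcurrentMapDemo and the key names are assumptions made for the example; it relies only on the public API and not on any particular internal locking scheme.

```java
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo class; names are chosen for illustration only.
class ConcurrentMapDemo {

    // Each thread adds 1 to one of four keys, putsPerThread times in total.
    static ConcurrentHashMap<String, Integer> countConcurrently(int threads, int putsPerThread) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < putsPerThread; i++) {
                    // merge is atomic per key: insert 1, or add 1 to the existing value
                    counts.merge("key-" + (i % 4), 1, Integer::sum);
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        ConcurrentHashMap<String, Integer> counts = countConcurrently(4, 1000);
        System.out.println(counts.get("key-0")); // 1000: 250 updates from each of 4 threads
        System.out.println(counts.size());       // 4
    }
}
```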

4.3 others

This package also provides Collection implementations designed for use in multithreaded contexts:

ConcurrentHashMap, ConcurrentSkipListMap, ConcurrentSkipListSet, CopyOnWriteArrayList, and CopyOnWriteArraySet.

- When many threads are expected to access a given collection, ConcurrentHashMap is usually better than a synchronized HashMap, and ConcurrentSkipListMap is usually better than a synchronized TreeMap.

- CopyOnWriteArrayList is better than a synchronized ArrayList when reads and traversals are expected to greatly outnumber updates to the list.

4.4 copy-on-write

Note: copy-on-write is inefficient when there are many add operations, because every add copies the underlying array, which is expensive. It is a good choice when concurrent iteration greatly outnumbers modification.

public class TestCopyOnWriteArrayList { public static void main(String[] args) { HelloThread ht = new HelloThread(); for (int i = 0; i < 10; i++) { new Thread(ht).start(); } } } class HelloThread implements Runnable{ private static List<String> list = Collections.synchronizedList(new ArrayList<String>()); // private static CopyOnWriteArrayList<String> list = new CopyOnWriteArrayList<>(); static{ list.add("AA"); list.add("BB"); list.add("CC"); } @Override public void run() { Iterator<String> it = list.iterator(); while(it.hasNext()){ System.out.println(it.next()); list.add("AA"); } } }

Running this throws ConcurrentModificationException, because the list being iterated and the list being modified are the same.

// private static List<String> list = Collections.synchronizedList(new ArrayList<String>());
private static CopyOnWriteArrayList<String> list = new CopyOnWriteArrayList<>();

With this change the program runs normally without errors. **On every write, the underlying array is copied into a new array, and the element is then added to the copy.** This avoids ConcurrentModificationException, but it is inefficient when writes are frequent.

5 CountDownLatch

5.1 concept

CountDownLatch is a synchronization helper class that allows one or more threads to wait until a set of operations being performed in other threads has completed.

A CountDownLatch is initialized with a given count. The await methods block until the current count reaches zero as a result of calls to countDown(). After that, all waiting threads are released and any subsequent call to await returns immediately. This happens only once: the count cannot be reset. One thread (or several threads) can thus wait for N other threads to complete something before continuing.

A latch can delay the progress of threads until it reaches its terminal state. Latches can be used to ensure that certain activities do not proceed until other activities have completed:

- Ensure that a calculation will not continue until all the resources it needs are initialized;

- Ensure that a service does not start until all other services it depends on have started;

- Wait until all participants in an operation are ready to continue.

5.2 method introduction

The most important methods of CountDownLatch are countDown() and await(). The former decrements the count by one; the latter waits for the count to reach 0 and blocks as long as it has not.

import java.util.concurrent.CountDownLatch;

public class TestCountDownLatch {
    public static void main(String[] args) {
        // Initial count of 50; each thread decrements it by 1 when it finishes
        final CountDownLatch latch = new CountDownLatch(50);
        LatchDemo ld = new LatchDemo(latch);

        long start = System.currentTimeMillis();
        for (int i = 0; i < 50; i++) {
            new Thread(ld).start();
        }

        try {
            latch.await(); // The main thread continues only after all 50 threads have finished
        } catch (InterruptedException e) {
        }

        long end = System.currentTimeMillis();
        System.out.println("Time consuming:" + (end - start));
    }
}

class LatchDemo implements Runnable {
    private CountDownLatch latch;

    public LatchDemo(CountDownLatch latch) {
        this.latch = latch;
    }

    @Override
    public void run() {
        try {
            for (int i = 0; i < 50000; i++) {
                if (i % 2 == 0) {
                    System.out.println(i);
                }
            }
        } finally {
            latch.countDown(); // Decrement by 1; placed in finally so that it always runs
        }
    }
}

6 implement Callable interface

In java.util.concurrent, Java 5.0 provides a new way to create a task for a thread to execute: the Callable interface.

Callable relies on FutureTask to receive its result; FutureTask can also be used as a latch.

6.1 four ways to create threads

Without a return value:

- Implement the Runnable interface and override run();

- Extend the Thread class and override run();

With a return value:

- Implement the Callable interface and override call(), wrap the Callable in a FutureTask, and pass it to the Thread constructor as the task;

- Use a thread pool;

6.2 use of callable

public class TestCallable { public static void main(String[] args) { ThreadDemo td = new ThreadDemo(); //1. To execute the Callable mode, the FutureTask implementation class is required to receive the operation results. FutureTask<Integer> result = new FutureTask<>(td); new Thread(result).start(); //2. Receive the result after thread operation try { Integer sum = result.get(); //FutureTask can be used for blocking System.out.println(sum); System.out.println("------------------------------------"); } catch (InterruptedException | ExecutionException e) { e.printStackTrace(); } } } class ThreadDemo implements Callable<Integer>{ @Override public Integer call() throws Exception { int sum = 0; for (int i = 0; i <= 100000; i++) { sum += i; } return sum; } }

7 Lock synchronization lock

Before Java 5.0, the only mechanisms for coordinating access to shared objects were synchronized and volatile. Java 5.0 added new mechanisms, but they are not a replacement for the built-in lock; they are optional advanced features for cases where the built-in lock is not suitable.

ReentrantLock implements the Lock interface and provides the same mutual exclusion and memory visibility as synchronized, but it offers more flexibility in how locks are handled.
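One example of that extra flexibility is tryLock with a timeout, which has no counterpart in synchronized. The sketch below is an illustration under assumptions: the class TryLockDemo, the helper otherThreadCanLock, and the 50 ms timeout are all invented for the example.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; names and timeout are chosen for illustration only.
class TryLockDemo {

    // Starts a second thread that tries to acquire the lock within the timeout,
    // waits for it to finish, and reports whether it got the lock.
    static boolean otherThreadCanLock(ReentrantLock lock, long timeoutMillis) {
        boolean[] acquired = new boolean[1];
        Thread other = new Thread(() -> {
            try {
                if (lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                    try {
                        acquired[0] = true;
                    } finally {
                        lock.unlock(); // always release in finally
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        other.start();
        try {
            other.join(); // join makes the write to acquired[0] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return acquired[0];
    }

    public static void main(String[] args) {
        ReentrantLock lock = new ReentrantLock();

        lock.lock();
        try {
            // The main thread holds the lock, so the other thread gives up after the timeout.
            System.out.println(otherThreadCanLock(lock, 50)); // false
        } finally {
            lock.unlock();
        }

        // The lock is free now, so the other thread acquires it.
        System.out.println(otherThreadCanLock(lock, 50)); // true
    }
}
```

With synchronized, the second thread would simply block until the monitor became available; tryLock lets it back off instead.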

Three ways to solve multithreading safety problems

- Before JDK 1.5: synchronized (implicit lock)

1. Synchronized code block

2. Synchronized method

- After JDK 1.5:

3. Lock: explicit lock

Note: Lock is an explicit lock. It must be acquired with the lock() method and released with the unlock() method.

public class TestLock { public static void main(String[] args) { Ticket ticket = new Ticket(); new Thread(ticket, "1 Window").start(); new Thread(ticket, "2 Window").start(); new Thread(ticket, "3 Window").start(); } } class Ticket implements Runnable{ private int tick = 100; private Lock lock = new ReentrantLock(); @Override public void run() { while(true){ lock.lock(); //Lock try{ if(tick > 0){ try { Thread.sleep(200); } catch (InterruptedException e) { } System.out.println(Thread.currentThread().getName() + " The remaining tickets are:" + --tick); } }finally{ lock.unlock(); //Must be executed so the lock is released in finally } } } }

8 Condition control thread communication

The Condition interface describes the condition variables that may be associated with a Lock. These variables are similar in usage to the implicit monitors accessed through Object.wait, but provide more powerful functionality. In particular, a single Lock can be associated with multiple Condition objects. To avoid compatibility issues, the Condition methods are named differently from the corresponding Object methods.

On a Condition object, the methods that correspond to wait, notify, and notifyAll are await, signal, and signalAll, respectively.

The Condition instance is essentially bound to a Lock. To get a Condition instance for a specific Lock instance, use its newCondition() method.

8.1 using Condition

Use Condition to control thread communication:

- If synchronization is ensured with a Lock object directly rather than with the synchronized keyword, there is no implicit monitor in the system, so wait(), notify(), and notifyAll() cannot be used for thread communication.

- When a Lock object is used for synchronization, Java provides the Condition class for coordination. A Condition lets a thread that has acquired the Lock but cannot proceed release the Lock, and it can also wake up other waiting threads.

- A Condition instance is bound to a Lock object. To get a Condition instance for a Lock instance, call the Lock object's newCondition() method.

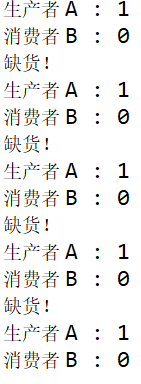

8.2 producer and consumer cases

public class TestProductorAndConsumerForLock {

public static void main(String[] args) {

Clerk clerk = new Clerk();

Productor pro = new Productor(clerk);

Consumer con = new Consumer(clerk);

new Thread(pro, "producer A").start();

new Thread(con, "consumer B").start();

// new Thread(pro, "producer C").start();

// new Thread(con, "consumer D").start();

}

}

class Clerk {

private int product = 0;

private Lock lock = new ReentrantLock(); //Create lock object

private Condition condition = lock.newCondition(); //Get the Condition instance of Lock instance

// Purchase

public void get() {

lock.lock();

try {

while (product >= 1) { // Wait in a loop to guard against spurious wakeups.

System.out.println("Product full!");

try {

condition.await();

} catch (InterruptedException e) {

}

}

System.out.println(Thread.currentThread().getName() + " : " + ++product);

condition.signalAll();

} finally {

lock.unlock();

}

}

//Selling

public void sale() {

lock.lock();

try {

while (product <= 0) { // Wait in a loop to guard against spurious wakeups.

System.out.println("Out of stock!");

try {

condition.await();

} catch (InterruptedException e) {

}

}

System.out.println(Thread.currentThread().getName() + " : " + --product);

condition.signalAll();

} finally {

lock.unlock();

}

}

}

// producer

class Productor implements Runnable {

private Clerk clerk;

public Productor(Clerk clerk) {

this.clerk = clerk;

}

@Override

public void run() {

for (int i = 0; i < 20; i++) {

try {

Thread.sleep(200);

} catch (InterruptedException e) {

e.printStackTrace();

}

clerk.get(); //Call the store clerk's purchase method

}

}

}

// consumer

class Consumer implements Runnable {

private Clerk clerk;

public Consumer(Clerk clerk) {

this.clerk = clerk;

}

@Override

public void run() {

for (int i = 0; i < 20; i++) {

clerk.sale(); //Call salesclerk selling method

}

}

}

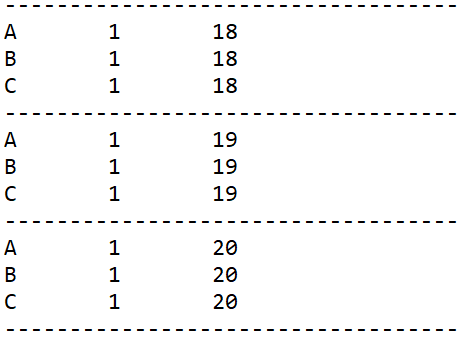

9 threads alternating in order

Requirement: write a program that starts three threads whose IDs are A, B, and C. Each thread prints its own ID on the screen 10 times, and the output must appear in order, for example ABCABCABC..., and so on in turn.

import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class TestABCAlternate {
    public static void main(String[] args) {
        AlternateDemo ad = new AlternateDemo();

        new Thread(() -> {
            for (int i = 1; i <= 10; i++) {
                ad.loopA(i);
            }
        }, "A").start();

        new Thread(() -> {
            for (int i = 1; i <= 10; i++) {
                ad.loopB(i);
            }
        }, "B").start();

        new Thread(() -> {
            for (int i = 1; i <= 10; i++) {
                ad.loopC(i);
                System.out.println("-----------------------------------");
            }
        }, "C").start();
    }
}

class AlternateDemo {
    private int number = 1; // Flag for the thread whose turn it is
    private Lock lock = new ReentrantLock();
    private Condition condition1 = lock.newCondition();
    private Condition condition2 = lock.newCondition();
    private Condition condition3 = lock.newCondition();

    /**
     * @param totalLoop the current round number
     */
    public void loopA(int totalLoop) {
        lock.lock();
        try {
            // 1. Wait for this thread's turn (in a loop, to guard against spurious wakeups)
            while (number != 1) {
                condition1.await(); // Thread A waits
            }
            // 2. Print
            for (int i = 1; i <= 1; i++) {
                System.out.println(Thread.currentThread().getName() + "\t" + i + "\t" + totalLoop);
            }
            // 3. Wake up
            number = 2;
            condition2.signal(); // Wake up thread B
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            lock.unlock();
        }
    }

    public void loopB(int totalLoop) {
        lock.lock();
        try {
            // 1. Wait for this thread's turn
            while (number != 2) {
                condition2.await(); // Thread B waits
            }
            // 2. Print
            for (int i = 1; i <= 1; i++) {
                System.out.println(Thread.currentThread().getName() + "\t" + i + "\t" + totalLoop);
            }
            // 3. Wake up
            number = 3;
            condition3.signal(); // Wake up thread C
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            lock.unlock();
        }
    }

    public void loopC(int totalLoop) {
        lock.lock();
        try {
            // 1. Wait for this thread's turn
            while (number != 3) {
                condition3.await(); // Thread C waits
            }
            // 2. Print
            for (int i = 1; i <= 1; i++) {
                System.out.println(Thread.currentThread().getName() + "\t" + i + "\t" + totalLoop);
            }
            // 3. Wake up
            number = 1;
            condition1.signal(); // Wake up thread A
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            lock.unlock();
        }
    }
}

10 ReadWriteLock

ReadWriteLock maintains a pair of related locks, one for read-only operations and one for write operations. As long as there is no writer, the read lock can be held by multiple reader threads at the same time. The write lock is exclusive.

Read operations usually do not change the shared resource, but a write operation must acquire the lock exclusively. For data structures where reads are the majority, ReadWriteLock can provide higher concurrency than an exclusive lock. For read-only data structures, immutability means that locking does not need to be considered at all.

- Write/write and read/write require mutual exclusion

- Read/read does not require mutual exclusion

public class TestReadWriteLock { public static void main(String[] args) { ReadWriteLockDemo rw = new ReadWriteLockDemo(); new Thread(new Runnable() { @Override public void run() { rw.set((int)(Math.random() * 101)); } }, "Write:").start(); for (int i = 0; i < 100; i++) { new Thread(new Runnable() { @Override public void run() { rw.get(); } }).start(); } } } class ReadWriteLockDemo{ private int number = 0; private ReadWriteLock lock = new ReentrantReadWriteLock(); //read public void get(){ lock.readLock().lock(); //Lock try{ System.out.println(Thread.currentThread().getName() + " : " + number); }finally{ lock.readLock().unlock(); //Release lock } } //write public void set(int number){ lock.writeLock().lock(); try{ System.out.println(Thread.currentThread().getName()); this.number = number; }finally{ lock.writeLock().unlock(); } } }

11 thread 8 lock

Decide whether "one" or "two" is printed first in each case:

- Two ordinary synchronized methods, two threads, standard printing: what is printed?

- Thread.sleep() is added to getOne(): what is printed?

- An ordinary (non-synchronized) method getThree() is added: what is printed?

- Two ordinary synchronized methods, two Number objects: what is printed?

- getOne() is changed to a static synchronized method: what is printed?

- Both methods are changed to static synchronized methods, one Number object: what is printed?

- One static synchronized method, one non-static synchronized method, two Number objects: what is printed?

- Two static synchronized methods, two Number objects: what is printed?

To answer the eight cases above, you need to know the key points:

- ① The lock of a non-static synchronized method is this (the instance object); the lock of a static synchronized method is the corresponding Class object.

- ② At any moment, only one thread can hold the lock of a given object, no matter how many synchronized methods the object has.

- ③ For static synchronized methods the lock belongs to the class, so at any moment only one thread can hold it, no matter how many instances exist.

public class TestThread8Monitor { public static void main(String[] args) { Number number = new Number(); Number number2 = new Number(); new Thread(new Runnable() { @Override public void run() { number.getOne(); } }).start(); new Thread(new Runnable() { @Override public void run() { // number.getTwo(); number2.getTwo(); } }).start(); // new Thread(new Runnable() { // @Override // public void run() { // number.getThree(); // } // }).start(); } } class Number{ public static synchronized void getOne(){ try { Thread.sleep(3000); System.out.println("--Three seconds later--"); } catch (InterruptedException e) { } System.out.println("one"); } public static synchronized void getTwo(){ System.out.println("two"); } public void getThree(){ System.out.println("three"); } }

Answers:

- Two ordinary synchronized methods, two threads, one Number object, standard printing: one two

- Thread.sleep() is added to getOne(): (after 3 seconds) one two

- An ordinary method getThree() is added: three (after 3 seconds) one two

- Two ordinary synchronized methods, two Number objects: two (after 3 seconds) one

- getOne() is changed to a static synchronized method: two (after 3 seconds) one

- Both methods are static synchronized methods, one Number object: (after 3 seconds) one two

- One static synchronized method, one non-static synchronized method, two Number objects: two (after 3 seconds) one

- Two static synchronized methods, two Number objects: (after 3 seconds) one two

12 thread pool

12.1 introduction to thread pool

The fourth way to get threads: the thread pool. A thread pool maintains a queue of threads that are waiting for work, which avoids the extra cost of creating and destroying threads and improves response speed. It is usually configured with the Executors factory methods.

Thread pools address two different problems: they usually improve performance when executing large numbers of asynchronous tasks, because they reduce per-task invocation overhead, and they provide a means of bounding and managing the resources (including threads) consumed when executing a collection of tasks.

12.2 architecture of thread pool

/*
 * java.util.concurrent.Executor: the root interface responsible for thread usage and scheduling
 *   |-- ExecutorService (sub-interface): the main interface of the thread pool
 *         |-- ThreadPoolExecutor: the thread pool implementation class
 *         |-- ScheduledExecutorService (sub-interface): responsible for thread scheduling
 *               |-- ScheduledThreadPoolExecutor: extends ThreadPoolExecutor and implements ScheduledExecutorService
 */

12.3 tool class: Executors

To be useful across a wide range of contexts, ThreadPoolExecutor provides many adjustable parameters and extension hooks. However, programmers are strongly encouraged to use the more convenient Executors factory methods:

- Executors.newCachedThreadPool(): a cached thread pool whose size is not fixed. Threads are created on demand and idle threads are reclaimed automatically.

- Executors.newFixedThreadPool(int): a thread pool with a fixed number of threads.

- Executors.newSingleThreadExecutor(): a pool with only one thread.

- Executors.newScheduledThreadPool(int): returns a ScheduledExecutorService, a fixed-size thread pool that can run tasks after a delay or periodically.
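For comparison with the factory methods, here is a minimal sketch of configuring ThreadPoolExecutor directly through its adjustable parameters. The class name `TunedPoolDemo`, the helper `runTasks`, and all the sizes chosen are assumptions for illustration, not recommendations.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: build a pool by hand instead of using an Executors factory method.
class TunedPoolDemo {

    // Submits `tasks` trivial jobs and returns how many of them ran.
    static int runTasks(int tasks) {
        AtomicInteger executed = new AtomicInteger();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2,                          // corePoolSize: threads kept alive
                4,                          // maximumPoolSize: upper bound on threads
                30L, TimeUnit.SECONDS,      // keep-alive time for threads above the core size
                new ArrayBlockingQueue<>(8), // bounded work queue
                // When both the queue and the pool are full, run the task in the caller.
                new ThreadPoolExecutor.CallerRunsPolicy());
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> executed.incrementAndGet());
            }
        } finally {
            pool.shutdown();
        }
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("pool did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return executed.get();
    }

    public static void main(String[] args) {
        System.out.println(runTasks(50)); // 50
    }
}
```

With CallerRunsPolicy no task is dropped when the bounded queue fills up; the submitting thread simply executes the overflow itself, which also slows down submission.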

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class TestThreadPool {

    public static void main(String[] args) throws Exception {
        // 1. Create the thread pool
        ExecutorService pool = Executors.newFixedThreadPool(5);

        List<Future<Integer>> list = new ArrayList<>();

        // 2. Submit ten tasks; each one sums 0..100
        for (int i = 0; i < 10; i++) {
            Future<Integer> future = pool.submit(new Callable<Integer>() {
                @Override
                public Integer call() throws Exception {
                    int sum = 0;
                    for (int j = 0; j <= 100; j++) {
                        sum += j;
                    }
                    return sum;
                }
            });
            list.add(future);
        }

        // 3. Shut down the pool; tasks already submitted still complete
        pool.shutdown();

        for (Future<Integer> future : list) {
            System.out.println(future.get());
        }

        /* Runnable variant:
        ThreadPoolDemo tpd = new ThreadPoolDemo();
        // 2. Assign tasks to threads in the thread pool
        for (int i = 0; i < 10; i++) {
            pool.submit(tpd);
        }
        // 3. Close the thread pool
        pool.shutdown();
        */

        // Without a pool:
        // new Thread(tpd).start();
        // new Thread(tpd).start();
    }
}

// class ThreadPoolDemo implements Runnable {
//
//     private int i = 0;
//
//     @Override
//     public void run() {
//         while (i <= 100) {
//             System.out.println(Thread.currentThread().getName() + " : " + i++);
//         }
//     }
// }
```

12.4 thread scheduling

- Executors.newScheduledThreadPool(int): returns a ScheduledExecutorService, a fixed-size thread pool that can run tasks after a delay or periodically.

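```java
import java.util.Random;
import java.util.concurrent.Callable;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class TestScheduledThreadPool {

    public static void main(String[] args) throws Exception {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(5);

        for (int i = 0; i < 5; i++) {
            Future<Integer> result = pool.schedule(new Callable<Integer>() {
                @Override
                public Integer call() throws Exception {
                    int num = new Random().nextInt(100); // generate a random number
                    System.out.println(Thread.currentThread().getName() + " : " + num);
                    return num;
                }
            }, 1, TimeUnit.SECONDS); // arguments: task, delay, time unit

            // get() blocks until the delayed task finishes, so each iteration
            // waits about one second before the next task is scheduled.
            System.out.println(result.get());
        }

        pool.shutdown();
    }
}
```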
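Besides a one-off delay, ScheduledExecutorService can also run a task periodically. The following is a small sketch using `scheduleAtFixedRate`; the class name `FixedRateDemo`, the helper `runTicks`, and the 50 ms period are assumptions chosen for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: run a periodic task a fixed number of times, then cancel it.
class FixedRateDemo {

    // Lets the periodic task count `ticks` executions and returns the count.
    static int runTicks(int ticks, long periodMillis) {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);
        AtomicInteger count = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(ticks);
        try {
            ScheduledFuture<?> handle = pool.scheduleAtFixedRate(() -> {
                // Runs of one periodic task never overlap, so this guard is safe.
                if (done.getCount() > 0) {
                    count.incrementAndGet();
                    done.countDown();
                }
            }, 0, periodMillis, TimeUnit.MILLISECONDS);

            if (!done.await(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("timed out waiting for ticks");
            }
            handle.cancel(false); // stop further periodic runs
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
        return count.get();
    }

    public static void main(String[] args) {
        System.out.println("ran " + runTicks(3, 50) + " times"); // ran 3 times
    }
}
```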

13 ForkJoinPool: fork/join framework and work stealing

13.1 Fork/Join framework

Fork/Join framework: where necessary, recursively split (fork) a large task into smaller tasks until they cannot be split any further, then combine (join) the results of the small tasks.

13.2 difference between fork/join framework and thread pool

The fork/join framework uses a "work stealing" model:

Each worker thread has its own task queue. When a thread executes a task, it can split it into smaller tasks and push them onto its own queue. A thread that has run out of work steals a task from the queue of another, randomly chosen thread and executes it.

Compared with a general thread pool implementation, the advantage of the fork/join framework lies in how it handles the tasks it contains. In a general thread pool, if the task a thread is executing cannot continue for some reason, the thread sits in a waiting state. In the fork/join framework, if a subproblem cannot continue because it is waiting for another subproblem to complete, the thread handling it actively looks for other subproblems that have not yet run and executes them. This reduces the time threads spend waiting and improves performance.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;
import java.util.stream.LongStream;

import org.junit.Test; // assumes JUnit 4 is on the classpath

public class TestForkJoinPool {

    // Note: the sum of 0..5000000000 exceeds Long.MAX_VALUE, so the printed
    // value wraps around. All three variants wrap identically, so the
    // timings remain comparable.
    public static void main(String[] args) {
        Instant start = Instant.now();

        ForkJoinPool pool = new ForkJoinPool();
        ForkJoinTask<Long> task = new ForkJoinSumCalculate(0L, 5000000000L);
        Long sum = pool.invoke(task);
        System.out.println(sum);

        Instant end = Instant.now();
        System.out.println("Time consuming:" + Duration.between(start, end).toMillis()); // 2709; splitting also takes time
    }

    // Plain for loop
    @Test
    public void test1() {
        Instant start = Instant.now();

        long sum = 0L;
        for (long i = 0L; i <= 5000000000L; i++) {
            sum += i;
        }
        System.out.println(sum);

        Instant end = Instant.now();
        System.out.println("for Time consuming:" + Duration.between(start, end).toMillis()); // 2057
    }

    // Java 8 parallel stream
    @Test
    public void test2() {
        Instant start = Instant.now();

        Long sum = LongStream.rangeClosed(0L, 5000000000L)
                .parallel()
                .reduce(0L, Long::sum);
        System.out.println(sum);

        Instant end = Instant.now();
        System.out.println("java8 New features take time:" + Duration.between(start, end).toMillis()); // 1607
    }
}

class ForkJoinSumCalculate extends RecursiveTask<Long> {

    private static final long serialVersionUID = -259195479995561737L;

    private long start;
    private long end;

    private static final long THRESHOLD = 10000L; // below this size, sum directly

    public ForkJoinSumCalculate(long start, long end) {
        this.start = start;
        this.end = end;
    }

    @Override
    protected Long compute() {
        long length = end - start;

        if (length <= THRESHOLD) {
            long sum = 0L;
            for (long i = start; i <= end; i++) {
                sum += i;
            }
            return sum;
        } else {
            long middle = (start + end) / 2;

            ForkJoinSumCalculate left = new ForkJoinSumCalculate(start, middle);
            left.fork(); // split and push onto the worker's queue

            ForkJoinSumCalculate right = new ForkJoinSumCalculate(middle + 1, end);
            right.fork(); // split and push onto the worker's queue

            return left.join() + right.join();
        }
    }
}
```
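A common variation on the compute() method above forks only one half and computes the other half in the current thread, so the current worker stays busy instead of queueing both subtasks. The sketch below shows that variation on a smaller range whose sum fits in a long; the class name `RangeSumTask`, the helper `sum`, and the use of the common pool are assumptions, not the original author's code.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Sketch: fork the left half, compute the right half directly, then join.
class RangeSumTask extends RecursiveTask<Long> {

    private static final long THRESHOLD = 10_000L; // below this size, sum directly

    private final long start;
    private final long end;

    RangeSumTask(long start, long end) {
        this.start = start;
        this.end = end;
    }

    @Override
    protected Long compute() {
        if (end - start <= THRESHOLD) {
            long sum = 0L;
            for (long i = start; i <= end; i++) {
                sum += i;
            }
            return sum;
        }
        long middle = start + (end - start) / 2;
        RangeSumTask left = new RangeSumTask(start, middle);
        RangeSumTask right = new RangeSumTask(middle + 1, end);

        left.fork();                     // queue the left half; idle workers may steal it
        long rightSum = right.compute(); // do the right half in this thread
        return left.join() + rightSum;   // wait for (or run) the left half
    }

    // Sums start..end (inclusive) on the common fork/join pool.
    static long sum(long start, long end) {
        return ForkJoinPool.commonPool().invoke(new RangeSumTask(start, end));
    }

    public static void main(String[] args) {
        System.out.println(sum(0L, 1_000_000L)); // 500000500000
    }
}
```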