Foreword: In a recent project I containerized Kafka and ZooKeeper and ran them with Rancher. After consulting related websites and books, I found that a company with strong standardization requirements has many reasons to build custom images rather than use the official ones. Below are the main reasons I finally chose to customize. (Note: this k8s + Kafka test was deployed locally by one person. For production you need to configure the required memory and CPU, dynamic persistent storage, and so on yourself.)

1. The JDK cannot be customized when using the official Dockerfile.

2. The Dockerfile and the YAML are tightly coupled, and everyone writes them in their own way.

3. The official images cannot be aligned with the company's existing standardization documents for physical-machine deployment.

.....

Next, I'll share a deployment that I spent half a month researching. Anyone interested is welcome to discuss it:

https://github.com/renzhiyuan6666666/kubernetes-docker

1. zookeeper cluster deployment

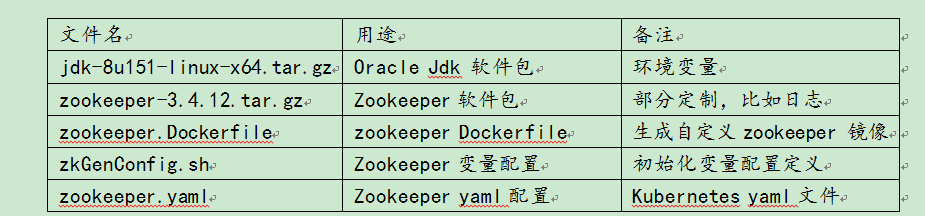

1.1) List of zookeeper files

1.2) Detailed list of zookeeper files

1.2.1) oracle jdk package

jdk-8u151-linux-x64.tar.gz

The base image is centos6.6; the JDK is unpacked into the /app directory, and environment variables are configured in the Dockerfile.

1.2.2) zookeeper package

zookeeper-3.4.12.tar.gz

The base image is centos6.6; ZooKeeper is unpacked into the /app directory.

1.2.3)zookeeper Dockerfile

#Set the base image
FROM centos:6.6
#Author's information
MAINTAINER docker_user renzhiyuan
#Zookeeper and jdk standardized versions
#ENV JAVA_VERSION="1.8.0_151"
#ENV ZK_VERSION="3.4.12"
ENV ZK_JDK_HOME=/app
ENV JAVA_HOME=/app/jdk1.8.0_151
ENV ZK_HOME=/app/zookeeper
ENV LANG=en_US.utf8
#Install basic utility packages
#RUN yum makecache
RUN yum install lsof yum-utils lrzsz net-tools nc -y &>/dev/null
#Create installation directory
RUN mkdir $ZK_JDK_HOME
#Permissions and variables
RUN chown -R root.root $ZK_JDK_HOME && chmod -R 755 $ZK_JDK_HOME
#Install and configure the JDK
ADD jdk-8u151-linux-x64.tar.gz /app
RUN echo "export JAVA_HOME=/app/jdk1.8.0_151" >>/etc/profile
RUN echo "export PATH=\$JAVA_HOME/bin:\$PATH" >>/etc/profile
RUN echo "export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar" >>/etc/profile && source /etc/profile
#Install and configure zookeeper-3.4.12; related directories are integrated into the installation package
ADD zookeeper-3.4.12.tar.gz /app
RUN ln -s /app/zookeeper-3.4.12 /app/zookeeper
#Configuration files, log rotation and jvm standardization are configured separately in zkGenConfig.sh
COPY zkGenConfig.sh /app/zookeeper/bin/
#Open ports
EXPOSE 2181 10052

1.2.4)zkGenConfig.sh

#!/usr/bin/env bash

# Copyright 2016 The Kubernetes Authors.

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#Configuring ZK-related variables

#ZK_USER=${ZK_USER:-"root"}

#ZK_LOG_LEVEL=${ZK_LOG_LEVEL:-"INFO"}

#ZK_HOME=${ZK_HOME:-"/app/zookeeper"}

ZK_DATA_DIR=${ZK_DATA_DIR:-"/app/zookeeper/data"}

ZK_DATA_LOG_DIR=${ZK_DATA_LOG_DIR:-"/app/zookeeper/datalog"}

ZK_LOG_DIR=${ZK_LOG_DIR:-"/app/zookeeper/logs"}

ZK_CONF_DIR=${ZK_CONF_DIR:-"/app/zookeeper/conf"}

LOGGER_PROPS_FILE="$ZK_CONF_DIR/log4j.properties"

#ZK_CLIENT_PORT=${ZK_CLIENT_PORT:-2181}

#ZK_SERVER_PORT=${ZK_SERVER_PORT:-2222}

#ZK_ELECTION_PORT=${ZK_ELECTION_PORT:-2223}

#ZK_TICK_TIME=${ZK_TICK_TIME:-3000}

#ZK_INIT_LIMIT=${ZK_INIT_LIMIT:-10}

#ZK_SYNC_LIMIT=${ZK_SYNC_LIMIT:-5}

#ZK_MAX_CLIENT_CNXNS=${ZK_MAX_CLIENT_CNXNS:-100}

#ZK_MIN_SESSION_TIMEOUT=${ZK_MIN_SESSION_TIMEOUT:- $((ZK_TICK_TIME*2))}

#ZK_MAX_SESSION_TIMEOUT=${ZK_MAX_SESSION_TIMEOUT:- $((ZK_TICK_TIME*20))}

#ZK_SNAP_RETAIN_COUNT=${ZK_SNAP_RETAIN_COUNT:-3}

#ZK_PURGE_INTERVAL=${ZK_PURGE_INTERVAL:-0}

ID_FILE="$ZK_DATA_DIR/myid"

ZK_CONFIG_FILE="$ZK_CONF_DIR/zoo.cfg"

JAVA_ENV_FILE="$ZK_CONF_DIR/java.env"

#Number of replicas

#ZK_REPLICAS=3

#Configure Host Name and domain

HOST=`hostname -s`

DOMAIN=`hostname -d`

#ipdrr=`ip a|grep eth1|grep inet|awk '{print $2}'|awk -F"/" '{print $1}'`

#Configure Election Port and Data Synchronization Port

function print_servers() {

for (( i=1; i<=$ZK_REPLICAS; i++ ))

do

echo "server.$i=$NAME-$((i-1)).$DOMAIN:$ZK_SERVER_PORT:$ZK_ELECTION_PORT"

done

}

#Extract the ordinal from the hostname (for example zookeeper-0 gives 0) and use ordinal + 1 as myid

function validate_env() {

echo "Validating environment"

if [ -z "$ZK_REPLICAS" ]; then

echo "ZK_REPLICAS is a mandatory environment variable"

exit 1

fi

if [[ $HOST =~ (.*)-([0-9]+)$ ]]; then

NAME=${BASH_REMATCH[1]}

ORD=${BASH_REMATCH[2]}

else

echo "Failed to extract ordinal from hostname $HOST"

exit 1

fi

MY_ID=$((ORD+1))

if [ ! -f $ID_FILE ]; then

echo $MY_ID >> $ID_FILE

fi

#echo "ZK_REPLICAS=$ZK_REPLICAS"

#echo "MY_ID=$MY_ID"

#echo "ZK_LOG_LEVEL=$ZK_LOG_LEVEL"

#echo "ZK_DATA_DIR=$ZK_DATA_DIR"

#echo "ZK_DATA_LOG_DIR=$ZK_DATA_LOG_DIR"

#echo "ZK_LOG_DIR=$ZK_LOG_DIR"

#echo "ZK_CLIENT_PORT=$ZK_CLIENT_PORT"

#echo "ZK_SERVER_PORT=$ZK_SERVER_PORT"

#echo "ZK_ELECTION_PORT=$ZK_ELECTION_PORT"

#echo "ZK_TICK_TIME=$ZK_TICK_TIME"

#echo "ZK_INIT_LIMIT=$ZK_INIT_LIMIT"

#echo "ZK_SYNC_LIMIT=$ZK_SYNC_LIMIT"

#echo "ZK_MAX_CLIENT_CNXNS=$ZK_MAX_CLIENT_CNXNS"

#echo "ZK_MIN_SESSION_TIMEOUT=$ZK_MIN_SESSION_TIMEOUT"

#echo "ZK_MAX_SESSION_TIMEOUT=$ZK_MAX_SESSION_TIMEOUT"

#echo "ZK_HEAP_SIZE=$ZK_HEAP_SIZE"

#echo "ZK_SNAP_RETAIN_COUNT=$ZK_SNAP_RETAIN_COUNT"

#echo "ZK_PURGE_INTERVAL=$ZK_PURGE_INTERVAL"

#echo "ENSEMBLE"

#print_servers

#echo "Environment validation successful"

}

#Write the ZooKeeper configuration file

function create_config() {

#rm -f $ZK_CONFIG_FILE

echo "dataDir=$ZK_DATA_DIR" >>$ZK_CONFIG_FILE

echo "dataLogDir=$ZK_DATA_LOG_DIR" >>$ZK_CONFIG_FILE

echo "tickTime=$ZK_TICK_TIME" >>$ZK_CONFIG_FILE

echo "initLimit=$ZK_INIT_LIMIT" >>$ZK_CONFIG_FILE

echo "syncLimit=$ZK_SYNC_LIMIT" >>$ZK_CONFIG_FILE

echo "clientPort=$ZK_CLIENT_PORT" >>$ZK_CONFIG_FILE

echo "maxClientCnxns=$ZK_MAX_CLIENT_CNXNS" >>$ZK_CONFIG_FILE

if [ $ZK_REPLICAS -gt 1 ]; then

print_servers >> $ZK_CONFIG_FILE

fi

echo "Wrote ZooKeeper configuration file to $ZK_CONFIG_FILE"

}

#Create ZK related directory and myid

#function create_data_dirs() {

# echo "Creating ZooKeeper data directories and setting permissions"

#

# if [ ! -d $ZK_DATA_DIR ]; then

# mkdir -p $ZK_DATA_DIR

# chown -R $ZK_USER:$ZK_USER $ZK_DATA_DIR

# fi

#

# if [ ! -d $ZK_DATA_LOG_DIR ]; then

# mkdir -p $ZK_DATA_LOG_DIR

# chown -R $ZK_USER:$ZK_USER $ZK_DATA_LOG_DIR

# fi

#

# if [ ! -d $ZK_LOG_DIR ]; then

# mkdir -p $ZK_LOG_DIR

# chown -R $ZK_USER:$ZK_USER $ZK_LOG_DIR

# fi

#

# echo "Created ZooKeeper data directories and set permissions in $ZK_DATA_DIR"

#}

#Configure Log Cutting

#function create_log_props () {

# rm -f $LOGGER_PROPS_FILE

# echo "Creating ZooKeeper log4j configuration"

# echo "zookeeper.root.logger=CONSOLE" >> $LOGGER_PROPS_FILE

# echo "zookeeper.console.threshold="$ZK_LOG_LEVEL >> $LOGGER_PROPS_FILE

# echo "log4j.rootLogger=\${zookeeper.root.logger}" >> $LOGGER_PROPS_FILE

# echo "log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender" >> $LOGGER_PROPS_FILE

# echo "log4j.appender.CONSOLE.Threshold=\${zookeeper.console.threshold}" >> $LOGGER_PROPS_FILE

# echo "log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout" >> $LOGGER_PROPS_FILE

# echo "log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n" >> $LOGGER_PROPS_FILE

# echo "Wrote log4j configuration to $LOGGER_PROPS_FILE"

#}

#Configure JVM and JMX startup options

function create_java_env() {

rm -f $JAVA_ENV_FILE

echo "Creating JVM configuration file"

echo '#!/bin/bash' >> $JAVA_ENV_FILE

echo "export JMXPORT=10052" >> $JAVA_ENV_FILE

echo "JVMFLAGS=\"\$JVMFLAGS -Xms256m -Xmx256m -Djute.maxbuffer=5000000 -Xloggc:gc.log -XX:+PrintGCApplicationStoppedTime -XX:+PrintGCApplicationConcurrentTime -XX:+PrintGC -XX:+PrintGCTimeStamps -XX:+PrintGCDetails -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC\"" >> $JAVA_ENV_FILE

echo "Wrote JVM configuration to $JAVA_ENV_FILE"

}

validate_env && create_config && create_java_env && cat $ZK_CONFIG_FILE && cat $JAVA_ENV_FILE
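To make the naming logic in zkGenConfig.sh easier to follow, here is a minimal standalone sketch of how a StatefulSet hostname maps to `myid` and to the `server.N` lines. It uses POSIX parameter expansion instead of the script's `BASH_REMATCH` regex, and the function names `derive_myid` and `list_servers` are made up for illustration; they are not part of the script above.

```shell
# Sketch only: derive_myid and list_servers are hypothetical names,
# not functions from zkGenConfig.sh.

# Print the ZooKeeper myid for a StatefulSet pod hostname such as "zookeeper-0".
derive_myid() {
  host="$1"
  ord="${host##*-}"            # text after the last "-", e.g. "0"
  case "$ord" in
    ''|*[!0-9]*)
      echo "Failed to extract ordinal from hostname $host" >&2
      return 1
      ;;
  esac
  echo $((ord + 1))            # pod ordinals start at 0, myid starts at 1
}

# Print one server.N line per replica.
# Arguments: pod name prefix, DNS domain, replica count, server port, election port.
list_servers() {
  name="$1"; domain="$2"; replicas="$3"; sport="$4"; eport="$5"
  i=1
  while [ "$i" -le "$replicas" ]; do
    echo "server.$i=$name-$((i - 1)).$domain:$sport:$eport"
    i=$((i + 1))
  done
}
```

For example, `derive_myid zookeeper-0` prints `1`, and `list_servers zookeeper zookeeper-headless.default.svc.cluster.local 3 2222 2223` prints the three `server.N` lines that end up in zoo.cfg for this deployment.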

1.2.5)zookeeper.yaml

#Deploy a headless Service for communication between ZooKeeper nodes
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-headless
  labels:
    app: zookeeper
spec:
  clusterIP: None
  publishNotReadyAddresses: true
  ports:
  - name: client
    port: 2181
    targetPort: client
  - name: server
    port: 2222
    targetPort: server
  - name: leader-election
    port: 2223
    targetPort: leader-election
  selector:
    app: zookeeper
---
#Deploy a Service for external access to ZooKeeper
apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  labels:
    app: zookeeper
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    targetPort: 2181
    nodePort: 32181
    protocol: TCP
  selector:
    app: zookeeper
---
#PodDisruptionBudget: keep a minimum number of Pods running
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zookeeper
  minAvailable: 2
---
#Configure StatefulSet
apiVersion: apps/v1beta2
kind: StatefulSet
metadata:
  name: zookeeper
spec:
  podManagementPolicy: OrderedReady
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: zookeeper
  serviceName: zookeeper-headless
  template:
    metadata:
      annotations:
      labels:
        app: zookeeper
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - zookeeper
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: zookeeper
        imagePullPolicy: Always
        image: 192.168.8.183/library/zookeeper-zyxf:3.4.12
        resources:
          requests:
            memory: "512Mi"
            cpu: "500m"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2222
          name: server
        - containerPort: 2223
          name: leader-election
        env:
        - name: ZK_REPLICAS
          value: "3"
        - name: ZK_DATA_DIR
          value: "/app/zookeeper/data"
        - name: ZK_DATA_LOG_DIR
          value: "/app/zookeeper/dataLog"
        - name: ZK_TICK_TIME
          value: "3000"
        - name: ZK_INIT_LIMIT
          value: "10"
        - name: ZK_SYNC_LIMIT
          value: "5"
        - name: ZK_MAX_CLIENT_CNXNS
          value: "100"
        - name: ZK_CLIENT_PORT
          value: "2181"
        - name: ZK_SERVER_PORT
          value: "2222"
        - name: ZK_ELECTION_PORT
          value: "2223"
        command:
        - sh
        - -c
        - /app/zookeeper/bin/zkGenConfig.sh && /app/zookeeper/bin/zkServer.sh start-foreground
        volumeMounts:
        - name: datadir
          mountPath: /app/zookeeper/dataLog
      volumes:
      - name: datadir
        hostPath:
          path: /zk
          type: DirectoryOrCreate
#        volumeMounts:
#        - name: data
#          mountPath: /renzhiyuan/zookeeper
#  volumeClaimTemplates:
#  - metadata:
#      name: data
#    spec:
#      accessModes: [ "ReadWriteOnce" ]
#      storageClassName: local-storage
#      resources:
#        requests:
#          storage: 3Gi

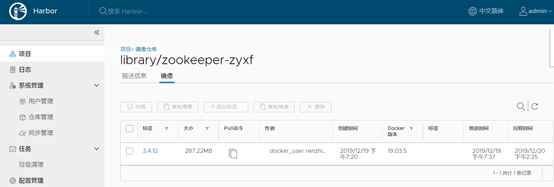
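The `minAvailable: 2` value in the PodDisruptionBudget lines up with ZooKeeper's majority rule: an ensemble keeps serving only while more than half of its members are up. A small sketch of that arithmetic follows; the helper names `quorum_size` and `max_failures` are hypothetical and exist only for this illustration.

```shell
# Sketch only: quorum_size and max_failures are hypothetical helper names.

# Smallest majority of an ensemble with N voting members.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can be down while a majority remains.
max_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}
```

With 3 replicas, `quorum_size 3` prints `2` and `max_failures 3` prints `1`, so a budget of `minAvailable: 2` permits at most one voluntary disruption at a time. If the ensemble is later grown, the budget probably needs to be revisited with the same arithmetic.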

1.3) zookeeper image build and upload

1.3.1) zookeeper image packaging

docker build -t zookeeper:3.4.12 -f zookeeper.Dockerfile .

docker tag zookeeper:3.4.12 192.168.8.183/library/zookeeper-zyxf:3.4.12

1.3.2) Upload the zookeeper image to the Harbor repository

docker login 192.168.8.183 -u admin -p renzhiyuan

docker push 192.168.8.183/library/zookeeper-zyxf:3.4.12

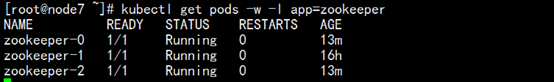

1.4) zookeeper deployment

1.4.1) zookeeper deployment

kubectl apply -f zookeeper.yaml

1.4.2) zookeeper Deployment Process Check

[root@node7 ~]# kubectl describe pods zookeeper-

zookeeper-0 zookeeper-1 zookeeper-2

kubectl get pods -w -l app=zookeeper

1.5) zookeeper cluster validation

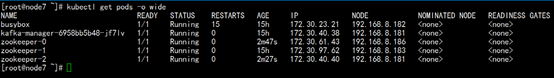

1.5.1) Check Pods distribution in zookeeper StatefulSet

[root@node7 ~]# kubectl get pods -o wide

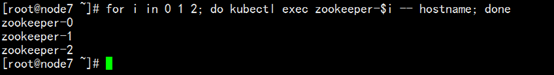

1.5.2) Check the Pods host name in zookeeper StatefulSet

for i in 0 1 2; do kubectl exec zookeeper-$i -- hostname ; done

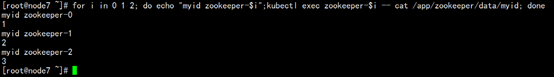

1.5.3) Check myid identity in zookeeper StatefulSet

for i in 0 1 2; do echo "myid zookeeper-$i";kubectl exec zookeeper-$i -- cat /app/zookeeper/data/myid; done

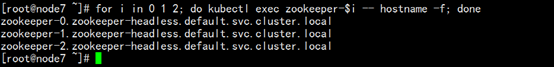

1.5.4) Check the FQDN (fully qualified domain name) in the zookeeper StatefulSet

for i in 0 1 2; do kubectl exec zookeeper-$i -- hostname -f; done

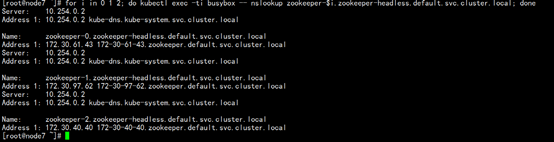

1.5.5) Check dns resolution in zookeeper StatefulSet pods

for i in 0 1 2; do kubectl exec -ti busybox -- nslookup zookeeper-$i.zookeeper-headless.default.svc.cluster.local; done

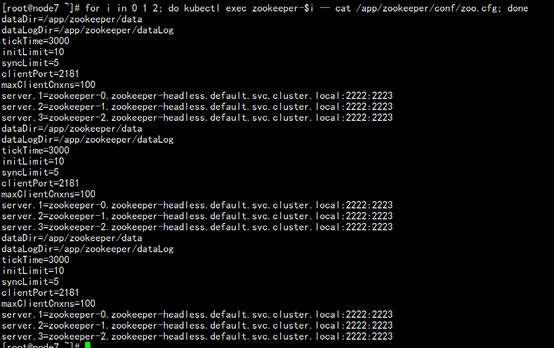
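The names resolved above follow the pattern pod.service.namespace.svc.cluster.local. A client that wants to list every ensemble member explicitly, instead of going through the `zookeeper` Service, could assemble a connection string from that pattern. The sketch below is one way to do it; `zk_connect_string` is a hypothetical helper, and the `cluster.local` suffix is taken from the nslookup command above and may differ in other clusters.

```shell
# Sketch only: zk_connect_string is a hypothetical helper name.
# Arguments: StatefulSet name, headless Service name, namespace,
#            replica count, client port.
zk_connect_string() {
  sts="$1"; svc="$2"; ns="$3"; replicas="$4"; port="$5"
  out=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    # Append a comma only when something is already in the list.
    out="${out:+$out,}$sts-$i.$svc.$ns.svc.cluster.local:$port"
    i=$((i + 1))
  done
  echo "$out"
}
```

Calling `zk_connect_string zookeeper zookeeper-headless default 3 2181` yields a comma-separated list of the three per-pod addresses, which is the format ZooKeeper clients accept for a connection string.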

1.5.6) Check that zoo.cfg is standardized across the zookeeper StatefulSet

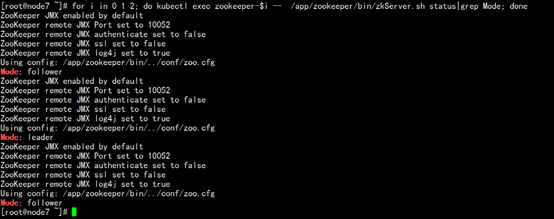

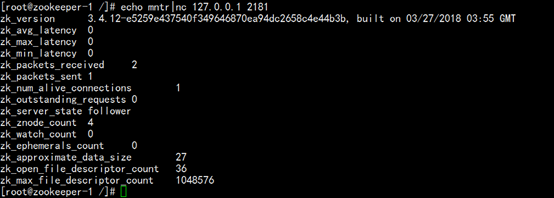

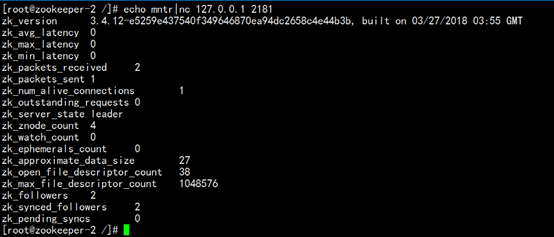

1.5.7) Check zookeeper StatefulSet cluster status

for i in 0 1 2; do kubectl exec zookeeper-$i -- /app/zookeeper/bin/zkServer.sh status|grep Mode; done

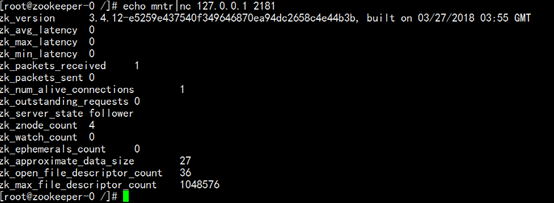
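A healthy three-node ensemble should report exactly one leader, with the remaining nodes as followers. Instead of reading the output by eye, the `Mode:` lines can be piped into a small check. This is only a sketch: `check_modes` is a hypothetical helper, and it assumes the lines look like `Mode: leader` and `Mode: follower`, as printed by `zkServer.sh status`.

```shell
# Sketch only: check_modes is a hypothetical helper name.
# Reads "Mode: ..." lines on stdin, prints a summary, and succeeds
# only when exactly one leader was seen.
check_modes() {
  leaders=0; followers=0
  while IFS= read -r line; do
    case "$line" in
      "Mode: leader")   leaders=$((leaders + 1)) ;;
      "Mode: follower") followers=$((followers + 1)) ;;
    esac
  done
  echo "leaders=$leaders followers=$followers"
  [ "$leaders" -eq 1 ]
}
```

It could be used by appending `| check_modes` after the `done` of the loop above; a non-zero exit status then signals that the ensemble has no leader or more than one.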

1.5.8) Check the zookeeper StatefulSet with four-letter commands
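One possible way to run this check is to send a four-letter command such as `ruok`, `stat` or `mntr` to the client port from inside each pod, using the `nc` package that the Dockerfile installs. The sketch below assumes the commands are not restricted by ZooKeeper's `4lw.commands.whitelist` setting; `zk_four_letter` is a hypothetical helper, not something from the repository.

```shell
# Sketch only: zk_four_letter is a hypothetical helper name.
# Arguments: four-letter command (for example ruok, stat, mntr), replica count.
zk_four_letter() {
  cmd="$1"; replicas="$2"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "== zookeeper-$i =="
    # Send the command to the local client port inside the pod.
    kubectl exec "zookeeper-$i" -- sh -c "echo $cmd | nc localhost 2181"
    echo                       # some replies (e.g. imok) lack a trailing newline
    i=$((i + 1))
  done
}
```

For example, `zk_four_letter ruok 3` should print `imok` for each running server.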

1.6) zookeeper Cluster Expansion

2. kafka cluster deployment

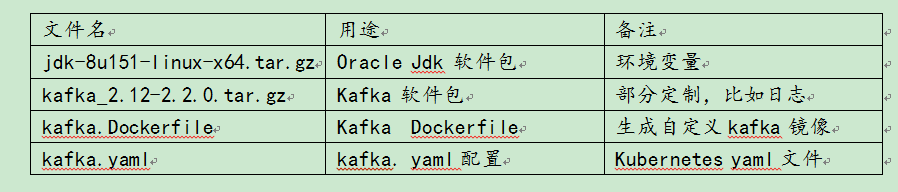

2.1) List of kafka files

2.2) Detailed kafka file list

2.2.1) oracle jdk package

jdk-8u151-linux-x64.tar.gz

The base image is centos6.6; the JDK is unpacked into the /app directory, and environment variables are configured in the Dockerfile.

2.2.2) kafka package

kafka_2.12-2.2.0.tar.gz

The base image is centos6.6; Kafka is unpacked into the /app directory.

2.2.3)kafka Dockerfile

#Set the base image
FROM centos:6.6
#Author's information
MAINTAINER docker_user (renzhiyuan@docker.com)
#Standardized versions of kafka and jdk
ENV JAVA_VERSION="1.8.0_151"
ENV KAFKA_VERSION="2.2.0"
ENV KAFKA_JDK_HOME=/app
ENV JAVA_HOME=/app/jdk1.8.0_151
ENV KAFKA_HOME=/app/kafka
ENV LANG=en_US.utf8
#Install basic utility packages
#RUN yum makecache
RUN yum install lsof yum-utils lrzsz net-tools nc -y &>/dev/null
#Create installation directory
RUN mkdir $KAFKA_JDK_HOME
#Permissions and variables
RUN chown -R root.root $KAFKA_JDK_HOME && chmod -R 755 $KAFKA_JDK_HOME
#Install and configure the JDK
ADD jdk-8u151-linux-x64.tar.gz /app
RUN echo "export JAVA_HOME=/app/jdk1.8.0_151" >>/etc/profile
RUN echo "export PATH=\$JAVA_HOME/bin:\$PATH" >>/etc/profile
RUN echo "export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar" >>/etc/profile
#Install and configure Kafka and create its directory
ADD kafka_2.12-2.2.0.tar.gz /app
RUN ln -s /app/kafka_2.12-2.2.0 /app/kafka
#Configuration file, log rotation and jvm standardization are configured separately in kafkaGenConfig.sh
#Open ports
EXPOSE 9092 9999

2.2.4)kafka.yaml

#Deploy a headless Service for communication between Kafka brokers
apiVersion: v1
kind: Service
metadata:
  name: kafka-headless
  labels:
    app: kafka
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: kafka
    port: 9092
    targetPort: kafka
  selector:
    app: kafka
---
#Deploy a Service for external access to Kafka
apiVersion: v1
kind: Service
metadata:
  name: kafka
  labels:
    app: kafka
spec:
  type: NodePort
  ports:
  - name: kafka
    port: 9092
    targetPort: 9092
    nodePort: 32192
    protocol: TCP
  selector:
    app: kafka
---
#PodDisruptionBudget: keep a minimum number of Pods running
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: kafka-pdb
spec:
  selector:
    matchLabels:
      app: kafka
  minAvailable: 2
---
#Configure StatefulSet
apiVersion: apps/v1beta2
kind: StatefulSet
metadata:
  name: kafka
spec:
  podManagementPolicy: OrderedReady
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: kafka
  serviceName: kafka-headless
  template:
    metadata:
      annotations:
      labels:
        app: kafka
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - kafka
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kafka
        imagePullPolicy: Always
        image: 192.168.8.183/library/kafka-zyxf:2.2.0
        resources:
          requests:
            memory: "500Mi"
            cpu: "256m"
        ports:
        - containerPort: 9092
          name: kafka
        env:
        - name: KAFKA_HEAP_OPTS
          value: "-Xmx256M -Xms256M"
        command:
        - sh
        - -c
        - "/app/kafka/bin/kafka-server-start.sh /app/kafka/config/server.properties \
          --override broker.id=${HOSTNAME##*-} \
          --override zookeeper.connect=zookeeper:2181 \
          --override listeners=PLAINTEXT://:9092 \
          --override advertised.listeners=PLAINTEXT://:9092 \
          --override broker.id.generation.enable=false \
          --override auto.create.topics.enable=false \
          --override min.insync.replicas=2 \
          --override log.dir= \
          --override log.dirs=/app/kafka/kafka-logs \
          --override offsets.retention.minutes=10080 \
          --override default.replication.factor=3 \
          --override queued.max.requests=2000 \
          --override num.network.threads=8 \
          --override num.io.threads=16 \
          --override socket.send.buffer.bytes=1048576 \
          --override socket.receive.buffer.bytes=1048576 \
          --override num.replica.fetchers=4 \
          --override replica.fetch.max.bytes=5242880 \
          --override replica.socket.receive.buffer.bytes=1048576"
        volumeMounts:
        - name: datadir
          mountPath: /app/kafka/kafka-logs
      volumes:
      - name: datadir
        hostPath:
          path: /kafka
          type: DirectoryOrCreate
#        emptyDir: {}
#        volumeMounts:
#        - name: data
#          mountPath: /renzhiyuan/kafka
#  volumeClaimTemplates:
#  - metadata:
#      name: data
#    spec:
#      accessModes: [ "ReadWriteOnce" ]
#      storageClassName: local-storage
#      resources:
#        requests:
#          storage: 3Gi
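The `broker.id=${HOSTNAME##*-}` override in the StatefulSet command relies on shell parameter expansion: `##*-` removes the longest prefix that ends in `-`, which leaves the pod ordinal. Because Kubernetes itself only substitutes the `$(VAR)` form, the `${...}` expression is left for `sh -c` to expand inside the container. A minimal sketch of the same expansion, wrapped in a hypothetical function `broker_id_from_hostname` for illustration:

```shell
# Sketch only: broker_id_from_hostname is a hypothetical helper name.
# Prints the trailing ordinal of a StatefulSet pod hostname.
broker_id_from_hostname() {
  host="$1"
  echo "${host##*-}"     # drop the longest prefix ending in "-"
}
```

`broker_id_from_hostname kafka-0` prints `0` and `broker_id_from_hostname kafka-5` prints `5`, so each broker gets a stable id that survives pod restarts and matches the pod name.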

2.3) kafka image build and upload

2.3.1) kafka image packaging

docker build -t kafka:2.2.0 -f kafka.Dockerfile .

docker tag kafka:2.2.0 192.168.8.183/library/kafka-zyxf:2.2.0

2.3.2) Upload the kafka image to the Harbor repository

docker login 192.168.8.183 -u admin -p renzhiyuan

docker push 192.168.8.183/library/kafka-zyxf:2.2.0

2.4) kafka deployment

2.4.1) kafka deployment

kubectl apply -f kafka.yaml

2.4.2) kafka Deployment Process Check

[root@node7 ~]# kubectl describe pods kafka-

kafka-0 kafka-1 kafka-2

[root@node7 ~]#

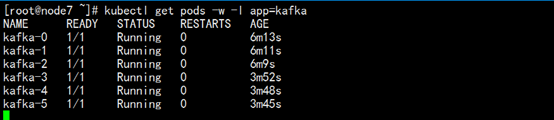

kubectl get pods -w -l app=kafka

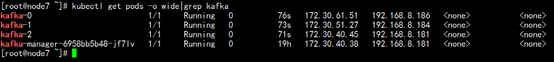

2.5) kafka cluster validation

2.5.1) Examine Pods distribution in Kafka StatefulSet

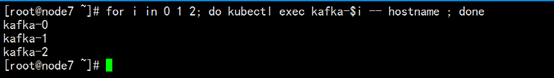

2.5.2) Check the Pods host name in the kafka StatefulSet

for i in 0 1 2; do kubectl exec kafka-$i -- hostname ; done

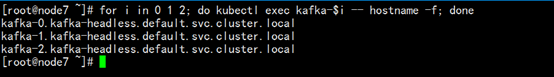

2.5.3) Check the FQDN (fully qualified domain name) in the kafka StatefulSet

for i in 0 1 2; do kubectl exec kafka-$i -- hostname -f; done

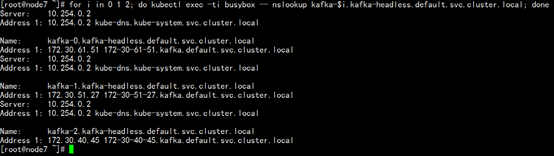

2.5.4) Check dns resolution in Kafka StatefulSet pods

for i in 0 1 2; do kubectl exec -ti busybox -- nslookup kafka-$i.kafka-headless.default.svc.cluster.local; done

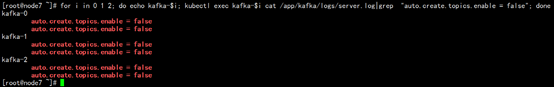

2.5.5) Check that server.properties is standardized across the Kafka StatefulSet

for i in 0 1 2; do echo kafka-$i; kubectl exec kafka-$i -- cat /app/kafka/logs/server.log | grep "auto.create.topics.enable = false"; done

2.5.6) Check kafka StatefulSet cluster validation

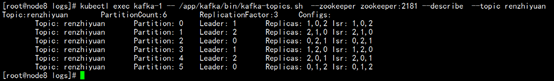

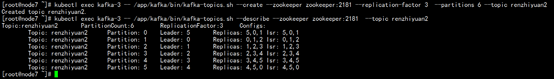

Create a topic

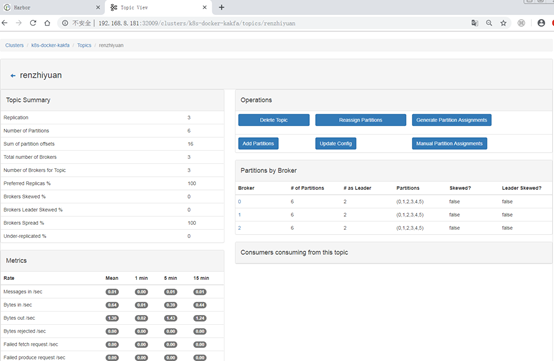

kubectl exec kafka-1 -- /app/kafka/bin/kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 3 --partitions 6 --topic renzhiyuan

Check topic information

kubectl exec kafka-1 -- /app/kafka/bin/kafka-topics.sh --describe --zookeeper zookeeper:2181 --topic renzhiyuan

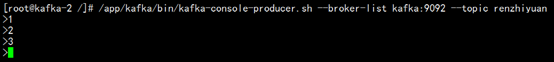

Produce messages

/app/kafka/bin/kafka-console-producer.sh --broker-list kafka:9092 --topic renzhiyuan

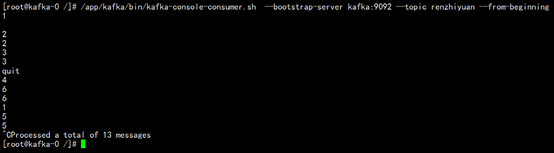

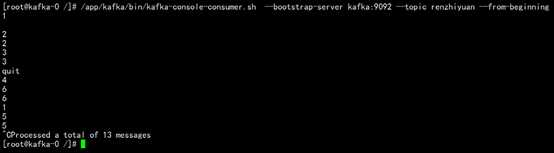

Consume messages

/app/kafka/bin/kafka-console-consumer.sh --bootstrap-server kafka:9092 --from-beginning --topic renzhiyuan

2.6) kafka cluster expansion

2.6.1) kafka expanded to 6 instances

kubectl scale --replicas=6 StatefulSet/kafka

statefulset.apps/kafka scaled

[root@node7 ~]#

2.6.2) kafka Expansion Process Check

[root@node7 ~]# kubectl describe pods kafka-

kafka-0 kafka-2 kafka-4

kafka-1 kafka-3 kafka-5

[root@node7 ~]#

kubectl get pods -w -l app=kafka

Create a topic

kubectl exec kafka-3 -- /app/kafka/bin/kafka-topics.sh --create --zookeeper zookeeper:2181 --replication-factor 3 --partitions 6 --topic renzhiyuan2

Check topic information

kubectl exec kafka-3 -- /app/kafka/bin/kafka-topics.sh --describe --zookeeper zookeeper:2181 --topic renzhiyuan2

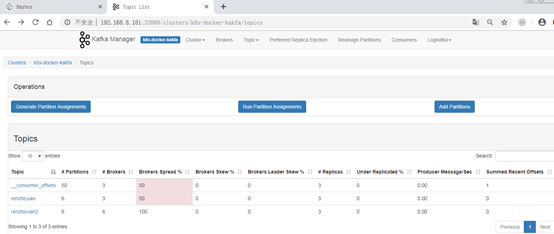

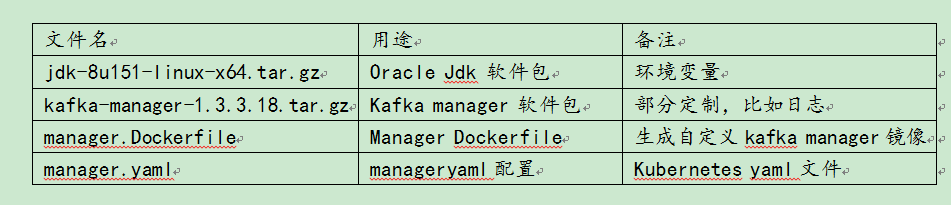

3. Kafka manager deployment

3.1) List of kafka manager files

3.2) Detailed kafka manager file list

3.3) kafka manager image build and upload

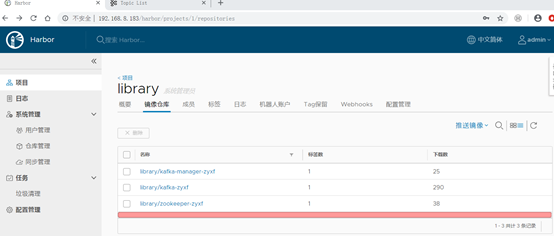

3.3.1) kafka manager image packaging

docker build -t kafka-manager:1.3.3.18 -f manager.Dockerfile .

docker tag kafka-manager:1.3.3.18 192.168.8.183/library/kafka-manager-zyxf:1.3.3.18

3.3.2) Upload the kafka manager image to the Harbor repository

docker login 192.168.8.183 -u admin -p renzhiyuan

docker push 192.168.8.183/library/kafka-manager-zyxf:1.3.3.18

3.4) kafka manager deployment

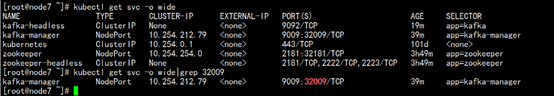

[root@node7 ~]# kubectl apply -f manager.yaml

3.5) kafka manager validation

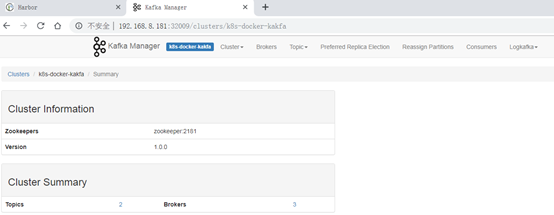

http://192.168.8.181:32009/

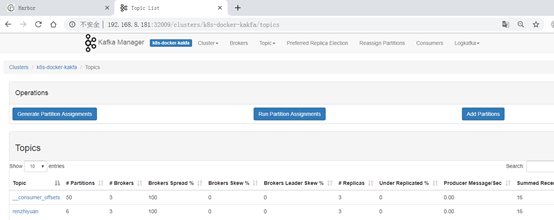

3.5.1) Pre-expansion validation

3.5.2) Post-expansion validation