Building a ZooKeeper cluster environment with Docker

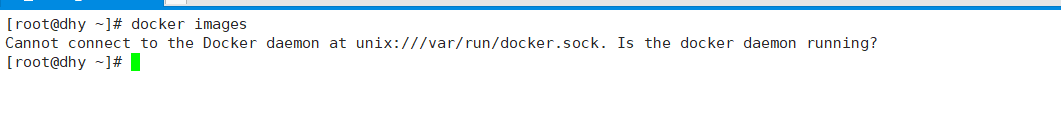

Start the Docker service

The error above indicates that the Docker daemon is not running, so the Docker service must be started manually:

```shell
service docker start
```

Starting a container afterwards may report the following error:

```
[root@localhost ~]# docker start 722cb567ad8b
Error response from daemon: driver failed programming external connectivity on endpoint mall-user-service (f83187d7e06975dbfb8d83d45a6907bf8e575be0aedee8aed9ea694cc90e5b97): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 8184 -j DNAT --to-destination 172.18.6.2:8184 ! -i docker0: iptables: No chain/target/match by that name. (exit status 1))
Error: failed to start containers: 722cb567ad8b
```

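In this case, simply restart Docker (systemctl restart docker). This error usually appears when firewalld was started or reloaded after Docker and cleared the DOCKER chain that Docker had created in the iptables nat table; restarting Docker recreates the chain.

On CentOS 7, also stop the firewall (systemctl stop firewalld).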

It is recommended to enable Docker so that it starts automatically at boot:

```shell
systemctl enable docker   # Start Docker automatically at boot
systemctl start docker    # Start Docker
systemctl restart docker  # Restart Docker
```

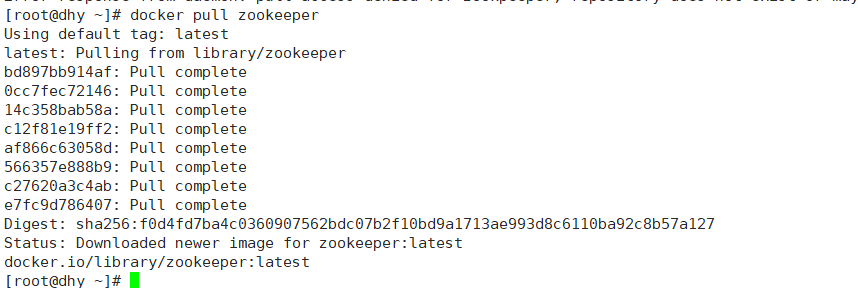

Pull the official ZooKeeper image from Docker Hub (the central repository), using an Alibaba Cloud image accelerator to speed up the download:

```shell
docker pull zookeeper
```
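The accelerator configuration itself is not shown here. One common way to set it up is a registry-mirrors entry in Docker's /etc/docker/daemon.json; the minimal sketch below writes such a file. The mirror URL is a placeholder (the real accelerator address is specific to your Alibaba Cloud account), and the function name write_daemon_json is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: point the Docker daemon at a registry mirror (e.g. an Alibaba Cloud accelerator).
# MIRROR_URL is a placeholder: replace it with the address from your own Alibaba Cloud console.
MIRROR_URL="${MIRROR_URL:-https://<your-id>.mirror.aliyuncs.com}"
DAEMON_JSON="${DAEMON_JSON:-/etc/docker/daemon.json}"

# write_daemon_json (hypothetical helper): write a daemon.json containing only the mirror.
# Caution: this overwrites an existing daemon.json; merge by hand if you already have one.
write_daemon_json() {
  mkdir -p "$(dirname "$DAEMON_JSON")"
  cat > "$DAEMON_JSON" <<EOF
{
  "registry-mirrors": ["$MIRROR_URL"]
}
EOF
}
```

As root, run write_daemon_json, then systemctl daemon-reload and systemctl restart docker; docker info should then list the address under "Registry Mirrors".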

Test-start ZooKeeper

Start ZooKeeper in the background:

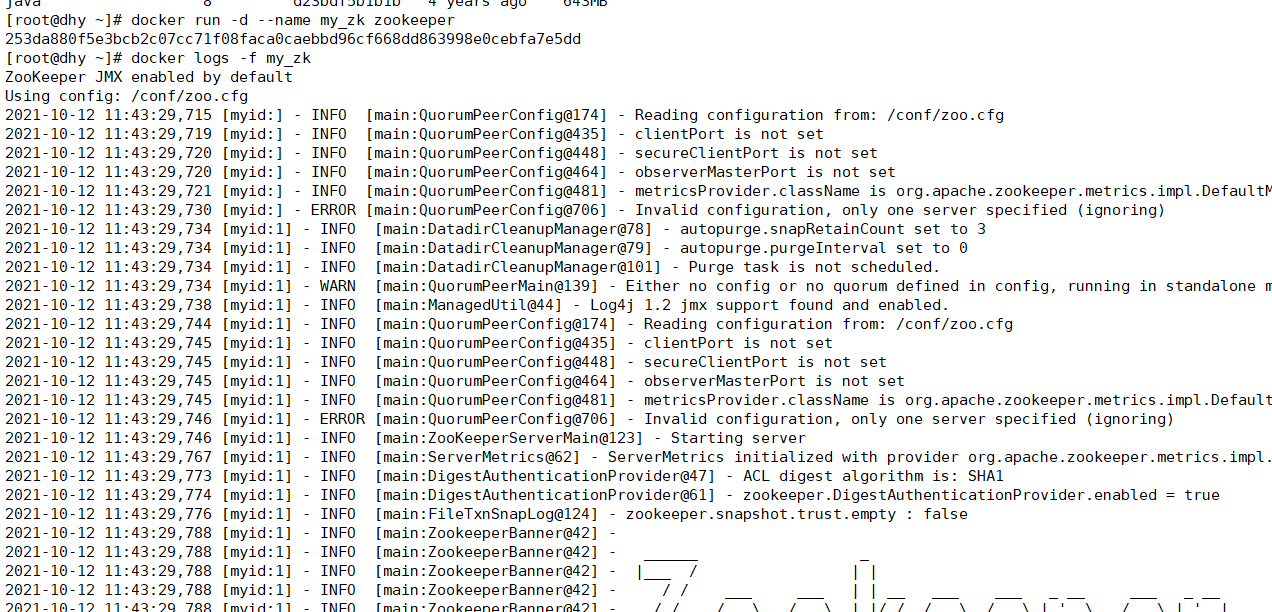

```shell
docker run -d --name my_zk zookeeper
```

Check the log output to make sure ZooKeeper started successfully:

```shell
docker logs -f my_zk
```
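Instead of watching the log by eye, a script can poll until the server answers. The sketch below defines a generic retry helper (a hypothetical name, not part of Docker or ZooKeeper). It assumes that zkServer.sh status exits with a non-zero status while the server cannot be contacted yet.

```shell
#!/usr/bin/env bash
# retry MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND up to MAX_TRIES times, sleeping DELAY_SECONDS between attempts.
# Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local max_tries=$1 delay=$2 attempt=1
  shift 2
  while ! "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "retry: '$*' still failing after $max_tries attempts" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}
```

For example, retry 30 1 docker exec my_zk zkServer.sh status waits up to about 30 seconds for the standalone server to report its mode.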

Start a ZK client and test the connection to the ZK server

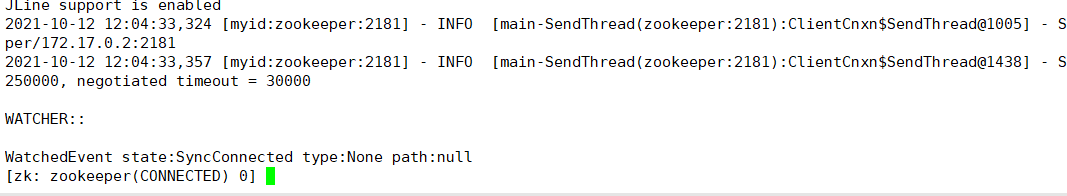

Use the ZK command-line client to connect to ZK. Because the ZK container we just started does not publish its port on the host, we cannot access it directly from the host. However, we can reach the ZK container through Docker's link mechanism. Run the following command:

```shell
docker run -it --rm --link my_zk:zookeeper zookeeper zkCli.sh -server zookeeper
```

This starts a new container from the zookeeper image and runs zkCli.sh inside it with the argument -server zookeeper.

--rm: remove the container automatically when it stops running

--link my_zk:zookeeper: link the container named my_zk (the one started earlier) into the new client container under the alias zookeeper, so that inside the client container the server container's IP address is mapped to the hostname zookeeper

After running this command, we can use the ZK command-line client to operate the ZK service as usual.
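--link is a legacy Docker feature. A user-defined bridge network gives the same name-based access, because containers on such a network resolve each other by container name. The sketch below assumes a network called zk-net (a hypothetical name) and reuses the my_zk container name; start_zk, zk_cli and the DRY_RUN switch (which only prints the commands) are illustrative helpers, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: reach the standalone ZooKeeper container over a user-defined network
# instead of --link. ZK_NET is a hypothetical network name.
ZK_NET="${ZK_NET:-zk-net}"
ZK_NAME="${ZK_NAME:-my_zk}"

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# start_zk: create the network and start the server container on it.
start_zk() {
  run docker network create "$ZK_NET"
  run docker run -d --name "$ZK_NAME" --network "$ZK_NET" zookeeper
}

# zk_cli: start a throwaway client container on the same network. Extra arguments
# are passed to zkCli.sh as a single command; without them the shell is interactive.
zk_cli() {
  run docker run -it --rm --network "$ZK_NET" zookeeper zkCli.sh -server "$ZK_NAME:2181" "$@"
}
```

After start_zk (with the earlier my_zk container removed first, since the name would clash), zk_cli opens the interactive client, while zk_cli ls / runs a single command and exits.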

Building the ZK cluster

1. Create a docker-compose.yml file

```yaml
version: '3' # Compose file format version (fixed)

services:
  zoo1:
    image: zookeeper # Image to use
    restart: always # Restart the container automatically if it goes down
    hostname: zoo1 # Hostname inside the container (can be omitted)
    container_name: zoo1 # Container name
    # With privileged, root in the container has real root privileges: the container can
    # see many devices on the host, run mount, and even start Docker containers inside itself.
    privileged: true
    ports: # Host port:container port mapping
      - "2181:2181"
    volumes: # Host directories mounted into the zookeeper container
      - /opt/zookeeper-cluster/zookeeper01/data:/data # Data
      - /opt/zookeeper-cluster/zookeeper01/datalog:/datalog # Transaction log
      - /opt/zookeeper-cluster/zookeeper01/conf:/conf # Configuration files
    environment:
      # Differences between ZooKeeper 3.4 and 3.5 when building a cluster in Docker.
      # zoo1 is both the container name and the hostname, so the nodes communicate
      # over the containers' internal network.
      # (1) In ZooKeeper 3.5, ";2181" is appended after the address and ports of
      #     each entry in ZOO_SERVERS.
      # (2) In ZOO_SERVERS, the node's own address is written as 0.0.0.0.
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181

  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    container_name: zoo2
    privileged: true
    ports:
      - "2182:2181"
    volumes:
      - /opt/zookeeper-cluster/zookeeper02/data:/data
      - /opt/zookeeper-cluster/zookeeper02/datalog:/datalog
      - /opt/zookeeper-cluster/zookeeper02/conf:/conf
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181

  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    container_name: zoo3
    privileged: true
    ports:
      - "2183:2181"
    volumes:
      - /opt/zookeeper-cluster/zookeeper03/data:/data
      - /opt/zookeeper-cluster/zookeeper03/datalog:/datalog
      - /opt/zookeeper-cluster/zookeeper03/conf:/conf
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
```

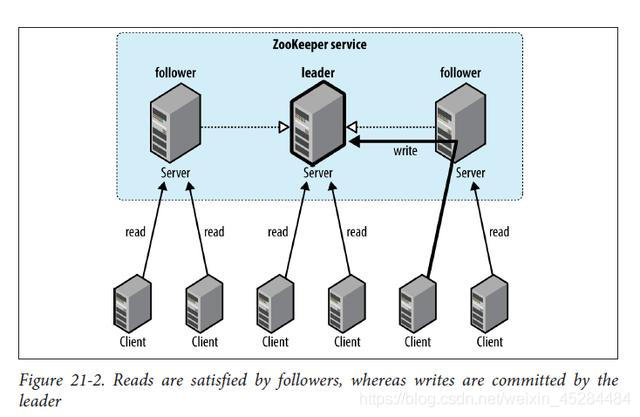

This configuration tells Docker Compose to run three zookeeper containers and bind host ports 2181, 2182 and 2183 to port 2181 of the corresponding container. ZOO_MY_ID and ZOO_SERVERS are the two environment variables that must be set to build a ZK cluster. ZOO_MY_ID is the ID of the ZK server: an integer between 1 and 255 that must be unique within the cluster. ZOO_SERVERS is the list of hosts in the ZK cluster, for example:

server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888
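The three service definitions differ only in the node number, the host port and which entry of ZOO_SERVERS uses 0.0.0.0. The sketch below generates the same file for any number of nodes, keeping the conventions of the file above (0.0.0.0 for the node's own entry, the ZooKeeper 3.5 ";2181" suffix, mount directories under /opt/zookeeper-cluster). The function names zoo_servers and gen_compose are hypothetical.

```shell
#!/usr/bin/env bash
# zoo_servers SELF_ID NODE_COUNT: print the ZOO_SERVERS value for node SELF_ID,
# using 0.0.0.0 for the node itself and zooN hostnames for the others.
zoo_servers() {
  local self=$1 count=$2 i host out=""
  for i in $(seq 1 "$count"); do
    if [ "$i" -eq "$self" ]; then host=0.0.0.0; else host="zoo$i"; fi
    out="$out server.$i=$host:2888:3888;2181"
  done
  echo "${out# }"   # drop the leading space
}

# gen_compose NODE_COUNT: print a docker-compose.yml with NODE_COUNT zookeeper services.
# Host client ports start at 2181 (node 1 -> 2181, node 2 -> 2182, ...).
gen_compose() {
  local count=$1 i dir
  echo "version: '3'"
  echo "services:"
  for i in $(seq 1 "$count"); do
    dir=$(printf '/opt/zookeeper-cluster/zookeeper%02d' "$i")
    cat <<EOF
  zoo$i:
    image: zookeeper
    restart: always
    hostname: zoo$i
    container_name: zoo$i
    privileged: true
    ports:
      - "$((2180 + i)):2181"
    volumes:
      - $dir/data:/data
      - $dir/datalog:/datalog
      - $dir/conf:/conf
    environment:
      ZOO_MY_ID: $i
      ZOO_SERVERS: $(zoo_servers "$i" "$count")
EOF
  done
}
```

For example, gen_compose 3 > docker-compose.yml reproduces the three-node setup. ZooKeeper needs a majority of nodes to be up, so an odd size is the usual choice: 3 nodes tolerate one failure, 5 nodes tolerate two.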

Explanation of the configuration parameters

server.A=B:C:D

A is a number identifying the server.

In cluster mode, each server has a file named myid in its dataDir directory that contains the value of A. When ZooKeeper starts, it reads this file and compares the value with the configuration in zoo.cfg to determine which server it is.

B is the address of the server.

C is the port that Follower servers use to exchange information with the Leader server in the cluster.

D is the port the servers use to communicate with each other during leader election: if the cluster's Leader fails, the remaining servers need this port to elect a new Leader.
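To make the roles of A, B, C and D concrete, the sketch below splits one entry into its parts, including the optional ";clientPort" suffix used by ZooKeeper 3.5 in the compose file above. parse_server and its output labels are hypothetical, and it assumes B is a hostname or IPv4 address (an IPv6 address would contain extra colons).

```shell
#!/usr/bin/env bash
# parse_server ENTRY: split "server.A=B:C:D[;clientPort]" and print its fields.
parse_server() {
  local entry=$1 lhs rhs client=""
  lhs=${entry%%=*}          # "server.A"
  rhs=${entry#*=}           # "B:C:D" or "B:C:D;clientPort"
  case $rhs in
    *\;*) client=${rhs#*\;}; rhs=${rhs%%\;*} ;;
  esac
  # Split "B:C:D" on ':' into address, Follower<->Leader port and election port.
  local IFS=:
  set -- $rhs
  echo "id=${lhs#server.} host=$1 quorum_port=$2 election_port=$3 client_port=${client:-none}"
}
```

For example, parse_server 'server.2=zoo2:2888:3888;2181' prints id=2 host=zoo2 quorum_port=2888 election_port=3888 client_port=2181.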

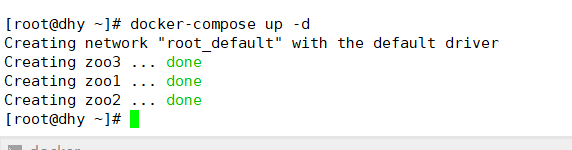

2. Start the cluster from the directory that contains docker-compose.yml:

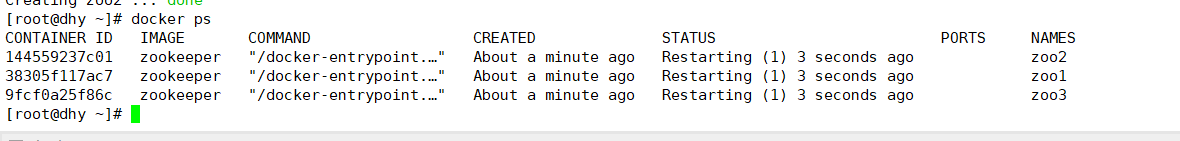
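The start command is only visible in the screenshot; with the classic CLI it is presumably docker-compose up -d (docker compose up -d with Compose V2). Once the containers are up, the sketch below asks each node for its role from the host through the published ports 2181 to 2183, sending ZooKeeper's "srvr" four-letter command over bash's /dev/tcp. It assumes "srvr" is allowed by 4lw.commands.whitelist (it is the command zkServer.sh status relies on); zk_srvr and zk_roles are hypothetical helpers.

```shell
#!/usr/bin/env bash
ZK_HOST="${ZK_HOST:-127.0.0.1}"

# zk_srvr HOST PORT: send "srvr" and print the reply (runs in a subshell so fd 3
# is closed automatically). Fails if nothing is listening on HOST:PORT.
zk_srvr() (
  exec 3<>"/dev/tcp/$1/$2" || exit 1
  printf 'srvr' >&3
  cat <&3   # the server closes the connection after answering
)

# zk_roles PORT...: print "PORT: Mode: <role>" for each port, or "PORT: unreachable".
zk_roles() {
  local port line
  for port in "$@"; do
    line=$(zk_srvr "$ZK_HOST" "$port" 2>/dev/null | grep '^Mode:' || true)
    echo "$port: ${line:-unreachable}"
  done
}
```

In a healthy three-node cluster, zk_roles 2181 2182 2183 should report one leader and two followers.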

3. View the created ZooKeeper cluster

Alternatively, run the following in the directory where the docker-compose.yml file is located:

```shell
docker-compose ps
```

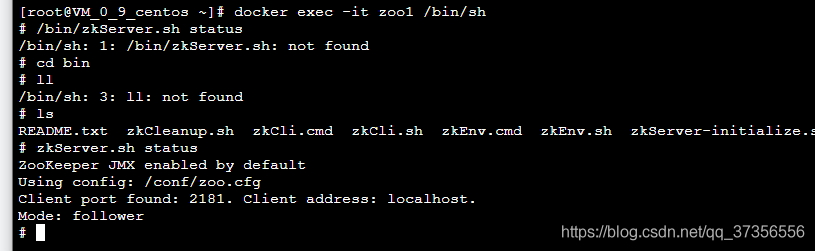
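Because /data of each container is mounted from the host, the myid files described above can be checked directly on the host. This sketch assumes the official image's entrypoint writes ZOO_MY_ID into /data/myid on first start and that the mount layout matches docker-compose.yml; check_myids is a hypothetical helper.

```shell
#!/usr/bin/env bash
# check_myids BASE_DIR NODE_COUNT
# Expects BASE_DIR/zookeeperNN/data/myid to contain NN (as an integer) for every node.
check_myids() {
  local base=$1 count=$2 i dir id status=0
  for i in $(seq 1 "$count"); do
    dir=$(printf '%s/zookeeper%02d/data' "$base" "$i")
    if [ ! -r "$dir/myid" ]; then
      echo "zoo$i: missing $dir/myid" >&2
      status=1
      continue
    fi
    id=$(tr -d '[:space:]' < "$dir/myid")   # ignore the trailing newline
    if [ "$id" = "$i" ]; then
      echo "zoo$i: myid=$id OK"
    else
      echo "zoo$i: myid=$id, expected $i" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, check_myids /opt/zookeeper-cluster 3; the same value can also be read inside a container with docker exec zoo1 cat /data/myid.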

4. Enter a container to view the cluster status

```shell
docker exec -it zoo1 /bin/bash
# Then, inside the container:
cd bin
zkServer.sh status
```
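Checking one node at a time only shows that node's role. The sketch below runs zkServer.sh status in every container without an interactive shell (zkServer.sh is on the PATH in the official image, as the earlier docker run ... zkCli.sh command relies on) and counts leaders. Container names come from docker-compose.yml; zk_mode, count_leaders and cluster_status are hypothetical helpers.

```shell
#!/usr/bin/env bash
# zk_mode: read "zkServer.sh status" output on stdin and print the role after "Mode:".
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# count_leaders: read one role per line on stdin and print how many are "leader".
count_leaders() {
  grep -cx 'leader' || true   # grep -c prints 0 (and exits 1) when nothing matches
}

# cluster_status CONTAINER...: print "<container> <role>" for each container.
cluster_status() {
  local name mode
  for name in "$@"; do
    mode=$(docker exec "$name" zkServer.sh status 2>&1 | zk_mode)
    echo "$name ${mode:-unknown}"
  done
}
```

For example, cluster_status zoo1 zoo2 zoo3 | awk '{print $2}' | count_leaders should print 1 for a healthy cluster: exactly one leader, the others followers.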