Preface

The infinite-loop problem that HashMap exhibits under concurrent use exists in JDK 1.7 and earlier. JDK 1.8 fixed it by introducing loHead and loTail during resizing, so that entries keep their relative order when they are moved. Even with that fix, HashMap is still not thread-safe, so whenever concurrency is involved it is generally recommended to use ConcurrentHashMap instead of HashMap.

In JDK 1.7 and earlier, this loop problem can drive a server's CPU usage to 100% under concurrent load. To understand why, let's look at how the thread-unsafe HashMap ends up in an infinite loop under high concurrency. The investigation has to start from the HashMap source code, because only then can the behavior be understood at its root. One thing to know before the analysis: in JDK 1.7 and earlier, HashMap uses an array + linked list data structure, while JDK 1.8 uses array + linked list + red-black tree to further reduce the lookup cost caused by hash collisions.
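For contrast with the JDK 1.7 code analyzed below, here is a minimal sketch of the loHead/loTail idea: entries are appended at the tail of a "lo" list or a "hi" list, so their relative order is preserved. The class, `Node`, `split`, and `render` are illustrative names assumed for this sketch, not the JDK's own code.

```java
// Sketch of an order-preserving bucket split, modelled on the loHead/loTail idea.
// All names here are hypothetical; this is not the JDK source.
class OrderPreservingSplit {
    static final class Node {
        final int hash;
        final String key;
        Node next;
        Node(int hash, String key, Node next) {
            this.hash = hash;
            this.key = key;
            this.next = next;
        }
    }

    /** Splits one bucket into a "lo" list (index 0 of the result) and a "hi" list (index 1). */
    static Node[] split(Node head, int oldCap) {
        Node loHead = null, loTail = null;
        Node hiHead = null, hiTail = null;
        Node e = head;
        while (e != null) {
            Node next = e.next;
            if ((e.hash & oldCap) == 0) {
                // Entry keeps its bucket index: append at the tail of the lo list
                if (loTail == null) loHead = e; else loTail.next = e;
                loTail = e;
            } else {
                // Entry moves to index + oldCap: append at the tail of the hi list
                if (hiTail == null) hiHead = e; else hiTail.next = e;
                hiTail = e;
            }
            e = next;
        }
        if (loTail != null) loTail.next = null;
        if (hiTail != null) hiTail.next = null;
        return new Node[] { loHead, hiHead };
    }

    /** Renders a list as "a -> b -> c". */
    static String render(Node head) {
        StringBuilder sb = new StringBuilder();
        for (Node e = head; e != null; e = e.next) {
            if (sb.length() > 0) sb.append(" -> ");
            sb.append(e.key);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Illustrative hashes 1, 5, 9, 13 all fall into bucket 1 of a 4-slot table
        Node d = new Node(13, "d", null);
        Node c = new Node(9, "c", d);
        Node b = new Node(5, "b", c);
        Node a = new Node(1, "a", b);
        Node[] halves = split(a, 4);
        System.out.println("lo: " + render(halves[0])); // lo: a -> c
        System.out.println("hi: " + render(halves[1])); // hi: b -> d
    }
}
```

Because every entry is appended at the tail, the lists are never reversed, which is the property the head-insertion transfer in JDK 1.7 lacks.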

Main text

First, inserting an element into a HashMap calls the put method:

    public V put(K key, V value) {
        if (table == EMPTY_TABLE) {
            inflateTable(threshold); // Allocate array space
        }
        if (key == null)
            return putForNullKey(value);
        int hash = hash(key); // Further mix the key's hashCode so the hash is evenly distributed
        int i = indexFor(hash, table.length); // Compute the actual bucket index in the table
        for (Entry<K,V> e = table[i]; e != null; e = e.next) {...}
        modCount++; // Counts structural modifications so that iterators can fail fast under concurrent modification
        // Focus on addEntry, the method that adds the element
        addEntry(hash, key, value, i);
        return null;
    }
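To make the bucket-index step concrete, here is a small sketch of the bit-mask computation behind indexFor, assuming the table length is a power of two. The hash values are made up for illustration and are not the result of a real hash(key) call.

```java
// Sketch of the bucket-index computation; valid only for power-of-two table lengths.
class IndexForSketch {
    static int indexFor(int h, int length) {
        // Keep only the low bits of the hash, equivalent to h % length for powers of two
        return h & (length - 1);
    }

    public static void main(String[] args) {
        int[] hashes = {3, 19, 35}; // illustrative hash values
        for (int h : hashes) {
            System.out.println("hash " + h
                    + ": bucket " + indexFor(h, 16) + " of 16"
                    + ", bucket " + indexFor(h, 32) + " of 32");
        }
    }
}
```

All three hashes share bucket 3 in a 16-slot table. After doubling to 32 slots, 19 moves to bucket 19 while 3 and 35 still collide in bucket 3, which is why a resize has to walk every bucket and relink its entries.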

Next, let's look at the addEntry method. The resize() method it calls is today's protagonist:

    void addEntry(int hash, K key, V value, int bucketIndex) {
        if ((size >= threshold) && (null != table[bucketIndex])) {
            // Resize when size has reached the threshold and the target bucket is already occupied
            // (a hash collision is about to occur); the new capacity is twice the old capacity
            resize(2 * table.length);
            hash = (null != key) ? hash(key) : 0;
            bucketIndex = indexFor(hash, table.length); // Recompute the bucket index after resizing
        }
        // Put the element into the corresponding bucket of the HashMap
        createEntry(hash, key, value, bucketIndex);
    }
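The resize condition in addEntry can be tried out in isolation. The sketch below assumes a table length of 16 and a load factor of 0.75 purely for illustration; `shouldResize` is a hypothetical helper that restates the `if` condition, not a HashMap method.

```java
// Sketch of the resize trigger used by addEntry; names and values are assumptions.
class ResizeTriggerSketch {
    static boolean shouldResize(int size, int threshold, boolean bucketOccupied) {
        // Both conditions must hold: the map is "full enough" and the target bucket is in use
        return size >= threshold && bucketOccupied;
    }

    public static void main(String[] args) {
        int capacity = 16;        // assumed table length
        float loadFactor = 0.75f; // assumed load factor
        int threshold = (int) (capacity * loadFactor);
        System.out.println("threshold = " + threshold);
        System.out.println(shouldResize(11, threshold, true));  // below threshold
        System.out.println(shouldResize(12, threshold, false)); // at threshold, empty bucket
        System.out.println(shouldResize(12, threshold, true));  // at threshold, occupied bucket
    }
}
```

Only the last call reports that a resize is needed, which matches the comment in addEntry: the table grows when the threshold is reached and a collision is about to happen.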

Next, let's step into the resize() method and uncover the transfer() method, which is the real culprit behind the infinite loop:

    // Resize the hash table to the new capacity
    void resize(int newCapacity) {
        Entry[] oldTable = table; // Old table
        int oldCapacity = oldTable.length; // Old capacity
        if (oldCapacity == MAXIMUM_CAPACITY) { // The old capacity has already reached the maximum
            threshold = Integer.MAX_VALUE; // Raise the threshold so that no further resize is triggered
            return;
        }
        // Create the new table
        Entry[] newTable = new Entry[newCapacity];
        // Copy the data from the old table into the new table
        transfer(newTable, initHashSeedAsNeeded(newCapacity));
        table = newTable; // Replace the underlying array of the HashMap
        threshold = (int)Math.min(newCapacity * loadFactor, MAXIMUM_CAPACITY + 1); // Update the threshold
    }

Finally, let's take a closer look at the transfer() method

    // Copy the data from the old table into the new table
    void transfer(Entry[] newTable, boolean rehash) {
        int newCapacity = newTable.length; // New capacity
        for (Entry<K,V> e : table) { // Traverse all buckets
            while(null != e) { // Traverse all elements in the bucket (a linked list)
                Entry<K,V> next = e.next;
                if (rehash) { // If rehashing is required, recompute the hash value
                    e.hash = null == e.key ? 0 : hash(e.key);
                }
                int i = indexFor(e.hash, newCapacity); // Locate the bucket in the new table
                e.next = newTable[i]; // Link the element in front of the bucket's current head (head insertion into a singly linked list)
                newTable[i] = e; // newTable[i] always holds the most recently inserted element
                e = next; // Continue with the next element
            }
        }
    }

Once the number of elements reaches the threshold, the HashMap is expanded via the resize() method. During expansion, transfer() is called to move the elements from the old table into the new one, and this is where the infinite loop we are investigating occurs. For each element it moves, transfer() executes the following four lines of code:

    Entry<K,V> next = e.next;
    e.next = newTable[i];
    newTable[i] = e;
    e = next;

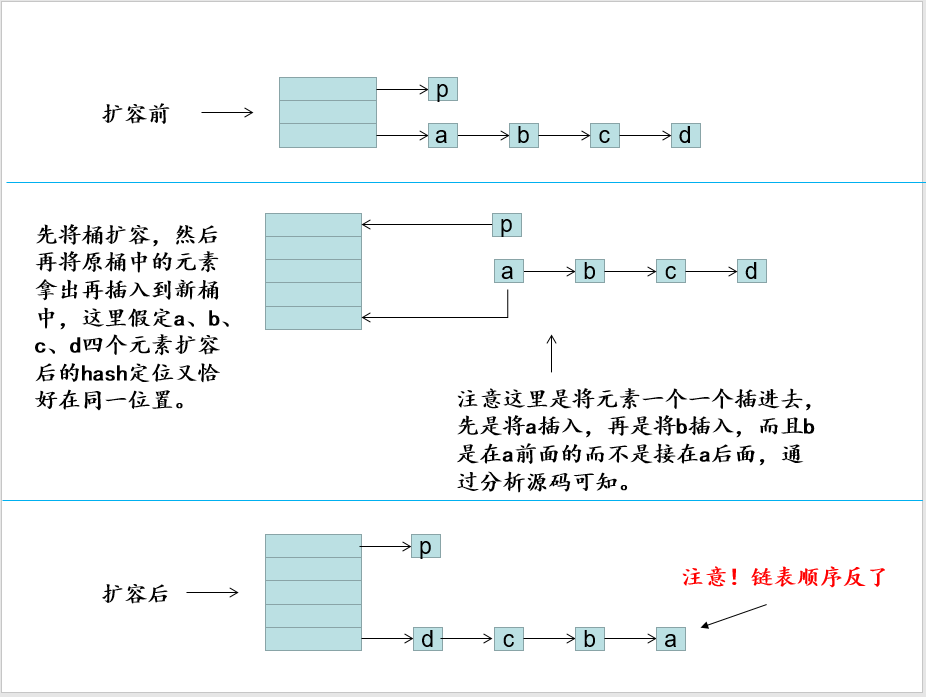

These lines insert each element into the new table. On their own, the four lines look harmless. The diagram below shows the element transfer when there is no thread conflict:

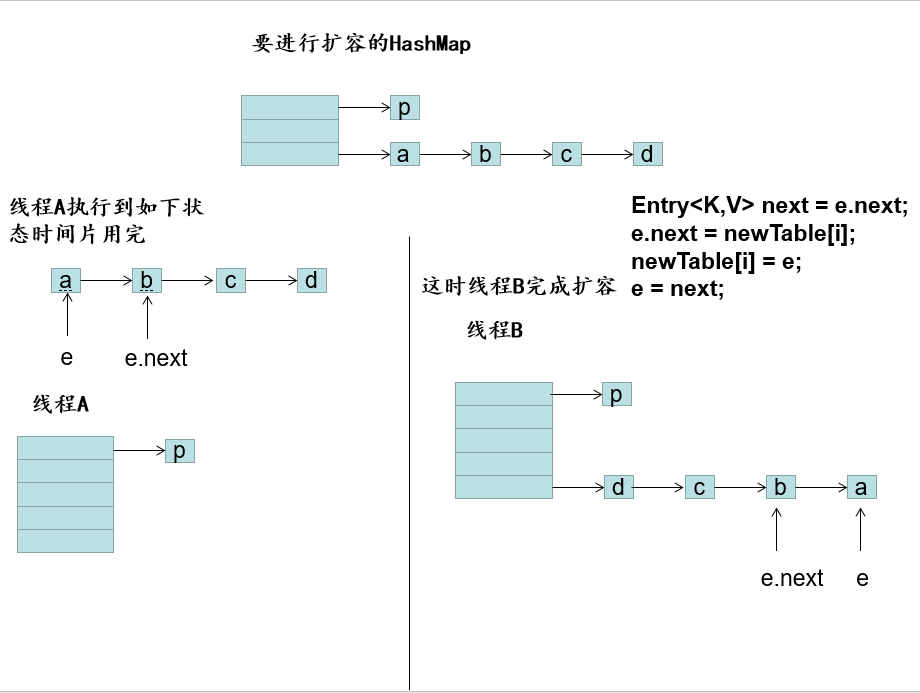
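The conflict-free transfer can also be reproduced with a minimal single-threaded sketch. It assumes a simplified `Entry` with only a key and a next pointer, and it assumes every entry lands in the same new bucket; `transferBucket` and `render` are illustrative helpers, not HashMap methods.

```java
// Single-threaded sketch of the four transfer lines, applied to one bucket.
class HeadInsertTransfer {
    static final class Entry {
        final String key;
        Entry next;
        Entry(String key, Entry next) {
            this.key = key;
            this.next = next;
        }
    }

    /** Moves every entry of one old bucket into newTable[i] using head insertion. */
    static void transferBucket(Entry head, Entry[] newTable, int i) {
        Entry e = head;
        while (e != null) {
            Entry next = e.next;   // remember the rest of the old list
            e.next = newTable[i];  // link e in front of the current head
            newTable[i] = e;       // e becomes the new head
            e = next;              // continue with the next old entry
        }
    }

    /** Renders a list as "a -> b -> c". */
    static String render(Entry head) {
        StringBuilder sb = new StringBuilder();
        for (Entry e = head; e != null; e = e.next) {
            if (sb.length() > 0) sb.append(" -> ");
            sb.append(e.key);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Entry c = new Entry("c", null);
        Entry b = new Entry("b", c);
        Entry a = new Entry("a", b);
        Entry[] newTable = new Entry[1];
        transferBucket(a, newTable, 0);
        System.out.println(render(newTable[0])); // c -> b -> a
    }
}
```

Note that head insertion reverses the order of entries that stay in the same bucket. That is harmless for a single thread, but it is the ingredient that makes the concurrent case dangerous.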

Now suppose threads A and B run this code at the same time. Thread A executes the following line:

    Entry<K,V> next = e.next;

and then its time slice ends. Thread B takes over and completes the entire transfer of elements. After that, thread A gets a time slice again and continues executing from where it stopped.

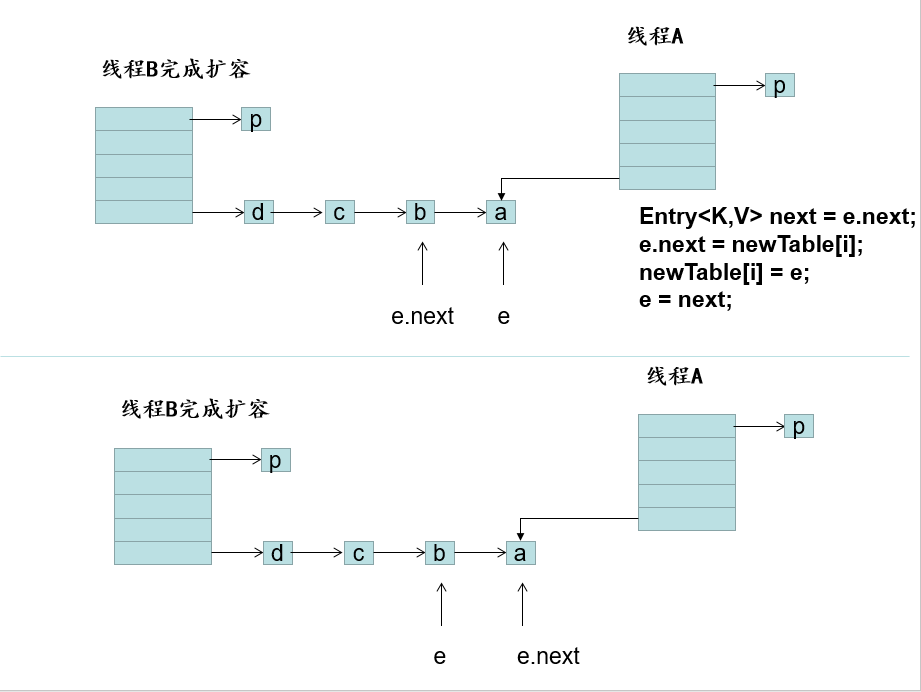

The state after thread A continues is shown in the figure. When e = a, the following statement is executed:

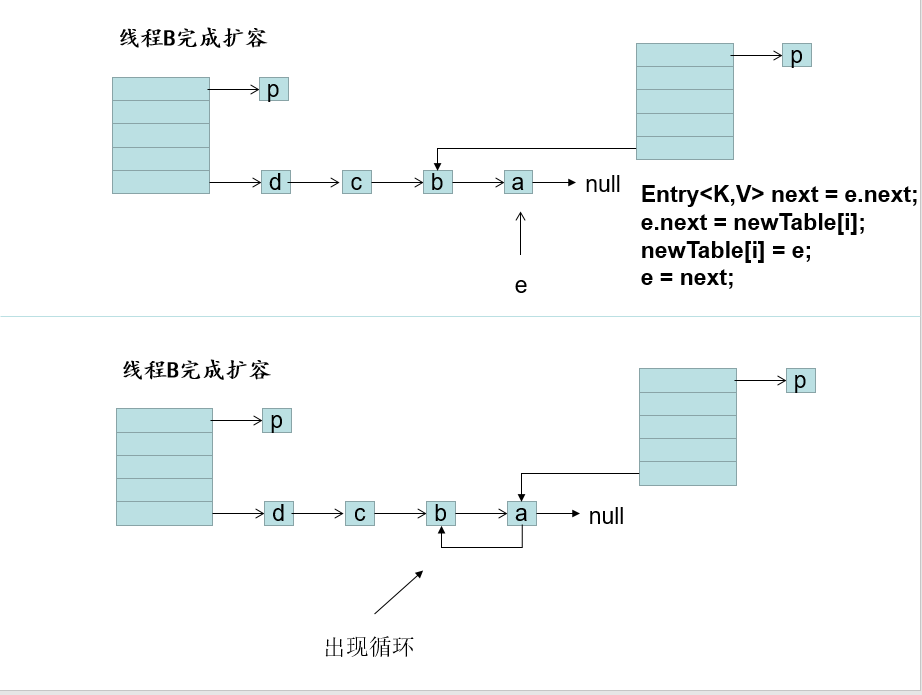

    e.next = newTable[i]; // a now points to b, creating a loop

Thread B's head insertion had already left b pointing to a, so once a points back to b the two entries form a cycle. After a cycle exists in the linked list, any get() call that lands on that bucket and does not find its key will traverse the cycle forever and never exit, which drives CPU usage to 100%!
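The interleaving described above can be replayed deterministically without real threads. The following is a sketch under stated assumptions: a bucket holding a -> b, both entries mapping to the same new bucket, and each "thread" working on its own new table. `Entry`, `simulate`, and `hasCycle` are hypothetical names for this illustration.

```java
// Deterministic replay of the A/B interleaving; no real threads are involved.
class TransferRaceSimulation {
    static final class Entry {
        final String key;
        Entry next;
        Entry(String key, Entry next) {
            this.key = key;
            this.next = next;
        }
    }

    /** The four transfer lines, applied to one bucket. */
    static void transferBucket(Entry head, Entry[] newTable, int i) {
        Entry e = head;
        while (e != null) {
            Entry next = e.next;
            e.next = newTable[i];
            newTable[i] = e;
            e = next;
        }
    }

    /** Floyd's cycle detection, so the check itself cannot loop forever. */
    static boolean hasCycle(Entry head) {
        Entry slow = head, fast = head;
        while (fast != null && fast.next != null) {
            slow = slow.next;
            fast = fast.next.next;
            if (slow == fast) return true;
        }
        return false;
    }

    /** Replays the interleaving and returns thread A's new table. */
    static Entry[] simulate() {
        Entry b = new Entry("b", null);
        Entry a = new Entry("a", b);       // old bucket: a -> b
        Entry[] tableA = new Entry[1];
        Entry[] tableB = new Entry[1];

        // Thread A executes only the first line, then loses its time slice
        Entry e = a;
        Entry next = e.next;               // next == b

        // Thread B completes the whole transfer: the shared entries are now b -> a
        transferBucket(a, tableB, 0);

        // Thread A resumes with its stale e and next
        while (true) {
            e.next = tableA[0];
            tableA[0] = e;
            e = next;
            if (e == null) break;
            next = e.next;                 // reads the links that thread B rewrote
        }
        return tableA;
    }

    public static void main(String[] args) {
        Entry[] tableA = simulate();
        System.out.println("head = " + tableA[0].key);
        System.out.println("cycle detected: " + hasCycle(tableA[0])); // cycle detected: true
    }
}
```

In this replay thread A's loop terminates, but it leaves a.next == b and b.next == a behind. Any later traversal of that bucket that does not find its key never reaches a null terminator.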

Summary

In multithreaded code, avoid HashMap. Use a thread-safe map instead, such as ConcurrentHashMap, Hashtable, or Collections.synchronizedMap(), to avoid thread-safety problems.
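As a minimal sketch of that recommendation, the example below has several threads write disjoint keys into a ConcurrentHashMap. The thread count, key ranges, timeout, and the `fill` helper are assumptions chosen for the illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Sketch: concurrent writers sharing one ConcurrentHashMap.
class ConcurrentPutDemo {
    /** Starts the given number of writer threads and returns the final map size. */
    static int fill(int threads, int perThread) {
        Map<Integer, Integer> map = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread; // each writer gets its own key range
            pool.execute(() -> {
                for (int k = 0; k < perThread; k++) {
                    map.put(base + k, k);
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("writers did not finish in time");
            }
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for writers", ex);
        }
        return map.size();
    }

    public static void main(String[] args) {
        System.out.println(fill(4, 10_000)); // 40000
    }
}
```

Swapping in `Collections.synchronizedMap(new HashMap<>())` would also be safe here, at the cost of serializing every call on a single lock.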
