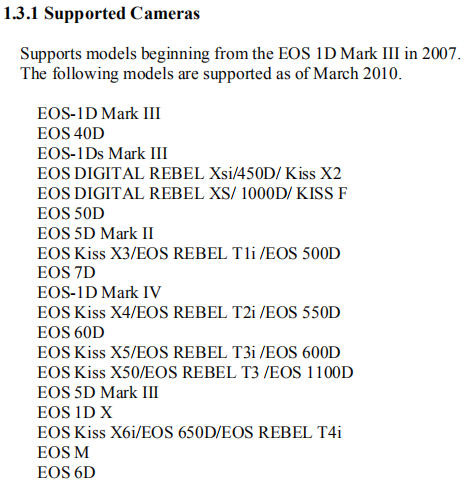

Canon SLRs are the most common choice on the market. I have not checked whether other manufacturers also provide an SDK for their SLRs.

Commonly used Canon models are the EOS 500D, 550D, 600D, 650D and 750D, all of which are supported by EDSDK.

As of early 2019, you have to apply for Canon's official EDSDK on Canon's website, and it is not available in China.

I am using EDSDK versions 3.5 and 3.6.1.

Opening the cover on the side of the SLR usually reveals two ports: micro USB and mini HDMI. This article mainly uses the former, connecting the SLR to the host with a micro-USB-to-USB cable.

Turn on the SLR and set the mode dial to M.

If the original USB cable is too short, you can add a USB extension cable. Depending on the distance, consider using one with a built-in signal amplifier.

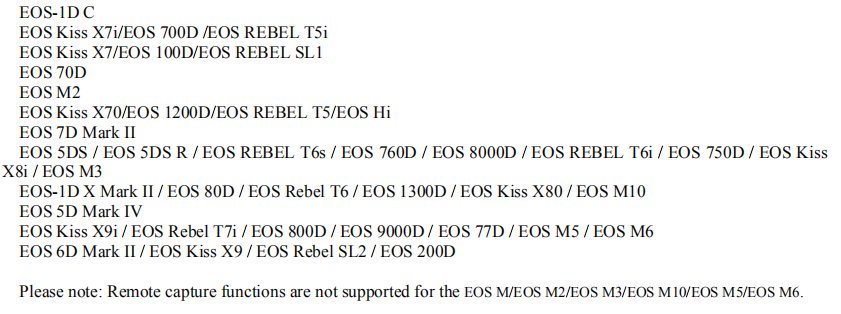

The EDSDK DLLs and C# scripts are imported into the project as follows:

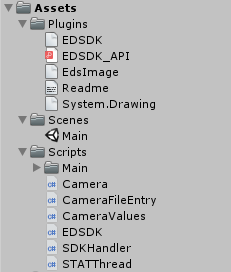

Our main concern is SDKHandler.cs. It defines the variables, events, device connection methods, and camera commands.

STAThread is a thread management script.

1 initialization

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using EDSDKLib;

using UnityEngine.UI;

using EDSDK.NET;

public class Main : MonoBehaviour {

SDKHandler CameraHandler;

EDSDK.NET.Camera myCamera;

#region UI variable

public RawImage moviePlane;

private Texture2D tt;

#endregion

#region device available variables

private string avValues = "";

private string tvValues = "";

private string isoValues = "";

#endregion

// Use this for initialization

void Start () {

//Instantiate SLR control class

CameraHandler = new SDKHandler();

print("EDSDK Initialization successful");

//Find equipment (the code from steps 2 and 3 goes here)

}

}

2 find the device

//Find equipment

List<EDSDK.NET.Camera> ll = CameraHandler.GetCameraList();

foreach (EDSDK.NET.Camera cc in ll)

print(cc.Info.szDeviceDescription);

if (ll.Count == 0)

{

print("No Canon SLR equipment found");

return;

}

myCamera = ll[0];

//Set up equipment

3 setting up the device

//Understand equipment parameters

//The shooting mode is selected with the main mode dial. Setting it from code is pointless; just turn the dial on the camera

//In my tests the dial had to be on M. To get the video stream, it must be on the movie setting

//foreach (int ii in CameraHandler.GetSettingsList(EDSDK.PropID_AEModeSelect))

// print("AEMode = " + ii);

//aperture

foreach (int ii in CameraHandler.GetSettingsList(EDSDKLib.EDSDK.PropID_Av))

avValues += ii + "|";

print("Av = " + avValues);

//shutter

foreach (int ii in CameraHandler.GetSettingsList(EDSDKLib.EDSDK.PropID_Tv))

tvValues += ii + "|";

print("Tv = " + tvValues);

//Sensitivity

foreach (int ii in CameraHandler.GetSettingsList(EDSDKLib.EDSDK.PropID_ISOSpeed))

isoValues += ii + "|";

print("ISO = " + isoValues);

//The following are not important

//foreach (int ii in CameraHandler.GetSettingsList(EDSDK.PropID_MeteringMode))

// print("Metering = " + ii);

//foreach (int ii in CameraHandler.GetSettingsList(EDSDK.PropID_ExposureCompensation))

// print("Exposure = " + ii);

//Set up equipment

CameraHandler.SetSetting(EDSDKLib.EDSDK.PropID_Av, 80);//aperture

CameraHandler.SetSetting(EDSDKLib.EDSDK.PropID_Tv, 61);//shutter speed

CameraHandler.SetSetting(EDSDKLib.EDSDK.PropID_ISOSpeed, 104);//ISO

//There are three Save To options: camera memory card, host computer, or both. Host is selected here because the SLR has no memory card inserted

CameraHandler.SetSetting(EDSDKLib.EDSDK.PropID_SaveTo, (uint)EDSDKLib.EDSDK.EdsSaveTo.Host);//Picture save location

CameraHandler.SetCapacity();//This call is required. I did not understand its description in the API documentation at first and left it out

CameraHandler.ImageSaveDirectory = Application.streamingAssetsPath;//Save the photos to StreamingAssets

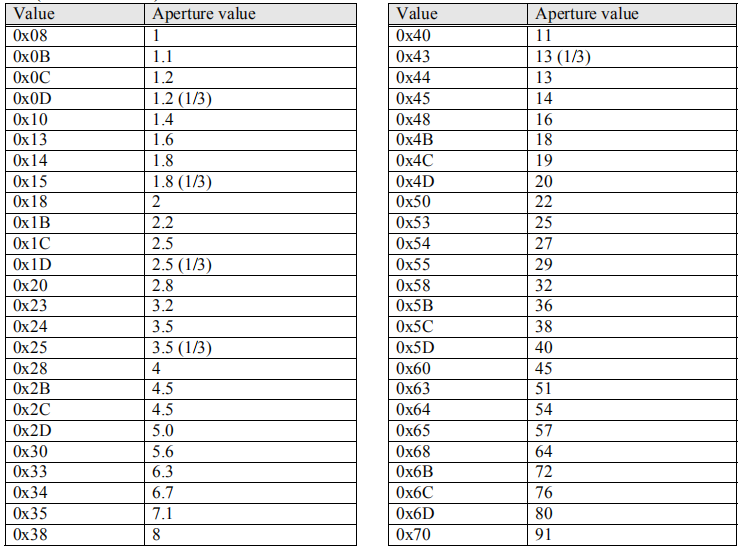

//display output

Table of values:

aperture

shutter
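The numbers passed to SetSetting above (80, 61, 104) are EDSDK property codes printed in decimal, not f-numbers, shutter times, or ISO values. A minimal lookup sketch follows. The class and method names are hypothetical, and the table entries are an assumption recalled from the EDSDK API reference, so check them against the documentation of the version you use. Shutter (Tv) codes are left out because I am less sure of them.

```csharp
using System.Collections.Generic;

// Hypothetical helper, not part of EDSDK: turns property codes into readable labels.
public static class CanonCodes
{
    // Assumed Av codes (full stops only); verify against the EDSDK API reference.
    private static readonly Dictionary<uint, string> Av = new Dictionary<uint, string>
    {
        { 0x20, "f/2.8" }, { 0x28, "f/4" }, { 0x30, "f/5.6" }, { 0x38, "f/8" },
        { 0x40, "f/11" }, { 0x48, "f/16" }, { 0x50, "f/22" }
    };

    // Assumed ISO codes (full stops only); verify against the EDSDK API reference.
    private static readonly Dictionary<uint, string> Iso = new Dictionary<uint, string>
    {
        { 0x48, "ISO 100" }, { 0x50, "ISO 200" }, { 0x58, "ISO 400" },
        { 0x60, "ISO 800" }, { 0x68, "ISO 1600" }, { 0x70, "ISO 3200" }
    };

    public static string DescribeAv(uint code) { return Describe(Av, code); }

    public static string DescribeIso(uint code) { return Describe(Iso, code); }

    // Returns the label for a known code, or the code in hex when it is not in the table.
    private static string Describe(Dictionary<uint, string> table, uint code)
    {
        string label;
        if (table.TryGetValue(code, out label)) return label;
        return "unknown (0x" + code.ToString("X2") + ")";
    }
}
```

If these tables are right, 80 (0x50) corresponds to f/22 and 104 (0x68) to ISO 1600. Printing the values returned by GetSettingsList through such a helper makes the console output easier to read than raw integers.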

4 display of preset real-time video stream

This step simply prepares a RawImage component to display the stream.

tt = new Texture2D(1920, 1080,TextureFormat.RGB24,true);

moviePlane.texture = tt;

5 control and output

private bool isLiveOn = false;

private byte[] tempBytes;

// Update is called once per frame

void Update () {

if (Input.GetKeyDown(KeyCode.S))

{

//Take a photo

CameraHandler.TakePhoto();

}

if (Input.GetKeyDown(KeyCode.D))

{

//Start live view

CameraHandler.StartLiveView();

isLiveOn = true;

}

if (Input.GetKeyDown(KeyCode.F))

{

//Stop live view

CameraHandler.StopLiveView();

isLiveOn = false;

}

if (isLiveOn)

{

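//While live view is on, load and display the latest frame on every update. This is where the pitfalls are.

tempBytes = CameraHandler.GetImageByte();

//GetImageByte() returns null until the first frame has arrived

if (tempBytes != null) tt.LoadImage(tempBytes);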

}

}

6 API transformation of video stream

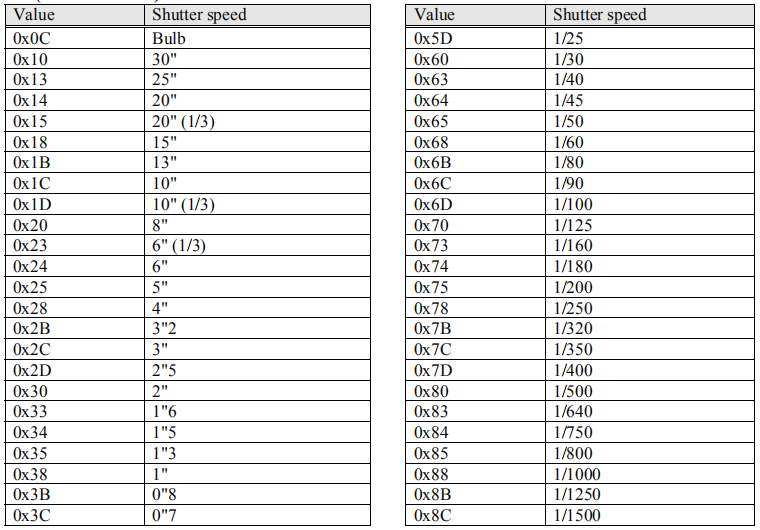

The C# scripts provided with Canon's EDSDK are not written with Unity in mind; they target C# on the .NET Framework rather than Unity's C#. The most prominent problem is that Unity's C# has no Bitmap class, so how do you convert a Bitmap into a Texture?

Some changes need to be made in SDKHandler.cs:

1 new functions and variables

public byte[] TextureBytes;//JPEG bytes of the latest frame, read from outside this class

public byte[] GetImageByte()

{

if (TextureBytes != null && TextureBytes.Length != 0)

return TextureBytes;

return null;

}

public Bitmap TextureBitmap;//intermediate Bitmap used during the conversion

private MemoryStream ms;//key variable in the conversion

2 find the DownloadEvf function and modify its body as follows

//run live view

while (IsLiveViewOn)

{

//download current live view image

err = EDSDKLib.EDSDK.EdsCreateEvfImageRef(stream, out EvfImageRef);

if (err == EDSDKLib.EDSDK.EDS_ERR_OK) err = EDSDKLib.EDSDK.EdsDownloadEvfImage(MainCamera.Ref, EvfImageRef);

if (err == EDSDKLib.EDSDK.EDS_ERR_OBJECT_NOTREADY) { Thread.Sleep(4); continue; }

else Error = err;

/*

lock (STAThread.ExecLock)

{

//download current live view image

err = EDSDKLib.EDSDK.EdsCreateEvfImageRef(stream, out EvfImageRef);

if (err == EDSDKLib.EDSDK.EDS_ERR_OK) err = EDSDKLib.EDSDK.EdsDownloadEvfImage(MainCamera.Ref, EvfImageRef);

if (err == EDSDKLib.EDSDK.EDS_ERR_OBJECT_NOTREADY) { Thread.Sleep(4); continue; }

else Error = err;

}

*/

//get pointer

Error = EDSDKLib.EDSDK.EdsGetPointer(stream, out jpgPointer);

Error = EDSDKLib.EDSDK.EdsGetLength(stream, out length);

//get some live view image metadata

if (!IsCoordSystemSet) { Evf_CoordinateSystem = GetEvfCoord(EvfImageRef); IsCoordSystemSet = true; }

Evf_ZoomRect = GetEvfZoomRect(EvfImageRef);

Evf_ZoomPosition = GetEvfPoints(EDSDKLib.EDSDK.PropID_Evf_ZoomPosition, EvfImageRef);

Evf_ImagePosition = GetEvfPoints(EDSDKLib.EDSDK.PropID_Evf_ImagePosition, EvfImageRef);

//release current evf image

if (EvfImageRef != IntPtr.Zero) { Error = EDSDKLib.EDSDK.EdsRelease(EvfImageRef); }

//create stream to image

unsafe { ums = new UnmanagedMemoryStream((byte*)jpgPointer.ToPointer(), (long)length, (long)length, FileAccess.Read); }

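//Decode the UnmanagedMemoryStream (ums) into a Bitmap

TextureBitmap = new Bitmap(ums, true);

ms = new MemoryStream();

//Re-encode the Bitmap as JPEG into the MemoryStream using System.Drawing

TextureBitmap.Save(ms, System.Drawing.Imaging.ImageFormat.Jpeg);

//Copy out only the bytes actually written; GetBuffer() would return the whole internal buffer, including unused capacity at the end

TextureBytes = ms.ToArray();

//Release the per-frame objects so they do not pile up while live view is running

ms.Dispose();

TextureBitmap.Dispose();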

//fire the LiveViewUpdated event with the live view image stream

if (LiveViewUpdated != null) LiveViewUpdated(ums);

ums.Close();

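}

The part to pay attention to is the conversion after "create stream to image": UnmanagedMemoryStream to Bitmap, Bitmap to MemoryStream, MemoryStream to TextureBytes.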
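The live view data behind jpgPointer appears to be JPEG already (hence the variable name), and Texture2D.LoadImage accepts JPEG bytes, so the Bitmap round trip may not be needed at all. The following is a minimal sketch of copying the bytes straight out of unmanaged memory. EvfFrameCopy and CopyFrame are hypothetical names, and I have not verified this against a camera.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical helper, not part of EDSDK or SDKHandler.cs.
public static class EvfFrameCopy
{
    // Copies `length` bytes starting at `source` into a new managed array.
    // Returns null when there is nothing valid to copy.
    public static byte[] CopyFrame(IntPtr source, long length)
    {
        if (source == IntPtr.Zero || length <= 0 || length > int.MaxValue) return null;

        byte[] managed = new byte[length];
        Marshal.Copy(source, managed, 0, (int)length);
        return managed;
    }
}
```

Inside DownloadEvf this would be called as `TextureBytes = EvfFrameCopy.CopyFrame(jpgPointer, (long)length);` right after EdsGetLength, in place of the Bitmap and MemoryStream steps. It skips one JPEG decode and one JPEG encode per frame and removes the dependency on System.Drawing for the Unity side.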

7 close session

void OnApplicationQuit()

{

//CameraHandler.StopLiveView();

CameraHandler.Dispose();

}

With this in place you can take photos, which are saved to the StreamingAssets folder, and you can turn on the live video stream. The stream, however, lags by about 0.5 to 1 second.

For interactive applications where users only need a live preview to see the effect, this delay does not get in the way. If you need a truly real-time image, it is a problem. I have seen SLR photo applications on the market that use the micro USB port directly, show no delay, and are combined with a green screen. I suspect they are not implemented in Unity, and I will keep looking into how to improve this.
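One thing that may be worth trying is to decode a frame only when a new one has actually arrived, instead of calling LoadImage on the same bytes in every Update. The sketch below is a small thread-safe hand-off between the live view thread and the main thread. FrameMailbox is a hypothetical class, and whether it shortens the delay noticeably is untested.

```csharp
using System;

// Hypothetical helper: holds the most recent frame and remembers whether it was already consumed.
public sealed class FrameMailbox
{
    private readonly object gate = new object();
    private byte[] latest;
    private bool hasNew;

    // Called from the live view thread whenever a frame has been downloaded.
    public void Publish(byte[] jpeg)
    {
        if (jpeg == null || jpeg.Length == 0) return;
        lock (gate)
        {
            latest = jpeg;
            hasNew = true;
        }
    }

    // Called from Update(); returns true only once per published frame.
    public bool TryTake(out byte[] jpeg)
    {
        lock (gate)
        {
            bool got = hasNew;
            jpeg = got ? latest : null;
            hasNew = false;
            return got;
        }
    }
}
```

In Update the call would look like `byte[] frame; if (mailbox.TryTake(out frame)) tt.LoadImage(frame);`, with the live view loop calling `mailbox.Publish(...)` instead of writing TextureBytes directly.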

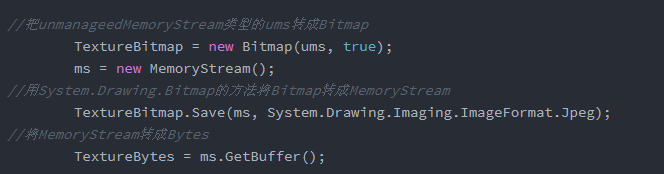

Other solutions

Video capture card

SLR with a mini HDMI port

We can connect the SLR to a capture card through mini HDMI.

The capture card can be an internal PCIe card or an external one.

If it is an internal PCIe capture card, Unity can access it as a "camera" device through WebCamDevice.

If it is an external capture card, connect the USB 3.0 port on the other end of the card to the host. Unity accesses it through WebCamDevice in the same way.

There is no delay with this approach.

The disadvantage is that the mode dial has to be on the movie setting while mini HDMI is connected, and the camera cannot be controlled by the program over micro USB.
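For the capture card route, a minimal Unity sketch could look like the following. It lists the devices Unity reports and plays one of them on a RawImage. CaptureCardView and deviceKeyword are hypothetical names, and the requested resolution and frame rate are assumptions; the capture card decides what it actually delivers.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical example component: shows a capture card feed on a RawImage.
public class CaptureCardView : MonoBehaviour
{
    public RawImage moviePlane;        // RawImage used for display, as in the EDSDK example
    public string deviceKeyword = "";  // assumption: part of the capture card's device name

    private WebCamTexture feed;

    void Start()
    {
        WebCamDevice[] devices = WebCamTexture.devices;
        if (devices.Length == 0)
        {
            Debug.Log("No capture device found");
            return;
        }

        // Default to the first device; prefer one whose name contains the keyword.
        string chosen = devices[0].name;
        foreach (WebCamDevice d in devices)
        {
            Debug.Log("Device: " + d.name);
            if (deviceKeyword != "" && d.name.Contains(deviceKeyword)) chosen = d.name;
        }

        // Requested size and frame rate are hints only.
        feed = new WebCamTexture(chosen, 1920, 1080, 30);
        moviePlane.texture = feed;
        feed.Play();
    }

    void OnDestroy()
    {
        // Release the device when the object goes away.
        if (feed != null) feed.Stop();
    }
}
```

Attach the component to any GameObject and assign the RawImage in the Inspector. No EDSDK code is involved on this path, which is also why the camera settings cannot be changed from the program.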

I spent a long time researching this requirement. If anything here is wrong or could be done better, corrections are welcome.