Using docker configuration to install hadoop and spark

Install hadoop and spark images respectively

Install hadoop image

docker selected Mirror Address , the version of hadoop provided by this image is relatively new, and jdk8 is installed, which can support the installation of the latest version of spark.

docker pull uhopper/hadoop:2.8.1

Install spark image

If the requirements for spark version are not very high, you can pull other people's images directly. If a new version is required, you need to configure the dockerfile.

Environmental preparation

-

Download the sequenceiq/spark image building source code

git clone https://github.com/sequenceiq/docker-spark -

Download Spark 2.3.2 installation package from Spark official website

- Download address: http://spark.apache.org/downloads.html

-

The downloaded files need to be placed in the docker spark directory

-

Check the local image to make sure hadoop is installed

-

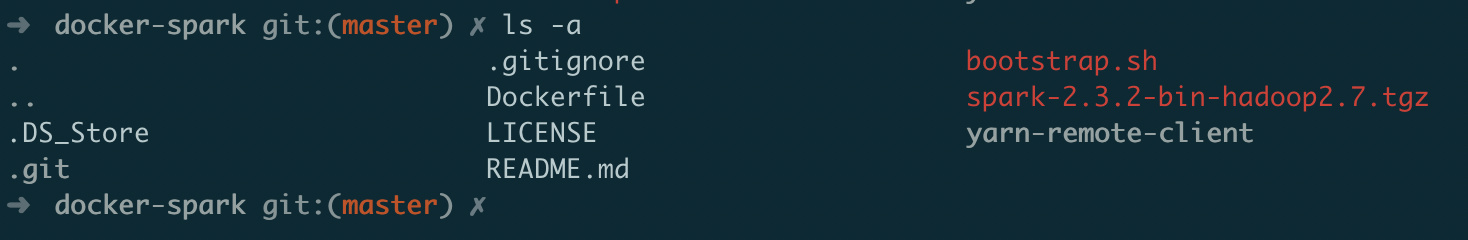

Enter the docker spark directory and confirm that all the files for image building are ready

Modify profile

-

Modify the Dockerfile to the following

-

FROM sequenceiq/hadoop-docker:2.7.0 MAINTAINER scottdyt #support for Hadoop 2.7.0 #RUN curl -s http://d3kbcqa49mib13.cloudfront.net/spark-1.6.1-bin-hadoop2.6.tgz | tar -xz -C /usr/local/ ADD spark-2.3.2-bin-hadoop2.7.tgz /usr/local/ RUN cd /usr/local && ln -s spark-2.3.2-bin-hadoop2.7 spark ENV SPARK_HOME /usr/local/spark RUN mkdir $SPARK_HOME/yarn-remote-client ADD yarn-remote-client $SPARK_HOME/yarn-remote-client RUN $BOOTSTRAP && $HADOOP_PREFIX/bin/hadoop dfsadmin -safemode leave && $HADOOP_PREFIX/bin/hdfs dfs -put $SPARK_HOME-2.3.2-bin-hadoop2.7/jars /spark && $HADOOP_PREFIX/bin/hdfs dfs -put $SPARK_HOME-2.3.2-bin-hadoop2.7/examples/jars /spark ENV YARN_CONF_DIR $HADOOP_PREFIX/etc/hadoop ENV PATH $PATH:$SPARK_HOME/bin:$HADOOP_PREFIX/bin # update boot script COPY bootstrap.sh /etc/bootstrap.sh RUN chown root.root /etc/bootstrap.sh RUN chmod 700 /etc/bootstrap.sh #install R RUN rpm -ivh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm RUN yum -y install R ENTRYPOINT ["/etc/bootstrap.sh"]

-

-

Modify bootstrap.sh to

#!/bin/bash : ${HADOOP_PREFIX:=/usr/local/hadoop} $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh rm /tmp/*.pid # installing libraries if any - (resource urls added comma separated to the ACP system variable) cd $HADOOP_PREFIX/share/hadoop/common ; for cp in ${ACP//,/ }; do echo == $cp; curl -LO $cp ; done; cd - # altering the core-site configuration sed s/HOSTNAME/$HOSTNAME/ /usr/local/hadoop/etc/hadoop/core-site.xml.template > /usr/local/hadoop/etc/hadoop/core-site.xml # setting spark defaults echo spark.yarn.jar hdfs:///spark/* > $SPARK_HOME/conf/spark-defaults.conf cp $SPARK_HOME/conf/metrics.properties.template $SPARK_HOME/conf/metrics.properties service sshd start $HADOOP_PREFIX/sbin/start-dfs.sh $HADOOP_PREFIX/sbin/start-yarn.sh CMD=${1:-"exit 0"} if [[ "$CMD" == "-d" ]]; then service sshd stop /usr/sbin/sshd -D -d else /bin/bash -c "$*" fi

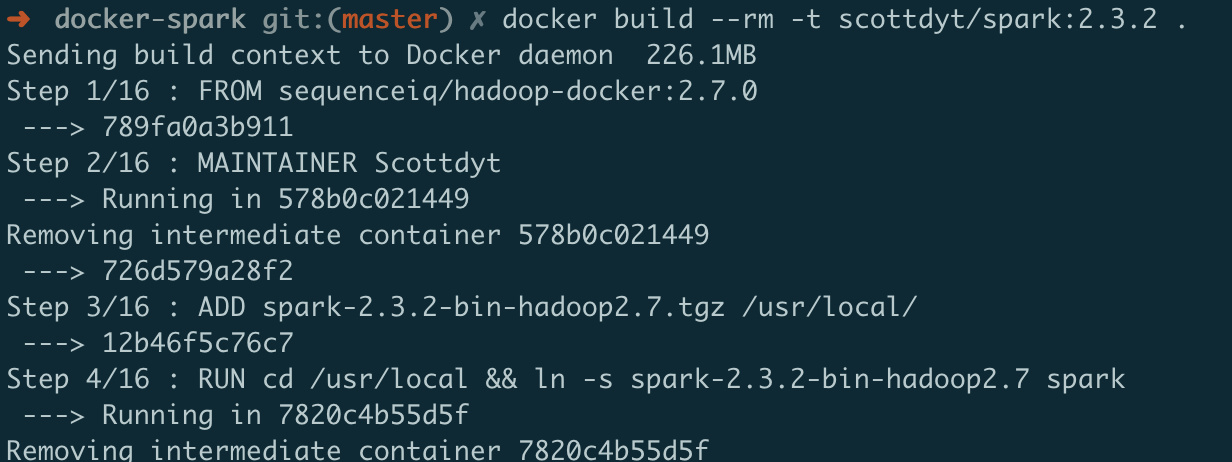

Constructing mirrors

docker build --rm -t scottdyt/spark:2.3.2 .

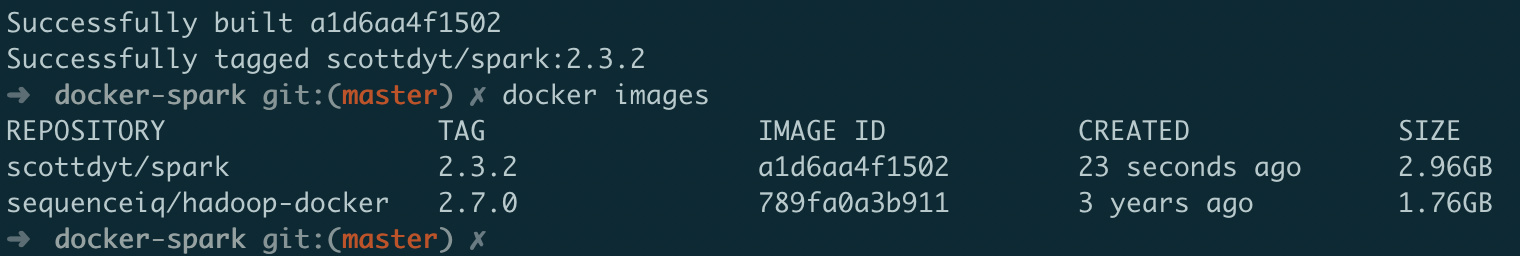

View mirroring

Start a spark 2.3.1 container

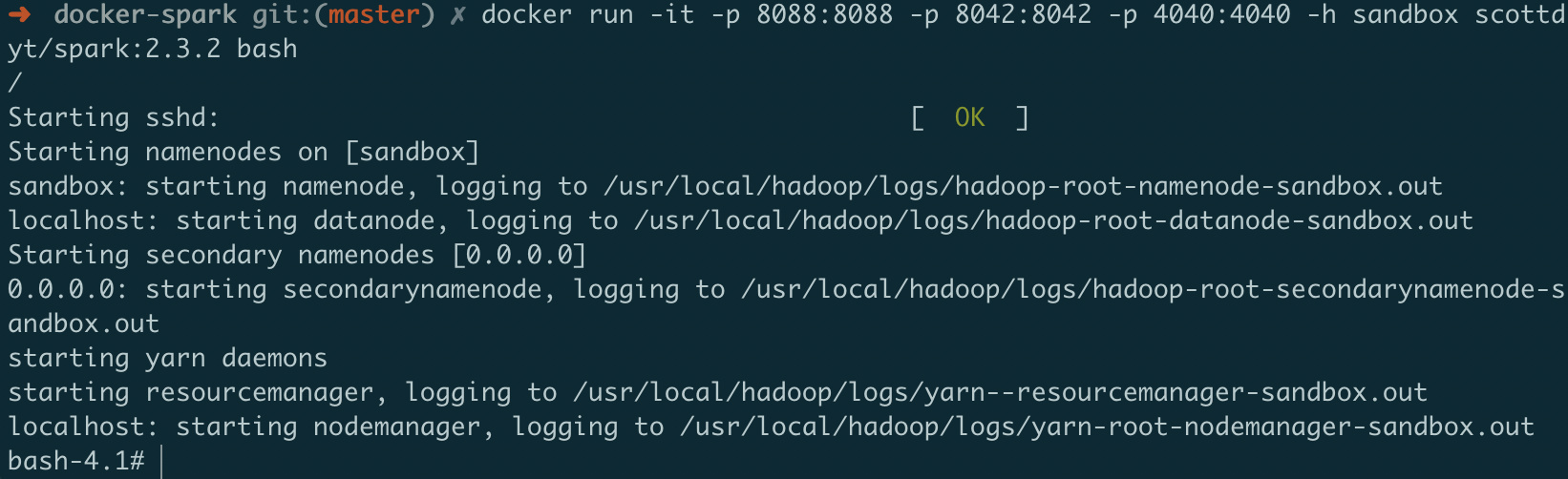

docker run -it -p 8088:8088 -p 8042:8042 -p 4040:4040 -h sandbox scottdyt/spark:2.3.2 bash

Start successful:

Install spark Hadoop image

If you want to be lazy, install the image of spark and hadoop directly. The image address is Here.

Or input directly at the terminal:

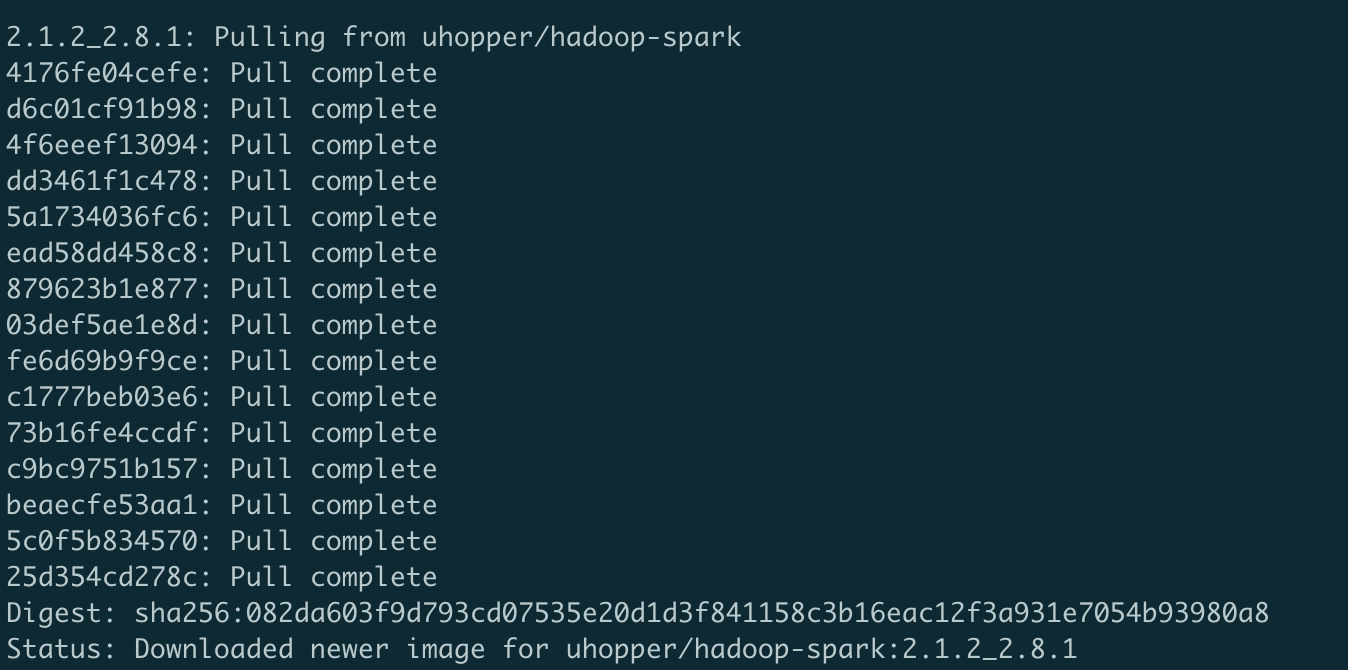

docker pull uhopper/hadoop-spark:2.1.2_2.8.1

Installation complete: