Background:

With the business iteration, some projects are reconstructed with nodejs and deployed to k8s environment. In order to facilitate analysis, the last version of the code increases the function of output log.

Phenomenon:

Half a day after the launch, it was found that there was an alarm of too many open files in the R & D feedback, which affected the user experience.

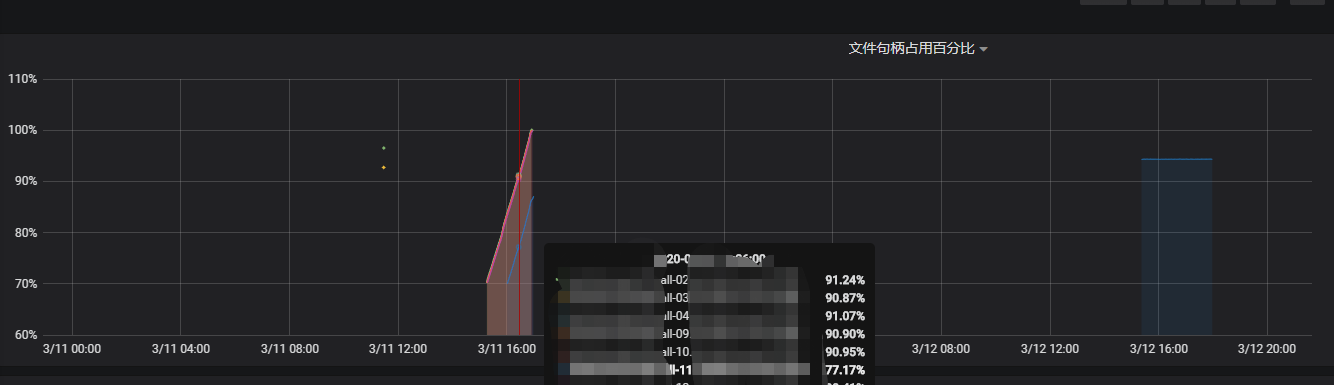

At the same time, the operation and maintenance view monitoring shows that the use of file handles has increased dramatically in a short period of time, as shown in the following figure:

O & M view file handle usage of k8s node

ulimit - n - view the maximum handle available to the current user sysctl - a | grep fs.file-max ා view kernel level file handle maximum limit cat / proc / sys / FS / file NR - view the maximum number of file handles currently in use and the kernel level file handle limit As you can see, the number of used file handles of cat / proc / sys / FS / file NR of the k8s node is almost full.

Fast solutions on the operation and maintenance side:

vim / etc/sysctl.conf add a line of configuration fs.file-max = 13129438 ා (if this value is not specified manually, linux will automatically set it according to the hardware configuration of each server. You can see the hosts with 64G and 128G memory, which are different.) sysctl - p causes the above operation to adjust the file handle to take effect immediately Then, remove this node from the k8s cluster (and rush the pod to other normal nodes). Unable to release the file handle, we can only release it by restarting the server. And guess that the new nodejs project may not log correctly.

The following is the lsof information captured in the k8s worker node13 node (as long as the k8s worker node with this abnormal pod runs, you can run lsof to see. After all, if there is a handle that is not closed, all the pods in this series must have problems):

Lsof > / TMP / lsof ා, the file size is almost 2GB (this process is relatively long, which may take 5-10 minutes)

[root@k8s-worker-node-13 ~]# grep REG /tmp/lsof | grep -c java

3422

[root@k8s-worker-node-13 ~]# grep REG /tmp/lsof | egrep -c '\bnode\b'

14621626

[root@k8s-worker-node-13 ~] (grep REG /tmp/lsof | egrep '\ bnode\b' | less) view the filtered log files

TID Listed as empty COMMAND PID TID USER FD TYPE DEVICE SIZE/OFF NODE NAME node 16966 root 458w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 459w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 460w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 461w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 462w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 463w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 464w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 465w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 466w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 467w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 468w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 469w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 470w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 471w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 472w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json node 16966 root 473w REG 253,1 671641730 1572898 /usr/src/app/log/test-app/test-app-2020-03-11.json . . . . . Omit parts . . . . . node 20927 20955 root mem REG 253,16 6823953 /usr/lib/libgcc_s.so.1 (stat: No such file or directory) node 20927 20955 root mem REG 253,16 6823955 /usr/lib/libstdc++.so.6.0.22 (stat: No such file or directory) node 20927 20955 root mem REG 253,16 6030733 /lib/ld-musl-x86_64.so.1 (stat: No such file or directory) node 20927 20955 root mem REG 253,16 6030679 /etc/localtime (path dev=253,1, inode=788097) node 20927 20955 root 23w REG 0,460 717345 9178728 /tmp/access-20200311.log node 20927 20955 root 27w REG 0,460 209117 9178762 /tmp/tracing-20200311.log node 20927 20955 root 29w REG 0,460 209117 9178762 /tmp/tracing-20200311.log node 20927 20956 root txt REG 0,460 40885256 7079234 /usr/local/bin/node node 20927 20956 root mem REG 253,16 7079234 /usr/local/bin/node (stat: No such file or directory) node 20927 20956 root mem REG 253,16 6823953 /usr/lib/libgcc_s.so.1 (stat: No such file or directory) node 20927 20956 root mem REG 253,16 6823955 /usr/lib/libstdc++.so.6.0.22 (stat: No such file or directory) node 20927 20956 root mem REG 253,16 6030733 /lib/ld-musl-x86_64.so.1 (stat: No such file or directory) node 20927 20956 root mem REG 253,16 6030679 /etc/localtime (path dev=253,1, inode=788097) node 20927 20957 root txt REG 0,460 40885256 7079234 /usr/local/bin/node node 20927 20957 root mem REG 253,16 7079234 /usr/local/bin/node (stat: No such file or directory) node 20927 20957 root mem REG 253,16 6823953 /usr/lib/libgcc_s.so.1 (stat: No such file or directory) node 20927 20957 root mem REG 253,16 6823955 /usr/lib/libstdc++.so.6.0.22 (stat: No such file or directory) node 20927 20957 root mem REG 253,16 6030733 /lib/ld-musl-x86_64.so.1 (stat: No such file or directory) node 20927 20957 root mem REG 253,16 6030679 /etc/localtime (path dev=253,1, inode=788097) node 20927 20957 root 23w REG 0,460 717345 9178728 /tmp/access-20200311.log node 20927 20957 root 27w REG 0,460 209117 9178762 /tmp/tracing-20200311.log node 20927 20957 root 29w REG 0,460 209117 9178762 /tmp/tracing-20200311.log node:Log 20927 21094 root txt REG 0,460 40885256 7079234 /usr/local/bin/node node:Log 20927 21094 root mem REG 253,16 7079234 /usr/local/bin/node (stat: No such file or directory) node:Log 20927 21094 root mem REG 253,16 6823953 /usr/lib/libgcc_s.so.1 (stat: No such file or directory) node:Log 20927 21094 root mem REG 253,16 6823955 /usr/lib/libstdc++.so.6.0.22 (stat: No such file or directory) node:Log 20927 21094 root mem REG 253,16 6030733 /lib/ld-musl-x86_64.so.1 (stat: No such file or directory)

Solutions on the development side:

Back off the service, and check whether there is a problem with the log in the code. Later, the next day, the development fed back that there was a problem in their previous log writing. They all kept opening the file without closing, resulting in the file handle not being released.

Optimization scheme of operation and maintenance side:

1. Add the related monitoring (node ﹣ exporter is OK) Monitoring expression: node ﹣ filefd ﹣ allocated / node ﹣ filefd ﹣ maximum * 100 > 70 triggers an alarm, indicating that the file handle occupies more than 70%, which requires operation and maintenance intervention to view and analyze 2. Limit the kernel parameters in docker image (it hasn't been tested to see if it works, to be verified in actual combat) Reason: docker image is also a thin version of linux. We found that the default fs.file-max and ulimt-n settings in the image of the production environment are very large. We can consider lowering ulimit-n to 65535 and fs.file-max to 655350. In this way, even if there is a problem with this pod, it can only affect itself, but not other pods running on the host.