1. Preface

2019 went by fast. Since this is my first post of 2020, I want to sum up the previous year briefly: honestly, 2019 was pretty miserable. Yes, that sentence is the whole summary. After all, a sense of ceremony still matters.

Well, enough about that. I hope 2020 will be better: save more money and worry less.

2. A brief look at what ZooKeeper is and what problems it solves

ZooKeeper started as a subproject of Hadoop (it is now a top-level Apache project) and is a reliable coordination service for large-scale distributed systems. Its functions include configuration maintenance, naming, distributed synchronization and group services. Its goal is to encapsulate complex, error-prone coordination services and offer users a simple, easy-to-use interface backed by a high-performance, stable system.

- Unified naming: in a distributed environment, a unified naming service works much like domain names and IP addresses. An IP address is hard to remember while a domain name is easy; likewise, a unified naming service lets clients obtain key information such as a service's address directly from the service name.

- Configuration management: this one is easy to understand. In distributed projects, each project's configuration is extracted and managed in one place. Popular configuration centers today include Apollo, Nacos and Spring Cloud Config.

- Cluster management: ZooKeeper serves as the registry for service providers and service consumers. Providers register their services in ZooKeeper; when a consumer invokes a service, it first looks the service up in ZooKeeper, obtains the provider's details, and then calls the provider.

- Distributed notification and coordination: heartbeat mechanisms, message push, etc.

- Distributed lock: ZooKeeper-based locks are said to be quite common, but my company implements distributed locks with Redis, so I don't understand this use case very well.

- Distributed queue

3. Roles in ZooKeeper

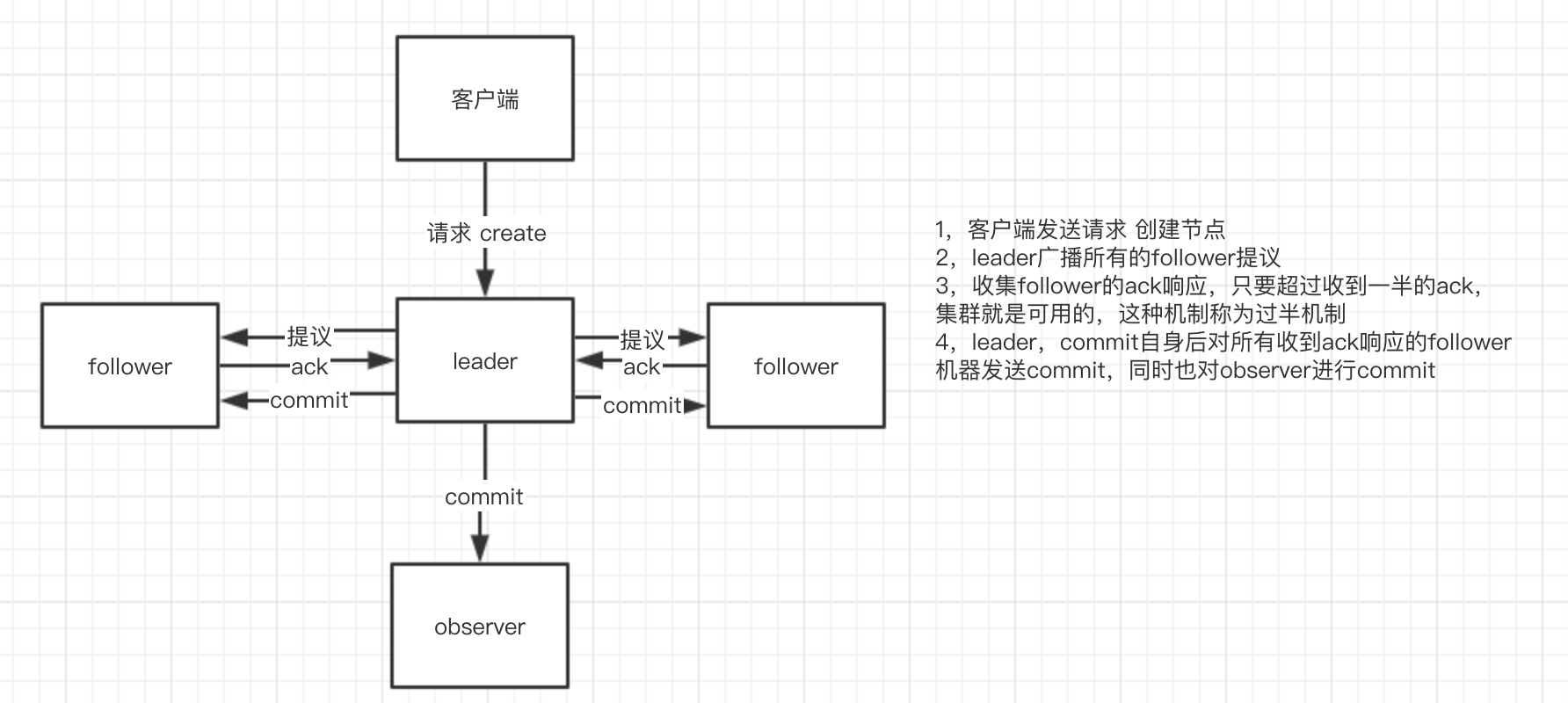

A ZooKeeper cluster has three roles: leader, follower and observer.

Leader: the main node of the cluster. It handles all write requests, keeps the other nodes' data in sync (including data recovery), and maintains heartbeats with them.

Follower: serves read requests directly, forwards write requests to the leader, votes on the leader's proposals, and participates in leader election.

Observer: similar to a follower, but it votes neither in leader election nor on the leader's proposals. When the cluster's read load is high, adding observers hurts far less than adding followers: observers add read capacity without increasing the number of votes the leader must collect for each write or election.

The figure at the start of this section is a rough sketch of these roles.
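The observer trade-off comes down to quorum arithmetic: the leader commits a write once a majority of the voting members (the leader itself plus the followers) has acknowledged it, and observers are not counted. A small shell sketch of that arithmetic, with hypothetical helper names:

```shell
# Hypothetical helper: quorum_size N
# Acknowledgements (including the leader's own) needed before a write
# commits in an ensemble with N voting members. Observers are not part of N.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Hypothetical helper: tolerated_failures N
# How many voting members may fail while a majority can still be formed.
tolerated_failures() {
  echo $(( $1 - $(quorum_size "$1") ))
}

quorum_size 3          # prints 2
tolerated_failures 3   # prints 1
quorum_size 4          # prints 3
tolerated_failures 4   # prints 1
```

For the cluster in section 5 (three voting members plus one observer), each write needs 2 acknowledgements and one voter may fail. Making the fourth instance a follower instead would raise the requirement to 3 acknowledgements while still tolerating only one failure, which is why extra read capacity is usually added as observers.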

4. ZooKeeper stand-alone deployment

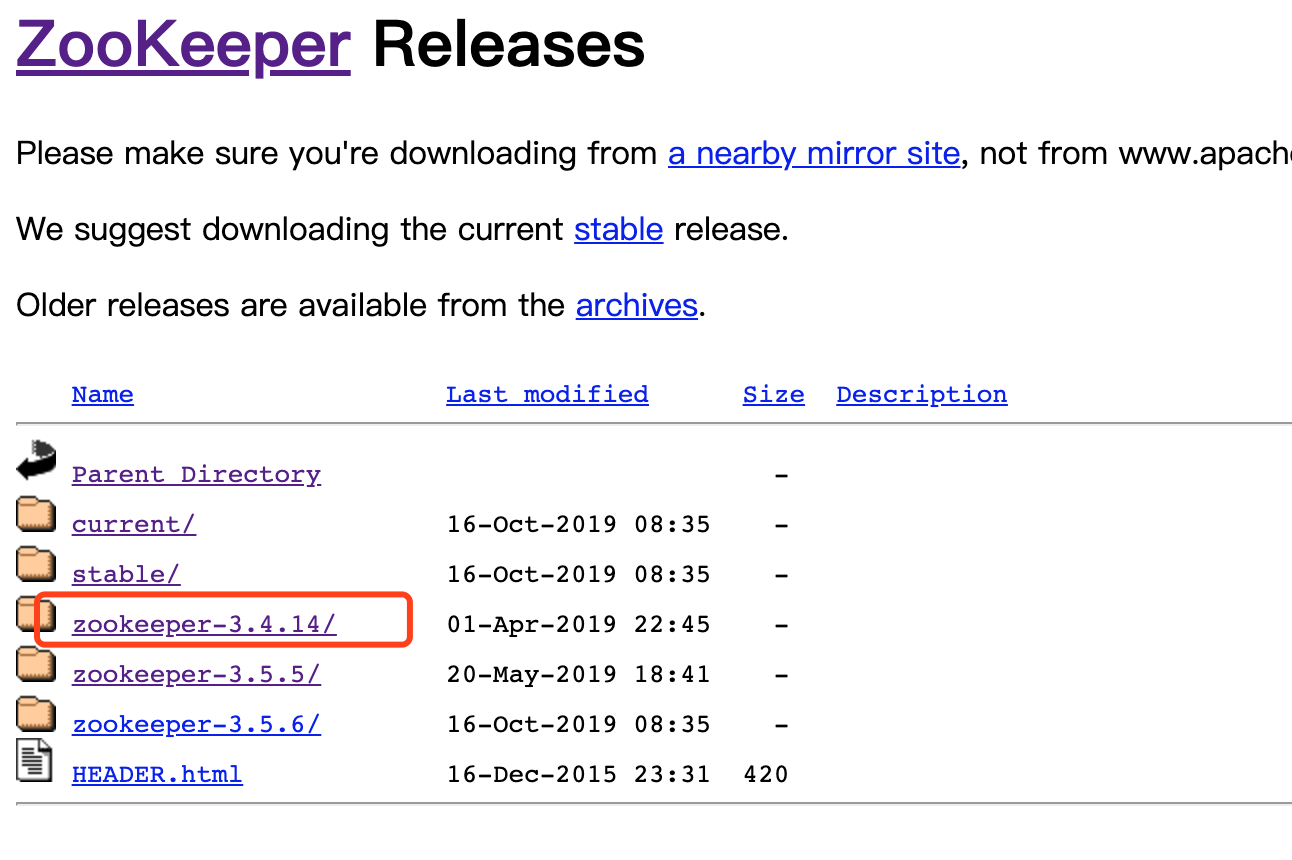

Download address: https://zookeeper.apache.org/

I downloaded version 3.4.14.

Extract the archive.

Enter the zookeeper-3.4.14/conf directory and copy zoo_sample.cfg to zoo.cfg.

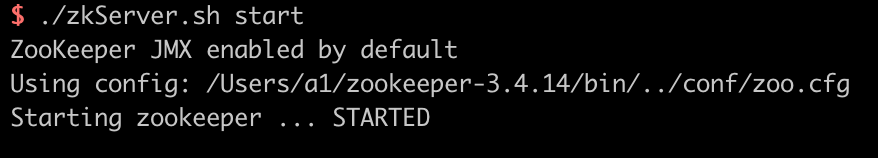
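For reference, the active (uncommented) settings in the 3.4.x zoo_sample.cfg are tickTime=2000, initLimit=10, syncLimit=5, dataDir=/tmp/zookeeper and clientPort=2181. The sample itself warns against keeping data under /tmp, so here is a minimal sketch that writes an equivalent zoo.cfg with a data directory of your choice. The function name and its arguments are illustrative assumptions, not part of ZooKeeper.

```shell
# Hypothetical helper: write_standalone_cfg CONF_DIR DATA_DIR [CLIENT_PORT]
# Writes CONF_DIR/zoo.cfg with the zoo_sample.cfg values, except that
# dataDir points at DATA_DIR instead of /tmp/zookeeper.
write_standalone_cfg() {
  local conf_dir=$1 data_dir=$2 client_port=${3:-2181}
  mkdir -p "$conf_dir" "$data_dir"
  cat > "$conf_dir/zoo.cfg" <<EOF
# Length of one tick in milliseconds (ZooKeeper's basic time unit)
tickTime=2000
# Ticks a follower may take to connect and sync to the leader (cluster only)
initLimit=10
# Ticks a follower may lag behind the leader before being dropped (cluster only)
syncLimit=5
# Where snapshots (and, by default, transaction logs) are stored
dataDir=$data_dir
# Port that clients connect to
clientPort=$client_port
EOF
}
```

Usage, assuming you are in the directory that contains zookeeper-3.4.14: `write_standalone_cfg zookeeper-3.4.14/conf "$HOME/zookeeper-data"`.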

Go to the bin directory and start the server:

```shell
./zkServer.sh start
```

To stop the service:

```shell
./zkServer.sh stop
```

To check its status:

```shell
./zkServer.sh status
```

Connect with the client:

```shell
./zkCli.sh
```

Stand-alone mode is quite simple: just find the script and run the corresponding command.
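One caveat: `zkServer.sh start` may return before the server is ready to accept connections, so scripts that start ZooKeeper and then use it immediately can fail. A minimal sketch, assuming `zkServer.sh status` exits with 0 once the server answers (the helper name and retry count are hypothetical):

```shell
# Hypothetical helper: wait_for_zk ZK_HOME [CFG] [TRIES]
# Polls "zkServer.sh status [CFG]" once per second until it exits 0
# (the server answered) or TRIES attempts have failed.
wait_for_zk() {
  local zk_home=$1 cfg=${2:-} tries=${3:-30} i=0
  while [ "$i" -lt "$tries" ]; do
    # ${cfg:+"$cfg"} passes the config path only when one was given
    if "$zk_home/bin/zkServer.sh" status ${cfg:+"$cfg"} >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Usage: `./zkServer.sh start && wait_for_zk "$HOME/zookeeper-3.4.14" && ./zkCli.sh`, where the install path is an assumption.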

5. ZooKeeper cluster deployment

Here I deploy four ZooKeeper instances on a single machine, one of which is an observer. Enter the conf directory.

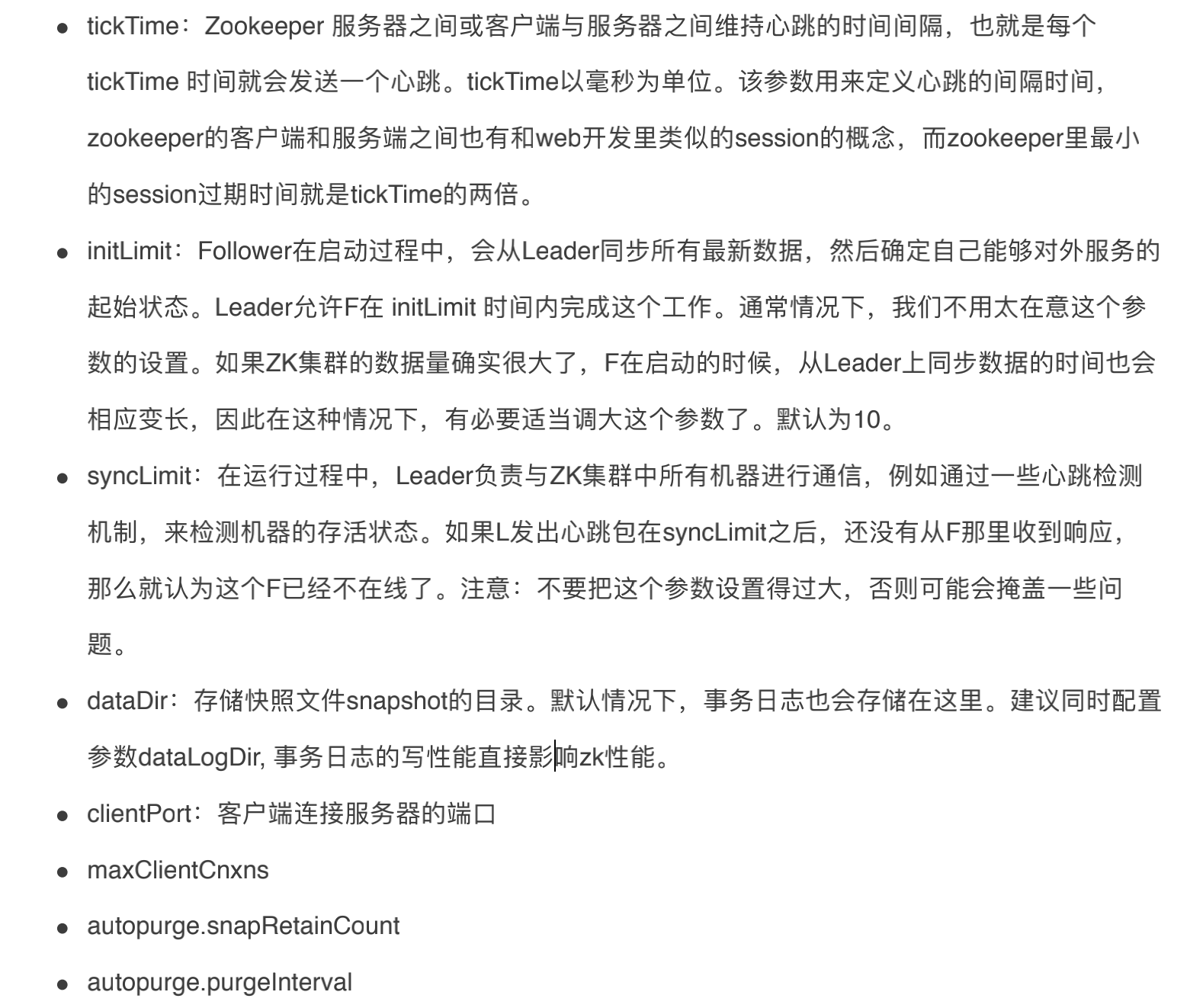

Configuration file explained

Copy the zoo.cfg file:

```shell
cp zoo.cfg zoo1.cfg
```

Edit zoo1.cfg:

```properties
tickTime=2000
initLimit=10
syncLimit=5
# Create this directory beforehand
dataDir=/zookeeper/data_1
clientPort=2181
# Create this directory beforehand
dataLogDir=/zookeeper/logs_1
# The x in server.x must match the myid of that instance.
# The first port is used by followers to connect to and sync with the leader;
# the second port is used for voting during leader election.
server.1=localhost:2887:3887
server.2=localhost:2888:3888
server.3=localhost:2889:3889
server.4=localhost:2890:3890:observer
```

Make three copies of zoo1.cfg:

```shell
cp zoo1.cfg zoo2.cfg
cp zoo1.cfg zoo3.cfg
cp zoo1.cfg zoo4.cfg
```

In each copy, change clientPort, dataDir and dataLogDir, and create the new directories. Here are the configuration files of the other three instances:

zoo2.cfg

```properties
tickTime=2000
initLimit=10
syncLimit=5
# Create this directory beforehand
dataDir=/zookeeper/data_2
clientPort=2182
# Create this directory beforehand
dataLogDir=/zookeeper/logs_2
# The x in server.x must match the myid of that instance.
# The first port is used by followers to connect to and sync with the leader;
# the second port is used for voting during leader election.
server.1=localhost:2887:3887
server.2=localhost:2888:3888
server.3=localhost:2889:3889
server.4=localhost:2890:3890:observer
```

zoo3.cfg

```properties
tickTime=2000
initLimit=10
syncLimit=5
# Create this directory beforehand
dataDir=/zookeeper/data_3
clientPort=2183
# Create this directory beforehand
dataLogDir=/zookeeper/logs_3
# The x in server.x must match the myid of that instance.
# The first port is used by followers to connect to and sync with the leader;
# the second port is used for voting during leader election.
server.1=localhost:2887:3887
server.2=localhost:2888:3888
server.3=localhost:2889:3889
server.4=localhost:2890:3890:observer
```

zoo4.cfg: this instance is the observer, so it also needs the peerType=observer setting.

```properties
tickTime=2000
initLimit=10
syncLimit=5
# Create this directory beforehand
dataDir=/zookeeper/data_4
clientPort=2184
# Create this directory beforehand
dataLogDir=/zookeeper/logs_4
# This instance is an observer
peerType=observer
# The x in server.x must match the myid of that instance.
# The first port is used by followers to connect to and sync with the leader;
# the second port is used for voting during leader election.
server.1=localhost:2887:3887
server.2=localhost:2888:3888
server.3=localhost:2889:3889
server.4=localhost:2890:3890:observer
```
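Since the four files differ only in the instance number, they can also be generated in a loop. A sketch under the same assumptions as above (ports 2181 to 2184, instance 4 as the observer); the function name and the BASE_DIR argument are hypothetical:

```shell
# Hypothetical helper: gen_cluster_cfgs CONF_DIR BASE_DIR
# Writes CONF_DIR/zoo1.cfg .. zoo4.cfg for a pseudo-cluster on localhost.
# Instances 1-3 vote; instance 4 is an observer.
# Instance i gets clientPort 2180+i and BASE_DIR/data_i, BASE_DIR/logs_i.
gen_cluster_cfgs() {
  local conf_dir=$1 base=$2 i
  mkdir -p "$conf_dir"
  for i in 1 2 3 4; do
    mkdir -p "$base/data_$i" "$base/logs_$i"
    {
      echo "tickTime=2000"
      echo "initLimit=10"
      echo "syncLimit=5"
      echo "dataDir=$base/data_$i"
      echo "dataLogDir=$base/logs_$i"
      echo "clientPort=$((2180 + i))"
      # Only the observer's own file declares its peer type
      if [ "$i" -eq 4 ]; then echo "peerType=observer"; fi
      # server.N=host:quorumPort:electionPort[:observer], identical in every file
      echo "server.1=localhost:2887:3887"
      echo "server.2=localhost:2888:3888"
      echo "server.3=localhost:2889:3889"
      echo "server.4=localhost:2890:3890:observer"
    } > "$conf_dir/zoo$i.cfg"
  done
}
```

Usage, matching the paths in this post: `gen_cluster_cfgs zookeeper-3.4.14/conf /zookeeper`.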

Create a myid file in each of the four data directories:

```shell
echo "1" > /zookeeper/data_1/myid
echo "2" > /zookeeper/data_2/myid
echo "3" > /zookeeper/data_3/myid
echo "4" > /zookeeper/data_4/myid
```
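A mismatched myid is an easy mistake to make, so the ids can instead be derived from the file names. This sketch assumes that zooN.cfg belongs to server.N, as in this post, and that each file's dataDir line has no trailing comment; the function name is hypothetical.

```shell
# Hypothetical helper: write_myids CONF_DIR
# For every zooN.cfg in CONF_DIR, reads its dataDir and writes N to dataDir/myid.
# Assumes the dataDir value has no trailing text on the same line.
write_myids() {
  local conf_dir=$1 cfg n data_dir
  for cfg in "$conf_dir"/zoo[0-9]*.cfg; do
    [ -e "$cfg" ] || continue            # no matching files
    n=$(basename "$cfg" .cfg)            # e.g. zoo3
    n=${n#zoo}                           # e.g. 3
    data_dir=$(sed -n 's/^dataDir=//p' "$cfg")
    mkdir -p "$data_dir"
    echo "$n" > "$data_dir/myid"
  done
}
```

Usage: `write_myids zookeeper-3.4.14/conf`. Note that the pattern skips zoo.cfg and zoo_sample.cfg.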

Start the instances:

```shell
zkServer.sh start zookeeper-3.4.14/conf/zoo1.cfg
zkServer.sh start zookeeper-3.4.14/conf/zoo2.cfg
zkServer.sh start zookeeper-3.4.14/conf/zoo3.cfg
zkServer.sh start zookeeper-3.4.14/conf/zoo4.cfg
```

Check each instance's status:

```shell
zkServer.sh status zookeeper-3.4.14/conf/zoo1.cfg
zkServer.sh status zookeeper-3.4.14/conf/zoo2.cfg
zkServer.sh status zookeeper-3.4.14/conf/zoo3.cfg
zkServer.sh status zookeeper-3.4.14/conf/zoo4.cfg
```
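The four start and status commands differ only in the instance number, so a small loop saves typing. A sketch, assuming ZK_HOME is the zookeeper-3.4.14 directory and the configs live in its conf directory; the helper name is hypothetical. It passes each config's full path as the second argument, the same way the commands above do.

```shell
# Hypothetical helper: zk_cluster ZK_HOME COMMAND
# Runs "zkServer.sh COMMAND ZK_HOME/conf/zooN.cfg" for N = 1..4,
# where COMMAND is e.g. start, status, stop or restart.
zk_cluster() {
  local zk_home=$1 cmd=$2 i
  for i in 1 2 3 4; do
    echo "== zoo$i.cfg =="
    "$zk_home/bin/zkServer.sh" "$cmd" "$zk_home/conf/zoo$i.cfg"
  done
}
```

Usage: `zk_cluster "$HOME/zookeeper-3.4.14" start`, then `zk_cluster "$HOME/zookeeper-3.4.14" status` (the install path is an assumption).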

The output shows that instances 1 and 3 are followers, instance 2 is the leader, and instance 4 is the observer:

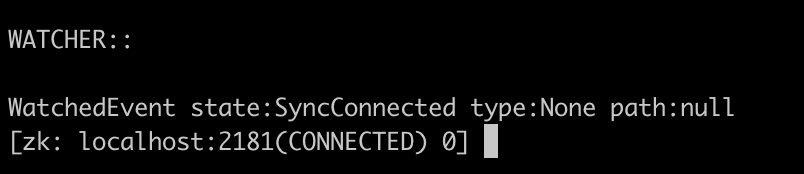
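To check the roles without reading each status output by eye, the "Mode: ..." line can be extracted and the number of leaders counted. A healthy ensemble should report exactly one leader. A minimal sketch, assuming `zkServer.sh status` prints a line beginning with "Mode: " as in the screenshot; the helper name is hypothetical.

```shell
# Hypothetical helper: zk_modes ZK_HOME
# Prints "zooN.cfg <mode>" for each instance, taking <mode> from the
# "Mode: ..." line of "zkServer.sh status", and returns non-zero
# unless exactly one instance reports itself as leader.
zk_modes() {
  local zk_home=$1 i mode leaders=0
  for i in 1 2 3 4; do
    mode=$("$zk_home/bin/zkServer.sh" status "$zk_home/conf/zoo$i.cfg" 2>/dev/null \
      | sed -n 's/^Mode: //p')
    echo "zoo$i.cfg ${mode:-unreachable}"
    if [ "$mode" = "leader" ]; then leaders=$((leaders + 1)); fi
  done
  [ "$leaders" -eq 1 ]
}
```

Which instance wins the election can differ between runs, so a check like this is more robust than expecting instance 2 to always be the leader.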

Connect to the first instance:

```shell
./zkCli.sh -server localhost:2181
```

Create a node:

```shell
create /test 'test1'
```

Then connect to the other instances; the new node has been synchronized to all of them:

```shell
[zk: localhost:2181(CONNECTED) 0] ls /
[zookeeper, test]
[zk: localhost:2182(CONNECTED) 0] ls /
[zookeeper, test]
[zk: localhost:2183(CONNECTED) 0] ls /
[zookeeper, test]
[zk: localhost:2184(CONNECTED) 0] ls /
[zookeeper, test]
```
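The same check can be scripted. In 3.4.x, zkCli.sh should run a single command and exit when the command follows the connection options; this sketch relies on that and keeps only the bracketed child list, assuming none of zkCli's connection log lines start with "[". The helper name and ZK_HOME are hypothetical.

```shell
# Hypothetical helper: zk_ls_all ZK_HOME ZNODE_PATH
# Runs "zkCli.sh -server localhost:PORT ls PATH" against each instance
# (client ports 2181-2184 from the configs above) and prints
# "localhost:PORT <child list>" with zkCli's log noise filtered out.
zk_ls_all() {
  local zk_home=$1 path=$2 port
  for port in 2181 2182 2183 2184; do
    printf 'localhost:%s %s\n' "$port" \
      "$("$zk_home/bin/zkCli.sh" -server "localhost:$port" ls "$path" 2>/dev/null | grep '^\[' | tail -n 1)"
  done
}
```

Usage: `zk_ls_all "$HOME/zookeeper-3.4.14" /` should print one line per instance, each ending in the same child list once replication has caught up.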