start-up

nginx

reopen log files (this signal does not restart nginx)

nginx -s reopen

stop (fast shutdown)

nginx -s stop

Hot reload (reload the configuration without stopping)

./nginx -s reload

Test whether the modified configuration file is valid

nginx -t

Default configuration directory

/usr/local/nginx/conf/

Main Nginx configuration file (basic settings are adjusted here)

/usr/local/nginx/conf/nginx.conf

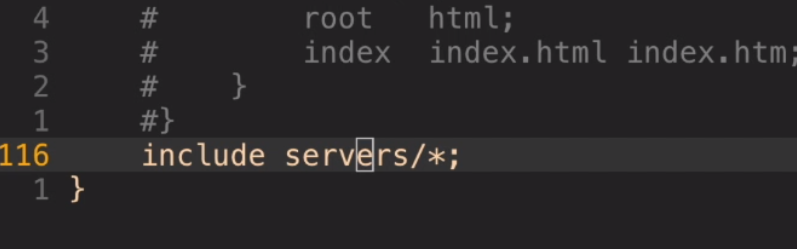

Location of custom Nginx configuration

/usr/local/nginx/servers

Any other location also works, as long as it is pulled in with an include directive.
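As a minimal sketch of how such a directory is pulled in, the fragment below would go inside the http block of nginx.conf. The `*.conf` file mask is an assumption for illustration; the source only names the directory.

```nginx
http {
    # Load every file ending in .conf from the custom directory.
    # The *.conf mask is an assumed naming convention, not from the original.
    include /usr/local/nginx/servers/*.conf;
}
```

Each included file can then hold its own server or upstream blocks, which keeps nginx.conf short.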


Graceful shutdown

./nginx -s quit

load balancing

1. Round robin 2. weight 3. ip_hash 4. fair 5. url_hash

1. Round robin (default)

Requests are distributed to the back-end servers one by one, in the order they arrive. If a back-end server goes down, it is automatically taken out of rotation.

upstream backserver {

server 192.168.0.14;

server 192.168.0.15;

}

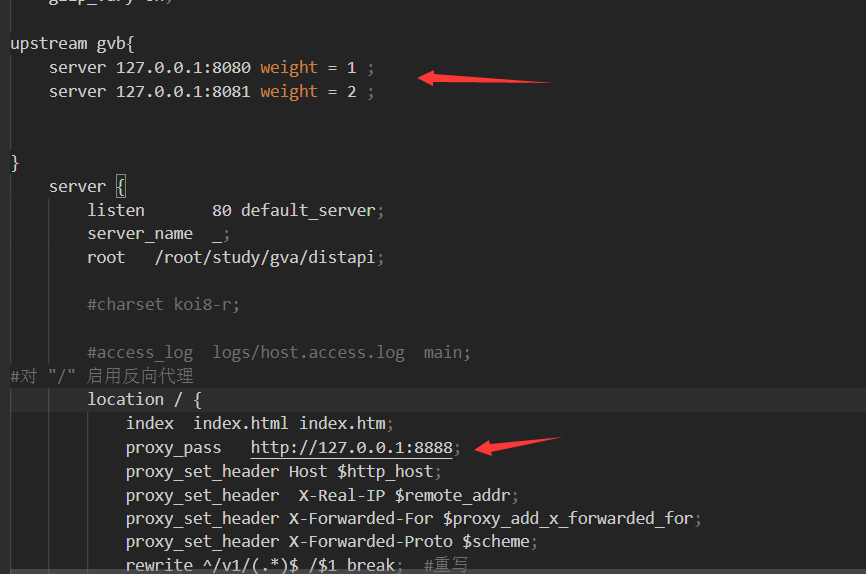

2. weight

Specifies the polling weight. The share of requests a server receives is proportional to its weight. It is used when the back-end servers have uneven performance.

upstream backserver {

server 192.168.0.14 weight=3;

server 192.168.0.15 weight=7;

}

The higher the weight, the greater the probability of being selected. With the weights above, the two servers receive roughly 30% and 70% of the requests respectively.

3. ip_hash

The methods above have a problem: in a load-balanced system, requests from the same user may be sent to a different server in the cluster each time. A user who has logged in on one server and is then routed to another loses the login information stored there, which is clearly unacceptable.

The ip_hash directive solves this problem. If a client has already accessed a particular server, the hash algorithm automatically routes that client's later requests to the same server.

Each request is allocated according to the hash of the client IP address, so each visitor consistently reaches the same back-end server, which solves the session problem.

upstream backserver {

ip_hash;

server 192.168.0.14:88;

server 192.168.0.15:80;

}

4. fair (third party)

Requests are allocated according to the response time of the back-end servers; servers with shorter response times are preferred.

upstream backserver {

server server1;

server server2;

fair;

}
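Because fair is a third-party module, it has to be compiled into nginx before the block above will load. For comparison only, the sketch below uses the built-in least_conn method. It is a different technique: it picks the server with the fewest active connections rather than the shortest response time, so it is not a drop-in equivalent. The server names are reused from the example above.

```nginx
upstream backserver {
    # Built-in method: prefer the server with the fewest active connections.
    # This is not the fair module; it does not measure response time.
    least_conn;

    server server1;
    server server2;
}
```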

5. url_hash (third party)

Requests are allocated according to the hash of the requested URL, so that each URL is always directed to the same back-end server. This is more effective when the back-end servers are caches.

upstream backserver {

server squid1:3128;

server squid2:3128;

hash $request_uri;

hash_method crc32;

}
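The hash_method directive above belongs to the third-party module. As a hedged alternative, assuming nginx 1.7.2 or later, the built-in hash directive covers the same use case without an extra module. The optional consistent parameter enables consistent hashing, which limits how many URLs are remapped when a server is added or removed.

```nginx
upstream backserver {
    # Built-in hash directive (assumes nginx 1.7.2 or later).
    # "consistent" is optional and switches to consistent hashing.
    hash $request_uri consistent;

    server squid1:3128;
    server squid2:3128;
}
```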

Add the following to each server block that needs load balancing:

proxy_pass http://backserver/;

upstream backserver {

# Note: the backup parameter cannot be combined with ip_hash, so ip_hash is omitted here.

server 127.0.0.1:9090 down; # down: this server temporarily does not take part in load balancing

server 127.0.0.1:8080 weight=2; # weight defaults to 1; the larger the weight, the larger the share of requests

server 127.0.0.1:6060;

server 127.0.0.1:7070 backup; # backup: receives requests only when all non-backup servers are down or busy

}

max_fails: the number of failed requests allowed. The default is 1. When the maximum is exceeded, the error defined by the proxy_next_upstream directive is returned.

fail_timeout: how long the server is paused after max_fails failures. The default is 10 seconds.
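A minimal sketch of both parameters together is shown below. The values 3 and 30s are illustrative assumptions, not recommendations from the source; the addresses are reused from the example above.

```nginx
upstream backserver {
    # After 3 failed attempts within 30 seconds, the server is considered
    # unavailable for the next 30 seconds. Both values are illustrative.
    server 127.0.0.1:8080 max_fails=3 fail_timeout=30s;
    server 127.0.0.1:6060 max_fails=3 fail_timeout=30s;
}
```

Note that fail_timeout plays two roles: it is the window in which the failures are counted and also the length of the pause.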

Configuration example:

#user nobody;

worker_processes 4;

events {

# Maximum number of simultaneous connections per worker process

worker_connections 1024;

}

http{

# Pool of back-end servers to choose from

upstream myproject{

# The ip_hash directive routes the same user to the same server.

ip_hash;

server 125.219.42.4 fail_timeout=60s;

server 172.31.2.183;

}

server{

# Listening port

listen 80;

# Match all requests under the root path

location / {

# Which upstream server pool to forward requests to

proxy_pass http://myproject;

}

}

}

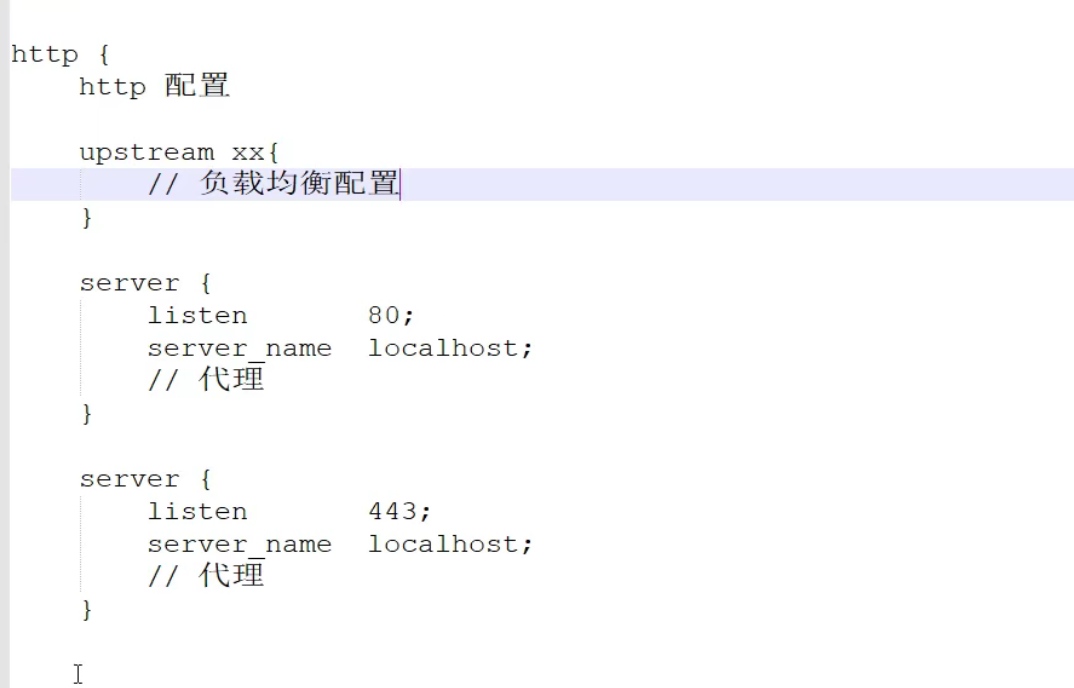

Configuration file structure

Performance settings (such as worker_processes and worker_connections) are global.

Each server block defines a virtual host.

Virtual host

A website with a large number of visits needs load balancing. However, not every website is that busy. Some receive so little traffic that, to save costs, several websites are deployed on the same server.

For example, two websites are deployed on the same server and both domain names resolve to the same IP address, yet users can open two completely different websites through the two domain names without either affecting the other. It is just like accessing two separate servers, which is why they are called two virtual hosts.

server {

listen 80 default_server;

server_name _;

return 444; # Reject requests for any other domain name; 444 closes the connection without sending a response

}

server {

listen 80;

server_name www.aaa.com; # www.aaa.com domain name

location / {

proxy_pass http://localhost:8080; # Application listening on port 8080

}

}

server {

listen 80;

server_name www.bbb.com; # www.bbb.com domain name

location / {

proxy_pass http://localhost:8081; # Application listening on port 8081

}

}

One application listens on port 8080 and another on port 8081. Clients access them through different domain names, and nginx uses server_name to reverse-proxy each request to the corresponding application.

Virtual hosts work by checking whether the Host header of the HTTP request matches server_name. Interested readers can study the HTTP protocol for details.

In addition, the server_name configuration can filter out requests from domain names that someone has maliciously pointed at your server.
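To make the Host matching concrete, here is a minimal sketch, assuming the www.aaa.com site from the example above should also answer on its subdomains. The wildcard name is an illustration and not part of the original setup.

```nginx
server {
    listen 80;
    # Exact name plus a hypothetical wildcard covering subdomains.
    # nginx tries exact names first, then wildcard names.
    server_name www.aaa.com *.aaa.com;

    location / {
        proxy_pass http://localhost:8080;
    }
}
```

A request whose Host header matches no server_name on the port falls through to the block marked default_server, which is what makes the return 444 filter above work.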