Netty Primer

> This article gives a general overview of Netty and introduces a few basic concepts that are worth understanding before digging deeper into it.

Brief introduction

- An NIO-based network communication framework

- The number of connections a single node can support depends on machine memory (roughly 100,000+ connections per 1 GB), not on the system's maximum number of handles (65536).

- Simpler API than the JDK's NIO

- Event-driven

- Commonly used in games, finance, and other domains that need long-lived connections and low latency
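As a rough sanity check on the memory figure in the list above, the sketch below divides 1 GiB evenly across 100,000 connections. It is back-of-the-envelope arithmetic only: `ConnectionBudget` and `bytesPerConnection` are names made up for this illustration, and real per-connection memory use depends on buffer sizes, JVM settings, and the workload.

```java
class ConnectionBudget {
    // Memory available per connection if totalBytes is shared evenly.
    static long bytesPerConnection(long totalBytes, long connections) {
        return totalBytes / connections;
    }

    public static void main(String[] args) {
        long oneGiB = 1L << 30;
        long budget = bytesPerConnection(oneGiB, 100_000);
        System.out.println(budget + " bytes per connection");
    }
}
```

The result is a little over 10 KiB per connection, which gives a feel for how small each connection's footprint has to be for the figure to hold.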

Data Flow in NIO

    sequenceDiagram
        Channel->Buffer: Read
        Buffer->Program: Read
        Program->Buffer: Write
        Buffer->Channel: Write

> 1. In NIO, all data is read from a Channel into a Buffer, and user code then reads it from the Buffer.
> 2. To write data, you first write it into a Buffer, and the Buffer is then written to the Channel.
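The two rules above can be sketched with plain JDK NIO, no Netty involved. To stay self-contained, the example uses in-memory streams wrapped as channels instead of sockets; `NioFlowSketch`, `readAll`, `writeAll`, and `roundTrip` are names invented for this illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.charset.StandardCharsets;

class NioFlowSketch {

    // Channel -> Buffer -> program
    static String readAll(ReadableByteChannel channel) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocate(16);
        ByteArrayOutputStream collected = new ByteArrayOutputStream();
        while (channel.read(buffer) != -1) {     // Channel -> Buffer
            buffer.flip();                        // switch from filling to draining
            while (buffer.hasRemaining()) {
                collected.write(buffer.get());    // Buffer -> program
            }
            buffer.clear();                       // ready to be filled again
        }
        return new String(collected.toByteArray(), StandardCharsets.UTF_8);
    }

    // program -> Buffer -> Channel
    static void writeAll(WritableByteChannel channel, String text) throws IOException {
        ByteBuffer buffer = ByteBuffer.wrap(text.getBytes(StandardCharsets.UTF_8)); // program -> Buffer
        while (buffer.hasRemaining()) {
            channel.write(buffer);                // Buffer -> Channel
        }
    }

    // Writes text through one channel and reads it back through another.
    static String roundTrip(String text) {
        try {
            ByteArrayOutputStream sink = new ByteArrayOutputStream();
            try (WritableByteChannel out = Channels.newChannel(sink)) {
                writeAll(out, text);
            }
            try (ReadableByteChannel in =
                     Channels.newChannel(new ByteArrayInputStream(sink.toByteArray()))) {
                return readAll(in);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello netty, hello NIO"));
    }
}
```

Note the `flip()` and `clear()` calls in `readAll`: a JDK `ByteBuffer` has to be switched explicitly between being filled and being drained.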

Several Important Objects in Netty

- Channel

- ByteBuf

- ChannelHandler

- ChannelHandlerContext

- ChannelPipeline

Channel

> A Channel is an abstraction of a connection. All data that is read or written ultimately flows through a Channel. The main implementations are NioSocketChannel and NioServerSocketChannel.

ByteBuf

> ByteBuf is Netty's own buffer type. It has some advantages over the JDK's ByteBuffer:
> 1. Its capacity can grow dynamically. If a write finds that the capacity is insufficient, a larger buffer is allocated and the data from the old buffer is copied into it.
> 2. It is simpler to operate. The JDK's ByteBuffer has only one position pointer, while Netty's ByteBuf has a reader index (read position) and a writer index (write position), so flip() and rewind() are not needed.
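To make the two points concrete, here is a toy buffer with separate read and write indices that grows on demand, next to the JDK's flip-based `ByteBuffer`. `TwoIndexBuffer` is a hypothetical class written for this article; it only illustrates the idea and is not how Netty's ByteBuf is implemented.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

class TwoIndexBuffer {
    private byte[] data;
    private int readerIndex;   // position of the next byte to read
    private int writerIndex;   // position of the next byte to write

    TwoIndexBuffer(int initialCapacity) {
        data = new byte[initialCapacity];
    }

    void writeInt(int value) {
        ensureWritable(4);
        data[writerIndex++] = (byte) (value >>> 24);
        data[writerIndex++] = (byte) (value >>> 16);
        data[writerIndex++] = (byte) (value >>> 8);
        data[writerIndex++] = (byte) value;
    }

    int readInt() {
        if (readableBytes() < 4) {
            throw new IndexOutOfBoundsException("fewer than 4 readable bytes");
        }
        int value = ((data[readerIndex] & 0xFF) << 24)
                  | ((data[readerIndex + 1] & 0xFF) << 16)
                  | ((data[readerIndex + 2] & 0xFF) << 8)
                  | (data[readerIndex + 3] & 0xFF);
        readerIndex += 4;
        return value;
    }

    int capacity() { return data.length; }

    int readableBytes() { return writerIndex - readerIndex; }

    // Grow by allocating a larger array and copying the old contents into it.
    private void ensureWritable(int needed) {
        if (data.length - writerIndex >= needed) {
            return;
        }
        int newCapacity = Math.max(data.length * 2, writerIndex + needed);
        data = Arrays.copyOf(data, newCapacity);
    }

    public static void main(String[] args) {
        // JDK ByteBuffer: one position pointer, so flip() is needed before reading.
        ByteBuffer jdk = ByteBuffer.allocate(8);
        jdk.putInt(42);
        jdk.flip();
        System.out.println(jdk.getInt());

        // Two indices: write, then read, with no mode switch in between.
        TwoIndexBuffer buf = new TwoIndexBuffer(4);
        buf.writeInt(1);
        buf.writeInt(2);   // exceeds the initial capacity, so the buffer grows
        System.out.println(buf.readInt() + " " + buf.readInt() + " capacity=" + buf.capacity());
    }
}
```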

ChannelHandler

> When data is read from a Channel, it is handed over to a ChannelHandler for processing.

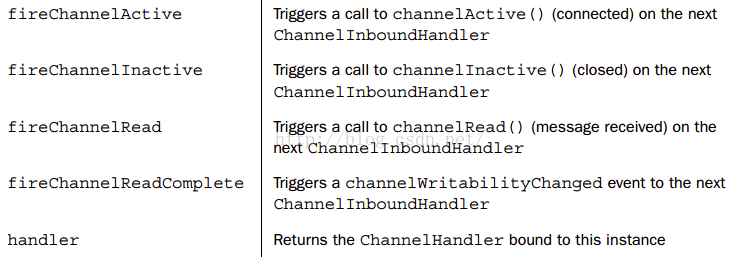

ChannelHandlerContext

> A ChannelHandlerContext records the context in which a ChannelHandler runs. Each ChannelHandler has a corresponding ChannelHandlerContext (a one-to-one relationship), which holds the following information:
> 1. The current ChannelHandler object
> 2. Whether the handler is inbound or outbound
> 3. The ChannelPipeline it belongs to
> 4. The previous ChannelHandlerContext and the next ChannelHandlerContext. This forms a processing chain similar to the interceptor chain in Spring MVC.

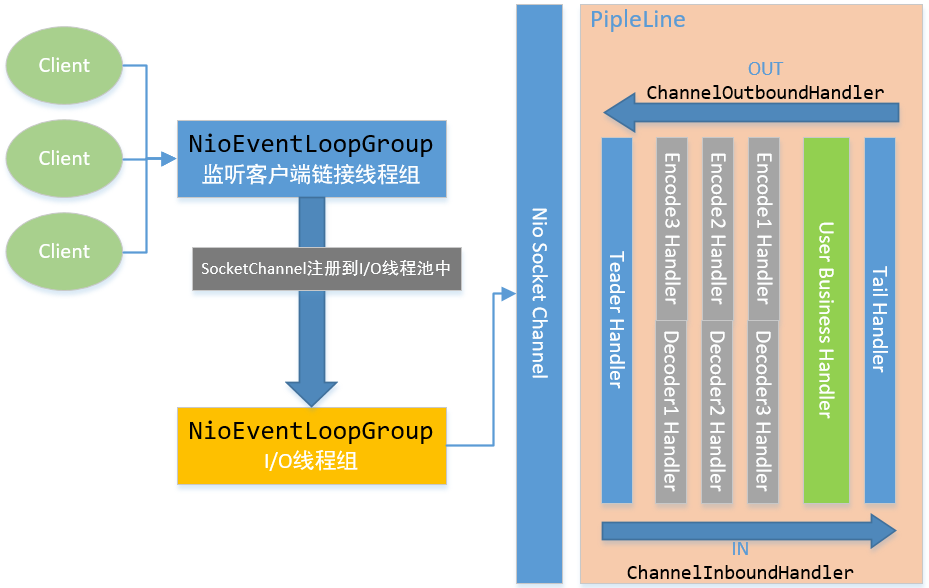

ChannelPipeline

> Netty is event-driven: different events (data) trigger different business logic. Handling one event may require several handlers to cooperate; some handlers do not care about the current event, and others finish processing it and do not want later handlers to run.
>
> ChannelPipeline exists to manage this. It holds the head ChannelHandlerContext (inbound events start from the head) and the tail ChannelHandlerContext (outbound events start from the tail), and the contexts between them are linked in series.

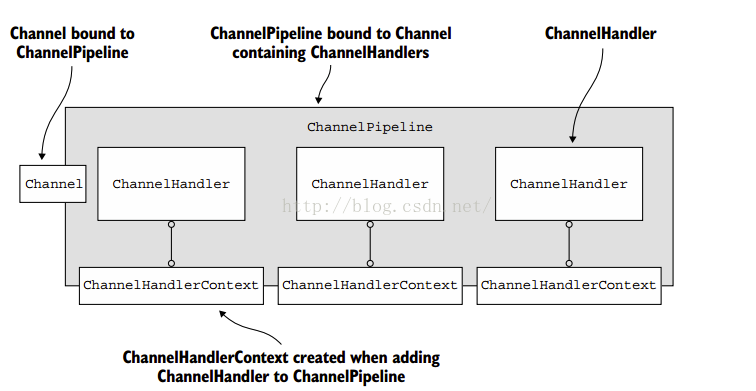

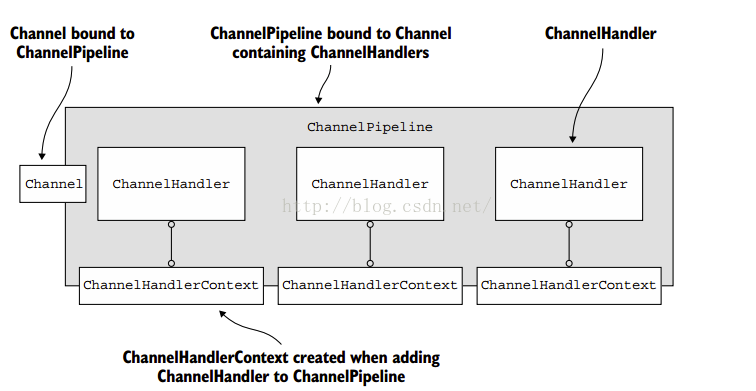
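The head/tail chain described above can be modelled in a few lines of plain Java. This is a simplified sketch, not Netty's implementation: `PipelineSketch`, `Handler`, `Context`, and `Pipeline` are hypothetical names, events are plain strings, and a handler signals "pass it on" by returning `true`. In Netty itself, a handler forwards an event explicitly, for example by calling `ctx.fireChannelRead(msg)`.

```java
import java.util.ArrayList;
import java.util.List;

class PipelineSketch {

    // Hypothetical stand-in for a handler: return true to pass the event on.
    interface Handler {
        boolean handle(String event);
    }

    // One context per handler: it knows its handler, its direction, and its neighbours.
    static final class Context {
        final String name;
        final boolean inbound;
        final Handler handler;
        Context prev;
        Context next;

        Context(String name, boolean inbound, Handler handler) {
            this.name = name;
            this.inbound = inbound;
            this.handler = handler;
        }
    }

    static final class Pipeline {
        // Sentinel contexts marking the two ends of the chain.
        private final Context head = new Context("head", true, null);
        private final Context tail = new Context("tail", false, null);

        Pipeline() {
            head.next = tail;
            tail.prev = head;
        }

        void addLast(String name, boolean inbound, Handler handler) {
            Context ctx = new Context(name, inbound, handler);
            ctx.prev = tail.prev;
            ctx.next = tail;
            tail.prev.next = ctx;
            tail.prev = ctx;
        }

        // Inbound events travel from the head towards the tail.
        List<String> fireInbound(String event) {
            List<String> visited = new ArrayList<>();
            for (Context ctx = head.next; ctx != tail; ctx = ctx.next) {
                if (!ctx.inbound) continue;              // outbound handlers are skipped
                visited.add(ctx.name);
                if (!ctx.handler.handle(event)) break;   // handler stopped propagation
            }
            return visited;
        }

        // Outbound events travel from the tail towards the head.
        List<String> fireOutbound(String event) {
            List<String> visited = new ArrayList<>();
            for (Context ctx = tail.prev; ctx != head; ctx = ctx.prev) {
                if (ctx.inbound) continue;               // inbound handlers are skipped
                visited.add(ctx.name);
                if (!ctx.handler.handle(event)) break;
            }
            return visited;
        }
    }

    static Pipeline demoPipeline() {
        Pipeline p = new Pipeline();
        p.addLast("decoder", true, event -> true);
        p.addLast("encoder", false, event -> true);
        p.addLast("auth", true, event -> !event.equals("blocked"));
        p.addLast("logger", false, event -> true);
        p.addLast("business", true, event -> true);
        return p;
    }

    public static void main(String[] args) {
        Pipeline p = demoPipeline();
        System.out.println(p.fireInbound("hello"));    // [decoder, auth, business]
        System.out.println(p.fireInbound("blocked"));  // [decoder, auth]
        System.out.println(p.fireOutbound("reply"));   // [logger, encoder]
    }
}
```

The "blocked" event shows a handler finishing the work itself: `auth` returns `false`, so `business` never sees the event.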

The relationship among ChannelHandler, ChannelHandlerContext, ChannelPipeline

> ChannelHandlers are linked to one another through their ChannelHandlerContexts.

For the specifics, this blog is very detailed.

Netty's data flow chart

Netty official demo

Server side

    package io.netty.example.discard;

    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioServerSocketChannel;

    /**
     * Discards any incoming data.
     */
    public class DiscardServer {

        private int port;

        public DiscardServer(int port) {
            this.port = port;
        }

        public void run() throws Exception {
            // bossGroup accepts incoming connections; workerGroup handles their traffic.
            EventLoopGroup bossGroup = new NioEventLoopGroup();
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                ServerBootstrap b = new ServerBootstrap(); // helper that sets up a server
                b.group(bossGroup, workerGroup)
                 .channel(NioServerSocketChannel.class) // channel type that accepts connections
                 .childHandler(new ChannelInitializer<SocketChannel>() { // configures each accepted channel
                     @Override
                     public void initChannel(SocketChannel ch) throws Exception {
                         ch.pipeline().addLast(new DiscardServerHandler());
                     }
                 })
                 .option(ChannelOption.SO_BACKLOG, 128)          // option for the listening channel
                 .childOption(ChannelOption.SO_KEEPALIVE, true); // option for accepted channels

                // Bind and start to accept incoming connections.
                ChannelFuture f = b.bind(port).sync();

                // Wait until the server socket is closed.
                // In this example, this does not happen, but you can do that to gracefully
                // shut down your server.
                f.channel().closeFuture().sync();
            } finally {
                workerGroup.shutdownGracefully();
                bossGroup.shutdownGracefully();
            }
        }

        public static void main(String[] args) throws Exception {
            int port;
            if (args.length > 0) {
                port = Integer.parseInt(args[0]);
            } else {
                port = 8080;
            }
            new DiscardServer(port).run();
        }
    }

The handler used by DiscardServer:

    package io.netty.example.discard;

    import io.netty.buffer.ByteBuf;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;
    import io.netty.util.ReferenceCountUtil;

    /**
     * Handles a server-side channel.
     */
    public class DiscardServerHandler extends ChannelInboundHandlerAdapter {

        // Called whenever data is received from the client.
        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) {
            try {
                // Do something with msg
            } finally {
                // msg is reference-counted and must be released explicitly.
                ReferenceCountUtil.release(msg);
            }
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
            // Close the connection when an exception is raised.
            cause.printStackTrace();
            ctx.close();
        }
    }

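A time server handler, which sends the current time as soon as a connection is established:

    package io.netty.example.time;

    import io.netty.buffer.ByteBuf;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelFutureListener;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    public class TimeServerHandler extends ChannelInboundHandlerAdapter {

        // Called when the connection is established and ready for traffic.
        @Override
        public void channelActive(final ChannelHandlerContext ctx) {
            // Allocate a 4-byte buffer to hold a 32-bit integer.
            final ByteBuf time = ctx.alloc().buffer(4);
            time.writeInt((int) (System.currentTimeMillis() / 1000L + 2208988800L));

            // The write is asynchronous; the returned future completes when it is done.
            final ChannelFuture f = ctx.writeAndFlush(time);
            f.addListener(new ChannelFutureListener() {
                @Override
                public void operationComplete(ChannelFuture future) {
                    assert f == future;
                    // Close the connection only after the write has completed.
                    ctx.close();
                }
            });
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
            cause.printStackTrace();
            ctx.close();
        }
    }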

Client side

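    package io.netty.example.time;

    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.ChannelFuture;
    import io.netty.channel.ChannelInitializer;
    import io.netty.channel.ChannelOption;
    import io.netty.channel.EventLoopGroup;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;

    public class TimeClient {

        public static void main(String[] args) throws Exception {
            String host = args[0];
            int port = Integer.parseInt(args[1]);
            EventLoopGroup workerGroup = new NioEventLoopGroup();

            try {
                Bootstrap b = new Bootstrap();                // client-side counterpart of ServerBootstrap
                b.group(workerGroup);                         // a client needs only one event loop group
                b.channel(NioSocketChannel.class);            // client-side channel type
                b.option(ChannelOption.SO_KEEPALIVE, true);   // no childOption() on the client side
                b.handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    public void initChannel(SocketChannel ch) throws Exception {
                        ch.pipeline().addLast(new TimeClientHandler());
                    }
                });

                // Start the client.
                ChannelFuture f = b.connect(host, port).sync();

                // Wait until the connection is closed.
                f.channel().closeFuture().sync();
            } finally {
                workerGroup.shutdownGracefully();
            }
        }
    }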

The handler used by TimeClient:

    package io.netty.example.time;

    import io.netty.buffer.ByteBuf;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    import java.util.Date;

    public class TimeClientHandler extends ChannelInboundHandlerAdapter {

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) {
            ByteBuf m = (ByteBuf) msg; // data received from the server arrives as a ByteBuf
            try {
                long currentTimeMillis = (m.readUnsignedInt() - 2208988800L) * 1000L;
                System.out.println(new Date(currentTimeMillis));
                ctx.close();
            } finally {
                m.release();
            }
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
            cause.printStackTrace();
            ctx.close();
        }
    }
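The constant 2208988800L in the two time handlers is the number of seconds between 1900-01-01 and 1970-01-01: the server sends seconds since 1900 as a 32-bit integer, and the client converts that value back to Unix milliseconds. The sketch below reproduces only that arithmetic with the JDK, without any networking. `TimeProtocolSketch`, `encode`, and `decode` are names invented for this illustration.

```java
import java.util.Date;

class TimeProtocolSketch {
    // Seconds from 1900-01-01T00:00:00Z to 1970-01-01T00:00:00Z.
    static final long SECONDS_1900_TO_1970 = 2208988800L;

    // What the server writes: seconds since 1900, truncated to 32 bits.
    static int encode(long epochMillis) {
        return (int) (epochMillis / 1000L + SECONDS_1900_TO_1970);
    }

    // What the client does after reading the 32 bits as an unsigned value.
    static long decode(int wireValue) {
        long unsigned = wireValue & 0xFFFFFFFFL; // reinterpret the int as unsigned
        return (unsigned - SECONDS_1900_TO_1970) * 1000L;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        long decoded = decode(encode(now));
        System.out.println(new Date(decoded));
        System.out.println("sub-second part lost: " + (now - decoded) + " ms");
    }
}
```

The value on the wire has one-second resolution, so the round trip drops the millisecond part. The masking step is also why the client handler reads the value as unsigned: current timestamps no longer fit in a signed 32-bit integer once the 1900 offset is added.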