Background

It has been a long time since I first learned PyTorch, and I had almost forgotten it. After working through "Dive into DL PyTorch" once more, I tried the Digit Recognizer competition on Kaggle.

Reference material

https://tangshusen.me/Dive-into-DL-PyTorch/#/

https://www.kaggle.com/kanncaa1/pytorch-tutorial-for-deep-learning-lovers

https://blog.csdn.net/oliver233/article/details/83274285

Code annotation

Code link: https://www.kaggle.com/yannnnnnnnnnnn/kernel5d66c76231/output

The experimental results are shown below. They look acceptable for now, and I will keep tuning later.

Default environment of Kaggle Kernel

    # This Python 3 environment comes with many helpful analytics libraries installed
    # It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python
    # For example, here's several helpful packages to load in

    import numpy as np # linear algebra
    import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

    # Input data files are available in the "../input/" directory.
    # For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

    import os
    for dirname, _, filenames in os.walk('/kaggle/input'):
        for filename in filenames:
            print(os.path.join(dirname, filename))

    # Any results you write to the current directory are saved as output.

output

    /kaggle/input/digit-recognizer/test.csv
    /kaggle/input/digit-recognizer/train.csv
    /kaggle/input/digit-recognizer/sample_submission.csv

Read data

    digit_recon_tran_csv = pd.read_csv('/kaggle/input/digit-recognizer/train.csv',dtype = np.float32)
    digit_recon_test_csv = pd.read_csv('/kaggle/input/digit-recognizer/test.csv',dtype = np.float32)
    print('tran dataset size: ',digit_recon_tran_csv.size,'\n')
    print('test dataset size: ',digit_recon_test_csv.size,'\n')

output

    tran dataset size: 32970000
    test dataset size: 21952000
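
The numbers printed above are element counts, not row counts: `DataFrame.size` is rows times columns. A minimal sketch on a made-up frame (the tiny `toy` table is hypothetical, not the Kaggle data) shows the difference from `.shape`. The same arithmetic reproduces the two figures above, given that the training table has 42000 rows with a label column plus 784 pixel columns and the test table has 28000 rows with 784 pixel columns:

```python
import numpy as np
import pandas as pd

# Hypothetical toy table: 3 rows, a label column plus 4 pixel columns.
toy = pd.DataFrame(np.zeros((3, 5), dtype=np.float32),
                   columns=["label", "pixel0", "pixel1", "pixel2", "pixel3"])

print(toy.shape)  # (rows, columns)
print(toy.size)   # rows * columns

# Same arithmetic for the sizes printed above.
train_elements = 42000 * (1 + 784)  # label column + 784 pixel columns
test_elements = 28000 * 784         # pixel columns only
print(train_elements, test_elements)
```

Using `.shape` instead of `.size` in the print statements would report the row and column counts directly.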

Convert pandas data to numpy

    tran_label = digit_recon_tran_csv.label.values
    tran_image = digit_recon_tran_csv.loc[:,digit_recon_tran_csv.columns != "label"].values/255 # normalization
    test_image = digit_recon_test_csv.values/255
    print('train label size: ',tran_label.shape)
    print('train image size: ',tran_image.shape)
    print('test image size: ',test_image.shape)

output

    train label size: (42000,)
    train image size: (42000, 784)
    test image size: (28000, 784)

Use sklearn to split the training data into training and validation sets

    from sklearn.model_selection import train_test_split

    train_image, valid_image, train_label, valid_label = train_test_split(tran_image,
                                                                          tran_label,
                                                                          test_size = 0.2,
                                                                          random_state = 42)
    print('train size: ',train_image.shape)
    print('valid size: ',valid_image.shape)

output

    train size: (33600, 784)
    valid size: (8400, 784)
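
As a sketch of what the split does, the snippet below runs `train_test_split` on synthetic arrays. The `stratify=` argument is an addition that the code above does not use; it keeps the class proportions equal in both parts. The random data and the variable names are placeholders.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
# Synthetic stand-ins for tran_image / tran_label (not the Kaggle data).
fake_image = rng.random((1000, 784), dtype=np.float32)
fake_label = np.repeat(np.arange(10), 100).astype(np.float32)  # 100 samples per digit

tr_x, va_x, tr_y, va_y = train_test_split(
    fake_image, fake_label,
    test_size=0.2,        # 20% of the rows go to validation
    random_state=42,      # fixed seed so the split is reproducible
    stratify=fake_label,  # assumption: keep the digit proportions in both parts
)

print(tr_x.shape, va_x.shape)
print(np.bincount(va_y.astype(int)))  # validation samples per digit
```

With a stratified split, every digit contributes the same share to the validation set, which makes the validation accuracy a little less noisy on small datasets.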

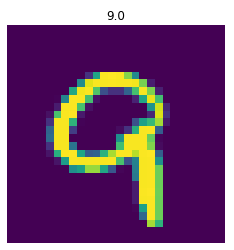

Visualize the training data

    # visual
    import matplotlib.pyplot as plt

    plt.imshow(train_image[10].reshape(28,28))
    plt.axis("off")
    plt.title(str(train_label[10]))
    plt.show()

output

Building data loader with PyTorch

    import torch
    import torch.nn as nn
    import numpy as np

    train_image = torch.from_numpy(train_image)
    train_label = torch.from_numpy(train_label).type(torch.LongTensor) # data type is long
    valid_image = torch.from_numpy(valid_image)
    valid_label = torch.from_numpy(valid_label).type(torch.LongTensor) # data type is long

    # form dataset
    train_dataset = torch.utils.data.TensorDataset(train_image,train_label)
    valid_dataset = torch.utils.data.TensorDataset(valid_image,valid_label)

    # form loader
    batch_size = 64 # 2^6=64
    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size = batch_size, shuffle = True)
    valid_loader = torch.utils.data.DataLoader(valid_dataset, batch_size = batch_size, shuffle = True)
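
To see what the loaders yield, here is a small sketch on random tensors (the data and the size of 1000 samples are made up). With 33600 training samples and a batch size of 64 there are exactly 525 full batches, but in general the last batch is smaller, which matters for any code that hard-codes the batch size when reshaping.

```python
import math
import torch
from torch.utils.data import DataLoader, TensorDataset

# Random stand-ins for the image and label tensors (hypothetical data).
images = torch.rand(1000, 784)
labels = torch.randint(0, 10, (1000,))

loader = DataLoader(TensorDataset(images, labels), batch_size=64, shuffle=True)

batch_sizes = [len(x) for x, _ in loader]
print(len(loader), batch_sizes[-1])  # number of batches, size of the last one

# The real training split: 33600 / 64 has no remainder.
print(math.ceil(33600 / 64), 33600 % 64)

# Reshape with -1 so a short last batch still works.
x, y = next(iter(loader))
print(x.view(-1, 1, 28, 28).shape, y.dtype)
```

Reshaping with `-1` in the batch dimension lets the same code handle both full and partial batches.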

Build the model with PyTorch. I designed it myself, loosely following AlexNet

    import torchvision
    from torchvision import transforms
    from torchvision import models

    class YANNet(nn.Module):
        def __init__(self):
            super(YANNet,self).__init__()
            self.conv = nn.Sequential(
                # size: 28*28
                nn.Conv2d(1,8,3,1,1), # in_channels out_channels kernel_size stride padding
                nn.ReLU(),
                nn.Conv2d(8,16,3,1,1),
                nn.ReLU(),
                nn.MaxPool2d(2),
                # size: 14*14
                nn.Conv2d(16,16,3,1,1),
                nn.ReLU(),
                nn.Conv2d(16,8,3,1,1),
                nn.ReLU(),
                nn.MaxPool2d(2)
            )
            self.fc = nn.Sequential(
                # size: 7*7
                nn.Linear(8*7*7,256),
                nn.ReLU(),
                nn.Dropout(0.5),
                nn.Linear(256,256),
                nn.ReLU(),
                nn.Dropout(0.5),
                nn.Linear(256,10)
            )

        def forward(self, img):
            x = self.conv(img)
            o = self.fc(x.view(x.shape[0],-1))
            return o
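
The size comments in the model follow from the usual output-size formula for a convolution or pooling layer, floor((n + 2p - k) / s) + 1. The helper `out_size` below is a hypothetical name used only for this check; it traces a 28×28 input through the layers and confirms the 8*7*7 input size of the first linear layer.

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size of a conv or pooling layer (no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1

n = 28
trace = [n]
# (kernel, stride, padding) for each layer in self.conv, in order.
layers = [
    (3, 1, 1),  # Conv2d(1, 8, 3, 1, 1)
    (3, 1, 1),  # Conv2d(8, 16, 3, 1, 1)
    (2, 2, 0),  # MaxPool2d(2): stride defaults to the kernel size
    (3, 1, 1),  # Conv2d(16, 16, 3, 1, 1)
    (3, 1, 1),  # Conv2d(16, 8, 3, 1, 1)
    (2, 2, 0),  # MaxPool2d(2)
]
for kernel, stride, padding in layers:
    n = out_size(n, kernel, stride, padding)
    trace.append(n)

flat_features = 8 * n * n  # 8 output channels after the last conv
print(trace)          # [28, 28, 28, 14, 14, 14, 7]
print(flat_features)  # 392
```

Because every 3×3 convolution uses stride 1 and padding 1, only the two pooling layers change the spatial size.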

Create the model and start training

    model = YANNet()
    error = nn.CrossEntropyLoss()
    optim = torch.optim.SGD(model.parameters(),lr=0.1)
    num_epoc = 7

    for epoch in range(num_epoc):
        epoc_train_loss = 0.0
        epoc_train_corr = 0.0
        epoc_valid_corr = 0.0
        print('Epoch:{}/{}'.format(epoch,num_epoc))

        model.train() # enable dropout while training
        for data in train_loader:
            images,labels = data
            images = images.view(-1,1,28,28) # -1 also handles a smaller last batch
            outputs = model(images)
            optim.zero_grad()
            loss = error(outputs,labels)
            loss.backward()
            optim.step()
            epoc_train_loss += loss.item() # sum of the per-batch mean losses
            outputs = torch.max(outputs.data,1)[1] # index of the largest logit is the predicted digit
            epoc_train_corr += torch.sum(outputs==labels).item()

        model.eval() # disable dropout while validating
        with torch.no_grad():
            for data in valid_loader:
                images,labels = data
                images = images.view(-1,1,28,28)
                outputs = model(images)
                outputs = torch.max(outputs,1)[1]
                epoc_valid_corr += torch.sum(outputs==labels).item()

        # "Test Accuracy" below is the accuracy on the validation split
        print("loss is :{:.4f},Train Accuracy is:{:.4f}%,Test Accuracy is:{:.4f}".format(epoc_train_loss/len(train_dataset),100*epoc_train_corr/len(train_dataset),100*epoc_valid_corr/len(valid_dataset)))

output

    Epoch:0/7
    loss is :0.0322,Train Accuracy is:22.7262%,Test Accuracy is:73.0119
    Epoch:1/7
    loss is :0.0047,Train Accuracy is:90.8244%,Test Accuracy is:94.4167
    Epoch:2/7
    loss is :0.0024,Train Accuracy is:95.4881%,Test Accuracy is:96.2143
    Epoch:3/7
    loss is :0.0019,Train Accuracy is:96.4226%,Test Accuracy is:96.6667
    Epoch:4/7
    loss is :0.0016,Train Accuracy is:97.0804%,Test Accuracy is:96.3095
    Epoch:5/7
    loss is :0.0013,Train Accuracy is:97.5833%,Test Accuracy is:97.1310
    Epoch:6/7
    loss is :0.0012,Train Accuracy is:97.8155%,Test Accuracy is:97.5119
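
One detail when reading the log: the loop adds up the mean loss of each batch and then divides by the number of samples, so the printed value is roughly the average per-sample loss divided by the batch size. A quick check of that arithmetic, using the epoch 6 value from the log:

```python
batch_size = 64
num_samples = 33600
num_batches = num_samples // batch_size  # 525 full batches

printed_loss = 0.0012  # "loss is" value reported for epoch 6

# printed = (sum of batch means) / num_samples
#         = (num_batches * mean_batch_loss) / (num_batches * batch_size)
mean_batch_loss = printed_loss * num_samples / num_batches
print(round(mean_batch_loss, 4))  # 0.0768
```

Dividing the accumulated loss by `len(train_loader)` instead of `len(train_dataset)` would print the mean batch loss directly.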

Predict the test data and save it as a csv file

    test_results = np.zeros((test_image.shape[0],2),dtype='int32')

    model.eval() # disable dropout for prediction
    with torch.no_grad(): # gradients are not needed at inference time
        for i in range(test_image.shape[0]):
            one_image = torch.from_numpy(test_image[i]).view(1,1,28,28)
            one_output = model(one_image)
            test_results[i,0] = i+1 # ImageId starts at 1
            test_results[i,1] = torch.max(one_output,1)[1].item()

    Data = {'ImageId': test_results[:, 0], 'Label': test_results[:, 1]}
    DataFrame = pd.DataFrame(Data)
    DataFrame.to_csv('submission.csv', index=False, sep=',')
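
Predicting one image at a time is simple but slow. A possible alternative, sketched here with a stand-in model and random pixels (both hypothetical; in the notebook they would be `model` and `test_image`), runs the test set through the network in chunks with dropout disabled and gradients turned off:

```python
import numpy as np
import pandas as pd
import torch
import torch.nn as nn

# Stand-ins so the sketch runs on its own (hypothetical model and data).
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(784, 10))
toy_test_image = np.random.rand(300, 784).astype(np.float32)

toy_model.eval()  # switch off dropout (a no-op for this toy model)
predictions = []
with torch.no_grad():  # no gradients needed for inference
    inputs = torch.from_numpy(toy_test_image).view(-1, 1, 28, 28)
    for chunk in torch.split(inputs, 128):  # 128 images per forward pass
        predictions.append(toy_model(chunk).argmax(dim=1))
labels = torch.cat(predictions).numpy()

submission = pd.DataFrame({
    "ImageId": np.arange(1, len(labels) + 1),  # ids start at 1
    "Label": labels,
})
print(submission.shape)
```

The chunk size of 128 is an arbitrary choice; any value that fits in memory gives the same predictions.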

Summary

That is the whole process. The current accuracy is not particularly high, only about 94%. Possible reasons:

- No data augmentation on the training data

- Too few epochs and no learning-rate adjustment

- The network structure is still very simple; a more complex one, for example following the structure of ResNet, might do better.
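
For the learning-rate point, one option is a step schedule. The sketch below uses `torch.optim.lr_scheduler.StepLR` on a placeholder model; the step size of 2 epochs and the decay factor of 0.5 are arbitrary illustration values, not tuned settings.

```python
import torch
import torch.nn as nn

toy_model = nn.Linear(784, 10)  # placeholder for the real network
optimizer = torch.optim.SGD(toy_model.parameters(), lr=0.1)
# Halve the learning rate every 2 epochs (illustrative values).
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

lr_per_epoch = []
for epoch in range(6):
    lr_per_epoch.append(optimizer.param_groups[0]["lr"])
    # ... the training batches for this epoch would run here ...
    optimizer.step()   # stands in for the per-batch updates
    scheduler.step()   # advance the schedule once per epoch

print(lr_per_epoch)
```

In a real run, `scheduler.step()` would go at the end of each epoch in the training loop, after all batches have been processed.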