1, Introduction

After the product goes online, our data is very important. It's not allowed to be lost. We should be well prepared. If one day is hacked or deleted maliciously, it will crash. So we need to do full backup and incremental backup for our online database regularly. For example, make incremental backup once an hour, full backup once a day for hot databases, and weekly backup once a week for cold databases. The operating system involved below is centos7.

2, Full backup

/usr/bin/mysqldump -uroot -proot --lock-all-tables --flush-logs hzero-customs > /home/backup.sql

As shown in the above code, its function is to back up the hzoro customers database in full. Where MySQL user name is: root password is: root backup file path is: / home (of course, this path can also be modified according to personal wishes.) The backed up file name is: backup.sql Parameter - Flush logs: use a new log file to record the next log parameter - lock all tables: lock all databases

1. Backup Shell script

#!/bin/bash #Get current time date_simple=$(date "+%Y%m%d") date_now=$(date "+%Y%m%d-%H%M%S") backUpFolder=/home/db/backup/mysql username="root" password="root" db_name="bjja_mdm" #Define backup file name fileName="${db_name}_${date_now}.sql" #Define backup file directory backUpFileName="${backUpFolder}/${date_simple}/${fileName}" mkdir "${backUpFolder}/${date_simple}" echo "starting backup mysql ${db_name} at ${date_now}." docker exec -it mysqlmaster /usr/bin/mysqldump -u${username} -p${password} --lock-all-tables --flush-logs ${db_name} > ${backUpFileName} #Go to backup file directory cd ${backUpFolder}/${date_simple} #Compress backup files tar zcvf ${fileName}.tar.gz ${fileName} rm -rf ${fileName} # use nodejs to upload backup file other place #NODE_ENV=$backUpFolder@$backUpFileName node /home/tasks/upload.js date_end=$(date "+%Y%m%d-%H%M%S") echo "finish backup mysql database ${db_name} at ${date_end}." date_now=$(date "+%Y%m%d-%H%M%S") backUpFolder=/home/db/backup/mysql username="root" password="root" db_name="hzero_customs" #Define backup file name fileName="${db_name}_${date_now}.sql" #Define backup file directory backUpFileName="${backUpFolder}/${date_simple}/${fileName}" echo "starting backup mysql ${db_name} at ${date_now}." docker exec -it mysqlmaster /usr/bin/mysqldump -u${username} -p${password} --lock-all-tables --flush-logs ${db_name} > ${backUpFileName} #Go to backup file directory cd ${backUpFolder}/${date_simple} #Compress backup files tar zcvf ${fileName}.tar.gz ${fileName} rm -rf ${fileName} # use nodejs to upload backup file other place #NODE_ENV=$backUpFolder@$backUpFileName node /home/tasks/upload.js date_end=$(date "+%Y%m%d-%H%M%S") echo "finish backup mysql database ${db_name} at ${date_end}." date_now=$(date "+%Y%m%d-%H%M%S") backUpFolder=/home/db/backup/mysql username="root" password="root" db_name="hzero_platform" #Define backup file name fileName="${db_name}_${date_now}.sql" #Define backup file directory backUpFileName="${backUpFolder}/${date_simple}/${fileName}" echo "starting backup mysql ${db_name} at ${date_now}." docker exec -it mysqlmaster /usr/bin/mysqldump -u${username} -p${password} --lock-all-tables --flush-logs ${db_name} > ${backUpFileName} #Enter the backup file directory cd ${backUpFolder}/${date_simple} #Compress backup files tar zcvf ${fileName}.tar.gz ${fileName} rm -rf ${fileName} # use nodejs to upload backup file other place #NODE_ENV=$backUpFolder@$backUpFileName node /home/tasks/upload.js date_end=$(date "+%Y%m%d-%H%M%S") echo "finish backup mysql database ${db_name} at ${date_end}."

2. Restore full backup

mysql -h localhost -uroot -proot < bakdup.sql

perhaps

mysql> source /path/backup/bakdup.sql

After the full backup is restored, the incremental backup after the full backup is also restored to the database.

3. Scheduled backup

Enter the following command to enter the crontab scheduled task editing interface:

crontab -e

Add the following command, which means to execute the backup script every minute:

* * * * * sh /usr/your/path/mysqlBackup.sh

Every five minutes:

*/5 * * * * sh /usr/your/path/mysqlBackup.sh

Hourly execution:

0 * * * * sh /usr/your/path/mysqlBackup.sh

Every day:

0 0 * * * sh /usr/your/path/mysqlBackup.sh

Weekly:

0 0 * * 0 sh /usr/your/path/mysqlBackup.sh

Monthly execution:

0 0 1 * * sh /usr/your/path/mysqlBackup.sh

Annually:

0 0 1 1 * sh /usr/your/path/mysqlBackup.sh

Restart crontab

service crond restart

3, Incremental backup

First, before incremental backup, you need to check the configuration file and log_ Whether bin is enabled? To do incremental backup, log must be enabled first_ bin . First, enter the myslq command line and enter the following command:

show variables like '%log_bin%';

It is not opened as shown in the following command

mysql> show variables like '%log_bin%'; +---------------------------------+-------+ | Variable_name | Value | +---------------------------------+-------+ | log_bin | OFF | | log_bin_basename | | | log_bin_index | | | log_bin_trust_function_creators | OFF | | log_bin_use_v1_row_events | OFF | | sql_log_bin | ON | +---------------------------------+-------+

1. Modify the configuration file and turn on logging

Edit Mysql configuration file my.ini perhaps docker.cnf

[mysqld] lower_case_table_names=1 max_connections=2000 server-id=1 log-bin=master-bin #Only read and write, as long as the master database configuration. If you want to make master-slave switch, both the master database and the slave database need to be opened skip-host-cache skip-name-resolve collation-server = utf8_unicode_ci init-connect='SET NAMES utf8' slave_skip_errors=1032 character-set-server = utf8 sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION [mysql] default-character-set=utf8 [client] default-character-set=utf8

After modification, restart mysql service, and enter:

show variables like '%log_bin%';

The status is as follows

mysql> show variables like '%log_bin%'; +---------------------------------+--------------------------------+ | Variable_name | Value | +---------------------------------+--------------------------------+ | log_bin | ON | | log_bin_basename | /var/lib/mysql/mysql-bin | | log_bin_index | /var/lib/mysql/mysql-bin.index | | log_bin_trust_function_creators | OFF | | log_bin_use_v1_row_events | OFF | | sql_log_bin | ON | +---------------------------------+--------------------------------+

2. Incremental backup script file

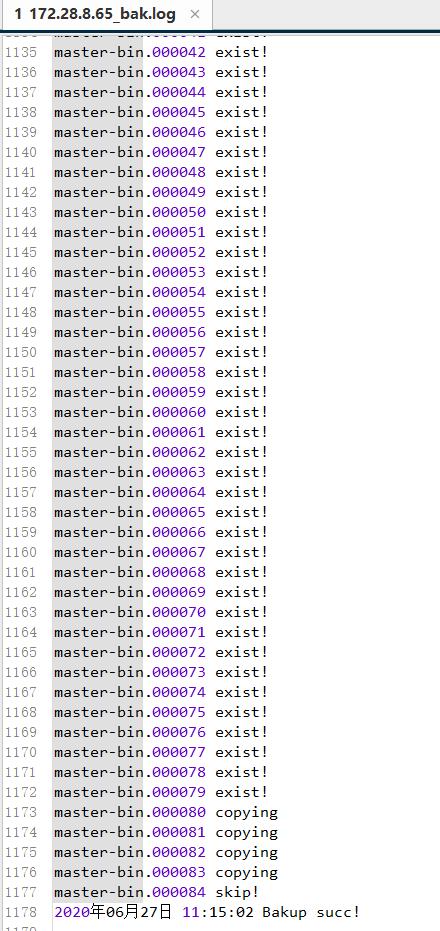

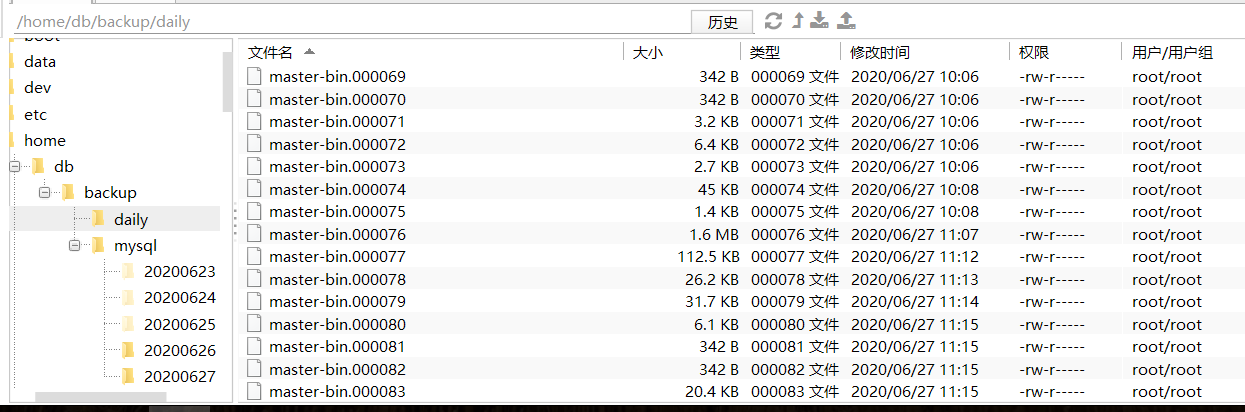

#!/bin/bash #Before using, please create the following directories in advance backupDir=/home/db/backup/daily #Copy the target directory of mysql-bin.00000 * during incremental backup, and manually create this directory in advance mysqlDir=/usr/local/mysql/data #mysql data directory logFile=/home/db/backup/bak.log BinFile=/usr/local/mysql/data/master-bin.index #The index file path of mysql is placed in the data directory docker exec -it mysqlmaster /usr/bin/mysqladmin -uroot -proot flush-logs #This is used to generate a new mysql-bin.00000 * file # Wc-l statistics lines # Awk simply says that awk is to read the file line by line, slice each line with a space as the default separator, and then analyze the cut part. Counter=`wc -l $BinFile |awk '{print $1}'` NextNum=0 #This for loop is used to compare the values of $counter and $nextnum to determine whether the file exists or is up to date for file in `cat $BinFile` do base=`basename $file` echo $base #basename is used to intercept the file name of mysql-bin.00000 * and remove the one before. / mysql-bin.000005/ NextNum=`expr $NextNum + 1` if [ $NextNum -eq $Counter ] then echo $base skip! >> $logFile else dest=$backupDir/$base if(test -e $dest) #test -e is used to check whether the target file exists. If it exists, write exist! To $logFile then echo $base exist! >> $logFile else cp $mysqlDir/$base $backupDir echo $base copying >> $logFile fi fi done echo `date +"%Y year%m month%d day %H:%M:%S"` $Next Bakup succ! >> $logFile

3. View log file

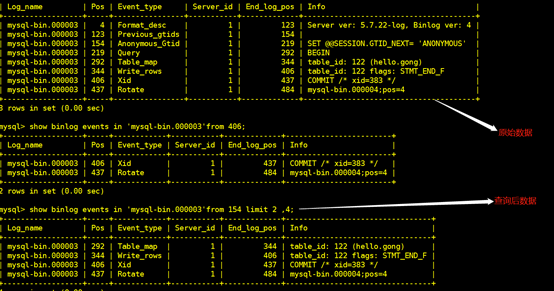

show binlog events in 'mysql-bin.000003';

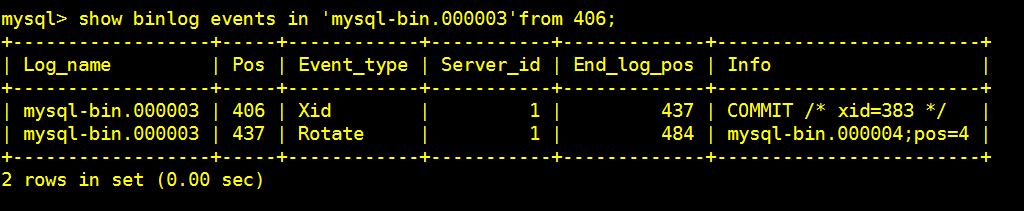

Specify query, starting from pos point 406

Specify query, start from pos point 154, skip 2 lines in the middle, and query 4 pieces of data

4. Recover log files

/usr/bin/mysqlbinlog --start-position=573 --stop-position=718 --database=hello /var/lib/mysql/mysql-bin.000006 | /usr/bin/mysql -uroot -p password -v hello

Restore by time

/usr/bin/mysqlbinlog --start-datetime="2018-04-27 20:57:55" --stop-datetime="2018-04-27 20:58:18" --database=hello /var/lib/mysql/mysql-bin.000009 | /usr/bin/mysql -uroot -p8856769abcd -v hello

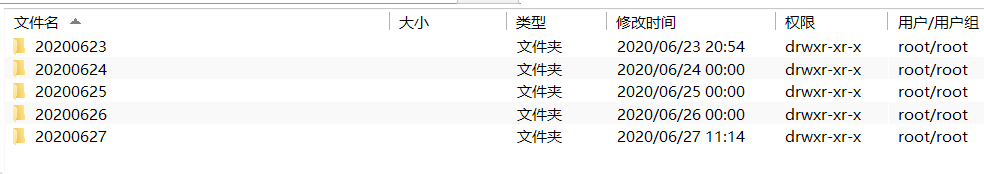

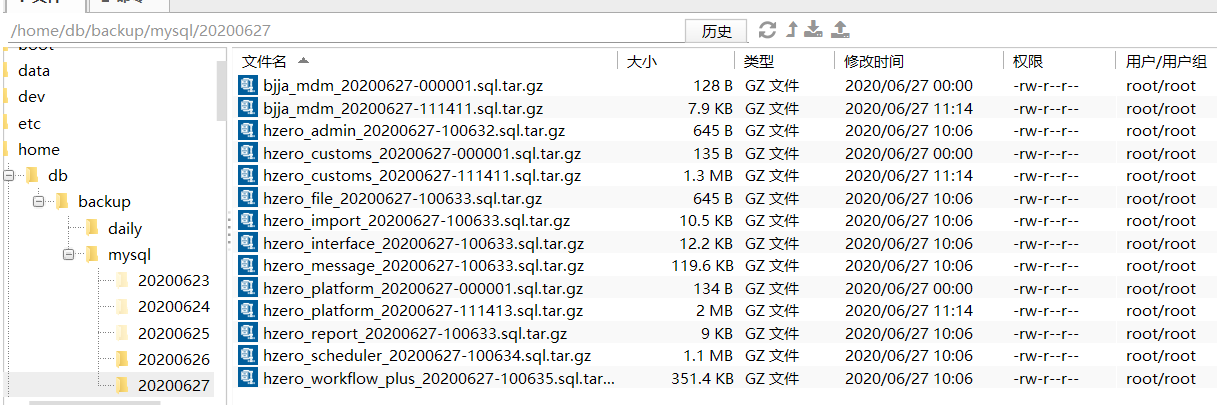

4, Effect

1. Full backup

2. Incremental backup