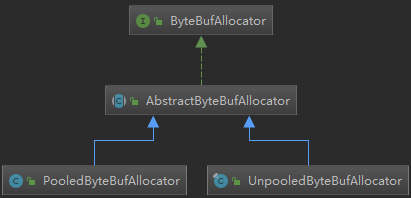

ByteBufAllocator Memory Manager:

The top-level abstraction for memory allocation in Netty is ByteBufAllocator, which is responsible for allocating every kind of ByteBuf. It does not expose many methods; the important APIs are the following:

public interface ByteBufAllocator {

/**

* Allocate a block of memory; the implementation decides whether it is in-heap or off-heap memory.

* Allocate a {@link ByteBuf}. If it is a direct or heap buffer depends on the actual implementation.

*/

ByteBuf buffer();

/**

* Allocate off-heap direct memory whenever possible, falling back to in-heap memory if the system does not support it.

* Allocate a {@link ByteBuf}, preferably a direct buffer which is suitable for I/O.

*/

ByteBuf ioBuffer();

/**

* Allocate a block of in-heap memory.

* Allocate a heap {@link ByteBuf}.

*/

ByteBuf heapBuffer();

/**

* Allocate a block of off-heap memory.

* Allocate a direct {@link ByteBuf}.

*/

ByteBuf directBuffer();

/**

* Composite allocation, which combines multiple ByteBufs into one logical buffer.

* Allocate a {@link CompositeByteBuf}. If it is a direct or heap buffer depends on the actual implementation.

*/

CompositeByteBuf compositeBuffer();

}

You may wonder why there is no allocation API for each of the 8 buffer types mentioned above. Let's look at AbstractByteBufAllocator, the base implementation of ByteBufAllocator, and focus on how it implements the main APIs. The source of the buffer() method is as follows:

public abstract class AbstractByteBufAllocator implements ByteBufAllocator {

@Override

public ByteBuf buffer() {

// Use a direct buffer when direct memory is the default

if (directByDefault) {

return directBuffer();

}

return heapBuffer();

}

}

The buffer() method checks the directByDefault flag: if direct buffers are the default, it allocates a direct buffer; otherwise it allocates a heap buffer. The implementations of directBuffer() and heapBuffer() are almost identical, so let's look at directBuffer():

@Override

public ByteBuf directBuffer() {

// Initial capacity defaults to 256; maximum capacity defaults to Integer.MAX_VALUE

return directBuffer(DEFAULT_INITIAL_CAPACITY, DEFAULT_MAX_CAPACITY);

}

@Override

public ByteBuf directBuffer(int initialCapacity, int maxCapacity) {

if (initialCapacity == 0 && maxCapacity == 0) {

return emptyBuf;

}

// Validate the initial capacity and the maximum capacity

validate(initialCapacity, maxCapacity);

return newDirectBuffer(initialCapacity, maxCapacity);

}

/**

* Create a direct {@link ByteBuf} with the given initialCapacity and maxCapacity.

*/

protected abstract ByteBuf newDirectBuffer(int initialCapacity, int maxCapacity);

The directBuffer() method has several overloads, all of which eventually call newDirectBuffer(). newDirectBuffer() is an abstract method, so the real work is done by a subclass of AbstractByteBufAllocator. Likewise, heapBuffer() eventually calls the abstract newHeapBuffer() method, which is also implemented by a subclass. AbstractByteBufAllocator has two main subclasses: PooledByteBufAllocator and UnpooledByteBufAllocator.
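The delegation described above is a template-method arrangement. The following is a minimal sketch of that structure only, assuming plain java.nio.ByteBuffer in place of ByteBuf; the class names SketchAllocator and SketchUnpooledAllocator are hypothetical and are not Netty classes.

```java
import java.nio.ByteBuffer;

// Simplified model of the template-method structure; NOT Netty's actual classes.
abstract class SketchAllocator {
    static final int DEFAULT_INITIAL_CAPACITY = 256;
    static final int DEFAULT_MAX_CAPACITY = Integer.MAX_VALUE;

    private final boolean directByDefault;

    SketchAllocator(boolean directByDefault) {
        this.directByDefault = directByDefault;
    }

    // Public entry point: picks direct or heap memory depending on the flag.
    final ByteBuffer buffer() {
        return directByDefault
                ? directBuffer(DEFAULT_INITIAL_CAPACITY, DEFAULT_MAX_CAPACITY)
                : heapBuffer(DEFAULT_INITIAL_CAPACITY, DEFAULT_MAX_CAPACITY);
    }

    // Shared validation lives in the base class; creation is left to subclasses.
    final ByteBuffer directBuffer(int initialCapacity, int maxCapacity) {
        validate(initialCapacity, maxCapacity);
        return newDirectBuffer(initialCapacity, maxCapacity);
    }

    final ByteBuffer heapBuffer(int initialCapacity, int maxCapacity) {
        validate(initialCapacity, maxCapacity);
        return newHeapBuffer(initialCapacity, maxCapacity);
    }

    private static void validate(int initialCapacity, int maxCapacity) {
        if (initialCapacity < 0) {
            throw new IllegalArgumentException("initialCapacity: " + initialCapacity);
        }
        if (initialCapacity > maxCapacity) {
            throw new IllegalArgumentException("initialCapacity exceeds maxCapacity");
        }
    }

    protected abstract ByteBuffer newDirectBuffer(int initialCapacity, int maxCapacity);

    protected abstract ByteBuffer newHeapBuffer(int initialCapacity, int maxCapacity);
}

// An "unpooled" subclass: every request creates a brand-new buffer.
final class SketchUnpooledAllocator extends SketchAllocator {
    SketchUnpooledAllocator(boolean directByDefault) {
        super(directByDefault);
    }

    @Override
    protected ByteBuffer newDirectBuffer(int initialCapacity, int maxCapacity) {
        return ByteBuffer.allocateDirect(initialCapacity);
    }

    @Override
    protected ByteBuffer newHeapBuffer(int initialCapacity, int maxCapacity) {
        return ByteBuffer.allocate(initialCapacity);
    }

    public static void main(String[] args) {
        System.out.println(new SketchUnpooledAllocator(true).buffer().isDirect());
        System.out.println(new SketchUnpooledAllocator(false).buffer().isDirect());
    }
}
```

Keeping validation in the base class means a pooled and an unpooled subclass only differ in how they create the buffer.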

So far we only know how direct versus heap and pooled versus unpooled buffers are chosen. How are unsafe and non-unsafe buffers distinguished? Netty decides this automatically: if the runtime makes sun.misc.Unsafe available, reads and writes go through Unsafe; otherwise they use the non-unsafe implementation. The source code of UnpooledByteBufAllocator shows this:

public final class UnpooledByteBufAllocator extends AbstractByteBufAllocator implements ByteBufAllocatorMetricProvider {

@Override

protected ByteBuf newHeapBuffer(int initialCapacity, int maxCapacity) {

return PlatformDependent.hasUnsafe() ? new UnpooledUnsafeHeapByteBuf(this, initialCapacity, maxCapacity)

: new UnpooledHeapByteBuf(this, initialCapacity, maxCapacity);

}

@Override

protected ByteBuf newDirectBuffer(int initialCapacity, int maxCapacity) {

ByteBuf buf = PlatformDependent.hasUnsafe() ?

UnsafeByteBufUtil.newUnsafeDirectByteBuf(this, initialCapacity, maxCapacity) :

new UnpooledDirectByteBuf(this, initialCapacity, maxCapacity);

return disableLeakDetector ? buf : toLeakAwareBuffer(buf);

}

}

In both newHeapBuffer() and newDirectBuffer(), the allocator asks PlatformDependent whether Unsafe is available. If it is, an Unsafe-backed buffer is created; otherwise a non-Unsafe buffer is created. Netty makes this decision for us automatically.
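As a rough illustration of what such an availability check can look like, here is a minimal sketch that probes for sun.misc.Unsafe via reflection. It is an assumption-laden simplification: the class UnsafeProbe and its methods are hypothetical, and Netty's real PlatformDependent.hasUnsafe() applies additional checks that are not modelled here.

```java
import java.lang.reflect.Field;

// Hypothetical probe; a simplified stand-in for an "is Unsafe available?" check.
final class UnsafeProbe {
    private UnsafeProbe() {
    }

    // Returns true when an instance of sun.misc.Unsafe can be obtained reflectively.
    static boolean hasUnsafe() {
        try {
            Class<?> unsafeClass = Class.forName("sun.misc.Unsafe");
            Field theUnsafe = unsafeClass.getDeclaredField("theUnsafe");
            theUnsafe.setAccessible(true);
            return theUnsafe.get(null) != null;
        } catch (Throwable t) {
            // Class missing, access denied, and so on: fall back to the safe path.
            return false;
        }
    }

    // Mirrors the ternary in newHeapBuffer(): pick an implementation by capability.
    static String chooseHeapImplementation() {
        return hasUnsafe() ? "unsafe-backed heap buffer" : "plain array-backed heap buffer";
    }

    public static void main(String[] args) {
        System.out.println(chooseHeapImplementation());
    }
}
```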

Unpooled (non-pooled) memory allocation:

Allocation of in-heap memory:

Now let's look at how UnpooledByteBufAllocator allocates memory. We start with the heap buffer logic in the newHeapBuffer() method:

public final class UnpooledByteBufAllocator extends AbstractByteBufAllocator implements ByteBufAllocatorMetricProvider {

protected ByteBuf newHeapBuffer(int initialCapacity, int maxCapacity) {

// If the JDK exposes an Unsafe instance, use the Unsafe-backed implementation

return PlatformDependent.hasUnsafe() ? new UnpooledUnsafeHeapByteBuf(this, initialCapacity, maxCapacity)

: new UnpooledHeapByteBuf(this, initialCapacity, maxCapacity);

}

}

PlatformDependent.hasUnsafe() determines whether Unsafe is available. If it is, an UnpooledUnsafeHeapByteBuf is created; otherwise an UnpooledHeapByteBuf is created. Let's step into the UnpooledUnsafeHeapByteBuf constructor and see what it does.

final class UnpooledUnsafeHeapByteBuf extends UnpooledHeapByteBuf {

....

UnpooledUnsafeHeapByteBuf(ByteBufAllocator alloc, int initialCapacity, int maxCapacity) {

// The parent constructor is shown below

super(alloc, initialCapacity, maxCapacity);

}

....

}

public UnpooledHeapByteBuf(ByteBufAllocator alloc, int initialCapacity, int maxCapacity) {

super(maxCapacity);//The parent constructor is as follows

checkNotNull(alloc, "alloc");

if (initialCapacity > maxCapacity) {

throw new IllegalArgumentException(String.format(

"initialCapacity(%d) > maxCapacity(%d)", initialCapacity, maxCapacity));

}

this.alloc = alloc;

// Store the newly allocated array (new byte[initialCapacity]) in the array field

setArray(allocateArray(initialCapacity));

setIndex(0, 0);

}

protected AbstractReferenceCountedByteBuf(int maxCapacity) {

super(maxCapacity);//The parent constructor is as follows

}

protected AbstractByteBuf(int maxCapacity) {

checkPositiveOrZero(maxCapacity, "maxCapacity");

this.maxCapacity = maxCapacity;

}

One key method is setArray(), which is very simple: it stores the newly allocated array (new byte[initialCapacity]) in the array field. Next, setIndex() is invoked, and readerIndex and writerIndex are finally initialized in setIndex0():

@Override

public ByteBuf setIndex(int readerIndex, int writerIndex) {

if (checkBounds) {

// Check the relationship among the three values: readerIndex <= writerIndex <= capacity

checkIndexBounds(readerIndex, writerIndex, capacity());

}

setIndex0(readerIndex, writerIndex);

return this;

}

// AbstractByteBuf sets the read and write pointer positions

final void setIndex0(int readerIndex, int writerIndex) {

this.readerIndex = readerIndex;

this.writerIndex = writerIndex;

}
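To make the role of the two indices concrete, here is a minimal sketch of an array-backed buffer with separate read and write positions. The class IndexedBuffer is hypothetical and only models the invariant 0 <= readerIndex <= writerIndex <= capacity; it is not how UnpooledHeapByteBuf is implemented.

```java
// Hypothetical toy buffer illustrating the readerIndex/writerIndex invariant.
final class IndexedBuffer {
    private final byte[] array;
    private int readerIndex;
    private int writerIndex;

    IndexedBuffer(int initialCapacity) {
        this.array = new byte[initialCapacity];
        setIndex(0, 0); // a fresh buffer has nothing to read
    }

    // Enforces 0 <= readerIndex <= writerIndex <= capacity before storing both.
    IndexedBuffer setIndex(int readerIndex, int writerIndex) {
        if (readerIndex < 0 || readerIndex > writerIndex || writerIndex > array.length) {
            throw new IndexOutOfBoundsException(
                    "readerIndex: " + readerIndex + ", writerIndex: " + writerIndex
                            + ", capacity: " + array.length);
        }
        this.readerIndex = readerIndex;
        this.writerIndex = writerIndex;
        return this;
    }

    void writeByte(int value) {
        if (writerIndex == array.length) {
            throw new IndexOutOfBoundsException("buffer is full");
        }
        array[writerIndex++] = (byte) value;
    }

    byte readByte() {
        if (readerIndex == writerIndex) {
            throw new IndexOutOfBoundsException("nothing to read");
        }
        return array[readerIndex++];
    }

    int readableBytes() {
        return writerIndex - readerIndex;
    }

    int writableBytes() {
        return array.length - writerIndex;
    }

    public static void main(String[] args) {
        IndexedBuffer buf = new IndexedBuffer(4);
        buf.writeByte(7);
        buf.writeByte(9);
        System.out.println(buf.readByte() + " read, " + buf.readableBytes() + " left");
    }
}
```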

Since UnpooledUnsafeHeapByteBuf and UnpooledHeapByteBuf both go through the UnpooledHeapByteBuf constructor, what is the difference between them? The fundamental difference lies in how bytes are read and written. Comparing their getByte() methods makes this clear. Let's start with the getByte() implementation of UnpooledHeapByteBuf:

@Override

public byte getByte(int index) {

ensureAccessible();

return _getByte(index);

}

@Override

protected byte _getByte(int index) {

return HeapByteBufUtil.getByte(array, index);

}

final class HeapByteBufUtil {

//Take values directly by array index

static byte getByte(byte[] memory, int index) {

return memory[index];

}

}

Now consider the getByte() implementation of UnpooledUnsafeHeapByteBuf:

@Override

public byte getByte(int index) {

checkIndex(index);

return _getByte(index);

}

@Override

protected byte _getByte(int index) {

return UnsafeByteBufUtil.getByte(array, index);

}

// Delegates to the underlying Unsafe to read the byte

static byte getByte(byte[] array, int index) {

return PlatformDependent.getByte(array, index);

}

This comparison shows the essential difference between UnpooledUnsafeHeapByteBuf and UnpooledHeapByteBuf: the former reads through Unsafe, the latter indexes the array directly.

Out-of-heap memory allocation:

Let's go back to the newDirectBuffer() method of UnpooledByteBufAllocator:

@Override

protected ByteBuf newDirectBuffer(int initialCapacity, int maxCapacity) {

ByteBuf buf = PlatformDependent.hasUnsafe() ?

UnsafeByteBufUtil.newUnsafeDirectByteBuf(this, initialCapacity, maxCapacity) :

new UnpooledDirectByteBuf(this, initialCapacity, maxCapacity);

return disableLeakDetector ? buf : toLeakAwareBuffer(buf);

}

As with heap allocation, if Unsafe is available the UnsafeByteBufUtil.newUnsafeDirectByteBuf() method is called; otherwise an UnpooledDirectByteBuf is created. Let's first look at the UnpooledDirectByteBuf constructor:

protected UnpooledDirectByteBuf(ByteBufAllocator alloc, int initialCapacity, int maxCapacity) {

super(maxCapacity); // The parent constructor is shown below

// check param

this.alloc = alloc;

// ByteBuffer.allocateDirect() asks the JDK to allocate a direct buffer; setByteBuffer() mainly stores it

setByteBuffer(ByteBuffer.allocateDirect(initialCapacity));

}

private void setByteBuffer(ByteBuffer buffer) {

ByteBuffer oldBuffer = this.buffer;

if (oldBuffer != null) {

if (doNotFree) {

doNotFree = false;

} else {

freeDirect(oldBuffer);

}

}

this.buffer = buffer;

tmpNioBuf = null;

// remaining() is limit - position; for a freshly allocated buffer this equals its capacity

capacity = buffer.remaining();

}

protected AbstractReferenceCountedByteBuf(int maxCapacity) {

super(maxCapacity);//The parent constructor is as follows

}

protected AbstractByteBuf(int maxCapacity) {

if (maxCapacity < 0) {

throw new IllegalArgumentException("maxCapacity: " + maxCapacity + " (expected: >= 0)");

}

this.maxCapacity = maxCapacity;

}

Next, let's step into the UnsafeByteBufUtil.newUnsafeDirectByteBuf() method and look at its logic:

static UnpooledUnsafeDirectByteBuf newUnsafeDirectByteBuf(

ByteBufAllocator alloc, int initialCapacity, int maxCapacity) {

if (PlatformDependent.useDirectBufferNoCleaner()) {

return new UnpooledUnsafeNoCleanerDirectByteBuf(alloc, initialCapacity, maxCapacity);

}

return new UnpooledUnsafeDirectByteBuf(alloc, initialCapacity, maxCapacity);

}

protected UnpooledUnsafeDirectByteBuf(ByteBufAllocator alloc, int initialCapacity, int maxCapacity) {

super(maxCapacity);//The parent constructor is as follows

//check param

this.alloc = alloc;

setByteBuffer(allocateDirect(initialCapacity), false);

}

protected AbstractReferenceCountedByteBuf(int maxCapacity) {

super(maxCapacity);//The parent constructor is as follows

}

protected AbstractByteBuf(int maxCapacity) {

if (maxCapacity < 0) {

throw new IllegalArgumentException("maxCapacity: " + maxCapacity + " (expected: >= 0)");

}

// Set the maximum capacity of the buffer

this.maxCapacity = maxCapacity;

}

Its logic is similar to that of the UnpooledDirectByteBuf constructor, so we focus on the setByteBuffer() method:

final void setByteBuffer(ByteBuffer buffer, boolean tryFree) {

if (tryFree) {

ByteBuffer oldBuffer = this.buffer;

if (oldBuffer != null) {

if (doNotFree) {

doNotFree = false;

} else {

freeDirect(oldBuffer);

}

}

}

this.buffer = buffer;

memoryAddress = PlatformDependent.directBufferAddress(buffer);

tmpNioBuf = null;

// remaining() is limit - position; for a freshly allocated buffer this equals its capacity

capacity = buffer.remaining();

}

The method first stores the buffer allocated by the JDK, then calls PlatformDependent.directBufferAddress() to obtain the buffer's real memory address and saves it in the memoryAddress field. Let's look at PlatformDependent.directBufferAddress():

public static long directBufferAddress(ByteBuffer buffer) {

return PlatformDependent0.directBufferAddress(buffer);

}

static long directBufferAddress(ByteBuffer buffer) {

return getLong(buffer, ADDRESS_FIELD_OFFSET);

}

private static long getLong(Object object, long fieldOffset) {

return UNSAFE.getLong(object, fieldOffset);

}

As you can see, this ends in UNSAFE.getLong(), a native method. It reads a long value located at the given field offset inside the ByteBuffer object, which here is the buffer's native memory address. At this point the difference between UnpooledUnsafeDirectByteBuf and UnpooledDirectByteBuf is clear: the non-unsafe variant accesses data by index through the array or ByteBuffer, while the unsafe variant operates on memory addresses directly, which is generally faster.

Pooled memory allocation:

Now let's analyze how pooled memory is allocated. The two allocation methods, newDirectBuffer() and newHeapBuffer(), are implemented by PooledByteBufAllocator, a subclass of AbstractByteBufAllocator. Let's take newDirectBuffer() as an example.

public class PooledByteBufAllocator extends AbstractByteBufAllocator {

......

@Override

protected ByteBuf newDirectBuffer(int initialCapacity, int maxCapacity) {

PoolThreadCache cache = threadCache.get();

PoolArena<ByteBuffer> directArena = cache.directArena;

ByteBuf buf;

if (directArena != null) {

buf = directArena.allocate(cache, initialCapacity, maxCapacity);

} else {

if (PlatformDependent.hasUnsafe()) {

buf = UnsafeByteBufUtil.newUnsafeDirectByteBuf(this, initialCapacity, maxCapacity);

} else {

buf = new UnpooledDirectByteBuf(this, initialCapacity, maxCapacity);

}

}

return toLeakAwareBuffer(buf);

}

......

}

First, the method obtains a PoolThreadCache object through threadCache.get(), then reads the directArena object from that cache, and finally calls directArena.allocate() to allocate the ByteBuf. This may look confusing at first, so let's analyze it in detail. The threadCache field is of type PoolThreadLocalCache, whose source code is:

final class PoolThreadLocalCache extends FastThreadLocal<PoolThreadCache> {

@Override

protected synchronized PoolThreadCache initialValue() {

final PoolArena<byte[]> heapArena = leastUsedArena(heapArenas);

final PoolArena<ByteBuffer> directArena = leastUsedArena(directArenas);

return new PoolThreadCache(

heapArena, directArena, tinyCacheSize, smallCacheSize, normalCacheSize,

DEFAULT_MAX_CACHED_BUFFER_CAPACITY, DEFAULT_CACHE_TRIM_INTERVAL);

}

.......

}
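The idea of binding each thread to the least-used arena on first access can be sketched as follows. This is a simplified model under stated assumptions: ArenaBinding, Arena, and bindSequentially are hypothetical names, a plain ThreadLocal stands in for FastThreadLocal, and the usage counter is never decremented when a thread ends.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model: each thread is bound once to the arena with the fewest users.
final class ArenaBinding {
    static final class Arena {
        final int id;
        final AtomicInteger numThreadCaches = new AtomicInteger();

        Arena(int id) {
            this.id = id;
        }
    }

    private final Arena[] arenas;
    private final ThreadLocal<Arena> threadArena;

    ArenaBinding(int numArenas) {
        arenas = new Arena[numArenas];
        for (int i = 0; i < numArenas; i++) {
            arenas[i] = new Arena(i);
        }
        // Runs at most once per thread, on that thread's first call to get().
        threadArena = ThreadLocal.withInitial(this::bindLeastUsed);
    }

    // synchronized so that two threads initialising at once see consistent counts.
    private synchronized Arena bindLeastUsed() {
        Arena min = arenas[0];
        for (Arena arena : arenas) {
            if (arena.numThreadCaches.get() < min.numThreadCaches.get()) {
                min = arena;
            }
        }
        min.numThreadCaches.incrementAndGet();
        return min;
    }

    Arena arenaForCurrentThread() {
        return threadArena.get();
    }

    // Demo helper: runs threads one after another and records each thread's arena id.
    int[] bindSequentially(int numThreads) {
        int[] ids = new int[numThreads];
        for (int i = 0; i < numThreads; i++) {
            final int slot = i;
            Thread t = new Thread(() -> ids[slot] = arenaForCurrentThread().id);
            t.start();
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return ids;
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(new ArenaBinding(2).bindSequentially(4)));
    }
}
```

With as many arenas as threads, each thread ends up with its own arena; with fewer arenas, threads are spread evenly across them.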

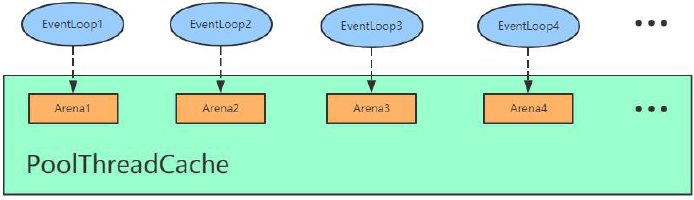

Netty's overall memory pool is an array of PoolArena objects, and each element of the array is an independent memory pool. It is like a country made up of several provinces (the PoolArenas), each administering its own region autonomously.

As its name suggests, the initialValue() method of PoolThreadLocalCache creates the initial PoolThreadCache for a thread. It first calls leastUsedArena() to obtain the heapArena and directArena objects, both of type PoolArena, and then passes them to the PoolThreadCache constructor. So where do the heapArenas and directArenas arrays come from? The PooledByteBufAllocator constructor calls newArenaArray() to create them:

public PooledByteBufAllocator(boolean preferDirect, int nHeapArena, int nDirectArena, int pageSize, int maxOrder,

int tinyCacheSize, int smallCacheSize, int normalCacheSize) {

......

if (nHeapArena > 0) {

heapArenas = newArenaArray(nHeapArena);

......

} else {

heapArenas = null;

heapArenaMetrics = Collections.emptyList();

}

if (nDirectArena > 0) {

directArenas = newArenaArray(nDirectArena);

......

} else {

directArenas = null;

directArenaMetrics = Collections.emptyList();

}

}

private static <T> PoolArena<T>[] newArenaArray(int size) {

return new PoolArena[size];

}

newArenaArray() simply creates a fixed-size PoolArena array whose length is given by the nHeapArena or nDirectArena parameter. Let's go back to the PooledByteBufAllocator constructors to see where nHeapArena and nDirectArena come from. The overloaded constructors are:

public PooledByteBufAllocator(boolean preferDirect) {

// Delegate to the overloaded constructor shown below

this(preferDirect, DEFAULT_NUM_HEAP_ARENA, DEFAULT_NUM_DIRECT_ARENA, DEFAULT_PAGE_SIZE, DEFAULT_MAX_ORDER);

}

public PooledByteBufAllocator(boolean preferDirect, int nHeapArena, int nDirectArena, int pageSize, int maxOrder) {

this(preferDirect, nHeapArena, nDirectArena, pageSize, maxOrder,

DEFAULT_TINY_CACHE_SIZE, DEFAULT_SMALL_CACHE_SIZE, DEFAULT_NORMAL_CACHE_SIZE);

}

nHeapArena and nDirectArena default to the constants DEFAULT_NUM_HEAP_ARENA and DEFAULT_NUM_DIRECT_ARENA. These constants are defined in a static initializer block:

final int defaultMinNumArena = runtime.availableProcessors() * 2;

final int defaultChunkSize = DEFAULT_PAGE_SIZE << DEFAULT_MAX_ORDER;

DEFAULT_NUM_HEAP_ARENA = Math.max(0,

SystemPropertyUtil.getInt(

"io.netty.allocator.numHeapArenas",

(int) Math.min(

defaultMinNumArena,

runtime.maxMemory() / defaultChunkSize / 2 / 3)));

DEFAULT_NUM_DIRECT_ARENA = Math.max(0,

SystemPropertyUtil.getInt(

"io.netty.allocator.numDirectArenas",

(int) Math.min(

defaultMinNumArena,

PlatformDependent.maxDirectMemory() / defaultChunkSize / 2 / 3)));

Now we know the default values of nHeapArena and nDirectArena. Unless a system property overrides it or the available memory imposes a smaller bound, the default is the number of CPU cores * 2, that is, defaultMinNumArena. The figure CPU cores * 2 should look familiar: it is also the default number of threads in an EventLoopGroup. Why does Netty choose this value? The main purpose is to let every EventLoop thread have its own Arena, so that threads do not contend for the same Arena when allocating memory.
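The arithmetic behind that default can be checked with a small standalone calculation. The sketch below mirrors the formula shown in the static block, ignoring the system property override; ArenaDefaults and defaultNumArenas are hypothetical names, and the page size of 8192 and max order of 11 used in main are assumed example values rather than values taken from this article.

```java
// Hypothetical helper reproducing min(2 * cores, maxMemory / chunkSize / 2 / 3).
final class ArenaDefaults {
    private ArenaDefaults() {
    }

    static int defaultNumArenas(int availableProcessors, long maxMemoryBytes,
                                int pageSize, int maxOrder) {
        int defaultMinNumArena = availableProcessors * 2;
        long defaultChunkSize = (long) pageSize << maxOrder;
        // The "/ 2 / 3" keeps the arenas' chunks to a fraction of the available memory.
        long memoryBound = maxMemoryBytes / defaultChunkSize / 2 / 3;
        return (int) Math.max(0, Math.min(defaultMinNumArena, memoryBound));
    }

    public static void main(String[] args) {
        Runtime runtime = Runtime.getRuntime();
        // Assumed example values: 8 KiB pages, order 11, giving 16 MiB chunks.
        System.out.println(defaultNumArenas(
                runtime.availableProcessors(), runtime.maxMemory(), 8192, 11));
    }
}
```

With 4 cores and 1 GiB of memory the CPU term wins (8 arenas); with 8 cores and the same memory, the memory term caps the result at 10.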

From the analysis above, we know that there are heap arenas and direct arenas, which we collectively call Arena. Suppose we have four threads; then four Arenas are allocated. When a ByteBuf is created, the Arena is first obtained by PoolThreadCache and stored in its member variables. When each thread then calls get() on the thread-local cache, it receives the Arena bound to it: EventLoop1 gets Arena 1, EventLoop2 gets Arena 2, and so on. As shown in the following figure:

PoolThreadCache can allocate memory not only from the Arena but also from the ByteBuf cache lists it maintains internally. For example, suppose we allocate a 1024-byte ByteBuf through PooledByteBufAllocator, release it when we are done, and later allocate another 1024-byte ByteBuf elsewhere. The second request does not need to go to the Arena; it is served directly from the cache list kept in PoolThreadCache. PooledByteBufAllocator maintains three cache sizes for this purpose: tinyCacheSize, smallCacheSize, and normalCacheSize:

public class PooledByteBufAllocator extends AbstractByteBufAllocator {

private final int tinyCacheSize;

private final int smallCacheSize;

private final int normalCacheSize;

static {

DEFAULT_TINY_CACHE_SIZE = SystemPropertyUtil.getInt("io.netty.allocator.tinyCacheSize", 512);

DEFAULT_SMALL_CACHE_SIZE = SystemPropertyUtil.getInt("io.netty.allocator.smallCacheSize", 256);

DEFAULT_NORMAL_CACHE_SIZE = SystemPropertyUtil.getInt("io.netty.allocator.normalCacheSize", 64);

}

public PooledByteBufAllocator(boolean preferDirect) {

this(preferDirect, DEFAULT_NUM_HEAP_ARENA, DEFAULT_NUM_DIRECT_ARENA, DEFAULT_PAGE_SIZE, DEFAULT_MAX_ORDER);

}

public PooledByteBufAllocator(int nHeapArena, int nDirectArena, int pageSize, int maxOrder) {

this(false, nHeapArena, nDirectArena, pageSize, maxOrder);

}

public PooledByteBufAllocator(boolean preferDirect, int nHeapArena, int nDirectArena, int pageSize, int maxOrder) {

this(preferDirect, nHeapArena, nDirectArena, pageSize, maxOrder,

DEFAULT_TINY_CACHE_SIZE, DEFAULT_SMALL_CACHE_SIZE, DEFAULT_NORMAL_CACHE_SIZE);

}

public PooledByteBufAllocator(boolean preferDirect, int nHeapArena, int nDirectArena, int pageSize, int maxOrder,

int tinyCacheSize, int smallCacheSize, int normalCacheSize) {

super(preferDirect);

threadCache = new PoolThreadLocalCache();

this.tinyCacheSize = tinyCacheSize;

this.smallCacheSize = smallCacheSize;

this.normalCacheSize = normalCacheSize;

final int chunkSize = validateAndCalculateChunkSize(pageSize, maxOrder);

......

}

}

In the PooledByteBufAllocator constructor, tinyCacheSize, smallCacheSize, and normalCacheSize are assigned, defaulting to 512, 256, and 64 respectively. With these caches, memory of common sizes that has been released can be handed out again without going back to the Arena, which improves allocation performance.
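The "serve from the cache first, fall back to the arena" behaviour can be sketched with a bounded queue per size. This is a deliberately simplified, single-threaded model: SizeClassCache is a hypothetical class, byte arrays stand in for pooled memory regions, and `new byte[size]` stands in for a real Arena allocation.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical thread-private cache: one bounded queue of regions per size.
final class SizeClassCache {
    private final int maxEntriesPerSize;
    private final Map<Integer, ArrayDeque<byte[]>> cached = new HashMap<>();
    int hits;
    int misses;

    SizeClassCache(int maxEntriesPerSize) {
        this.maxEntriesPerSize = maxEntriesPerSize;
    }

    // Try the cache first; only on a miss fall back to a fresh allocation.
    byte[] allocate(int size) {
        ArrayDeque<byte[]> queue = cached.get(size);
        byte[] region = (queue == null) ? null : queue.pollFirst();
        if (region != null) {
            hits++;
            return region;
        }
        misses++;
        return new byte[size]; // stands in for allocating from the arena
    }

    // Returns true if the region was kept for reuse, false if the queue was full.
    boolean release(byte[] region) {
        ArrayDeque<byte[]> queue =
                cached.computeIfAbsent(region.length, k -> new ArrayDeque<>());
        if (queue.size() >= maxEntriesPerSize) {
            return false;
        }
        queue.addLast(region);
        return true;
    }

    public static void main(String[] args) {
        SizeClassCache cache = new SizeClassCache(64);
        byte[] first = cache.allocate(1024);
        cache.release(first);
        byte[] second = cache.allocate(1024);
        System.out.println("reused: " + (first == second) + ", hits: " + cache.hits);
    }
}
```

The bound on each queue plays the role of the cache-size settings: once a queue is full, further released regions are not cached.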

DirectArena memory allocation process:

Arena's basic process of allocating memory consists of three steps:

- Reuse a PooledByteBuf object from the object pool;

- Allocate memory from the thread-local cache;

- Allocate memory from the Arena's memory pool (the "memory heap").

Taking the direct buffer as an example, let's first look at how a PooledByteBuf is reused from the object pool. We follow the newDirectBuffer() method of PooledByteBufAllocator again:

@Override

protected ByteBuf newDirectBuffer(int initialCapacity, int maxCapacity) {

PoolThreadCache cache = threadCache.get();

PoolArena<ByteBuffer> directArena = cache.directArena;

ByteBuf buf;

if (directArena != null) {

buf = directArena.allocate(cache, initialCapacity, maxCapacity);

} else {

.......

}

return toLeakAwareBuffer(buf);

}

......

We have already covered how the Arena is obtained, so let's go straight into the allocate() method of PoolArena:

PooledByteBuf<T> allocate(PoolThreadCache cache, int reqCapacity, int maxCapacity) {

PooledByteBuf<T> buf = newByteBuf(maxCapacity);

allocate(cache, buf, reqCapacity);

return buf;

}

The structure here is clear. First, newByteBuf() is called to obtain a PooledByteBuf object; then the other allocate() overload tries to allocate a piece of memory, starting with the thread-private PoolThreadCache, and initializes the memory address inside buf. Let's follow newByteBuf(), choosing the DirectArena implementation:

@Override

protected PooledByteBuf<ByteBuffer> newByteBuf(int maxCapacity) {

if (HAS_UNSAFE) {

return PooledUnsafeDirectByteBuf.newInstance(maxCapacity);

} else {

return PooledDirectByteBuf.newInstance(maxCapacity);

}

}

The method first checks whether Unsafe is available. Since Unsafe is usually available, we continue with the newInstance() method of PooledUnsafeDirectByteBuf:

final class PooledUnsafeDirectByteBuf extends PooledByteBuf<ByteBuffer> {

private static final Recycler<PooledUnsafeDirectByteBuf> RECYCLER = new Recycler<PooledUnsafeDirectByteBuf>() {

@Override

protected PooledUnsafeDirectByteBuf newObject(Handle<PooledUnsafeDirectByteBuf> handle) {

return new PooledUnsafeDirectByteBuf(handle, 0);

}

};

static PooledUnsafeDirectByteBuf newInstance(int maxCapacity) {

PooledUnsafeDirectByteBuf buf = RECYCLER.get();

buf.reuse(maxCapacity);

return buf;

}

.......

}

The first step is to obtain a buf from the get() method of the RECYCLER object. As the snippet above shows, RECYCLER implements a newObject() method that creates a new buf when none is available in the recycle bin. Because the buf returned may have come out of the recycle bin, it must be reset before it is reused. That is why buf's reuse() method is called next:

final void reuse(int maxCapacity) {

maxCapacity(maxCapacity);

setRefCnt(1);

setIndex0(0, 0);

discardMarks();

}
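The get-then-reset pattern shown in newInstance() and reuse() can be sketched with a small object pool. This is an illustrative simplification, not Netty's Recycler: SimpleRecycler and ReusableBuf are hypothetical classes, the pool is a plain single-threaded stack, and the release() method is added here only to return objects to the pool.

```java
import java.util.ArrayDeque;
import java.util.function.Supplier;

// Hypothetical single-threaded object pool: reuse a recycled object or create one.
final class SimpleRecycler<T> {
    private final ArrayDeque<T> pool = new ArrayDeque<>();
    private final Supplier<T> factory;

    SimpleRecycler(Supplier<T> factory) {
        this.factory = factory;
    }

    T get() {
        T recycled = pool.pollFirst();
        return recycled != null ? recycled : factory.get();
    }

    void recycle(T object) {
        pool.addFirst(object);
    }
}

// Hypothetical buffer shell whose state must be reset whenever it is handed out.
final class ReusableBuf {
    static final SimpleRecycler<ReusableBuf> RECYCLER = new SimpleRecycler<>(ReusableBuf::new);

    int maxCapacity;
    int refCnt;
    int readerIndex;
    int writerIndex;

    static ReusableBuf newInstance(int maxCapacity) {
        ReusableBuf buf = RECYCLER.get();
        buf.reuse(maxCapacity); // may be a recycled object, so reset it first
        return buf;
    }

    // Restore every field to the state of a freshly created buffer.
    void reuse(int maxCapacity) {
        this.maxCapacity = maxCapacity;
        this.refCnt = 1;
        this.readerIndex = 0;
        this.writerIndex = 0;
    }

    // Hand the object back to the pool once it is no longer referenced.
    void release() {
        refCnt = 0;
        RECYCLER.recycle(this);
    }

    public static void main(String[] args) {
        ReusableBuf first = newInstance(64);
        first.writerIndex = 10;
        first.release();
        ReusableBuf second = newInstance(128);
        System.out.println("same object: " + (first == second)
                + ", writerIndex: " + second.writerIndex);
    }
}
```

Pooling the buffer objects themselves avoids creating a new wrapper object for every allocation, which is separate from pooling the memory they point to.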

reuse() simply restores all fields to their initial state. At this point the whole process of obtaining a buf object from the object pool should be clear. Next, we go back to the allocate() method of PoolArena to see how the actual memory is allocated. There are two main cases: allocating from the cache and allocating from the Arena's memory pool. Let's look at the logic of the allocate() method:

private void allocate(PoolThreadCache cache, PooledByteBuf<T> buf, final int reqCapacity) {

...

if (normCapacity <= chunkSize) {

if (cache.allocateNormal(this, buf, reqCapacity, normCapacity)) {

// was able to allocate out of the cache so move on

return;

}

allocateNormal(buf, reqCapacity, normCapacity);

} else {

// Huge allocations are never served via the cache so just call allocateHuge

allocateHuge(buf, reqCapacity);

}

}

The full method looks complicated, but the logic omitted here essentially determines which size class the request belongs to and tries to obtain memory from the corresponding cache. If the cache cannot serve the request, memory is allocated from the Arena; if the normalized capacity exceeds the chunk size, allocateHuge() is called directly and the cache is bypassed.
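The decision order in the visible part of allocate() can be summarised in a few lines. The sketch below models only that branch structure; AllocationPath, Source, and choose are hypothetical names, and the boolean parameter stands in for whether the thread cache currently holds a suitable entry.

```java
// Hypothetical summary of the branch order: cache first, then arena, huge bypasses both.
final class AllocationPath {
    enum Source { THREAD_CACHE, ARENA, HUGE_UNPOOLED }

    private AllocationPath() {
    }

    static Source choose(int normCapacity, int chunkSize, boolean cacheHasEntry) {
        if (normCapacity > chunkSize) {
            // Larger than one chunk: never served from the cache.
            return Source.HUGE_UNPOOLED;
        }
        // Within a chunk: prefer the thread cache, otherwise ask the arena.
        return cacheHasEntry ? Source.THREAD_CACHE : Source.ARENA;
    }

    public static void main(String[] args) {
        int chunkSize = 16 * 1024 * 1024; // assumed example chunk size
        System.out.println(choose(8192, chunkSize, true));
        System.out.println(choose(8192, chunkSize, false));
        System.out.println(choose(2 * chunkSize, chunkSize, true));
    }
}
```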