I. Environment preparation

(Every machine runs CentOS 7.6.)

Run the following on every machine:

yum install chrony -y

systemctl start chronyd

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.8.130 master

192.168.8.131 node01

192.168.8.132 node02

192.168.8.133 node03

systemctl disable firewalld

systemctl stop firewalld

setenforce 0

This takes effect immediately but only lasts until the next reboot.

vim /etc/selinux/config

SELINUX=disabled

This setting is permanent but takes effect only after a reboot.
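The two file edits above (/etc/hosts and /etc/selinux/config) can also be made without an editor, which helps when the same change has to be repeated on four machines. The following is a minimal sketch, not part of the original procedure; the function names add_host_entry and disable_selinux_in_config are hypothetical, and both functions take the file path as an argument so they can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; run as root when pointing them at the real files.

# Append "IP NAME" to a hosts file unless NAME is already listed there.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # -w matches NAME as a whole word, so "node0" would not match "node01".
    grep -qw -- "$name" "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Set SELINUX=disabled in an SELinux config file (effective after a reboot).
# The pattern does not touch the SELINUXTYPE= line.
disable_selinux_in_config() {
    local file=$1
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Intended use on each machine:
#   add_host_entry /etc/hosts 192.168.8.130 master
#   add_host_entry /etc/hosts 192.168.8.131 node01
#   add_host_entry /etc/hosts 192.168.8.132 node02
#   add_host_entry /etc/hosts 192.168.8.133 node03
#   disable_selinux_in_config /etc/selinux/config
```

Running add_host_entry twice with the same name leaves a single entry, so the script can be re-run safely.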

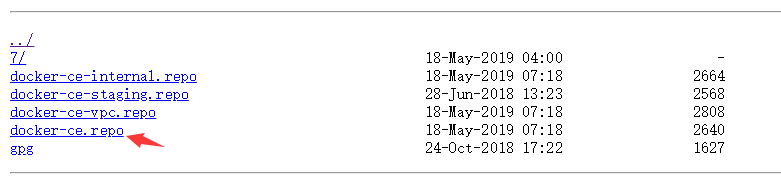

Configure the Docker yum repository

Visit mirrors.aliyun.com, open docker-ce, then linux, then centos, and right-click docker-ce.repo to copy its link address.

cd /etc/yum.repos.d/

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

--2019-05-19 17:39:51-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)...

Run the same command on the other three machines.

Next, on the master node:

Modify the yum repository

[root@master ~]# cd /etc/yum.repos.d/

[root@master yum.repos.d]# ls

CentOS-Base.repo CentOS-fasttrack.repo CentOS-Vault.repo epel-testing.repo

CentOS-CR.repo CentOS-Media.repo docker-ce.repo kubernetes.repo

CentOS-Debuginfo.repo CentOS-Sources.repo epel.repo

[root@master yum.repos.d]# vim CentOS-Base.repo

[base]

name=CentOS-$releasever - Base

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os&infra=$infra

#baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/

baseurl=https://mirrors.aliyun.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates&infra=$infra

#baseurl=http://mirror.centos.org/centos/$releasever/updates/$basearch/

baseurl=https://mirrors.aliyun.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=extras&infra=$infra

#baseurl=http://mirror.centos.org/centos/$releasever/extras/$basearch/

baseurl=https://mirrors.aliyun.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
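The same change to CentOS-Base.repo can be scripted with sed. This is a sketch under assumptions: the function name use_aliyun_mirror is hypothetical, it expects the stock layout in which mirrorlist is active and baseurl is commented out, and it rewrites every section of the file (including centosplus, if present), not only base, updates and extras. Unlike the hand-edited file above, it replaces the commented baseurl line instead of keeping it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch a CentOS-Base.repo style file to the Aliyun mirror.
use_aliyun_mirror() {
    local file=$1
    sed -i \
        -e 's|^mirrorlist=|#mirrorlist=|' \
        -e 's|^#\{0,1\}baseurl=http://mirror.centos.org/|baseurl=https://mirrors.aliyun.com/|' \
        "$file"
}

# Intended use (keep a backup first):
#   cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
#   use_aliyun_mirror /etc/yum.repos.d/CentOS-Base.repo
#   yum clean all && yum makecache
```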

Point the base, updates and extras repositories at the Aliyun mirror, as in the file above. Save and exit, then send the file to the three worker nodes:

scp /etc/yum.repos.d/CentOS-Base.repo node01:/etc/yum.repos.d/

scp /etc/yum.repos.d/CentOS-Base.repo node02:/etc/yum.repos.d/

scp /etc/yum.repos.d/CentOS-Base.repo node03:/etc/yum.repos.d/

Install and start Docker:

yum install docker-ce -y

systemctl enable docker

systemctl start docker

Modify the Docker startup parameters

[root@master ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

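The ExecStartPost line is the added item (highlighted in red in the original). It sets the policy of the FORWARD chain to ACCEPT each time Docker starts.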

systemctl daemon-reload

systemctl restart docker
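The unit-file edit above can be applied with sed instead of an editor. The sketch below is an illustration only: add_forward_accept is a hypothetical name, and it relies on GNU sed (the one-line form of the a command), which is what CentOS ships. It inserts the ExecStartPost line directly after ExecStart and does nothing if the line is already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the FORWARD ACCEPT hook to a docker.service file.
add_forward_accept() {
    local unit=$1
    local line='ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT'
    # Already present: nothing to do, so the helper is safe to re-run.
    grep -qxF -- "$line" "$unit" && return 0
    # /^ExecStart=/ does not match ExecStartPost= or ExecReload=.
    sed -i "/^ExecStart=/a $line" "$unit"
}

# Intended use:
#   add_forward_accept /usr/lib/systemd/system/docker.service
#   systemctl daemon-reload && systemctl restart docker
```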

Run docker info to check the startup settings, then list the iptables rules to confirm the FORWARD policy:

[root@master ~]# iptables -vnL

Chain INPUT (policy ACCEPT 1307 packets, 335K bytes)

pkts bytes target prot opt in out source destination

2794 168K KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate NEW /* kubernetes service portals */

2794 168K KUBE-EXTERNAL-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate NEW /* kubernetes externally-visible service portals */

773K 188M KUBE-FIREWALL all -- * * 0.0.0.0/0 0.0.0.0/0

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 KUBE-FORWARD all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes forwarding rules */

0 0 KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate NEW /* kubernetes service portals */

0 0 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

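Send the unit file to the three worker nodes (each node must then run systemctl daemon-reload and systemctl restart docker for it to take effect):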

scp /usr/lib/systemd/system/docker.service node01:/usr/lib/systemd/system/docker.service

scp /usr/lib/systemd/system/docker.service node02:/usr/lib/systemd/system/docker.service

scp /usr/lib/systemd/system/docker.service node03:/usr/lib/systemd/system/docker.service
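Copying a file to the same path on every worker node recurs throughout this walkthrough, so it can be wrapped in a small loop. The sketch below is an assumption-laden convenience, not the author's method: NODES, COPY_CMD and push_to_nodes are hypothetical names, and the node list simply repeats the host names from /etc/hosts above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy one file to the same path on each worker node.
NODES="node01 node02 node03"

# COPY_CMD defaults to scp; set COPY_CMD=echo to print what would be copied.
push_to_nodes() {
    local file=$1 node
    for node in $NODES; do
        ${COPY_CMD:-scp} "$file" "$node:$file" || return 1
    done
}

# Intended use:
#   COPY_CMD=echo push_to_nodes /usr/lib/systemd/system/docker.service   # dry run
#   push_to_nodes /usr/lib/systemd/system/docker.service
#   for n in $NODES; do ssh "$n" 'systemctl daemon-reload && systemctl restart docker'; done
```

Setting up SSH keys beforehand (for example with ssh-copy-id) avoids typing the root password once per node.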

Check the bridge kernel parameters

[root@master ~]# sysctl -a |grep bridge

net.bridge.bridge-nf-call-arptables = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-filter-pppoe-tagged = 0

net.bridge.bridge-nf-filter-vlan-tagged = 0

net.bridge.bridge-nf-pass-vlan-input-dev = 0

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.docker0.stable_secret"

sysctl: reading key "net.ipv6.conf.ens33.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

The values of net.bridge.bridge-nf-call-ip6tables and net.bridge.bridge-nf-call-iptables (highlighted in red in the original) differ between environments. Add a configuration file to make sure both are 1.

[root@master ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Reload the settings:

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

scp /etc/sysctl.d/k8s.conf node01:/etc/sysctl.d/

scp /etc/sysctl.d/k8s.conf node02:/etc/sysctl.d/

scp /etc/sysctl.d/k8s.conf node03:/etc/sysctl.d/
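The sysctl step can be scripted and verified as follows. This is a sketch with hypothetical function names (write_k8s_sysctl, bridge_keys_not_one). One assumption worth stating: the net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so on a machine where Docker has not yet created a bridge, modprobe br_netfilter may be needed first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the two bridge settings to the given file.
write_k8s_sysctl() {
    local file=$1
    cat > "$file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Read "key = value" lines on stdin (the format printed by sysctl -a) and
# print each bridge-nf-call-iptables / ip6tables key whose value is not 1.
bridge_keys_not_one() {
    awk -F' *= *' '/^net\.bridge\.bridge-nf-call-ip6?tables/ && $2 != 1 { print $1 }'
}

# Intended use on each machine:
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl -p /etc/sysctl.d/k8s.conf
#   sysctl -a 2>/dev/null | bridge_keys_not_one    # no output means both are 1
```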

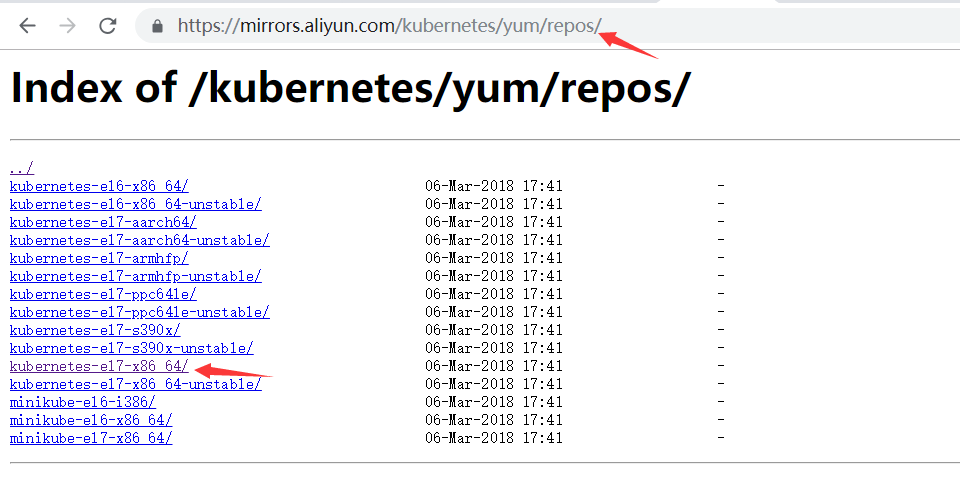

Create the kubernetes.repo file locally

[root@master ~]# cd /etc/yum.repos.d/

[root@master yum.repos.d]# vim kubernetes.repo

[kubernetes]

name=Kubernetes Repository

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=1

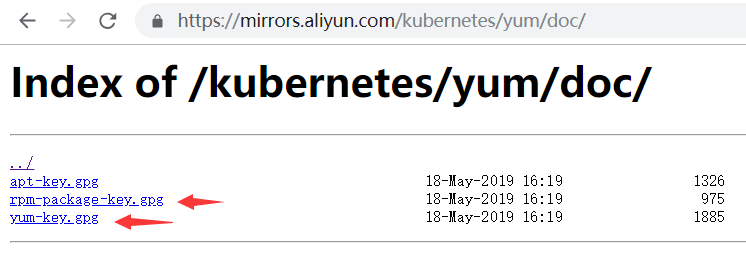

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

To find these addresses, open kubernetes on the Aliyun mirror, then yum, then repos, and locate kubernetes-el7-x86_64/.

The baseurl in the file is the link address of kubernetes-el7-x86_64/.

The two addresses in gpgkey are the link addresses of the two key files in the doc directory, which sits next to repos.
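For reference, the whole repository file can be written in one step with a here-document. This is a sketch: write_kubernetes_repo is a hypothetical name, and the enabled=1 line is an addition that only states the yum default explicitly. Both key URLs sit on one gpgkey line separated by a space, because yum treats an unindented second line as a separate, invalid entry.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Kubernetes yum repository definition.
write_kubernetes_repo() {
    local file=$1
    cat > "$file" <<'EOF'
[kubernetes]
name=Kubernetes Repository
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
EOF
}

# Intended use:
#   write_kubernetes_repo /etc/yum.repos.d/kubernetes.repo
#   yum repolist
```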

Run yum repolist to check the repository.

List the packages whose names begin with kube:

[root@master yum.repos.d]# yum list all |grep "^kube"

kubeadm.x86_64 1.14.2-0 @kubernetes

kubectl.x86_64 1.14.2-0 @kubernetes

kubelet.x86_64 1.14.2-0 @kubernetes

kubernetes-cni.x86_64 0.7.5-0 @kubernetes

kubernetes.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-ansible.noarch 0.6.0-0.1.gitd65ebd5.el7 epel

kubernetes-client.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-master.x86_64 1.5.2-0.7.git269f928.el7 extras

kubernetes-node.x86_64 1.5.2-0.7.git269f928.el7 extras

Install the tools

yum install -y kubeadm kubectl kubelet

Modify the kubelet parameters (also used by kubeadm) so that kubelet does not refuse to start while swap is enabled:

[root@master yum.repos.d]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"
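Whether this setting is needed at all depends on whether swap is active on the machine. A quick way to check is to look at /proc/swaps, which holds a header line followed by one line per active swap area. The sketch below uses a hypothetical function name, swap_active, and takes the file path as an optional argument so it can be tried on sample data.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if any swap area is active.
swap_active() {
    local swaps_file=${1:-/proc/swaps}
    # Line 1 is the column header; any further line is an active swap area.
    awk 'NR > 1 { found = 1 } END { exit !found }' "$swaps_file"
}

# Intended use:
#   if swap_active; then
#       echo "swap is on: kubelet needs --fail-swap-on=false"
#   fi
```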

Look at the default parameters for cluster initialization

[root@master yum.repos.d]# kubeadm config print init-defaults

apiVersion: kubeadm.k8s.io/v1beta1

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 1.2.3.4

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: master

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta1

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: ""

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: k8s.gcr.io

kind: ClusterConfiguration

kubernetesVersion: v1.14.0

networking:

  dnsDomain: cluster.local

  podSubnet: ""

  serviceSubnet: 10.96.0.0/12

scheduler: {}

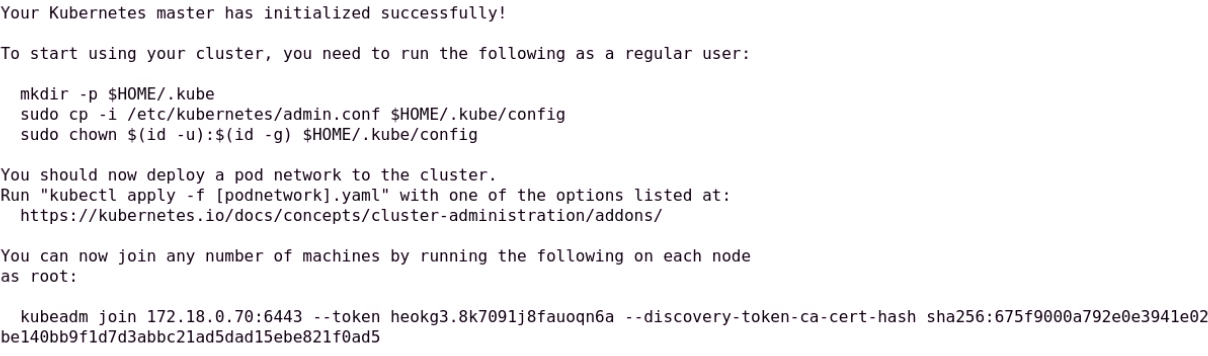

kubeadm init --pod-network-cidr="10.244.0.0/16" --ignore-preflight-errors=Swap
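The --pod-network-cidr flag fills in the podSubnet field, which is empty in the defaults printed earlier; 10.244.0.0/16 is the network that the flannel manifest uses by default. As an alternative to the flag, the same value can be supplied through a configuration file. The sketch below is illustrative only: write_kubeadm_config and the file name kubeadm-config.yaml are hypothetical, and it assumes the v1beta1 API version reported by this kubeadm release.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal kubeadm ClusterConfiguration that
# sets only the pod subnet; every other field keeps its default.
write_kubeadm_config() {
    local file=$1
    cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# Intended use, instead of passing --pod-network-cidr:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap
```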

On success, the output ends with a kubeadm join command. Record it; it is used later to join the worker nodes to the cluster.
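One way to avoid losing the join command is to save the kubeadm init output to a file and extract the command from it. The sketch below assumes the output was saved with tee to a file named kubeadm-init.log (a hypothetical name) and that the join command may be split across lines with a trailing backslash; extract_join_command is likewise a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "kubeadm join ..." command found in a saved
# kubeadm init log as a single line, joining backslash-continued lines.
extract_join_command() {
    awk '
        /^kubeadm join/ { collecting = 1 }
        collecting {
            line = $0
            cont = sub(/[ \t]*\\$/, "", line)   # 1 if the line ended with a backslash
            sub(/^[ \t]+/, "", line)            # drop the continuation indent
            cmd = (cmd == "" ? line : cmd " " line)
            if (!cont) { print cmd; exit }
        }
    ' "$1"
}

# Intended use:
#   kubeadm init --pod-network-cidr="10.244.0.0/16" --ignore-preflight-errors=Swap | tee kubeadm-init.log
#   extract_join_command kubeadm-init.log
```

If the output was not saved, running kubeadm token create --print-join-command on the master prints a fresh join command.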

View the nodes

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 145m v1.14.2

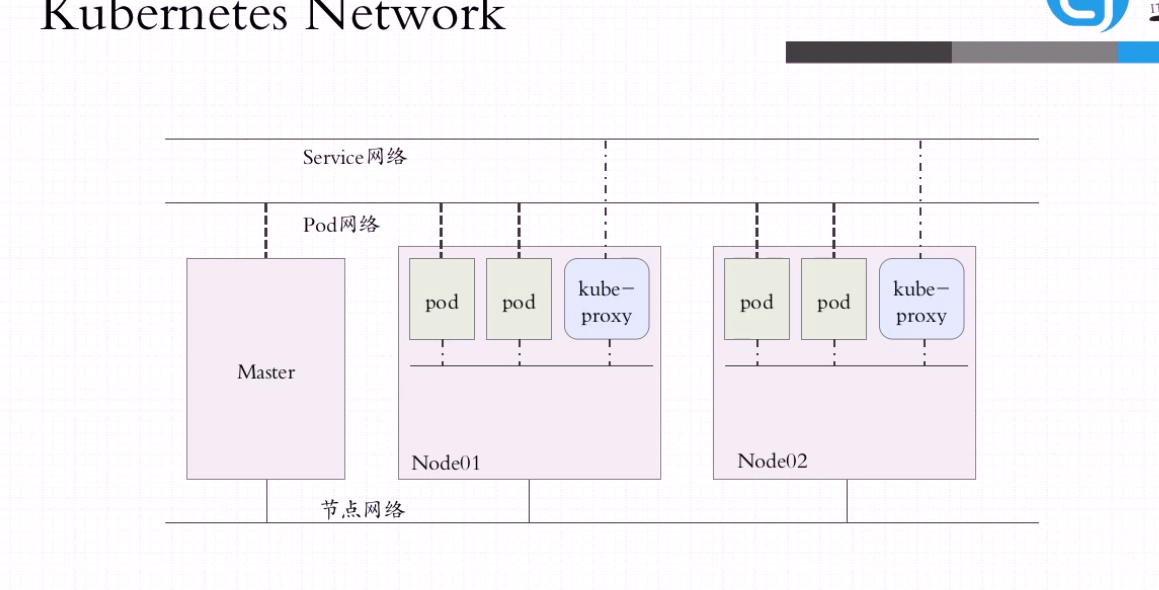

The status is NotReady because no network plug-in has been deployed yet.

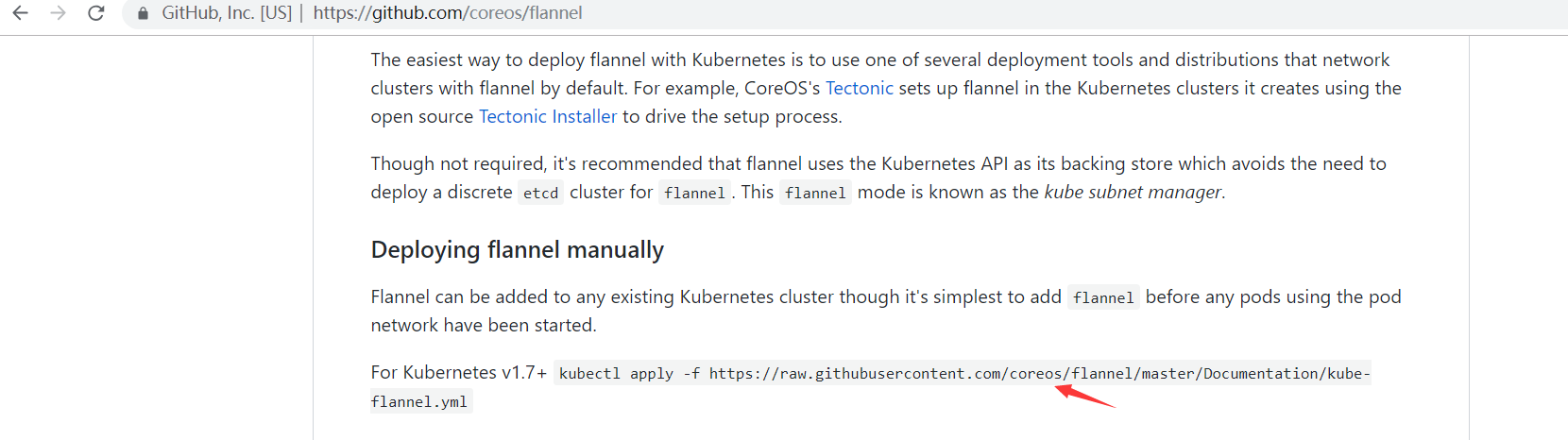

Deploy flannel

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

podsecuritypolicy.extensions/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-fb8b8dccf-q55g7 1/1 Running 0 150m

coredns-fb8b8dccf-vk7td 1/1 Running 0 150m

etcd-master 1/1 Running 0 149m

kube-apiserver-master 1/1 Running 0 149m

kube-controller-manager-master 1/1 Running 0 149m

kube-flannel-ds-amd64-gfl77 1/1 Running 0 71s

kube-proxy-4s9f6 1/1 Running 0 150m

kube-scheduler-master 1/1 Running 0 149m

The first two pods (coredns) may still be in the process of being created. Wait a moment, then check the node again:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 152m v1.14.2

Send the repository file to the three worker nodes

[root@master ~]# scp /etc/yum.repos.d/kubernetes.repo node01:/etc/yum.repos.d/

root@node01's password:

kubernetes.repo 100% 269 169.4KB/s 00:00

[root@master ~]# scp /etc/yum.repos.d/kubernetes.repo node02:/etc/yum.repos.d/

root@node02's password:

kubernetes.repo 100% 269 277.9KB/s 00:00

[root@master ~]# scp /etc/yum.repos.d/kubernetes.repo node03:/etc/yum.repos.d/

root@node03's password:

kubernetes.repo

Next, add the three worker nodes to the cluster. Run the following on node01, node02 and node03:

[root@node01 ~]# yum install -y kubeadm kubelet

Then, on master, copy the kubelet configuration file to each node:

[root@master ~]# scp /etc/sysconfig/kubelet node01:/etc/sysconfig/

root@node01's password:

kubelet 100% 42 32.7KB/s 00:00

[root@master ~]# scp /etc/sysconfig/kubelet node02:/etc/sysconfig/

root@node02's password:

kubelet 100% 42 32.9KB/s 00:00

[root@master ~]# scp /etc/sysconfig/kubelet node03:/etc/sysconfig/

root@node03's password:

kubelet 100% 42 29.4KB/s 00:00


On each worker node, first pull the pause image from the Aliyun registry, then join the cluster with the command recorded earlier:

[root@node01 ~]# kubeadm join 192.168.8.130:6443 --token kxmqr4.1vza1kh70vra2d2u --discovery-token-ca-cert-hash sha256:6537d556e18c1799f10ac567dcaa41ee2b3197aa4c464747bc50243a6142bc1c --ignore-preflight-errors=Swap

View the nodes

[root@master /]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 172m v1.14.2

node01 Ready <none> 7m39s v1.14.2

node02 Ready <none> 48s v1.14.2

node03 Ready <none> 43s v1.14.2
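The final check can be automated by filtering the kubectl get nodes output for any node whose STATUS column is not exactly Ready. This is a minimal sketch with a hypothetical function name, not_ready_nodes; it reads the table on standard input, so it also works on saved output. A node shown as Ready,SchedulingDisabled would be reported too, since the comparison is exact.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes" output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Intended use on master:
#   kubectl get nodes | not_ready_nodes    # no output means every node is Ready
```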