-

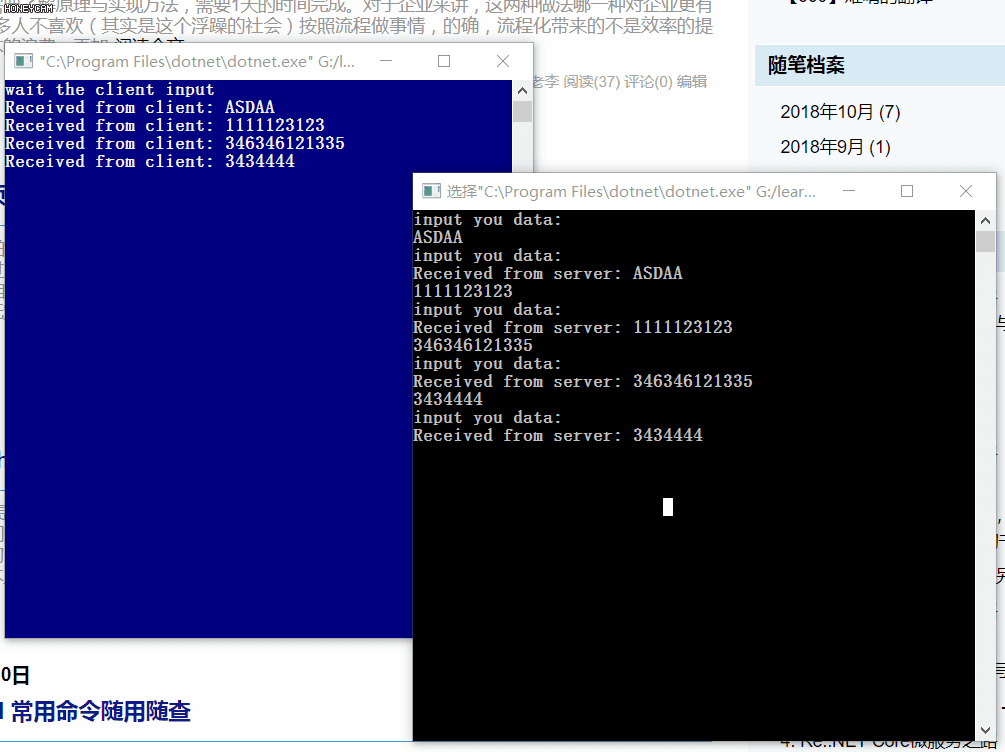

When the server starts, bind and listen on (READ) set ports, such as 1889.

-

When the client starts, bind the specified port and wait for user input.

-

When the user enters any string data, the client transcodes the data into byte format and transfers it to the server.

-

When the server receives the data from the client, it transcodes the data, outputs the console, and returns the data to the client again.

-

The client receives the data and prints it.
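The five steps above can be sketched with plain .NET sockets. This is an illustrative stand-in, not the demo's DotNetty code: it uses `TcpListener`/`TcpClient` so it runs without extra packages, the class name `EchoSketch` is hypothetical, and the server asks the OS for a free port instead of using 1889.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Hypothetical plain-socket stand-in for the echo demo: the server echoes
// whatever bytes it receives; the client sends one string and reads it back.
public static class EchoSketch
{
    public static async Task<string> RoundTripAsync(string input)
    {
        // Port 0 lets the OS pick a free port (the demo uses a fixed port such as 1889).
        var listener = new TcpListener(IPAddress.Loopback, 0);
        listener.Start();
        int port = ((IPEndPoint)listener.LocalEndpoint).Port;

        Task serverTask = Task.Run(async () =>
        {
            using (TcpClient conn = await listener.AcceptTcpClientAsync())
            using (NetworkStream stream = conn.GetStream())
            {
                // Read until the client shuts down its sending side.
                var received = new MemoryStream();
                await stream.CopyToAsync(received);
                byte[] data = received.ToArray();

                // Decode and print on the server console, then echo the same bytes back.
                Console.WriteLine($"server got: {Encoding.UTF8.GetString(data)}");
                await stream.WriteAsync(data, 0, data.Length);
            } // Disposing closes the connection, which ends the client's read loop.
        });

        using (var client = new TcpClient())
        {
            await client.ConnectAsync(IPAddress.Loopback, port);
            NetworkStream stream = client.GetStream();

            byte[] request = Encoding.UTF8.GetBytes(input);   // string -> bytes
            await stream.WriteAsync(request, 0, request.Length);
            client.Client.Shutdown(SocketShutdown.Send);      // signal end of request

            var response = new MemoryStream();
            await stream.CopyToAsync(response);                // read until the server closes
            await serverTask;
            listener.Stop();
            return Encoding.UTF8.GetString(response.ToArray()); // bytes -> string
        }
    }
}
```

Shutting down the client's send side is what tells this server the request is complete; DotNetty's echo demo instead reacts to whatever bytes each `ChannelRead` delivers.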

Server

Add two static methods, SayHello and SayByebye, to be called remotely. They are simple enough to need no explanation.

    public static class Say
    {
        public static string SayHello(string content)
        {
            return $"hello {content}";
        }

        public static string SayByebye(string content)
        {
            return $"byebye {content}";
        }
    }

In our original ChannelRead method, replace the echo logic with the following:

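    using System;
    using System.Collections.Generic;
    using System.Text;
    using DotNetty.Buffers;
    using DotNetty.Transport.Channels;
    using Newtonsoft.Json;

    public override void ChannelRead(IChannelHandlerContext context, object message)
    {
        if (message is IByteBuffer buffer)
        {
            try
            {
                Console.WriteLine($"message length is {buffer.ReadableBytes}");

                // (1) Decode the bytes as UTF-8 and parse the JSON request. Parsing starts at the
                //     first '{' so that any prefix bytes added by the client's serializer are skipped.
                var text = buffer.ToString(Encoding.UTF8);
                var start = text.IndexOf('{');
                if (start < 0) return;
                var obj = JsonConvert.DeserializeObject<Dictionary<string, string>>(text.Substring(start));

                // (2) Choose the local method named by the "func" field.
                byte[] msg = null;
                if (obj["func"] == "sayHello")
                {
                    msg = Encoding.UTF8.GetBytes(Say.SayHello(obj["username"]));
                }
                else if (obj["func"] == "sayByebye")
                {
                    msg = Encoding.UTF8.GetBytes(Say.SayByebye(obj["username"]));
                }

                if (msg == null) return;

                // (3) Allocate a buffer exactly the size of the response.
                var b = Unpooled.Buffer(msg.Length, msg.Length);
                // (4) Copy the response bytes into it.
                IByteBuffer byteBuffer = b.WriteBytes(msg);
                // (5) Write the response back to the client and flush it.
                context.WriteAndFlushAsync(byteBuffer);
            }
            finally
            {
                // The request buffer is not passed down the pipeline, so release it here.
                buffer.Release();
            }
        }
    }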
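The if-chain in step (2) grows by one branch for every new method. A minimal sketch of a table-driven alternative follows. It assumes the request arrives as plain UTF-8 JSON with no serializer prefix, and it uses System.Text.Json instead of Newtonsoft.Json only so that it compiles without extra packages; `RequestDispatcher` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.Json;

public static class Say
{
    public static string SayHello(string content) => $"hello {content}";
    public static string SayByebye(string content) => $"byebye {content}";
}

// Hypothetical dispatcher: maps each "func" value to a handler instead of
// testing the name in a chain of if statements.
public static class RequestDispatcher
{
    private static readonly Dictionary<string, Func<string, string>> Handlers =
        new Dictionary<string, Func<string, string>>
        {
            ["sayHello"] = Say.SayHello,
            ["sayByebye"] = Say.SayByebye,
        };

    // Returns the UTF-8 response bytes, or null if the request names an unknown function.
    public static byte[] Handle(byte[] requestBytes)
    {
        string text = Encoding.UTF8.GetString(requestBytes);
        var request = JsonSerializer.Deserialize<Dictionary<string, string>>(text);
        if (request == null
            || !request.TryGetValue("func", out string func)
            || !Handlers.TryGetValue(func, out Func<string, string> handler))
        {
            return null;
        }

        request.TryGetValue("username", out string username);
        return Encoding.UTF8.GetBytes(handler(username));
    }
}
```

Adding a method now means adding one dictionary entry, although the table itself must still be edited and recompiled.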

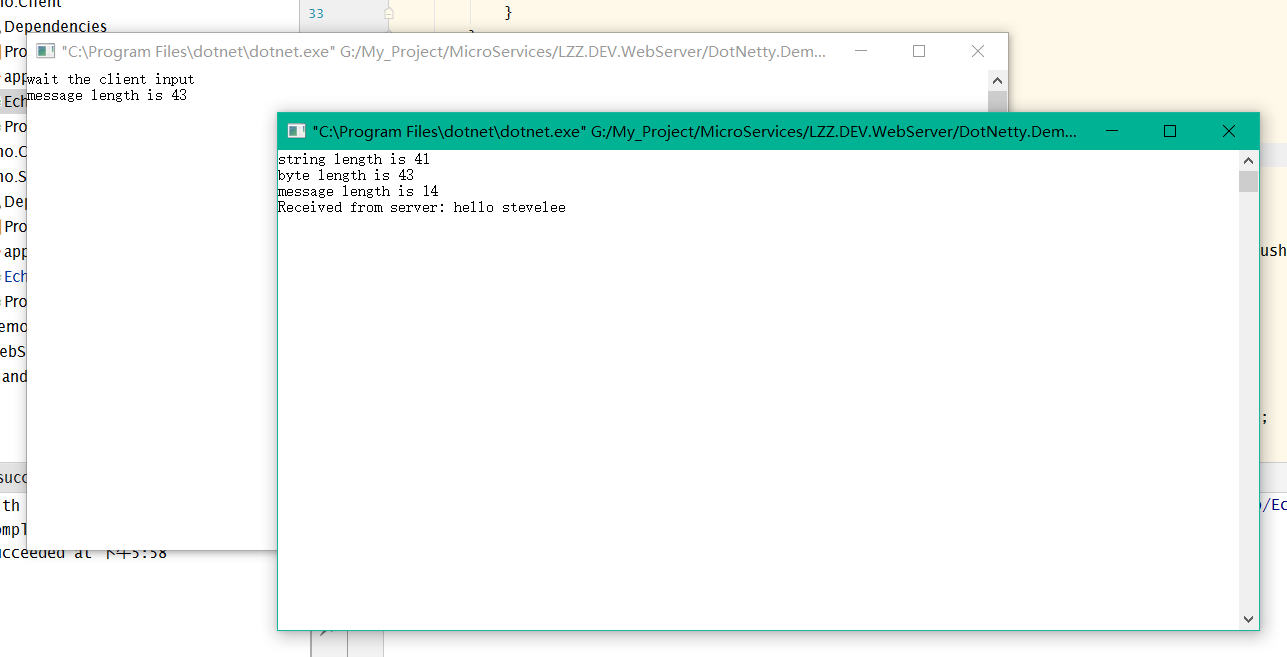

Client

Make the following changes to EchoClientHandler from the previous demo so that it sends a simple request:

    using System;
    using System.Collections.Generic;
    using System.IO;
    using DotNetty.Buffers;
    using Newtonsoft.Json;
    using ProtoBuf;

    // _initialMessage is the IByteBuffer field of EchoClientHandler from the previous demo.

    public EchoClientHandler()
    {
        // (1) Build the request: the function to call and its argument.
        var hello = new Dictionary<string, string>
        {
            {"func", "sayHello"},
            {"username", "stevelee"}
        };
        SendMessage(ToStream(JsonConvert.SerializeObject(hello)));
    }

    private byte[] ToStream(string msg)
    {
        Console.WriteLine($"string length is {msg.Length}");
        // (2) Serialize the JSON string to bytes with protobuf-net.
        using (var stream = new MemoryStream())
        {
            Serializer.Serialize(stream, msg);
            return stream.ToArray();
        }
    }

    private void SendMessage(byte[] msg)
    {
        Console.WriteLine($"byte length is {msg.Length}");
        _initialMessage = Unpooled.Buffer(msg.Length, msg.Length);
        // (3) Copy the bytes into the buffer that will be sent to the server.
        _initialMessage.WriteBytes(msg);
    }
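`Serializer.Serialize(stream, msg)` in ToStream does not emit bare UTF-8 text. A minimal sketch, assuming protobuf-net writes a root-level string as protobuf field 1: in the protobuf wire format that is a tag byte `(1 << 3) | 2 = 0x0A`, a varint length, then the UTF-8 bytes. The hypothetical helper `ProtoStringFraming` builds those bytes by hand so the prefix is visible. For the 41-character JSON built above, the prefix is 0x0A 0x29, which reads as `\n)` in ASCII, so under this assumption the server sees those two characters in front of the JSON.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: hand-encodes a string as a protobuf length-delimited field 1
// (tag byte, varint length, UTF-8 payload).
public static class ProtoStringFraming
{
    public static byte[] EncodeField1(string value)
    {
        byte[] payload = Encoding.UTF8.GetBytes(value);
        var bytes = new List<byte>();

        // Tag = (field number << 3) | wire type; field 1, wire type 2 gives 0x0A.
        bytes.Add((byte)((1 << 3) | 2));

        // Varint length: 7 bits per byte, high bit set on every byte except the last.
        uint len = (uint)payload.Length;
        while (len >= 0x80)
        {
            bytes.Add((byte)(len | 0x80));
            len >>= 7;
        }
        bytes.Add((byte)len);

        bytes.AddRange(payload);
        return bytes.ToArray();
    }
}
```

Because the length byte changes with the message size, stripping a fixed character on the server only works for one particular request length; skipping ahead to the first `{`, or sending plain UTF-8 bytes from the client, avoids that dependency.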

Start

Because the client explicitly requests the sayHello method, it receives "hello stevelee" back from the server.

That completes the simplest possible RPC call. (Strictly speaking, the previous echo demo was already a form of RPC; the difference here is that the request names a method and the server filters on that name to decide what to call.)

Questions

- Surely a real server can't invoke methods through such clumsy filtering? Right: this is only a demo for understanding the basic concepts. A real implementation would use a dynamic proxy to invoke methods.
- This demo is only a point-to-point remote call and does not involve advanced features such as service routing and forwarding.
- Every new interface has to be recompiled and exposed by hand. With tens of thousands of new interfaces, repeating that work would be madness.
- ...and so on.
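As a rough illustration of the dynamic-proxy idea in the first question, the sketch below uses .NET's built-in `System.Reflection.DispatchProxy` (not necessarily what the framework below uses). The client-side proxy turns each interface call into a method name plus arguments, and the server side looks the method up by reflection instead of filtering on names. `IGreeter`, `Greeter`, `RpcProxy` and `ReflectionInvoker` are hypothetical names, and the network hop is replaced by a direct in-process call.

```csharp
using System;
using System.Reflection;

public interface IGreeter
{
    string SayHello(string name);
}

public class Greeter : IGreeter
{
    public string SayHello(string name) => $"hello {name}";
}

// Server side: find the requested method by name and invoke it via reflection.
public static class ReflectionInvoker
{
    public static object Invoke(object target, string methodName, object[] args)
    {
        MethodInfo method = target.GetType().GetMethod(methodName)
            ?? throw new MissingMethodException(target.GetType().Name, methodName);
        return method.Invoke(target, args);
    }
}

// Client side: DispatchProxy routes every interface call to Invoke below.
// A real RPC client would serialize (method name, args) and send them over the
// network; here the "transport" is a direct call to ReflectionInvoker.
public class RpcProxy<T> : DispatchProxy
{
    public object Target { get; set; }

    protected override object Invoke(MethodInfo targetMethod, object[] args)
        => ReflectionInvoker.Invoke(Target, targetMethod.Name, args);

    public static T Create(object target)
    {
        T proxy = DispatchProxy.Create<T, RpcProxy<T>>();
        ((RpcProxy<T>)(object)proxy).Target = target;
        return proxy;
    }
}
```

Usage: `RpcProxy<IGreeter>.Create(new Greeter()).SayHello("stevelee")` returns "hello stevelee" without any per-method branching. `GetMethod(name)` does not handle overloads, which a real implementation would need to resolve, for example by also matching parameter types.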

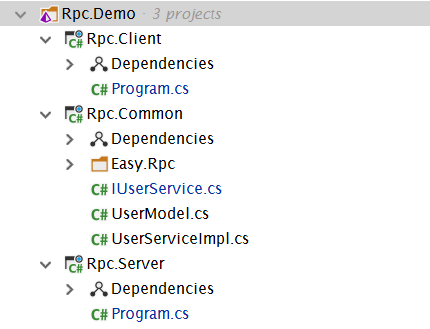

Easy.Rpc

First, look at the interface definition:

    /// <summary>
    /// Interface UserService Definition
    /// </summary>
    [RpcTagBundle]
    public interface IUserService
    {
        Task<string> GetUserName(int id);

        Task<int> GetUserId(string userName);

        Task<DateTime> GetUserLastSignInTime(int id);

        Task<UserModel> GetUser(int id);

        Task<bool> Update(int id, UserModel model);

        Task<IDictionary<string, string>> GetDictionary();

        Task Try();

        Task TryThrowException();
    }

    [ProtoContract]
    public class UserModel
    {
        [ProtoMember(1)] public string Name { get; set; }

        [ProtoMember(2)] public int Age { get; set; }
    }

UserModel uses two attributes that are specific to protobuf-net: [ProtoContract] and [ProtoMember].

protobuf-net pitfalls

- The default example involves no inheritance, so nothing strange happens. But if UserModel is a subclass whose parent class also has several other subclasses, serializing it with a bare [ProtoContract] results in an error. You need to add `DataMemberOffset = X` to the attribute, where X is not a literal letter but this subclass's serialization order. For example, if three subclasses inherit from the same parent and the first two use offsets 1 and 2, this class should use 3, and so on.
- Standard system types cause no problems, but some array types can be a nightmare. The protobuf-net team has not explained why such arrays are not supported. My guess is that support was dropped because arrays can be irregular (multidimensional arrays, or even jagged multidimensional arrays); after all, serialization must not hurt performance.
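A common workaround for arrays a serializer cannot handle directly is to flatten them: store the dimensions plus a one-dimensional, row-major array, which maps onto a single repeated field. The sketch below does this for `int[,]`; `FlatMatrix` is a hypothetical name. In a real model you would mark the class and its properties with [ProtoContract] and [ProtoMember(n)] as in UserModel; the attributes are left out here only so the sketch compiles without protobuf-net.

```csharp
using System;

// Hypothetical wrapper: a 2-D array stored as dimensions plus a flat, row-major 1-D array.
public class FlatMatrix
{
    public int Rows { get; set; }
    public int Columns { get; set; }
    public int[] Values { get; set; } = Array.Empty<int>();

    // Copy a 2-D array into row-major order: element [r, c] goes to index r * Columns + c.
    public static FlatMatrix FromArray(int[,] source)
    {
        int rows = source.GetLength(0), cols = source.GetLength(1);
        var values = new int[rows * cols];
        for (int r = 0; r < rows; r++)
            for (int c = 0; c < cols; c++)
                values[r * cols + c] = source[r, c];
        return new FlatMatrix { Rows = rows, Columns = cols, Values = values };
    }

    // Rebuild the original 2-D array after deserialization.
    public int[,] ToArray()
    {
        var result = new int[Rows, Columns];
        for (int r = 0; r < Rows; r++)
            for (int c = 0; c < Columns; c++)
                result[r, c] = Values[r * Columns + c];
        return result;
    }
}
```

The same idea extends to jagged arrays by storing each row's length alongside the flattened values.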

Next, the server-side code:

    static void Main()
    {
        var bTime = DateTime.Now;

        // Automatic assembly
        var serviceCollection = new ServiceCollection();
        {
            serviceCollection
                .AddLogging()
                .AddRpcCore()
                .AddService()
                .UseSharedFileRouteManager("d:\\routes.txt")
                .UseDotNettyTransport();

            // Register the local test implementation
            serviceCollection.AddSingleton<IUserService, UserServiceImpl>();
        }

        // Build the container
        var buildServiceProvider = serviceCollection.BuildServiceProvider();

        // Get the service entry manager and publish a route for every entry
        var serviceEntryManager = buildServiceProvider.GetRequiredService<IServiceEntryManager>();
        var addressDescriptors = serviceEntryManager.GetEntries().Select(i => new ServiceRoute
        {
            Address = new[]
            {
                new IpAddressModel { Ip = "127.0.0.1", Port = 9881 }
            },
            ServiceDescriptor = i.Descriptor
        });
        var serviceRouteManager = buildServiceProvider.GetRequiredService<IServiceRouteManager>();
        serviceRouteManager.SetRoutesAsync(addressDescriptors).Wait();

        // Send internal logs to the console
        buildServiceProvider.GetRequiredService<ILoggerFactory>().AddConsole((console, logLevel) => (int) logLevel >= 0);

        // Get the service host
        var serviceHost = buildServiceProvider.GetRequiredService<IServiceHost>();

        Task.Factory.StartNew(async () =>
        {
            // Start the host
            await serviceHost.StartAsync(new IPEndPoint(IPAddress.Parse("127.0.0.1"), 9881));
        });

        Console.ReadLine();
    }
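The server publishes each service entry with the address 127.0.0.1:9881 through `SetRoutesAsync`, and the client is configured with the same route file. Assuming the shared-file route manager simply persists the route list to that file so clients can read it back, the idea can be sketched as below. `RouteEntry` and `FileRouteTable` are hypothetical names, and the JSON layout is not the framework's actual file format.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

// Hypothetical route entry: a service id and the addresses that serve it.
public class RouteEntry
{
    public string ServiceId { get; set; }
    public List<string> Addresses { get; set; } = new List<string>();
}

// Minimal file-backed route table: the server writes the routes, clients read them.
public class FileRouteTable
{
    private readonly string _path;

    public FileRouteTable(string path) => _path = path;

    // Overwrite the file with the current set of routes.
    public void SetRoutes(IEnumerable<RouteEntry> routes) =>
        File.WriteAllText(_path, JsonSerializer.Serialize(routes));

    // Read the routes back; an empty list if nothing has been published yet.
    public List<RouteEntry> GetRoutes() =>
        File.Exists(_path)
            ? JsonSerializer.Deserialize<List<RouteEntry>>(File.ReadAllText(_path)) ?? new List<RouteEntry>()
            : new List<RouteEntry>();
}
```

A shared file only works while server and client can see the same path, which is why it suits a local demo; a registry service would take its place in a distributed setup.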

The client-side code:

    static void Main()
    {
        var serviceCollection = new ServiceCollection();
        {
            serviceCollection
                .AddLogging()                                // Add logging
                .AddClient()                                 // Add the RPC client
                .UseSharedFileRouteManager(@"d:\routes.txt") // Use the shared route file
                .UseDotNettyTransport();                     // Use DotNetty for transport
        }

        var serviceProvider = serviceCollection.BuildServiceProvider();

        serviceProvider.GetRequiredService<ILoggerFactory>().AddConsole((console, logLevel) => (int) logLevel >= 0);

        // Generate proxy types for the service interface, then create an IUserService proxy
        var services = serviceProvider.GetRequiredService<IServiceProxyGenerater>()
            .GenerateProxys(new[] { typeof(IUserService) }).ToArray();

        var userService = serviceProvider.GetRequiredService<IServiceProxyFactory>().CreateProxy<IUserService>(
            services.Single(typeof(IUserService).GetTypeInfo().IsAssignableFrom)
        );

        while (true)
        {
            Task.Run(async () =>
            {
                Console.WriteLine($"userService.GetUserName:{await userService.GetUserName(1)}");
                Console.WriteLine($"userService.GetUserId:{await userService.GetUserId("rabbit")}");
                Console.WriteLine($"userService.GetUserLastSignInTime:{await userService.GetUserLastSignInTime(1)}");
                var user = await userService.GetUser(1);
                Console.WriteLine($"userService.GetUser:name={user.Name},age={user.Age}");
                Console.WriteLine($"userService.Update:{await userService.Update(1, user)}");
                Console.WriteLine($"userService.GetDictionary:{(await userService.GetDictionary())["key"]}");
                await userService.Try();
                Console.WriteLine("client function completed!");
            }).Wait();
            Console.ReadKey();
        }
    }
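Each call in the loop above is awaited as if it were local, even though the reply arrives later on a network channel. A common way for an RPC client to bridge that gap (whether this framework does it exactly this way is an assumption) is to tag every request with an id and park a `TaskCompletionSource` until the response carrying the same id comes back. `PendingCalls` is a hypothetical name; the sketch covers only the correlation step, not serialization or the transport.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: matches responses to outstanding requests by id so that
// each proxy call can simply await a Task.
public class PendingCalls
{
    private readonly ConcurrentDictionary<long, TaskCompletionSource<string>> _pending =
        new ConcurrentDictionary<long, TaskCompletionSource<string>>();
    private long _nextId;

    // Called before sending a request: returns the id to put in the message and a
    // Task that completes when the matching response arrives.
    public (long Id, Task<string> Response) Register()
    {
        long id = Interlocked.Increment(ref _nextId);
        var tcs = new TaskCompletionSource<string>(TaskCreationOptions.RunContinuationsAsynchronously);
        _pending[id] = tcs;
        return (id, tcs.Task);
    }

    // Called from the channel's read handler when a response with this id arrives.
    // Returns false for unknown or already-completed ids.
    public bool Complete(long id, string result) =>
        _pending.TryRemove(id, out var tcs) && tcs.TrySetResult(result);
}
```

Because responses are matched by id rather than by arrival order, several calls can be in flight on one connection at the same time.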