Big Data Notes 06: YARN Setup and Test Case

Keywords:

Big Data

Hadoop

xml

NodeManager

Zookeeper

Setting up YARN

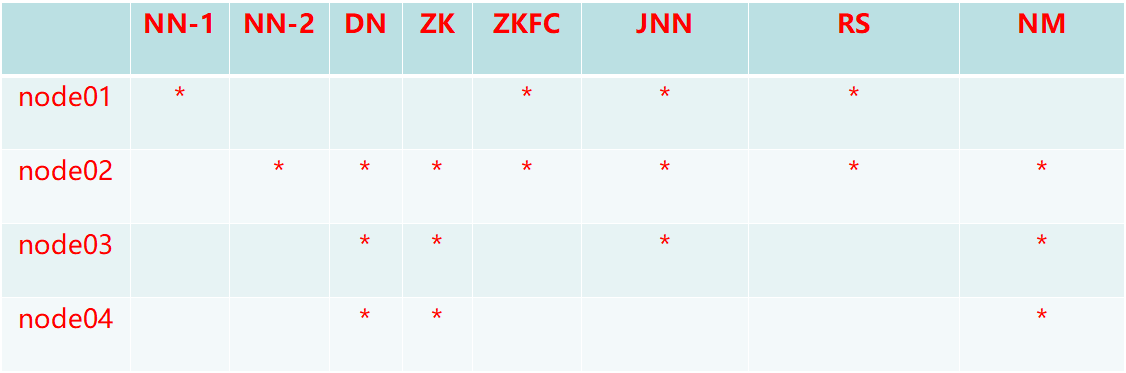

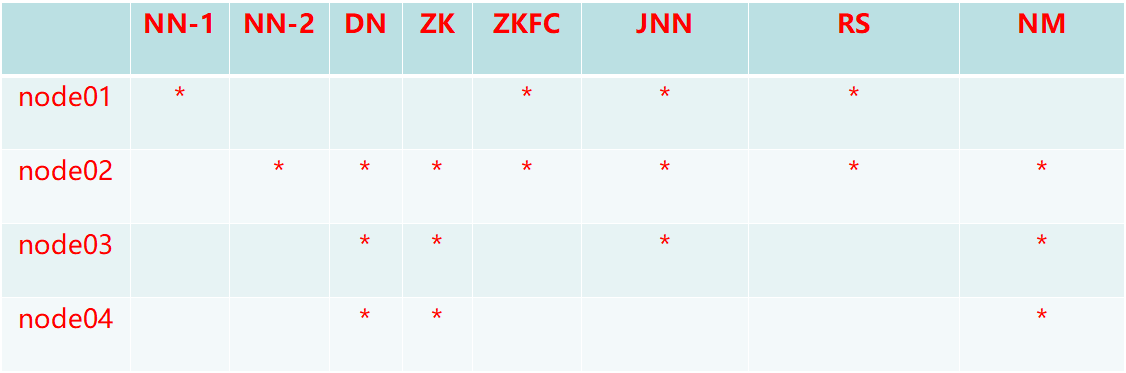

Cluster planning

Configuration

- Modify the configuration file mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Cross-platform configuration -->

<property>

<name>mapreduce.app-submission.cross-platform</name>

<value>true</value>

</property>

- Modify the configuration file yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>cluster1</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>node01</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>node02</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>node02:2181,node03:2181,node04:2181</value>

</property>

- Distribute the modified configuration files to all nodes
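One way to do the distribution is a small scp loop run from node01. This is only a sketch under assumptions that the notes do not state: passwordless SSH between the nodes, the same `HADOOP_HOME` path on every node, and the configuration files living in `etc/hadoop`. The function name `distribute_config` and the `DRY_RUN` switch are made up for illustration.

```shell
# Sketch: copy the two edited files from node01 to the other nodes.
# Assumptions: passwordless SSH, identical HADOOP_HOME on all nodes.

NODES="node02 node03 node04"                        # hostnames used in these notes
CONF_DIR="${HADOOP_HOME:-/opt/hadoop}/etc/hadoop"   # /opt/hadoop is a placeholder

distribute_config() {
    # With DRY_RUN=1 the scp commands are printed instead of executed.
    for node in $NODES; do
        for file in mapred-site.xml yarn-site.xml; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "scp $CONF_DIR/$file $node:$CONF_DIR/"
            else
                scp "$CONF_DIR/$file" "$node:$CONF_DIR/"
            fi
        done
    done
}
```

Calling `DRY_RUN=1 distribute_config` first shows what would be copied; `distribute_config` on its own performs the copy.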

- Start ZooKeeper on node02, node03, and node04

./zkServer.sh start
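Before moving on, it may help to confirm that each ZooKeeper server has joined the ensemble. `zkServer.sh status` prints a line such as `Mode: leader` or `Mode: follower`; the helper below just extracts that word. It is a sketch: `zk_mode` and the `ZK_BIN` variable (the directory holding `zkServer.sh`) are hypothetical names, not part of ZooKeeper.

```shell
# Sketch: report the role of the local ZooKeeper server.
# ZK_BIN is an assumed variable; it defaults to the current directory,
# matching the ./zkServer.sh call used in these notes.
zk_mode() {
    # Keep only the second field of the "Mode: ..." line
    "${ZK_BIN:-.}/zkServer.sh" status 2>/dev/null | awk '/^Mode:/ { print $2 }'
}
```

Run `zk_mode` on each of the three nodes; an empty result suggests the server is not running or has not yet joined the ensemble.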

- Start the HDFS and YARN clusters on node01

start-dfs.sh

start-yarn.sh

- Start the standby ResourceManager separately on node02

(the active ResourceManager was already started automatically on node01)

yarn-daemon.sh start resourcemanager

- View the YARN management page on port 8088 of a ResourceManager node
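Besides the web page, the HA state of each ResourceManager can be checked from the command line with `yarn rmadmin -getServiceState`, which prints `active` or `standby`. A minimal sketch, using the IDs `rm1` and `rm2` from yarn-site.xml; the function name `rm_states` and the `unreachable` label are illustrative choices.

```shell
# Sketch: print the HA state of each configured ResourceManager.
# rm1 and rm2 are the IDs set in yarn.resourcemanager.ha.rm-ids.
rm_states() {
    for id in rm1 rm2; do
        # Fall back to a label of our own if the command fails
        state=$(yarn rmadmin -getServiceState "$id" 2>/dev/null) || state="unreachable"
        echo "$id: $state"
    done
}
```

With the startup order described here, `rm_states` would be expected to show one ResourceManager as active and the other as standby.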

Test case

wordcount

Use the wordcount example that ships with MapReduce

- Change to the directory that contains the MapReduce jar files

cd $HADOOP_HOME/share/hadoop/mapreduce

- Run the test case

hadoop jar hadoop-mapreduce-examples-2.6.5.jar wordcount /input /output

- /input: the HDFS directory that holds the input data

- /output: an HDFS directory that must not exist yet. The job writes its results there and fails with an error if the directory already exists.

- View the results

hdfs dfs -cat /output/*
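Because the job refuses to run when the output directory already exists, rerunning the example means removing that directory first. The sketch below wraps both steps; `run_wordcount` is a hypothetical helper, while the jar name and the /input and /output defaults are the ones used in these notes. Note that it deletes the previous results.

```shell
# Sketch: remove a stale output directory, then rerun the wordcount example.
run_wordcount() {
    in_dir="${1:-/input}"
    out_dir="${2:-/output}"
    # "hdfs dfs -test -d" exits 0 only if the path is an existing directory
    if hdfs dfs -test -d "$out_dir"; then
        hdfs dfs -rm -r "$out_dir"
    fi
    hadoop jar "$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.5.jar" \
        wordcount "$in_dir" "$out_dir"
}
```

Calling `run_wordcount` with no arguments repeats the run shown above; `run_wordcount /input /output2` writes to a different directory instead.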

Posted by marli on Wed, 30 Jan 2019 11:00:15 -0800