This article describes how to deploy a highly available Kubernetes (k8s) cluster with Kubespray. The Kubernetes version used is 1.12.5.

1. Deployment Manual

Code repository: https://github.com/kubernetes-sigs/kubespray

Reference documents: https://kubespray.io/#/

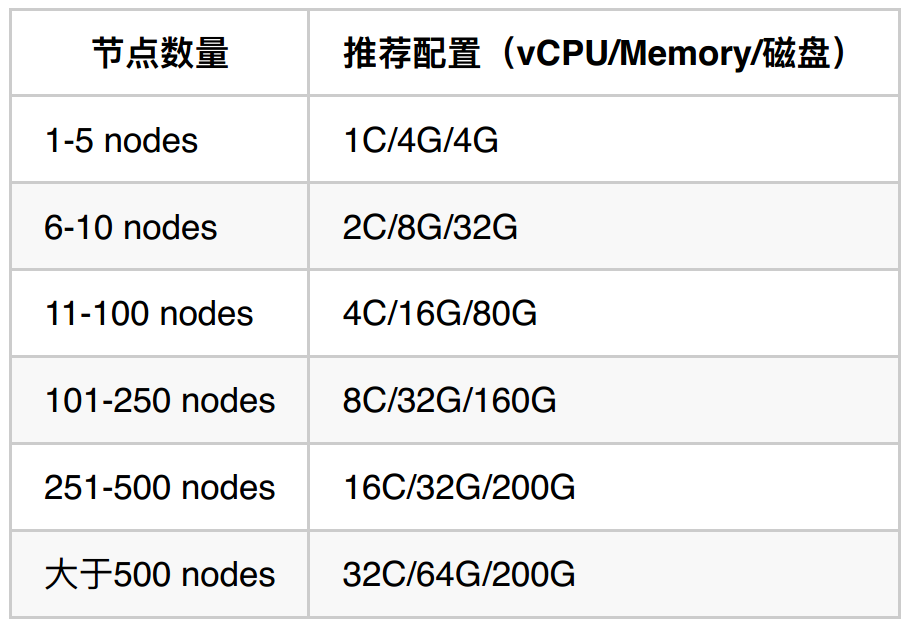

2. k8s master machine configuration

3. k8s Cluster Installation Steps

Step 1: Set Up Passwordless SSH Login between Hosts

Kubespray is built on Ansible, and Ansible connects to the hosts over SSH, so passwordless (key-based) SSH login from the deployment machine to every host must be set up before deployment. The steps are as follows:

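```bash
ssh-keygen -t rsa
# Copy to /tmp so the host's own ~/.ssh/id_rsa.pub is not overwritten
scp ~/.ssh/id_rsa.pub root@IP:/tmp/id_rsa.pub
# Quote the remote command so ">>" runs on the remote host, not locally
ssh root@IP "mkdir -p /root/.ssh && cat /tmp/id_rsa.pub >> /root/.ssh/authorized_keys && chmod 600 /root/.ssh/authorized_keys"
```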
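With more than a couple of hosts, the same steps can be looped. The sketch below is an alternative, not part of the original procedure: it uses OpenSSH's `ssh-copy-id`, which appends the key to the remote `authorized_keys`, and then checks each host with `ssh -o BatchMode=yes`, which fails instead of prompting for a password if key login does not work. The function names and the `DRY_RUN` switch are hypothetical; set `DRY_RUN=1` to print the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch: push the deployment machine's public key to every host, then verify.
# DRY_RUN=1 prints the commands instead of executing them (hypothetical switch).

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# distribute_key USER HOST... : copy ~/.ssh/id_rsa.pub to each host
distribute_key() {
  local user="$1" host
  shift
  # Create a key pair first if none exists (empty passphrase)
  if [[ ! -f "$HOME/.ssh/id_rsa.pub" ]]; then
    run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
  fi
  for host in "$@"; do
    run ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "${user}@${host}"
    # BatchMode=yes makes ssh fail instead of asking for a password
    run ssh -o BatchMode=yes "${user}@${host}" true
  done
}
```

For example, `distribute_key root 10.10.1.3 10.10.1.4 10.10.1.5` covers the example IPs used in Step 4; a host whose final `ssh ... true` fails still needs attention before running Ansible.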

Step 2: Download kubespray

Note: Instead of the code on the master branch of the GitHub repository, I use the tag v2.8.3 for this deployment.

```bash
wget https://github.com/kubernetes-sigs/kubespray/archive/v2.8.3.tar.gz
tar -xzvf v2.8.3.tar.gz
# GitHub drops the leading "v" from the extracted directory name
cd kubespray-2.8.3
```

Step 3: Adjust the Configuration

3.1 Replace the Image Repositories

Most images used in a Kubernetes installation are hosted on registries outside China (such as gcr.io), which cannot be reached because of the firewall. You therefore need to set up your own image registry and upload the images to it.

3.1.1 Create an Image Registry

We chose Harbor as the image registry. Its installation guide is here:

https://github.com/goharbor/harbor/blob/master/docs/installation_guide.md

3.1.2 Collect the Images Needed for k8s Cluster Deployment

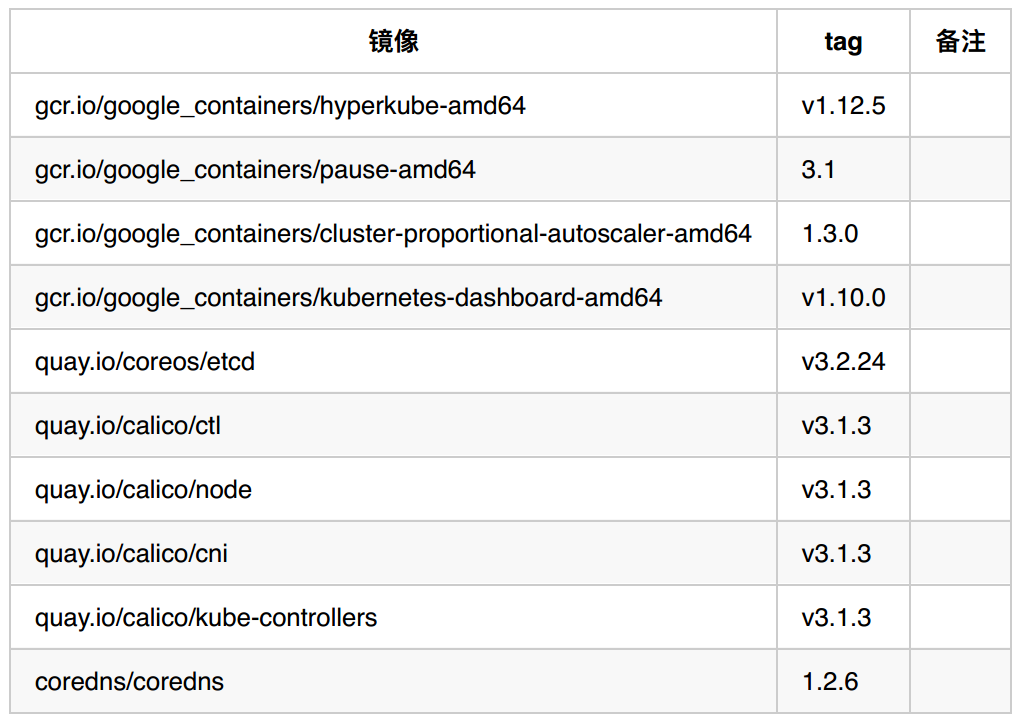

The full list of images is defined in roles/download/defaults/main.yml. Some of them are not needed for now because the features that use them are not enabled. We mainly use the following images:

3.1.3 Download the Required Images and Upload Them to the Private Registry

The images used are listed below. Here I used an Aliyun host located outside China to download the required images and push them to the private image registry.

For each image, run commands like the following (kubernetes-dashboard is used as the example):

```bash
docker pull gcr.io/google_containers/kubernetes-dashboard-amd64:v1.10.0
docker tag gcr.io/google_containers/kubernetes-dashboard-amd64:v1.10.0 106.14.219.69:5000/google_containers/kubernetes-dashboard-amd64:v1.10.0
docker push 106.14.219.69:5000/google_containers/kubernetes-dashboard-amd64:v1.10.0
```
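Retagging dozens of images by hand is error-prone, so the three commands can be wrapped in a loop. A minimal sketch, assuming the images are listed one per line in a hypothetical file `images.txt`: `private_image_name` drops the source registry host and keeps the rest of the path and tag, following Docker's rule that the first path component is a registry host only if it contains a `.` or `:` or is `localhost`. This reproduces the dashboard example above and fits repositories such as `calico/node` or `coreos/etcd` in 3.1.4, but check every result against your `*_image_repo` settings: for instance, if the upstream CoreDNS image is `coredns/coredns`, this function yields `.../coredns/coredns`, whereas the `coredns_image_repo` value in 3.1.4 expects `.../coredns`.

```bash
#!/usr/bin/env bash
# Sketch: mirror upstream images into a private registry.

# private_image_name SRC REGISTRY
# Replace SRC's registry host (if any) with REGISTRY, keeping the path and tag.
private_image_name() {
  local src="$1" registry="$2"
  local first="${src%%/*}" path="$src"
  # Docker treats the first component as a host only if it contains "." or ":"
  # or is "localhost"; otherwise the image is on Docker Hub and has no host part.
  if [[ "$src" == */* && ( "$first" == *.* || "$first" == *:* || "$first" == localhost ) ]]; then
    path="${src#*/}"
  fi
  printf '%s/%s\n' "$registry" "$path"
}

# mirror_image SRC REGISTRY : pull, retag and push one image
mirror_image() {
  local src="$1" dst
  dst="$(private_image_name "$src" "$2")" || return
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}

# mirror_list FILE REGISTRY : mirror every non-empty, non-comment line of FILE
mirror_list() {
  local image
  while IFS= read -r image; do
    [[ -z "$image" || "$image" == \#* ]] && continue
    mirror_image "$image" "$2" || echo "failed: $image" >&2
  done < "$1"
}
```

Usage: `mirror_list images.txt 106.14.219.69:5000`. Failed images are reported on stderr and the loop continues, so one unreachable image does not stop the rest.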

3.1.4 Change the Image Addresses and Docker Configuration

Add the following configuration to the inventory/testcluster/group_vars/k8s-cluster/k8s-cluster.yml file:

```yaml
# kubernetes image repo define
kube_image_repo: "10.0.0.183:5000/google_containers"

## modified by: robbin
# Comment: point each component's image repository at the private registry address
etcd_image_repo: "10.0.0.183:5000/coreos/etcd"
coredns_image_repo: "10.0.0.183:5000/coredns"
calicoctl_image_repo: "10.0.0.183:5000/calico/ctl"
calico_node_image_repo: "10.0.0.183:5000/calico/node"
calico_cni_image_repo: "10.0.0.183:5000/calico/cni"
calico_policy_image_repo: "10.0.0.183:5000/calico/kube-controllers"
hyperkube_image_repo: "{{ kube_image_repo }}/hyperkube-{{ image_arch }}"
pod_infra_image_repo: "{{ kube_image_repo }}/pause-{{ image_arch }}"
dnsautoscaler_image_repo: "{{ kube_image_repo }}/cluster-proportional-autoscaler-{{ image_arch }}"
dashboard_image_repo: "{{ kube_image_repo }}/kubernetes-dashboard-{{ image_arch }}"
```

Because our private image registry has no HTTPS certificate configured, add the following to the inventory/testcluster/group_vars/all/docker.yml file:

```yaml
docker_insecure_registries:
  - 10.0.0.183:5000
```
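Before running the playbook, it can save time to confirm that the images the configuration points to actually exist in the private registry. A minimal sketch, assuming a plain Docker Registry that serves the Registry HTTP API v2 over HTTP without authentication (as the insecure setting above suggests); Harbor normally requires token authentication, so this would need adapting for it. The endpoint `GET /v2/<name>/tags/list` returns the tags of a repository; `tags_url` and `has_tag` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: check that a repository:tag exists in an HTTP (insecure) registry.

# tags_url REPO : REPO is "host:port/path" without a tag, e.g.
# 10.0.0.183:5000/calico/node -> http://10.0.0.183:5000/v2/calico/node/tags/list
tags_url() {
  local repo="$1"
  printf 'http://%s/v2/%s/tags/list\n' "${repo%%/*}" "${repo#*/}"
}

# has_tag REPO TAG : succeed if the registry lists TAG for REPO
has_tag() {
  local body
  body="$(curl -fsS "$(tags_url "$1")")" || return 1
  # Crude substring match on the JSON reply {"name":...,"tags":[...]}
  [[ "$body" == *"\"$2\""* ]]
}
```

Usage: `has_tag 10.0.0.183:5000/calico/node <tag> && echo present`, taking the tag from roles/download/defaults/main.yml. The substring match avoids a JSON parser dependency but is deliberately simple.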

3.2 Change the Docker Installation Source and Pre-download Executables

3.2.1 Change the Docker Installation Source

By default, Docker is installed from the official Docker repository, which is very slow from inside China. Here we switch to the Aliyun mirror inside China by adding the following to the inventory/testcluster/group_vars/k8s-cluster/k8s-cluster.yml file:

```yaml
# CentOS/RedHat docker-ce repo
docker_rh_repo_base_url: 'https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable'
docker_rh_repo_gpgkey: 'https://mirrors.aliyun.com/docker-ce/linux/centos/gpg'
dockerproject_rh_repo_base_url: 'https://mirrors.aliyun.com/docker-engine/yum/repo/main/centos/7'
dockerproject_rh_repo_gpgkey: 'https://mirrors.aliyun.com/docker-engine/yum/gpg'
```

3.2.2 Pre-download the Executables

In addition, some executables must be downloaded from Google and GitHub, which the servers cannot reach directly because of the firewall. Download these executables in advance and upload them to the specified path on each server.

The download URLs of the executables are defined in the roles/download/defaults/main.yml file. For this version they are:

```yaml
kubeadm_download_url: "https://storage.googleapis.com/kubernetes-release/release/v1.12.5/bin/linux/amd64/kubeadm"
hyperkube_download_url: "https://storage.googleapis.com/kubernetes-release/release/v1.12.5/bin/linux/amd64/hyperkube"
cni_download_url: "https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz"
```
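In Kubespray v2.8.x the same roles/download/defaults/main.yml also defines a SHA-256 checksum for each of these downloads, so it is worth checking the files against those values before uploading them; a truncated download is otherwise easy to miss. A minimal sketch with hypothetical helpers `verify_sha256` and `fetch_verified`; the expected checksums must be copied from that file and are not reproduced here.

```bash
#!/usr/bin/env bash
# Sketch: download a file and verify its SHA-256 checksum.

# verify_sha256 FILE EXPECTED : succeed if FILE's SHA-256 equals EXPECTED
verify_sha256() {
  local actual
  actual="$(sha256sum "$1")" || return 1
  # sha256sum prints "<hash>  <file>"; keep only the hash
  [[ "${actual%% *}" == "$2" ]]
}

# fetch_verified URL EXPECTED : download URL into the current directory under
# its remote file name, and delete the file if the checksum does not match
fetch_verified() {
  local url="$1" expected="$2" file="${1##*/}"
  # -f fails on HTTP errors, -L follows redirects (GitHub release links redirect)
  curl -fL -o "$file" "$url" || return 1
  if ! verify_sha256 "$file" "$expected"; then
    echo "checksum mismatch: $file" >&2
    rm -f "$file"
    return 1
  fi
}
```

Usage: `fetch_verified https://storage.googleapis.com/kubernetes-release/release/v1.12.5/bin/linux/amd64/kubeadm "$KUBEADM_SHA256"`, where `KUBEADM_SHA256` is a hypothetical variable holding the value from main.yml; repeat for hyperkube and the CNI archive.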

Next, set the file permissions and upload the files to the /tmp/releases directory of each server:

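```bash
chmod 755 cni-plugins-amd64-v0.6.0.tgz hyperkube kubeadm
# scp fails when copying several files into a directory that does not exist yet
ssh root@node1 mkdir -p /tmp/releases
scp cni-plugins-amd64-v0.6.0.tgz hyperkube kubeadm root@node1:/tmp/releases
```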
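Every node needs the files, so the copy is usually looped over the host list. A sketch assuming the three files sit in the current directory; the host names passed in and the `DRY_RUN` preview switch are hypothetical. `scp -p` preserves the file modes, so the 755 permissions set locally carry over.

```bash
#!/usr/bin/env bash
# Sketch: copy the pre-downloaded executables to /tmp/releases on every node.
# DRY_RUN=1 prints the commands instead of executing them (hypothetical switch).

FILES=(cni-plugins-amd64-v0.6.0.tgz hyperkube kubeadm)

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# upload_releases USER HOST... : create /tmp/releases and copy FILES to each host
upload_releases() {
  local user="$1" host
  shift
  run chmod 755 "${FILES[@]}"
  for host in "$@"; do
    run ssh "${user}@${host}" mkdir -p /tmp/releases
    # -p preserves modification times and modes (the 755 set above)
    run scp -p "${FILES[@]}" "${user}@${host}:/tmp/releases/"
  done
}
```

Usage: `DRY_RUN=1 upload_releases root node1 node2 node3` to preview, then drop `DRY_RUN=1` to copy.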

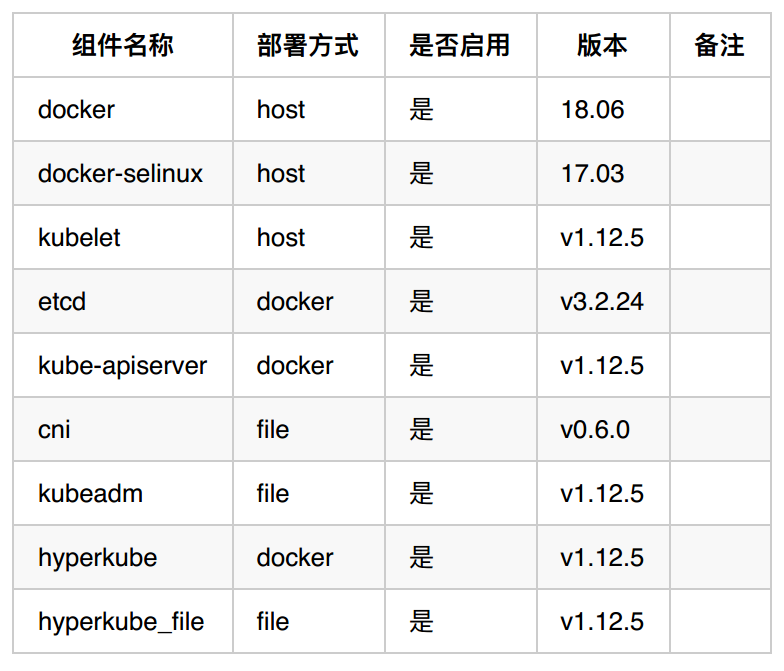

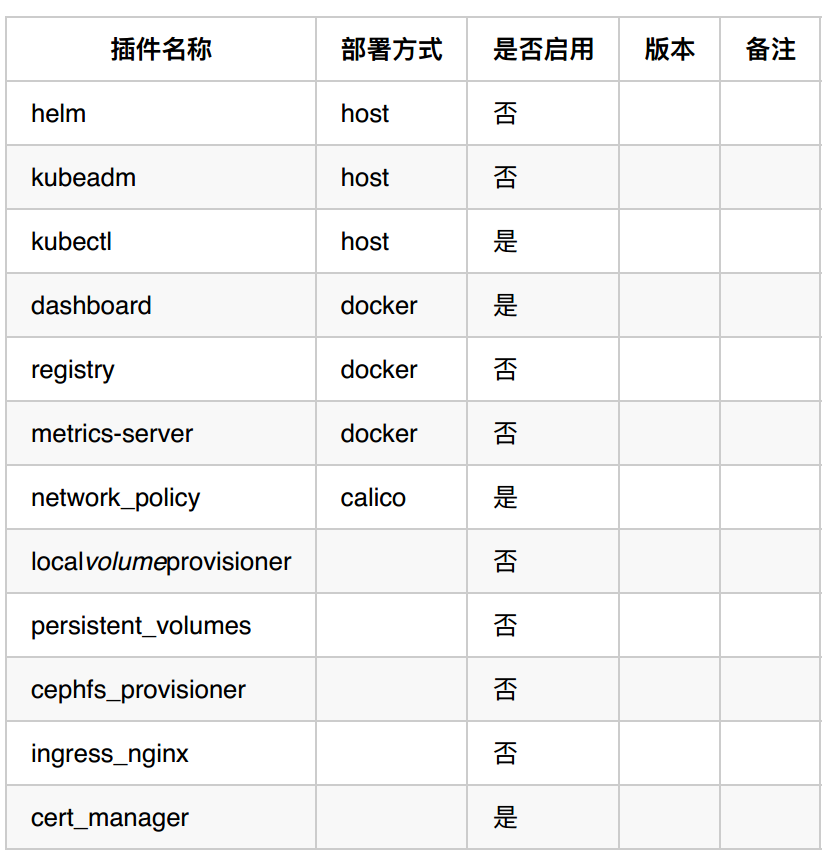

3.3 Component List

Components required by k8s

List of optional plug-ins

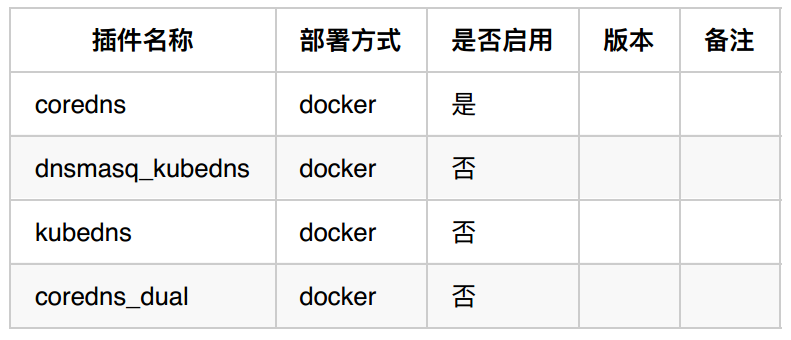

3.4 DNS Scheme

k8s service discovery relies on DNS, which involves two kinds of networks, the host network and the container network, so Kubespray provides two settings to manage them.

3.4.1 dns_mode

dns_mode controls domain name resolution inside the cluster. The available types are listed below; we chose coredns. Note: depending on the dns_mode selected, you may need to download and install several container images, and their versions may differ.

3.4.2 resolvconf_mode

resolvconf_mode determines how containers that run in host network mode use the k8s DNS. Here we use docker_dns:

```yaml
resolvconf_mode: docker_dns
```

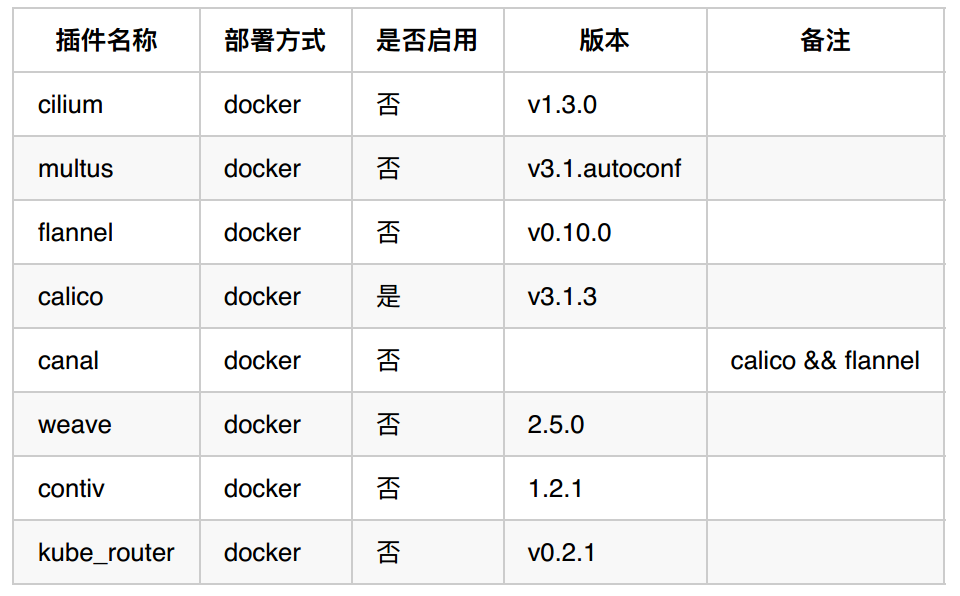

3.5 Network Plug-in Selection

3.5.1 kube-proxy

kube-proxy can run in ipvs or iptables mode. Here we choose ipvs. For the differences between the two, see Huawei Cloud's article on Service performance optimization in large-scale K8S scenarios (https://zhuanlan.zhihu.com/p/37230013).
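ipvs mode depends on the IPVS kernel modules being available on every node; the kube-proxy IPVS prerequisites list ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh and nf_conntrack_ipv4, and kube-proxy may fall back to iptables mode if they are missing. A quick pre-check, offered as a sketch: the hypothetical `missing_ipvs_modules` reads `lsmod` output on stdin and prints each required module that is not loaded. On kernels 4.19 and later nf_conntrack_ipv4 was merged into nf_conntrack, so adjust the list there.

```bash
#!/usr/bin/env bash
# Sketch: report IPVS-related kernel modules that are not loaded.
# Module list follows the kube-proxy IPVS prerequisites; on kernels >= 4.19
# replace nf_conntrack_ipv4 with nf_conntrack.

IPVS_MODULES=(ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4)

# missing_ipvs_modules < lsmod-output : print each required module not listed
missing_ipvs_modules() {
  local loaded mod
  # First column of lsmod is the module name; skip the "Module" header line.
  # Pad with spaces so every name can be matched as " name ".
  loaded=" $(awk 'NR > 1 {print $1}' | tr '\n' ' ') "
  for mod in "${IPVS_MODULES[@]}"; do
    [[ "$loaded" == *" $mod "* ]] || printf '%s\n' "$mod"
  done
}
```

Usage on each node: `lsmod | missing_ipvs_modules`, then load anything it prints with `modprobe <module>`.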

3.5.2 Network Plug-in List

The available network plug-ins are listed below; we chose calico. Note: each network plug-in may require one or more container images, and their versions may differ.
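The choices in 3.4 and 3.5 end up as variables in inventory/testcluster/group_vars/k8s-cluster/k8s-cluster.yml: dns_mode, resolvconf_mode, kube_proxy_mode and kube_network_plugin. They can be edited by hand; the sketch below sets them from a script instead, so the same choices can be reapplied to a freshly copied inventory. `set_group_var` and `apply_selections` are hypothetical helpers that rewrite a top-level `key: value` line in place (or append it if absent); they do not understand nested YAML and use GNU `sed -i`.

```bash
#!/usr/bin/env bash
# Sketch: set top-level "key: value" variables in a Kubespray group_vars file.

# set_group_var FILE KEY VALUE
# Replace an existing uncommented "KEY:" line, or append "KEY: VALUE" if none exists.
set_group_var() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}:" "$file"; then
    # "|" as the sed delimiter so values containing "/" need no escaping (GNU sed -i)
    sed -i "s|^${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

CLUSTER_VARS=inventory/testcluster/group_vars/k8s-cluster/k8s-cluster.yml

# Apply the DNS, kube-proxy and network plug-in selections described above
apply_selections() {
  set_group_var "$CLUSTER_VARS" dns_mode coredns
  set_group_var "$CLUSTER_VARS" resolvconf_mode docker_dns
  set_group_var "$CLUSTER_VARS" kube_proxy_mode ipvs
  set_group_var "$CLUSTER_VARS" kube_network_plugin calico
}
```

Run `apply_selections` from the Kubespray directory; commented-out lines such as `# dns_mode: ...` are left untouched and a live setting is appended instead.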

3.6 High Availability Scheme

Step 4: Install and Deploy

```bash
# Install dependencies from requirements.txt
sudo pip install -r requirements.txt

# Copy inventory/sample as inventory/mycluster
# (if you already created and edited inventory/testcluster in Step 3,
#  skip this copy and use that directory instead of inventory/mycluster below)
cp -rfp inventory/sample inventory/mycluster

# Update the Ansible inventory file with the inventory builder
declare -a IPS=(10.10.1.3 10.10.1.4 10.10.1.5)
CONFIG_FILE=inventory/mycluster/hosts.ini python3 contrib/inventory_builder/inventory.py "${IPS[@]}"

# Review and change parameters under inventory/mycluster/group_vars
cat inventory/mycluster/group_vars/all/all.yml
cat inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

# Deploy Kubespray with the Ansible playbook - run the playbook as root.
# The option -b (--become) is required, for example for writing SSL keys in /etc/,
# installing packages and interacting with various systemd daemons.
# Without -b the playbook will fail to run!
ansible-playbook -i inventory/mycluster/hosts.ini --become --become-user=root cluster.yml
```
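The full playbook takes a while, so a quick connectivity check beforehand can catch SSH and inventory problems early. Ansible's `ping` module logs in to each host and checks that a usable Python is present; adding `--become` also exercises privilege escalation. A sketch: `deploy` and the `DRY_RUN` switch are hypothetical, and the inventory path should match whichever directory you actually edited.

```bash
#!/usr/bin/env bash
# Sketch: check Ansible connectivity, then run the Kubespray playbook.
# DRY_RUN=1 prints the commands instead of executing them (hypothetical switch).

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# deploy INVENTORY : ping all hosts with become, and deploy only if every host answers
deploy() {
  local inventory="$1"
  run ansible -i "$inventory" all -m ping --become || {
    echo "some hosts are unreachable; fix SSH or the inventory first" >&2
    return 1
  }
  run ansible-playbook -i "$inventory" --become --become-user=root cluster.yml
}
```

Usage, from the Kubespray directory: `deploy inventory/mycluster/hosts.ini`.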

After deployment, log in to the host running the k8s master and run the following commands to check that the components are healthy:

```bash
kubectl cluster-info
kubectl get node
kubectl get pods --all-namespaces
```
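On a larger cluster this output gets long, so it can help to print only what is unhealthy. A sketch that filters the `--no-headers` output of `kubectl get`: node STATUS is the second column, and with `--all-namespaces` pod READY and STATUS are the third and fourth columns. Treating `Completed` pods as healthy and flagging `Running` pods whose READY count is incomplete (such as `0/1`) are assumptions of this sketch; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print only unhealthy nodes and pods.

# unready_nodes < "kubectl get nodes --no-headers" output
# Column 2 is STATUS; "Ready" or "Ready,SchedulingDisabled" counts as ready.
unready_nodes() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ {print $1, $2}'
}

# unhealthy_pods < "kubectl get pods --all-namespaces --no-headers" output
# Columns: NAMESPACE NAME READY STATUS ...; Completed pods are ignored, and
# Running pods are reported when not all containers are ready.
unhealthy_pods() {
  awk '{
    if ($4 == "Completed") next
    split($3, r, "/")
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2, $3, $4
  }'
}

check_cluster() {
  kubectl get nodes --no-headers | unready_nodes
  kubectl get pods --all-namespaces --no-headers | unhealthy_pods
}
```

Running `check_cluster` on the master prints nothing when every node is Ready and every pod is fully ready, which makes it convenient to repeat while the cluster settles.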

Reference documents:

https://github.com/kubernetes-sigs/kubespray/blob/master/docs/getting-started.md

https://xdatk.github.io/2018/04/16/kubespray2/

https://jicki.me/kubernetes/docker/2018/12/21/k8s-1.13.1-kubespray/