Brief Introduction

Kubernetes, open-sourced by Google in 2014, builds on Google's experience with Borg, its internal large-scale container management system that had already been in use for about ten years. It is an open source container cluster management platform that automates the deployment, scaling, and maintenance of container clusters. Its goal is to foster an ecosystem of components and tools that reduces the burden of running applications in public or private clouds.

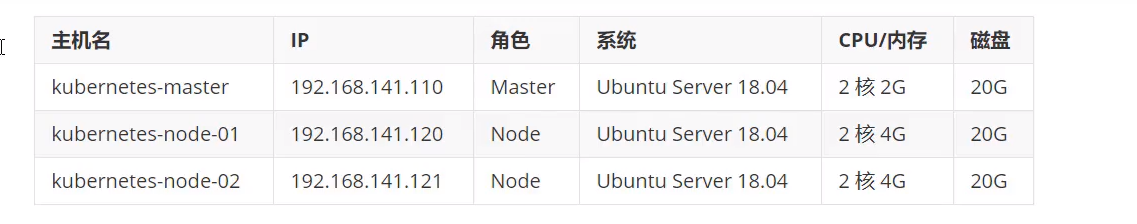

Unified Environment Configuration

Note: Build a VMware virtual machine image with this configuration and clone it, to avoid the pain of configuring each machine one by one

Turn off swap space (by default the kubelet will not start while swap is enabled, and it also avoids wasting resources)

swapoff -a

Prevent swap from being enabled again at boot

# Comment out the swap entry (the line beginning with /dev/mapper/ubuntu--vg-swap_1)
vi /etc/fstab
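The manual edit above can also be scripted. The following is a minimal sketch, not part of the original steps: the function name `comment_out_swap` and the `.bak` backup suffix are illustrative choices, and it assumes a `sed` that supports `-E` and `-i`.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# A backup of the original file is kept next to it with a .bak suffix.
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  # Match uncommented lines whose third field (the filesystem type) is exactly "swap"
  # and prefix them with "#".
  sed -E -i.bak \
    's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "$fstab"
}
```

Run it as root with `comment_out_swap /etc/fstab`, then check the result with `grep swap /etc/fstab` before rebooting.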

Disable the Firewall

ufw disable

Configure DNS

# Uncomment the DNS line and add a DNS server such as 114.114.114.114; restart the computer after the change
vi /etc/systemd/resolved.conf

Install Docker

# Update the software sources
sudo apt-get update
# Install dependencies
sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
# Install the GPG certificate
curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
# Add the software source
sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
# Update the software sources again
sudo apt-get -y update
# Install Docker CE
sudo apt-get -y install docker-ce

Verification

docker version
Client: Docker Engine - Community
 Version:           19.03.4
 API version:       1.40
 Go version:        go1.12.10
 Git commit:        9013bf583a
 Built:             Fri Oct 18 15:53:51 2019
 OS/Arch:           linux/amd64
 Experimental:      false
Server: Docker Engine - Community
 Engine:
  Version:          19.03.4
  API version:      1.40 (minimum version 1.12)
  Go version:       go1.12.10
  Git commit:       9013bf583a
  Built:            Fri Oct 18 15:52:23 2019
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.2.10
  GitCommit:        b34a5c8af56e510852c35414db4c1f4fa6172339
 runc:
  Version:          1.0.0-rc8+dev
  GitCommit:        3e425f80a8c931f88e6d94a8c831b9d5aa481657
 docker-init:
  Version:          0.18.0
  GitCommit:        fec3683

Configure a Registry Mirror (Accelerator)

Add the following to /etc/docker/daemon.json

{

"registry-mirrors": [

"https://registry.docker-cn.com"

]

}

Verify successful configuration

sudo systemctl restart docker
docker info
...
# The following lines indicate that the configuration was successful
Registry Mirrors:
 https://registry.docker-cn.com/
...

Change Host Name

Hostnames must be unique within the same LAN, so we need to change them. The steps below change the hostname on Ubuntu 18.04; on 16.04 or earlier, edit the name in /etc/hostname directly.

View the current Hostname

hostnamectl

Modify Hostname

# Modify using the hostnamectl command, where kubernetes-master is the new hostname
hostnamectl set-hostname kubernetes-master

Modify cloud.cfg

If the cloud-init package is installed, the cloud.cfg file also needs to be modified, otherwise the hostname may be reset on reboot. This package is usually installed by default to handle cloud instance initialization.

# If the file exists
vi /etc/cloud/cloud.cfg
# This setting defaults to false; change it to true
preserve_hostname: true
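When preparing several machines, the same change can be applied without opening an editor. This is only a sketch under assumptions: the helper name `set_preserve_hostname` is hypothetical, and it assumes `preserve_hostname` appears as a top-level key at the start of a line, as in the snippet above.

```shell
# Hypothetical helper: force "preserve_hostname: true" in a cloud.cfg-style file.
set_preserve_hostname() {
  cfg="${1:-/etc/cloud/cloud.cfg}"
  if grep -q '^preserve_hostname:' "$cfg"; then
    # The key exists: rewrite its value in place, keeping a .bak backup.
    sed -E -i.bak 's/^preserve_hostname:.*/preserve_hostname: true/' "$cfg"
  else
    # The key is missing: append it.
    echo 'preserve_hostname: true' >> "$cfg"
  fi
}
```

Call it as root with `set_preserve_hostname /etc/cloud/cloud.cfg` and confirm the result with `grep preserve_hostname /etc/cloud/cloud.cfg`.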

Verification

root@ubuntu:~# hostnamectl

Static hostname: kubernetes-master

Transient hostname: ubuntu

Icon name: computer-vm

Chassis: vm

Machine ID: e10f7dfb5ddbed0998fef8705dc11573

Boot ID: 75fe751b96b6495b96ca92dd9379efef

Virtualization: vmware

Operating System: Ubuntu 18.04.2 LTS

Kernel: Linux 4.4.0-142-generic

Architecture: x86-64

Install the three essential Kubernetes tools: kubeadm, kubelet, kubectl

Configure Software Source

# Install system tools
apt-get update && apt-get install -y apt-transport-https
# Install the GPG certificate
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
# Write the software source. Note: the system codename is bionic, but the Aliyun mirror does not currently support it, so we use xenial (16.04) instead
cat << EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

Install kubeadm, kubelet, kubectl

# Install
apt-get update
apt-get install -y kubelet kubeadm kubectl
# Enable kubelet at boot and start it now
systemctl enable kubelet && systemctl start kubelet

- kubeadm: used to initialize the Kubernetes cluster

- kubectl: the command line tool for Kubernetes, used to deploy and manage applications, view resources, and create, delete, and update components

- kubelet: primarily responsible for starting Pods and containers

Synchronize the Time Zone and Time

dpkg-reconfigure tzdata
# Select Asia/Shanghai
# Install ntpdate
apt-get install ntpdate
# Synchronize the system time with network time
ntpdate cn.pool.ntp.org
# Write the system time to the hardware clock
hwclock --systohc
# Confirm the time
date

Restart and Shut Down

# Remember to reboot once before shutting down
reboot
shutdown now

Create Kubernetes Master Node Host

Configure IP

Create and modify configurations

# Modify the configuration to be as follows
apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # Modify to the primary node IP (the logs later in this article use 192.168.211.110)
  advertiseAddress: 192.168.100.130
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: kubernetes-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: ""
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# Google's registry cannot be reached from China, so switch to the Aliyun mirror
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Modify the version number to match the installed kubeadm (the logs later in this article were produced with v1.16.3)
kubernetesVersion: v1.14.1
networking:
  dnsDomain: cluster.local
  # Configure as Calico's default subnet
  podSubnet: "192.168.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Turn on IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
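The configuration pins kubernetesVersion to v1.14.1, while the logs later in this article report v1.16.3, so it is worth checking that the file matches the tools you actually installed. A minimal sketch of such a check follows; the function names `config_version` and `config_matches_version` are hypothetical, and the parsing assumes the key sits on its own line as shown above.

```shell
# Hypothetical helper: print the kubernetesVersion value from a kubeadm config file.
config_version() {
  awk '$1 == "kubernetesVersion:" { print $2; exit }' "$1"
}

# Hypothetical helper: succeed only if the config file targets the given version.
config_matches_version() {
  [ "$(config_version "$1")" = "$2" ]
}
```

For example, `config_matches_version kubeadm.yml "$(kubeadm version -o short)" || echo "version mismatch"` compares the file against the installed kubeadm before running the initialization.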

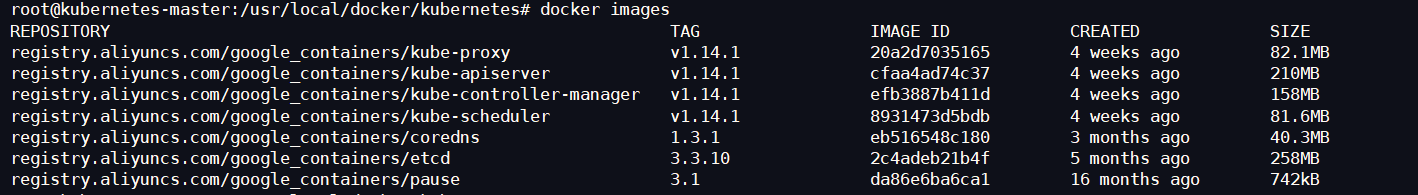

View and pull images

# View the list of required images
kubeadm config images list --config kubeadm.yml
# Pull the images
kubeadm config images pull --config kubeadm.yml

# API server: the entry point for all cluster operations
registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.3
# Controller manager: keeps the cluster in its desired state, for example by recreating failed Pods
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.3
# Scheduler: decides which node each Pod runs on
registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.3
# Network proxy that runs on every node
registry.aliyuncs.com/google_containers/kube-proxy:v1.16.3
registry.aliyuncs.com/google_containers/pause:3.1
# Key-value store that holds the cluster state
registry.aliyuncs.com/google_containers/etcd:3.3.15-0
# Cluster DNS
registry.aliyuncs.com/google_containers/coredns:1.6.2

Install primary node

kubeadm init --config=kubeadm.yml --upload-certs | tee kubeadm-init.log

# The output is as follows

##############################################################

root@kubernetes-master:/usr/local/kubernetes/cluster# kubeadm init --config=kubeadm.yml --upload-certs | tee kubeadm-init.log

[init] Using Kubernetes version: v1.16.3

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.4. Latest validated version: 18.09

[WARNING Hostname]: hostname "kubernetes-master" could not be reached

[WARNING Hostname]: hostname "kubernetes-master": lookup kubernetes-master on 8.8.8.8:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.211.110]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [kubernetes-master localhost] and IPs [192.168.211.110 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [kubernetes-master localhost] and IPs [192.168.211.110 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 63.013185 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

73559de6abcadd6ea91b8b7ebd29c3be58b3ed441ebbb99c3a9709da8457a643

[mark-control-plane] Marking the node kubernetes-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node kubernetes-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.211.110:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:e84858a746767cb660aa546a5966692865181ab52b43e781d421bcd552f85400

#############################################################################################

# Then follow the instructions printed by the installer:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
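Because the output of kubeadm init was saved to kubeadm-init.log with tee, the join command can be recovered from that file later instead of scrolling back through the terminal. The following is a sketch only: the function name `extract_join_command` is hypothetical, and it assumes the log contains the join command in the two-line, backslash-continued form shown above.

```shell
# Hypothetical helper: print the "kubeadm join ..." command from a saved init log
# as a single line, joining backslash-continued lines.
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { capture = 1 }
    capture {
      line = $0
      more = sub(/[ \t]*\\$/, "", line)   # strip a trailing backslash, remember if one was there
      sub(/^[ \t]+/, "", line)            # strip leading indentation
      printf "%s%s", sep, line
      sep = " "
      if (!more) { print ""; exit }       # last line of the command reached
    }
  ' "$1"
}
```

Running `extract_join_command kubeadm-init.log` on the master prints the command to paste on each node.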

Join Worker Nodes to the Cluster

Clone two virtual machines that already have kubeadm, kubelet, and kubectl installed, change the hostname of each one, and make sure swap and the firewall are turned off

- Join the cluster by running the following command on both node hosts

kubeadm join 192.168.211.110:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:e84858a746767cb660aa546a5966692865181ab52b43e781d421bcd552f85400

########Success is as follows#############

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.4. Latest validated version: 18.09

[WARNING Hostname]: hostname "kubernetes-node-02" could not be reached

[WARNING Hostname]: hostname "kubernetes-node-02": lookup kubernetes-node-02 on 8.8.8.8:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.16" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Explanation:

- token

  - You can find the token in the log printed when the master was installed

  - You can print token information with the kubeadm token list command

  - If the token has expired, you can create a new one with the kubeadm token create command

- discovery-token-ca-cert-hash

  - The sha256 value can be found in the log printed when the master was installed

  - The sha256 value can also be computed with the command openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
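Before pasting a token and hash into kubeadm join, a quick format check can catch copy errors. The sketch below is illustrative: the function names are hypothetical, and it only checks the shape of the values (a token of six characters, a dot, and sixteen characters; a hash of sha256: followed by 64 hexadecimal digits), not whether the cluster accepts them.

```shell
# Hypothetical helper: check that a bootstrap token looks like "abcdef.0123456789abcdef".
is_valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: check that a CA cert hash looks like "sha256:<64 hex digits>".
is_valid_ca_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

For example, `is_valid_token abcdef.0123456789abcdef && echo ok` prints ok, while a token with a missing character is rejected.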

Verify success

Back to master server

root@kubernetes-master:/usr/local/kubernetes/cluster# kubectl get nodes
# You can see that two nodes have been added (NotReady is expected until a pod network is installed)
NAME                 STATUS     ROLES    AGE   VERSION
kubernetes-master    NotReady   master   74m   v1.16.3
kubernetes-node-01   NotReady   <none>   11m   v1.16.3
kubernetes-node-02   NotReady   <none>   11m   v1.16.3

View pod status

kubectl get pod -n kube-system -o wide
# The output is as follows
root@kubernetes-master:/usr/local/kubernetes/cluster# kubectl get pod -n kube-system -o wide
NAME                                        READY   STATUS    RESTARTS   AGE   IP                NODE                 NOMINATED NODE   READINESS GATES
coredns-58cc8c89f4-gsl5c                    0/1     Pending   0          78m   <none>            <none>               <none>           <none>
coredns-58cc8c89f4-klblw                    0/1     Pending   0          78m   <none>            <none>               <none>           <none>
etcd-kubernetes-master                      1/1     Running   0          20m   192.168.211.110   kubernetes-master    <none>           <none>
kube-apiserver-kubernetes-master            1/1     Running   0          21m   192.168.211.110   kubernetes-master    <none>           <none>
kube-controller-manager-kubernetes-master   1/1     Running   0          20m   192.168.211.110   kubernetes-master    <none>           <none>
kube-proxy-kstvt                            1/1     Running   0          15m   192.168.211.122   kubernetes-node-02   <none>           <none>
kube-proxy-lj5pd                            1/1     Running   0          78m   192.168.211.110   kubernetes-master    <none>           <none>
kube-proxy-tvh8s                            1/1     Running   0          15m   192.168.211.121   kubernetes-node-01   <none>           <none>
kube-scheduler-kubernetes-master            1/1     Running   0          20m   192.168.211.110   kubernetes-master    <none>           <none>

Configure the Network

Detailed configuration can be found in

Install the Calico Network Plugin

# Run on the master
kubectl apply -f https://docs.projectcalico.org/v3.10/manifests/calico.yaml
# The output is as follows
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.extensions/calico-node created
serviceaccount/calico-node created
deployment.extensions/calico-kube-controllers created
serviceaccount/calico-kube-controllers created

If you see errors saying that a resource cannot be found, the problem may be the Calico version; try upgrading to a newer version

Check to see if the installation was successful

# Run
watch kubectl get pods --all-namespaces
# The output is as follows
NAMESPACE     NAME                                        READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-74c9747c46-z9dsb    1/1     Running   0          93m
kube-system   calico-node-gdcd5                           1/1     Running   0          93m
kube-system   calico-node-tsnfm                           1/1     Running   0          93m
kube-system   calico-node-w4wvt                           1/1     Running   0          93m
kube-system   coredns-58cc8c89f4-gsl5c                    1/1     Running   0          3h23m
kube-system   coredns-58cc8c89f4-klblw                    1/1     Running   0          3h23m
kube-system   etcd-kubernetes-master                      1/1     Running   0          145m
kube-system   kube-apiserver-kubernetes-master            1/1     Running   0          146m
kube-system   kube-controller-manager-kubernetes-master   1/1     Running   0          146m
kube-system   kube-proxy-kstvt                            1/1     Running   0          141m
kube-system   kube-proxy-lj5pd                            1/1     Running   0          3h23m
kube-system   kube-proxy-tvh8s                            1/1     Running   0          140m
kube-system   kube-scheduler-kubernetes-master            1/1     Running   0          146m
# It may take a while for every pod to reach Running while the images download
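Instead of watching the whole table, the pod list can be filtered down to the entries that still need attention. This is a minimal sketch: the function name `pods_not_running` is hypothetical, and it assumes the six-column layout that kubectl get pods --all-namespaces prints above, where STATUS is the fourth column.

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on stdin and
# print "namespace/name status" for every pod that is neither Running nor Completed.
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}
```

Usage: `kubectl get pods --all-namespaces | pods_not_running`. Empty output means every pod has reached Running.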

First Kubernetes container

Basic Commands

# Check component health

kubectl get cs

# The output is as follows

NAME STATUS MESSAGE ERROR

# Scheduler: its main purpose is to schedule Pods onto Nodes

scheduler Healthy ok

# Controller manager: runs the control loops that restore the desired state, for example after a Node goes down

controller-manager Healthy ok

# Service Registration and Discovery

etcd-0 Healthy {"health":"true"}

####################################################

# Check Master Status

kubectl cluster-info

# The output is as follows

# Primary Node Status

Kubernetes master is running at https://192.168.211.110:6443

# DNS status

KubeDNS is running at https://192.168.211.110:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

#####################################################

# Check nodes status

kubectl get nodes

# The output is as follows; a STATUS of Ready is normal

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready master 3h37m v1.16.3

kubernetes-node-01 Ready <none> 154m v1.16.3

kubernetes-node-02 Ready <none> 154m v1.16.3

Run a container instance

# Use kubectl to create two nginx Pods that listen on port 80 (a Pod is the smallest unit in which Kubernetes runs containers)
# --replicas=2 means start two Pods
kubectl run nginx --image=nginx --replicas=2 --port=80
# The output is as follows
kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
deployment.apps/nginx created
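The deprecation warning in this output means that kubectl run will eventually stop creating Deployments. One alternative is to write the Deployment as a manifest and apply it. The sketch below prints such a manifest; the function name `deployment_manifest` and the `app` label are assumptions made for illustration (the kubectl run command above labels its Pods run=nginx instead).

```shell
# Hypothetical helper: print an apps/v1 Deployment manifest.
# Arguments: NAME IMAGE REPLICAS PORT
deployment_manifest() {
  name="$1"; image="$2"; replicas="$3"; port="$4"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  labels:
    app: ${name}
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
      - name: ${name}
        image: ${image}
        ports:
        - containerPort: ${port}
EOF
}
```

Usage: `deployment_manifest nginx nginx 2 80 | kubectl apply -f -` should create a Deployment equivalent to the one in this step. Keeping the manifest in a file also makes the change reviewable and repeatable.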

View the status of pods

kubectl get pods
# The output is as follows; a STATUS of Running means the Pods started successfully
NAME                     READY   STATUS    RESTARTS   AGE
nginx-5578584966-shvgb   1/1     Running   0          7m27s
nginx-5578584966-th5nm   1/1     Running   0          7m27s

View Deployed Services

kubectl get deployment
# The output is as follows
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   2/2     2            2           10m

Expose the Service so that users can access it (similar to publishing a port in Docker)

# Expose port 80 of nginx through a Service of type LoadBalancer (load balancing)
kubectl expose deployment nginx --port=80 --type=LoadBalancer
# The output is as follows
service/nginx exposed

View published services

kubectl get service
# The output is as follows
kubernetes   ClusterIP      10.96.0.1        <none>      443/TCP        3h48m
# Indicates that nginx has been published and exposes port 32603
nginx        LoadBalancer   10.111.238.197   <pending>   80:32603/TCP   84s
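The port that is reachable from outside the cluster is the second number in the PORT(S) column (32603 in the output above). A small sketch for pulling it out of that value follows; the function name `node_port` is hypothetical, and it only handles a single port:nodePort/protocol entry.

```shell
# Hypothetical helper: extract the NodePort from a PORT(S) value such as "80:32603/TCP".
# Prints nothing when the value has no NodePort (for example "443/TCP").
node_port() {
  printf '%s\n' "$1" | sed -n -E 's#^[0-9]+:([0-9]+)/[A-Za-z]+$#\1#p'
}
```

For example, `node_port '80:32603/TCP'` prints 32603. On a live cluster, `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same value without any text parsing.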

View service details

kubectl describe service nginx
# The output is as follows
Name:                     nginx
Namespace:                default
Labels:                   run=nginx
Annotations:              <none>
Selector:                 run=nginx
Type:                     LoadBalancer
IP:                       10.111.238.197
Port:                     <unset>  80/TCP
TargetPort:               80/TCP
NodePort:                 <unset>  32603/TCP
Endpoints:                192.168.140.65:80,192.168.141.193:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

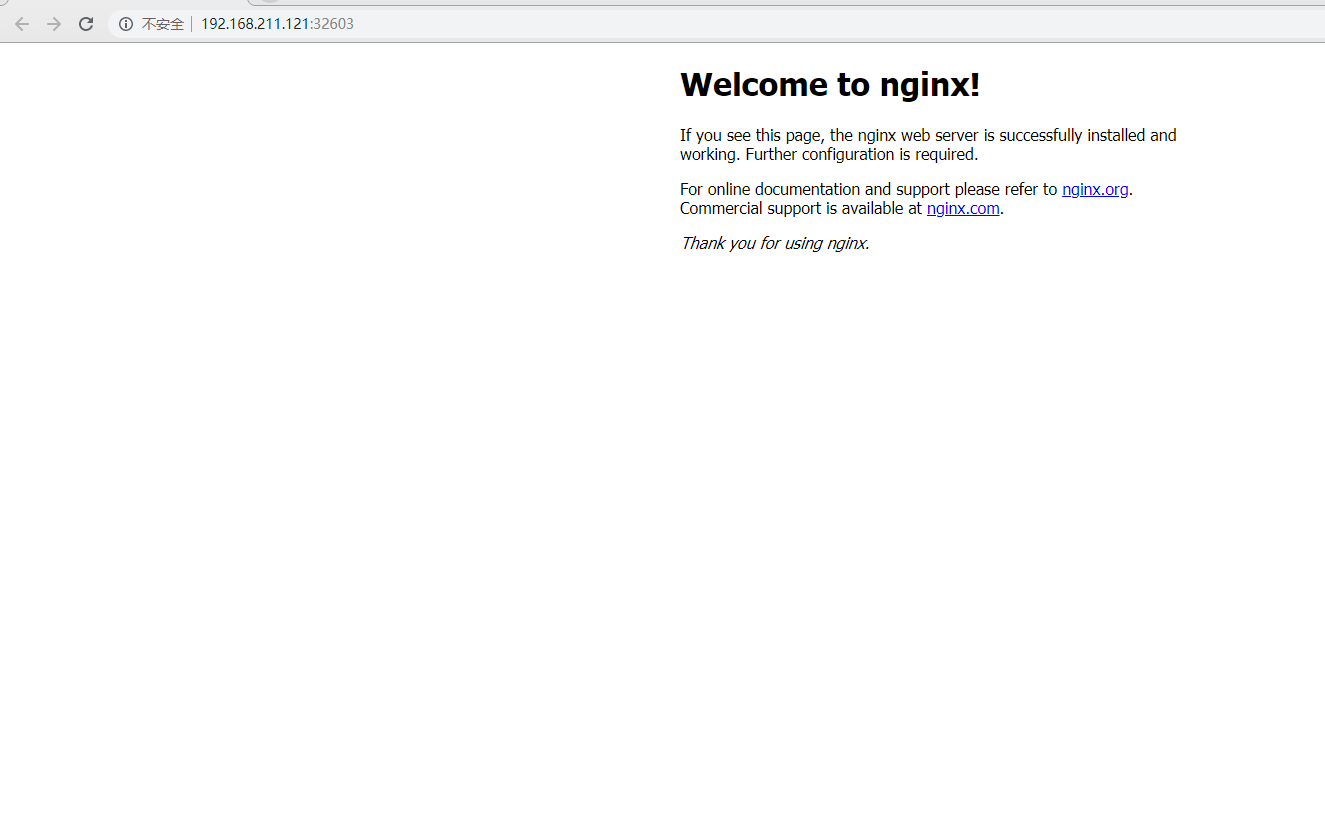

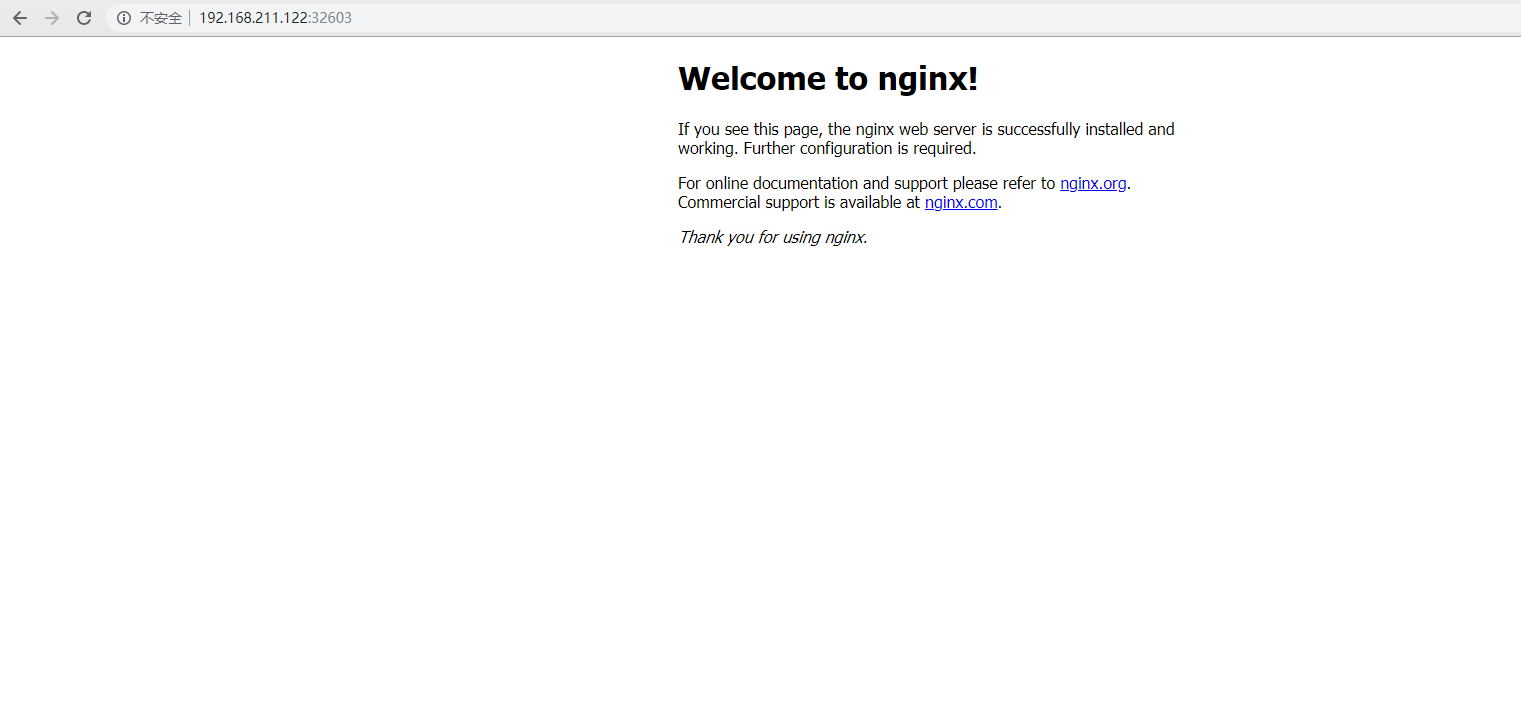

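Verify that nginx was deployed successfully

Browser access (use a node IP together with the NodePort reported by kubectl get service; the port will differ in your cluster)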

http://192.168.211.121:31738/

http://192.168.211.122:31738/

Stop the Service

kubectl delete deployment nginx
# The output is as follows
deployment.apps "nginx" deleted

kubectl delete service nginx
# The output is as follows
service "nginx" deleted

Summary

Rules for job container tasks to run a service at a specified time