1, k8s overview

1. What is k8s

Kubernetes (k8s)

- k8s is a container cluster management system. It is an open source platform that automates the deployment, scaling and maintenance of container clusters

2. k8s applicable scenarios

- A large number of containers spread across hosts need to be managed

- Applications must be deployed rapidly

- Applications must be scaled out rapidly

- New application features must be rolled out seamlessly

- Resources should be saved by making better use of the hardware

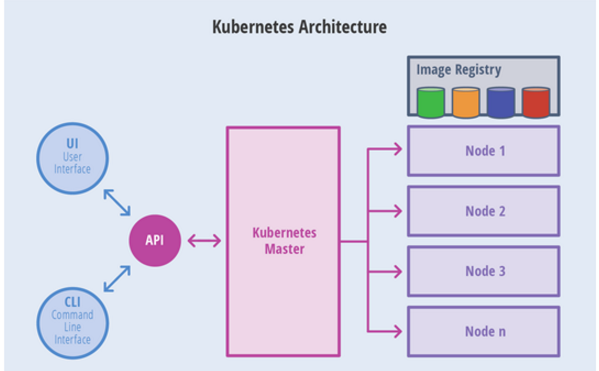

3. Kubernetes architecture

● Core roles

- Master (management node)

- Node (compute node)

- Image (image registry)

4. Roles and functions

1) Master management node

- The Master provides cluster control

- Make global decisions on the cluster

- Detect and respond to cluster events

- The Master is mainly composed of the apiserver, kube-proxy, scheduler, controller-manager and etcd services

2) Node compute node

- The actual node on which the container is running

- Maintain and run Pod and provide the running environment of specific applications

- A node consists of kubelet, kube-proxy and docker

- Compute nodes are designed to scale horizontally; these components run on every node

5. Master node services

APIServer

- The external interface of the whole system, called by clients and by the other components

- Its back-end metadata is stored in etcd (a key-value database)

Scheduler

- Responsible for scheduling resources within the cluster, comparable to a "dispatch office"

Controller manager

- Responsible for managing the controllers, comparable to a "general manager"

6. Overview of etcd

- All metadata generated by Kubernetes during operation is stored in etcd

1) etcd key-value management

- etcd organizes keys in a hierarchical space (similar to directories in a file system); a key specified by the user can also be a bare name

- A key created with a bare name, such as key, is actually placed under the root directory as /key

- You can also specify a directory structure, such as /dir1/dir2/key, and the corresponding directories are created

- etcd is managed automatically by the Kubernetes cluster; users do not need to intervene manually

- etcdctl is the command-line client for etcd

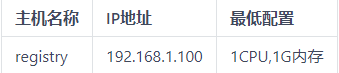
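To make the directory-like key layout described above concrete, here is a minimal sketch in plain bash. It does not talk to etcd at all; `key_parents` is a hypothetical helper written for this article that only prints the directory levels a key such as /dir1/dir2/key implies.

```shell
#!/bin/bash
# key_parents KEY: print every directory level implied by a hierarchical key.
# Hypothetical helper for illustration only; it never contacts etcd.
key_parents() {
    local rest="${1#/}"   # drop the leading slash: dir1/dir2/key
    local path=""
    # Peel off one leading component at a time while a "/" remains.
    while [[ "$rest" == */* ]]; do
        path="$path/${rest%%/*}"   # append the first remaining component
        rest="${rest#*/}"          # and remove it from the remainder
        echo "$path"
    done
}

# A nested key implies two directory levels.
key_parents /dir1/dir2/key
# A bare key sits directly under the root, so nothing is printed.
key_parents /key
```

The first call prints /dir1 and /dir1/dir2, one per line; the second prints nothing because /key has no parent other than the root.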

2, Private image registry

1. Basic understanding

Official website: https://kubernetes.io/

Official download address: https://packages.cloud.google.com/

1) Installation and deployment mode

- Source code installation: download the source code or compiled binary, manually add parameters to start the service, kuberbetes adopts certificate authentication, and a large number of certificates need to be created

- Container deployment: make the official service into an image, download the image and start it

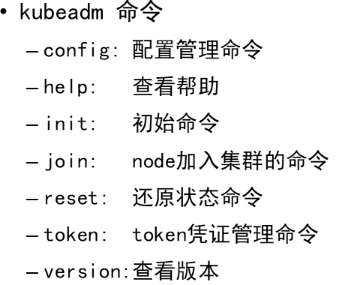

2) The official tool kubedm uses

3) Deployment environment

- Kernel version >= 3.10

- Minimum configuration: 2 CPUs, 2 GB memory

- Nodes cannot have duplicate host names, MAC addresses or product UUIDs

- Uninstall the firewall (firewalld-*)

- Disable swap

- Disable selinux
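Some of the requirements above can be checked before going any further. The following is a rough sketch, not the check that kubeadm itself performs; the function names `kernel_ok`, `cpu_ok` and `swap_disabled` are made up for this example, and the memory, host name and selinux checks are left out.

```shell
#!/bin/bash
# Pre-flight sketch for the requirements listed above (hypothetical helper names).

# kernel_ok RELEASE: succeed if a kernel release string (as printed by `uname -r`) is >= 3.10.
kernel_ok() {
    local release="$1"
    local major minor
    major="${release%%.*}"       # text before the first dot
    minor="${release#*.}"        # drop "major."
    minor="${minor%%[!0-9]*}"    # keep only the leading digits of the minor version
    (( major > 3 || (major == 3 && minor >= 10) ))
}

# cpu_ok COUNT: succeed if at least 2 CPUs are available.
cpu_ok() {
    [ "$1" -ge 2 ]
}

# swap_disabled FILE: succeed if a /proc/swaps-style file lists no active swap,
# that is, it contains at most its header line.
swap_disabled() {
    [ "$(grep -c '' "$1")" -le 1 ]
}

# Report on the current host.
kernel_ok "$(uname -r)" && echo "kernel: ok" || echo "kernel: older than 3.10"
cpu_ok "$(getconf _NPROCESSORS_ONLN)" && echo "cpu: ok" || echo "cpu: fewer than 2"
if [ -r /proc/swaps ]; then
    swap_disabled /proc/swaps && echo "swap: off" || echo "swap: still enabled"
fi
```

The version comparison only looks at the major and minor numbers, which is enough for the 3.10 threshold given here.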

2. Registry initialization

1) Delete the original virtual machine and purchase a new one

2) Install the registry service

[root@registry ~]# yum makecache

[root@registry ~]# yum install -y docker-distribution

[root@registry ~]# systemctl enable --now docker-distribution

3) Initialize the registry using a script

Copy the kubernetes/v1.17.6/registry/myos directory from the cloud disk to the registry server

[root@registry ~]# cd myos

[root@registry myos]# chmod 755 init-img.sh

[root@registry myos]# ./init-img.sh

[root@registry myos]# curl http://192.168.1.100:5000/v2/myos/tags/list

{"name":"myos","tags":["nginx","php-fpm","v1804","httpd"]}

[root@registry myos]# cat init-img.sh

#!/bin/bash

yum install -y docker-ce

mkdir -p /etc/docker

cat >/etc/docker/daemon.json <<'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://hub-mirror.c.163.com"],

"insecure-registries":["192.168.1.100:5000", "registry:5000"]

}

EOF

systemctl enable --now docker.service

systemctl restart docker.service

docker load -i myos.tar.gz

# init apache images

cat >Dockerfile<<'EOF'

FROM myos:latest

ENV LANG=C

WORKDIR /var/www/html/

EXPOSE 80

CMD ["/usr/sbin/httpd", "-DFOREGROUND"]

EOF

docker build -t 192.168.1.100:5000/myos:httpd .

# init php-fpm images

cat >Dockerfile<<'EOF'

FROM myos:latest

EXPOSE 9000

WORKDIR /usr/local/nginx/html

CMD ["/usr/sbin/php-fpm", "--nodaemonize"]

EOF

docker build -t 192.168.1.100:5000/myos:php-fpm .

# init nginx images

cat >Dockerfile<<'EOF'

FROM myos:latest

EXPOSE 80

WORKDIR /usr/local/nginx/html

CMD ["/usr/local/nginx/sbin/nginx", "-g", "daemon off;"]

EOF

docker build -t 192.168.1.100:5000/myos:nginx .

# upload images

rm -f Dockerfile

docker tag myos:latest 192.168.1.100:5000/myos:v1804

for i in v1804 httpd php-fpm nginx; do

    docker push 192.168.1.100:5000/myos:${i}

done
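After the script has run, the tag listing returned by the registry (the curl call shown before the script) can be checked automatically instead of by eye. This is a small sketch that assumes the compact JSON shape shown above; `has_tag` is a hypothetical helper, and a real JSON parser would be more robust than this string matching.

```shell
#!/bin/bash
# has_tag JSON TAG: succeed if the "tags" list of a /v2/<name>/tags/list
# response contains TAG. Assumes compact JSON without extra whitespace.
has_tag() {
    local json="$1" tag="$2"
    # Isolate the text between "tags":[ and the closing bracket.
    local tags="${json#*\"tags\":\[}"
    tags="${tags%%\]*}"
    # Wrap the list in commas so every entry, first and last included, is delimited.
    case ",$tags," in
        *",\"$tag\","*) return 0 ;;
        *) return 1 ;;
    esac
}

# Response copied from the curl output shown earlier in this section.
response='{"name":"myos","tags":["nginx","php-fpm","v1804","httpd"]}'

for t in v1804 httpd php-fpm nginx; do
    has_tag "$response" "$t" && echo "$t: present" || echo "$t: missing"
done
```

Against a live registry the first argument would be the output of the curl command itself, for example `has_tag "$(curl -s http://192.168.1.100:5000/v2/myos/tags/list)" nginx`.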

3, Kubernetes installation

1. Environment preparation

1) Prepare the virtual machines according to the following configuration

- Minimum configuration: 2 CPUs, 2 GB memory

- Uninstall the firewall (firewalld-*)

- Disable selinux and swap

- Configure the yum repository and install kubeadm, kubelet, kubectl and docker-ce

- Configure the docker private image registry and the cgroup driver (daemon.json)

- The master services of Kubernetes run in containers

- To list the images that are required:

kubeadm config images list

2. Kube master installation

1) Firewall related configuration and master introduction

Refer to the previous knowledge points to disable selinux, disable swap and uninstall firewalld-*

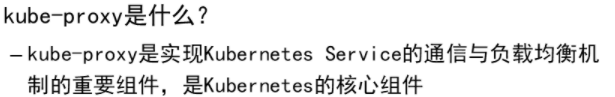

- Kube proxy is an important component to realize the communication and load balancing mechanism of kubernetes Service, and it is the core component of kubernetes

Kube proxy mode

- kubernetes v1.0, user space agent mode

- kubernetes v1.1, iptables mode proxy

- kubernetes v1.8,ipvs proxy mode. If the conditions are not met, return to iptables proxy mode

Enable IPVS mode

- The kernel must support ip_vs,ip_vs_rr,ip_vs_wrr,ip_vs_sh,nf_conntrack_ipv4

- You must have ipvsadm and ipset packages

- Open IPVS parameter in configuration file

mode:ipvs
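Whether the kernel modules listed above are loaded can be checked from the output of lsmod. The sketch below is only an illustration: `missing_ipvs_modules` is a hypothetical helper, the sample module list is invented for the demonstration, and modules compiled directly into the kernel would not show up in lsmod at all.

```shell
#!/bin/bash
# missing_ipvs_modules: read `lsmod`-style output on stdin and print every
# required IPVS module that does not appear in the first column.
missing_ipvs_modules() {
    local required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"
    local loaded m
    loaded="$(awk '{print $1}')"   # module names are the first column
    for m in $required; do
        # -x matches whole lines only, so ip_vs does not match ip_vs_rr.
        grep -qx "$m" <<< "$loaded" || echo "$m"
    done
}

# Invented sample in the layout that lsmod prints (not taken from a real host).
sample='Module                  Size  Used by
ip_vs_rr               12600  0
ip_vs                 145497  2 ip_vs_rr
nf_conntrack_ipv4      15053  3
nf_conntrack          139264  2 ip_vs,nf_conntrack_ipv4'

missing_ipvs_modules <<< "$sample"
```

For this sample the function prints ip_vs_wrr and ip_vs_sh. On a real host the input would come from `lsmod | missing_ipvs_modules`, and any module it prints can be loaded with modprobe.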

2) Configure the yum repository

[root@ecs-proxy ~]# cp -a v1.17.6/k8s-install /var/ftp/localrepo/

[root@ecs-proxy ~]# cd /var/ftp/localrepo/

[root@ecs-proxy localrepo]# createrepo --update .

3) Install the tool packages

Install kubeadm, kubectl, kubelet and docker-ce

[root@master ~]# yum makecache

[root@master ~]# yum install -y kubeadm kubelet kubectl docker-ce

[root@master ~]# mkdir -p /etc/docker

[root@master ~]# vim /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://hub-mirror.c.163.com"],

"insecure-registries":["192.168.1.100:5000", "registry:5000"]

}

[root@master ~]# systemctl enable --now docker kubelet

[root@master ~]# docker info | grep Cgroup

[root@master ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

# Load the br_netfilter module and apply the kernel parameters (lets netfilter see traffic crossing bridge devices)

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl --system

4) Import the images into the private registry

# Copy the images in kubernetes/v1.17.6/base-images on the cloud disk to the master

[root@master ~]# cd base-images/

[root@master base-images]# for i in *.tar.gz;do docker load -i ${i};done

[root@master base-images]# docker images

[root@master base-images]# docker images |awk '$2!="TAG"{print $1,$2}'|while read _f _v;do

    docker tag ${_f}:${_v} 192.168.1.100:5000/${_f##*/}:${_v};

    docker push 192.168.1.100:5000/${_f##*/}:${_v};

    docker rmi ${_f}:${_v};

done

# Verify the result

[root@master base-images]# curl http://192.168.1.100:5000/v2/_catalog
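The retagging loop above relies on the parameter expansion `${_f##*/}`, which strips everything up to the last slash so that only the final component of the repository name is kept. A minimal sketch of the same naming rule follows; `registry_ref` is a hypothetical helper, and the first input name is just an example of an upstream image name, not something listed in this article.

```shell
#!/bin/bash
# registry_ref REPOSITORY TAG: print the name an image receives in the private registry.
registry_ref() {
    local repo="$1" tag="$2"
    local registry="192.168.1.100:5000"
    # ${repo##*/} removes the longest prefix ending in "/", leaving the last path component.
    echo "${registry}/${repo##*/}:${tag}"
}

registry_ref k8s.gcr.io/kube-apiserver v1.17.6
registry_ref quay.io/coreos/flannel v0.12.0-amd64
```

Because the original registry host and any intermediate path are dropped, all images end up directly under 192.168.1.100:5000, which is what the imageRepository setting used later expects.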

5) Set up Tab completion

[root@master ~]# kubectl completion bash >/etc/bash_completion.d/kubectl

[root@master ~]# kubeadm completion bash >/etc/bash_completion.d/kubeadm

[root@master ~]# exit

6) Install the IPVS proxy packages

[root@master ~]# yum install -y ipvsadm ipset

7) System initialization and troubleshooting

Run a dry run and correct any errors according to the prompts

[root@master ~]# vim /etc/hosts

192.168.1.21 master

192.168.1.31 node-0001

192.168.1.32 node-0002

192.168.1.33 node-0003

192.168.1.100 registry

[root@master ~]# kubeadm init --dry-run

8) Deploying with kubeadm

The answer file is in the kubernetes/v1.17.6/config directory of the cloud disk

[root@master ~]# mkdir init;cd init

# Copy kubeadm-init.yaml to the init directory on the master virtual machine

[root@master init]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.1.21

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: master

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: 192.168.1.100:5000

kind: ClusterConfiguration

kubernetesVersion: v1.17.6

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.254.0.0/16

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

[root@master init]# kubeadm init --config=kubeadm-init.yaml |tee master-init.log

# Run the commands shown in the prompt at the end of the output

[root@master init]# mkdir -p $HOME/.kube

[root@master init]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master init]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

9) Verify installation results

[root@master ~]# kubectl version

[root@master ~]# kubectl get componentstatuses

3. Overview of computing node

1)node overview

Docker service:

- Container management

kubelet service

- Responsible for monitoring Pod, including creation, modification, deletion, etc

Kube proxy service

- It is mainly responsible for providing proxy for pod objects

- Realize service communication and load balancing

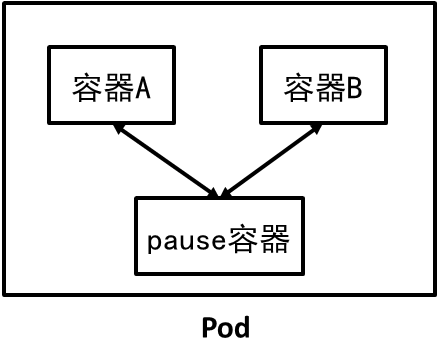

2) Overview of pod

- Pod is the basic unit of Kuberbetes scheduling

- One Pod contains one or more containers

- These containers use the same network namespace and ports

- Pod is the aggregation unit of multiple processes of a service

- As an independent deployment unit, Pod supports horizontal expansion and replication

4. Token management

1) How nodes join a cluster

- Find the sample join command in the master installation log

- A node must have a token provided by the master to join the cluster

kubeadm join 192.168.1.21:6443 --token <token> --discovery-token-ca-cert-hash sha256:<token ca hash>

2) Get token

- A token acts as a credential

- The token CA cert hash is used to verify the authenticity of the CA certificate

3) Create token

[root@master ~]# kubeadm token create --ttl=0 --print-join-command

[root@master ~]# kubeadm token list

4) Get token_hash

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der | openssl dgst -sha256 -hex
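The openssl pipeline prints a line that ends with the hex digest, while kubeadm join expects the form sha256:<hex>. The sketch below shows one way to convert between the two and to sanity-check both values before pasting them into a join command. The helper names `valid_token`, `valid_ca_hash` and `to_ca_hash` are hypothetical; the token and hash values are the ones that appear elsewhere in this article.

```shell
#!/bin/bash
# valid_token TOKEN: succeed if TOKEN has the bootstrap token shape,
# 6 characters, a dot, then 16 characters, all from a-z and 0-9.
valid_token() {
    [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# valid_ca_hash HASH: succeed if HASH is "sha256:" followed by 64 hex digits.
valid_ca_hash() {
    [[ "$1" =~ ^sha256:[0-9a-f]{64}$ ]]
}

# to_ca_hash: read the output line of `openssl dgst -sha256 -hex` on stdin and
# print the last field (the digest) prefixed with "sha256:".
to_ca_hash() {
    awk '{print "sha256:" $NF}'
}

# Example using the digest that appears in node_install.yaml in this article.
digest_line='(stdin)= f46dd7ee29faa3c096cad189b0f9aedf59421d8a881f7623a543065fa6b0088c'
hash="$(echo "$digest_line" | to_ca_hash)"
echo "$hash"

valid_token 'fm6kui.mp8rr3akn74a3nyn' && echo "token looks well formed"
valid_ca_hash "$hash" && echo "hash looks well formed"
```

These checks only validate the format. Whether a token is still valid on the master has to be confirmed with kubeadm token list.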

5. Node installation

Copy kubernetes/v1.17.6/node-install on the cloud disk to the jump host

1) Possible problems with ansible

Empty /root/.ssh/known

Note: the installation of ansible is covered in a separate article of mine

[root@ecs-proxy ~]# cd node-install/

[root@ecs-proxy node-install]# vim files/hosts

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.1.21 master

192.168.1.31 node-0001

192.168.1.32 node-0002

192.168.1.33 node-0003

192.168.1.100 registry

[root@ecs-proxy node-install]# vim node_install.yaml

... ...

  vars:

    master: '192.168.1.21:6443'

    token: 'fm6kui.mp8rr3akn74a3nyn'

    token_hash: 'sha256:f46dd7ee29faa3c096cad189b0f9aedf59421d8a881f7623a543065fa6b0088c'

... ...

[root@ecs-proxy node-install]# ansible-playbook node_install.yaml

[root@ecs-proxy node-install]# ls

ansible.cfg files hostlist.yaml node_install.yaml

[root@ecs-proxy node-install]# cat node_install.yaml

---

- name: kubernetes node install

  hosts:

  - nodes

  vars:

    master: '192.168.1.21:6443'

    token: 'boimvb.tyi17p87uiu7br9g'

    token_hash: 'sha256:e62b4ecf06b88566ee2be4529be02b0d76a8453730b1481c118c25668696dded'

  tasks:

  - name: disable swap

    lineinfile:

      path: /etc/fstab

      regexp: 'swap'

      state: absent

    notify: disable swap

  - name: Ensure SELinux is set to disabled mode

    lineinfile:

      path: /etc/selinux/config

      regexp: '^SELINUX='

      line: SELINUX=disabled

    notify: disable selinux

  - name: remove the firewalld

    yum:

      name:

      - firewalld

      - firewalld-filesystem

      state: absent

  - name: install k8s node tools

    yum:

      name:

      - kubeadm

      - kubelet

      - docker-ce

      - ipvsadm

      - ipset

      state: present

      update_cache: yes

  - name: Create a directory if it does not exist

    file:

      path: /etc/docker

      state: directory

      mode: '0755'

  - name: Copy file with /etc/hosts

    copy:

      src: files/hosts

      dest: /etc/hosts

      owner: root

      group: root

      mode: '0644'

  - name: Copy file with /etc/docker/daemon.json

    copy:

      src: files/daemon.json

      dest: /etc/docker/daemon.json

      owner: root

      group: root

      mode: '0644'

  - name: Copy file with /etc/sysctl.d/k8s.conf

    copy:

      src: files/k8s.conf

      dest: /etc/sysctl.d/k8s.conf

      owner: root

      group: root

      mode: '0644'

    notify: enable sysctl args

  - name: enable k8s node service

    service:

      name: "{{ item }}"

      state: started

      enabled: yes

    with_items:

    - docker

    - kubelet

  - name: check node state

    stat:

      path: /etc/kubernetes/kubelet.conf

    register: result

  - name: node join

    shell: kubeadm join '{{ master }}' --token '{{ token }}' --discovery-token-ca-cert-hash '{{ token_hash }}'

    when: result.stat.exists == False

  handlers:

  - name: disable swap

    shell: swapoff -a

  - name: disable selinux

    shell: setenforce 0

  - name: enable sysctl args

    shell: sysctl --system

2) Verify installation

[root@master ~]# kubectl get nodes

NAME        STATUS     ROLES    AGE     VERSION

master      NotReady   master   130m    v1.17.6

node-0001   NotReady   <none>   2m14s   v1.17.6

node-0002   NotReady   <none>   2m15s   v1.17.6

node-0003   NotReady   <none>   2m9s    v1.17.6
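All nodes report NotReady at this point because the network plug-in has not been installed yet; that is the subject of the next section. To watch for the change without reading the table by eye, the STATUS column can be filtered with awk. This is a small sketch in which `not_ready_nodes` is a hypothetical helper and the sample is the output shown above.

```shell
#!/bin/bash
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the name
# of every node whose STATUS column is not exactly "Ready". A combined status
# such as "Ready,SchedulingDisabled" is therefore reported as well.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'   # NR > 1 skips the header row
}

# Output copied from the verification step above.
sample='NAME        STATUS     ROLES    AGE     VERSION
master      NotReady   master   130m    v1.17.6
node-0001   NotReady   <none>   2m14s   v1.17.6
node-0002   NotReady   <none>   2m15s   v1.17.6
node-0003   NotReady   <none>   2m9s    v1.17.6'

not_ready_nodes <<< "$sample"
```

On the master the same filter would be used as `kubectl get nodes | not_ready_nodes`; an empty result means every node is Ready.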

6. Network plug-in installation configuration

Copy the kubernetes/v1.17.6/flannel directory from the cloud disk to the master

1) Upload the image to the private registry

[root@ecs-proxy flannel]# ls

flannel.tar.gz kube-flannel.yml

[root@master ~]# cd flannel

[root@master flannel]# docker load -i flannel.tar.gz

[root@master flannel]# docker tag quay.io/coreos/flannel:v0.12.0-amd64 192.168.1.100:5000/flannel:v0.12.0-amd64

[root@master flannel]# docker push 192.168.1.100:5000/flannel:v0.12.0-amd64

2) Modify the configuration file and install

[root@master flannel]# vim kube-flannel.yml

128: "Network": "10.244.0.0/16",

172: image: 192.168.1.100:5000/flannel:v0.12.0-amd64

186: image: 192.168.1.100:5000/flannel:v0.12.0-amd64

227-end: delete

[root@master flannel]# kubectl apply -f kube-flannel.yml

Note: because the file is long, it is listed at the end of this article
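The two image lines edited by hand above could also be rewritten with sed. The sketch below is one possible approach rather than the procedure used in this article: `use_private_registry` is a hypothetical helper that works on standard input, and it only handles the image reference, not the removal of the DaemonSets for other architectures.

```shell
#!/bin/bash
# use_private_registry: read a manifest on stdin and point every flannel image
# reference at the private registry instead of quay.io.
use_private_registry() {
    sed 's#image: quay\.io/coreos/flannel:#image: 192.168.1.100:5000/flannel:#'
}

# Demonstration on a single manifest line.
printf '        image: quay.io/coreos/flannel:v0.12.0-amd64\n' | use_private_registry
```

Applied to the whole file this would look like `use_private_registry < kube-flannel.yml > kube-flannel.local.yml`, where the output file name is only an example. Lines that do not mention the flannel image pass through unchanged.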

3) Verify the results

[root@master flannel]# kubectl get nodes

NAME        STATUS   ROLES    AGE    VERSION

master      Ready    master   26h    v1.17.6

node-0001   Ready    <none>   151m   v1.17.6

node-0002   Ready    <none>   152m   v1.17.6

node-0003   Ready    <none>   153m   v1.17.6

kube-flannel.yml, the network plug-in configuration file

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

  name: psp.flannel.unprivileged

  annotations:

    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

  privileged: false

  volumes:

    - configMap

    - secret

    - emptyDir

    - hostPath

  allowedHostPaths:

    - pathPrefix: "/etc/cni/net.d"

    - pathPrefix: "/etc/kube-flannel"

    - pathPrefix: "/run/flannel"

  readOnlyRootFilesystem: false

  # Users and groups

  runAsUser:

    rule: RunAsAny

  supplementalGroups:

    rule: RunAsAny

  fsGroup:

    rule: RunAsAny

  # Privilege Escalation

  allowPrivilegeEscalation: false

  defaultAllowPrivilegeEscalation: false

  # Capabilities

  allowedCapabilities: ['NET_ADMIN']

  defaultAddCapabilities: []

  requiredDropCapabilities: []

  # Host namespaces

  hostPID: false

  hostIPC: false

  hostNetwork: true

  hostPorts:

  - min: 0

    max: 65535

  # SELinux

  seLinux:

    # SELinux is unused in CaaSP

    rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

  name: flannel

rules:

  - apiGroups: ['extensions']

    resources: ['podsecuritypolicies']

    verbs: ['use']

    resourceNames: ['psp.flannel.unprivileged']

  - apiGroups:

      - ""

    resources:

      - pods

    verbs:

      - get

  - apiGroups:

      - ""

    resources:

      - nodes

    verbs:

      - list

      - watch

  - apiGroups:

      - ""

    resources:

      - nodes/status

    verbs:

      - patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

  name: flannel

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: flannel

subjects:

- kind: ServiceAccount

  name: flannel

  namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

  name: flannel

  namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

  name: kube-flannel-cfg

  namespace: kube-system

  labels:

    tier: node

    app: flannel

data:

  cni-conf.json: |

    {

      "name": "cbr0",

      "cniVersion": "0.3.1",

      "plugins": [

        {

          "type": "flannel",

          "delegate": {

            "hairpinMode": true,

            "isDefaultGateway": true

          }

        },

        {

          "type": "portmap",

          "capabilities": {

            "portMappings": true

          }

        }

      ]

    }

  net-conf.json: |

    {

      "Network": "10.244.0.0/16",

      "Backend": {

        "Type": "vxlan"

      }

    }

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: kube-flannel-ds-amd64

  namespace: kube-system

  labels:

    tier: node

    app: flannel

spec:

  selector:

    matchLabels:

      app: flannel

  template:

    metadata:

      labels:

        tier: node

        app: flannel

    spec:

      affinity:

        nodeAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

            nodeSelectorTerms:

              - matchExpressions:

                  - key: kubernetes.io/os

                    operator: In

                    values:

                      - linux

                  - key: kubernetes.io/arch

                    operator: In

                    values:

                      - amd64

      hostNetwork: true

      tolerations:

      - operator: Exists

        effect: NoSchedule

      serviceAccountName: flannel

      initContainers:

      - name: install-cni

        image: quay.io/coreos/flannel:v0.12.0-amd64

        command:

        - cp

        args:

        - -f

        - /etc/kube-flannel/cni-conf.json

        - /etc/cni/net.d/10-flannel.conflist

        volumeMounts:

        - name: cni

          mountPath: /etc/cni/net.d

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      containers:

      - name: kube-flannel

        image: quay.io/coreos/flannel:v0.12.0-amd64

        command:

        - /opt/bin/flanneld

        args:

        - --ip-masq

        - --kube-subnet-mgr

        resources:

          requests:

            cpu: "100m"

            memory: "50Mi"

          limits:

            cpu: "100m"

            memory: "50Mi"

        securityContext:

          privileged: false

          capabilities:

            add: ["NET_ADMIN"]

        env:

        - name: POD_NAME

          valueFrom:

            fieldRef:

              fieldPath: metadata.name

        - name: POD_NAMESPACE

          valueFrom:

            fieldRef:

              fieldPath: metadata.namespace

        volumeMounts:

        - name: run

          mountPath: /run/flannel

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      volumes:

        - name: run

          hostPath:

            path: /run/flannel

        - name: cni

          hostPath:

            path: /etc/cni/net.d

        - name: flannel-cfg

          configMap:

            name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: kube-flannel-ds-arm64

  namespace: kube-system

  labels:

    tier: node

    app: flannel

spec:

  selector:

    matchLabels:

      app: flannel

  template:

    metadata:

      labels:

        tier: node

        app: flannel

    spec:

      affinity:

        nodeAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

            nodeSelectorTerms:

              - matchExpressions:

                  - key: kubernetes.io/os

                    operator: In

                    values:

                      - linux

                  - key: kubernetes.io/arch

                    operator: In

                    values:

                      - arm64

      hostNetwork: true

      tolerations:

      - operator: Exists

        effect: NoSchedule

      serviceAccountName: flannel

      initContainers:

      - name: install-cni

        image: quay.io/coreos/flannel:v0.12.0-arm64

        command:

        - cp

        args:

        - -f

        - /etc/kube-flannel/cni-conf.json

        - /etc/cni/net.d/10-flannel.conflist

        volumeMounts:

        - name: cni

          mountPath: /etc/cni/net.d

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      containers:

      - name: kube-flannel

        image: quay.io/coreos/flannel:v0.12.0-arm64

        command:

        - /opt/bin/flanneld

        args:

        - --ip-masq

        - --kube-subnet-mgr

        resources:

          requests:

            cpu: "100m"

            memory: "50Mi"

          limits:

            cpu: "100m"

            memory: "50Mi"

        securityContext:

          privileged: false

          capabilities:

            add: ["NET_ADMIN"]

        env:

        - name: POD_NAME

          valueFrom:

            fieldRef:

              fieldPath: metadata.name

        - name: POD_NAMESPACE

          valueFrom:

            fieldRef:

              fieldPath: metadata.namespace

        volumeMounts:

        - name: run

          mountPath: /run/flannel

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      volumes:

        - name: run

          hostPath:

            path: /run/flannel

        - name: cni

          hostPath:

            path: /etc/cni/net.d

        - name: flannel-cfg

          configMap:

            name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: kube-flannel-ds-arm

  namespace: kube-system

  labels:

    tier: node

    app: flannel

spec:

  selector:

    matchLabels:

      app: flannel

  template:

    metadata:

      labels:

        tier: node

        app: flannel

    spec:

      affinity:

        nodeAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

            nodeSelectorTerms:

              - matchExpressions:

                  - key: kubernetes.io/os

                    operator: In

                    values:

                      - linux

                  - key: kubernetes.io/arch

                    operator: In

                    values:

                      - arm

      hostNetwork: true

      tolerations:

      - operator: Exists

        effect: NoSchedule

      serviceAccountName: flannel

      initContainers:

      - name: install-cni

        image: quay.io/coreos/flannel:v0.12.0-arm

        command:

        - cp

        args:

        - -f

        - /etc/kube-flannel/cni-conf.json

        - /etc/cni/net.d/10-flannel.conflist

        volumeMounts:

        - name: cni

          mountPath: /etc/cni/net.d

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      containers:

      - name: kube-flannel

        image: quay.io/coreos/flannel:v0.12.0-arm

        command:

        - /opt/bin/flanneld

        args:

        - --ip-masq

        - --kube-subnet-mgr

        resources:

          requests:

            cpu: "100m"

            memory: "50Mi"

          limits:

            cpu: "100m"

            memory: "50Mi"

        securityContext:

          privileged: false

          capabilities:

            add: ["NET_ADMIN"]

        env:

        - name: POD_NAME

          valueFrom:

            fieldRef:

              fieldPath: metadata.name

        - name: POD_NAMESPACE

          valueFrom:

            fieldRef:

              fieldPath: metadata.namespace

        volumeMounts:

        - name: run

          mountPath: /run/flannel

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      volumes:

        - name: run

          hostPath:

            path: /run/flannel

        - name: cni

          hostPath:

            path: /etc/cni/net.d

        - name: flannel-cfg

          configMap:

            name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: kube-flannel-ds-ppc64le

  namespace: kube-system

  labels:

    tier: node

    app: flannel

spec:

  selector:

    matchLabels:

      app: flannel

  template:

    metadata:

      labels:

        tier: node

        app: flannel

    spec:

      affinity:

        nodeAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

            nodeSelectorTerms:

              - matchExpressions:

                  - key: kubernetes.io/os

                    operator: In

                    values:

                      - linux

                  - key: kubernetes.io/arch

                    operator: In

                    values:

                      - ppc64le

      hostNetwork: true

      tolerations:

      - operator: Exists

        effect: NoSchedule

      serviceAccountName: flannel

      initContainers:

      - name: install-cni

        image: quay.io/coreos/flannel:v0.12.0-ppc64le

        command:

        - cp

        args:

        - -f

        - /etc/kube-flannel/cni-conf.json

        - /etc/cni/net.d/10-flannel.conflist

        volumeMounts:

        - name: cni

          mountPath: /etc/cni/net.d

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      containers:

      - name: kube-flannel

        image: quay.io/coreos/flannel:v0.12.0-ppc64le

        command:

        - /opt/bin/flanneld

        args:

        - --ip-masq

        - --kube-subnet-mgr

        resources:

          requests:

            cpu: "100m"

            memory: "50Mi"

          limits:

            cpu: "100m"

            memory: "50Mi"

        securityContext:

          privileged: false

          capabilities:

            add: ["NET_ADMIN"]

        env:

        - name: POD_NAME

          valueFrom:

            fieldRef:

              fieldPath: metadata.name

        - name: POD_NAMESPACE

          valueFrom:

            fieldRef:

              fieldPath: metadata.namespace

        volumeMounts:

        - name: run

          mountPath: /run/flannel

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      volumes:

        - name: run

          hostPath:

            path: /run/flannel

        - name: cni

          hostPath:

            path: /etc/cni/net.d

        - name: flannel-cfg

          configMap:

            name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: kube-flannel-ds-s390x

  namespace: kube-system

  labels:

    tier: node

    app: flannel

spec:

  selector:

    matchLabels:

      app: flannel

  template:

    metadata:

      labels:

        tier: node

        app: flannel

    spec:

      affinity:

        nodeAffinity:

          requiredDuringSchedulingIgnoredDuringExecution:

            nodeSelectorTerms:

              - matchExpressions:

                  - key: kubernetes.io/os

                    operator: In

                    values:

                      - linux

                  - key: kubernetes.io/arch

                    operator: In

                    values:

                      - s390x

      hostNetwork: true

      tolerations:

      - operator: Exists

        effect: NoSchedule

      serviceAccountName: flannel

      initContainers:

      - name: install-cni

        image: quay.io/coreos/flannel:v0.12.0-s390x

        command:

        - cp

        args:

        - -f

        - /etc/kube-flannel/cni-conf.json

        - /etc/cni/net.d/10-flannel.conflist

        volumeMounts:

        - name: cni

          mountPath: /etc/cni/net.d

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      containers:

      - name: kube-flannel

        image: quay.io/coreos/flannel:v0.12.0-s390x

        command:

        - /opt/bin/flanneld

        args:

        - --ip-masq

        - --kube-subnet-mgr

        resources:

          requests:

            cpu: "100m"

            memory: "50Mi"

          limits:

            cpu: "100m"

            memory: "50Mi"

        securityContext:

          privileged: false

          capabilities:

            add: ["NET_ADMIN"]

        env:

        - name: POD_NAME

          valueFrom:

            fieldRef:

              fieldPath: metadata.name

        - name: POD_NAMESPACE

          valueFrom:

            fieldRef:

              fieldPath: metadata.namespace

        volumeMounts:

        - name: run

          mountPath: /run/flannel

        - name: flannel-cfg

          mountPath: /etc/kube-flannel/

      volumes:

        - name: run

          hostPath:

            path: /run/flannel

        - name: cni

          hostPath:

            path: /etc/cni/net.d

        - name: flannel-cfg

          configMap:

            name: kube-flannel-cfg