1, Environment introduction

Two machines running CentOS 7.4:

| IP address | Host name (role) |
|---|---|
| 10.1.31.36 | master (Kubernetes master) |
| 10.1.31.24 | node1 (Kubernetes node) |

2, Preparations

The following steps are to be performed on both hosts.

Docker is needed to pull the container images, so install Docker first.

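1. Check Kubernetes and Docker version compatibility

The Docker versions validated for each Kubernetes release are listed in the release notes in the Kubernetes repository:

https://github.com/kubernetes/kubernetes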

2. Install Docker

If you install Docker from the default CentOS repository, the version may be too old to use with Kubernetes v1.13.0 or later.

Here we install Docker CE 18.06.3 from the official Docker repository:

yum install -y -q yum-utils device-mapper-persistent-data lvm2 > /dev/null 2>&1
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo > /dev/null 2>&1
yum install -y -q docker-ce-18.06.3.ce > /dev/null 2>&1

Start Docker and check its version:

systemctl enable docker && systemctl start docker
docker version
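To confirm that Docker came from the Docker CE repository rather than the older CentOS package, the installed server version can be compared against a minimum. The sketch below is only an illustration: the function names `version_ge` and `check_docker_version` are hypothetical, and the comparison relies on GNU `sort -V` ordering.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker or Kubernetes).

# version_ge A B: succeed if version A >= version B, using GNU sort -V ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_docker_version MIN: compare the Docker server version against MIN.
check_docker_version() {
  local min="$1" current
  # --format prints only the requested field of "docker version"
  current="$(docker version --format '{{.Server.Version}}' 2>/dev/null)" || {
    echo "docker is not installed or the daemon is not running" >&2
    return 1
  }
  # Strip suffixes such as "-ce" so that 18.06.3-ce compares as 18.06.3
  current="${current%%-*}"
  if version_ge "$current" "$min"; then
    echo "Docker $current is OK (>= $min)"
  else
    echo "Docker $current is older than $min" >&2
    return 1
  fi
}
```

For example, `check_docker_version 18.06.3` prints an OK message when the installed server version is 18.06.3 or newer.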

3. Turn off the firewall

If the firewall is enabled on the system, stop and disable it:

[root@localhost]# systemctl stop firewalld
[root@localhost]# systemctl disable firewalld

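4. Disable SELinux

[root@localhost]# setenforce 0
[root@localhost]# sed -i '/^SELINUX=/cSELINUX=disabled' /etc/selinux/config

Edit /etc/selinux/config rather than /etc/sysconfig/selinux: the latter is normally a symlink to it, and sed -i replaces the symlink with a regular file, leaving the real configuration unchanged.

Or edit /etc/selinux/config by hand:

[root@localhost]# vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled. setenforce 0 only switches SELinux to permissive mode until the next boot; the setting in the file takes effect after a restart. Check the status after rebooting:

[root@localhost]# sestatus
SELinux status: disabled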
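The same edit can be wrapped in a small idempotent function, which is convenient when the preparation steps are scripted for both hosts. This is a minimal sketch: `disable_selinux_config` is a hypothetical helper name, and it assumes the CentOS 7 layout where /etc/selinux/config is the real file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: disable_selinux_config [FILE]
# Sets SELINUX=disabled in FILE (default /etc/selinux/config, the real file
# behind the /etc/sysconfig/selinux symlink on CentOS 7).
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  if grep -q '^SELINUX=' "$cfg"; then
    # Rewrite the existing SELINUX= line; SELINUXTYPE= does not match "^SELINUX="
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  else
    # No SELINUX= line yet: append one
    echo 'SELINUX=disabled' >> "$cfg"
  fi
}
# Usage (as root):
#   setenforce 0
#   disable_selinux_config
```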

5. Turn off swap

kubeadm does not support swap: by default, the kubelet refuses to start while swap is enabled.

Run:

[root@localhost yum.repos.d]# swapoff -a
# swapoff -a lasts only until the next reboot; to make it permanent, edit /etc/fstab and comment out the swap entry
[root@localhost yum.repos.d]# cat /etc/fstab
# /etc/fstab
# Created by anaconda on Fri Oct 18 00:03:01 2019
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
UUID=1a73fb9d-095b-4302-aa87-4a49783c0133 / xfs defaults 0 0
UUID=e293a721-617e-4cdd-8cb9-d2e895427b03 /boot xfs defaults 0 0
#UUID=73e88a54-4574-4f0a-8a4f-d57d2bc2ee46 swap swap defaults 0 0
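Because swapoff -a only lasts until the next reboot, the swap entry in /etc/fstab also has to be commented out. A minimal sketch that does this with awk, assuming a standard fstab where the third field is the filesystem type; the helper name `comment_out_swap` is hypothetical, and it keeps a copy of the original file as fstab.bak.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment_out_swap [FSTAB]
# Prefixes every active swap entry with "#" and keeps a backup as FSTAB.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak" || return 1
  # Non-comment lines whose third field (filesystem type) is "swap" get a leading "#";
  # all other lines, including existing comments, are printed unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
# Usage (as root):
#   swapoff -a
#   comment_out_swap
#   free -m    # the Swap line should show 0 total
```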

6. Modify the host name

Set the host names master and node1 on the respective hosts (and node2 on a third host, if you have one):

[root@localhost kubernetes]# cat >> /etc/sysconfig/network << EOF
hostname=master
EOF
[root@localhost kubernetes]# cat /etc/sysconfig/network
# Created by anaconda
hostname=master

Or use hostnamectl, running the matching command on each host:

[root@localhost]# hostnamectl set-hostname master
[root@localhost]# hostnamectl set-hostname node1
[root@localhost]# hostnamectl set-hostname node2

7. Configure /etc/hosts

Run on each host:

[root@localhost]# echo "192.168.3.100 master" >> /etc/hosts
[root@localhost]# echo "192.168.3.101 node1" >> /etc/hosts
[root@localhost]# echo "192.168.3.102 node2" >> /etc/hosts
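Appending with echo >> adds duplicate lines if the step is run twice. A minimal idempotent variant, assuming the same IP-to-name mapping as above; the helper name `add_host_entry` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_host_entry IP NAME [HOSTS_FILE]
# Appends "IP NAME" unless NAME is already mapped on a non-comment line.
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  # awk exits 0 if NAME appears as a host-name field (field 2 onwards)
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit found ? 0 : 1 }' "$hosts"; then
    echo "$name already present in $hosts"
  else
    echo "$ip $name" >> "$hosts"
  fi
}
# Usage (as root), with the addresses used above:
#   add_host_entry 192.168.3.100 master
#   add_host_entry 192.168.3.101 node1
#   add_host_entry 192.168.3.102 node2
```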

8. Configure kernel parameters

Pass bridged IPv4 and IPv6 traffic to the iptables chains, and enable IP forwarding:

[root@localhost]# cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
net.ipv4.ip_forward = 1
EOF

Apply the configuration:

[root@localhost]# sysctl --system
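The net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so sysctl --system may report them as missing on a fresh host. A minimal sketch that writes the same file and shows the module step, assuming root access; `write_k8s_sysctl` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write_k8s_sysctl [FILE]
# Writes the bridge and forwarding settings from step 8 (default /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
net.ipv4.ip_forward = 1
EOF
}
# Usage (as root):
#   modprobe br_netfilter                              # creates /proc/sys/net/bridge/*
#   echo br_netfilter > /etc/modules-load.d/k8s.conf   # load the module at boot as well
#   write_k8s_sysctl
#   sysctl --system
#   sysctl net.bridge.bridge-nf-call-iptables          # should print "= 1"
```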

9. Configure CentOS YUM sources

Configure the Tencent Cloud mirrors (in China) for the CentOS base and EPEL repositories:

[root@localhost]# mkdir -p /etc/yum.repos.d/repos.bak
[root@localhost]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/repos.bak
[root@localhost]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.cloud.tencent.com/repo/centos7_base.repo
[root@localhost]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.cloud.tencent.com/repo/epel-7.repo
[root@localhost]# yum clean all && yum makecache

Configure the Alibaba Cloud mirror (in China) for the Kubernetes repository:

[root@localhost]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

10. Install kubelet, kubeadm and kubectl

To install a specific version:

[root@localhost]# yum install -y kubelet-1.16.0 kubeadm-1.16.0 kubectl-1.16.0 --disableexcludes=kubernetes

To install the latest available version:

[root@localhost]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Note: --disableexcludes=kubernetes tells yum to ignore any exclude= list configured for the kubernetes repository, so these packages are not filtered out.

Start kubelet:

[root@localhost]# systemctl enable kubelet
[root@localhost]# systemctl start kubelet

Until kubeadm init (or kubeadm join) gives it a configuration, kubelet restarts every few seconds; this is expected.

Other useful commands:

systemctl status kubelet
systemctl stop kubelet
systemctl restart kubelet

11. Get the list of images required for initialization

[root@master ~]# cd /home
[root@master home]# ls
apache-zookeeper-3.5.6-bin jdk1.8.0_231 kafka_2.12-2.3.1
[root@master home]# kubeadm config images list
W1115 18:05:31.432259 17146 version.go:101] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
W1115 18:05:31.436956 17146 version.go:102] falling back to the local client version: v1.16.3
k8s.gcr.io/kube-apiserver:v1.16.3
k8s.gcr.io/kube-controller-manager:v1.16.3
k8s.gcr.io/kube-scheduler:v1.16.3
k8s.gcr.io/kube-proxy:v1.16.3
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.15-0
k8s.gcr.io/coredns:1.6.2

12. Generate the default kubeadm.conf file

Run the following command to generate kubeadm.conf in the current directory:

[root@master home]# kubeadm config print init-defaults > kubeadm.conf

13. Change the image repository in kubeadm.conf

By default, kubeadm pulls images from Google's registry k8s.gcr.io, which is not reachable from mainland China. Change the address to a domestic mirror, such as Alibaba Cloud's.

Edit kubeadm.conf and set imageRepository to:

imageRepository: registry.aliyuncs.com/google_containers

The kubernetesVersion in kubeadm.conf must match the installed kubeadm version, neither higher nor lower. If they differ, change kubernetesVersion in kubeadm.conf:

[root@master home]# vi kubeadm.conf

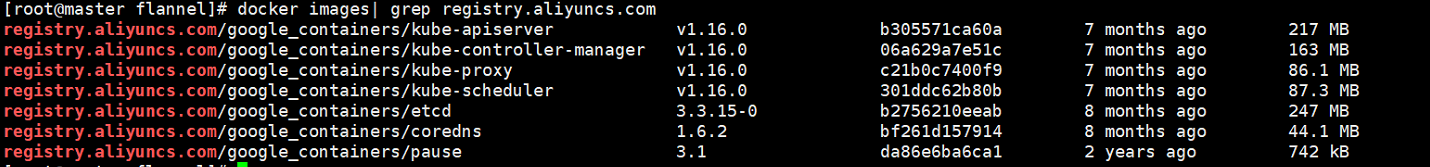
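Instead of editing kubeadm.conf in vi, both values can be set with sed. This sketch assumes the file was produced by kubeadm config print init-defaults, so imageRepository and kubernetesVersion each sit on their own unindented line; `patch_kubeadm_conf` is a hypothetical helper name. Passing the output of kubeadm version -o short keeps kubernetesVersion in step with the installed kubeadm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: patch_kubeadm_conf FILE VERSION [REPOSITORY]
# Rewrites the top-level imageRepository and kubernetesVersion lines in FILE.
patch_kubeadm_conf() {
  local conf="$1" version="$2" repo="${3:-registry.aliyuncs.com/google_containers}"
  # "|" is used as the sed delimiter because the repository contains "/"
  sed -i \
    -e "s|^imageRepository:.*|imageRepository: ${repo}|" \
    -e "s|^kubernetesVersion:.*|kubernetesVersion: ${version}|" \
    "$conf"
}
# Usage, in the directory containing kubeadm.conf:
#   patch_kubeadm_conf kubeadm.conf "$(kubeadm version -o short)"
#   grep -E '^(imageRepository|kubernetesVersion):' kubeadm.conf
```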

14. Download the required images

Run in the directory containing kubeadm.conf:

[root@master home]# kubeadm config images pull --config kubeadm.conf
[root@master home]# docker images

15. Re-tag the Docker images

After the images are downloaded, tag each of them with the k8s.gcr.io prefix. Without these tags, the later installation runs into problems, because kubeadm init, when run without the --config file, looks for the images under k8s.gcr.io. After tagging, you can delete the images tagged registry.aliyuncs.com.

Re-tag:

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.0 k8s.gcr.io/kube-apiserver:v1.16.0
docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.16.0 k8s.gcr.io/kube-proxy:v1.16.0
docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.0 k8s.gcr.io/kube-controller-manager:v1.16.0
docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.0 k8s.gcr.io/kube-scheduler:v1.16.0
docker tag registry.aliyuncs.com/google_containers/etcd:3.3.15-0 k8s.gcr.io/etcd:3.3.15-0
docker tag registry.aliyuncs.com/google_containers/coredns:1.6.2 k8s.gcr.io/coredns:1.6.2
docker tag registry.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
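The pull, tag and cleanup steps can also be driven from the image list that kubeadm itself reports, so no image name or version has to be typed by hand. This is a minimal sketch, assuming the Alibaba Cloud mirror used above and that docker and kubeadm are on the PATH; the helper names `mirror_image` and `retag_from_mirror` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of kubeadm or Docker).
MIRROR="registry.aliyuncs.com/google_containers"

# mirror_image IMAGE [MIRROR]: map k8s.gcr.io/NAME:TAG to MIRROR/NAME:TAG
mirror_image() {
  local image="$1" mirror="${2:-$MIRROR}"
  echo "${mirror}/${image#k8s.gcr.io/}"
}

# retag_from_mirror VERSION: for every image kubeadm needs for VERSION, pull it
# from the mirror, tag it under k8s.gcr.io, then remove the mirror tag
# (the layers stay, because the k8s.gcr.io tag still references them).
retag_from_mirror() {
  local version="$1" image src
  for image in $(kubeadm config images list --kubernetes-version "$version"); do
    src="$(mirror_image "$image")"
    docker pull "$src" && docker tag "$src" "$image" && docker rmi "$src"
  done
}
# Usage (on the master): retag_from_mirror v1.16.0
```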

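3, Deploy the master node

1. Initialize the Kubernetes cluster

Run:

[root@master ~]# kubeadm init --kubernetes-version=v1.16.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.3.100

There must be no space after --pod-network-cidr=. The value 10.244.0.0/16 matches the default network in the flannel manifest used later in this guide.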

Output of a successful run:

[init] Using Kubernetes version: v1.16.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.3.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.3.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.3.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 209.010947 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: vmuuvn.q7q14t5135zm9xk0

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.3.100:6443 --token vmuuvn.q7q14t5135zm9xk0 \

--discovery-token-ca-cert-hash sha256:c302e2c93d2fe86be7f817534828224469a19c5ccbbf9b246f3695127c3ea611

Record the final kubeadm join command from the output; it is needed when joining nodes:

kubeadm join 192.168.3.100:6443 --token vmuuvn.q7q14t5135zm9xk0 --discovery-token-ca-cert-hash sha256:c302e2c93d2fe86be7f817534828224469a19c5ccbbf9b246f3695127c3ea611
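If the init output is saved to a file, for example with kubeadm init ... 2>&1 | tee kubeadm-init.log (the log file name is only an example), the join command can be recovered from it later. A minimal awk sketch; `extract_join_cmd` is a hypothetical helper, and it assumes the join command is printed on one line, optionally continued by a trailing backslash as in the output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract_join_cmd LOG
# Prints the last "kubeadm join" command found in LOG as a single line,
# merging a backslash-continued second line.
extract_join_cmd() {
  awk '
    /kubeadm join/ { cmd = $0; grab = 1 }                 # start of the command
    grab && !/kubeadm join/ { cmd = cmd " " $0 }          # continuation line
    grab && !/\\[[:space:]]*$/ { grab = 0 }               # no trailing "\" means done
    END {
      gsub(/\\/, "", cmd)                                 # drop continuation backslashes
      gsub(/[[:space:]]+/, " ", cmd)                      # squeeze whitespace
      sub(/^ /, "", cmd); sub(/ $/, "", cmd)
      print cmd
    }
  ' "$1"
}
# Usage: extract_join_cmd kubeadm-init.log > join.sh   # then run join.sh on each node as root
```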

If init fails, reset kubeadm and run init again:

[root@master ~]# kubeadm reset

After a successful initialization, the following files are generated in /etc/kubernetes/. If they are present, there is no need to initialize again.

[root@master home]# ll /etc/kubernetes/
total 36
-rw------- 1 root root 5453 Nov 15 17:42 admin.conf
-rw------- 1 root root 5485 Nov 15 17:42 controller-manager.conf
-rw------- 1 root root 5457 Nov 15 17:42 kubelet.conf
drwxr-xr-x. 2 root root 113 Nov 15 17:42 manifests
drwxr-xr-x 3 root root 4096 Nov 15 17:42 pki
-rw------- 1 root root 5437 Nov 15 17:42 scheduler.conf

Note: kubeadm reset cleans up the environment. After running it on the master, you must run init again. If nodes have already joined the cluster, reset each node, restart kubelet, and join again:

[root@node1 home]#kubeadm reset

[root@node1 home]#systemctl daemon-reload && systemctl restart kubelet

[root@node1 home]# kubeadm join 192.168.3.100:6443 --token vmuuvn.q7q14t5135zm9xk0 \

--discovery-token-ca-cert-hash sha256:c302e2c93d2fe86be7f817534828224469a19c5ccbbf9b246f3695127c3ea611

2. Configure permissions

Run the following as the current user. kubectl reads the cluster address and credentials from $HOME/.kube/config, a copy of /etc/kubernetes/admin.conf; without it, kubectl cannot connect to the cluster.

[root@master home]# mkdir -p $HOME/.kube
[root@master home]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master home]# chown $(id -u):$(id -g) $HOME/.kube/config

3. Deploy the flannel network (install the pod network add-on)

Kubernetes supports many network add-ons, such as the classic flannel and Calico. flannel is used here.

Check the available flannel versions at:

https://github.com/coreos/flannel

Run the following command to start the installation. Note: this URL points to the manifest for version 0.11.0-amd64.

[root@master home]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml

Output:

clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "PodSecurityPolicy" in version "extensions/v1beta1"
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"
unable to recognize "https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml": no matches for kind "DaemonSet" in version "extensions/v1beta1"

The "unable to recognize" errors occur because Kubernetes 1.16 no longer serves PodSecurityPolicy and DaemonSet under the extensions/v1beta1 API, which this older manifest still uses. In addition, the default flannel manifest pulls its image from a registry outside China, so the pull may keep failing from inside China. Many online articles overlook this step, which causes the flannel deployment to fail. The solution is as follows:

Visit: https://blog.51cto.com/14040759/2492764

[root@master home]# mkdir flannel
# Paste the content from that page into the following file and save it
[root@master home]# vim flannel/kube-flannel.yml
[root@master home]# kubectl apply -f flannel/kube-flannel.yml

Get the pod list to check the status:

[root@master home]# kubectl get pods --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-5644d7b6d9-5zsbb 0/1 Pending 0 6h17m
kube-system coredns-5644d7b6d9-pvsm9 0/1 Pending 1 6h17m
kube-system etcd-master 1/1 Running 0 6h16m
kube-system kube-apiserver-master 1/1 Running 0 6h16m
kube-system kube-controller-manager-master 1/1 Running 0 6h16m
kube-system kube-flannel-ds-amd64-7x9fv 1/1 Running 0 6h16m
kube-system kube-flannel-ds-amd64-n6kpw 1/1 Running 0 6h14m
kube-system kube-proxy-8zl82 1/1 Running 0 6h14m
kube-system kube-proxy-gqmxx 1/1 Running 0 6h17m
kube-system kube-scheduler-master 1/1 Running 0 6h16m

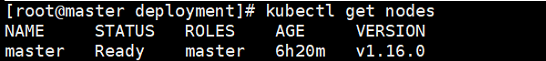
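To spot pods that are not fully up, such as the Pending coredns pods in the list above, the output can be filtered on the READY and STATUS columns. A minimal sketch, assuming the default column layout of kubectl get pods --all-namespaces; `not_ready_pods` is a hypothetical helper name, and pods of completed jobs would also be listed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: not_ready_pods
# Reads "kubectl get pods --all-namespaces" output on stdin and prints
# NAMESPACE/NAME READY STATUS for pods that are not fully ready and Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")                                  # READY column, e.g. "0/1"
    if (r[1] != r[2] || $4 != "Running") print $1 "/" $2 " " $3 " " $4
  }'
}
# Usage: kubectl get pods --all-namespaces | not_ready_pods
```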

View the nodes:

[root@master ~]# kubectl get nodes

4. Use the master as a worker node (optional)

In a cluster initialized with kubeadm, pods are not scheduled to the master node for security reasons; that is, the master does not take part in the workload, so its resources go unused. To make the master a worker node as well, run the following on the master:

[root@master ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

To prevent pods from being scheduled on the master again:

[root@master ~]# kubectl taint nodes master node-role.kubernetes.io/master=true:NoSchedule

About taints:

A cluster built with kubeadm adds a taint to the master node by default, which is why ordinary pods are not scheduled to the master. To view it (master is the node name):

[root@master ~]# kubectl describe node master | grep Taints

The output contains the taint node-role.kubernetes.io/master:NoSchedule, which means the master node carries a taint with the effect NoSchedule: pods without a matching toleration are not scheduled to that node. Besides NoSchedule, there are two other effects:

PreferNoSchedule: the soft version of NoSchedule; the scheduler tries to avoid placing pods on the tainted node, but does not guarantee it.

NoExecute: once the taint takes effect, pods already running on the node that do not have a matching toleration are evicted.
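Filtering with grep shows only the first taint, because additional taints appear on indented continuation lines of kubectl describe node. A minimal awk sketch that prints every taint on its own line, assuming that describe output layout; `node_taints` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: node_taints
# Reads "kubectl describe node NAME" output on stdin and prints one taint
# (key[=value]:effect) per line; prints nothing when Taints is <none>.
node_taints() {
  awk '
    /^Taints:/ {
      sub(/^Taints:[[:space:]]*/, "")
      grab = ($0 != "<none>")
      if (grab) print
      next
    }
    # Further taints follow on indented lines directly below "Taints:"
    grab && /^[[:space:]]+[^[:space:]]/ { sub(/^[[:space:]]+/, ""); print; next }
    { grab = 0 }
  '
}
# Usage: kubectl describe node master | node_taints
```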

4, Deploy the worker node

Join the cluster:

[root@node1 ~]# kubeadm join 10.1.31.36:6443 --token vmuuvn.q7q14t5135zm9xk0 \

--discovery-token-ca-cert-hash sha256:c302e2c93d2fe86be7f817534828224469a19c5ccbbf9b246f3695127c3ea611

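If the join command was not recorded when the master was initialized, run kubeadm token create --print-join-command on the master to print a new one. This is also needed after the original token expires; tokens created by kubeadm init are valid for 24 hours by default.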

5, Uninstall k8s

1. Clean up the k8s cluster (delete all nodes)

$ kubectl delete node --all

2. Stop the services

$ for service in kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler; do
  systemctl stop $service
done
$ yum -y remove kubernetes # if it's registered as a service

3. Uninstall the tools

yum remove -y kubelet kubeadm kubectl

4. Clean up files and folders

rm -rf ~/.kube/
rm -rf /etc/kubernetes/
rm -rf /etc/systemd/system/kubelet.service.d
rm -rf /etc/systemd/system/kubelet.service
rm -rf /usr/bin/kube*
rm -rf /etc/cni
rm -rf /opt/cni
rm -rf /var/lib/etcd
rm -rf /var/etcd
rm -rf /var/lib/cni/flannel/* && rm -rf /var/lib/cni/networks/cbr0/* && ip link delete cni0
rm -rf /var/lib/cni/networks/cni0/*

5. Clean up containers

docker rm -f $(docker ps -qa)
# Delete all data volumes
docker volume rm $(docker volume ls -q)
# List all containers and data volumes again to make sure nothing is left
docker ps -a
docker volume ls

6, Verification

[root@master ~]# kubectl get nodes -o wide
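For a scripted check at the end, the STATUS column of kubectl get nodes can be tested directly. A minimal sketch, assuming the default output layout (NAME first, then STATUS); `all_nodes_ready` is a hypothetical helper name. A node with scheduling disabled shows Ready,SchedulingDisabled and is reported as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: all_nodes_ready
# Reads "kubectl get nodes" (or "-o wide") output on stdin; succeeds only if every
# node's STATUS is exactly "Ready", otherwise prints the other nodes and fails.
all_nodes_ready() {
  awk 'NR > 1 && $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
       END { exit bad ? 1 : 0 }'
}
# Usage: kubectl get nodes | all_nodes_ready && echo "all nodes are Ready"
```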