Roles

| IP | Role | Operating system | Remarks |
|---|---|---|---|
| 192.168.10.210 | master | CentOS 7 | haproxy, keepalived MASTER |
| 192.168.10.211 | master | CentOS 7 | haproxy, keepalived BACKUP |
| 192.168.10.212 | master | CentOS 7 | haproxy, keepalived BACKUP |
| 192.168.10.213 | node | CentOS 7 | worker node only |

The three masters share the keepalived virtual IP (VIP) 192.168.10.200. Clients reach the API servers through haproxy on that VIP at port 8443.
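Later steps refer to these hosts as node210 to node213: the certSANs in init.yaml and the `kubectl get nodes` output both use those names. The post does not show how the names are assigned. The following is a minimal sketch that assumes each host is named `node` followed by the last octet of its IP. The helper `hosts_entries` is hypothetical and only prints /etc/hosts lines.

```shell
# Hypothetical helper (not part of the original setup): print one
# "IP nodeNNN" line per IP argument, where NNN is the IP's last octet.
# This is the naming scheme assumed from node210..node213.
hosts_entries() {
  for ip in "$@"; do
    printf '%s node%s\n' "$ip" "${ip##*.}"
  done
}
```

For example, run `hosts_entries 192.168.10.210 192.168.10.211 192.168.10.212 192.168.10.213 >> /etc/hosts` on every host. Then run `hostnamectl set-hostname node210` on 210, and the matching command on each other machine.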

Host preparation (steps 1 to 10 are run on all four hosts):

1. Install the required packages and update the system

yum -y install vim-enhanced wget curl net-tools conntrack-tools bind-utils ipvsadm ipset
yum -y update

2. Disable SELinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux
sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

3. Disable unneeded services

systemctl disable auditd
systemctl disable postfix
systemctl disable irqbalance
systemctl disable remote-fs
systemctl disable tuned
systemctl disable rhel-configure
systemctl disable firewalld

4. Install kernel-lt (kernel 4.4.178)

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
yum -y install https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install kernel-lt -y
grub2-set-default 0
grub2-mkconfig -o /etc/grub2.cfg

Reboot afterwards so the machine boots into the new kernel.

5. Tune kernel parameters

cat >>/etc/sysctl.conf <<EOF
net.ipv4.ip_forward = 1
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.tcp_max_syn_backlog = 65536
net.core.netdev_max_backlog = 32768
net.core.somaxconn = 32768
net.core.wmem_default = 8388608
net.core.rmem_default = 8388608
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.ipv4.tcp_timestamps = 0
net.ipv4.tcp_synack_retries = 2
net.ipv4.tcp_syn_retries = 2
net.ipv4.tcp_tw_recycle = 1
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_mem = 94500000 915000000 927000000
net.ipv4.tcp_max_orphans = 3276800
net.ipv4.ip_local_port_range = 1024 65535
EOF
# the net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
sysctl -p
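The block above appends with `>>`, so running it twice leaves duplicate keys in /etc/sysctl.conf. `sysctl -p` applies the file from top to bottom, so the last occurrence of a key wins. The hypothetical helper `sysctl_conf_value` below reports the value a key will end up with. You can compare that with the live value from `sysctl -n KEY`. It is a sketch that assumes simple `key = value` lines.

```shell
# Hypothetical helper (not part of the original setup):
# sysctl_conf_value FILE KEY
# Print the value set by the LAST assignment of KEY in a sysctl.conf-style FILE.
# Lines starting with # or ; are skipped; whitespace around "=" is trimmed.
# Exits 1 if KEY is never assigned.
sysctl_conf_value() {
  awk -v key="$2" '
    /^[ \t]*[#;]/ { next }
    {
      eq = index($0, "=")
      if (eq == 0) next
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { val = v; found = 1 }
    }
    END { if (found) print val; else exit 1 }
  ' "$1"
}
```

For example, `sysctl_conf_value /etc/sysctl.conf net.ipv4.ip_forward` should print `1`, and so should `sysctl -n net.ipv4.ip_forward`.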

6. Raise the limits on open files and processes

cat >>/etc/security/limits.conf <<EOF
* soft memlock unlimited
* hard memlock unlimited
* soft nofile 65535
* hard nofile 65535
* soft nproc 65535
* hard nproc 65535
EOF
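pam_limits applies these values only when a new login session starts. Open a fresh shell before expecting `ulimit -n` to show them. To check what the file itself contains, the hypothetical helper `limits_value` prints the value of the last `DOMAIN TYPE ITEM VALUE` line that matches. Running the step twice leaves duplicate lines, which this makes easy to spot.

```shell
# Hypothetical helper (not part of the original setup):
# limits_value FILE DOMAIN TYPE ITEM
# Print the VALUE of the last "DOMAIN TYPE ITEM VALUE" line in a
# limits.conf-style FILE; exits 1 if no line matches.
limits_value() {
  awk -v d="$2" -v t="$3" -v i="$4" '
    $1 ~ /^#/ { next }
    $1 == d && $2 == t && $3 == i { val = $4; found = 1 }
    END { if (found) print val; else exit 1 }
  ' "$1"
}
```

For example, `limits_value /etc/security/limits.conf '*' hard nofile` should print `65535`.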

7. Disable swap (by default kubelet will not start while swap is enabled)

echo "swapoff -a" >>/etc/rc.local
chmod +x /etc/rc.local
swapoff -a
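kubelet refuses to start while swap is on, unless it runs with `--fail-swap-on=false`, so it is worth confirming that swap really is off. /proc/swaps has a header line followed by one line per active swap area. The following is a sketch of a hypothetical check, `swap_in_use`, that succeeds when any swap area is listed.

```shell
# Hypothetical helper (not part of the original setup):
# swap_in_use [FILE]
# Exit 0 if FILE (default /proc/swaps) lists at least one active swap area,
# i.e. it has a non-empty line after the header; exit 1 otherwise.
swap_in_use() {
  awk 'NR > 1 && NF > 0 { found = 1 } END { exit !found }' "${1:-/proc/swaps}"
}
```

After `swapoff -a`, `swap_in_use && echo "swap is still on"` should print nothing.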

8. Install docker

yum -y install docker
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://e2a6d434.m.daocloud.io
sed -i 's#,##g' /etc/docker/daemon.json
service docker start
chkconfig docker on

9. Load the IPVS kernel modules (ip_vs_rr, ip_vs_wrr, ip_vs_sh and others) needed by kube-proxy in ipvs mode

cat <<'EOF' >/etc/sysconfig/modules/ipvs.modules
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack_ipv4"
# load each module only if it exists for the running kernel
for kernel_module in ${ipvs_modules}; do
    if /sbin/modinfo -F filename "${kernel_module}" > /dev/null 2>&1; then
        /sbin/modprobe "${kernel_module}"
    fi
done
EOF

chmod +x /etc/sysconfig/modules/ipvs.modules
sh /etc/sysconfig/modules/ipvs.modules

10. Install kubeadm, kubelet, kubectl

mkdir /etc/yum.repos.d/bak && mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.cloud.tencent.com/repo/centos7_base.repo
wget -O /etc/yum.repos.d/epel.repo http://mirrors.cloud.tencent.com/repo/epel-7.repo
yum clean all && yum makecache
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# pin the packages to the kubernetesVersion used in init.yaml (v1.15.1)
yum install -y kubelet-1.15.1 kubeadm-1.15.1 kubectl-1.15.1
systemctl enable kubelet
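init.yaml sets kubernetesVersion to v1.15.1. Installing the newest kubeadm from the repository can therefore produce a kubeadm that is too new for that control plane. The simplest safe rule is to keep kubeadm on the same minor release. The following is a sketch of a check with hypothetical helper names. It assumes version strings look like `v1.15.1`, which is the format `kubeadm version -o short` prints.

```shell
# Hypothetical helpers (not part of the original setup).
# k8s_minor VERSION: print "MAJOR.MINOR" from a version such as v1.15.1 or 1.15.1.
k8s_minor() {
  echo "$1" | sed -E 's/^v?([0-9]+)\.([0-9]+).*/\1.\2/'
}

# same_minor A B: exit 0 if both versions share the same MAJOR.MINOR.
same_minor() {
  [ "$(k8s_minor "$1")" = "$(k8s_minor "$2")" ]
}
```

For example, `same_minor "$(kubeadm version -o short)" v1.15.1 || echo "kubeadm does not match kubernetesVersion"`.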

11. Install and configure haproxy (on 210, 211 and 212)

yum -y install haproxy

cat <<EOF >/etc/haproxy/haproxy.cfg
global
    # /etc/sysconfig/syslog
    #
    # local2.*    /var/log/haproxy.log
    #
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

defaults
    mode                tcp
    log                 global
    retries             3
    timeout connect     10s
    timeout client      1m
    timeout server      1m

frontend kubernetes
    bind *:8443
    mode tcp
    default_backend kubernetes_master

backend kubernetes_master
    balance roundrobin
    server 210 192.168.10.210:6443 check maxconn 2000
    server 211 192.168.10.211:6443 check maxconn 2000
    server 212 192.168.10.212:6443 check maxconn 2000
EOF

systemctl start haproxy
systemctl enable haproxy

12. Install and configure keepalived

yum -y install keepalived

cat <<EOF >/etc/keepalived/keepalived.conf
global_defs {
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    # vrrp_strict is deliberately left out: with it enabled the VIP cannot be pinged
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    # MASTER on 210; change to BACKUP on the other masters
    state MASTER
    # change to the name of this host's network card (on some machines it is eth0)
    interface ens32
    virtual_router_id 51
    # must be different on each machine
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.10.200
    }
}
EOF

service keepalived start
systemctl enable keepalived

Notes:

1. On the other masters (211 and 212), set state to BACKUP and give priority a value lower than the MASTER's.

2. Set interface to the name of the host's network card.

3. When keepalived was first configured, the VIP was bound to one machine but could not be pinged, and its ports were unreachable. Commenting out the vrrp_strict parameter fixed this. In strict mode, keepalived adds firewall rules that block traffic to the VIP.
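Notes 1 and 2 mean the vrrp_instance block differs between hosts only in state, priority and interface. The following is a sketch of a hypothetical helper that prints the block with those values filled in. All other settings are copied from the configuration above. The BACKUP priorities in the usage example are illustrative assumptions; any values below the MASTER's 100 satisfy note 1.

```shell
# Hypothetical helper (not part of the original setup):
# render_vrrp_instance STATE PRIORITY INTERFACE VIP
# Print a vrrp_instance block matching the keepalived.conf above, with the
# per-host values substituted.
render_vrrp_instance() {
  cat <<EOF
vrrp_instance VI_1 {
    state $1
    interface $3
    virtual_router_id 51
    priority $2
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $4
    }
}
EOF
}
```

For example, on 211 `render_vrrp_instance BACKUP 90 ens32 192.168.10.200` prints the block for that host, and on 212 the same call with priority 80. The output replaces the vrrp_instance section of /etc/keepalived/keepalived.conf on that machine.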

13. Create the kubeadm configuration file (on 210)

cat << EOF > /root/init.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
localAPIEndpoint:
  advertiseAddress: 192.168.10.210
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: node210
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
clusterName: kubernetes
kubernetesVersion: v1.15.1
certificatesDir: /etc/kubernetes/pki
controllerManager: {}
controlPlaneEndpoint: "192.168.10.200:8443"
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  timeoutForControlPlane: 4m0s
  certSANs:
  - "node210"
  - "node211"
  - "node212"
  - "192.168.10.210"
  - "192.168.10.211"
  - "192.168.10.212"
  - "192.168.10.200"
  - "127.0.0.1"
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.253.0.0/16
  podSubnet: 172.60.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
EOF
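Before running `kubeadm init`, check that podSubnet (172.60.0.0/16) and serviceSubnet (10.253.0.0/16) overlap neither each other nor the hosts' own network. The host network is assumed here to be 192.168.10.0/24, based on the IPs in the table. The following is a POSIX sh sketch with hypothetical helper names. Two CIDR ranges overlap exactly when their network addresses are equal under the shorter of the two prefix lengths.

```shell
# Hypothetical helpers (not part of the original setup).
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_overlap NET1/LEN1 NET2/LEN2: exit 0 if the two ranges overlap.
cidr_overlap() {
  a_ip=${1%/*}; a_len=${1#*/}
  b_ip=${2%/*}; b_len=${2#*/}
  # compare under the shorter (wider) prefix
  len=$a_len
  if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  a_net=$(( $(ip_to_int "$a_ip") & mask ))
  b_net=$(( $(ip_to_int "$b_ip") & mask ))
  [ "$a_net" -eq "$b_net" ]
}
```

For example, `cidr_overlap 172.60.0.0/16 10.253.0.0/16 && echo "podSubnet overlaps serviceSubnet"` prints nothing for the values in init.yaml.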

# Initialize the cluster on 210
kubeadm init --config=/root/init.yaml

Save the information printed when it finishes:

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.10.200:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4f542d1d54cbbf2961bed56fac7fe8a195ffef5f33f2ae699908ab0379d7f568 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.200:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4f542d1d54cbbf2961bed56fac7fe8a195ffef5f33f2ae699908ab0379d7f568

On 210:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
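The `--discovery-token-ca-cert-hash` value in the join commands is the SHA-256 digest of the cluster CA's public key, taken over its DER-encoded SubjectPublicKeyInfo. If the init output was not saved, you can recompute the hash on 210 from /etc/kubernetes/pki/ca.crt with openssl. The kubeadm join documentation shows a similar pipeline; this version uses `openssl pkey` instead of `openssl rsa`, so it does not assume an RSA key. Also note that the bootstrap token in init.yaml expires after 24 hours (`ttl: 24h0m0s`). After that, `kubeadm token create --print-join-command` prints a fresh worker join command. The following is a sketch that assumes openssl is installed; the function name is hypothetical.

```shell
# Hypothetical helper (not part of the original setup):
# ca_cert_hash CA_CERT
# Print "sha256:<hex>", the SHA-256 of the DER-encoded public key
# (SubjectPublicKeyInfo) of CA_CERT, as used by
# kubeadm join --discovery-token-ca-cert-hash.
ca_cert_hash() {
  hex=$(openssl x509 -pubkey -noout -in "$1" \
    | openssl pkey -pubin -outform der \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  printf 'sha256:%s\n' "$hex"
}
```

On 210, `ca_cert_hash /etc/kubernetes/pki/ca.crt` should print the same value that appears in the saved join commands.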

14. On 210, copy the shared certificate files to 211 and 212

ssh 192.168.10.211 "mkdir -p /etc/kubernetes/pki/etcd"

ssh 192.168.10.212 "mkdir -p /etc/kubernetes/pki/etcd"

scp -r /etc/kubernetes/admin.conf 192.168.10.211:/etc/kubernetes/admin.conf

scp -r /etc/kubernetes/admin.conf 192.168.10.212:/etc/kubernetes/admin.conf

scp -r /etc/kubernetes/pki/{ca.*,sa.*,front*} 192.168.10.211:/etc/kubernetes/pki/

scp -r /etc/kubernetes/pki/{ca.*,sa.*,front*} 192.168.10.212:/etc/kubernetes/pki/

scp -r /etc/kubernetes/pki/etcd/ca.* 192.168.10.211:/etc/kubernetes/pki/etcd/

scp -r /etc/kubernetes/pki/etcd/ca.* 192.168.10.212:/etc/kubernetes/pki/etcd/

15. On 211 and 212, run the control-plane join command that was printed when the cluster was initialized. This completes the master deployment on 211 and 212:

kubeadm join 192.168.10.200:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4f542d1d54cbbf2961bed56fac7fe8a195ffef5f33f2ae699908ab0379d7f568 \
    --control-plane

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

16. On 213, join the cluster as a worker node:

kubeadm join 192.168.10.200:8443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:4f542d1d54cbbf2961bed56fac7fe8a195ffef5f33f2ae699908ab0379d7f568

17. Install the pod network. Calico is used here. The CIDR in the manifest must match podSubnet in init.yaml (172.60.0.0/16).

curl -s https://docs.projectcalico.org/v3.7/manifests/calico.yaml -O
sed 's#192.168.0.0/16#172.60.0.0/16#g' calico.yaml | kubectl apply -f -

If you prefer flannel instead:

curl -s https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml -O
sed 's#10.244.0.0/16#172.60.0.0/16#g' kube-flannel.yml | kubectl apply -f -
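To confirm that the substituted manifest really carries podSubnet, write the sed output to a file instead of piping it straight into kubectl, then read back the pool CIDR. The following is a sketch with a hypothetical helper. It assumes the v3.7 manifest layout, where the `value:` line directly follows `- name: CALICO_IPV4POOL_CIDR`; other Calico versions may differ.

```shell
# Hypothetical helper (not part of the original setup):
# calico_pool_cidr MANIFEST
# Print the value of the CALICO_IPV4POOL_CIDR env var, assuming its
# "value:" line immediately follows the "- name:" line.
calico_pool_cidr() {
  awk '
    /name: *CALICO_IPV4POOL_CIDR/ { grab = 1; next }
    grab {
      sub(/^[ \t]*value:[ \t]*/, "")
      gsub(/"/, "")
      print
      exit
    }
  ' "$1"
}
```

For example, first run `sed 's#192.168.0.0/16#172.60.0.0/16#g' calico.yaml > calico-pod.yaml`. Then `calico_pool_cidr calico-pod.yaml` should print `172.60.0.0/16` before you run `kubectl apply -f calico-pod.yaml`.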

18. View Nodes

[root@node210 ~]# kubectl get nodes
NAME      STATUS   ROLES    AGE    VERSION
node210   Ready    master   3h7m   v1.15.1
node211   Ready    master   175m   v1.15.1
node212   Ready    master   176m   v1.15.1
node213   Ready    <none>   129m   v1.15.1
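Nodes usually show NotReady until the network plugin is running. `kubectl wait --for=condition=Ready node --all --timeout=300s` can block until they are ready. For a quick scripted check of the table above, the following is a sketch of a hypothetical filter that lists every node whose STATUS is not exactly `Ready`.

```shell
# Hypothetical helper (not part of the original setup):
# not_ready_nodes: read "kubectl get nodes" output on stdin and print the
# NAME of every node whose STATUS column is not exactly "Ready"
# (so "Ready,SchedulingDisabled" is also reported).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes` prints nothing once all four nodes are Ready.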

19. Test Services

kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort

[root@node210 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
nginx        NodePort    10.253.103.72   <none>        80:30230/TCP   151m
kubernetes   ClusterIP   10.253.0.1      <none>        443/TCP        3h9m

curl 192.168.10.210:30230

If the service works, the nginx welcome page is returned:

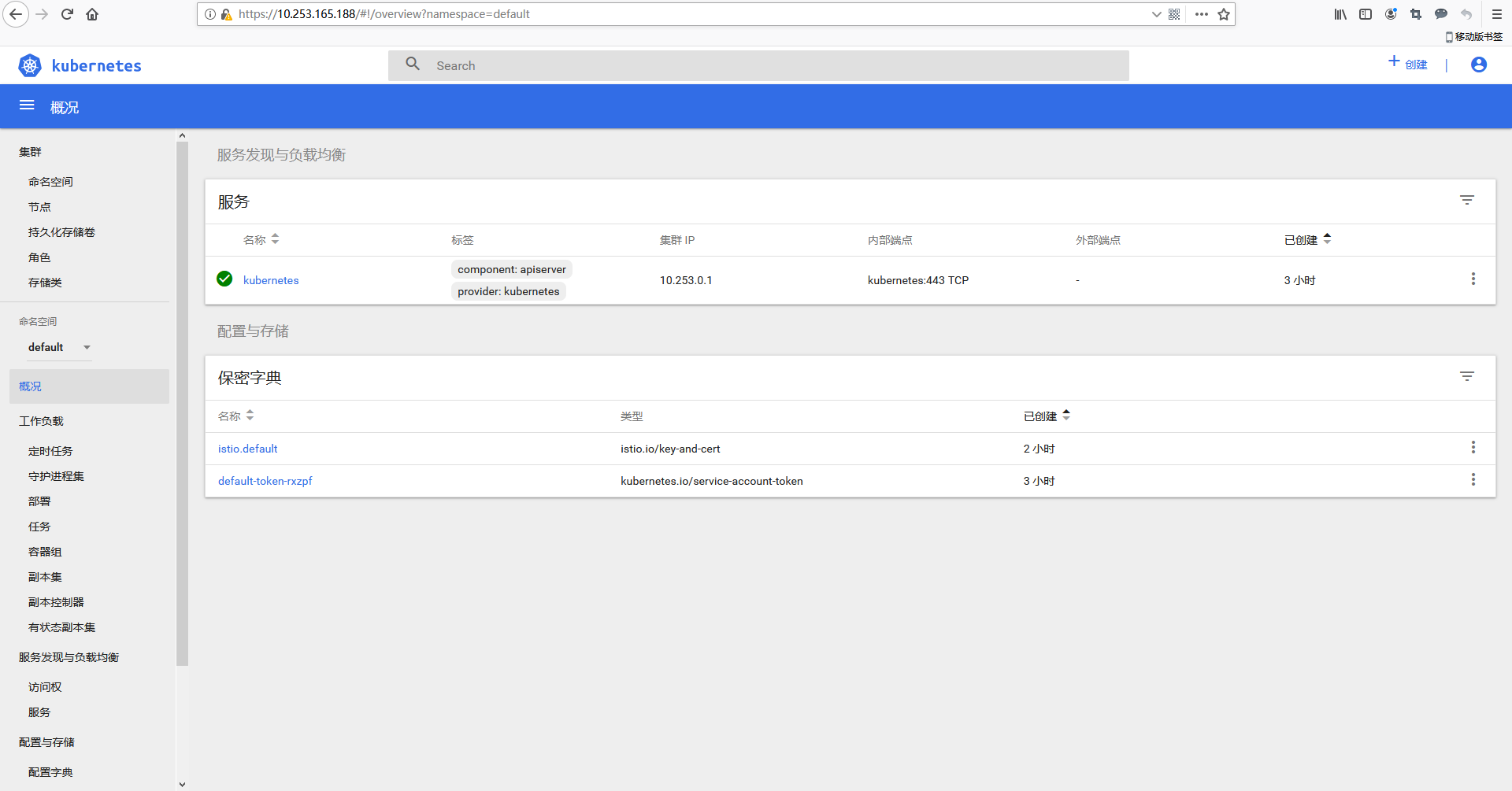
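The node port (30230 here) is assigned by Kubernetes and differs between clusters. The direct way to read it is `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`. If you only have the PORT(S) column from the table output, the following is a sketch of a hypothetical parser for it.

```shell
# Hypothetical helper (not part of the original setup):
# node_port PORTS: given a PORT(S) cell such as "80:30230/TCP", print the
# node port (the number after the first colon; first port only).
# Prints nothing for a ClusterIP-style cell such as "443/TCP".
node_port() {
  case "$1" in
    *:*) p=${1#*:}; echo "${p%%/*}" ;;
  esac
}
```

For example, `curl "192.168.10.210:$(node_port 80:30230/TCP)"` requests the same URL as the test above.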

20. Install kubernetes-dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

sed 's#k8s.gcr.io#gcrxio#g' kubernetes-dashboard.yaml |kubectl apply -f -

cat <<EOF > dashboard-admin.yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
EOF

kubectl apply -f dashboard-admin.yaml

# On 210, add a NAT (MASQUERADE) rule for traffic to the service network 10.253.0.0/16

echo "iptables -t nat -A POSTROUTING -d 10.253.0.0/16 -j MASQUERADE" >>/etc/rc.local
chmod +x /etc/rc.local
iptables -t nat -A POSTROUTING -d 10.253.0.0/16 -j MASQUERADE
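Like the swapoff line in step 7, this `echo ... >> /etc/rc.local` adds a duplicate line each time it is re-run. For the live rule, `iptables -t nat -C POSTROUTING -d 10.253.0.0/16 -j MASQUERADE` reports whether it already exists. For the file, the following is a sketch of a hypothetical idempotent append.

```shell
# Hypothetical helper (not part of the original setup):
# append_once FILE LINE
# Append LINE to FILE unless an identical whole line is already present,
# so re-running a setup step does not duplicate entries.
append_once() {
  touch "$1"
  grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}
```

For example, `append_once /etc/rc.local "iptables -t nat -A POSTROUTING -d 10.253.0.0/16 -j MASQUERADE"` can safely be run more than once.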

# View the service IPs
[root@node210 ~]# kubectl get svc -nkube-system
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                  AGE
kube-dns               ClusterIP   10.253.0.10      <none>        53/UDP,53/TCP,9153/TCP   3h13m
kubernetes-dashboard   ClusterIP   10.253.165.188   <none>        443/TCP                  29s

# View the admin token secret
kubectl describe secret/$(kubectl get secret -nkube-system|grep admin-token|awk '{print $1}') -nkube-system

# Add a static route on your local (Windows) workstation so that the service network is reached through 210

route add 10.253.0.0 mask 255.255.0.0 192.168.10.210 -p


21. Check the etcd cluster

docker exec -it $(docker ps |grep etcd_etcd|awk '{print $1}') sh

etcdctl --endpoints=https://192.168.10.212:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key member list

etcdctl --endpoints=https://192.168.10.212:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key cluster-health

22. How do I reset the cluster if there are configuration errors?

kubeadm reset

ipvsadm --clear

rm -rf /etc/kubernetes/

service kubelet stop

docker stop $(docker ps -q)

docker rm $(docker ps -a -q)

23. Other issues

Once the cluster is running, pods are not scheduled on 210, 211 and 212. The masters carry the node-role.kubernetes.io/master:NoSchedule taint that was set in the initial cluster configuration. To let them run workloads, remove the taint:

# Remove the taint from 211 and 212
kubectl taint nodes node211 node-role.kubernetes.io/master-
kubectl taint nodes node212 node-role.kubernetes.io/master-