The previous article covered the class loader. We know that classes must be loaded into memory. But how much memory does an object of a class actually occupy? And when we call a method, how does the JVM allocate memory and keep data consistent?

Data consistency

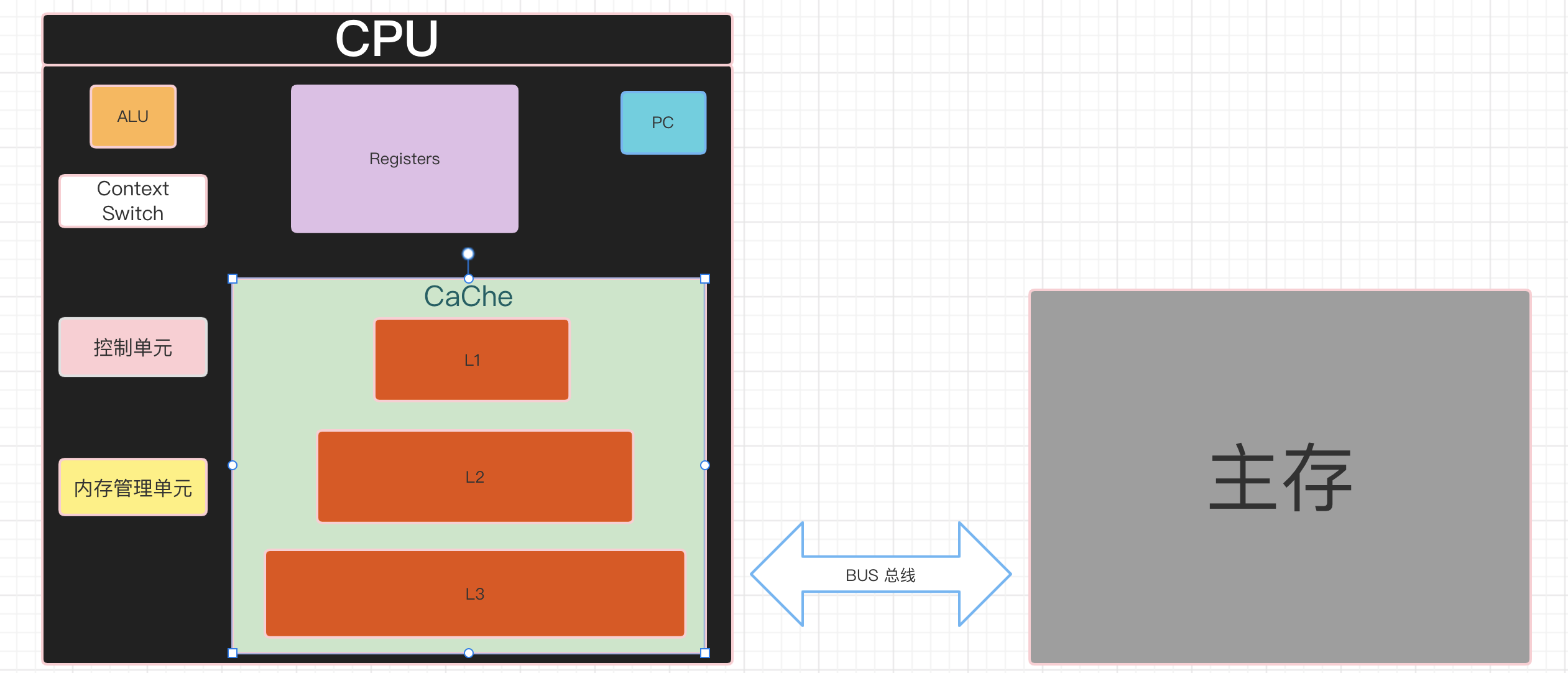

Before looking at data consistency, we first need to understand the CPU.

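ALU: the arithmetic logic unit, which performs the calculations.

Registers: the register file. Data must be in registers before the ALU can operate on it.

PC: the program counter, which holds the address of the next instruction the CPU will execute.

Context switch: when the CPU switches from one thread to another, it saves the current thread's registers and PC so that the thread can resume later.

Cache: stores recently used instructions and data, organized as L1, L2 and L3. L1 and L2 are usually private to each core, while L3 is shared by all cores of the CPU.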

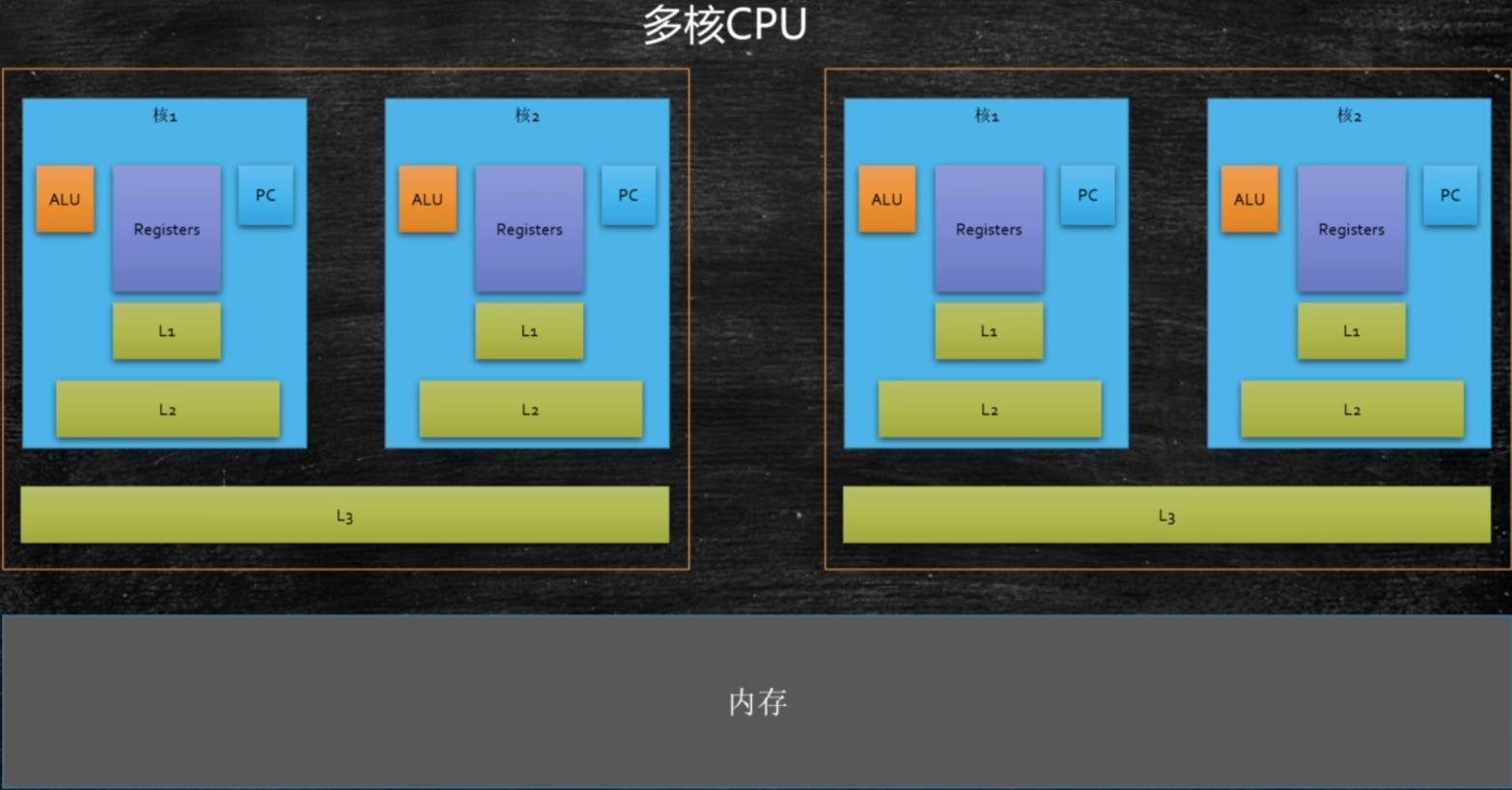

Why do we need to understand data consistency? As technology has developed, single-core servers have become rare; almost all are multi-core. (A side note: hyper-threading lets one physical core keep two sets of registers and PC, so two threads can share its execution units without a full context switch. This is how a 4-core CPU can offer 8 hardware threads.) This exposes a problem: when several cores modify the same data in main memory at the same time, how is that data kept consistent?

The short answer: cache locks (implemented through a cache coherence protocol such as MESI) plus bus locks.

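Why are both needed? Wouldn't cache locks alone, or bus locks alone, be enough? A bus lock alone is simple but slow: while it is held, no other core can access memory at all. A cache lock only locks the affected cache line and relies on the coherence protocol, but it cannot be used when the data spans more than one cache line or cannot be cached, so the bus lock remains the fallback for those cases.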

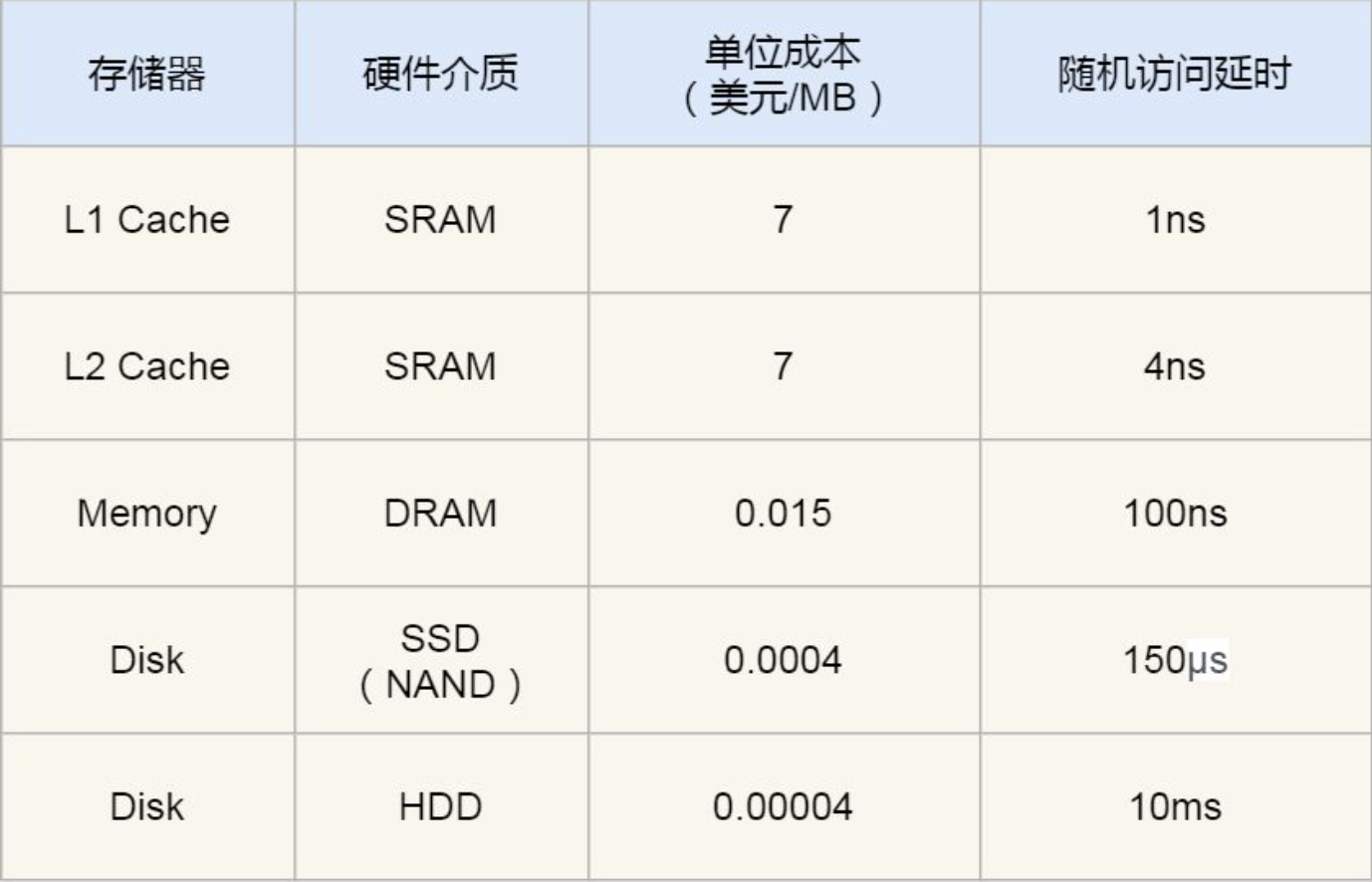

First, look at how the CPU reads data. When it executes a load instruction, it first looks in L1. On a hit, the data is returned directly. On a miss, it checks L2, then L3, and only if L3 also misses does it load the data from main memory.
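The lookup order can be sketched as a toy Java model. The Level class, the "copy into every level on a miss" policy and the addresses are assumptions made purely for illustration; real caches hold whole cache lines, have limited capacity and use their own replacement policies.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of the L1 -> L2 -> L3 -> main memory lookup (illustrative only).
final class CacheLookupDemo {

    // One cache level: maps an address to the cached value.
    static final class Level {
        final String name;
        final Map<Long, Integer> data = new HashMap<>();
        Level(String name) { this.name = name; }
    }

    // Searches the levels in order and returns the name of the level that hit.
    // If every cache misses, the value is loaded from memory and copied into all levels.
    static String load(List<Level> caches, Map<Long, Integer> memory, long address) {
        for (Level level : caches) {
            if (level.data.containsKey(address)) {
                return level.name; // hit: return directly
            }
        }
        int value = memory.get(address); // miss everywhere: go to main memory
        for (Level level : caches) {
            level.data.put(address, value); // assumed fill policy: fill every level
        }
        return "memory";
    }

    public static void main(String[] args) {
        Level l1 = new Level("L1"), l2 = new Level("L2"), l3 = new Level("L3");
        List<Level> caches = List.of(l1, l2, l3);
        Map<Long, Integer> memory = new HashMap<>();
        memory.put(0x40L, 7);

        System.out.println(load(caches, memory, 0x40L)); // memory (first access)
        System.out.println(load(caches, memory, 0x40L)); // L1 (now cached)
        l1.data.clear();                                 // simulate eviction from L1 only
        System.out.println(load(caches, memory, 0x40L)); // L2
    }
}
```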

Why is the CPU designed this way? Because the access latency of each level differs enormously: an L1 hit takes only a few CPU cycles, while a trip to main memory is on the order of 100 times slower.

At this point, some people may ask, what does this have to do with data consistency?

The key point is that the CPU reads data from memory in units of cache lines, and on most current CPUs a cache line is 64 bytes.

A natural question follows: if two different variables sit in the same cache line and are modified by different CPU cores, do they affect each other?

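Yes, they do. A write by one core invalidates the whole line in the other cores' caches, even though the cores are touching different variables; this is called false sharing. Padding or aligning data so that each frequently written variable has a cache line to itself improves efficiency.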
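The effect can be observed with a small Java experiment, offered as a rough sketch rather than a rigorous benchmark. Two threads repeatedly write their own slot of an AtomicLongArray (each set is a volatile write). Slots 0 and 1 are 8 bytes apart and almost always share a 64-byte line; slots 0 and 8 are exactly 64 bytes apart and can never share one. The iteration count is arbitrary, there is no JIT warm-up, and the size of the gap depends entirely on the machine.

```java
import java.util.concurrent.atomic.AtomicLongArray;

// Rough false-sharing experiment: same cache line vs. different cache lines.
final class FalseSharingDemo {

    // Thread 0 writes slot i0 and thread 1 writes slot i1, each `iterations` times.
    // Returns the elapsed time in nanoseconds.
    static long run(int i0, int i1, long iterations) throws InterruptedException {
        AtomicLongArray slots = new AtomicLongArray(16); // contiguous longs
        Thread t0 = new Thread(() -> { for (long n = 0; n < iterations; n++) slots.set(i0, n); });
        Thread t1 = new Thread(() -> { for (long n = 0; n < iterations; n++) slots.set(i1, n); });
        long start = System.nanoTime();
        t0.start();
        t1.start();
        t0.join();
        t1.join();
        long elapsed = System.nanoTime() - start;
        // Each thread's last write must be iterations - 1.
        if (slots.get(i0) != iterations - 1 || slots.get(i1) != iterations - 1) {
            throw new IllegalStateException("unexpected final value");
        }
        return elapsed;
    }

    public static void main(String[] args) throws InterruptedException {
        long n = 50_000_000L;
        // 8 bytes apart: almost always in the same 64-byte line
        System.out.printf("same line:       %d ms%n", run(0, 1, n) / 1_000_000);
        // 64 bytes apart: always in different lines
        System.out.printf("different lines: %d ms%n", run(0, 8, n) / 1_000_000);
    }
}
```

Spacing elements inside one array is used here because array elements are guaranteed to be contiguous, whereas the JVM is free to reorder object fields. For trustworthy numbers, a benchmark harness such as JMH is the better tool.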

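For example, an int takes 4 bytes, so one 64-byte cache line holds 16 ints. The element data of the int array {1,2,3} is only 12 bytes and fits in a single cache line, unless it happens to straddle a line boundary, in which case the CPU has to load two lines. Aligning data to cache-line boundaries avoids such splits.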
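The arithmetic behind this example is simple enough to write down. The sketch below assumes the 64-byte line size stated above and treats addresses as plain byte offsets; it counts how many lines a block of bytes touches.

```java
// How many 64-byte cache lines does the byte range [address, address + length) touch?
final class CacheLineMath {
    static final int LINE_SIZE = 64; // assumed line size, as stated in the text

    // Assumes address >= 0 and length > 0.
    static long linesTouched(long address, long length) {
        long first = address / LINE_SIZE;              // line of the first byte
        long last = (address + length - 1) / LINE_SIZE; // line of the last byte
        return last - first + 1;
    }

    public static void main(String[] args) {
        long bytes = 3 * Integer.BYTES;                 // {1, 2, 3}: 3 ints x 4 bytes = 12 bytes
        System.out.println(linesTouched(0, bytes));     // 1: starts on a line boundary
        System.out.println(linesTouched(56, bytes));    // 2: straddles the boundary at 64
        System.out.println(LINE_SIZE / Integer.BYTES);  // 16 ints per line
    }
}
```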

We mentioned locking above. So how do the caches stay consistent with each other and with main memory?

That is the job of a cache coherence protocol. The most common one is MESI, which marks every cache line as Modified, Exclusive, Shared, or Invalid.
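The four MESI states can be illustrated with a simplified state machine for one cache line as seen by one core's cache. This is a sketch under stated simplifications: bus messages, write-backs and races between cores are not modelled, and the othersHaveCopy flag is a hypothetical stand-in for the snoop result on a read miss.

```java
// Simplified MESI transitions for ONE cache line in ONE core's cache.
final class Mesi {
    enum State { MODIFIED, EXCLUSIVE, SHARED, INVALID }
    enum Event { LOCAL_READ, LOCAL_WRITE, REMOTE_READ, REMOTE_WRITE }

    // othersHaveCopy only matters for a read miss (INVALID + LOCAL_READ).
    static State next(State s, Event e, boolean othersHaveCopy) {
        switch (e) {
            case LOCAL_READ:
                // A read miss loads the line: SHARED if another cache has it, else EXCLUSIVE.
                return s == State.INVALID ? (othersHaveCopy ? State.SHARED : State.EXCLUSIVE) : s;
            case LOCAL_WRITE:
                // Writing needs exclusive ownership; other copies are invalidated first.
                return State.MODIFIED;
            case REMOTE_READ:
                // Another core reads: a MODIFIED line is written back, then both share it.
                return s == State.INVALID ? State.INVALID : State.SHARED;
            case REMOTE_WRITE:
                // Another core writes: our copy is now stale.
                return State.INVALID;
            default:
                throw new IllegalArgumentException("unknown event " + e);
        }
    }

    public static void main(String[] args) {
        State core0 = State.INVALID, core1 = State.INVALID;
        core0 = next(core0, Event.LOCAL_READ, false);   // core 0 reads alone: EXCLUSIVE
        core1 = next(core1, Event.LOCAL_READ, true);    // core 1 reads too: SHARED
        core0 = next(core0, Event.REMOTE_READ, false);  // core 0 sees that read: SHARED
        core1 = next(core1, Event.LOCAL_WRITE, false);  // core 1 writes: MODIFIED
        core0 = next(core0, Event.REMOTE_WRITE, false); // core 0's copy: INVALID
        System.out.println(core0 + " " + core1);        // INVALID MODIFIED
    }
}
```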

Out-of-order execution

To improve efficiency, while one instruction is waiting (for example, on a read from main memory, which is roughly 100 times slower than a cache hit), the CPU may go ahead and execute a later instruction, provided the two instructions do not depend on each other.

For example:

```java
int var1 = 1;
int var2 = 2;
int var3 = var1 + var2;
// The CPU does not necessarily load var1 before var2,
// but both var1 and var2 must be loaded before var3 is computed.
```
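Reordering can sometimes be observed from Java with the classic two-thread litmus test. This is a sketch: each thread writes one shared variable and then reads the other. If every thread executed its two statements in order, at least one thread would see the other's write, so the result (x, y) = (0, 0) would be impossible. Seeing (0, 0) means a store and a later load were reordered, by the CPU or by the JIT compiler. How often it shows up depends on the hardware and timing, and a count of 0 in a given run proves nothing.

```java
// Litmus test for store/load reordering; the fields are deliberately not volatile.
final class ReorderingDemo {
    static int a, b, x, y;

    // Runs `rounds` trials and returns how many ended with x == 0 && y == 0.
    static int countReorderings(int rounds) throws InterruptedException {
        int hits = 0;
        for (int i = 0; i < rounds; i++) {
            a = 0; b = 0; x = 0; y = 0;
            Thread t1 = new Thread(() -> { a = 1; x = b; });
            Thread t2 = new Thread(() -> { b = 1; y = a; });
            t1.start();
            t2.start();
            t1.join(); // join makes the threads' writes visible to this thread
            t2.join();
            if (x == 0 && y == 0) hits++;
        }
        return hits;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("(0, 0) observed " + countReorderings(20_000) + " times");
    }
}
```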

Memory barrier

Hardware level (x86)

sfence (store fence): every write before the sfence instruction must complete before any write after it.

lfence (load fence): every read before the lfence instruction must complete before any read after it.

mfence (mixed fence): every read and write before the mfence instruction must complete before any read or write after it.

Atomic instructions, such as those with the "lock ..." prefix on x86, act as a full barrier: while executing, they lock the memory subsystem to guarantee ordering, even across multiple CPUs. Software locks are usually built on memory barriers or atomic instructions, which give them variable visibility and ordering.
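From Java, the usual way to reach such atomic instructions is the java.util.concurrent.atomic package. The sketch below compares a plain int counter with an AtomicInteger when several threads increment both. The claim that HotSpot on x86 typically compiles incrementAndGet to a lock-prefixed instruction (lock xadd) is stated as an assumption about the JIT, not something this program checks.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Plain ++ is a non-atomic read-modify-write; AtomicInteger's increment is atomic.
final class AtomicCounterDemo {
    static int plain = 0;
    static final AtomicInteger atomic = new AtomicInteger();

    static void run(int threads, int perThread) throws InterruptedException {
        plain = 0;
        atomic.set(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    plain++;                  // may lose updates under contention
                    atomic.incrementAndGet(); // never loses updates
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) w.join(); // join makes the final values visible
    }

    public static void main(String[] args) throws InterruptedException {
        run(4, 100_000);
        System.out.println("plain  = " + plain);        // often less than 400000
        System.out.println("atomic = " + atomic.get()); // always 400000
    }
}
```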

How the JVM specifies barriers

LoadLoad barrier:

Example: Load1 --> LoadLoad --> Load2 ensures that Load1 has finished reading its data before Load2 and any subsequent reads are performed.

StoreStore barrier:

Example: Store1 --> StoreStore --> Store2 ensures that Store1's write is visible to other processors before Store2 and any subsequent writes are performed.

LoadStore barrier:

Example: Load1 --> LoadStore --> Store2 ensures that Load1 has finished reading its data before Store2 and any subsequent writes are flushed to memory.

StoreLoad barrier:

Example: Store1 --> StoreLoad --> Load2 ensures that Store1's write is visible to all processors before Load2 and any subsequent reads are performed. StoreLoad is usually the most expensive of the four barriers.
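In Java code, these barriers come into play mostly through volatile. The sketch below publishes a plain field through a volatile flag. The barrier placement in the comments follows the conservative strategy described in the JSR-133 Cookbook (StoreStore before and StoreLoad after a volatile write; LoadLoad and LoadStore after a volatile read); the JIT may emit fewer or different instructions on a given CPU. The guarantee that the reader sees 42 comes from the Java Memory Model.

```java
// Safe publication through a volatile flag.
final class VolatilePublication {
    static int data = 0;                   // plain field
    static volatile boolean ready = false; // volatile flag

    static void writer() {
        data = 42;    // ordinary store
        // StoreStore: the store to data cannot move below the volatile store
        ready = true; // volatile store
        // StoreLoad: later loads cannot move above the volatile store
    }

    static int reader() {
        while (!ready) {         // volatile load
            Thread.onSpinWait(); // busy-wait hint, Java 9+
        }
        // LoadLoad / LoadStore: the read of data cannot move above the read of ready
        return data;             // guaranteed to see 42
    }

    public static void main(String[] args) throws InterruptedException {
        int[] seen = new int[1];
        Thread r = new Thread(() -> seen[0] = reader());
        r.start();
        writer();
        r.join();
        System.out.println(seen[0]); // 42
    }
}
```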

Object creation process

1. Class loading

2. Class linking (verification, preparation, resolution)

3. Class initialization

4. Allocate memory for the object

5. Assign default values to member variables

6. Call the constructor

Calling the constructor has two sub-steps: first, assign the declared initial values to member variables in order; second, execute the statements in the constructor body.
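Steps 5 and 6 can be observed directly. In the sketch below (all class names are hypothetical), Base's constructor calls a method that Derived overrides. That call runs after Derived's memory has been allocated and zeroed (step 5) but before Derived's field initializers run (step 6), so it sees the default value 0. When main runs in a fresh JVM, it prints "Derived class initialized", "Base constructor", "report sees x=0" and "Derived constructor, x=42", in that order.

```java
import java.util.ArrayList;
import java.util.List;

// Observes default values (step 5) before field initializers and constructor body (step 6).
final class CreationOrderDemo {
    static final List<String> log = new ArrayList<>();

    static class Base {
        Base() {
            log.add("Base constructor");
            report(); // virtual call into Derived before Derived's initializers run
        }
        void report() { }
    }

    static class Derived extends Base {
        static { log.add("Derived class initialized"); } // step 3: runs once, on first use
        int x = 42;                                      // field initializer (step 6)

        Derived() {
            super(); // Base() runs first; x is still its default value 0 here
            log.add("Derived constructor, x=" + x); // initializers have run: x is 42
        }

        @Override
        void report() { log.add("report sees x=" + x); }
    }

    public static void main(String[] args) {
        new Derived();
        log.forEach(System.out::println);
    }
}
```

Calling overridable methods from a constructor is normally avoided for exactly this reason; here it is used only to make the default value visible.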

Object layout in memory

To print the flags the JVM was started with (including whether compressed pointers are enabled), run: java -XX:+PrintCommandLineFlags -version
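The same flags can also be read from inside a running program. This sketch assumes a HotSpot-based JVM, which provides com.sun.management.HotSpotDiagnosticMXBean; on other JVMs, or for a flag name that this JVM does not know, the helper returns "unavailable" instead of failing.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Reads VM flag values at runtime (HotSpot-specific management interface).
final class VmFlags {
    // Returns the flag value as a string ("true"/"false" for boolean flags),
    // or "unavailable" if the MXBean or the flag does not exist in this JVM.
    static String flag(String name) {
        try {
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return bean.getVMOption(name).getValue();
        } catch (IllegalArgumentException e) {
            return "unavailable";
        }
    }

    public static void main(String[] args) {
        System.out.println("UseCompressedOops = " + flag("UseCompressedOops"));
        System.out.println("UseCompressedClassPointers = " + flag("UseCompressedClassPointers"));
    }
}
```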

Ordinary object

1. Object header (mark word): 8 bytes
2. Class pointer: 4 bytes with -XX:+UseCompressedClassPointers, 8 bytes when it is turned off
3. Instance data: the fields. Reference fields take 4 bytes with -XX:+UseCompressedOops (OOPs: Ordinary Object Pointers), 8 bytes otherwise
4. Padding: the total size is rounded up to a multiple of 8 bytes
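The layout above turns into a small size calculation. This is a sketch of that simplified model only: it adds the header, the class pointer and the total field bytes, then rounds up to 8. It ignores any gaps the JVM may leave when aligning individual fields, and the example class with an int and a String field is hypothetical.

```java
// Shallow size of an ordinary object under the simplified layout in the text.
final class ObjectSize {
    // Rounds n up to the next multiple of alignment.
    static long alignUp(long n, long alignment) {
        return (n + alignment - 1) / alignment * alignment;
    }

    // mark word (8) + class pointer (4 or 8) + field bytes, padded to a multiple of 8
    static long ordinaryObject(boolean compressedClassPointers, long fieldBytes) {
        long header = 8 + (compressedClassPointers ? 4 : 8);
        return alignUp(header + fieldBytes, 8);
    }

    public static void main(String[] args) {
        System.out.println(ordinaryObject(true, 0));  // new Object(): 8 + 4 = 12 -> 16
        System.out.println(ordinaryObject(false, 0)); // uncompressed: 8 + 8 = 16 -> 16
        // hypothetical class { int id; String name; } with compressed oops: 4 + 4 bytes
        System.out.println(ordinaryObject(true, 8));  // 12 + 8 = 20 -> 24
    }
}
```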

Array object

1. Object header (mark word): 8 bytes
2. Class pointer: 4 or 8 bytes, as above
3. Array length: 4 bytes
4. Array data
5. Padding to a multiple of 8 bytes
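The same kind of calculation works for arrays, again as a sketch of the simplified model in the list. Real JVMs may insert a gap between the length field and the first element, especially without compressed class pointers, so real sizes are best checked with a tool such as JOL (OpenJDK's Java Object Layout).

```java
// Shallow size of an array under the simplified layout in the text.
final class ArraySize {
    // mark word (8) + class pointer (4 or 8) + length (4) + elements, padded to a multiple of 8
    static long arrayObject(boolean compressedClassPointers, int length, int elementBytes) {
        long header = 8 + (compressedClassPointers ? 4 : 8) + 4;
        long raw = header + (long) length * elementBytes;
        return (raw + 7) / 8 * 8; // round up to a multiple of 8
    }

    public static void main(String[] args) {
        System.out.println(arrayObject(true, 3, Integer.BYTES)); // int[]{1,2,3}: 16 + 12 = 28 -> 32
        System.out.println(arrayObject(true, 0, Integer.BYTES)); // empty int[]: 16
    }
}
```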

Problems with IdentityHashCode

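Once an object's identity hash code has been computed (for example by System.identityHashCode or the default Object.hashCode), the object can no longer enter the biased-lock state. The hash is stored in the mark word, which then has no room left for the biased-lock thread ID.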

How a reference locates an object

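1. Handle pool: the reference points to a handle in a handle pool. The handle holds two pointers, one to the object's instance data and one to its class metadata. This is efficient for GC, because moving an object only requires updating its handle.
2. Direct pointer: the reference points directly to the object in memory. This is what HotSpot uses; access is faster because it saves one indirection.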
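The trade-off between the two schemes can be sketched with a toy model in which a fake heap is an int array and "addresses" are indices into it. Every name here is hypothetical. When the simulated GC moves an object, the handle scheme fixes one handle, while the direct-pointer scheme must fix every reference that points to the object.

```java
import java.util.Arrays;

// Toy model: handle-pool access vs. direct-pointer access.
final class ObjectAccessModel {
    static final int[] heap = new int[8]; // fake heap: one int of "instance data" per slot

    // One handle-pool entry: where the instance data lives, plus the class metadata.
    static final class Handle {
        int instanceAddress;
        final String klass;
        Handle(int instanceAddress, String klass) {
            this.instanceAddress = instanceAddress;
            this.klass = klass;
        }
    }

    // Handle pool: reference -> handle -> instance data (two hops).
    static int readViaHandle(Handle h) { return heap[h.instanceAddress]; }

    // Direct pointer: the reference holds the instance address (one hop).
    static int readDirect(int reference) { return heap[reference]; }

    // Simulated GC move: copy the data to a new slot and clear the old one.
    static void move(int from, int to) {
        heap[to] = heap[from];
        heap[from] = 0;
    }

    public static void main(String[] args) {
        heap[2] = 99;
        Handle handle = new Handle(2, "Point"); // all references share this one handle
        int[] directRefs = {2, 2, 2};           // three direct references to the object

        move(2, 5);
        handle.instanceAddress = 5;             // handle pool: fix one handle
        Arrays.fill(directRefs, 5);             // direct pointers: fix every reference

        System.out.println(readViaHandle(handle) + " " + readDirect(directRefs[0])); // 99 99
    }
}
```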

This blog is for technical learning. All resources come from the Internet; some are reposted and some are personal summaries. You are welcome to study and repost it, but please credit the original in a prominent place. If anything infringes your rights, please leave a message and it will be removed promptly.