From: https://javadoop.com/post/java-concurrent-queue

I have been meaning to write an article about Java thread pools. I suspect many people, myself included, understand them only vaguely: before I read the source code carefully I had plenty of questions, and there are still a few places I don't fully understand.

Any discussion of thread pool implementations runs into the various BlockingQueue implementations, so first I want to share what I know about BlockingQueue.

Unlike my earlier article on AQS, this one is not a line-by-line source walkthrough, but it still covers the most important and most difficult code, so it is fairly long. It discusses many of Doug Lea's design ideas behind BlockingQueue, and I hope interested readers will get something real out of it. I think it has plenty of substance.

This article draws directly on the Javadoc and source comments written by Doug Lea, which are also the best material for learning the java.util.concurrent package. I hope you will think it through, understand it, learn from Doug Lea's coding style, and bring its elegance and rigor to every line of code we write.

Contents

- Overview of blocking queues

- Blocking queues in Java

- BlockingQueue source analysis

- ArrayBlockingQueue: a BlockingQueue implementation

- LinkedBlockingQueue: a BlockingQueue implementation

- SynchronousQueue: a BlockingQueue implementation

- PriorityBlockingQueue: a BlockingQueue implementation

- Summary

Overview of blocking queues

1. What is a blocking queue?

A blocking queue is a queue that supports two additional operations: when the queue is empty, a thread retrieving an element waits until the queue becomes non-empty; when the queue is full, a thread storing an element waits until space becomes available. Blocking queues are commonly used in producer-consumer scenarios, where producers are threads that add elements to the queue and consumers are threads that take elements from it. The blocking queue is the container into which producers put elements and from which consumers take them.

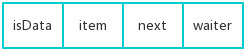
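To make the producer-consumer idea concrete, here is a minimal sketch using ArrayBlockingQueue's put and take. The class and method names (ProducerConsumerDemo, run) are hypothetical, chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumerDemo {
    // One producer and one consumer share a small bounded queue.
    // Returns the items in the order the consumer received them.
    static List<Integer> run(int items, int capacity) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        List<Integer> received = new ArrayList<>(); // touched only by the consumer

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < items; i++) {
                    queue.put(i); // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < items; i++) {
                    received.add(queue.take()); // blocks while the queue is empty
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join(); // join() makes the consumer's writes visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return received;
    }
}
```

With a capacity smaller than the number of items, the producer regularly blocks in put until the consumer catches up, yet the consumer still sees every item in FIFO order.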

Blocking queues handle these situations in four different ways:

| Method / Behavior | Throws exception | Returns special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Examine | element() | peek() | Not available | Not available |

- Throws an exception: when the queue is full, inserting an element throws IllegalStateException("Queue full"); when the queue is empty, retrieving an element throws NoSuchElementException.

- Returns a special value: an insert method returns true on success and false otherwise; a remove method returns the element taken from the queue, or null if there is none.

- Blocks: when the queue is full and a producer thread puts an element, the queue blocks the producer until space becomes available or the thread is interrupted. When the queue is empty and a consumer thread takes an element, the queue likewise blocks the consumer until an element is available.

- Times out: when the queue is full, the queue blocks the producer thread for at most the specified time; if that time elapses, the producer gives up and offer(e, time, unit) returns false. Likewise, poll(time, unit) on an empty queue returns null after the timeout.
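A small sketch that probes each family of methods from the table on a queue of capacity 1, assuming ArrayBlockingQueue as the implementation; FourStylesDemo and its helper names are hypothetical. The blocking family (put/take) is left out because on a full or empty queue it would wait indefinitely.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class FourStylesDemo {
    static BlockingQueue<String> full() {
        BlockingQueue<String> q = new ArrayBlockingQueue<>(1);
        q.add("a"); // capacity 1, so the queue is now full
        return q;
    }

    static BlockingQueue<String> empty() {
        return new ArrayBlockingQueue<>(1);
    }

    // Family 1: throw an exception.
    static String addMessageWhenFull() {
        try {
            full().add("b");
            return "no exception";
        } catch (IllegalStateException e) {
            return e.getMessage();
        }
    }

    static boolean removeThrowsWhenEmpty() {
        try {
            empty().remove();
            return false;
        } catch (NoSuchElementException e) {
            return true;
        }
    }

    // Family 2: return a special value.
    static boolean offerWhenFull() {
        return full().offer("b"); // false instead of an exception
    }

    static String pollWhenEmpty() {
        return empty().poll(); // null instead of an exception
    }

    // Family 4: give up after a timeout.
    static boolean timedOfferWhenFull() {
        try {
            return full().offer("b", 10, TimeUnit.MILLISECONDS); // false after about 10 ms
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    static String timedPollWhenEmpty() {
        try {
            return empty().poll(10, TimeUnit.MILLISECONDS); // null after about 10 ms
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }
}
```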

2. Blocking queues in Java

JDK 7 provides seven blocking queues:

- ArrayBlockingQueue: a bounded blocking queue backed by an array.

- LinkedBlockingQueue: a bounded blocking queue backed by a linked list.

- PriorityBlockingQueue: an unbounded blocking queue that supports priority ordering.

- DelayQueue: an unbounded blocking queue implemented using a priority queue.

- SynchronousQueue: a blocking queue that does not store elements.

- LinkedTransferQueue: an unbounded blocking queue backed by a linked list.

- LinkedBlockingDeque: a double-ended blocking queue (deque) backed by a linked list.

ArrayBlockingQueue

ArrayBlockingQueue is a bounded blocking queue backed by an array. It orders elements FIFO (first in, first out). By default it does not guarantee fair access for threads. Fair access means that blocked producer or consumer threads access the queue in the order in which they blocked: when the queue becomes available, the producer that blocked first inserts first, and the consumer that blocked first takes first. Fairness usually reduces throughput.

LinkedBlockingQueue

LinkedBlockingQueue is a bounded blocking queue backed by a linked list. Its default and maximum capacity is Integer.MAX_VALUE. It orders elements FIFO.
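A quick check of these capacity rules, as a sketch; LinkedCapacityDemo is a hypothetical name. remainingCapacity() reports how many more elements can be inserted without blocking.

```java
import java.util.concurrent.LinkedBlockingQueue;

class LinkedCapacityDemo {
    // Without an explicit capacity, LinkedBlockingQueue uses Integer.MAX_VALUE.
    static int defaultRemaining() {
        return new LinkedBlockingQueue<String>().remainingCapacity();
    }

    // With an explicit capacity, offer() starts failing once it is reached.
    static boolean offerPastCapacity() {
        LinkedBlockingQueue<String> q = new LinkedBlockingQueue<>(2);
        q.offer("a");
        q.offer("b");
        return q.offer("c"); // false: the queue is full
    }
}
```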

PriorityBlockingQueue

PriorityBlockingQueue is an unbounded blocking queue that supports priorities. By default, elements are ordered by their natural ordering; you can also supply a Comparator. Elements are retrieved in ascending order (smallest first).

DelayQueue

DelayQueue is an unbounded blocking queue that supports delayed retrieval of elements. It is implemented with a PriorityQueue. Elements must implement the Delayed interface, and each element specifies, when it is created, how long it must wait before it can be taken from the queue. An element can only be taken once its delay has expired. DelayQueue is useful in the following scenarios:

- Cache design: use a DelayQueue to hold cached elements together with their expiry times, and have a thread repeatedly query the DelayQueue. As soon as an element can be retrieved from the DelayQueue, its cache entry has expired.

- Scheduled tasks: use a DelayQueue to hold the tasks to run during the day together with their execution times. As soon as a task is retrieved from the DelayQueue, start executing it. For example, TimerQueue is implemented using DelayQueue.
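A sketch of the caching scenario, assuming each entry carries its own expiry time; ExpiringEntry and DelayQueueDemo are hypothetical names, and the delays are arbitrary. Delayed extends Comparable&lt;Delayed&gt;, so an element must implement both getDelay and compareTo.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical cache entry that becomes retrievable once its time-to-live expires.
class ExpiringEntry implements Delayed {
    final String key;
    private final long expiresAtNanos;

    ExpiringEntry(String key, long ttlMillis) {
        this.key = key;
        this.expiresAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(ttlMillis);
    }

    @Override
    public long getDelay(TimeUnit unit) {
        // Remaining delay; zero or negative means the entry has expired.
        return unit.convert(expiresAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) {
        // Earliest expiry first, which is the order the internal PriorityQueue keeps.
        return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
    }
}

class DelayQueueDemo {
    // Nothing can be taken before its delay expires: poll() returns null.
    static boolean pollBeforeExpiryIsNull() {
        DelayQueue<ExpiringEntry> q = new DelayQueue<>();
        q.put(new ExpiringEntry("k", 1_000));
        return q.poll() == null;
    }

    // take() blocks until the head expires; keys come out in expiry order.
    static List<String> expiryOrder() {
        DelayQueue<ExpiringEntry> q = new DelayQueue<>();
        q.put(new ExpiringEntry("slow", 200));
        q.put(new ExpiringEntry("fast", 100));
        List<String> order = new ArrayList<>();
        try {
            order.add(q.take().key); // waits about 100 ms
            order.add(q.take().key); // waits about another 100 ms
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return order;
    }
}
```

Note that "slow" was inserted first, yet "fast" comes out first: retrieval order is driven by expiry, not by insertion.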

BlockingQueue

First of all, BlockingQueue is basically a Queue, usually first-in-first-out. Why "Blocking"? Because when a thread retrieves an element and the queue is empty, BlockingQueue can block it until an element becomes available; and when a thread adds an element and the queue is full, it can block it until there is room for the new element.

BlockingQueue is an interface that extends Queue, which in turn extends Collection, so its implementations can also be used wherever a Queue is expected.

BlockingQueue offers four forms of its insert, remove, and examine methods for different scenarios: 1) throw an exception; 2) return a special value (null or true/false, depending on the operation); 3) block until the operation succeeds; 4) block until the operation succeeds or the specified timeout elapses. In summary:

| | Throws exception | Special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Examine | element() | peek() | not applicable | not applicable |

Every BlockingQueue implementation follows these rules. We don't need to memorize the table; it is enough to know it exists, and when writing code, read the method's documentation to choose the appropriate one.

For BlockingQueue, we should focus on put(e) and take(), because these are the methods that block.

BlockingQueue does not accept null elements; attempts to insert null throw NullPointerException. null is used as the special return value (the "Special value" column in the table) that indicates a failed poll. If null elements were allowed, a null result from poll would be ambiguous: it could mean either a failure or a retrieved null element.

A BlockingQueue may be capacity-bounded: if the queue is full when you insert, put blocks. A queue usually described as unbounded is not truly unbounded either; its capacity is Integer.MAX_VALUE (over 2.1 billion).

BlockingQueue is designed primarily for producer-consumer queues, but, as mentioned earlier, it also implements java.util.Collection, so it can be used as an ordinary collection. For example, remove(x) deletes an arbitrary element, but such operations are usually not efficient, so use them only occasionally, for example when a queued message needs to be cancelled.

BlockingQueue implementations are thread-safe, but bulk Collection operations such as addAll, containsAll, retainAll, and removeAll are not necessarily atomic unless the implementation specifies otherwise. For example, addAll(c) may throw an exception after adding only some of the elements of c, leaving those elements in the queue.

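BlockingQueue does not intrinsically support any "close" or "shutdown" operation to indicate that no more elements will be added. The need for such features, and their form, tend to depend on the implementation, so the interface does not impose them. A common tactic is for producers to insert a special end-of-stream ("poison") object that consumers recognize and handle accordingly.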
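A minimal poison-pill sketch, assuming a single consumer and a String sentinel compared by identity; PoisonPillDemo and POISON are hypothetical names. With several consumers, the producer would typically put one sentinel per consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class PoisonPillDemo {
    // Sentinel compared by identity (==), so no ordinary message can be mistaken for it.
    private static final String POISON = new String("<end-of-stream>");

    static List<String> consumeUntilPoison(List<String> messages) {
        BlockingQueue<String> q = new LinkedBlockingQueue<>();
        List<String> received = new ArrayList<>(); // touched only by the consumer
        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    String m = q.take();
                    if (m == POISON) {
                        break; // producer signalled end of stream
                    }
                    received.add(m);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            for (String m : messages) {
                q.put(m);
            }
            q.put(POISON); // "close" the stream by convention, not via the interface
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        }
        return received;
    }
}
```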

Finally, BlockingQueue can safely be used with multiple producers and multiple consumers; this follows directly from its thread safety.

I trust each of the points above is clear. BlockingQueue is a fairly simple thread-safe container. Next, I'll analyze its implementations in the JDK. Time for Doug Lea to take the stage again.

ArrayBlockingQueue: a BlockingQueue Implementation

ArrayBlockingQueue is a bounded implementation of the BlockingQueue interface, backed by an array.

Its concurrency is controlled by a single ReentrantLock, which must be acquired for both insert and read operations.

If you have read the part about Condition in my earlier article "Line-by-Line Source Analysis of AbstractQueuedSynchronizer (2)", the ArrayBlockingQueue source will be easy to follow: it is implemented with one ReentrantLock and two Conditions created from it.

ArrayBlockingQueue has the following properties:

```java
// Array to hold elements
final Object[] items;
// Index of the next read (take, poll, peek, remove)
int takeIndex;
// Index of the next write (put, offer, add)
int putIndex;
// Number of elements in the queue
int count;
// Concurrency control: one lock plus two conditions created from it
final ReentrantLock lock;
private final Condition notEmpty;
private final Condition notFull;
```

Let's use a diagram to describe its synchronization mechanism:

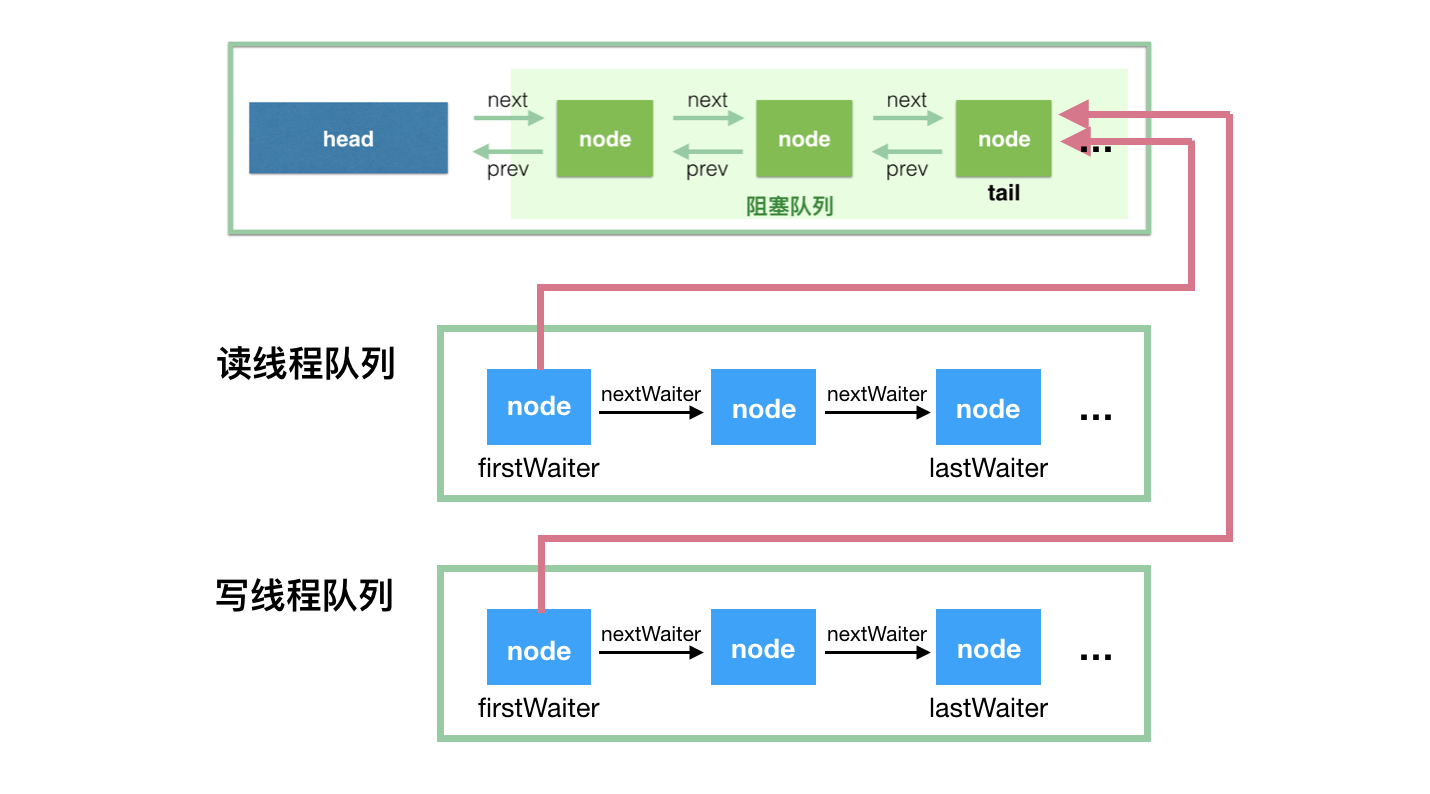

ArrayBlockingQueue achieves thread safety by requiring the same exclusive (AQS-based) lock for both reads and writes. If the queue is empty, a reading thread waits on the notEmpty condition (the read-wait queue) until a writer inserts an element and wakes the first waiting reader. If the queue is full, a writing thread waits on the notFull condition (the write-wait queue) until a reader removes an element and wakes the first waiting writer.
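To illustrate the one-lock, two-condition scheme, here is a simplified, hypothetical bounded buffer (SimpleArrayQueue) modeled on the fields listed above. It is a sketch, not the JDK source: it omits timed methods, iterators, and the other Collection operations.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class SimpleArrayQueue<E> {
    private final Object[] items;
    private int takeIndex; // slot the next take reads
    private int putIndex;  // slot the next put writes
    private int count;     // number of elements; all fields guarded by lock

    private final ReentrantLock lock;
    private final Condition notEmpty;
    private final Condition notFull;

    SimpleArrayQueue(int capacity, boolean fair) {
        if (capacity <= 0) throw new IllegalArgumentException();
        items = new Object[capacity];
        lock = new ReentrantLock(fair);
        notEmpty = lock.newCondition();
        notFull = lock.newCondition();
    }

    void put(E e) throws InterruptedException {
        if (e == null) throw new NullPointerException();
        lock.lockInterruptibly();
        try {
            while (count == items.length)
                notFull.await();                      // full: wait for a take
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length; // wrap around the ring
            count++;
            notEmpty.signal();                        // wake one waiting reader
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0)
                notEmpty.await();                       // empty: wait for a put
            E e = (E) items[takeIndex];
            items[takeIndex] = null;                    // drop the reference
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal();                           // wake one waiting writer
            return e;
        } finally {
            lock.unlock();
        }
    }
}

class SimpleArrayQueueDemo {
    // Fill and drain a capacity-2 buffer three times so the indices wrap around.
    static List<Integer> wrapAround() {
        SimpleArrayQueue<Integer> q = new SimpleArrayQueue<>(2, false);
        List<Integer> out = new ArrayList<>();
        try {
            for (int round = 0; round < 3; round++) {
                q.put(2 * round);
                q.put(2 * round + 1);
                out.add(q.take());
                out.add(q.take());
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return out;
    }

    // A reader blocks on the empty buffer until another thread puts a value.
    static Integer blockedTake() {
        SimpleArrayQueue<Integer> q = new SimpleArrayQueue<>(1, true);
        Integer[] got = new Integer[1];
        Thread reader = new Thread(() -> {
            try {
                got[0] = q.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        reader.start();
        try {
            Thread.sleep(20); // usually lets the reader reach await(); not required
            q.put(42);
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return got[0];
    }
}
```

The while loops around await() matter: a woken thread re-checks the condition, which guards against spurious wakeups and against another thread grabbing the slot first.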

When constructing an ArrayBlockingQueue, we can specify the following three parameters:

- The queue capacity, which limits the maximum number of elements the queue can hold;

- Whether the exclusive lock is fair or unfair. An unfair lock gives higher throughput; a fair lock guarantees that the thread that has waited the longest acquires the lock next.

- An optional collection whose elements are added to the queue, in the collection's iteration order, during construction.
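The three parameters map onto the constructor ArrayBlockingQueue(int capacity, boolean fair, Collection&lt;? extends E&gt; c). A short sketch; AbqConstructorDemo is a hypothetical name.

```java
import java.util.Arrays;
import java.util.concurrent.ArrayBlockingQueue;

class AbqConstructorDemo {
    // capacity = 4, fair lock, pre-filled from a collection in its iteration order.
    static ArrayBlockingQueue<String> create() {
        return new ArrayBlockingQueue<>(4, true, Arrays.asList("a", "b", "c"));
    }

    // The initial collection must fit: capacity 2 cannot hold three elements.
    static boolean rejectsOversizedCollection() {
        try {
            new ArrayBlockingQueue<>(2, false, Arrays.asList("a", "b", "c"));
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }
}
```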

I won't go into more detailed source here, because it is just the use of Condition from AbstractQueuedSynchronizer. Interested readers can see my article "Line-by-Line Source Analysis of AbstractQueuedSynchronizer (2)"; if you have read it, the ArrayBlockingQueue code needs no further analysis. Conversely, if you don't understand Condition at all, you will basically not be able to understand the ArrayBlockingQueue source either.

LinkedBlockingQueue: a BlockingQueue Implementation

A blocking queue backed by a singly linked list, usable as either an unbounded or a bounded queue. Look at the constructors:

```java
// The so-called unbounded queue
public LinkedBlockingQueue() {
    this(Integer.MAX_VALUE);
}

// The so-called bounded queue
public LinkedBlockingQueue(int capacity) {
    if (capacity <= 0) throw new IllegalArgumentException();
    this.capacity = capacity;
    last = head = new Node<E>(null);
}
```

Let's see what the properties of this class are:

// Queue capacity

private final int capacity;

// Number of elements in the queue

private final AtomicInteger count = new AtomicInteger(0);

// Team Head

private transient Node<E> head;

// End of Team

private transient Node<E> last;

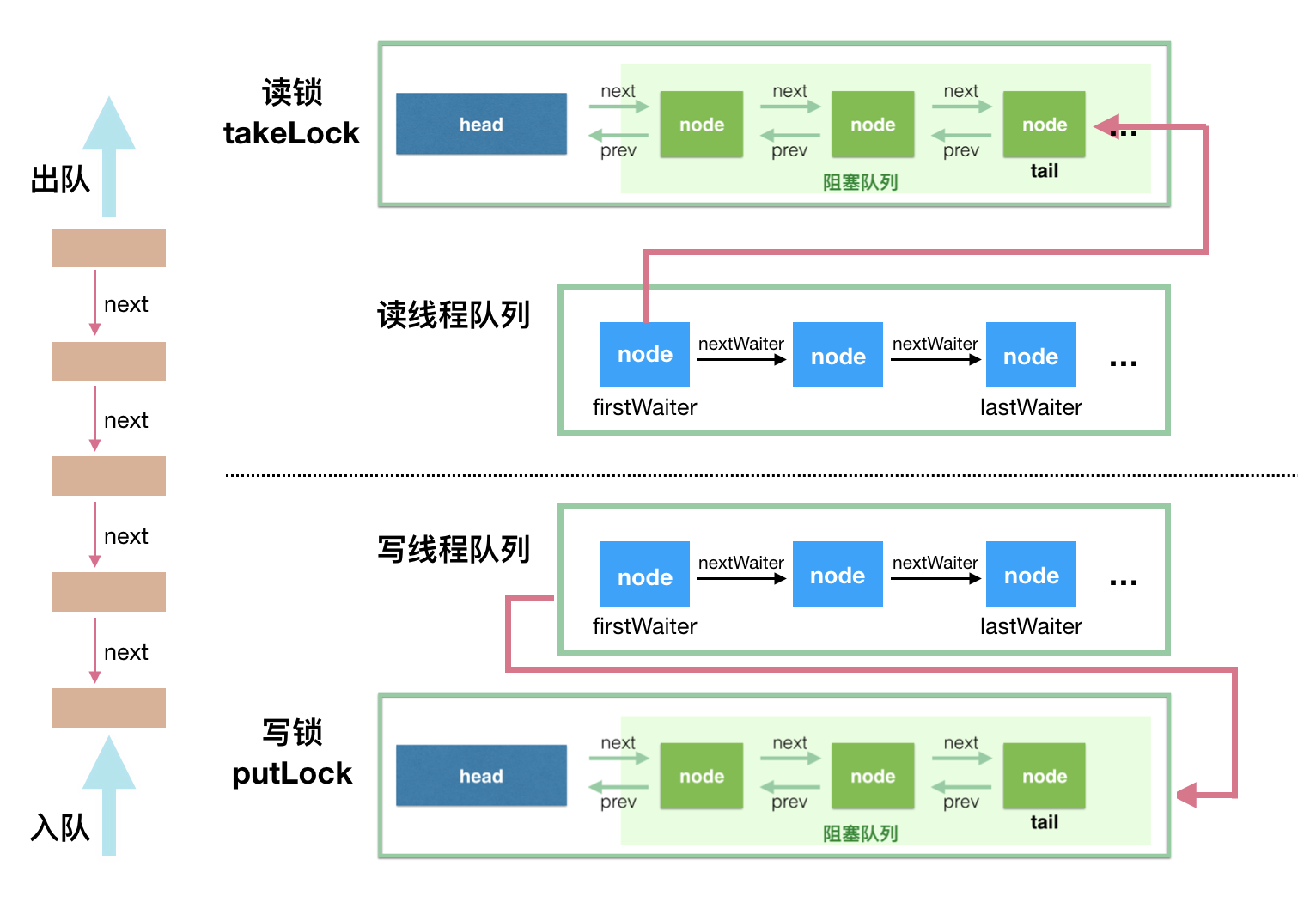

// The take, poll, peek, and other methods of reading operations need to acquire this lock

private final ReentrantLock takeLock = new ReentrantLock();

// If the queue is empty during the read operation, wait for the notEmpty condition

private final Condition notEmpty = takeLock.newCondition();

// Writing methods such as put, offer, etc. need to acquire this lock

private final ReentrantLock putLock = new ReentrantLock();

// If the queue is full while writing, wait for the notFull condition

private final Condition notFull = putLock.newCondition();

Two locks and two Condition s are used here. Here is a brief description:

How does takeLock work with notEmpty: If you want to take an element, you need to acquire a takeLock lock, but not enough. If the queue is empty at this time, you also need the Condition that the queue is not empty.

putLock needs to be paired with notFull: If you want to insert an element, you need to acquire a put Lock lock, but not enough. If the queue is full at this time, you also need the Condition that the queue is not full.

First, here's a diagram to look at the concurrent read-write control for LinkedBlockingQueue, then start analyzing the source code:

Understanding this diagram will make the source code simple. Read operations are queued and write operations are queued. The only concurrency problem is that a write operation and a read operation are performed simultaneously, as long as this is well controlled.

First, the constructor:

```java
public LinkedBlockingQueue(int capacity) {
    if (capacity <= 0) throw new IllegalArgumentException();
    this.capacity = capacity;
    last = head = new Node<E>(null);
}
```

Note that an empty head node is created, so when the first element is enqueued there are two nodes in the list. A read always takes the node after head. count does not include this dummy head node.

Let's see how the put method inserts elements at the end of the queue:

```java
public void put(E e) throws InterruptedException {
    if (e == null) throw new NullPointerException();
    // If -1 looks puzzling, look at offer: there it simply marks success or failure
    int c = -1;
    Node<E> node = new Node<E>(e);
    final ReentrantLock putLock = this.putLock;
    final AtomicInteger count = this.count;
    // putLock must be held to insert
    putLock.lockInterruptibly();
    try {
        // If the queue is full, wait until the notFull condition is met
        while (count.get() == capacity) {
            notFull.await();
        }
        // Enqueue
        enqueue(node);
        // Atomically increment count; c is the value before the increment
        c = count.getAndIncrement();
        // If at least one slot is still free after this insert, call notFull.signal()
        // to wake a waiting writer (the threads waiting on notFull are blocked writers)
        if (c + 1 < capacity)
            notFull.signal();
    } finally {
        // Release putLock after enqueuing
        putLock.unlock();
    }
    // If c == 0, the queue was empty (not counting the dummy head) before this insert,
    // so readers may be waiting on notEmpty: wake one of them
    if (c == 0)
        signalNotEmpty();
}

// Enqueueing is simple: link the new node after the current tail and make it the new tail.
// There is no concurrency issue here, because this only runs while holding the exclusive putLock
private void enqueue(Node<E> node) {
    // assert putLock.isHeldByCurrentThread();
    // assert last.next == null;
    last = last.next = node;
}

// Called after an insert to wake a reader, if necessary.
// takeLock is acquired because signal() requires holding the lock that owns the condition
private void signalNotEmpty() {
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lock();
    try {
        notEmpty.signal();
    } finally {
        takeLock.unlock();
    }
}
```

Let's look at the take method again:

```java
public E take() throws InterruptedException {
    E x;
    int c = -1;
    final AtomicInteger count = this.count;
    final ReentrantLock takeLock = this.takeLock;
    // First acquire takeLock before dequeuing
    takeLock.lockInterruptibly();
    try {
        // If the queue is empty, wait until notEmpty is met
        while (count.get() == 0) {
            notEmpty.await();
        }
        // Dequeue
        x = dequeue();
        // Atomically decrement count; c is the value before the decrement
        c = count.getAndDecrement();
        // If at least one element remains after this take, call notEmpty.signal()
        // to wake another reader
        if (c > 1)
            notEmpty.signal();
    } finally {
        // Release takeLock after dequeuing
        takeLock.unlock();
    }
    // If c == capacity, the queue was full just before this take.
    // Now that one element is gone, the queue is no longer full: wake a writer
    if (c == capacity)
        signalNotFull();
    return x;
}

// Remove the element at the head of the queue
private E dequeue() {
    // assert takeLock.isHeldByCurrentThread();
    // assert head.item == null;
    // As mentioned earlier, the head node is a dummy
    Node<E> h = head;
    Node<E> first = h.next;
    h.next = h; // help GC
    // first becomes the new (dummy) head
    head = first;
    E x = first.item;
    first.item = null;
    return x;
}

// Called after a take to wake a writer, if necessary
private void signalNotFull() {
    final ReentrantLock putLock = this.putLock;
    putLock.lock();
    try {
        notFull.signal();
    } finally {
        putLock.unlock();
    }
}
```

That's the end of the source analysis. The code is relatively simple; careful reading is basically all it takes to understand it.
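Putting the pieces together, here is a stripped-down, hypothetical two-lock queue (TwoLockQueue) that follows the structure analyzed above: a dummy head node, putLock guarding the tail, takeLock guarding the head, and an AtomicInteger count shared by both sides. It is a teaching sketch that leaves out offer, poll, timeouts, and removal of arbitrary elements.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class TwoLockQueue<E> {
    static final class Node<E> {
        E item;
        Node<E> next;
        Node(E item) { this.item = item; }
    }

    private final int capacity;
    private final AtomicInteger count = new AtomicInteger();
    private Node<E> head; // dummy node: head.item is always null (guarded by takeLock)
    private Node<E> last; // tail node (guarded by putLock)

    private final ReentrantLock takeLock = new ReentrantLock();
    private final Condition notEmpty = takeLock.newCondition();
    private final ReentrantLock putLock = new ReentrantLock();
    private final Condition notFull = putLock.newCondition();

    TwoLockQueue(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException();
        this.capacity = capacity;
        last = head = new Node<E>(null);
    }

    void put(E e) throws InterruptedException {
        if (e == null) throw new NullPointerException();
        int c;
        putLock.lockInterruptibly();
        try {
            while (count.get() == capacity) notFull.await();
            last = last.next = new Node<E>(e);
            c = count.getAndIncrement();            // value before the increment
            if (c + 1 < capacity) notFull.signal(); // still room: wake another writer
        } finally {
            putLock.unlock();
        }
        if (c == 0) {                               // was empty: a reader may be waiting
            takeLock.lock();
            try { notEmpty.signal(); } finally { takeLock.unlock(); }
        }
    }

    E take() throws InterruptedException {
        E x;
        int c;
        takeLock.lockInterruptibly();
        try {
            while (count.get() == 0) notEmpty.await();
            Node<E> first = head.next;              // first real element
            head.next = head;                       // help GC, as in dequeue()
            head = first;                           // first becomes the new dummy
            x = first.item;
            first.item = null;
            c = count.getAndDecrement();            // value before the decrement
            if (c > 1) notEmpty.signal();           // more left: wake another reader
        } finally {
            takeLock.unlock();
        }
        if (c == capacity) {                        // was full: a writer may be waiting
            putLock.lock();
            try { notFull.signal(); } finally { putLock.unlock(); }
        }
        return x;
    }
}

class TwoLockQueueDemo {
    // One producer pushes 1..n through a capacity-2 queue while the caller takes them.
    static long sumThrough(int n) {
        TwoLockQueue<Integer> q = new TwoLockQueue<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) q.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        long sum = 0;
        try {
            for (int i = 1; i <= n; i++) sum += q.take();
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum;
    }
}
```

Because put and take hold different locks, a producer and a consumer can make progress at the same time. The Node fields are plain (non-volatile) fields here; the sketch relies on the volatile read and write of count to make a newly linked node visible to the reader.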

SynchronousQueue: a BlockingQueue Implementation

SynchronousQueue is a special queue whose name says it all: it is a synchronous queue. Why synchronous? Not because of multithreaded concurrency, but because when a thread writes an element to the queue, the write does not return immediately; it must wait for another thread to take the element. Likewise, a read must wait for a matching write from another thread. Synchronous here means readers and writers rendezvous: each read is matched with exactly one write.

We rarely use SynchronousQueue directly, but it serves as the work queue of the thread pool returned by Executors.newCachedThreadPool() (a ThreadPoolExecutor); interested readers can look into that later.

Although I say "queue", SynchronousQueue's queue is virtual: it provides no capacity at all to store elements. Data must be handed directly from a writing thread to a reading thread; it is never left in the queue waiting to be consumed.

You cannot use peek with SynchronousQueue (it simply returns null): peek means read without removing, which clearly does not fit SynchronousQueue's semantics. SynchronousQueue cannot be iterated either, because there are no elements to iterate over. Although it indirectly implements Collection, used as a Collection it is always empty. And of course it does not accept null (the concurrent collections in java.util.concurrent generally reject null, because null is used for other purposes, such as a return value indicating a failed operation).

Next, let's look at the actual source code. It is not so simple, so we need to understand its design first.
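These properties can be checked directly from the public API. A sketch; SynchronousQueueDemo and its method names are hypothetical, and the 5-second poll timeout is arbitrary.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

class SynchronousQueueDemo {
    // With no consumer waiting, a non-blocking offer() has nobody to hand off to.
    static boolean offerWithoutTaker() {
        return new SynchronousQueue<String>().offer("x"); // false
    }

    // peek() returns null, size() is 0, and iteration sees nothing.
    static boolean looksEmpty() {
        SynchronousQueue<String> q = new SynchronousQueue<>();
        return q.peek() == null && q.size() == 0 && q.isEmpty() && !q.iterator().hasNext();
    }

    // A handoff succeeds once a consumer is waiting in poll().
    static String handOff() {
        SynchronousQueue<String> q = new SynchronousQueue<>();
        String[] got = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                got[0] = q.poll(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            q.put("hello"); // blocks until the consumer takes it
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return got[0];
    }
}
```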

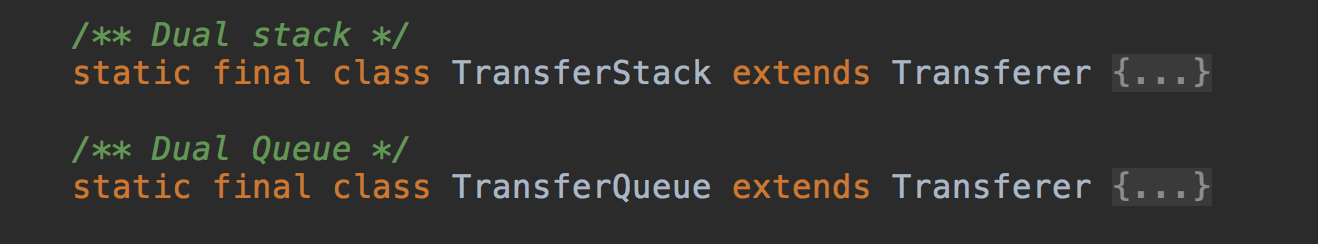

The source, including comments, is about 1,200 lines. Let's look at the overall structure first:

```java
// The constructor lets us choose fair or unfair mode, and the two behave differently
public SynchronousQueue(boolean fair) {
    transferer = fair ? new TransferQueue() : new TransferStack();
}

abstract static class Transferer {
    // As the name suggests, this method transfers an element from a producer to a consumer,
    // or, when called by a consumer, takes an element from a producer.
    // If the first parameter e is non-null, the caller is a producer handing e to a consumer.
    // If it is null, the caller is a consumer waiting for a producer, and the return value
    // is the element supplied by the matching producer.
    // The second parameter says whether to time out; if so, the third is the timeout in nanoseconds.
    // A null return means a timeout or an interrupt; check the interrupt status to tell which.
    abstract Object transfer(Object e, boolean timed, long nanos);
}
```

Transferer has two implementations, because we can choose a fairness policy when constructing a SynchronousQueue. Fair mode means all read and write threads are served FIFO, which corresponds to TransferQueue; unfair mode corresponds to TransferStack.

SynchronousQueue uses the queue TransferQueue for the fair policy and the stack TransferStack for the unfair policy. Both are linked lists, made of QNode and SNode nodes respectively. TransferQueue and TransferStack play central roles: SynchronousQueue's put and take operations delegate to them.

Let's first analyze the source in fair mode, then discuss the differences between fair and unfair modes.

Next, let's look at the put and take methods:

```java
// Write a value
public void put(E o) throws InterruptedException {
    if (o == null) throw new NullPointerException();
    if (transferer.transfer(o, false, 0) == null) { // 1
        Thread.interrupted();
        throw new InterruptedException();
    }
}

// Read and remove a value
public E take() throws InterruptedException {
    Object e = transferer.transfer(null, false, 0); // 2
    if (e != null)
        return (E)e;
    Thread.interrupted();
    throw new InterruptedException();
}
```

Both the write put(E o) and the read take() call Transferer.transfer(...); the only difference is whether the first argument is null.

Let's look at the design of transfer. Its basic algorithm is:

- When the method is called, if the queue is empty, or the nodes in the queue have the same mode as the current operation (for example, the current operation is a put and the queued nodes are also writers), append the current thread to the wait queue.

- If the queue holds waiting nodes of the complementary mode (for example, the queue is full of readers and the current thread is a writer, or vice versa), match the node at the head of the wait queue, dequeue it, and return the corresponding data.

There is an implicit invariant here: if the queue is not empty, all its nodes have the same mode, either all reads or all writes. Which one depends on whether reads or writes are backed up.

Think of a matchmaking scenario: a man arrives; if nobody is there, he waits; if a group of men is already waiting, he joins the end of the line; if a group of women is waiting, he leaves directly with the woman at the head of the line.
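The matching rule can be modeled single-threaded to see the bookkeeping on its own. This is a hypothetical toy (MatchingModel, Op), not the JDK algorithm: the real TransferQueue performs these steps lock-free with CAS and parks the waiting threads, whereas here a plain ArrayDeque stands in for the wait queue.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

class MatchingModel {
    static final class Op {
        final String who;
        final String data; // non-null for a write (put), null for a read (take)

        Op(String who, String data) {
            this.who = who;
            this.data = data;
        }

        boolean isData() {
            return data != null;
        }
    }

    private final Deque<Op> waiting = new ArrayDeque<>();
    final List<String> log = new ArrayList<>();

    void arrive(Op op) {
        Op tail = waiting.peekLast();
        if (tail == null || tail.isData() == op.isData()) {
            // Empty or same mode: join the end of the line and wait.
            waiting.addLast(op);
            log.add(op.who + " waits");
        } else {
            // Complementary mode: match the longest-waiting node (FIFO, as in fair mode).
            Op head = waiting.pollFirst();
            String item = op.isData() ? op.data : head.data;
            log.add(op.who + " matches " + head.who + " with " + item);
        }
    }

    static List<String> demo() {
        MatchingModel m = new MatchingModel();
        m.arrive(new Op("put1", "A"));
        m.arrive(new Op("put2", "B"));
        m.arrive(new Op("take1", null));
        m.arrive(new Op("take2", null));
        m.arrive(new Op("take3", null));
        return m.log;
    }
}
```

In demo(), the two puts arrive first and wait; the next two takes each match the longest-waiting put, and the fifth operation finds the line empty and becomes a waiter itself. Like the real code, the model checks the tail's mode, which works because of the same-mode invariant.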

Since we're talking about the wait queue, let's first look at its node type, QNode:

```java
static final class QNode {
    volatile QNode next;    // next node: the wait queue is a singly linked list
    volatile Object item;   // CAS'ed to or from null
    volatile Thread waiter; // the waiting thread, kept for park/unpark
    final boolean isData;   // true for a writer (put) node, false for a reader (take) node

    QNode(Object item, boolean isData) {
        this.item = item;
        this.isData = isData;
    }
    // ......
```

With all this in mind, the transfer method is much easier to read.

```java
/**
 * Puts or takes an item.
 */
Object transfer(Object e, boolean timed, long nanos) {
    QNode s = null; // constructed/reused as needed
    boolean isData = (e != null);
    for (;;) {
        QNode t = tail;
        QNode h = head;
        if (t == null || h == null)         // saw uninitialized value
            continue;                       // spin

        // The queue is empty, or its nodes have the same mode as the current operation.
        // This is the first case above: the current node simply joins the queue.
        // Keep in mind that everything inside this if is about enqueuing.
        if (h == t || t.isData == isData) { // empty or same-mode
            QNode tn = t.next;
            // t != tail: another thread has just enqueued a node; re-read and retry
            if (t != tail)                  // inconsistent read
                continue;
            // Another node has been linked in, but tail still points to the old node
            if (tn != null) {               // lagging tail
                // CAS tail from t to tn, if tail is still t
                advanceTail(t, tn);
                continue;
            }
            if (timed && nanos <= 0)        // can't wait
                return null;
            if (s == null)
                s = new QNode(e, isData);
            // Link the current node after tail
            if (!t.casNext(null, s))        // failed to link in
                continue;
            // Make the current node the new tail
            advanceTail(t, s);              // swing tail and wait
            // Read awaitFulfill below first, then come back here
            Object x = awaitFulfill(s, e, timed, nanos);
            // Here the enqueued thread has been matched, or cancelled (interrupt or timeout)
            if (x == s) {                   // wait was cancelled
                clean(t, s);
                return null;
            }
            if (!s.isOffList()) {           // not already unlinked
                advanceHead(t, s);          // unlink if head
                if (x != null)              // and forget fields
                    s.item = s;
                s.waiter = null;
            }
            return (x != null) ? x : e;

        // This else branch is the second case above: a complementary read or write is waiting
        } else {                            // complementary-mode
            QNode m = h.next;               // node to fulfill
            if (t != tail || m == null || h != head)
                continue;                   // inconsistent read
            Object x = m.item;
            if (isData == (x != null) ||    // m already fulfilled
                x == m ||                   // m cancelled
                !m.casItem(x, e)) {         // lost CAS
                advanceHead(h, m);          // dequeue and retry
                continue;
            }
            advanceHead(h, m);              // successfully fulfilled
            LockSupport.unpark(m.waiter);
            return (x != null) ? x : e;
        }
    }
}

void advanceTail(QNode t, QNode nt) {
    if (tail == t)
        UNSAFE.compareAndSwapObject(this, tailOffset, t, nt);
}

// Spins or blocks until node s is fulfilled, cancelled, or times out, then returns
Object awaitFulfill(QNode s, Object e, boolean timed, long nanos) {
    long lastTime = timed ? System.nanoTime() : 0;
    Thread w = Thread.currentThread();
    // Decide how many times to spin: only the node at the front of the queue spins
    int spins = ((head.next == s) ?
                 (timed ? maxTimedSpins : maxUntimedSpins) : 0);
    for (;;) {
        // If interrupted, cancel this node
        if (w.isInterrupted())
            // i.e. CAS the item of node s from e to s itself
            s.tryCancel(e);
        Object x = s.item;
        // This is the only exit of this method
        if (x != e)
            return x;
        // If timed, check for timeout
        if (timed) {
            long now = System.nanoTime();
            nanos -= now - lastTime;
            lastTime = now;
            if (nanos <= 0) {
                s.tryCancel(e);
                continue;
            }
        }
        if (spins > 0)
            --spins;
        // Spinning is exhausted: record the waiting thread so a fulfiller can unpark it
        else if (s.waiter == null)
            s.waiter = w;
        // Untimed: park until woken
        else if (!timed)
            LockSupport.park(this);
        // spinForTimeoutThreshold was covered in the AQS article: when less time than this
        // remains, the thread does not park, because spinning performs better
        else if (nanos > spinForTimeoutThreshold)
            LockSupport.parkNanos(this, nanos);
    }
}
```

Doug Lea's cleverness shows in how he merges these cases into compact code. Of course, that also makes it harder to read: you have to think carefully through every possible case.
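The spin-then-park idea in awaitFulfill can be isolated in a few lines. The sketch below is a hypothetical one-shot slot (SpinThenParkSlot), not JDK code; it keeps the same order of steps (spin, then publish the waiter, then park) but leaves out cancellation, interrupts, and timeouts, and MAX_SPINS is an arbitrary value.

```java
import java.util.concurrent.locks.LockSupport;

// Hypothetical one-shot slot: one thread waits for a value, another supplies it.
class SpinThenParkSlot<T> {
    private static final int MAX_SPINS = 64; // arbitrary illustration value
    private volatile T value;
    private volatile Thread waiter;

    T await() {
        int spins = MAX_SPINS;
        for (;;) {
            T v = value;
            if (v != null)
                return v;                        // the only exit, as in awaitFulfill
            if (spins > 0)
                --spins;                         // phase 1: cheap busy-wait
            else if (waiter == null)
                waiter = Thread.currentThread(); // phase 2: publish, then re-check value
            else
                LockSupport.park(this);          // phase 3: sleep until unparked
        }
    }

    void fulfill(T v) {
        value = v;                               // publish the value first...
        Thread w = waiter;
        if (w != null)
            LockSupport.unpark(w);               // ...then wake the waiter if registered
    }
}

class SpinThenParkDemo {
    static String roundTrip() {
        SpinThenParkSlot<String> slot = new SpinThenParkSlot<>();
        String[] got = new String[1];
        Thread t = new Thread(() -> got[0] = slot.await());
        t.start();
        try {
            Thread.sleep(50); // usually long enough for the waiter to park; not required
            slot.fulfill("done");
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return got[0];
    }
}
```

Publishing the waiter before parking, and having the fulfiller write the value before reading the waiter, is what prevents a lost wake-up: either the fulfiller sees the waiter and unparks it, or the waiter's next re-check sees the value. LockSupport.park may also return spuriously, which the loop tolerates.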

Next, let's talk about the differences between fair and unfair modes.

I trust you now have a picture of how fair mode works, so I'll just describe the TransferStack algorithm briefly without analyzing its source.

- When the method is called, if the stack is empty, or the node at the top has the same mode as the current operation (for example, the current operation is a put and the nodes in the stack are also writers), push the current thread onto the stack and wait to be matched. Then return the matched element, or null if cancelled.

- If the stack holds waiting nodes of the complementary mode (for example, the stack is full of readers and the current thread is a writer, or vice versa), push the current node onto the stack, match it with the waiting node below it, and then pop both nodes. Strictly speaking, the matching and popping are not essential at this step, because the next rule does the same thing.

- If the top of the stack is a node that is already being matched, help it finish matching and popping, then continue.

The TransferStack source is more complex than TransferQueue's, so interested readers can read it themselves.
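The fairness choice is visible from the public API. In this sketch (FairHandoffDemo is a hypothetical name), two producers are parked in put() in a known order, and a fair SynchronousQueue hands the consumer the longest-waiting producer's element. The Javadoc promises FIFO only for fair mode; the unfair order is unspecified (the stack-based implementation tends to favor the most recent waiter), so the sketch does not rely on it. Polling Thread.getState() to detect a parked producer is fine for a demo but is not a synchronization technique for production code.

```java
import java.util.concurrent.SynchronousQueue;

class FairHandoffDemo {
    // Starts a producer that blocks in put() and waits until it is parked.
    static Thread startParkedProducer(SynchronousQueue<String> q, String item) {
        Thread t = new Thread(() -> {
            try {
                q.put(item);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        t.start();
        // In this demo, WAITING can only come from the park inside put(),
        // which happens after the producer's node is enqueued.
        while (t.getState() != Thread.State.WAITING && t.isAlive()) {
            Thread.yield();
        }
        return t;
    }

    // In fair mode, the producer that has waited longest is matched first.
    static String firstTaken() {
        SynchronousQueue<String> q = new SynchronousQueue<>(true);
        Thread p1 = startParkedProducer(q, "first");
        Thread p2 = startParkedProducer(q, "second");
        try {
            String taken = q.take();
            q.take(); // release the remaining producer
            p1.join();
            p2.join();
            return taken;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }
}
```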

PriorityBlockingQueue: a BlockingQueue Implementation

A BlockingQueue implementation with ordering. It uses a ReentrantLock for concurrency control and is unbounded (ArrayBlockingQueue is bounded, and LinkedBlockingQueue can be given a maximum capacity through its constructor, but for PriorityBlockingQueue you can only specify the initial capacity; when more elements are inserted and space runs out, it grows automatically).

Simply put, it is a thread-safe version of PriorityQueue. It rejects null, and the elements inserted must be comparable; otherwise a ClassCastException is thrown. Its insert method put never blocks, because the queue is unbounded (take blocks when the queue is empty).
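A short sketch of ordering and element requirements; PriorityDemo is a hypothetical name. poll() is used to drain, so the loop stops once the queue is empty instead of blocking as take() would.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

class PriorityDemo {
    // Removes everything with poll() and records the order.
    static List<Integer> pollAll(PriorityBlockingQueue<Integer> q) {
        List<Integer> out = new ArrayList<>();
        Integer x;
        while ((x = q.poll()) != null) {
            out.add(x);
        }
        return out;
    }

    // Natural ordering: the smallest element comes out first.
    static List<Integer> natural() {
        PriorityBlockingQueue<Integer> q = new PriorityBlockingQueue<>();
        q.put(5); // put never blocks: the queue is unbounded
        q.put(1);
        q.put(3);
        return pollAll(q);
    }

    // A Comparator overrides natural ordering; here the largest comes out first.
    static List<Integer> reversed() {
        PriorityBlockingQueue<Integer> q =
                new PriorityBlockingQueue<>(11, Comparator.<Integer>reverseOrder());
        q.put(5);
        q.put(1);
        q.put(3);
        return pollAll(q);
    }

    // Without a Comparator, elements must be Comparable.
    static boolean rejectsNonComparable() {
        PriorityBlockingQueue<Object> q = new PriorityBlockingQueue<>();
        try {
            q.put(new Object());
            return false;
        } catch (ClassCastException e) {
            return true;
        }
    }
}
```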

Its source is relatively simple; this section covers its core parts.

Let's see what fields it has:

```java
// Default capacity when the constructor does not specify one: 11
private static final int DEFAULT_INITIAL_CAPACITY = 11;
// Maximum array size
private static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;
// The array that holds the elements (a binary heap)
private transient Object[] queue;
// Current number of elements
private transient int size;
// Comparator; null means natural ordering
private transient Comparator<? super E> comparator;
// Lock for concurrency control: every public method that touches shared state acquires it first
private final ReentrantLock lock;
// Condition on which take() waits while the queue is empty; created from lock above
private final Condition notEmpty;
// Spin lock for array growth, acquired via CAS before allocating a larger array
private transient volatile int allocationSpinLock;
// Used only for serialization and deserialization; we rarely need to serialize a PriorityBlockingQueue
private PriorityQueue<E> q;
```

This class and its iterator implement all of the optional methods of the Collection and Iterator interfaces. However, iteration does not guarantee any particular order. For ordered traversal, Arrays.sort(queue.toArray()) is recommended. PriorityBlockingQueue also provides drainTo, which moves some or all of its elements into another collection in priority order. More precisely, it transfers them: the elements are removed from the original queue. Also note that if two objects have the same priority (compare returns 0), this queue does not guarantee their relative order.
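The two ordered-traversal options can be compared side by side. The sketch below uses a hypothetical helper class, PbqOrderedTraversal, and assumes Integer elements. Sorting a toArray() snapshot leaves the queue untouched, while drainTo empties it in priority order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical helper class, for illustration only.
class PbqOrderedTraversal {
    // Ordered view without modifying the queue: copy with toArray(), then sort the copy.
    static Object[] sortedSnapshot(PriorityBlockingQueue<Integer> q) {
        Object[] copy = q.toArray();
        Arrays.sort(copy); // Integer elements, so natural ordering applies
        return copy;
    }

    // Ordered removal: drainTo moves every element, in priority order, into the target list.
    static List<Integer> drainInOrder(PriorityBlockingQueue<Integer> q) {
        List<Integer> target = new ArrayList<>();
        q.drainTo(target);
        return target;
    }
}
```

Iterating the queue directly (for example with a for-each loop) would instead visit the elements in array (heap) order, which is generally not sorted.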

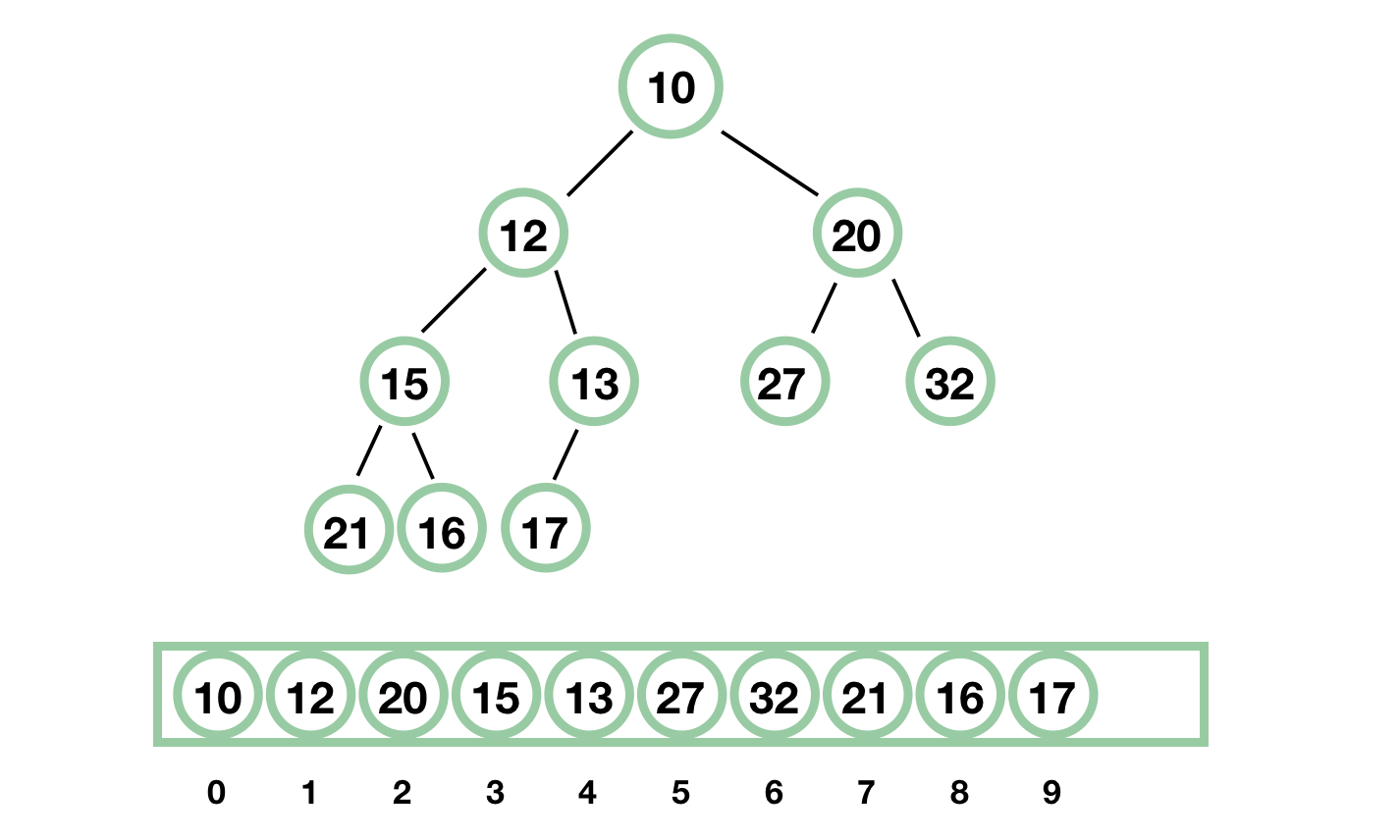

PriorityBlockingQueue uses an array-based binary heap to store elements, and all public methods use the same lock for concurrency control.

Binary heap: a complete binary tree, which is well suited to storage in an array. For element a[i]:

- its left child is a[2i+1];
- its right child is a[2i+2];
- its parent is a[(i-1)/2].

The heap-order property (for a min-heap) is that every node's value is no greater than the values of its left and right children. The minimum value is therefore at the root. Deleting the root is more involved, because the tree must be re-adjusted afterwards.

The diagram gives a simple picture of a binary heap without much jargon. The advantage of this data structure is obvious: the smallest element is always the root. It is a complete tree. Every level except the last is full, and the nodes on the last level are packed tightly from left to right.
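The index formulas above can be written down directly. The sketch below uses a hypothetical class, HeapIndex, and works on a plain int[] for simplicity. It also includes a check of the min-heap property, which is handy when experimenting with the sift operations that follow.

```java
// Hypothetical helper class, for illustration only.
class HeapIndex {
    // Index arithmetic for a 0-based array heap.
    static int left(int i)   { return 2 * i + 1; }
    static int right(int i)  { return 2 * i + 2; }
    static int parent(int i) { return (i - 1) >>> 1; } // the same shift PriorityBlockingQueue uses

    // Checks the min-heap property: every parent is <= each of its children.
    static boolean isMinHeap(int[] a, int size) {
        for (int i = 1; i < size; i++) {
            if (a[parent(i)] > a[i]) {
                return false;
            }
        }
        return true;
    }
}
```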

To start the source analysis of PriorityBlockingQueue, let's first look at its constructors:

    // Default constructor: uses the default initial capacity (11)
    public PriorityBlockingQueue() {
        this(DEFAULT_INITIAL_CAPACITY, null);
    }

    // Specifies the initial capacity of the array
    public PriorityBlockingQueue(int initialCapacity) {
        this(initialCapacity, null);
    }

    // Specifies the initial capacity and a Comparator
    public PriorityBlockingQueue(int initialCapacity,
                                 Comparator<? super E> comparator) {
        if (initialCapacity < 1)
            throw new IllegalArgumentException();
        this.lock = new ReentrantLock();
        this.notEmpty = lock.newCondition();
        this.comparator = comparator;
        this.queue = new Object[initialCapacity];
    }

    // Initializes the queue with the elements of the given collection
    public PriorityBlockingQueue(Collection<? extends E> c) {
        this.lock = new ReentrantLock();
        this.notEmpty = lock.newCondition();
        boolean heapify = true; // true if not known to be in heap order
        boolean screen = true;  // true if must screen for nulls
        if (c instanceof SortedSet<?>) {
            // A SortedSet is already sorted, and a sorted array is already in heap order
            SortedSet<? extends E> ss = (SortedSet<? extends E>) c;
            this.comparator = (Comparator<? super E>) ss.comparator();
            heapify = false;
        }
        else if (c instanceof PriorityBlockingQueue<?>) {
            // Another PriorityBlockingQueue cannot contain nulls
            PriorityBlockingQueue<? extends E> pq =
                (PriorityBlockingQueue<? extends E>) c;
            this.comparator = (Comparator<? super E>) pq.comparator();
            screen = false;
            if (pq.getClass() == PriorityBlockingQueue.class) // exact match
                heapify = false;
        }
        Object[] a = c.toArray();
        int n = a.length;
        // If c.toArray incorrectly doesn't return Object[], copy it.
        if (a.getClass() != Object[].class)
            a = Arrays.copyOf(a, n, Object[].class);
        if (screen && (n == 1 || this.comparator != null)) {
            for (int i = 0; i < n; ++i)
                if (a[i] == null)
                    throw new NullPointerException();
        }
        this.queue = a;
        this.size = n;
        if (heapify)
            heapify();
    }

Next, let's look at how it grows its array automatically:

    private void tryGrow(Object[] array, int oldCap) {
        // Release the main lock first
        lock.unlock(); // must release and then re-acquire main lock
        Object[] newArray = null;
        // Acquire the allocation spin lock by CASing allocationSpinLock from 0 to 1
        if (allocationSpinLock == 0 &&
            UNSAFE.compareAndSwapInt(this, allocationSpinLockOffset,
                                     0, 1)) {
            try {
                // If oldCap < 64, grow by oldCap + 2 (roughly doubling);
                // if oldCap >= 64, grow by half of oldCap (50%).
                // So small arrays grow faster
                int newCap = oldCap + ((oldCap < 64) ?
                                       (oldCap + 2) :
                                       (oldCap >> 1));
                // The new capacity may overflow
                if (newCap - MAX_ARRAY_SIZE > 0) {    // possible overflow
                    int minCap = oldCap + 1;
                    if (minCap < 0 || minCap > MAX_ARRAY_SIZE)
                        throw new OutOfMemoryError();
                    newCap = MAX_ARRAY_SIZE;
                }
                // If queue != array, another thread has already replaced the array
                if (newCap > oldCap && queue == array)
                    // Allocate the new, larger array
                    newArray = new Object[newCap];
            } finally {
                // Reset to 0, i.e. release the spin lock
                allocationSpinLock = 0;
            }
        }
        // Another thread is growing the array: back off
        if (newArray == null) // back off if another thread is allocating
            Thread.yield();
        // Re-acquire the main lock
        lock.lock();
        // Install the new array and copy the old elements into it
        if (newArray != null && queue == array) {
            queue = newArray;
            System.arraycopy(array, 0, newArray, 0, oldCap);
        }
    }

The grow method is also clever about concurrency. It releases the main exclusive lock while the new array is being allocated, so that growing and other operations (such as reads) can proceed at the same time, which improves throughput.
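The growth rule in tryGrow() is easy to tabulate. The sketch below uses a hypothetical class, GrowthPolicy. It copies only the newCap expression and leaves out the overflow check and all locking. Starting from the default capacity of 11, it yields 24, 50, 102, 153, 229, and so on.

```java
// Hypothetical helper class, for illustration only.
class GrowthPolicy {
    // Same expression as newCap in tryGrow(): small arrays grow by oldCap + 2,
    // arrays of 64 or more grow by 50%. The MAX_ARRAY_SIZE overflow handling is omitted.
    static int nextCapacity(int oldCap) {
        return oldCap + ((oldCap < 64) ? (oldCap + 2) : (oldCap >> 1));
    }

    // Returns the initial capacity followed by the capacities after each of `steps` expansions.
    static int[] capacities(int initial, int steps) {
        int[] caps = new int[steps + 1];
        caps[0] = initial;
        for (int i = 1; i <= steps; i++) {
            caps[i] = nextCapacity(caps[i - 1]);
        }
        return caps;
    }
}
```

capacities(11, 5) returns [11, 24, 50, 102, 153, 229]. The switch from roughly doubling to +50% happens at 102, the first capacity of 64 or more.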

Next, let's analyze the write operation put and the read operation take.

    public void put(E e) {
        // Simply calls offer(): as mentioned above, put never blocks here
        offer(e);
    }

    public boolean offer(E e) {
        if (e == null)
            throw new NullPointerException();
        final ReentrantLock lock = this.lock;
        // Acquire the exclusive lock first
        lock.lock();
        int n, cap;
        Object[] array;
        // If the number of elements >= the array length, the array must grow
        while ((n = size) >= (cap = (array = queue).length))
            tryGrow(array, cap);
        try {
            Comparator<? super E> cmp = comparator;
            // Add the node to the binary heap
            if (cmp == null)
                siftUpComparable(n, e, array);
            else
                siftUpUsingComparator(n, e, array, cmp);
            // Update size
            size = n + 1;
            // Wake up a waiting reader (take) thread
            notEmpty.signal();
        } finally {
            lock.unlock();
        }
        return true;
    }

For a binary heap, inserting a node is simple. Place the new node at the end of the array. If it is smaller than its parent, swap them, and keep comparing with the new parent until the parent is not larger or the root is reached.

    // Inserts x at position k of array, then restores heap order by moving it up
    private static <T> void siftUpComparable(int k, T x, Object[] array) {
        Comparable<? super T> key = (Comparable<? super T>) x;
        while (k > 0) {
            // Index of the parent of a[k] in the binary heap
            int parent = (k - 1) >>> 1;
            Object e = array[parent];
            // Stop once the parent is not larger than key
            if (key.compareTo((T) e) >= 0)
                break;
            // Move the parent down into the hole
            array[k] = e;
            k = parent;
        }
        array[k] = key;
    }

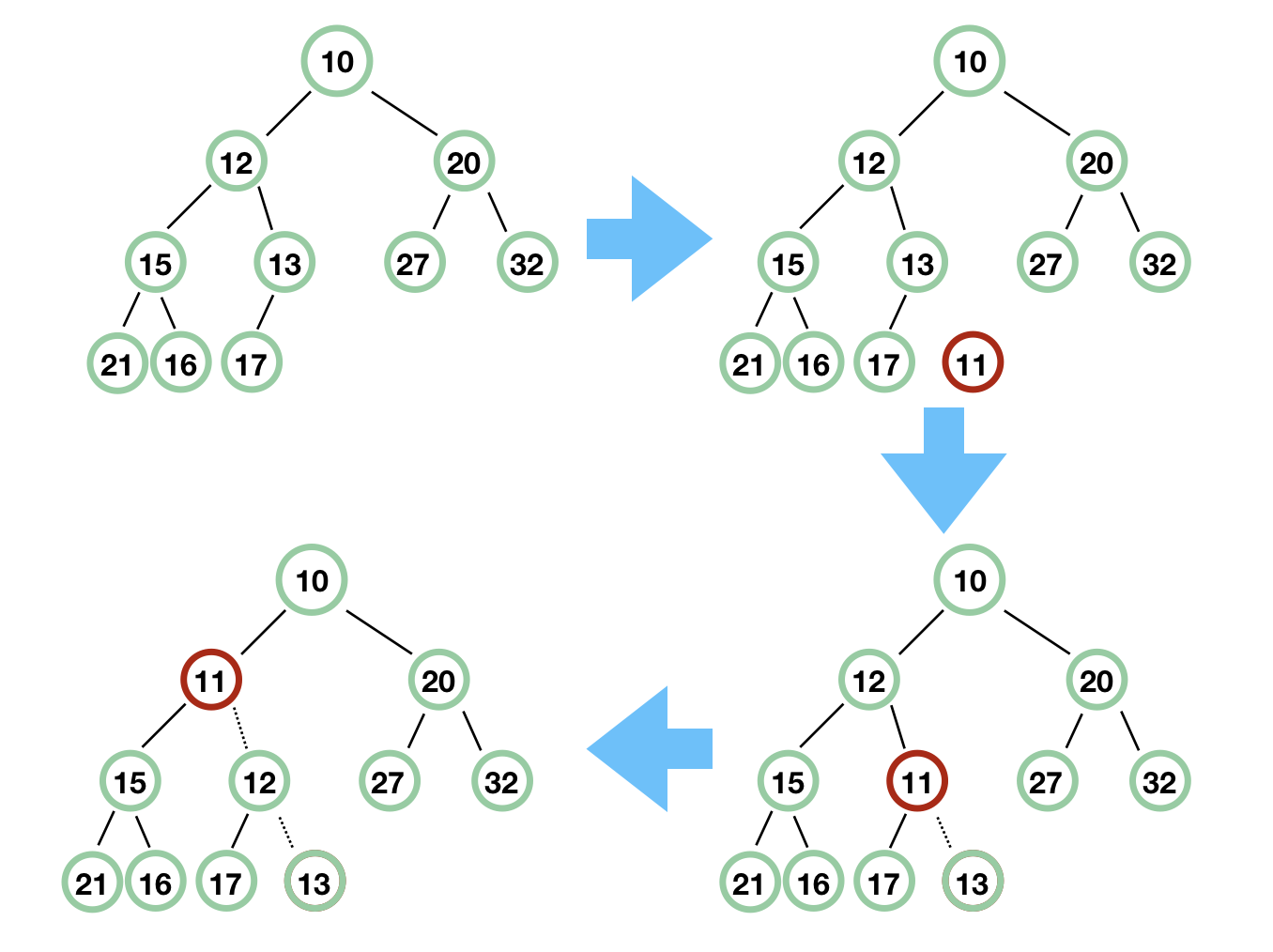

The diagram illustrates inserting 11 into the queue, to show how siftUp works.
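To experiment with sift-up outside the queue, here is the same loop as siftUpComparable transplanted onto a primitive int[]. The class IntSiftUp is hypothetical and uses no locking and no Comparable. The heap in the usage note is an arbitrary example, not the one in the diagram.

```java
// Hypothetical helper class, for illustration only.
class IntSiftUp {
    // Places x at index k of heap[] and moves it up while it is smaller than its parent.
    // Like siftUpComparable, parents are shifted down rather than swapped,
    // and x is written once, at its final position.
    static void siftUp(int k, int x, int[] heap) {
        while (k > 0) {
            int parent = (k - 1) >>> 1;
            int e = heap[parent];
            if (x >= e) {
                break; // parent is not larger: heap order holds
            }
            heap[k] = e; // move the parent down into the hole
            k = parent;
        }
        heap[k] = x;
    }

    // Appends x to a heap of `size` elements (the array must have room) and returns the new size.
    static int insert(int[] heap, int size, int x) {
        siftUp(size, x, heap);
        return size + 1;
    }
}
```

Insert 11 into the heap [10, 12, 20, 13, 15, 25, 30]. 13 and then 12 each move down one level, and the result is [10, 11, 20, 12, 15, 25, 30, 13].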

Now let's look at the take method:

    public E take() throws InterruptedException {
        final ReentrantLock lock = this.lock;
        // Acquire the exclusive lock (interruptibly)
        lock.lockInterruptibly();
        E result;
        try {
            // dequeue() removes the head; while the queue is empty, wait on notEmpty
            while ( (result = dequeue()) == null)
                notEmpty.await();
        } finally {
            lock.unlock();
        }
        return result;
    }

    private E dequeue() {
        int n = size - 1;
        if (n < 0)
            return null;
        else {
            Object[] array = queue;
            // The head of the queue, to be returned
            E result = (E) array[0];
            // Take out the last element; it will be sifted down from the root
            E x = (E) array[n];
            // Clear the last slot
            array[n] = null;
            Comparator<? super E> cmp = comparator;
            if (cmp == null)
                siftDownComparable(0, x, array, n);
            else
                siftDownUsingComparator(0, x, array, n, cmp);
            size = n;
            return result;
        }
    }

dequeue() returns the head of the queue and re-adjusts the binary heap. The caller must already hold the exclusive lock.

Dequeueing itself is simple, because the head of the queue is the smallest element, i.e. the first element of the array. The hard part is re-adjusting the tree after the head has been removed.

    private static <T> void siftDownComparable(int k, T x, Object[] array,
                                               int n) {
        if (n > 0) {
            Comparable<? super T> key = (Comparable<? super T>)x;
            // Every index below half is a non-leaf node: the last element is a[n-1],
            // and (for n >= 2) its parent a[(n-2)/2] is a[half-1]. So the loop below
            // only visits nodes that have at least one child.
            // Let's walk through it line by line with the diagram; it is intuitive and simple.
            // Initially k is 0, x is 17, n is 9
            int half = n >>> 1;           // half = 4
            while (k < half) {
                // Start with the left child
                int child = (k << 1) + 1; // child = 1
                Object c = array[child];  // c = 12
                int right = child + 1;    // right = 2
                // If the right child exists and is smaller than the left child, use it instead.
                // Here array[right] = 20, so the condition is not satisfied
                if (right < n &&
                    ((Comparable<? super T>) c).compareTo((T) array[right]) > 0)
                    c = array[child = right];
                // key = 17, c = 12, so key <= c is false and we do not stop here
                if (key.compareTo((T) c) <= 0)
                    break;
                // Move 12 up into the root
                array[k] = c;
                // k becomes 1
                k = child;
                // After one round, the subtree under 12's old position is in the same situation
                // as the whole tree was: its root is empty, so the next rounds work the same way.
            }
            array[k] = key;
        }
    }

Remember that the binary heap is a complete binary tree. When root node 10 is removed, the last element, 17, must find a suitable place, and it does not simply take the root's place. Instead:

1. The smaller of the root's two children (12) slides up into the root, leaving a hole where 12 was.
2. The smaller child of that hole (13) slides up in turn.
3. This repeats until a hole is left where 17 fits, and 17 is put there.
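Likewise, dequeue() plus siftDownComparable can be sketched on an int[]. The class IntSiftDown is hypothetical, and the example heap is made up and differs from the diagram. pollMin mirrors dequeue(): take the root, pull out the last element, and sift it down from index 0.

```java
// Hypothetical helper class, for illustration only.
class IntSiftDown {
    // Removes and returns the minimum of a non-empty min-heap holding `size` elements.
    static int pollMin(int[] heap, int size) {
        int result = heap[0];
        int n = size - 1;   // size after removal
        int x = heap[n];    // last element, to be sifted down from the root
        heap[n] = 0;        // clear the vacated last slot
        siftDown(0, x, heap, n);
        return result;
    }

    // Same loop as siftDownComparable, for int keys.
    static void siftDown(int k, int x, int[] heap, int n) {
        if (n > 0) {
            int half = n >>> 1;              // indices < half are non-leaf nodes
            while (k < half) {
                int child = (k << 1) + 1;    // left child
                int c = heap[child];
                int right = child + 1;
                if (right < n && c > heap[right]) {
                    c = heap[child = right]; // pick the smaller child
                }
                if (x <= c) {
                    break;                   // x fits in the current hole
                }
                heap[k] = c;                 // move the smaller child up into the hole
                k = child;
            }
            heap[k] = x;
        }
    }
}
```

For the heap [10, 12, 20, 13, 15, 25, 30], pollMin returns 10 and leaves [12, 13, 20, 30, 15, 25]. First 12 and then 13 slide up, and 30 lands in the hole at index 3. Calling pollMin repeatedly yields the remaining elements in ascending order.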

I adjusted the tree slightly to make it easier to follow:

Okay, that's it for PriorityBlockingQueue.

DelayQueue

Source: http://cmsblogs.com/ 『chenssy』

DelayQueue is an unbounded blocking queue that supports delayed retrieval of elements. All of its elements are "delayed" elements, and the element at the head of the queue is the one that expires first. If no element has expired yet, nothing can be taken from the head, even though the queue is not empty. In other words, an element can be taken from the queue only after its delay has elapsed.

DelayQueue has two main uses:

- Caching: removing entries from a cache once they have timed out

- Task timeout handling


The keys to DelayQueue's implementation are:

- A ReentrantLock

- A Condition object for blocking and notification

- A priority queue ordered by delay time: PriorityQueue

- A Thread field, leader, used to optimize blocking and notification

ReentrantLock and Condition need no further introduction; they are at the core of every BlockingQueue implementation. PriorityQueue is a queue that orders its elements by priority (see [Dead Java Concurrency] J.U.C Blocking Queues: PriorityBlockingQueue). leader is covered later. Let's start with Delayed, the key to the delay mechanism.

Delayed

The Delayed interface marks objects that should be acted upon after a given delay. It declares one method, long getDelay(TimeUnit unit), which returns the remaining delay associated with the object. Implementations must also define a compareTo method whose ordering is consistent with getDelay.

    public interface Delayed extends Comparable<Delayed> {
        long getDelay(TimeUnit unit);
    }

How is this interface used? As stated above: implement getDelay(), and define compareTo() consistently with it.
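A minimal Delayed implementation might look like the following sketch. DelayedTask and DelayQueueDemo are hypothetical names. The deadline is stored as an absolute System.nanoTime() value, so getDelay() simply reports the time remaining, and compareTo() orders by that remaining time, as the contract requires.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical element type: a named task that becomes available after a fixed delay.
class DelayedTask implements Delayed {
    final String name;
    private final long expireAtNanos; // absolute deadline on the System.nanoTime() clock

    DelayedTask(String name, long delay, TimeUnit unit) {
        this.name = name;
        this.expireAtNanos = System.nanoTime() + unit.toNanos(delay);
    }

    @Override
    public long getDelay(TimeUnit unit) {
        // Remaining time; zero or negative means the element has expired.
        return unit.convert(expireAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) {
        // Ordering consistent with getDelay(): the earliest deadline comes first.
        return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
    }
}

// Hypothetical demo class, for illustration only.
class DelayQueueDemo {
    // Enqueues tasks out of deadline order, takes them back, and returns the names in take order.
    static String takeAll() {
        DelayQueue<DelayedTask> q = new DelayQueue<>();
        q.put(new DelayedTask("c", 60, TimeUnit.MILLISECONDS));
        q.put(new DelayedTask("a", 20, TimeUnit.MILLISECONDS));
        q.put(new DelayedTask("b", 40, TimeUnit.MILLISECONDS));
        StringBuilder order = new StringBuilder();
        try {
            for (int i = 0; i < 3; i++) {
                order.append(q.take().name); // blocks until the head's delay has elapsed
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return order.toString();
    }

    // poll() never waits: it returns null while the head has not expired yet.
    static boolean pollReturnsNullBeforeExpiry() {
        DelayQueue<DelayedTask> q = new DelayQueue<>();
        q.put(new DelayedTask("later", 10, TimeUnit.SECONDS));
        return q.poll() == null && q.size() == 1;
    }
}
```

Comparing getDelay() values is the simplest way to stay consistent with getDelay(). An implementation that only ever compares DelayedTask instances could compare expireAtNanos directly instead.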

internal structure

First look at the definition of DelayQueue:

    public class DelayQueue<E extends Delayed> extends AbstractQueue<E>
            implements BlockingQueue<E> {
        /** Reentrant lock guarding all access */
        private final transient ReentrantLock lock = new ReentrantLock();
        /** Priority queue that holds the elements */
        private final PriorityQueue<E> q = new PriorityQueue<E>();
        /** Thread designated to wait for the head element; used to optimize blocking */
        private Thread leader = null;
        /** Condition used for blocking and notification */
        private final Condition available = lock.newCondition();
        /**
         * Omit a lot of code
         */
    }

DelayQueue's internal structure is easy to see. Note that its elements must implement the Delayed interface (the type parameter is E extends Delayed). This also reveals the mechanism behind DelayQueue:

- An unbounded priority queue, PriorityQueue, serves as the container.
- The elements it holds implement Delayed.
- Each time an element is added, the priority queue orders it by expiration time, so the element that expires first has the highest priority.

offer()

    public boolean offer(E e) {
        final ReentrantLock lock = this.lock;
        lock.lock();
        try {
            // Insert the element into the PriorityQueue
            q.offer(e);
            // If the new element is now the head (earliest expiry), reset leader
            // and wake up one waiting thread
            if (q.peek() == e) {
                leader = null;
                available.signal();
            }
            // Unbounded queue: always returns true
            return true;
        } finally {
            lock.unlock();
        }
    }

offer(E) adds the element to the PriorityQueue (see [Dead Java Concurrency] J.U.C Blocking Queues: PriorityBlockingQueue). The whole process is fairly simple, but one step is critical: checking whether the new element has become the head of the queue and, if so, setting leader = null. This is discussed below.

take()

    public E take() throws InterruptedException {
        final ReentrantLock lock = this.lock;
        lock.lockInterruptibly();
        try {
            for (;;) {
                // Peek at the head element
                E first = q.peek();
                // Queue empty: wait until offer() signals
                if (first == null)
                    available.await();
                else {
                    // Remaining delay of the head element
                    long delay = first.getDelay(NANOSECONDS);
                    // <= 0 means it has expired: dequeue and return it
                    if (delay <= 0)
                        return q.poll();
                    first = null; // don't retain ref while waiting
                    // leader != null: another thread is already waiting for the head
                    if (leader != null)
                        available.await();
                    else {
                        // Otherwise the current thread becomes the leader
                        Thread thisThread = Thread.currentThread();
                        leader = thisThread;
                        try {
                            // Timed wait for the remaining delay
                            available.awaitNanos(delay);
                        } finally {
                            // Give up leadership
                            if (leader == thisThread)
                                leader = null;
                        }
                    }
                }
            }
        } finally {
            // No leader and the queue is not empty: wake up a waiting thread
            if (leader == null && q.peek() != null)
                available.signal();
            lock.unlock();
        }
    }

The steps are:

1. Peek at the head element. If its delay is <= 0, it has expired, so it is polled and returned.
2. Otherwise, first is set to null, mainly to avoid a memory leak.
3. If leader != null, another thread is already waiting for the head, so the current thread blocks.
4. Otherwise, the current thread becomes leader and calls awaitNanos(delay) to wait for the remaining delay.

first = null

Why would there be a memory leak without first = null? Consider this sequence:

1. Thread A arrives. The head element has not expired, so leader = thread A.
2. Thread B arrives. Since leader != null, it blocks, and so does thread C.
3. Thread A's wait ends, and it takes the head element out of the queue.

At that point the element should become eligible for garbage collection. However, threads B and C still hold references to it through their local variable first, so it cannot be collected. With only two such threads this is minor. With threads D, E, and so on waiting indefinitely, the element stays reachable indefinitely, which amounts to a memory leak.

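Enqueueing and dequeueing in this queue are not very different from other blocking queues, except that dequeueing also checks the expiration time. The leader field reduces unnecessary waiting: only the leader does a timed wait for the head, while other threads wait until they are signalled.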

ConcurrentLinkedQueue

Source: http://cmsblogs.com/ 『chenssy』

There are two ways to implement a thread-safe queue: blocking and non-blocking. Blocking queues are built on locks, while non-blocking queues are built on CAS. Let's start with a non-blocking one: ConcurrentLinkedQueue.

ConcurrentLinkedQueue is an unbounded, thread-safe queue based on linked nodes that orders its elements FIFO. It uses a "wait-free" algorithm based on CAS.

ConcurrentLinkedQueue maintains the following invariants:

- The next of the last node in the queue is null

- The item of every undeleted node in the queue is non-null, and every such node can be reached by traversing from head

- A node is deleted not by unlinking it directly, but by first setting its item field to null (iterators skip nodes whose item is null)

- Updates to head and tail are allowed to lag. What does that mean? It means head and tail do not always point to the first and last element (described later).

Invariants and non-invariants of head:

- Invariants

  - All undeleted nodes can be reached by traversing from head

  - head cannot be null

  - head's next cannot point to head itself

- Non-invariants

  - head's item may or may not be null

  - tail is allowed to lag behind head, that is, tail may not be reachable from head via succ()

Invariants and non-invariants of tail:

- Invariants

  - The last node can always be reached from tail via succ()

  - tail cannot be null

- Non-invariants

  - tail's item may or may not be null

  - tail's next may point to tail itself

  - tail is allowed to lag behind head, that is, tail may not be reachable from head via succ()

Dizzy already? That's OK. The source analysis below will make these properties clear.

ConcurrentLinkedQueue Source Analysis

ConcurrentLinkedQueue consists of a head node and a tail node. Each node holds an element, item, and a reference to the next node, next. Nodes are linked through next to form a linked-list queue. Node is an inner class of ConcurrentLinkedQueue, defined as follows:

    private static class Node<E> {
        // Node element field
        volatile E item;
        // Reference to the next node
        volatile Node<E> next;

        Node(E item) {
            UNSAFE.putObject(this, itemOffset, item);
        }

        boolean casItem(E cmp, E val) {
            return UNSAFE.compareAndSwapObject(this, itemOffset, cmp, val);
        }

        void lazySetNext(Node<E> val) {
            UNSAFE.putOrderedObject(this, nextOffset, val);
        }

        boolean casNext(Node<E> cmp, Node<E> val) {
            return UNSAFE.compareAndSwapObject(this, nextOffset, cmp, val);
        }

        // Unsafe mechanics
        private static final sun.misc.Unsafe UNSAFE;
        // Offset of the item field
        private static final long itemOffset;
        // Offset of the next field
        private static final long nextOffset;

        // Initialization: obtain the offsets of item and next, used by the CAS operations above
        static {
            try {
                UNSAFE = sun.misc.Unsafe.getUnsafe();
                Class<?> k = Node.class;
                itemOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("item"));
                nextOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("next"));
            } catch (Exception e) {
                throw new Error(e);
            }
        }
    }

Enqueue

Enqueueing looks simple: point the tail node's next to the new node, then update tail to the new node. That works from a single-threaded perspective, but what about multiple threads? An inserting thread must first read the tail node and then set tail's next to the new node. If another thread has just finished an insert, the tail node has already changed. How does ConcurrentLinkedQueue handle this? Let's look at the source first:

offer(E): inserts the specified element at the tail of the queue:

    public boolean offer(E e) {
        // Check that the element is not null
        checkNotNull(e);
        // Create the new node
        final Node<E> newNode = new Node<E>(e);
        // Loop until the insert succeeds
        for (Node<E> t = tail, p = t;;) {
            Node<E> q = p.next;
            // q == null: p is the last node, so try to link the new node after it
            if (q == null) {                                // --- 1
                // casNext: point p's next at the new node; if the CAS fails,
                // another thread changed p.next first, so loop and retry
                if (p.casNext(null, newNode)) {             // --- 2
                    // tail is now more than one node behind the last node: update it
                    if (p != t)                             // --- 3
                        casTail(t, newNode);                // --- 4
                    return true;
                }
            }
            // p == q: p.next points to p itself, so p has been removed from the list.
            // A concurrent poll() called updateHead(), which moved head forward and made
            // the old head's next point to itself. tail now lags behind head, so reset p
            else if (p == q)                                // --- 5
                p = (t != (t = tail)) ? t : head;           // --- 6
            // p is not the last node: move p forward (to the new tail if tail changed, else to q)
            else
                p = (p != t && t != (t = tail)) ? t : q;    // --- 7
        }
    }

The source alone is still somewhat confusing. Walking through the insertion of one node at a time makes it much clearer.

Initialization

When ConcurrentLinkedQueue is initialized, head and tail both point to the same dummy node, whose item is null:

Add element A

Process analysis: when element A is inserted for the first time, head = tail = dummyNode.

- q = p.next = null, so step 1 holds and we go straight to step 2: p.casNext(null, newNode).
- Because p == t, the condition at step 3 is false, so step 4, casTail(t, newNode), is not executed.
- offer returns directly.

After inserting node A:

Add element B

Now t = p = tail = dummyNode and q = p.next = A, so we jump to step 7: p = (p != t && t != (t = tail)) ? t : q. Since p == t, p is set to q (node A).

In the second iteration, q = p.next = null, so step 1 holds and the new node is linked at step 2. Now p = A while t = tail = dummyNode, so the condition p != t at step 3 holds. Step 4, casTail(t, newNode), runs, and offer returns. The result:

Add Node C

Now t = tail = B, p = t, and q = p.next = null. So C is inserted exactly as A was: step 2 succeeds, and since p == t, tail is not moved:

That completes the offer() process. Step 5, p == q, may be hard to understand: isn't q = p.next? How can p == q? This is explained in the analysis of dequeueing with poll().

Dequeue

ConcurrentLinkedQueue provides the poll() method for dequeueing. Enqueueing mainly involves tail, while dequeueing mainly involves head. Let's look at the source first:

    public E poll() {
        // If p has been removed, restart from head.
        // restartFromHead is a statement label: `continue restartFromHead`
        // starts the next iteration of the outer loop.
        restartFromHead:
        for (;;) {
            for (Node<E> h = head, p = h, q;;) {
                // Item of the current node
                E item = p.item;
                // item is not null: CAS it to null to claim it
                if (item != null && p.casItem(item, null)) {    // --- 1
                    // p != head: update head
                    if (p != h)                                 // --- 2
                        // if p.next != null, move head to p.next, otherwise to p
                        updateHead(h, ((q = p.next) != null) ? q : p);  // --- 3
                    return item;
                }
                // p.next == null: the queue is empty
                else if ((q = p.next) == null) {                // --- 4
                    updateHead(h, p);
                    return null;
                }
                // p.next == p: another thread removed p from the list while this thread
                // was polling, so we cannot continue from p and must restart from head
                else if (p == q)                                // --- 5
                    continue restartFromHead;
                else
                    p = q;                                      // --- 6
            }
        }
    }

This is simpler than offer(). One important helper is updateHead(), which updates head with CAS:

    final void updateHead(Node<E> h, Node<E> p) {
        // CAS head from h to p; on success, point h.next at h itself (the self-link marks h as removed)
        if (h != p && casHead(h, p))
            h.lazySetNext(h);
    }

Now let's poll() the queue built by the offer() walkthrough above, which contains nodes A, B, and C:

poll A

head = dummy, p = head, and item = p.item = null, so step 1 fails. At step 4, (q = p.next) == null is false because p.next = A, so we go to step 6 (p = q) and loop again.

Now p = A and item != null, so p.casItem(item, null) succeeds and step 1 holds. Since p == A != h, step 3 runs updateHead(h, ((q = p.next) != null) ? q : p). Here q = p.next = B != null, so head is CASed to B, and the old dummy's next is made to point to itself:

poll B

head = B, p = head = B, and item = p.item = B, so step 1 holds and B's item is CASed to null. At step 2, p != h is false, so poll returns directly without moving head:

poll C

head = B, whose item is now null. So p = head = B and item = p.item = null, and step 1 fails. At step 4, (q = p.next) == null is false because p.next = C, so we go to step 6, where p = q = C.

Now item is C's item, so step 1 holds and C's item is set to null. At step 2, p != h holds, so step 3 runs updateHead(h, ((q = p.next) != null) ? q : p). C.next is null, so head is CASed to C itself, and B's next is set to point to B.

Note that tail still points to B, so tail now lags behind head:

With this in mind, let's go back to step 5 of offer():

    else if (p == q) {
        p = (t != (t = tail)) ? t : head;
    }

ConcurrentLinkedQueue treats p == q as meaning the node has been deleted (its next points to itself). In that case tail lags behind head, and head can no longer reach tail via succ(). What should be done? The answer is p = (t != (t = tail)) ? t : head.

This line is quite hard to read. The left operand is evaluated first, so it compares the old value of t with a fresh read of tail (updating t along the way). Roughly, it means "if tail has changed, use the new tail; otherwise use head". If tail has changed, another thread has already repositioned it, so we continue from the new tail. Otherwise we restart from head, from which every live node can be reached.

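Now insert element D into the queue above:

- t = p = tail = B and q = p.next = B (B's next points to itself), so step 5, p == q, holds.
- tail has not changed, so step 6 sets p = head = C.
- In the next iteration q = p.next = null, so step 1 holds and D is linked after C at step 2.
- Since p (C) != t (B), step 4 moves tail to D: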

Then insert element E. t = p = tail = D and q = p.next = null, so E is linked after D; since p == t, tail is not moved:

At this point, the entire enqueue and dequeue process of ConcurrentLinkedQueue has been analyzed. I found ConcurrentLinkedQueue really hard to understand, and after working through it I marvel at the ingenuity of the design. It uses CAS for its data operations while tolerating temporary inconsistency in the queue (head and tail may lag). This weak consistency is remarkably powerful. Once again, hats off to Doug Lea's genius.
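For contrast, here is a much simpler CAS-based queue in the spirit of the Michael-Scott algorithm that ConcurrentLinkedQueue is based on. It is a hypothetical teaching sketch (SimpleLockFreeQueue), not the JDK code. What it keeps:

- AtomicReference instead of Unsafe;
- a dummy head node;
- a tail that lags by at most one node.

What it leaves out:

- ConcurrentLinkedQueue's self-linking of removed nodes;
- its two-hop tail updates;
- removal from the middle of the queue.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical simplified lock-free FIFO queue (Michael-Scott style), for illustration only.
class SimpleLockFreeQueue<E> {
    private static final class Node<E> {
        final E item;
        final AtomicReference<Node<E>> next = new AtomicReference<>();
        Node(E item) { this.item = item; }
    }

    private final AtomicReference<Node<E>> head;
    private final AtomicReference<Node<E>> tail;

    SimpleLockFreeQueue() {
        Node<E> dummy = new Node<>(null); // head and tail start at the same dummy node
        head = new AtomicReference<>(dummy);
        tail = new AtomicReference<>(dummy);
    }

    void offer(E e) {
        if (e == null) throw new NullPointerException();
        Node<E> newNode = new Node<>(e);
        for (;;) {
            Node<E> t = tail.get();
            Node<E> next = t.next.get();
            if (next == null) {
                // t looks like the last node: try to link the new node after it
                if (t.next.compareAndSet(null, newNode)) {
                    tail.compareAndSet(t, newNode); // failure is fine: another thread advanced tail
                    return;
                }
            } else {
                tail.compareAndSet(t, next);        // tail is lagging: help advance it, then retry
            }
        }
    }

    E poll() {
        for (;;) {
            Node<E> h = head.get();
            Node<E> t = tail.get();
            Node<E> first = h.next.get();
            if (first == null) {
                return null;                        // only the dummy node is left: queue empty
            }
            if (h == t) {
                tail.compareAndSet(t, first);       // tail lags behind a non-empty list: help it
                continue;
            }
            if (head.compareAndSet(h, first)) {     // first becomes the new dummy node
                return first.item;
            }
        }
    }
}

// Hypothetical demo class: concurrent producers, then a single-threaded drain.
class SimpleLockFreeQueueDemo {
    // Four threads offer 1000 values each; returns how many values poll() gets back.
    static int concurrentOfferCount() {
        SimpleLockFreeQueue<Integer> q = new SimpleLockFreeQueue<>();
        Thread[] producers = new Thread[4];
        for (int i = 0; i < producers.length; i++) {
            final int base = i * 1000;
            producers[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) q.offer(base + j);
            });
            producers[i].start();
        }
        for (Thread p : producers) {
            try { p.join(); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
        }
        int count = 0;
        while (q.poll() != null) count++;
        return count;
    }
}
```

The same "help a lagging tail forward" idea appears in ConcurrentLinkedQueue's offer(). The JDK version goes further, letting tail fall behind by more than one node so that it issues fewer CAS operations on tail.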

LinkedTransferQueue

Source: http://cmsblogs.com/ 『chenssy』

The BlockingQueues discussed above lock the entire queue for reads or writes, and under heavy concurrency all that locking costs resources and time. SynchronousQueue does not lock the entire queue, but it is a "queue" with no capacity. Is there a queue that has capacity like the other BlockingQueues, yet does not lock the whole queue, like SynchronousQueue? Yes: LinkedTransferQueue.

LinkedTransferQueue is an unbounded FIFO blocking queue based on linked nodes, introduced in JDK 7. Doug Lea describes it as a "smart" queue. It is a superset of ConcurrentLinkedQueue, SynchronousQueue (in fair mode), unbounded LinkedBlockingQueues, and so on. Since it is that powerful, it's worth figuring out how it works.


Before looking at the source, let's see how it works; that will make the source easier to follow.

LinkedTransferQueue uses a preemptive mode. What does that mean? When a consumer wants an element and one is available, it takes it directly. Otherwise, it occupies a spot in the queue and waits until it gets an element, times out, or is interrupted. In detail:

1. When a consumer thread finds the queue empty, it enqueues a node whose element is null and parks, waiting for a producer.
2. Later, a producer that is enqueueing finds this null-element node. Instead of enqueueing its own node, it fills its element directly into that node and unparks the node's thread.
3. The awakened consumer takes the element and leaves.

Does this feel a bit like SynchronousQueue?

structure

Like the other BlockingQueues, LinkedTransferQueue extends AbstractQueue, but it implements TransferQueue. The TransferQueue interface extends BlockingQueue, so TransferQueue is an extension of BlockingQueue that adds a complete set of transfer methods:

    public interface TransferQueue<E> extends BlockingQueue<E> {
        /**
         * If a consumer is currently waiting to receive an element (in take() or a timed poll()),
         * hands e to it immediately; otherwise returns false without enqueuing e.
         * This is a non-blocking operation.
         */
        boolean tryTransfer(E e);

        /**
         * If a consumer is waiting, hands e to it immediately; otherwise inserts e at the
         * tail of the queue and blocks until a consumer receives it.
         */
        void transfer(E e) throws InterruptedException;

        /**
         * If a consumer is waiting, hands e to it immediately; otherwise inserts e at the
         * tail of the queue and waits for a consumer to receive it.
         * If no consumer receives e within the given time, returns false and e is removed.
         */
        boolean tryTransfer(E e, long timeout, TimeUnit unit)
            throws InterruptedException;

        /** Returns true if at least one consumer is waiting to receive an element */
        boolean hasWaitingConsumer();

        /** Returns an estimate of the number of consumers waiting to receive elements */
        int getWaitingConsumerCount();
    }

LinkedTransferQueue has several more methods than the other BlockingQueues, and these methods play a central role in it.
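The TransferQueue methods can be exercised with a small sketch, using a hypothetical class TransferDemo and assuming Java 8+. It shows three behaviors:

- tryTransfer(e) fails when no consumer is waiting.
- transfer(e) hands an element straight to a consumer blocked in take().
- A timed tryTransfer gives up after its timeout and leaves nothing behind.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, for illustration only.
class TransferDemo {
    // With no waiting consumer, tryTransfer(e) fails immediately and nothing is enqueued.
    static boolean tryTransferWithoutConsumer() {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        boolean handedOff = q.tryTransfer("x");
        return !handedOff && q.isEmpty();
    }

    // A consumer blocks in take(); the producer's transfer() then hands the element
    // straight to it. Returns what the consumer received.
    static String transferToWaitingConsumer() {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = q.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            // Wait until the consumer has enqueued its request node
            while (!q.hasWaitingConsumer()) {
                Thread.sleep(1);
            }
            q.transfer("hello"); // returns once the consumer has received the element
            consumer.join();     // join() also makes received[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return received[0];
    }

    // A timed tryTransfer with no consumer gives up after the timeout and removes the element.
    static boolean timedTryTransferTimesOut() {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        try {
            boolean ok = q.tryTransfer("y", 20, TimeUnit.MILLISECONDS);
            return !ok && q.isEmpty();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

The polling loop on hasWaitingConsumer() is only there to make the demonstration deterministic. transfer() would also work if it were called first, since it waits until a consumer arrives.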

LinkedTransferQueue defines the following variables:

    // Whether we are running on a multiprocessor
    private static final boolean MP =
        Runtime.getRuntime().availableProcessors() > 1;
    // Number of times to spin before blocking when a node is first in the queue
    private static final int FRONT_SPINS   = 1 << 7;
    // Number of times to spin before blocking when the predecessor node is itself spinning
    private static final int CHAINED_SPINS = FRONT_SPINS >>> 1;
    // Maximum number of estimated removal failures (sweepVotes) tolerated before sweeping
    static final int SWEEP_THRESHOLD = 32;
    // Head node
    transient volatile Node head;
    // Tail node
    private transient volatile Node tail;
    // Number of apparent failures to unsplice removed nodes
    private transient volatile int sweepVotes;
    /*
     * Values for the "how" argument of xfer(), which distinguish the calling modes;
     * xfer() is the core method of LinkedTransferQueue
     */
    private static final int NOW   = 0; // for untimed poll, tryTransfer
    private static final int ASYNC = 1; // for offer, put, add
    private static final int SYNC  = 2; // for transfer, take
    private static final int TIMED = 3; // for timed poll, tryTransfer

Node

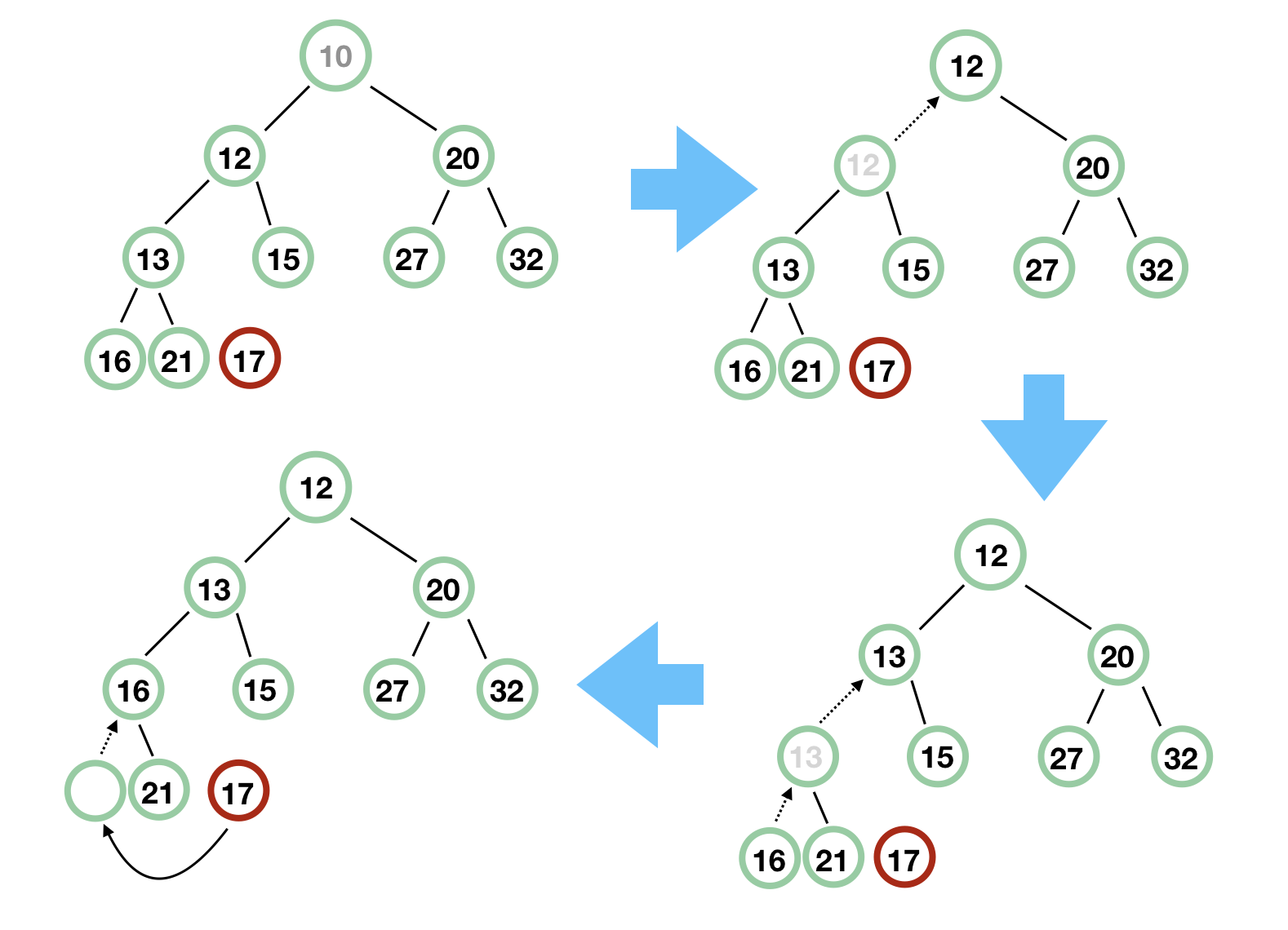

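A Node consists of four fields:

- isData: whether the node carries data (a producer node) or is a request for data (a consumer node)

- item: the data. For a data node (isData is true) it is non-null and becomes null once matched; for a request node (isData is false) it is null and is filled in when matched

- next: the next node

- waiter: the thread parked on this node while it waits (for example, a consumer blocked in take()); null until it waits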

The structure is as follows:

The source code is as follows:

    static final class Node {
        // Whether the node carries data (true) or is a request for data (false)
        final boolean isData;
        // The data: for a data node it is non-null and becomes null once matched;
        // for a request node it is null and is filled in when matched
        volatile Object item;
        // Next node
        volatile Node next;
        // Thread parked on this node while it waits
        volatile Thread waiter; // null until waiting

        /** CAS the next field */
        final boolean casNext(Node cmp, Node val) {
            return UNSAFE.compareAndSwapObject(this, nextOffset, cmp, val);
        }

        /** CAS the item field */
        final boolean casItem(Object cmp, Object val) {
            return UNSAFE.compareAndSwapObject(this, itemOffset, cmp, val);
        }

        /** Constructor */
        Node(Object item, boolean isData) {
            UNSAFE.putObject(this, itemOffset, item); // relaxed write
            this.isData = isData;
        }

        /** Points next at the node itself, which effectively unlinks the node */
        final void forgetNext() {
            UNSAFE.putObject(this, nextOffset, this);
        }

        /** Called after the node is matched or cancelled: item points to itself, waiter is cleared */
        final void forgetContents() {
            UNSAFE.putObject(this, itemOffset, this);
            UNSAFE.putObject(this, waiterOffset, null);
        }

        /** Returns true if the node has been matched; a cancelled node (item == this) also counts */
        final boolean isMatched() {
            Object x = item;
            return (x == this) || ((x == null) == isData);
        }

        /**
         * Returns true if this is an unmatched request node: isData is false and item == null
         * (item becomes non-null once the node is matched)
         */
        final boolean isUnmatchedRequest() {
            return !isData && item == null;
        }

        /**
         * Returns true if a node with the given mode (haveData) cannot be appended after
         * this node, because this node is unmatched and of the opposite mode
         */
        final boolean cannotPrecede(boolean haveData) {
            boolean d = isData;
            Object x;
            return d != haveData && (x = item) != this && (x != null) == d;
        }

        /** Tries to match a data node */
        final boolean tryMatchData() {
            // assert isData;
            Object x = item;
            if (x != null && x != this && casItem(x, null)) {
                LockSupport.unpark(waiter);
                return true;
            }
            return false;
        }

        private static final long serialVersionUID = -3375979862319811754L;

        // Unsafe mechanics
        private static final sun.misc.Unsafe UNSAFE;
        private static final long itemOffset;
        private static final long nextOffset;
        private static final long waiterOffset;
        static {
            try {
                UNSAFE = sun.misc.Unsafe.getUnsafe();
                Class<?> k = Node.class;
                itemOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("item"));
                nextOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("next"));
                waiterOffset = UNSAFE.objectFieldOffset
                    (k.getDeclaredField("waiter"));
            } catch (Exception e) {
                throw new Error(e);
            }
        }
    }

Node is an inner class of LinkedTransferQueue. Its structure is similar to the node used by the fair mode of SynchronousQueue, and it provides several important helper methods.
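The item field does double duty as the node's state. The sketch below makes that encoding concrete. It copies the predicates of Node onto plain fields so it runs standalone, without Unsafe or CAS. The class name ModelNode and the match/cancel helpers are hypothetical.

- A data node starts with a non-null item that becomes null on match.
- A request node starts with null, which becomes the data on match.
- A cancelled node points item at itself.

```java
/** Hypothetical single-threaded model of the item states of LinkedTransferQueue.Node (no Unsafe, no CAS). */
final class ModelNode {
    final boolean isData;   // true: data (put) node, false: request (take) node
    Object item;            // data node: element until matched; request node: null until matched

    ModelNode(Object item, boolean isData) {
        this.item = item;
        this.isData = isData;
    }

    /** Matched or cancelled: item == this, or item's null-ness no longer agrees with the mode. */
    boolean isMatched() {
        Object x = item;
        return (x == this) || ((x == null) == isData);
    }

    boolean isUnmatchedRequest() {
        return !isData && item == null;
    }

    /** True if a node of mode haveData must not be appended after this unmatched, opposite-mode node. */
    boolean cannotPrecede(boolean haveData) {
        boolean d = isData;
        Object x = item;
        return d != haveData && x != this && (x != null) == d;
    }

    /** Models xfer's successful casItem(item, e): a data node gets null, a request node gets the data. */
    void match(Object e) {
        item = e;
    }

    /** Models awaitMatch's cancellation casItem(e, s): item points at the node itself. */
    void cancel() {
        item = this;
    }
}
```

For example, a fresh data node `new ModelNode("x", true)` is unmatched and refuses a request node behind it (`cannotPrecede(false)` is true). After `match(null)` it reports `isMatched()`.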

put operation

LinkedTransferQueue provides the add, put and offer methods for inserting elements, as follows:

public void put(E e) {
    xfer(e, true, ASYNC, 0);
}

public boolean offer(E e, long timeout, TimeUnit unit) {
    xfer(e, true, ASYNC, 0);
    return true;
}

public boolean offer(E e) {
    xfer(e, true, ASYNC, 0);
    return true;
}

public boolean add(E e) {
    xfer(e, true, ASYNC, 0);
    return true;
}

Since LinkedTransferQueue is unbounded, inserting never blocks. Every insertion method calls xfer with ASYNC and then returns true directly; the timed offer even ignores its timeout arguments.
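This behavior is observable through the public API. The small program below calls all four insertion methods on a real LinkedTransferQueue and checks that each returns immediately with true. The class name PutNeverBlocks is just for illustration.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

/** Shows that insertion into a LinkedTransferQueue always succeeds without blocking. */
final class PutNeverBlocks {
    static LinkedTransferQueue<Integer> fill() {
        LinkedTransferQueue<Integer> q = new LinkedTransferQueue<>();
        q.put(1);                                      // ASYNC: enqueue and return
        boolean a = q.add(2);                          // always true
        boolean b = q.offer(3);                        // always true
        boolean c = q.offer(4, 1, TimeUnit.SECONDS);   // timeout is ignored, returns at once
        if (!(a && b && c)) {
            throw new IllegalStateException("an insertion method returned false");
        }
        return q;
    }

    public static void main(String[] args) {
        LinkedTransferQueue<Integer> q = fill();
        System.out.println(q.size() + " " + q.remainingCapacity());
    }
}
```

Running main prints the size, 4, followed by `Integer.MAX_VALUE` as the remaining capacity. That value is what an unbounded queue reports.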

take operation

LinkedTransferQueue provides the poll and take methods for removing elements:

public E take() throws InterruptedException {
    E e = xfer(null, false, SYNC, 0);
    if (e != null)
        return e;
    Thread.interrupted();
    throw new InterruptedException();
}

public E poll() {
    return xfer(null, false, NOW, 0);
}

public E poll(long timeout, TimeUnit unit) throws InterruptedException {
    E e = xfer(null, false, TIMED, unit.toNanos(timeout));
    if (e != null || !Thread.interrupted())
        return e;
    throw new InterruptedException();
}

This differs from the put side. take() passes SYNC and blocks, while poll() passes NOW and returns immediately. poll(long timeout, TimeUnit unit) passes TIMED.
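The three removal modes can be contrasted with a short program against the real API. The class and field names are made up for the demo. The 50 ms delays are arbitrary values chosen so the blocking behavior is visible.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

/** Contrasts the three removal modes: poll() -> NOW, poll(timeout) -> TIMED, take() -> SYNC. */
final class TakeModes {
    static final class Result {
        String now;      // poll() on an empty queue
        String timed;    // poll(50 ms) on an empty queue
        long waitedMs;   // how long poll(50 ms) actually waited
        String taken;    // take() once a producer shows up
    }

    static Result demo() throws InterruptedException {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        Result r = new Result();

        r.now = q.poll();                              // NOW: returns null at once, nothing enqueued
        long t0 = System.nanoTime();
        r.timed = q.poll(50, TimeUnit.MILLISECONDS);   // TIMED: waits until the deadline, then null
        r.waitedMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - t0);

        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(50);
                q.put("hello");                        // normally matches the request node of take()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        r.taken = q.take();                            // SYNC: blocks until the producer arrives
        producer.join();
        return r;
    }
}
```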

transfer operation

To implement the TransferQueue interface, LinkedTransferQueue also provides the transfer methods:

public boolean tryTransfer(E e, long timeout, TimeUnit unit)
        throws InterruptedException {
    if (xfer(e, true, TIMED, unit.toNanos(timeout)) == null)
        return true;
    if (!Thread.interrupted())
        return false;
    throw new InterruptedException();
}

public void transfer(E e) throws InterruptedException {
    if (xfer(e, true, SYNC, 0) != null) {
        Thread.interrupted(); // failure possible only due to interrupt
        throw new InterruptedException();
    }
}

public boolean tryTransfer(E e) {
    return xfer(e, true, NOW, 0) == null;
}
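The difference between the three transfer methods shows up when no consumer is waiting. The demo below illustrates this with made-up class and field names and an arbitrary 20 ms timeout.

- tryTransfer(e) (NOW) fails at once without enqueueing.
- tryTransfer(e, timeout) (TIMED) gives up after the timeout and cancels its node.
- transfer(e) (SYNC) returns only once a consumer has received the element.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

/** Contrasts tryTransfer (NOW), timed tryTransfer (TIMED) and transfer (SYNC). */
final class TransferDemo {
    static final class Result {
        boolean tryNow;        // tryTransfer(e) with no waiting consumer
        boolean tryTimed;      // tryTransfer(e, timeout) with no consumer
        int sizeAfterTries;    // failed transfers leave no element behind
        String received;       // what the consumer got from transfer(e)
    }

    static Result demo() throws InterruptedException {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        Result r = new Result();
        r.tryNow = q.tryTransfer("a");                               // NOW: no consumer -> false
        r.tryTimed = q.tryTransfer("b", 20, TimeUnit.MILLISECONDS);  // TIMED: node cancelled -> false
        r.sizeAfterTries = q.size();

        AtomicReference<String> got = new AtomicReference<>();
        Thread consumer = new Thread(() -> {
            try {
                got.set(q.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        q.transfer("c");       // SYNC: returns only after the consumer has received "c"
        consumer.join();
        r.received = got.get();
        return r;
    }
}
```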

xfer()

The source code of the core methods above shows that every one of them ultimately calls xfer(). It takes four parameters:

- e: the item, or null for a take.
- haveData: true for a put and false for a take.
- how: one of NOW, ASYNC, SYNC or TIMED.
- nanos: the timeout in nanoseconds, used only for TIMED.

private E xfer(E e, boolean haveData, int how, long nanos) {
    // haveData is true but e == null: throw NullPointerException
    if (haveData && (e == null))
        throw new NullPointerException();
    Node s = null;                        // the node to append, if needed

    retry:
    for (;;) {
        // Start matching from the head node; p == null means the queue is empty
        for (Node h = head, p = h; p != null;) {
            // Mode of p: data or request
            boolean isData = p.isData;
            // item field of p
            Object item = p.item;
            // Look for an unmatched node:
            // item != p means p has not been cancelled or forgotten,
            // (item != null) == isData means p has not been matched yet
            if (item != p && (item != null) == isData) {
                // Same mode as the caller: nothing in the queue can match
                if (isData == haveData)   // can't match
                    break;
                // Match: CAS e into p's item field.
                // If p is a data node, e is null; if p is a request node, e is the data
                if (p.casItem(item, e)) { // match
                    for (Node q = p; q != h;) {
                        Node n = q.next;  // update by 2 unless singleton
                        if (head == h && casHead(h, n == null ? q : n)) {
                            h.forgetNext();
                            break;
                        }                 // advance and retry
                        if ((h = head) == null ||
                            (q = h.next) == null || !q.isMatched())
                            break;        // unless slack < 2
                    }
                    // Wake up p's waiter: a request node now holds the data,
                    // a data node's item is now null
                    LockSupport.unpark(p.waiter);
                    return LinkedTransferQueue.<E>cast(item);
                }
            }
            // p is already matched: advance
            Node n = p.next;
            // If p.next points to p itself, another thread has unlinked p,
            // so restart from head
            p = (p != n) ? n : (h = head); // Use head if p offlist
        }

        // No matching node was found.
        // NOW (untimed poll, tryTransfer) does not enqueue
        if (how != NOW) {                 // No matches available
            // Create node s if it does not exist yet
            if (s == null)
                s = new Node(e, haveData);
            // Append s and get its predecessor
            Node pred = tryAppend(s, haveData);
            // null predecessor: lost a race against a node of the opposite mode, retry
            if (pred == null)
                continue retry;
            // ASYNC does not wait
            if (how != ASYNC)
                return awaitMatch(s, pred, e, (how == TIMED), nanos);
        }
        return e;
    }
}

The core of the algorithm is to find a matching node and return its item. Otherwise the caller enqueues a node of its own, except with NOW, which returns directly:

- Matched: a node p matches when two conditions hold. It must still be unmatched (item != p and (item != null) == isData). Its mode must be the opposite of the caller's (isData != haveData). The caller then CASes p.item to e, unparks p.waiter and returns p's original item. That item is the element if p was a data node, or null if p was a request (reservation) node.

- Unmatched: if no matching node is found, the behavior depends on how. NOW returns immediately, and the other three first enqueue a node. ASYNC returns right after enqueueing, while SYNC and TIMED block waiting for a match.

In fact, this logic is simpler than that of SynchronousQueue.
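To see the match-or-append rule without any concurrency noise, here is a hypothetical single-threaded model of xfer. An ArrayList stands in for the linked list, and plain assignments stand in for the CASes. Matched nodes are skipped rather than unlinked. SYNC and TIMED only append, because a single thread cannot block waiting for itself. The names XferModel, How and unmatchedCount are inventions for this sketch.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical single-threaded model of xfer's match-or-append logic.
 * No CAS and no parking: it only shows which node gets matched and when a node is appended.
 */
final class XferModel {
    enum How { NOW, ASYNC, SYNC, TIMED }

    static final class Node {
        final boolean isData;
        Object item;

        Node(Object item, boolean isData) {
            this.item = item;
            this.isData = isData;
        }

        boolean isMatched() {
            return item == this || (item == null) == isData;
        }
    }

    private final List<Node> nodes = new ArrayList<>();   // stands in for the head..tail list

    /** Returns the matched node's original item on success, otherwise e (as the real xfer does). */
    Object xfer(Object e, boolean haveData, How how) {
        if (haveData && e == null) throw new NullPointerException();
        for (Node p : nodes) {
            if (p.isMatched()) continue;          // skip already-matched nodes
            if (p.isData == haveData) break;      // same mode: nothing in the queue can match
            Object item = p.item;
            p.item = e;                           // "casItem": data node -> null, request node -> data
            return item;                          // the real code also unparks p.waiter here
        }
        if (how != How.NOW) {
            nodes.add(new Node(e, haveData));     // "tryAppend"
            // For SYNC and TIMED the real code would now block in awaitMatch().
        }
        return e;
    }

    int unmatchedCount() {
        int c = 0;
        for (Node p : nodes) if (!p.isMatched()) c++;
        return c;
    }
}
```

In the model, put is `xfer(e, true, ASYNC)`, poll() is `xfer(null, false, NOW)` and tryTransfer(e) is `xfer(e, true, NOW) == null`. That is the same mapping as the public methods shown earlier.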

If no matching node is found and how != NOW, the node is enqueued by calling the tryAppend method:

private Node tryAppend(Node s, boolean haveData) {
    // Start from tail
    for (Node t = tail, p = t;;) {
        Node n, u;
        // Queue is empty: make s the head
        if (p == null && (p = head) == null) {
            if (casHead(null, s))
                return s;
        }
        // p is an unmatched node of the opposite mode: s must not be appended
        // after it, so return null and let xfer retry matching
        else if (p.cannotPrecede(haveData))
            return null;
        // p is not the last node: keep moving forward
        else if ((n = p.next) != null)
            p = p != t && t != (u = tail) ? (t = u) :
                (p != n) ? n : null;
        // CAS failed, usually because another thread appended after p
        // in the meantime: re-read p.next and move forward
        else if (!p.casNext(null, s))
            p = p.next;                   // re-read on CAS failure
        else {
            if (p != t) {                 // update if slack now >= 2
                while ((tail != t || !casTail(t, s)) &&
                       (t = tail) != null &&
                       (s = t.next) != null && // advance and retry
                       (s = s.next) != null && s != t);
            }
            return p;
        }
    }
}

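The tryAppend method appends node s at the tail and returns its predecessor. If the queue was empty, it returns s itself. If it runs into an unmatched node of the opposite mode, it returns null, and xfer then retries matching. Okay, I admit this code makes me a little dizzy!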
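Stripped of the special cases, the heart of tryAppend is the usual lock-free append. It CASes the last node's next field from null to s and re-reads on failure, then moves tail forward. The sketch below shows only that part, as a Michael-Scott-style append. It makes several simplifying assumptions:

- It uses a dummy head node, which the JDK list does not have.
- It uses AtomicReference instead of Unsafe.
- It omits the cannotPrecede check.
- It omits the slack optimisation that lets LinkedTransferQueue skip some tail updates.

The class name CasAppendList is hypothetical.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical lock-free append list: CAS on next, help a lagging tail, return the predecessor. */
final class CasAppendList<T> {
    static final class Node<T> {
        final T item;
        final AtomicReference<Node<T>> next = new AtomicReference<>();
        Node(T item) { this.item = item; }
    }

    private final Node<T> head = new Node<>(null);                       // dummy node (assumption)
    private final AtomicReference<Node<T>> tail = new AtomicReference<>(head);

    /** Appends s at the end of the list and returns its predecessor. */
    Node<T> append(Node<T> s) {
        for (;;) {
            Node<T> t = tail.get();
            Node<T> n = t.next.get();
            if (n != null) {
                tail.compareAndSet(t, n);          // tail is lagging: help move it, then retry
            } else if (t.next.compareAndSet(null, s)) {
                tail.compareAndSet(t, s);          // may fail if another thread already advanced it
                return t;
            }                                      // CAS on next failed: someone appended first, retry
        }
    }

    int size() {
        int c = 0;
        for (Node<T> p = head.next.get(); p != null; p = p.next.get()) c++;
        return c;
    }

    /** Appends from several threads; returns {list size, number of distinct predecessors returned}. */
    static int[] concurrentDemo(int threads, int perThread) throws InterruptedException {
        CasAppendList<Integer> list = new CasAppendList<>();
        Set<Node<Integer>> preds = ConcurrentHashMap.newKeySet();
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) preds.add(list.append(new Node<>(j)));
            });
            ts[i].start();
        }
        for (Thread t : ts) t.join();
        return new int[] { list.size(), preds.size() };
    }
}
```

Every successful append links exactly one new node behind exactly one existing node. Even under contention, the number of distinct predecessors therefore equals the number of appends.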

After enqueueing, if how is not ASYNC, the awaitMatch() method is called to block and wait:

private E awaitMatch(Node s, Node pred, E e, boolean timed, long nanos) {
    // Deadline for timed waits
    final long deadline = timed ? System.nanoTime() + nanos : 0L;
    // Current thread
    Thread w = Thread.currentThread();
    // Number of spins
    int spins = -1; // initialized after first item and cancel checks
    // Source of random yields while spinning
    ThreadLocalRandom randomYields = null; // bound if needed

    for (;;) {
        Object item = s.item;
        // item has changed: another thread has matched s
        if (item != e) {
            // Clear s's fields (item points to s, waiter is cleared)
            s.forgetContents();
            return LinkedTransferQueue.<E>cast(item);
        }
        // Interrupted or timed out: cancel by CASing item from e to s itself
        if ((w.isInterrupted() || (timed && nanos <= 0)) &&
                s.casItem(e, s)) {        // cancel
            // Unlink s from the queue
            unsplice(pred, s);
            return e;
        }

        // First pass: compute the number of spins
        if (spins < 0) {
            if ((spins = spinsFor(pred, s.isData)) > 0)
                randomYields = ThreadLocalRandom.current();
        }
        // Spin
        else if (spins > 0) {
            --spins;
            // Occasionally yield: on average once every CHAINED_SPINS spins
            if (randomYields.nextInt(CHAINED_SPINS) == 0)
                Thread.yield();
        }
        // Done spinning: publish the current thread in s.waiter,
        // then loop once more to recheck item before parking
        else if (s.waiter == null) {
            s.waiter = w;                 // request unpark then recheck
        }
        // Timed wait
        else if (timed) {
            nanos = deadline - System.nanoTime();
            if (nanos > 0L)
                LockSupport.parkNanos(this, nanos);
        }
        // Untimed wait
        else {
            LockSupport.park(this);
        }
    }
}

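The overall awaitMatch flow is not very different from awaitFulfill in SynchronousQueue, except that it occasionally calls Thread.yield() at random while spinning. Why? According to the JDK source comments, CHAINED_SPINS also serves as the base average frequency for yielding during spins, so each spin yields with probability 1/CHAINED_SPINS. The purpose is presumably to give other threads a chance to run, such as the one that will eventually match this node.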
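The spin-then-park pattern can be isolated in a few lines, without the timeout and cancellation branches. The sketch below is an illustration, not the JDK code. SpinThenPark, Slot and the constants are hypothetical; the two values are only meant to echo the roles of FRONT_SPINS and CHAINED_SPINS.

The ordering is what matters. The waiter writes waiter before re-reading item, and the matcher writes item before reading waiter. Because both fields are volatile, at least one side sees the other's write, so no wakeup is lost.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.locks.LockSupport;

/** Hypothetical spin-then-park waiter modelled on awaitMatch (no timeout, no cancellation). */
final class SpinThenPark {
    static final int SPINS = 128;         // illustrative spin budget before parking
    static final int CHAINED_SPINS = 64;  // illustrative: yield on average once per 64 spins

    static final class Slot {
        volatile Object item;             // null until a matcher fills it in
        volatile Thread waiter;           // published just before parking
    }

    static Object awaitItem(Slot s) {
        int spins = SPINS;
        ThreadLocalRandom rnd = ThreadLocalRandom.current();
        for (;;) {
            Object x = s.item;
            if (x != null)
                return x;                               // matched
            if (spins > 0) {
                --spins;
                if (rnd.nextInt(CHAINED_SPINS) == 0)
                    Thread.yield();                     // occasionally give up the CPU
            } else if (s.waiter == null) {
                s.waiter = Thread.currentThread();      // publish, then recheck item before parking
            } else {
                LockSupport.park(s);                    // may return spuriously; the loop rechecks
            }
        }
    }

    static void fulfill(Slot s, Object value) {
        s.item = value;                                 // the real code CASes item instead
        Thread w = s.waiter;
        if (w != null)
            LockSupport.unpark(w);
    }

    static Object demo() throws InterruptedException {
        Slot s = new Slot();
        AtomicReference<Object> result = new AtomicReference<>();
        Thread t = new Thread(() -> result.set(awaitItem(s)));
        t.start();
        Thread.sleep(50);                               // the waiter has most likely parked by now
        fulfill(s, "matched");
        t.join();
        return result.get();
    }
}
```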

During awaitMatch, if the thread is interrupted or times out, the unsplice() method is called to unlink the node:

final void unsplice(Node pred, Node s) {
    s.forgetContents(); // forget unneeded fields
    if (pred != null && pred != s && pred.next == s) {
        Node n = s.next;
        if (n == null ||
            (n != s && pred.casNext(s, n) && pred.isMatched())) {
            for (;;) {               // check if at, or could be, head
                Node h = head;
                if (h == pred || h == s || h == null)
                    return;          // at head or list empty
                if (!h.isMatched())
                    break;
                Node hn = h.next;
                if (hn == null)
                    return;          // now empty
                if (hn != h && casHead(h, hn))
                    h.forgetNext();  // advance head
            }
            if (pred.next != pred && s.next != s) { // recheck if offlist
                for (;;) {           // sweep now if enough votes
                    int v = sweepVotes;
                    if (v < SWEEP_THRESHOLD) {
                        if (casSweepVotes(v, v + 1))
                            break;
                    }
                    else if (casSweepVotes(v, 0)) {
                        sweep();
                        break;
                    }
                }
            }
        }
    }
}
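The effect of cancellation can be checked from the outside. The demo below covers a timed poll that expires and a take() that is interrupted. In both cases the request node is cancelled (item == s) and unspliced, so afterwards hasWaitingConsumer() reports false. The class name CancelDemo and its fields are illustrative.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

/** Shows that timed-out and interrupted consumers leave no live request node behind. */
final class CancelDemo {
    static final class Result {
        String timedPoll;            // poll(20 ms) on an empty queue
        boolean interruptedSeen;     // take() threw InterruptedException
        boolean waitingAfterCancel;  // hasWaitingConsumer() after the interrupt
        int sizeAfter;
    }

    static Result demo() throws InterruptedException {
        LinkedTransferQueue<String> q = new LinkedTransferQueue<>();
        Result r = new Result();
        r.timedPoll = q.poll(20, TimeUnit.MILLISECONDS);    // times out: its request node is cancelled

        AtomicBoolean interrupted = new AtomicBoolean();
        Thread consumer = new Thread(() -> {
            try {
                q.take();
            } catch (InterruptedException e) {
                interrupted.set(true);                      // take() cancelled its node and threw
            }
        });
        consumer.start();
        while (!q.hasWaitingConsumer())
            Thread.sleep(1);                                // wait until the request node is enqueued
        consumer.interrupt();
        consumer.join();
        r.interruptedSeen = interrupted.get();
        r.waitingAfterCancel = q.hasWaitingConsumer();
        r.sizeAfter = q.size();
        return r;
    }
}
```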

That completes the main flow. To summarize:

- Every operation, whether an enqueue, a dequeue or a transfer, ends up in xfer(E e, boolean haveData, int how, long nanos). Only the how argument differs.

- If the queue is not empty, xfer looks for a matching node in the queue. It sets that node's item to e and wakes up its waiter thread. A request (reservation) node thus receives the data, and a data node receives null.

- If no matching node is found (including when the queue is empty) and how != NOW, tryAppend() appends a node at the tail of the queue and returns its predecessor.

- If how != NOW && how != ASYNC, awaitMatch() is called to block and wait. This works like awaitFulfill() in SynchronousQueue: it first decides how many times to spin, spins, and then parks. If the thread is interrupted or times out, the node is removed from the queue.

Example

This example is excerpted from "Understanding the LinkedTransferQueue principle of JAVA 1.7 concurrency". Having read the source code above, working through this example should give you a better grasp of it.

1: Head->Data, Input->Data

Match: no match is possible, because both nodes have the same mode (both are data).

Processing node: the new data node is appended after the original one, extending the list by one node, and head stays at the first node. Reservation nodes are handled the same way.

HEAD=DATA->DATA

2: Head->Data, Input->Reservation

Match: the match succeeds. The Data node's item is changed to the reservation's value (null), and the data is returned.

Processing node: no move; head stays in place.

HEAD=DATA (used)

3: Head->Reservation, Input->Data

Match: the match succeeds. The Reservation node's item is changed to the Data's value, and its waiter thread is woken up to take it.

Processing node: no move; head stays in place.

HEAD=RESERVATION (used)

summary

BlockingQueue

The BlockingQueue interface extends the Queue interface and supports two additional operations. When retrieving an element, it can wait for the queue to become non-empty. When storing an element, it can wait for space to become available. Each operation comes in four forms: throwing an exception, returning a special value, blocking, and blocking with a timeout.
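The four forms can be seen on the insertion side of a full ArrayBlockingQueue with capacity 1. The 10 ms timeout and the class and field names are arbitrary choices for the demo.

- add throws IllegalStateException.
- offer returns false.
- put blocks until space frees up.
- Timed offer gives up after the timeout.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

/** The four insertion forms of BlockingQueue on a full queue. */
final class FourForms {
    static final class Result {
        boolean addThrew;       // add on a full queue
        boolean offered;        // offer on a full queue
        boolean timedOffered;   // offer(timeout) on a full queue
        Integer first;          // take() while another thread is blocked in put()
        Integer second;         // the element that put() eventually inserted
    }

    static Result demo() throws InterruptedException {
        BlockingQueue<Integer> q = new ArrayBlockingQueue<>(1);
        q.put(1);                                                // the queue is now full
        Result r = new Result();
        try {
            q.add(2);                                            // form 1: throws an exception
        } catch (IllegalStateException e) {
            r.addThrew = true;
        }
        r.offered = q.offer(2);                                  // form 2: special value (false)
        r.timedOffered = q.offer(2, 10, TimeUnit.MILLISECONDS);  // form 4: false after the timeout

        Thread producer = new Thread(() -> {
            try {
                q.put(2);                                        // form 3: blocks until space frees up
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        r.first = q.take();                                      // frees the slot, unblocking put(2)
        producer.join();
        r.second = q.poll();
        return r;
    }
}
```

The removal side mirrors this: remove() throws NoSuchElementException on an empty queue, poll() returns null, take() blocks and poll(timeout, unit) times out.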

BlockingQueue is often used in producer-consumer scenarios.
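A minimal producer-consumer pairing looks like the sketch below. The bounded LinkedBlockingQueue makes the producer block when the consumer falls behind. The poison-pill value -1 marks the end of the stream; it is an illustrative convention, not part of the BlockingQueue API.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicLong;

/** Minimal producer-consumer with a bounded BlockingQueue and a poison pill. */
final class ProducerConsumer {
    private static final int POISON = -1;   // hypothetical end-of-stream marker

    static long run(int items) throws InterruptedException {
        BlockingQueue<Integer> q = new LinkedBlockingQueue<>(16);   // bounded: put() blocks when full
        AtomicLong sum = new AtomicLong();

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++)
                    q.put(i);
                q.put(POISON);                                      // tell the consumer to stop
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                int x;
                while ((x = q.take()) != POISON)                    // take() blocks while empty
                    sum.addAndGet(x);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        producer.join();
        consumer.join();
        return sum.get();
    }
}
```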

JDK 8 provides seven blocking queues (DelayedWorkQueue in the figure above is an inner class of ScheduledThreadPoolExecutor):

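- ArrayBlockingQueue: A bounded blocking queue backed by an array.

- LinkedBlockingQueue: An optionally bounded blocking queue backed by a linked list. Without an explicit capacity it is effectively unbounded (capacity Integer.MAX_VALUE).

- PriorityBlockingQueue: An unbounded blocking queue that orders elements by priority.

- DelayQueue: An unbounded blocking queue built on a priority queue, whose elements can be taken only after their delay has expired.

- SynchronousQueue: A blocking queue that stores no elements; each insert must wait for a matching remove.

- LinkedTransferQueue: An unbounded blocking queue backed by a linked list that also implements TransferQueue.

- LinkedBlockingDeque: A double-ended blocking queue backed by a linked list.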
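A quick way to feel the differences between these classes is to poke each one once. The probes below use only public JDK APIs, while the class name QueueTraits and the DelayedItem helper are made up for the demo.

- Capacity as reported by remainingCapacity().
- Ordering in PriorityBlockingQueue.
- The no-storage behavior of SynchronousQueue.
- The delay gate of DelayQueue.
- Both ends of LinkedBlockingDeque.
- tryTransfer on LinkedTransferQueue.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

/** One observable trait of each JDK 8 blocking queue. */
final class QueueTraits {
    /** Hypothetical Delayed element that becomes available delayMs after creation. */
    static final class DelayedItem implements Delayed {
        private final long readyAt;   // System.nanoTime() deadline

        DelayedItem(long delayMs) {
            readyAt = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMs);
        }

        @Override public long getDelay(TimeUnit unit) {
            return unit.convert(readyAt - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override public int compareTo(Delayed o) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), o.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    static final class Result {
        int arrayRemaining;      // ArrayBlockingQueue(8), empty
        int linkedRemaining;     // LinkedBlockingQueue(), empty
        Integer priorityHead;    // first element polled from a PriorityBlockingQueue
        boolean syncOffer;       // SynchronousQueue.offer with no waiting consumer
        boolean delayedReady;    // DelayQueue.poll before the delay expires
        Integer dequeFirst;      // LinkedBlockingDeque head after offerLast(1), offerFirst(0)
        boolean transferred;     // LinkedTransferQueue.tryTransfer with no consumer
    }

    static Result demo() {
        Result r = new Result();
        r.arrayRemaining = new ArrayBlockingQueue<Integer>(8).remainingCapacity();    // bounded
        r.linkedRemaining = new LinkedBlockingQueue<Integer>().remainingCapacity();   // Integer.MAX_VALUE

        PriorityBlockingQueue<Integer> pq = new PriorityBlockingQueue<>();
        pq.add(5);
        pq.add(1);
        pq.add(3);
        r.priorityHead = pq.poll();                                  // smallest element first

        r.syncOffer = new SynchronousQueue<Integer>().offer(1);      // nobody waiting: false

        DelayQueue<DelayedItem> dq = new DelayQueue<>();
        dq.add(new DelayedItem(60_000));
        r.delayedReady = dq.poll() != null;                          // delay not expired: null

        LinkedBlockingDeque<Integer> deque = new LinkedBlockingDeque<>();
        deque.offerLast(1);
        deque.offerFirst(0);                                         // insert at the other end
        r.dequeFirst = deque.peekFirst();

        r.transferred = new LinkedTransferQueue<Integer>().tryTransfer(1);   // no consumer: false
        return r;
    }
}
```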