I. What is AQS?

AQS is short for AbstractQueuedSynchronizer, a low-level synchronization framework class provided by Java. It uses an int variable to represent the synchronization state and provides a set of CAS operations to manage that state. The main purpose of AQS is to provide unified underlying support for the concurrent synchronization components in Java; for example, ReentrantLock and CountDownLatch are both implemented on top of AQS. To use AQS, a subclass extends it and implements the methods that its template methods call, and that subclass is then used as an inner class of the synchronization component.

In addition, we need to know what a spin lock is: when a thread tries to acquire a lock that is already held by another thread, it waits in a loop, repeatedly checking whether the lock can be acquired, and does not exit the loop until it has acquired the lock.
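The spin lock idea can be illustrated with a minimal sketch. The class `SpinLock` and the helper `countWithSpinLock` are hypothetical names made up for this example; they are not part of the JDK or of AQS, and a real lock would normally park waiting threads instead of burning CPU.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class: a minimal, non-reentrant spin lock.
class SpinLock {
    // Holds the owning thread, or null when the lock is free.
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread current = Thread.currentThread();
        // Keep looping until the CAS from "free" (null) to "owned by me" succeeds.
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait(); // busy-wait hint to the runtime (Java 9+)
        }
    }

    void unlock() {
        // Only the owning thread can clear the owner field.
        owner.compareAndSet(Thread.currentThread(), null);
    }

    // Starts `threads` workers; each increments a shared counter `perThread` times under the lock.
    static int countWithSpinLock(int threads, int perThread) {
        SpinLock lock = new SpinLock();
        int[] counter = {0};
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }
}
```

Calling `SpinLock.countWithSpinLock(4, 1000)` should return 4000, because every increment happens while the lock is held.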

II. Two modes of AQS (shared mode and exclusive mode)

2.1 Introduction to exclusive mode and shared mode

- Exclusive mode means that if a lock is held by one thread, other threads must wait. A typical scenario: while one thread is writing a file, no other thread is allowed to write it. (ReentrantLock)

- Shared mode means that multiple threads may acquire the same lock at the same time and all of them may succeed. For example, multiple threads can read a file concurrently in shared mode. (CountDownLatch and the read lock of ReadWriteLock)

2.2 implementation differences between exclusive lock and shared lock (specific implementation will be discussed later)

- With an exclusive lock, only one thread can obtain the synchronization state successfully at any one time.

- With a shared lock, more than one thread may hold the synchronization state; how many is determined by the upper-level synchronization component.

- In the exclusive lock queue, when the thread of the head node finishes, it wakes up only its direct successor node.

- In the shared lock queue, when the thread of the head node is released, the wake-up is propagated to the nodes behind it.

- In the shared lock, multiple threads (i.e. nodes in the synchronization queue) can obtain the synchronization state successfully at the same time.

Whether the lock is exclusive or shared, acquiring it is essentially an operation on the state variable in AQS. state is a volatile int that is updated atomically with CAS; it can represent the lock state, the number of resources, and so on.
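To make "state as a resource count" concrete, here is a minimal sketch of a shared-mode synchronizer. `SimplePermits` is a hypothetical class written for this example (it behaves roughly like a tiny nonfair semaphore); only `AbstractQueuedSynchronizer` and the methods it declares come from the JDK.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical demo class: state holds the number of permits still available.
class SimplePermits {
    private static final class Sync extends AbstractQueuedSynchronizer {
        Sync(int permits) {
            setState(permits);
        }

        @Override
        protected int tryAcquireShared(int acquires) {
            for (;;) {
                int available = getState();
                int remaining = available - acquires;
                // Negative: fail without touching state. Otherwise CAS the new count in.
                if (remaining < 0 || compareAndSetState(available, remaining)) {
                    return remaining;
                }
            }
        }

        @Override
        protected boolean tryReleaseShared(int releases) {
            for (;;) {
                int current = getState();
                if (compareAndSetState(current, current + releases)) {
                    return true; // always let AQS wake up waiting threads
                }
            }
        }

        int permits() {
            return getState();
        }
    }

    private final Sync sync;

    SimplePermits(int permits) {
        this.sync = new Sync(permits);
    }

    void acquire()       { sync.acquireShared(1); }                 // blocks while no permit is left
    boolean tryAcquire() { return sync.tryAcquireShared(1) >= 0; }  // never blocks
    void release()       { sync.releaseShared(1); }
    int available()      { return sync.permits(); }
}
```

With `new SimplePermits(2)`, two calls to `tryAcquire()` succeed and a third one fails until `release()` is called, which mirrors the rule that several threads can hold a shared synchronization state at once.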

III. Synchronization queue

The synchronization queue is an important part of AQS. It is a doubly linked queue that follows the FIFO (first in, first out) principle and is mainly used to store the threads blocked on the lock. When a thread attempts to acquire the lock and the lock is already occupied, the current thread is wrapped in a Node and appended to the tail of the synchronization queue; the head Node of the queue is the one that has successfully acquired the lock. When the thread of the head Node releases the lock, it wakes up its successor Node and releases the reference to the current head Node.

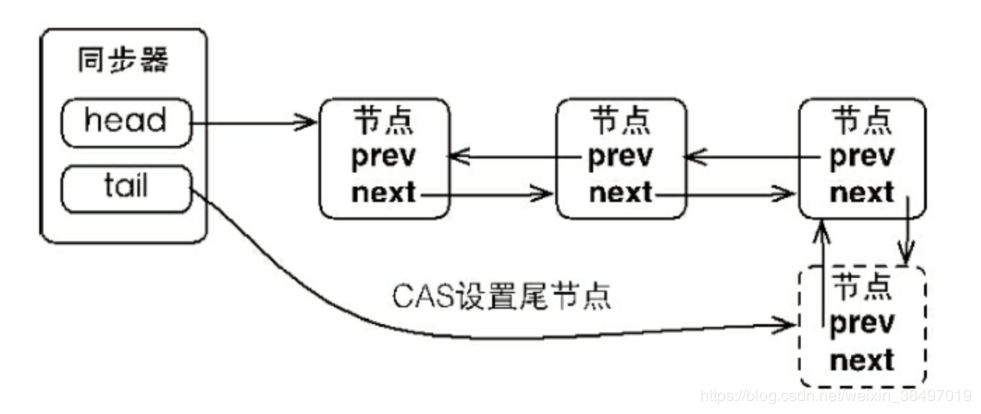

Nodes join the synchronization queue:

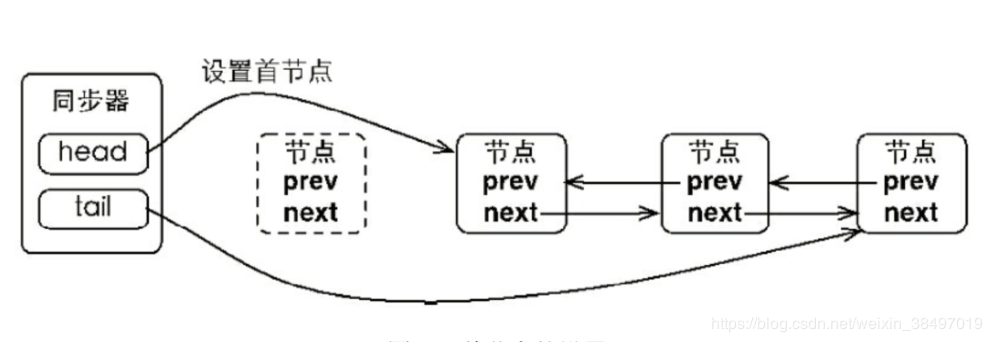

Setting the head node

The synchronization queue is made up of Node objects. Let's first look at Node, an inner class of AQS. The source code is as follows:

```java
static final class Node {
    /** Marker to indicate a node is waiting in shared mode */
    static final Node SHARED = new Node();
    /** Marker to indicate a node is waiting in exclusive mode */
    static final Node EXCLUSIVE = null;

    /** waitStatus value to indicate thread has cancelled */
    static final int CANCELLED =  1;
    /** waitStatus value to indicate successor's thread needs unparking */
    static final int SIGNAL    = -1;
    /** waitStatus value to indicate thread is waiting on condition */
    static final int CONDITION = -2;
    /**
     * waitStatus value to indicate the next acquireShared should
     * unconditionally propagate
     */
    static final int PROPAGATE = -3;

    /**
     * Status field, taking on only the values:
     *   SIGNAL:     The successor of this node is (or will soon be)
     *               blocked (via park), so the current node must
     *               unpark its successor when it releases or
     *               cancels. To avoid races, acquire methods must
     *               first indicate they need a signal,
     *               then retry the atomic acquire, and then,
     *               on failure, block.
     *   CANCELLED:  This node is cancelled due to timeout or interrupt.
     *               Nodes never leave this state. In particular,
     *               a thread with cancelled node never again blocks.
     *   CONDITION:  This node is currently on a condition queue.
     *               It will not be used as a sync queue node
     *               until transferred, at which time the status
     *               will be set to 0. (Use of this value here has
     *               nothing to do with the other uses of the
     *               field, but simplifies mechanics.)
     *   PROPAGATE:  A releaseShared should be propagated to other
     *               nodes. This is set (for head node only) in
     *               doReleaseShared to ensure propagation
     *               continues, even if other operations have
     *               since intervened.
     *   0:          None of the above
     *
     * The values are arranged numerically to simplify use.
     * Non-negative values mean that a node doesn't need to
     * signal. So, most code doesn't need to check for particular
     * values, just for sign.
     *
     * The field is initialized to 0 for normal sync nodes, and
     * CONDITION for condition nodes.  It is modified using CAS
     * (or when possible, unconditional volatile writes).
     */
    volatile int waitStatus;

    /**
     * Link to predecessor node that current node/thread relies on
     * for checking waitStatus. Assigned during enqueuing, and nulled
     * out (for sake of GC) only upon dequeuing.  Also, upon
     * cancellation of a predecessor, we short-circuit while
     * finding a non-cancelled one, which will always exist
     * because the head node is never cancelled: A node becomes
     * head only as a result of successful acquire. A
     * cancelled thread never succeeds in acquiring, and a thread only
     * cancels itself, not any other node.
     */
    volatile Node prev;

    /**
     * Link to the successor node that the current node/thread
     * unparks upon release. Assigned during enqueuing, adjusted
     * when bypassing cancelled predecessors, and nulled out (for
     * sake of GC) when dequeued.  The enq operation does not
     * assign next field of a predecessor until after attachment,
     * so seeing a null next field does not necessarily mean that
     * node is at end of queue. However, if a next field appears
     * to be null, we can scan prev's from the tail to
     * double-check.  The next field of cancelled nodes is set to
     * point to the node itself instead of null, to make life
     * easier for isOnSyncQueue.
     */
    volatile Node next;

    /**
     * The thread that enqueued this node.  Initialized on
     * construction and nulled out after use.
     */
    volatile Thread thread;

    /**
     * Link to next node waiting on condition, or the special
     * value SHARED.  Because condition queues are accessed only
     * when holding in exclusive mode, we just need a simple
     * linked queue to hold nodes while they are waiting on
     * conditions. They are then transferred to the queue to
     * re-acquire. And because conditions can only be exclusive,
     * we save a field by using special value to indicate shared
     * mode.
     */
    Node nextWaiter;
}
```

The implementation of Node is very simple: it is an ordinary doubly linked list node. Its waiting states (waitStatus) are as follows:

- CANCELLED: the value is 1. The current node has been cancelled due to a timeout or an interrupt.

- SIGNAL: the value is -1, indicating that the successor of the current node is (or will soon be) blocked, so the current node must call unpark to wake up its successor when it releases or cancels.

- CONDITION: the value is -2. The current node is waiting on a condition queue; this state is used together with Condition.

- PROPAGATE: the value is -3. (For shared locks) a releaseShared() operation needs to be propagated to other nodes. This state is set in doReleaseShared to ensure that subsequent nodes can obtain shared resources.

- 0: the initial state. The current node is in the sync queue, waiting to acquire the lock.

In addition, AQS already provides the basic operations of a synchronizer. To write a custom synchronizer, we only need to implement the following methods, depending on the mode in use (this is the key point):

- tryAcquire(int): exclusive mode. Tries to acquire the resource; returns true if it succeeds and false if it fails.

- tryRelease(int): exclusive mode. Tries to release the resource; returns true if it succeeds and false if it fails.

- tryAcquireShared(int): shared mode. Tries to acquire the resource. A negative number indicates failure; 0 indicates success with no remaining resources available; a positive number indicates success with resources remaining.

- tryReleaseShared(int): shared mode. Tries to release the resource; returns true if it succeeds and false if it fails.

- isHeldExclusively(): whether the current thread holds the resource exclusively. It only needs to be implemented when Condition is used.
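As a sketch of how these methods fit together, the following non-reentrant mutex overrides only the exclusive-mode methods and keeps the AQS subclass as a private inner class. The class name `Mutex` and its public methods are choices made for this example (it is modeled on the kind of example shown in the AQS Javadoc), not something defined by the text above.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical demo class: a non-reentrant mutex. state 0 = unlocked, 1 = locked.
class Mutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int ignored) {
            // Succeeds only if the state moves from 0 to 1.
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int ignored) {
            if (getState() == 0) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0); // plain write is enough: only the holder releases
            return true;
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1 && getExclusiveOwnerThread() == Thread.currentThread();
        }

        boolean isLocked() {
            return getState() != 0;
        }
    }

    private final Sync sync = new Sync();

    void lock()        { sync.acquire(1); }           // queues and parks if the lock is taken
    boolean tryLock()  { return sync.tryAcquire(1); } // single attempt, never blocks
    void unlock()      { sync.release(1); }
    boolean isLocked() { return sync.isLocked(); }
}
```

Because `tryAcquire` does not check the owner thread, a second `tryLock()` from the thread that already holds the mutex returns false; making the lock reentrant would require the kind of owner check that ReentrantLock performs.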

IV. Acquisition and release process of exclusive lock and shared lock

4.1 Acquisition and release process of exclusive lock

Acquiring the exclusive lock

The source code is as follows:

```java
// Acquire the lock in exclusive mode
public final void acquire(int arg) {
    if (!tryAcquire(arg) &&
        acquireQueued(addWaiter(Node.EXCLUSIVE), arg))
        selfInterrupt();
}
```

From the source code, we can see that three methods are called internally: tryAcquire(int), addWaiter(Node), and acquireQueued(Node, int). Later, each method will be analyzed in detail. The flow of the acquire(int) method is as follows:

- Call the entry method acquire(int).

- Call the template method tryAcquire(int) to try to acquire the lock. If it succeeds, acquire returns directly. If it fails, go on to the next step.

- Call addWaiter(Node) to wrap the current thread in a Node and use CAS to append it to the tail of the synchronization queue; the thread of that Node then starts spinning.

- While spinning, acquireQueued(Node, int) first checks ① whether the node's predecessor is the head node and ② whether the synchronization state can be obtained successfully. If both conditions hold, the node of the current thread is set as the head node. If not, LockSupport.park(this) suspends the current thread until it is woken up.

- If the thread is interrupted while waiting, it does not respond at that moment. After the resource has been obtained, selfInterrupt() is called according to the returned interrupt status to restore the interrupt flag.
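The last step can be observed from the outside with ReentrantLock, whose lock() goes through acquire(1). The sketch below is only an illustration: `InterruptReassertDemo` and its method are hypothetical names, and the busy-wait on `hasQueuedThread` is just a simple way to make sure the worker is already in the synchronization queue before it is interrupted.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: lock() ignores interrupts while queued,
// but the interrupt flag is set again once the lock has been acquired.
class InterruptReassertDemo {
    static boolean interruptedAfterLock() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean flagAfterAcquire = new AtomicBoolean();

        lock.lock(); // the calling thread holds the lock, so the worker has to queue
        Thread worker = new Thread(() -> {
            lock.lock(); // does not throw InterruptedException
            try {
                flagAfterAcquire.set(Thread.currentThread().isInterrupted());
            } finally {
                lock.unlock();
            }
        });
        worker.start();

        // Wait until the worker's node is in the synchronization queue.
        while (!lock.hasQueuedThread(worker)) {
            Thread.onSpinWait();
        }
        worker.interrupt(); // interrupt while the worker is waiting
        lock.unlock();      // now let the worker acquire the lock

        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return flagAfterAcquire.get();
    }
}
```

`interruptedAfterLock()` is expected to return true: the worker keeps waiting despite the interrupt, and sees its interrupt flag set after `lock()` returns.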

Releasing the exclusive lock

Release the specified amount of resources in exclusive mode. After a successful release, unparkSuccessor is called to wake up the node after head.

```java
// Release the lock in exclusive mode
public final boolean release(int arg) {
    if (tryRelease(arg)) { // Try to free resources
        Node h = head; // Head node
        if (h != null && h.waitStatus != 0)
            unparkSuccessor(h); // Wake up the next node of the head
        return true;
    }
    return false;
}
```

We can see from the source code that two methods are mainly called internally: tryRelease(int) and unparkSuccessor(Node). Later, each method will be analyzed in detail. The flow of the release(int) method is as follows:

- Call the entry method release(int).

- Call the template method tryRelease(int) to release the synchronization state.

- Get the successor of the current (head) node.

- Use LockSupport.unpark(currentNode.next.thread) to wake up that successor node.

4.2 acquisition and release process of shared lock

Acquiring the shared lock

```java
// Acquire the lock in shared mode
public final void acquireShared(int arg) {
    if (tryAcquireShared(arg) < 0)
        doAcquireShared(arg);
}
```

We can see from the source code that two methods are mainly called internally: tryAcquireShared(int) and doAcquireShared(int). tryAcquireShared needs to be implemented by the custom synchronizer. Each method will be analyzed in detail later. The flow of the acquireShared method is as follows:

- Call the entry method acquireShared(int).

- Enter the template method tryAcquireShared(int) to obtain the synchronization state. If the return value is >= 0, the synchronization state was obtained successfully (a positive value means there are still resources left), and acquireShared returns directly.

- If the return value of tryAcquireShared(int) is less than 0, obtaining the synchronization state failed. A Node of shared type is added to the tail of the queue, and the Node then starts spinning.

- While spinning, first check whether the predecessor node is the head node && tryAcquireShared() >= 0 (that is, the synchronization state is obtained successfully).

- If yes, the current node may proceed. The current node is set as the head node, and the wake-up is propagated to the subsequent nodes.

- If not, use LockSupport.park(this) to suspend the current thread until it is woken up by its predecessor node.

Releasing the shared lock

```java
// Release the lock in shared mode
public final boolean releaseShared(int arg) {
    if (tryReleaseShared(arg)) {
        doReleaseShared(); // Release lock and wake up subsequent nodes
        return true;
    }
    return false;
}
```

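Call the template method tryReleaseShared(arg) to release the synchronization state.

If the release succeeds, doReleaseShared() wakes up the successor of the head node with LockSupport.unpark(nextNode.thread). Each awakened shared node that obtains the state continues the propagation, so the waiting shared nodes are woken up one after another.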
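The effect of this propagation can be seen with CountDownLatch, which is built on the shared mode: a single release lets every waiting thread through. The sketch below uses the hypothetical class `SharedWakeupDemo`; note that a waiter that has not yet queued when the gate opens simply passes without blocking.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: one shared release lets all waiters proceed.
class SharedWakeupDemo {
    // Returns true if all `waiters` threads got past the gate after ONE countDown().
    static boolean allWaitersReleased(int waiters) {
        CountDownLatch gate = new CountDownLatch(1);         // the shared-mode synchronizer under test
        CountDownLatch passed = new CountDownLatch(waiters); // counts threads that got through

        for (int i = 0; i < waiters; i++) {
            Thread t = new Thread(() -> {
                try {
                    gate.await();       // shared acquire; blocks while the count is not 0
                    passed.countDown();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.setDaemon(true);
            t.start();
        }

        gate.countDown(); // a single shared release
        try {
            return passed.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

With an exclusive lock the same experiment would let only one waiter through per release.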

V. ReentrantLock

A reentrant lock means that after the current thread has successfully acquired the lock, entering the critical section again does not block the thread against itself. ReentrantLock and synchronized are both reentrant locks in Java. synchronized is implemented by the JVM, whereas ReentrantLock is implemented on top of AQS.

ReentrantLock also provides two modes: fair lock and unfair (nonfair) lock.

The basic principle of a reentrant lock is to check whether the thread that currently holds the lock is the current thread. If so, it can enter the critical section again; if not, it blocks. This ownership check is the most important piece of logic in a reentrant lock.
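Reentrancy is easy to observe through `ReentrantLock.getHoldCount()`, which reports how many times the current thread holds the lock. The class `ReentrancyDemo` below is a hypothetical helper written for this illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: the owner thread may lock again without blocking itself.
class ReentrancyDemo {
    // Returns the hold counts seen after the first lock, after the nested lock,
    // and after both unlocks.
    static int[] holdCounts() {
        ReentrantLock lock = new ReentrantLock();
        int[] counts = new int[3];

        lock.lock();
        try {
            counts[0] = lock.getHoldCount(); // 1
            lock.lock();                     // same thread: state is incremented, no blocking
            try {
                counts[1] = lock.getHoldCount(); // 2
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
        counts[2] = lock.getHoldCount(); // 0: fully released
        return counts;
    }

    public static void main(String[] args) {
        int[] c = holdCounts();
        System.out.println(c[0] + ", " + c[1] + ", " + c[2]); // prints "1, 2, 0"
    }
}
```

The lock is only free for other threads once the number of unlock() calls matches the number of lock() calls.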

5.1 fair lock

Fair lock means that when multiple threads try to acquire a lock, the order in which they succeed is the same as the order in which they requested it. Therefore, the core of the fair lock is to determine whether the current node in the synchronization queue has a predecessor node.

Since ReentrantLock is implemented based on AQS, the underlying layer obtains the lock by operating the synchronization state. Here is the implementation logic of fair lock:

```java
/**
 * Fair version of tryAcquire. Don't grant access unless
 * recursive call or no waiters or is first.
 */
protected final boolean tryAcquire(int acquires) {
    // Get current thread
    final Thread current = Thread.currentThread();
    // Get synchronization state through AQS
    int c = getState();
    // If the synchronization state is 0, the critical section is unlocked.
    if (c == 0) {
        // Core of the fair lock: check whether the current node in the
        // synchronization queue has a predecessor node
        if (!hasQueuedPredecessors() &&
            compareAndSetState(0, acquires)) {
            // Set current thread as lock holder
            setExclusiveOwnerThread(current);
            return true;
        }
    }
    // The critical section is locked, and the thread holding the lock is
    // the current thread (the key to reentrancy)
    else if (current == getExclusiveOwnerThread()) {
        // Increment synchronization state
        int nextc = c + acquires;
        if (nextc < 0)
            throw new Error("Maximum lock count exceeded");
        setState(nextc);
        return true;
    }
    return false;
}
```

5.2 unfair lock

Unfair lock means that when the lock is available, the most recently arriving thread has a chance to grab it, whether or not other threads are already waiting on the lock.

Let's look at the implementation logic of the unfair lock. The following code is its core implementation. As long as the synchronization state is 0, any thread calling lock may acquire the lock, instead of following the FIFO (first in, first out) order of lock requests.

```java
/**
 * Performs non-fair tryLock. tryAcquire is implemented in
 * subclasses, but both need nonfair try for trylock method.
 */
final boolean nonfairTryAcquire(int acquires) {
    // Get current thread
    final Thread current = Thread.currentThread();
    // Get synchronization state through AQS
    int c = getState();
    // If the synchronization state is 0, the critical section is unlocked.
    if (c == 0) {
        // Modify synchronization state, i.e. lock
        if (compareAndSetState(0, acquires)) {
            // Set current thread as lock holder
            setExclusiveOwnerThread(current);
            return true;
        }
    }
    // The critical section is locked, and the thread holding the lock is
    // the current thread (the key to reentrancy)
    else if (current == getExclusiveOwnerThread()) {
        // Increment synchronization state
        int nextc = c + acquires;
        if (nextc < 0) // overflow
            throw new Error("Maximum lock count exceeded");
        setState(nextc);
        return true;
    }
    return false;
}
```

From the above code, we can see that the only difference between the fair lock and the unfair lock is the check for whether the current node has a predecessor: !hasQueuedPredecessors() &&. As described for AQS, if the current thread fails to acquire the lock, it is added to the AQS synchronization queue. So if a node in the synchronization queue has a predecessor, the thread of that predecessor requested the lock earlier than the current thread, and the current thread can only acquire the lock after the earlier thread has released it.

VI. ReentrantReadWriteLock

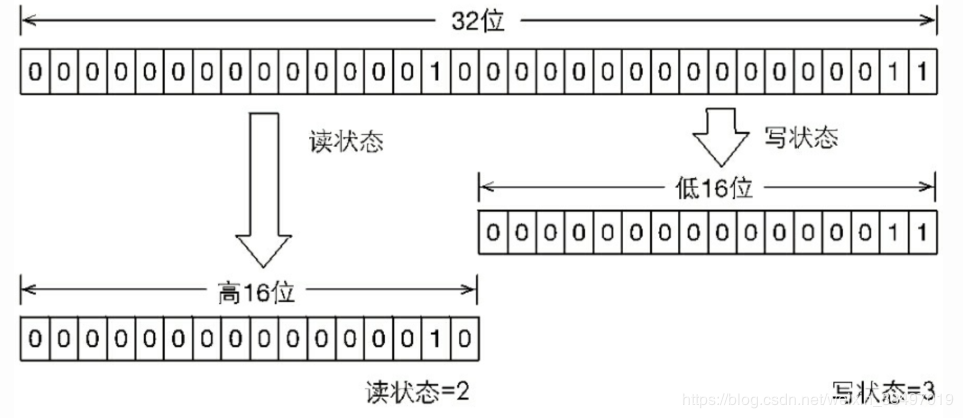

Java provides ReentrantReadWriteLock, a read-write lock implementation based on AQS. It works by splitting the synchronization variable state into its high 16 bits and its low 16 bits: the high 16 bits represent the read lock and the low 16 bits represent the write lock.
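The bit split can be written out as a few lines of arithmetic. The sketch below is a standalone illustration in a hypothetical class `RwState`; the constant and method names are modeled on the ones that appear in the ReentrantReadWriteLock source quoted in this section (SHARED_UNIT, MAX_COUNT, sharedCount, exclusiveCount), but this class is not the JDK's implementation.

```java
// Hypothetical demo class: packing a read count and a write count into one int.
class RwState {
    static final int SHARED_SHIFT   = 16;
    static final int SHARED_UNIT    = 1 << SHARED_SHIFT;       // adding this means "one more read hold"
    static final int MAX_COUNT      = (1 << SHARED_SHIFT) - 1; // 65535, the limit for each half
    static final int EXCLUSIVE_MASK = (1 << SHARED_SHIFT) - 1; // selects the low 16 bits

    /** Number of read holds: the high 16 bits of the state. */
    static int sharedCount(int c) {
        return c >>> SHARED_SHIFT;
    }

    /** Number of write holds: the low 16 bits of the state. */
    static int exclusiveCount(int c) {
        return c & EXCLUSIVE_MASK;
    }

    public static void main(String[] args) {
        int state = 0;
        state += SHARED_UNIT; // one read lock acquired
        state += SHARED_UNIT; // a second read lock acquired
        state += 1;           // one write hold, used here only to show the packing
        System.out.println(sharedCount(state) + " readers, " + exclusiveCount(state) + " writer holds");
        // prints "2 readers, 1 writer holds"
    }
}
```

This is why acquiring a read lock adds SHARED_UNIT to state while acquiring the write lock adds 1, and why each count is limited to MAX_COUNT.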

6.1 acquisition and release of read lock

Read lock is a shared lock. First look at the source code: ReadLock.java

```java
public static class ReadLock implements Lock, java.io.Serializable {
    private static final long serialVersionUID = -5992448646407690164L;
    // The AQS object held by this lock
    private final Sync sync;

    protected ReadLock(ReentrantReadWriteLock lock) {
        sync = lock.sync;
    }

    // Acquire the shared lock
    public void lock() {
        sync.acquireShared(1);
    }

    // Acquire the shared lock (responds to interrupts)
    public void lockInterruptibly() throws InterruptedException {
        sync.acquireSharedInterruptibly(1);
    }

    // Try to acquire the shared lock within the given timeout
    public boolean tryLock(long timeout, TimeUnit unit)
            throws InterruptedException {
        return sync.tryAcquireSharedNanos(1, unit.toNanos(timeout));
    }

    // Release the lock
    public void unlock() {
        sync.releaseShared(1);
    }

    // Conditions are not supported by the read lock
    public Condition newCondition() {
        throw new UnsupportedOperationException();
    }

    public String toString() {
        int r = sync.getReadLockCount();
        return super.toString() +
            "[Read locks = " + r + "]";
    }
}
```

Acquisition of read lock

The lock method of the read lock calls acquireShared of the synchronizer sync. acquireShared has already been analyzed above in the part on shared and exclusive locks, so here we focus on the implementation of tryAcquireShared in ReentrantReadWriteLock. The source code of tryAcquireShared(int) is as follows:

```java
protected final int tryAcquireShared(int unused) {
    // Get current thread
    Thread current = Thread.currentThread();
    // Get synchronization state through AQS
    int c = getState();
    // If the write lock is held by another thread, return -1.
    // The thread that holds the write lock may still acquire the read lock.
    if (exclusiveCount(c) != 0 &&
        getExclusiveOwnerThread() != current)
        return -1;
    // Get the number of read locks
    int r = sharedCount(c);
    // Check whether this thread is eligible to acquire the read lock
    if (!readerShouldBlock() &&
        r < MAX_COUNT &&
        compareAndSetState(c, c + SHARED_UNIT)) {
        // First read lock: initialize firstReader and firstReaderHoldCount
        if (r == 0) {
            firstReader = current;
            firstReaderHoldCount = 1;
        } else if (firstReader == current) {
            // The current thread is the first one that acquired a read lock
            firstReaderHoldCount++;
        } else {
            // Update cachedHoldCounter
            HoldCounter rh = cachedHoldCounter;
            if (rh == null || rh.tid != getThreadId(current))
                cachedHoldCounter = rh = readHolds.get();
            else if (rh.count == 0)
                readHolds.set(rh);
            rh.count++; // Update the number of read locks held by this thread
        }
        return 1;
    }
    return fullTryAcquireShared(current); // Fall back to the full retry loop
}
```

Read lock acquisition steps are explained as follows:

- If the write lock is held by a thread other than the current thread, return -1 directly (acquisition failed). If the write lock is held by the current thread, the read lock can still be acquired.

- If there is no need to block (determined by fairness) when trying to acquire the lock, and the shared count of the read lock is less than MAX_COUNT, update the shared count of the read lock directly with CAS, and finally increase by 1 the number of read locks held by the current thread.

- If the second step fails, call fullTryAcquireShared to retry acquiring the read lock (see below).

The source code of fullTryAcquireShared is as follows:
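The first rule (the thread that holds the write lock may still take the read lock) is what makes lock downgrading possible. The sketch below shows it with the public ReentrantReadWriteLock API; the class `DowngradeDemo` and its method are hypothetical names used only for this illustration.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical demo class: downgrading from the write lock to the read lock.
class DowngradeDemo {
    // Returns {write holds after writeLock, read holds after readLock,
    //          1 if still write-locked after releasing the write lock else 0}.
    static int[] downgrade() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        int[] seen = new int[3];

        rw.writeLock().lock();
        seen[0] = rw.getWriteHoldCount();     // 1

        rw.readLock().lock();                 // allowed: this thread owns the write lock
        seen[1] = rw.getReadHoldCount();      // 1

        rw.writeLock().unlock();              // downgrade: only the read lock remains
        seen[2] = rw.isWriteLocked() ? 1 : 0; // 0

        rw.readLock().unlock();
        return seen;
    }

    public static void main(String[] args) {
        int[] s = downgrade();
        System.out.println(s[0] + ", " + s[1] + ", " + s[2]); // prints "1, 1, 0"
    }
}
```

The reverse direction does not work: a thread that holds only the read lock and calls `writeLock().lock()` would block forever, because the write lock cannot be acquired while any read lock is held.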

```java
final int fullTryAcquireShared(Thread current) {
    HoldCounter rh = null;
    for (;;) { // spin
        int c = getState();
        // If the write lock is held by another thread, return -1.
        // The thread that holds the write lock may still acquire the read lock.
        if (exclusiveCount(c) != 0) {
            if (getExclusiveOwnerThread() != current)
                return -1;
            // else we hold the exclusive lock; blocking here
            // would cause deadlock.
        } else if (readerShouldBlock()) { // The reader should block
            // Make sure we're not acquiring read lock reentrantly
            // If the current thread is the first thread that acquired the read lock, continue.
            if (firstReader == current) {
                // assert firstReaderHoldCount > 0;
            } else {
                // Look up the lock counter
                if (rh == null) {
                    rh = cachedHoldCounter;
                    if (rh == null || rh.tid != getThreadId(current)) {
                        rh = readHolds.get();
                        if (rh.count == 0)
                            readHolds.remove(); // Current thread holds 0 read locks, remove counter
                    }
                }
                if (rh.count == 0)
                    return -1;
            }
        }
        if (sharedCount(c) == MAX_COUNT) // Maximum number of read locks exceeded
            throw new Error("Maximum lock count exceeded");
        if (compareAndSetState(c, c + SHARED_UNIT)) { // CAS update of the read lock count
            if (sharedCount(c) == 0) { // First read lock
                firstReader = current;
                firstReaderHoldCount = 1;
            } else if (firstReader == current) {
                // The current thread is the first reader: update its hold count
                firstReaderHoldCount++;
            } else {
                // Update lock counter
                if (rh == null)
                    rh = cachedHoldCounter;
                if (rh == null || rh.tid != getThreadId(current))
                    rh = readHolds.get(); // Switch to the counter of the current thread
                else if (rh.count == 0)
                    readHolds.set(rh);
                rh.count++;
                cachedHoldCounter = rh; // cache for release
            }
            return 1;
        }
    }
}
```

Release of read lock

The unlock method of the read lock calls releaseShared of the synchronizer sync. releaseShared has already been analyzed above in the part on shared and exclusive locks, so here we focus on the implementation of tryReleaseShared in ReentrantReadWriteLock. The source code of tryReleaseShared(int) is as follows:

```java
protected final boolean tryReleaseShared(int unused) {
    Thread current = Thread.currentThread();
    if (firstReader == current) { // The current thread is the first reader
        // assert firstReaderHoldCount > 0;
        // Update this thread's hold count
        if (firstReaderHoldCount == 1)
            firstReader = null;
        else
            firstReaderHoldCount--;
    } else {
        HoldCounter rh = cachedHoldCounter;
        if (rh == null || rh.tid != getThreadId(current))
            rh = readHolds.get(); // Get the counter of the current thread
        int count = rh.count;
        if (count <= 1) {
            readHolds.remove();
            if (count <= 0)
                throw unmatchedUnlockException();
        }
        --rh.count;
    }
    for (;;) { // spin
        int c = getState();
        int nextc = c - SHARED_UNIT; // State after releasing one read hold
        if (compareAndSetState(c, nextc))
            // Releasing the read lock has no effect on readers,
            // but it may allow waiting writers to proceed if
            // both read and write locks are now free.
            return nextc == 0;
    }
}
```

Read lock release steps are explained as follows:

- Update the read lock hold count of the current thread.

- Update state with CAS to release the lock. A spin loop is used here so that the CAS eventually succeeds even when there is contention on state.

6.2 acquisition and release of write lock

Acquisition of write lock

Write lock is an exclusive lock, so let's take a look at the implementation of tryAcquire(int) in ReentrantReadWriteLock.WriteLock:

```java
protected final boolean tryAcquire(int acquires) {
    // Get current thread
    Thread current = Thread.currentThread();
    // Get synchronization state through AQS
    int c = getState();
    // Get the write lock (exclusive) count
    int w = exclusiveCount(c);
    // If the synchronization state is not 0, a read lock or a write lock is held
    if (c != 0) {
        // If a read lock is held (c != 0 && w == 0), the write lock cannot be
        // acquired (this ensures that writes are visible to readers).
        // If the current thread is not the thread that holds the write lock,
        // the write lock cannot be acquired (the write lock is exclusive).
        if (w == 0 || current != getExclusiveOwnerThread())
            return false;
        // The write count lives in the low 16 bits of state; increasing it
        // implements reentrancy, so check that it does not exceed the maximum.
        if (w + exclusiveCount(acquires) > MAX_COUNT)
            throw new Error("Maximum lock count exceeded");
        // Reentrant acquire
        setState(c + acquires);
        return true;
    }
    if (writerShouldBlock() ||
        !compareAndSetState(c, c + acquires))
        return false;
    // Set the current thread as the owner of the write lock
    setExclusiveOwnerThread(current);
    return true;
}
```

Write lock release

Releasing the write lock works basically the same way as releasing an exclusive lock: each release decrements the write lock synchronization state, and the write lock is completely released only when the write count reaches 0.

```java
/*
 * Note that tryRelease and tryAcquire can be called by
 * Conditions. So it is possible that their arguments contain
 * both read and write holds that are all released during a
 * condition wait and re-established in tryAcquire.
 */
protected final boolean tryRelease(int releases) {
    if (!isHeldExclusively())
        throw new IllegalMonitorStateException();
    int nextc = getState() - releases;
    boolean free = exclusiveCount(nextc) == 0;
    if (free)
        setExclusiveOwnerThread(null);
    setState(nextc);
    return free;
}
```

VII. The latch CountDownLatch

CountDownLatch is a concurrent construct that allows one or more threads to wait for a series of specified operations to complete.

Sometimes several threads need to work at the same time, in any order relative to each other, while one more thread has to wait for all of them to finish before it can start. For example, multiple threads download a large file in blocks, each thread downloading only a fixed part, and at the end another thread splices all the segments together. In this case we can consider using CountDownLatch to coordinate the threads.

CountDownLatch is initialized with a given number. Every time countDown() is called, the number is reduced by one. By calling one of the await() methods, the thread can block and wait for this number to reach zero.
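The download scenario can be sketched as follows. The "download" is simulated by building a string, and the class `LatchDownloadDemo` with its method `downloadAndJoin` is a hypothetical helper made up for this example.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical demo class: workers fetch segments, the caller waits and splices them.
class LatchDownloadDemo {
    static String downloadAndJoin(int parts) {
        String[] segments = new String[parts];
        CountDownLatch done = new CountDownLatch(parts); // one count per segment

        for (int i = 0; i < parts; i++) {
            final int index = i;
            new Thread(() -> {
                segments[index] = "part" + index; // stand-in for a real download
                done.countDown();                 // this segment is finished
            }).start();
        }

        try {
            done.await(); // blocks until every worker has called countDown()
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        // Writes made before countDown() are visible after await() returns.
        return String.join("|", segments);
    }

    public static void main(String[] args) {
        System.out.println(downloadAndJoin(3)); // prints "part0|part1|part2"
    }
}
```

The splicing step only runs after the count has reached zero, regardless of the order in which the workers finish.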

Let's first look at the construction method of CountDownLatch:

```java
public CountDownLatch(int count) {
    if (count < 0) throw new IllegalArgumentException("count < 0");
    this.sync = new Sync(count);
}
```

The constructor takes an integer count. After that, each call to the countDown() method of CountDownLatch decreases count by one. Threads that called the await() method continue only once count has been reduced to 0.

A basic use of the constructor is shown below:

```java
public static final int requestTotal = 20; // Total requests
CountDownLatch countDownLatch = new CountDownLatch(requestTotal);
```

CountDownLatch does not have many methods. Here they are one by one:

- await(): the calling thread cannot continue until the count passed to the constructor has been reduced to 0;

- await(long timeout, TimeUnit unit): same as the await() method above, but with a time limit. The calling thread continues once the specified timeout has elapsed, whether or not the count has been reduced to 0 (the method returns false if the timeout elapsed first);

- countDown(): decrease the count of the CountDownLatch by 1;

- long getCount(): get the value currently maintained by the CountDownLatch.
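A short sketch of how these methods behave together, in particular the boolean result of the timed await. `LatchTimeoutDemo` is a hypothetical class and the 50 ms timeout is an arbitrary value chosen for the example.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: timed await before and after the count reaches zero.
class LatchTimeoutDemo {
    // Returns {result of await while count is 1, result of await after countDown}.
    static boolean[] awaitResults() {
        CountDownLatch latch = new CountDownLatch(1);
        boolean[] results = new boolean[2];
        try {
            // Nobody counts down, so this gives up after the timeout and returns false.
            results[0] = latch.await(50, TimeUnit.MILLISECONDS);
            latch.countDown(); // the count drops from 1 to 0
            // The count is already 0, so this returns true without waiting.
            results[1] = latch.await(50, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return results;
    }

    // The count never goes below zero, even with extra countDown() calls.
    static long countAfterExtraCountDown() {
        CountDownLatch latch = new CountDownLatch(1);
        latch.countDown();
        latch.countDown(); // no effect: the count is already 0
        return latch.getCount();
    }
}
```

A CountDownLatch cannot be reset: once the count has reached zero, every later await() returns immediately.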

Here are some important methods of CountDownLatch.

Source code of await() method:

```java
public void await() throws InterruptedException {
    sync.acquireSharedInterruptibly(1);
}

//---------------------------I'm the divider------------------------------------
// Implementation of acquireSharedInterruptibly(1) in AQS
public final void acquireSharedInterruptibly(int arg)
        throws InterruptedException {
    if (Thread.interrupted())
        throw new InterruptedException();
    if (tryAcquireShared(arg) < 0)
        doAcquireSharedInterruptibly(arg);
}

//---------------------------I'm the divider------------------------------------
// Implementation of tryAcquireShared in CountDownLatch
protected int tryAcquireShared(int acquires) {
    return (getState() == 0) ? 1 : -1;
}
```

The implementation of await() is very simple. Internally it acquires a shared lock, and whether the lock is obtained is decided by the remaining resource count state (state == 0 ? 1 : -1). As mentioned earlier, tryAcquireShared defines the meaning of its return value: a positive number is returned when the shared lock is acquired successfully and resources are still available; 0 is returned when the shared lock is acquired successfully but no resources remain; a negative number is returned when acquiring the shared lock fails. In other words, as long as state != 0, the thread enters the waiting queue and blocks.

countDown() method source code:

```java
public void countDown() {
    sync.releaseShared(1);
}

//---------------------------I'm the divider------------------------------------
// Implementation of releaseShared(1) in AQS
public final boolean releaseShared(int arg) {
    if (tryReleaseShared(arg)) {
        doReleaseShared(); // Wake up subsequent nodes
        return true;
    }
    return false;
}

//---------------------------I'm the divider------------------------------------
// Implementation of tryReleaseShared in CountDownLatch
protected boolean tryReleaseShared(int releases) {
    // Decrement count; signal when transition to zero
    for (;;) {
        int c = getState();
        if (c == 0)
            return false;
        int nextc = c-1;
        if (compareAndSetState(c, nextc))
            return nextc == 0;
    }
}
```

countDown() is also easy to understand. Internally it releases a shared lock. If state == 0 after the resource is released, the latch has been reached, and doReleaseShared is called to wake up the waiting threads.

By analyzing the source code, we can see that CountDownLatch is actually the simplest synchronization class implementation. Its internal call completely depends on AQS. As long as you can understand AQS, it is natural to understand CountDownLatch.