1. Virtualized network

A network namespace is a Linux kernel feature and a key building block of network virtualization. Each namespace is an isolated network space with its own independent network stack. Whether it hosts a virtual machine or a container, the workload appears to run on its own independent network. Resources in different network namespaces are invisible to each other and, by default, cannot communicate with each other.

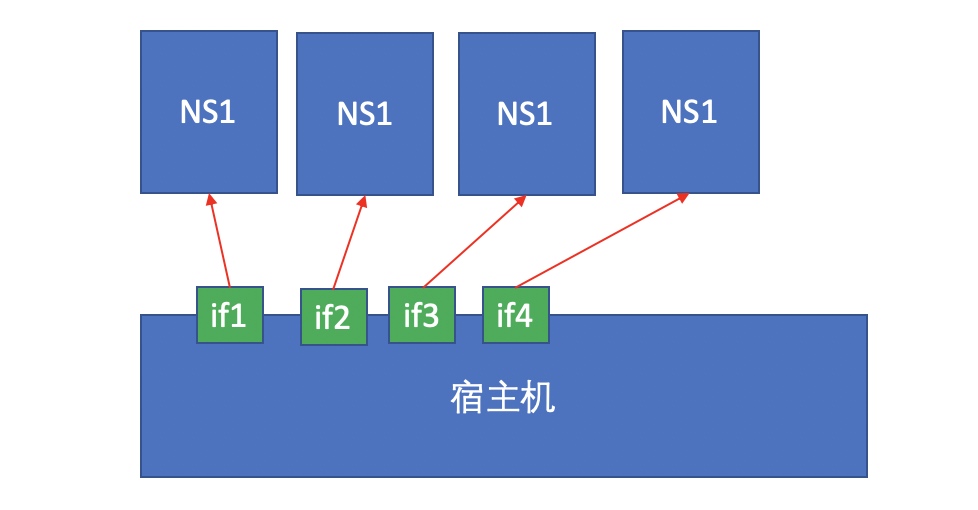

Suppose our physical machine has four physical network cards. We can create four namespaces and assign each device to its own namespace.

As shown in the figure above, the first network card is assigned to the first namespace, the second to the second namespace, the third to the third and the fourth to the fourth. A device can belong to only one namespace at a time, so a card assigned to one namespace is invisible to all the others.

Each namespace can then be configured with its own IP address and, because it owns a physical network card, communicate directly with the external network.
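The one-device-one-namespace rule can be illustrated with a small toy model. This is a Python sketch, not kernel code: on a real system a device is moved with a command such as `ip link set eth1 netns ns1`, and the class and the namespace/device names below (`NamespaceRegistry`, `ns1`, `eth1`, ...) are hypothetical.

```python
class NamespaceRegistry:
    """Toy model (hypothetical): every network device is owned by exactly one namespace."""

    def __init__(self) -> None:
        self.owner: dict[str, str] = {}  # device name -> namespace name

    def move(self, device: str, namespace: str) -> None:
        # Reassigning a device implicitly removes it from its previous
        # namespace, mirroring "ip link set <dev> netns <ns>".
        self.owner[device] = namespace

    def visible_devices(self, namespace: str) -> list[str]:
        # A namespace only sees the devices it currently owns.
        return sorted(d for d, ns in self.owner.items() if ns == namespace)


registry = NamespaceRegistry()
for i in range(1, 5):
    registry.move(f"eth{i}", f"ns{i}")  # one physical card per namespace

print(registry.visible_devices("ns1"))  # ['eth1']
registry.move("eth1", "ns2")            # move eth1 away from ns1
print(registry.visible_devices("ns1"))  # []
print(registry.visible_devices("ns2"))  # ['eth1', 'eth2']
```

Modeling ownership as a single dictionary entry per device makes "a device belongs to only one namespace" hold by construction.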

But what if we have more namespaces than physical network cards?

In that case we can use virtual network devices, which emulate hardware purely in software. The Linux kernel can emulate devices at two levels: layer 2 (link layer) devices and layer 3 devices.

Layer 2 devices (link layer)

-

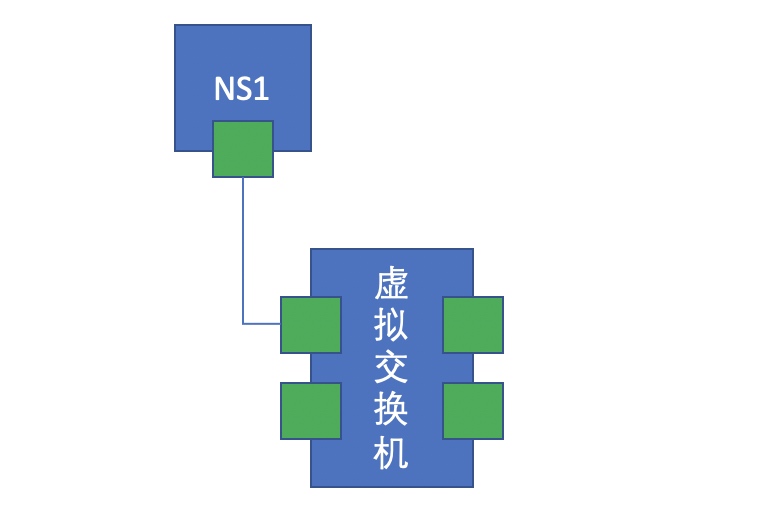

The link layer is the equipment to realize message forwarding. Using the simulation of layer-2 devices by the kernel, a virtual network card interface is created. This network interface appears in pairs and is simulated as two ends of a network cable. One end is plugged into the host and the other end is plugged into the switch.
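The "two ends of one cable" behavior of a veth pair can be sketched as a toy Python model. This is only an illustration, assuming frames are plain byte strings; the class and function names are hypothetical. On Linux a real pair is created with a command such as `ip link add veth0 type veth peer name veth1`.

```python
from __future__ import annotations

from collections import deque


class VethEnd:
    """Toy model of one end of a veth pair: whatever is sent into one end
    is received on its peer, like the two ends of a cable."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.peer: VethEnd | None = None
        self.rx: deque[bytes] = deque()  # frames waiting to be read

    def send(self, frame: bytes) -> None:
        if self.peer is None:
            raise RuntimeError(f"{self.name} has no peer")
        self.peer.rx.append(frame)  # delivered straight to the other end

    def receive(self) -> bytes | None:
        return self.rx.popleft() if self.rx else None


def make_veth_pair(a: str, b: str) -> tuple[VethEnd, VethEnd]:
    # Both ends are always created together and wired to each other.
    end_a, end_b = VethEnd(a), VethEnd(b)
    end_a.peer, end_b.peer = end_b, end_a
    return end_a, end_b


host_side, switch_side = make_veth_pair("veth0", "veth1")
host_side.send(b"hello")
print(switch_side.receive())  # b'hello'
```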

The kernel also natively supports layer-2 virtual bridge devices, that is, switches built in software. They can be managed with tools such as brctl from the bridge-utils package.
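A software bridge behaves like a classic learning switch. It records which port each source MAC address was seen on, forwards frames for known destinations to a single port, and floods frames for unknown or broadcast destinations to all other ports. The Python sketch below shows only that core idea. It is a toy model, not the Linux bridge implementation: aging timers, STP and VLANs are omitted, and the port names and MAC placeholders are hypothetical.

```python
class LearningBridge:
    """Toy layer-2 software switch (hypothetical model)."""

    def __init__(self, ports: list[str]) -> None:
        self.ports = ports
        self.mac_table: dict[str, str] = {}  # MAC address -> port

    def handle(self, in_port: str, src_mac: str, dst_mac: str) -> list[str]:
        """Return the ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out = self.mac_table.get(dst_mac)
        if out is None:
            # Unknown (or broadcast) destination: flood to every other port.
            return [p for p in self.ports if p != in_port]
        # Known destination: forward to its port, or drop if it is the arrival port.
        return [] if out == in_port else [out]


br = LearningBridge(["veth-a", "veth-b", "veth-c"])
print(br.handle("veth-a", "mac-A", "mac-B"))  # ['veth-b', 'veth-c'] (mac-B unknown: flood)
print(br.handle("veth-b", "mac-B", "mac-A"))  # ['veth-a'] (mac-A already learned)
```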

-

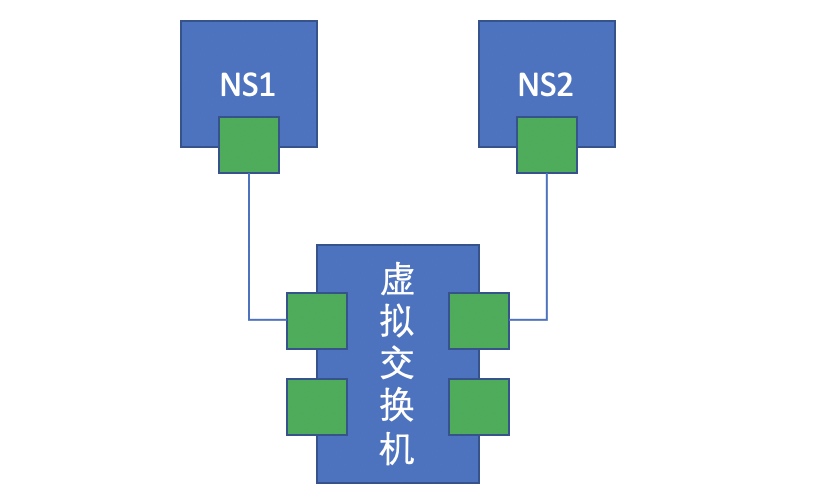

Using software switches and software implemented namespaces, you can simulate a host connected to the switch to realize the function of network connection. Two namespaces are equivalent to two hosts connected to the same switch.

Layer 3 devices (software switches)

OVS: Open vSwitch is an open-source virtual switch that can emulate advanced network features such as VLAN, VXLAN and GRE. It is not installed with Linux by default, so it must be installed separately. Many companies in the networking industry contribute to it, and it is very powerful.

SDN: Software Defined Networking (also described as software-driven networking). It requires network virtualization support at the hardware level and builds complex virtual networks on each host so that multiple virtual machines or containers can run there.

To recap, every virtual network interface the Linux kernel emulates comes in a pair that acts as the two ends of a network cable. One end serves as the host's virtual network card and the other end is attached to a virtual switch, which is equivalent to plugging a host into a switch. The kernel's native layer-2 bridge device provides that software switch, as shown in the figure below:

If we add another namespace, we create another pair of virtual network cards, attaching one end to the new namespace and the other end to the same virtual switch. The two namespaces are now on the same switched network, so if their network cards are given addresses in the same subnet, they can communicate with each other, as shown in the figure below:

When everything from the network card to the switching equipment is implemented purely in software, the result is called a virtual network.

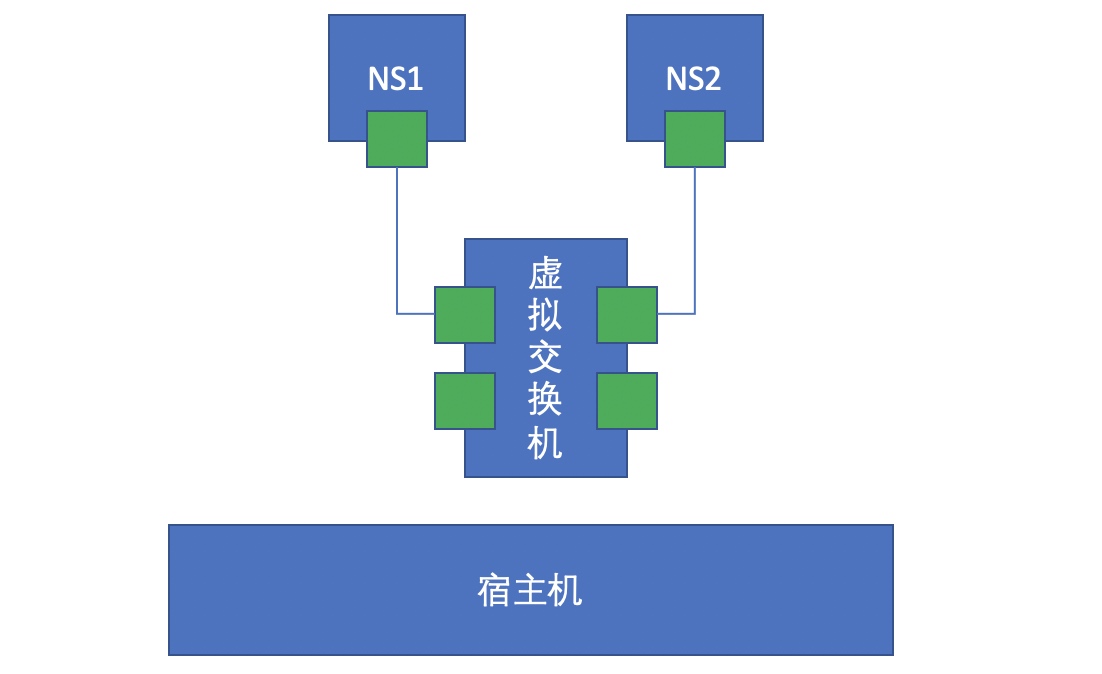

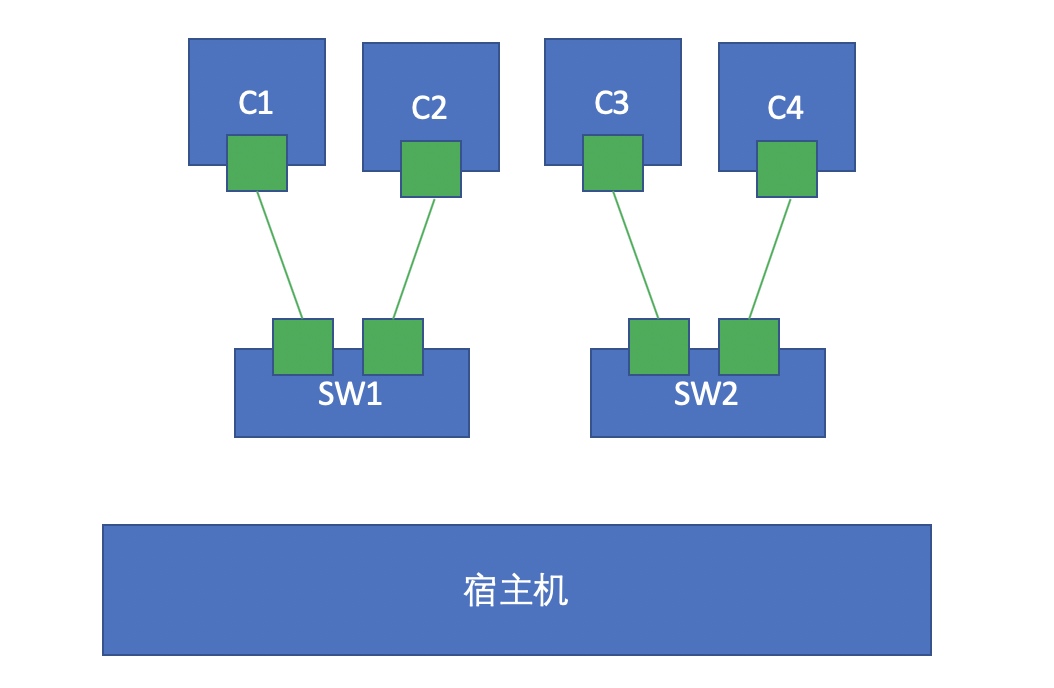

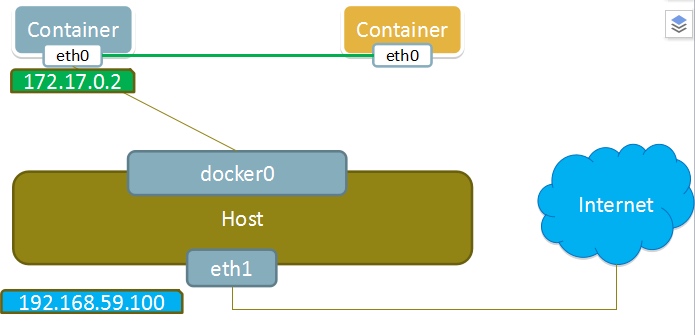

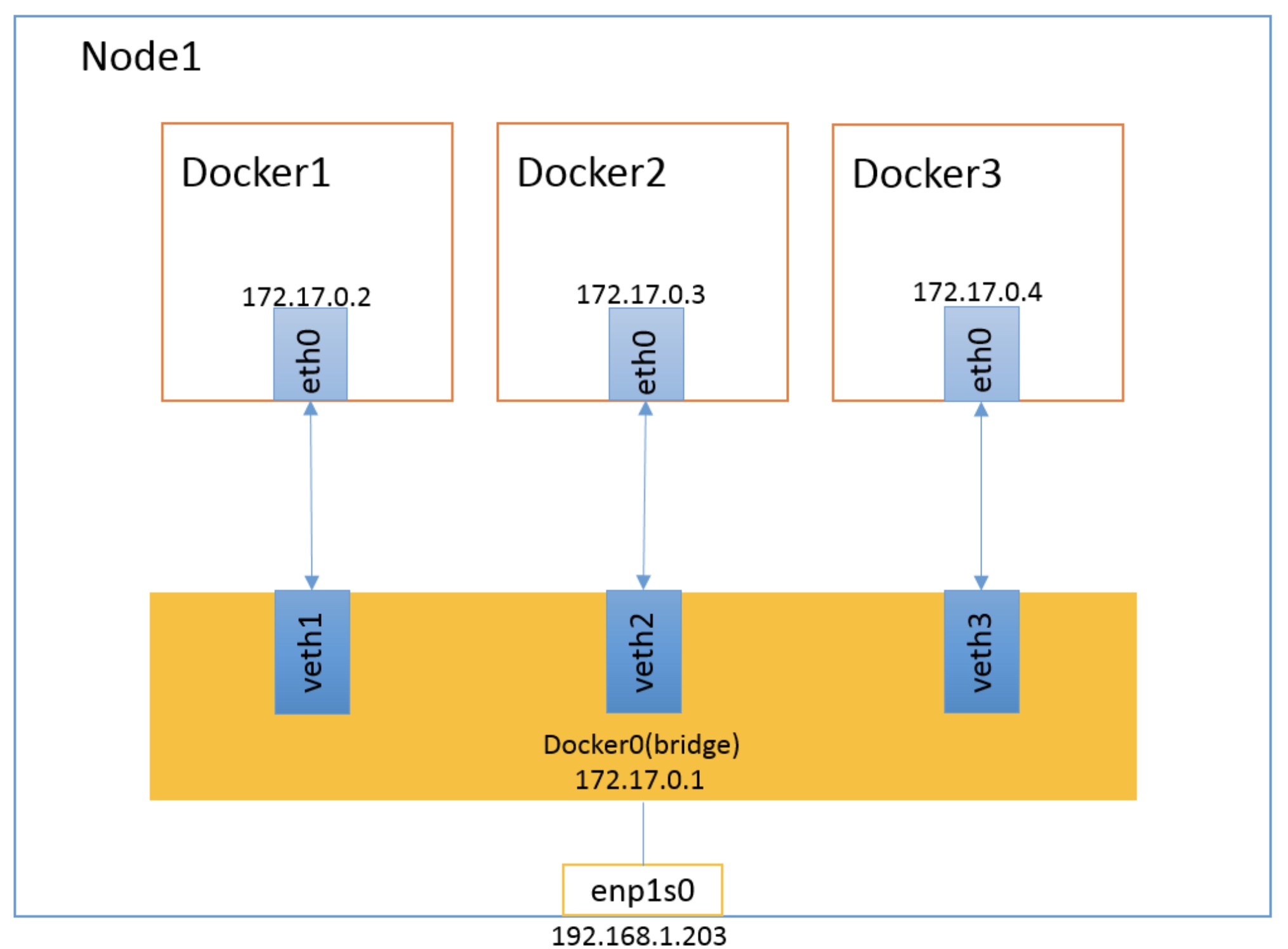
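The same-subnet condition described above can be checked with Python's standard `ipaddress` module. This is a small sketch. It assumes both interfaces use the same prefix length, and the addresses in the example are hypothetical.

```python
import ipaddress


def same_subnet(a: str, b: str) -> bool:
    """True if two interface addresses (CIDR notation) are in the same network,
    i.e. they can talk directly over a shared bridge without a router."""
    net_a = ipaddress.ip_interface(a).network
    net_b = ipaddress.ip_interface(b).network
    return net_a == net_b


print(same_subnet("10.0.0.1/24", "10.0.0.2/24"))  # True
print(same_subnet("10.0.0.1/24", "10.0.1.2/24"))  # False: routing is needed
```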

2. Communication between containers on a single node

For two containers on the same physical machine to communicate, we create a virtual switch on the host and give each container a pair of software virtual network cards, with one end inside the container and the other end attached to the virtual switch. As shown in the figure below:

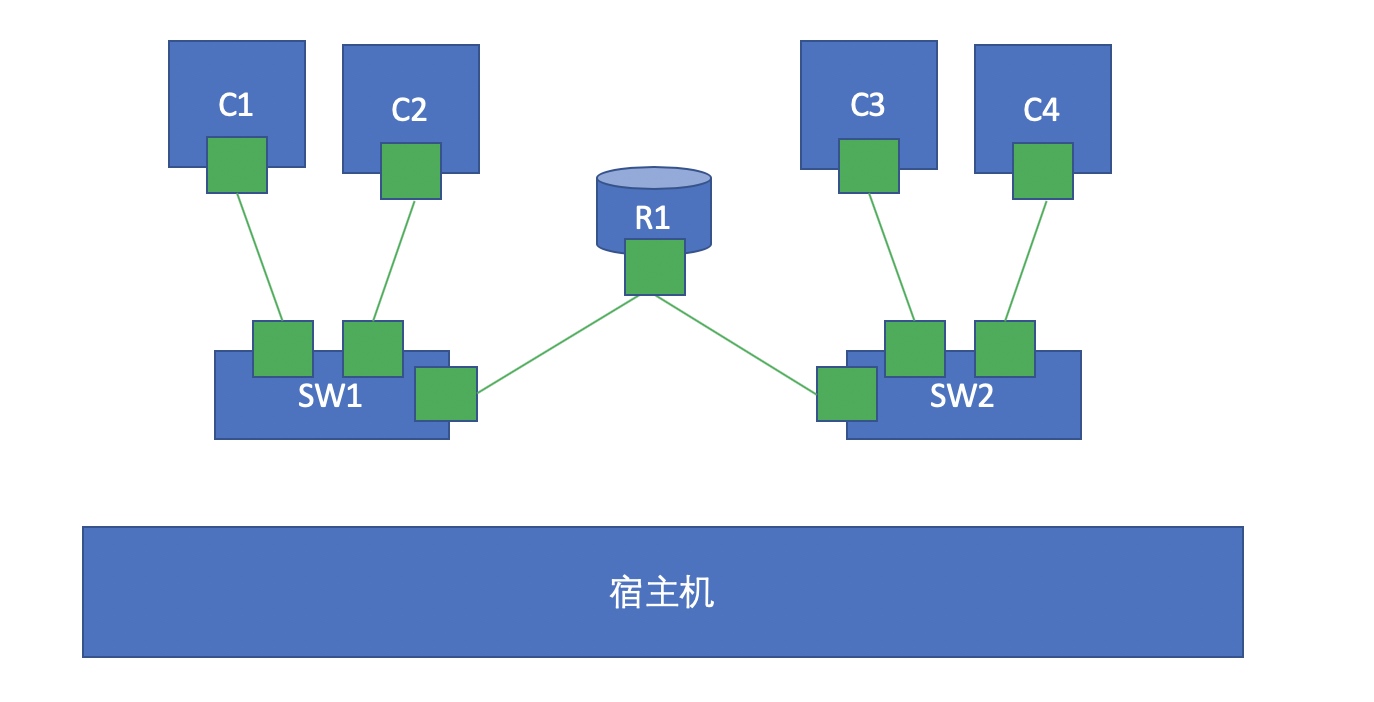

That is the basic model for two containers on a single node, but single-node communication can get more complicated. For example, what if the containers need to communicate across switches?

Suppose we create two virtual switches, each connected to different containers, as shown in the figure above. How can C1 and C3 communicate? We can create another pair of virtual network cards and connect one end to SW1 and the other end to SW2. This links the two switches, so C1 and C3 can reach each other even though they are attached to different switches. But what if C1 and C3 are on different networks? Then traffic has to be routed, which means placing a router between the two switches. The Linux kernel supports IP forwarding natively; it only needs to be turned on. So we can start another container, attach it to both switches and enable IP forwarding in its network namespace. That container then acts as a router that forwards traffic between the two networks.
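Once forwarding is on (for example with `sysctl -w net.ipv4.ip_forward=1` inside the router container's namespace), the kernel picks an outgoing interface for each packet by longest-prefix match on its routing table. The Python sketch below models only that lookup. It is a simplification that ignores metrics, gateways and policy routing, and the routes and interface labels are hypothetical.

```python
from __future__ import annotations

import ipaddress

Route = tuple[ipaddress.IPv4Network, str]


def make_table(routes: dict[str, str]) -> list[Route]:
    return [(ipaddress.ip_network(prefix), iface) for prefix, iface in routes.items()]


def lookup(table: list[Route], dst: str) -> str | None:
    """Longest-prefix match: among routes containing dst, pick the most specific."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, iface) for net, iface in table if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Hypothetical router container with one leg on each switch.
table = make_table({
    "10.0.1.0/24": "eth0 (SW1)",
    "10.0.2.0/24": "eth1 (SW2)",
    "0.0.0.0/0": "eth2 (default)",
})
print(lookup(table, "10.0.2.7"))  # eth1 (SW2)
print(lookup(table, "8.8.8.8"))   # eth2 (default)
```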

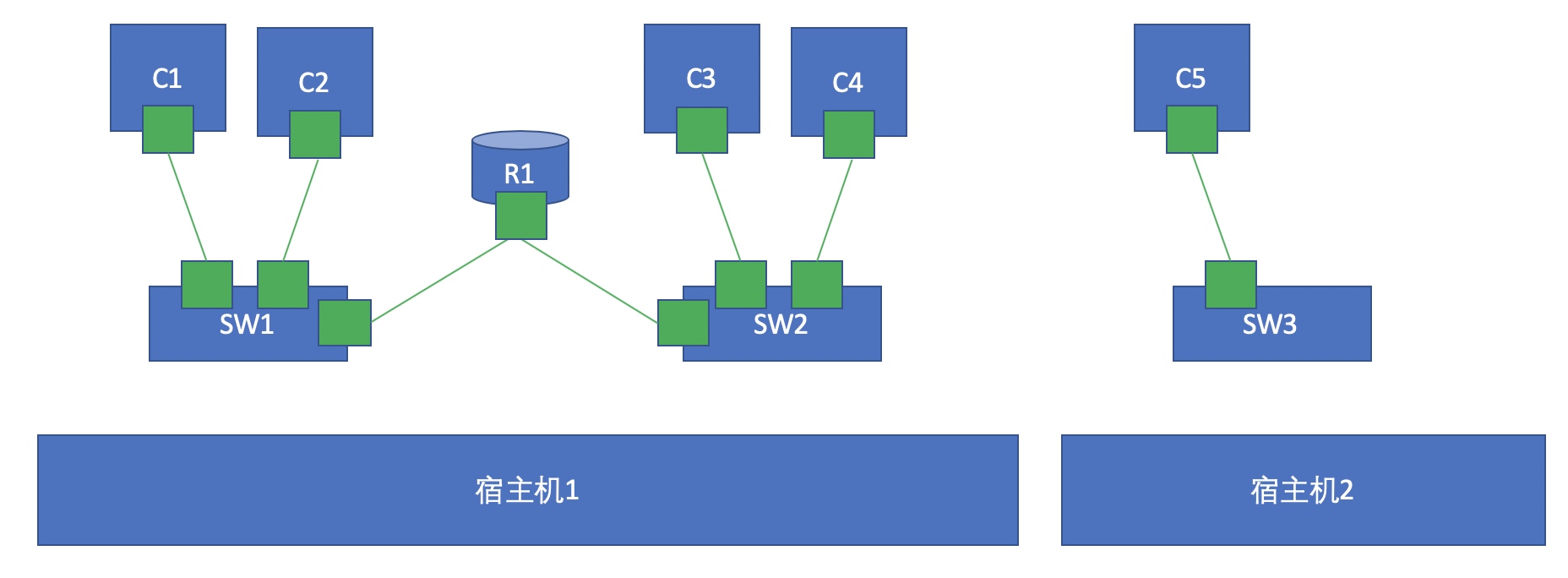

3. Communication between containers on different nodes

In the figure above, how can C1 communicate with C5, which runs on another node? Bridging the nodes together easily causes broadcast storms, so in large-scale virtual machine or container deployments bridging is self-defeating and should not be used for this.

If we cannot bridge but still need to communicate with the outside world, one option is NAT. The container's port is exposed on the host through DNAT, so clients reach the inside of the container by accessing the host's port. On the requesting side, SNAT is applied so that packets leave through the host's real network card. This is inefficient, however, because each packet goes through two NAT translations.
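The two translations can be sketched as pure functions on a simplified packet. This is a toy Python model, not how iptables is implemented. `Packet`, `dnat` and `snat` are hypothetical names, the addresses are made up, and real SNAT/MASQUERADE may also rewrite the source port, which this sketch keeps unchanged for simplicity.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str  # "ip:port"
    dst: str  # "ip:port"


def dnat(pkt: Packet, port_map: dict[str, str]) -> Packet:
    """Inbound: rewrite the host ip:port destination to the container's ip:port."""
    return replace(pkt, dst=port_map.get(pkt.dst, pkt.dst))


def snat(pkt: Packet, host_ip: str) -> Packet:
    """Outbound: replace the container's source IP with the host's IP (port kept)."""
    _, port = pkt.src.rsplit(":", 1)
    return replace(pkt, src=f"{host_ip}:{port}")


# Hypothetical host 192.168.1.10 publishing container 172.17.0.2:80 on port 8080.
port_map = {"192.168.1.10:8080": "172.17.0.2:80"}
inbound = dnat(Packet("192.168.1.20:50000", "192.168.1.10:8080"), port_map)
print(inbound)   # Packet(src='192.168.1.20:50000', dst='172.17.0.2:80')
outbound = snat(Packet("172.17.0.2:41000", "192.168.1.30:80"), "192.168.1.10")
print(outbound)  # Packet(src='192.168.1.10:41000', dst='192.168.1.30:80')
```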

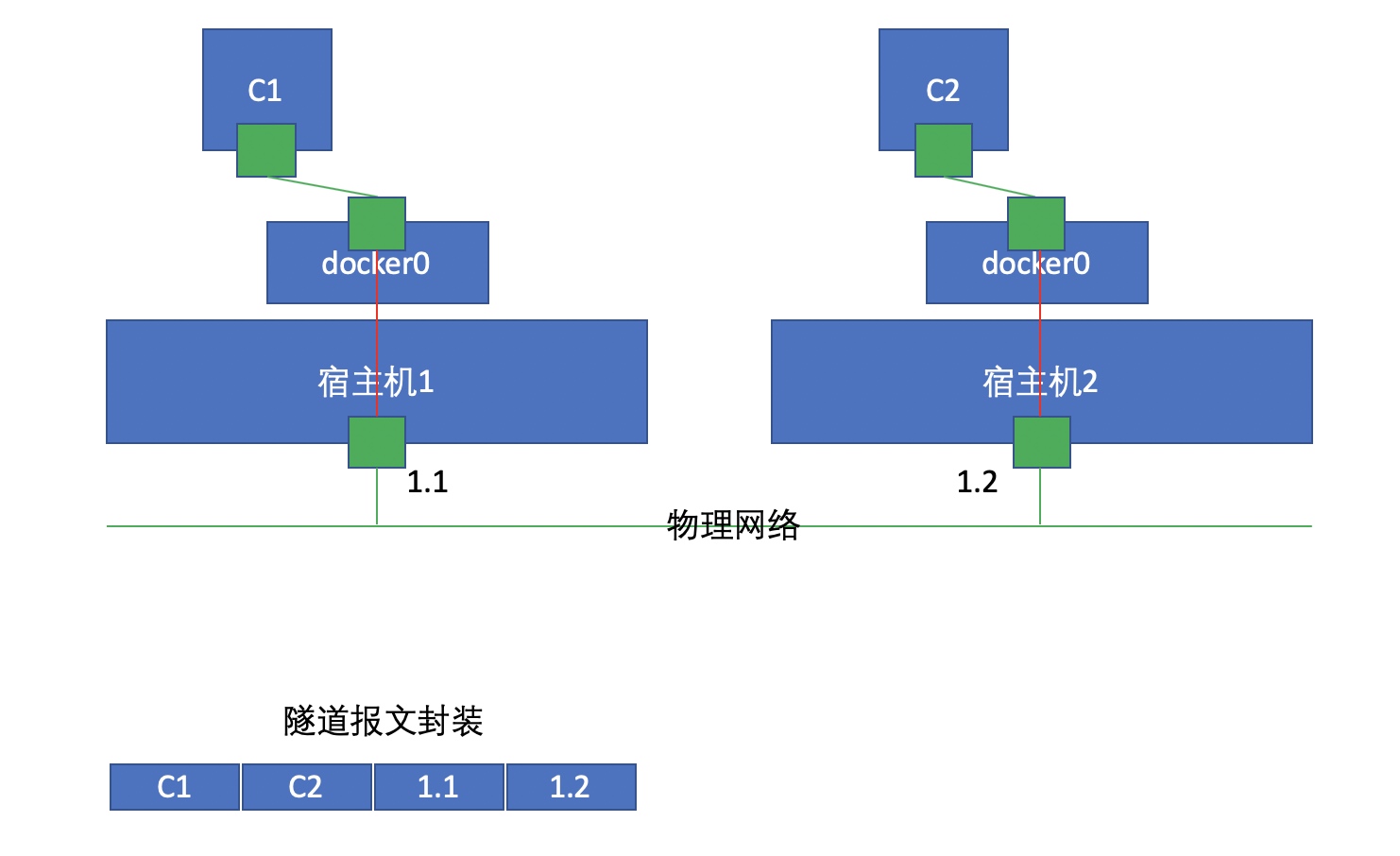

A better option is an overlay network, which lets containers on different nodes communicate with each other.

An overlay network tunnels packets: before a packet is sent, an additional outer IP header is added to it, shown as 1.1 and 1.2 in the figure above, where 1.1 is the source and 1.2 is the destination. When host 2 receives the packet, it strips the outer header, finds that the packet is addressed to container C2, and forwards it to C2.
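The encapsulate/decapsulate steps can be sketched in Python. This is a toy model only. Real overlays such as VXLAN also add UDP and VXLAN headers and carry real addresses; here the host labels 1.1 and 1.2 and the container names come from the figure, while the container-to-host mapping and all function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class InnerPacket:
    src: str  # container, e.g. C1
    dst: str  # container, e.g. C2
    payload: bytes


@dataclass(frozen=True)
class OuterPacket:
    src: str  # sending host (1.1 in the figure)
    dst: str  # receiving host (1.2 in the figure)
    inner: InnerPacket


def encapsulate(pkt: InnerPacket, container_to_host: dict[str, str], local_host: str) -> OuterPacket:
    # Find the host the destination container lives on and wrap the original
    # packet in an outer header addressed host-to-host.
    return OuterPacket(src=local_host, dst=container_to_host[pkt.dst], inner=pkt)


def decapsulate(outer: OuterPacket, local_containers: set[str]) -> InnerPacket:
    # The receiving host strips the outer header and delivers the inner packet
    # to the local container it is addressed to.
    inner = outer.inner
    if inner.dst not in local_containers:
        raise ValueError(f"{inner.dst} is not on this host")
    return inner


location = {"C1": "1.1", "C2": "1.2"}  # hypothetical container -> host map
tunnelled = encapsulate(InnerPacket("C1", "C2", b"ping"), location, local_host="1.1")
print(tunnelled.src, tunnelled.dst)       # 1.1 1.2
delivered = decapsulate(tunnelled, local_containers={"C2"})
print(delivered.dst, delivered.payload)   # C2 b'ping'
```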

4. Docker container networking

After installation, Docker automatically creates three networks, which you can list with the `docker network ls` command:

```
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
046ea413332a   bridge    bridge    local
6a1e12ee95d3   host      host      local
464d28c1143a   none      null      local
```

Docker uses Linux bridging: it creates a virtual bridge named docker0 on the host. When Docker starts a container, it assigns the container an IP address, called the container IP, from the docker0 bridge's subnet, and the bridge serves as the default gateway of every container. Because all containers on the same host are attached to the same bridge, they can communicate with each other directly using their container IPs.
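The address layout can be illustrated with Python's `ipaddress` module. In the bridge-mode example later in this article, docker0 is 172.17.0.1 and the containers receive 172.17.0.2 and 172.17.0.3. The sketch below reproduces that pattern under a simplifying assumption: addresses are handed out in order. Docker's real IPAM also reuses freed addresses, so do not rely on the order. `allocate` is a hypothetical helper.

```python
import ipaddress


def allocate(subnet: str, count: int) -> tuple[str, list[str]]:
    """Simplified model: the first usable address is the gateway (the bridge),
    the following ones go to containers in order."""
    hosts = ipaddress.ip_network(subnet).hosts()  # skips network/broadcast addresses
    gateway = str(next(hosts))
    containers = [str(next(hosts)) for _ in range(count)]
    return gateway, containers


gateway, ips = allocate("172.17.0.0/16", 2)
print(gateway)  # 172.17.0.1
print(ips)      # ['172.17.0.2', '172.17.0.3']
```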

5. Docker's four network modes

| Network mode | Configuration | Description |
|---|---|---|
| host | --network host | The container shares the host's network namespace |
| container | --network container:NAME_OR_ID | The container shares the network namespace of another container |
| none | --network none | The container has its own network namespace but no network configuration: no veth pair or bridge connection, no IP address, etc. |
| bridge | --network bridge | Default mode |

When creating a container, use the `--network` option to choose the network mode. The default is bridge (docker0).

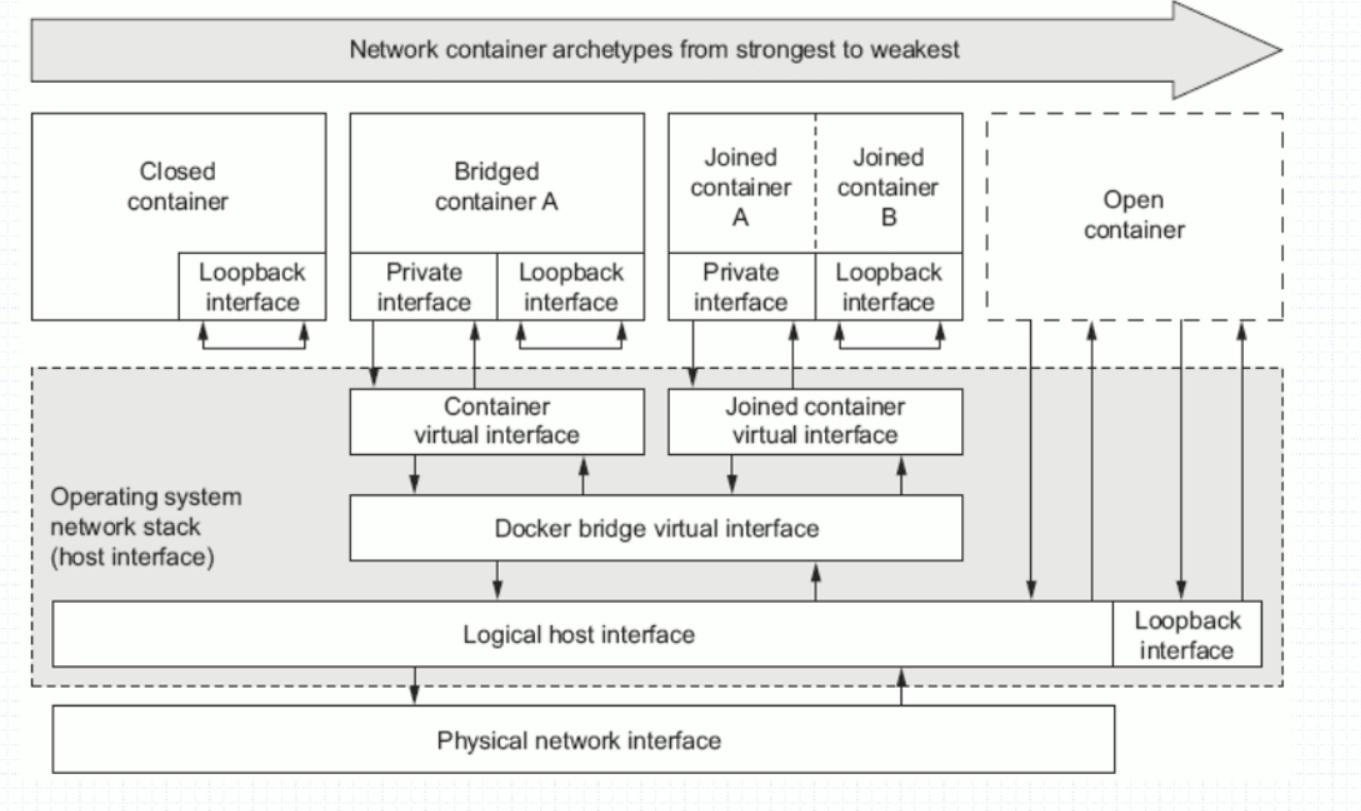

- Closed container: has only the loopback interface. This is the none type.

- Bridged container A: the bridge type. The container's network is connected to the docker0 bridge.

- Joined container A: the container type. The two containers keep some namespaces isolated (User, Mount and PID) but share the network, so they have the same network interfaces and network protocol stack.

- Open container: shares three namespaces (UTS, IPC and Net) directly with the physical host, communicates with the outside world through the host's network card, and has the privilege to manage the host's network. This is the host type.

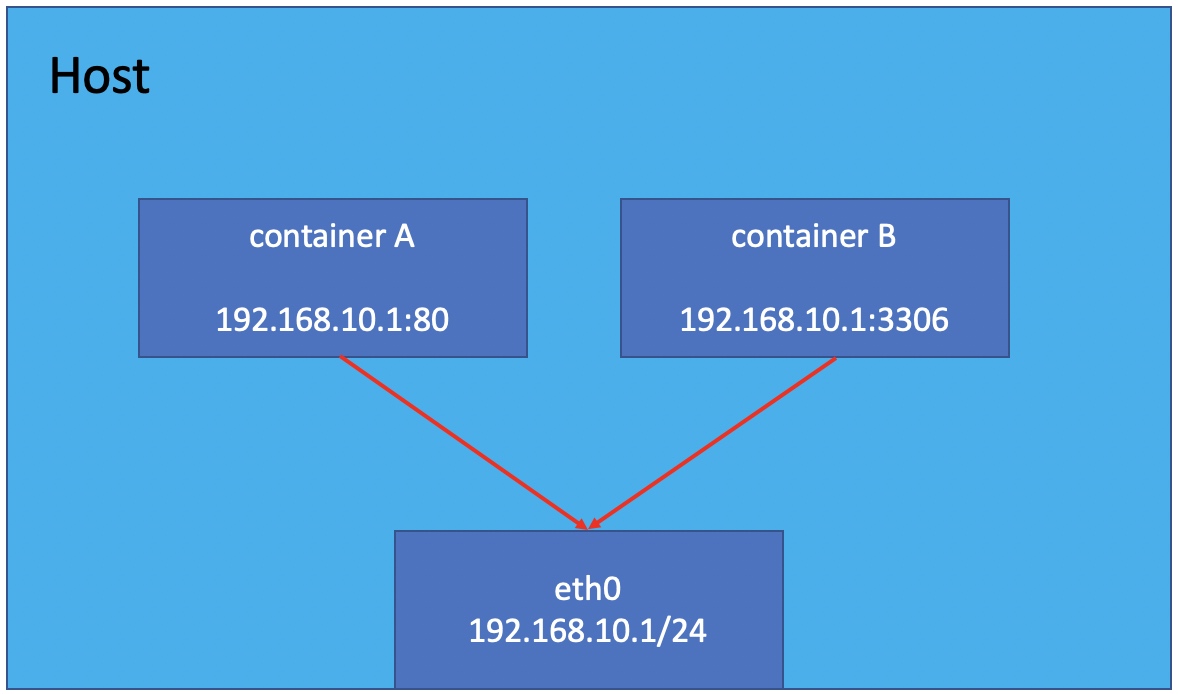

5.1 host mode

If a container is started in host mode, it does not get its own network namespace but shares the host's. The container does not create its own virtual network card or configure its own IP address; it uses the host's IP address and ports. Other aspects of the container, such as the file system and process list, are still isolated from the host.

A container in host mode communicates with the outside world using the host's IP address, and services inside it listen directly on the host's ports without NAT. The biggest advantage of host mode is relatively good network performance. The drawbacks are weak network isolation and the fact that ports already in use on the Docker host cannot be used by the container.
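The port restriction follows from sharing one network namespace. A host-mode container's service binds ports in the same namespace as the host's own services, exactly like another process on the host. The Python sketch below stands in for that situation with two sockets in one process. It is an illustration only, and `port_conflict_demo` is a hypothetical helper.

```python
import errno
import socket


def port_conflict_demo() -> bool:
    """Two listeners in one network namespace cannot bind the same port.
    Returns True if the second bind fails with EADDRINUSE."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)   # "host service"
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)  # "host-mode container"
    try:
        first.bind(("127.0.0.1", 0))  # let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind(("127.0.0.1", port))  # same namespace, same port
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        first.close()
        second.close()


print(port_conflict_demo())  # True on a typical Linux host
```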

Example:

```
[root@localhost ~]# docker images
REPOSITORY       TAG       IMAGE ID       CREATED        SIZE
gaofan1225/amu   v0.1      59ca4fc4b9a3   22 hours ago   549MB
centos           latest    5d0da3dc9764   2 months ago   231MB
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:c0:44:3d brd ff:ff:ff:ff:ff:ff
    inet 192.168.91.138/24 brd 192.168.91.255 scope global dynamic noprefixroute ens33
       valid_lft 1033sec preferred_lft 1033sec
    inet6 fe80::b44a:bd1a:fcf1:9863/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:ef:2f:88:e4 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
[root@localhost ~]# docker run -it --network host --rm --name nginx 59ca4fc4b9a3 /bin/sh
sh-4.4# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:c0:44:3d brd ff:ff:ff:ff:ff:ff
    inet 192.168.91.138/24 brd 192.168.91.255 scope global dynamic noprefixroute ens33
       valid_lft 1765sec preferred_lft 1765sec
    inet6 fe80::b44a:bd1a:fcf1:9863/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:ef:2f:88:e4 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
```

5.2 container mode

In this mode, a newly created container shares the network namespace of an existing container rather than the host's. The new container does not create its own network card or configure its own IP address; it shares the IP address and port range of the specified container. Apart from the network, the two containers remain isolated from each other, for example in their file systems and process lists. Processes in the two containers can communicate with each other through the lo loopback device.
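Because the two containers see the same lo device, a process in one can reach a listener in the other at 127.0.0.1. The Python sketch below mimics this inside a single process, and therefore a single network namespace. A server thread plays the process in the first container and the main thread plays the process in the second. The function names are hypothetical.

```python
import socket
import threading


def echo_once(server: socket.socket) -> None:
    """Plays the process in the first container: echo one request back."""
    conn, _ = server.accept()
    with conn:
        data = b""
        while chunk := conn.recv(1024):  # read until the client stops sending
            data += chunk
        conn.sendall(b"echo:" + data)


def talk_over_loopback(message: bytes) -> bytes:
    """Plays the process in the second container: reach the first via 127.0.0.1."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # OS picks a free port
        server.listen()
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # signal the end of the request
            reply = b""
            while chunk := client.recv(1024):
                reply += chunk
        worker.join()
    return reply


print(talk_over_loopback(b"hi"))  # b'echo:hi'
```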

Example:

```
[root@localhost ~]# docker images
REPOSITORY       TAG       IMAGE ID       CREATED        SIZE
gaofan1225/amu   v0.1      59ca4fc4b9a3   22 hours ago   549MB
centos           latest    5d0da3dc9764   2 months ago   231MB
[root@localhost ~]# docker run -it --rm --name nginx 59ca4fc4b9a3 /bin/sh
sh-4.4# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

[root@localhost ~]# docker ps    # run in another terminal
CONTAINER ID   IMAGE          COMMAND     CREATED          STATUS         PORTS     NAMES
3b22c4988cd3   59ca4fc4b9a3   "/bin/sh"   10 seconds ago   Up 8 seconds             nginx
[root@localhost ~]# docker run -it --rm --name cont1 --network container:3b22c4988cd3 5d0da3dc9764    # cont1 uses container mode
[root@3b22c4988cd3 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
```

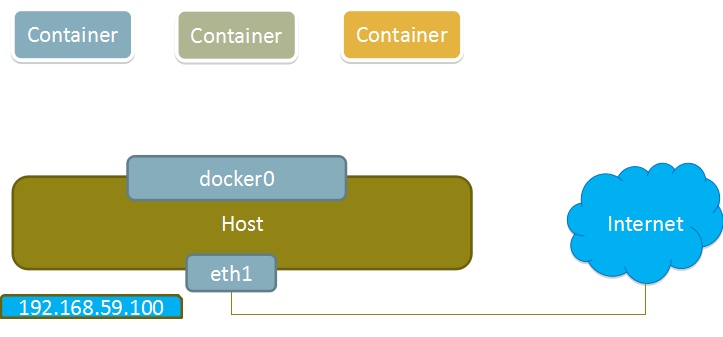

5.3 none mode

In none mode, the Docker container has its own network namespace, but Docker performs no network configuration for it: the container has no network card, IP address, routes or other network information. Any network card and IP configuration has to be added by us.

In this mode the container has only the lo loopback interface and no other network card. Specify it with `--network none` when creating the container. A container in this mode cannot reach any network, and this closed setup helps keep the container secure.

Use cases:

- Starting a container to process data, such as converting data formats

- Background computation and processing tasks

Example:

```
[root@localhost ~]# docker images
REPOSITORY       TAG       IMAGE ID       CREATED        SIZE
gaofan1225/amu   v0.1      59ca4fc4b9a3   22 hours ago   549MB
centos           latest    5d0da3dc9764   2 months ago   231MB
[root@localhost ~]# docker run -it --rm --name nginx --network none 59ca4fc4b9a3 /bin/bash    # nginx uses none mode
[root@a0878607ae4f /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
```

5.4 bridge mode

When the Docker daemon starts, it creates a virtual bridge named docker0 on the host, and containers started on the host are connected to this bridge. The virtual bridge works like a physical switch, so all containers on the host are joined into one layer-2 network through it.

Docker assigns each container an IP address from the docker0 subnet and sets the IP address of docker0 as the container's default gateway. It creates a veth pair on the host, places one end inside the new container and names it eth0 (the container's network card), keeps the other end on the host with a name like vethxxx, and attaches that end to the docker0 bridge. You can list these attachments with the `brctl show` command.

Bridge mode is Docker's default network mode: if `--network` is not given, bridge mode is used. When you use `docker run -p`, Docker creates DNAT rules in iptables to implement port forwarding. You can view them with `iptables -t nat -vnL`.

The docker0 bridge is virtualized by the host and is not a real network device. It cannot be addressed from the external network, which means external hosts cannot reach a container directly through its container IP. To make a container reachable from outside, map a container port to a port on the host (port mapping) when creating it with `docker run`, using `-p` (map a specific port) or `-P` (publish all exposed ports to random host ports). The container is then reached at [host IP]:[host port].
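The `-p 8080:80` used in the example below makes the host listen on port 8080 and DNAT that traffic to port 80 in the container. The documented form of the option is `[host_ip:][host_port:]container_port[/protocol]`. The Python sketch below parses that shape for IPv4 only. It is a simplified, hypothetical parser (`parse_publish`, `PortMapping`), not Docker's own code, and it skips IPv6 addresses and port ranges.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PortMapping:
    host_ip: str           # "" means all interfaces (0.0.0.0)
    host_port: int | None  # None means Docker picks a random host port
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Simplified parser for IPv4 -p specs: [host_ip:][host_port:]container_port[/protocol]."""
    body, _, proto = spec.partition("/")
    parts = body.split(":")
    host_ip = host_port = ""
    if len(parts) == 1:
        (container_port,) = parts
    elif len(parts) == 2:
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported spec: {spec!r}")
    return PortMapping(
        host_ip=host_ip,
        host_port=int(host_port) if host_port else None,
        container_port=int(container_port),
        protocol=proto or "tcp",
    )


print(parse_publish("8080:80"))
# PortMapping(host_ip='', host_port=8080, container_port=80, protocol='tcp')
```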

Example:

```
[root@localhost ~]# docker images
REPOSITORY       TAG       IMAGE ID       CREATED        SIZE
gaofan1225/amu   v0.1      59ca4fc4b9a3   22 hours ago   549MB
centos           latest    5d0da3dc9764   2 months ago   231MB
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:c0:44:3d brd ff:ff:ff:ff:ff:ff
    inet 192.168.91.138/24 brd 192.168.91.255 scope global dynamic noprefixroute ens33
       valid_lft 1774sec preferred_lft 1774sec
    inet6 fe80::b44a:bd1a:fcf1:9863/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:ef:2f:88:e4 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:efff:fe2f:88e4/64 scope link
       valid_lft forever preferred_lft forever
[root@localhost ~]# docker run -it --rm --name nginx 59ca4fc4b9a3 /bin/bash
[root@0f488049e563 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
[root@localhost ~]# docker run -it --rm --name centos -p 8080:80 5d0da3dc9764
[root@6c3c754669c0 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
```

View the listening ports and the NAT rules on the host:

```
[root@localhost ~]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8080         0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:8080            [::]:*
LISTEN   0        128      [::]:22              [::]:*
[root@localhost ~]# iptables -t nat -vnL
Chain PREROUTING (policy ACCEPT 13 packets, 1108 bytes)
 pkts bytes target     prot opt in     out     source               destination
    6   312 DOCKER     all  --  *      *       0.0.0.0/0            0.0.0.0/0            ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 6 packets, 312 bytes)
 pkts bytes target     prot opt in     out     source               destination
...... (remaining output omitted)
```

View the detailed configuration of the bridge network:

```
[root@localhost ~]# docker network inspect bridge
[
    {
        "Name": "bridge",
        "Id": "046ea413332a03c5427a3e5f8dccfe678cbb52f78e93f10728e398c0479cf695",
        "Created": "2021-12-03T18:16:45.662030086+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "0f488049e563b84a0e3a6e0a51d02cc74babb37eed80b910d010154d031ab1db": {
                "Name": "nginx",
                "EndpointID": "15c43f428f55f12a6a3d54a6767563b8976d1c052d700a992eca59d012105346",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            },
            "6c3c754669c069e5d7a370cc63dcaa7cca014d85efb5e9ed9b9cd179ee98484e": {
                "Name": "centos",
                "EndpointID": "4e832ed497996eefc587908d02f03fedd5256751d3293e897f901b982d4bd14e",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]
```
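Since `docker network inspect` prints a JSON array, its output is easy to post-process. The Python sketch below extracts each attached container's name and IPv4 address using the standard `json` module. The sample is a trimmed copy of the output above with shortened container IDs, and `container_ips` is a hypothetical helper; in practice you would feed it the command's real output.

```python
import json


def container_ips(inspect_output: str) -> dict[str, str]:
    """Map container name -> IPv4 address from `docker network inspect` JSON."""
    networks = json.loads(inspect_output)  # the command prints a JSON array
    result = {}
    for network in networks:
        for info in (network.get("Containers") or {}).values():
            result[info["Name"]] = info["IPv4Address"]
    return result


# Trimmed copy of the output shown above (container IDs shortened).
sample = """
[{"Name": "bridge",
  "Containers": {
    "0f488049e563": {"Name": "nginx", "IPv4Address": "172.17.0.2/16"},
    "6c3c754669c0": {"Name": "centos", "IPv4Address": "172.17.0.3/16"}}}]
"""
print(container_ips(sample))  # {'nginx': '172.17.0.2/16', 'centos': '172.17.0.3/16'}
```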

Install bridge-utils to get the brctl command, then list the interfaces attached to docker0:

```
[root@localhost ~]# yum -y install epel-release
[root@localhost ~]# yum install bridge-utils
[root@localhost ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
docker0         8000.0242ef2f88e4       no              veth9eef33a
                                                        vethe33d728
```