Install k8s cluster (I): install three CentOS VMs under Windows 10

Install k8s cluster (II): install k8s cluster


Step 1: prepare

1. Virtual machine preparation

Prepare three virtual machines:

| Host name | IP | System version | Installation service | Role |
|---|---|---|---|---|
| master | 172.17.142.244 | CentOS 7.4 | 1 | Cluster master node |
| node1 | 172.17.142.252 | CentOS 7.4 | 2 | Cluster node |
| node2 | 172.17.142.246 | CentOS 7.4 | 1 | Cluster node |

2. Initialize the virtual machines

Add the following entries to /etc/hosts on all three virtual machines:

vim /etc/hosts

172.17.142.244 master
172.17.142.244 etcd
172.17.142.244 registry
172.17.142.252 node1
172.17.142.246 node2
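Since the same five lines go into /etc/hosts on all three machines, a small idempotent script can save retyping. This is a sketch under assumptions, not part of the original procedure: `add_hosts_entries` and the `HOSTS_FILE` override are hypothetical names, and each mapping is appended only if that exact line is not already present, so re-running it does not create duplicates.

```shell
#!/bin/sh
# Sketch (not from the original guide): append the cluster host mappings to a
# hosts file, skipping any line that is already present, so it is safe to re-run.
# HOSTS_FILE is a hypothetical override for testing; it defaults to /etc/hosts.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_hosts_entries() {
  local entry
  for entry in \
    "172.17.142.244 master" \
    "172.17.142.244 etcd" \
    "172.17.142.244 registry" \
    "172.17.142.252 node1" \
    "172.17.142.246 node2"
  do
    # -x: whole-line match, -F: fixed string (the dots are not regex wildcards)
    if ! grep -qxF "$entry" "$HOSTS_FILE" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$HOSTS_FILE"
    fi
  done
}

# Usage (as root on master, node1 and node2):
#   add_hosts_entries
```

Pointing `HOSTS_FILE` at a scratch file first is a cheap way to see what it would write before touching the real /etc/hosts.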

Stop and disable the firewall on each virtual machine:

systemctl stop firewalld
systemctl disable firewalld

Step 2: deploy the master node

The master node needs to install the following components:

- etcd

- flannel

- docker

- kubernetes

- etcd: install it with:

yum install etcd -y

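Edit the etcd configuration file. The settings this guide relies on are ETCD_LISTEN_CLIENT_URLS, ETCD_NAME and ETCD_ADVERTISE_CLIENT_URLS; the rest are left as shipped: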

vim /etc/etcd/etcd.conf

#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_LISTEN_PEER_URLS="http://localhost:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="master"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://etcd:2379,http://etcd:4001"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_AUTO_TLS="false"
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
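Instead of editing the three relevant lines in vim, they can be set from a script. The sketch below assumes GNU sed (as on CentOS) and uses a hypothetical helper, `set_conf_var`, that replaces an existing `KEY=` line, commented out or not, and appends the assignment if the key is missing.

```shell
#!/bin/sh
# Sketch: set KEY="VALUE" in a sysconfig-style file such as /etc/etcd/etcd.conf.
# If a line for KEY exists, commented out or not, it is replaced (and thereby
# uncommented); otherwise the assignment is appended. set_conf_var is a
# hypothetical helper. Relies on GNU sed's -i; values must not contain | or &.
set_conf_var() {
  local file="$1" key="$2" value="$3"
  if grep -q "^#*${key}=" "$file"; then
    sed -i "s|^#*${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}

# Usage on the master:
#   set_conf_var /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379,http://0.0.0.0:4001"
#   set_conf_var /etc/etcd/etcd.conf ETCD_NAME master
#   set_conf_var /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://etcd:2379,http://etcd:4001"
```

Listening on 0.0.0.0 lets flanneld on the nodes reach etcd, while the advertised URLs use the `etcd` hostname from /etc/hosts.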

Start the etcd service and enable it at boot:

systemctl start etcd
systemctl enable etcd

Check etcd's health on both client ports:

etcdctl -C http://etcd:2379 cluster-health
etcdctl -C http://etcd:4001 cluster-health
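Right after etcd starts, the health check may fail for a moment, so in a script it can help to retry a few times instead of giving up on the first attempt. `wait_for` is a hypothetical helper written for this sketch; the attempt count and delay in the example are arbitrary.

```shell
#!/bin/sh
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_for is a hypothetical helper, e.g. for use right after "systemctl start etcd".
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # No point sleeping after the last failed attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example on the master (10 tries, 2 seconds apart):
#   wait_for 10 2 etcdctl -C http://etcd:2379 cluster-health
```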

- flannel: install it with:

yum install flannel

Configure flannel:

vim /etc/sysconfig/flanneld

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://etcd:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/atomic.io/network"
# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
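Because /etc/sysconfig/flanneld is identical on the master and on both nodes, it can also be written in one step with a here-document. In this sketch `write_flanneld_conf` and the `FLANNELD_CONF` override are hypothetical names; the defaults reproduce the values shown above. FLANNEL_ETCD_PREFIX has to match the key that is written into etcd with `etcdctl mk`, otherwise flanneld cannot find its network configuration.

```shell
#!/bin/sh
# Sketch: write the flanneld sysconfig file non-interactively.
# FLANNELD_CONF is a hypothetical override (defaults to the real path) so the
# function can be tried against a scratch file first.
FLANNELD_CONF="${FLANNELD_CONF:-/etc/sysconfig/flanneld}"

# Usage: write_flanneld_conf [ETCD_ENDPOINTS] [ETCD_PREFIX]
write_flanneld_conf() {
  local endpoints="${1:-http://etcd:2379}" prefix="${2:-/atomic.io/network}"
  # Unquoted EOF: ${endpoints} and ${prefix} are expanded inside the document.
  cat > "$FLANNELD_CONF" <<EOF
# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="${endpoints}"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="${prefix}"
# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
EOF
}
```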

Store flannel's network configuration in etcd, under the FLANNEL_ETCD_PREFIX set above (/atomic.io/network):

etcdctl mk /atomic.io/network/config '{ "Network": "10.0.0.0/16" }'
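The Network value is the pool from which flanneld leases one subnet per host. Assuming flannel's default per-host subnet length of /24 (its `SubnetLen` setting, which this config leaves unset), 10.0.0.0/16 holds 2^(24 - 16) such blocks. That is an upper bound on how many hosts can join; flannel may hold back some of them. The pool also stays clear of the service range 10.254.0.0/16 used later for the apiserver. The arithmetic below only computes that figure; `subnet_count` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: number of per-host subnets of length SUBNET_LEN that fit into a
# network with prefix length NETWORK_PREFIX, i.e. 2^(SUBNET_LEN - NETWORK_PREFIX).
# subnet_count is a hypothetical helper; /24 per host is an assumed flannel default.
# Usage: subnet_count NETWORK_PREFIX SUBNET_LEN
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

subnet_count 16 24   # 10.0.0.0/16 split into /24 leases: prints 256
```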

Start flanneld and enable it at boot:

systemctl start flanneld.service
systemctl enable flanneld.service
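Once flanneld is running it records the subnet leased to this host, usually in /run/flannel/subnet.env (FLANNEL_SUBNET, FLANNEL_MTU and related variables). Checking it on each machine is a quick way to confirm that every host received a different subnet inside 10.0.0.0/16. It is also the reason flanneld is started before docker here: assuming the CentOS flannel package's docker integration, docker picks up the flannel subnet for its bridge when it starts, so a docker that was already running may need a restart. `flannel_subnet` and the `SUBNET_ENV` override are hypothetical names.

```shell
#!/bin/sh
# Sketch: print the subnet flanneld leased to this host.
# Assumes flanneld wrote its lease to /run/flannel/subnet.env (a shell-style
# KEY=VALUE file); SUBNET_ENV and flannel_subnet are hypothetical names.
SUBNET_ENV="${SUBNET_ENV:-/run/flannel/subnet.env}"

flannel_subnet() {
  # Source the file in a subshell so the FLANNEL_* variables do not leak out.
  ( . "$SUBNET_ENV" && printf '%s\n' "$FLANNEL_SUBNET" )
}

# Run on each machine; every host should report a different subnet
# inside 10.0.0.0/16.
```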

- Docker: install docker, start it, and enable it at boot:

yum install docker -y
service docker start
chkconfig docker on

- kubernetes: install the kubernetes packages:

yum install kubernetes

Configure the /etc/kubernetes/apiserver file:

###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#
# The address on the local server to listen to.
KUBE_API_ADDRESS="--address=0.0.0.0"
# The port on the local server to listen on.
KUBE_API_PORT="--port=8080"
# Port minions listen on
KUBELET_PORT="--kubelet-port=10250"
# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://etcd:2379"
# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
# default admission control policies
# KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
# Add your own!
KUBE_API_ARGS=""
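The active KUBE_ADMISSION_CONTROL line is the commented-out default list with ServiceAccount removed. A common reason for this in setups like this one is that no service-account signing key is configured, so with ServiceAccount enabled, pod creation tends to fail with errors about missing API tokens. The sketch reproduces that edit on the comma-separated list; `drop_plugin` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: remove one entry from a comma-separated admission-control list.
# drop_plugin is a hypothetical helper; the body runs in a subshell, so the
# IFS and set -f changes below do not affect the caller.
drop_plugin() (
  list="$1" plugin="$2" out=""
  set -f          # no filename globbing while splitting
  IFS=,           # split $list on commas only
  for item in $list; do
    [ "$item" = "$plugin" ] || out="${out:+$out,}$item"
  done
  printf '%s\n' "$out"
)

# Usage:
#   drop_plugin "NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota" ServiceAccount
#   prints NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota
```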

Configure the /etc/kubernetes/config file:

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://master:8080"
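The same /etc/kubernetes/config is used on the nodes, and KUBE_MASTER must point at the master on every machine. To check the value without opening the file, a one-line sed lookup is enough. `read_sysconfig` is a hypothetical helper; it assumes values are double-quoted and only matches uncommented lines, as in the file above.

```shell
#!/bin/sh
# Sketch: print the value of VAR="..." from a sysconfig file.
# read_sysconfig is a hypothetical helper; it only matches uncommented lines
# whose value is double-quoted, which is how the kubernetes files above are written.
# Usage: read_sysconfig FILE VAR
read_sysconfig() {
  sed -n "s/^$2=\"\(.*\)\"\$/\1/p" "$1"
}

# On any machine, with the file above:
#   read_sysconfig /etc/kubernetes/config KUBE_MASTER   # expected: --master=http://master:8080
```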

Start the k8s master components:

systemctl start kube-apiserver.service
systemctl start kube-controller-manager.service
systemctl start kube-scheduler.service

Enable them at boot:

systemctl enable kube-apiserver.service
systemctl enable kube-controller-manager.service
systemctl enable kube-scheduler.service
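The start and enable commands above repeat one pattern per unit, so they can be folded into a loop that stops at the first unit that fails to start. In this sketch `start_and_enable` and the `SYSTEMCTL` override are hypothetical names; setting `SYSTEMCTL=echo` turns it into a dry run that only prints the commands.

```shell
#!/bin/sh
# Sketch: start and enable several systemd units in order, stopping at the
# first failure. SYSTEMCTL is a hypothetical override (defaults to systemctl).
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

start_and_enable() {
  local unit
  for unit in "$@"; do
    "$SYSTEMCTL" start "$unit" || { echo "failed to start $unit" >&2; return 1; }
    "$SYSTEMCTL" enable "$unit" || return 1
  done
}

# Master components, in the order used above:
#   start_and_enable kube-apiserver.service kube-controller-manager.service kube-scheduler.service
```

On the nodes the same loop applies to kubelet.service and kube-proxy.service.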

Step 3: deploy the worker nodes

Run the following on both node1 and node2. Each node requires the following components:

- flannel

- docker

- kubernetes

- flannel: install it with:

yum install flannel

Configure flannel:

vim /etc/sysconfig/flanneld

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://etcd:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/atomic.io/network"
# Any additional options that you want to pass
#FLANNEL_OPTIONS=""

Start flanneld and enable it at boot:

systemctl start flanneld.service
systemctl enable flanneld.service

- Docker: install docker, start it, and enable it at boot:

yum install docker -y
service docker start
chkconfig docker on

- kubernetes: install the kubernetes packages:

yum install kubernetes

Unlike the master node, the worker nodes run the following kubernetes components:

- kubelet

- kube-proxy

Configure /etc/kubernetes/config (the same content as on the master):

vim /etc/kubernetes/config

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://master:8080"

Configure /etc/kubernetes/kubelet. The example is for node1; on node2, set KUBELET_HOSTNAME to "--hostname-override=node2":

vim /etc/kubernetes/kubelet

###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=node1"
# location of the api-server
KUBELET_API_SERVER="--api-servers=http://master:8080"
# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""
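KUBELET_HOSTNAME is the one line in this file that cannot be copied verbatim between nodes; if node2 kept node1's value, both kubelets would report as the same node. The sketch rewrites just that line. `set_kubelet_hostname` and the `KUBELET_CONF` override are hypothetical names, GNU sed is assumed, and without an argument it falls back to the machine's short hostname (`hostname -s`), which is only correct if the hostnames really are node1 and node2.

```shell
#!/bin/sh
# Sketch: rewrite the KUBELET_HOSTNAME line for the node this runs on.
# set_kubelet_hostname and KUBELET_CONF are hypothetical names.
# Relies on GNU sed's -i.
KUBELET_CONF="${KUBELET_CONF:-/etc/kubernetes/kubelet}"

# Usage: set_kubelet_hostname [NODE_NAME]
set_kubelet_hostname() {
  local name="${1:-$(hostname -s)}"
  sed -i "s|^#*KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${name}\"|" "$KUBELET_CONF"
}

# On node2:
#   set_kubelet_hostname node2
```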

Start the kubelet and kube-proxy services:

systemctl start kubelet.service
systemctl start kube-proxy.service

Enable them at boot:

systemctl enable kubelet.service
systemctl enable kube-proxy.service

This completes the cluster build. Next, verify that the cluster is working.

Step 4: verify the cluster status

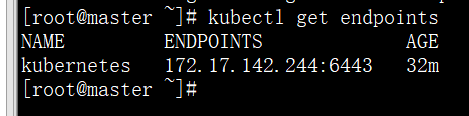

To view endpoint information:

kubectl get endpoints

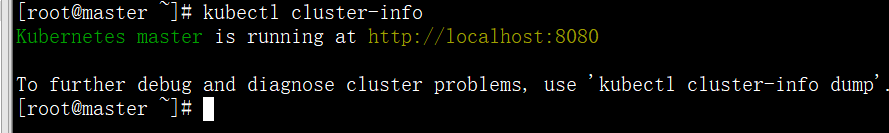

To view cluster information:

kubectl cluster-info

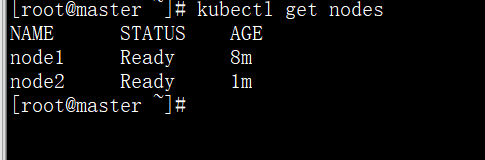

Get the node status in the cluster:

kubectl get nodes
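For scripted checks, the STATUS column of `kubectl get nodes` can be tested directly. The sketch assumes the default table output with a header row and STATUS in the second column; `all_nodes_ready` is a hypothetical helper that succeeds only if at least one node is listed and every listed node is exactly `Ready`, so a status such as `Ready,SchedulingDisabled` counts as not ready.

```shell
#!/bin/sh
# Sketch: succeed only if every node in "kubectl get nodes" output is Ready.
# Reads the table from stdin; assumes a header row and STATUS in column 2.
# all_nodes_ready is a hypothetical helper name.
all_nodes_ready() {
  awk '
    NR == 1 { next }                          # skip the header row
    { total++; if ($2 == "Ready") ready++ }   # count listed and Ready nodes
    END { if (total > 0 && ready == total) exit 0; exit 1 }
  '
}

# Example on the master:
#   kubectl get nodes | all_nodes_ready && echo "all nodes Ready"
```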