Installing K8S in China has always been a headache. Most of the trouble comes from having to get around the firewall, and worse, you often don't even know which tools you need to download. On top of that, most tutorials are either outdated or leave you stuck halfway. Today, let me show you how to get through the installation pleasantly from inside China.

Installation Environment

The installation uses kubeadm and essentially follows the official tutorial.

| Category | Value |
| --- | --- |
| Platform | Aliyun VPC |
| System | CentOS 7.3 |
| Docker version | 1.12.6 |
| K8S version | 1.6.* |

Although the platform listed is Aliyun VPC, ordinary virtual machines work as well; the difference is negligible.

| Node | Role | Count | Recommended configuration |
| --- | --- | --- | --- |
| Master | K8S master node (etcd, API, controller, ...) | 1 | 1 core, 2 GB |
| Node | Application node | 2 | 2 cores, 4 GB |

For a simple local installation, 1 core and 1 GB per machine is enough.

If you have a host that can get past the firewall, it is best to build your own package and image sources with it. If you don't, you can use the ones I provide.

Setup Script

The installation process consists of downloading the software, downloading the images, configuring the hosts, starting the Master node, configuring the network, and starting the worker nodes.

Download the Software

If you have a way past the firewall, you can download the packages directly; if not, you can use the 1.6.2 packages I have bundled.

First, configure the K8S yum repository on your own host outside the firewall.

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
    EOF

Once configured, download the packages:

    yum install -y --downloadonly kubelet kubeadm kubectl kubernetes-cni

Bundle all the downloaded RPMs and transfer them back to your local machine. That completes the download of the K8S packages.
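The bundling step can be sketched as a small shell function. This is only an illustration, not part of the original procedure: the function name `pack_rpms` and the paths in the usage comment are hypothetical, and it assumes the RPMs were saved into a single directory (for example with yum's `--downloaddir` option).

```shell
#!/bin/sh
# Bundle every RPM found in a download directory into one tarball.
# Usage: pack_rpms <rpm_dir> <output.tar.gz>
pack_rpms() (
    rpm_dir=$1
    out=$2
    # Make the output path absolute so it survives the cd below.
    case $out in
        /*) ;;
        *) out=$PWD/$out ;;
    esac
    cd "$rpm_dir" || exit 1
    # Expand the glob into the positional parameters.
    set -- *.rpm
    # If nothing matched, $1 is still the literal pattern and does not exist.
    [ -e "$1" ] || { echo "no RPMs found in $rpm_dir" >&2; exit 1; }
    tar -czf "$out" "$@"
)

# Example (hypothetical paths):
#   yum install -y --downloadonly --downloaddir=/root/k8s-rpms kubelet kubeadm kubectl kubernetes-cni
#   pack_rpms /root/k8s-rpms /root/k8s-rpms.tar.gz
```

The resulting tarball can then be copied to the target hosts with whatever transfer tool you normally use.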

Downloaded RPMs:

https://pan.baidu.com/s/1clIpjC (extraction code: cp6h)

Download the Images

To download the images, you can use the script below directly, provided you can get past the firewall. I have also already downloaded a set myself for everyone to use.

    #!/usr/bin/env bash
    # Images to copy from gcr.io into a registry reachable from inside China.
    images=(
      kube-proxy-amd64:v1.6.2
      kube-controller-manager-amd64:v1.6.2
      kube-apiserver-amd64:v1.6.2
      kube-scheduler-amd64:v1.6.2
      kubernetes-dashboard-amd64:v1.6.0
      k8s-dns-sidecar-amd64:1.14.1
      k8s-dns-kube-dns-amd64:1.14.1
      k8s-dns-dnsmasq-nanny-amd64:1.14.1
      etcd-amd64:3.0.17
      pause-amd64:3.0
    )
    # Pull each image, retag it for the private registry, and push it.
    for imageName in "${images[@]}"; do
      docker pull gcr.io/google_containers/$imageName
      docker tag gcr.io/google_containers/$imageName registry.cn-beijing.aliyuncs.com/bbt_k8s/$imageName
      docker push registry.cn-beijing.aliyuncs.com/bbt_k8s/$imageName
    done
    # flannel lives on quay.io, so it is handled separately.
    docker pull quay.io/coreos/flannel:v0.7.0-amd64
    docker tag quay.io/coreos/flannel:v0.7.0-amd64 registry.cn-beijing.aliyuncs.com/bbt_k8s/flannel:v0.7.0-amd64
    docker push registry.cn-beijing.aliyuncs.com/bbt_k8s/flannel:v0.7.0-amd64

Let me explain this script. It pulls the commonly used images and then pushes them to a registry that is reachable from inside China. You can change registry.cn-beijing.aliyuncs.com/bbt_k8s to your own registry address, for which you need read and write permission. I recommend Alibaba Cloud or NetEase, since both are convenient to access.
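If you want to check what the script would do against your own registry before running it, a dry-run helper along these lines may help. It is a minimal sketch: the function name `mirror_cmds` and the target `registry.example.com/my_k8s` are hypothetical placeholders, and it only prints the docker commands instead of executing them.

```shell
#!/bin/sh
# Print the pull/tag/push commands that copy one image into another registry.
# Usage: mirror_cmds <source_repo> <target_repo> <image:tag>
mirror_cmds() {
    printf 'docker pull %s/%s\n' "$1" "$3"
    printf 'docker tag %s/%s %s/%s\n' "$1" "$3" "$2" "$3"
    printf 'docker push %s/%s\n' "$2" "$3"
}

# Example (hypothetical target registry):
#   mirror_cmds gcr.io/google_containers registry.example.com/my_k8s pause-amd64:3.0
# Pipe the output to `sh` once it looks right.
```

Printing first and executing second makes it easy to spot a mistyped registry address before anything is pushed.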

| Software | Version | Explanation | Remarks |
| --- | --- | --- | --- |
| kube-proxy-amd64, kube-controller-manager-amd64, kube-apiserver-amd64, kube-scheduler-amd64 | v1.6.2 | These image versions follow the K8S version. For example, I installed K8S 1.6.2, so the version is v1.6.2. | K8S base packages |
| kubernetes-dashboard-amd64 | v1.6.0 | This is the K8S console (not very useful, but at least suitable for beginners). Its version follows the K8S minor version: I installed K8S 1.6.2, whose minor version is 1.6, so the version is v1.6.0. | |
| k8s-dns-sidecar-amd64, k8s-dns-kube-dns-amd64, k8s-dns-dnsmasq-nanny-amd64 | 1.14.1 | This is the DNS service. It is not normally upgraded together with K8S. For specific versions, refer to https://kubernetes.io/docs/get-start-guides/kubeadm/ | |
| etcd-amd64 | 3.0.17 | This is the etcd service. It is not normally upgraded together with K8S. For specific versions, refer to https://kubernetes.io/docs/get-start-guides/kubeadm/ | |
| pause-amd64 | 3.0 | Not normally upgraded together with K8S. For specific versions, refer to https://kubernetes.io/docs/get-start-guides/kubeadm/ | The version has been 3.0 for a long time. |
| flannel | v0.7.0-amd64 | The network component. I use flannel here, but others work too. For version information, refer to the documentation of the component you choose; for flannel that is https://github.com/coreos/flannel/tree/master/Documentation | |

Once these images are downloaded, you are all set. If you have no way past the firewall, you can use the registry address from the script directly.

Host Configuration

Once everything above is downloaded, we can start installing. If you are using virtual machines, I recommend finishing this section on one machine and then cloning it three times, which simplifies the process. For physical hosts, you can write a script instead.

Update System

Nothing special to explain here.

yum update -y

Install docker

K8S 1.6.x has only been tested against Docker 1.12. Newer Docker versions may run, but installing them is not recommended, to avoid problems.

curl -sSL http://acs-public-mirror.oss-cn-hangzhou.aliyuncs.com/docker-engine/internet | sh /dev/stdin 1.12.6

Once the installation is complete, disable Docker updates by adding the following to /etc/yum.conf:

exclude=docker-engine*
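If you script the host setup, the line can be appended idempotently. The following is a sketch only: the helper name `add_yum_exclude` is made up, and it assumes /etc/yum.conf contains only the `[main]` section and no existing `exclude=` line that would need merging.

```shell
#!/bin/sh
# Append "exclude=<pattern>" to a yum config file unless that exact line exists.
# Usage: add_yum_exclude <conf_file> <pattern>
add_yum_exclude() (
    conf=$1
    line="exclude=$2"
    # -x: match the whole line, -F: treat the pattern as a fixed string.
    grep -qxF "$line" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
)

# Example:
#   add_yum_exclude /etc/yum.conf 'docker-engine*'
```

Running it twice leaves a single `exclude=` line, so it is safe to include in a script that may be re-run.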

Configure Docker

The main task is to configure a registry mirror (an "accelerator") so that pulling images is not too slow.

Edit /etc/docker/daemon.json and add the following:

    { "registry-mirrors": ["https://<your own accelerator address>"] }

Then start the Docker service:

    systemctl daemon-reload
    systemctl enable docker
    systemctl start docker

OK, so our Docker is configured.

Modify Network

This mainly turns on bridge-related support, which flannel requires. Whether you need it depends on which network component you choose.

Edit /usr/lib/sysctl.d/00-system.conf and change net.bridge.bridge-nf-call-iptables to 1. After that, update the running kernel state as well:

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

OK, network configuration is complete.
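The file edit can also be scripted. Below is a minimal sketch, assuming the setting is written in the usual `key = value` form; the helper name `set_sysctl_key` is hypothetical.

```shell
#!/bin/sh
# Set <key> to <value> in a sysctl config file, replacing any existing assignment.
# Usage: set_sysctl_key <conf_file> <key> <value>
set_sysctl_key() (
    conf=$1
    key=$2
    value=$3
    tmp=$(mktemp) || exit 1
    # Keep every line except existing assignments of the key
    # (grep exits non-zero when nothing is kept, which is fine here).
    grep -v "^[[:space:]]*$key[[:space:]]*=" "$conf" > "$tmp" 2>/dev/null || true
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    # Write back through cat so the original file's ownership and mode are kept.
    cat "$tmp" > "$conf" && rm -f "$tmp"
)

# Example:
#   set_sysctl_key /usr/lib/sysctl.d/00-system.conf net.bridge.bridge-nf-call-iptables 1
#   sysctl -p /usr/lib/sysctl.d/00-system.conf
```

Loading the file with `sysctl -p` applies the value to the running kernel, which has the same effect as the `echo` into /proc shown above.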

Install K8S package

Upload the RPM packages to your own server and run:

yum install -y *.rpm

Then enable kubelet so that it starts on boot:

systemctl enable kubelet

Then configure kubelet by changing /etc/systemd/system/kubelet.service.d/10-kubeadm.conf to the following:

    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--kubeconfig=/etc/kubernetes/kubelet.conf --require-kubeconfig=true"
    Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"
    Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"
    Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"
    Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"
    Environment="KUBELET_ALIYUN_ARGS=--pod-infra-container-image=registry-vpc.cn-beijing.aliyuncs.com/bbt_k8s/pause-amd64:3.0"
    ExecStart=
    ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_EXTRA_ARGS $KUBELET_ALIYUN_ARGS

This file fixes two main problems. The first is pointing the pod's base (pause) container image at our own registry. The second is a conflict between the resource management of the latest K8S version and Docker's default resource management; the conflicting setting is simply removed here.

Then reload the systemd configuration:

systemctl daemon-reload

With this, the host environment is initialized. If you are using virtual machines, just clone this one three times. If you are using physical machines, repeat these steps on each of the three. Then set the hostname of each host according to its role, because K8S uses the hostname as the node's identity.

Start Master

With the hosts prepared, we can start our Master node. Usually 2-3 Master nodes are recommended; for local testing, one node is enough.

    export KUBE_REPO_PREFIX="registry-vpc.cn-beijing.aliyuncs.com/bbt_k8s"
    export KUBE_ETCD_IMAGE="registry-vpc.cn-beijing.aliyuncs.com/bbt_k8s/etcd-amd64:3.0.17"
    kubeadm init --kubernetes-version=v1.6.2 --pod-network-cidr=10.96.0.0/12

The first two environment variables make kubeadm pull images from our own registry during initialization. The last command initializes the Master node. The parameters that need to be configured are explained below.

| Parameter | Meaning | Remarks |
| --- | --- | --- |
| --kubernetes-version | The K8S version, chosen to match the images and RPMs you downloaded. | I'm using 1.6.2 here, so the version is v1.6.2. |
| --pod-network-cidr | The pod network. Any range works as long as it does not conflict with the host network; I use 10.96.0.0/12 here. | This is tied to the KUBELET_DNS_ARGS declared above in /etc/systemd/system/kubelet.service.d/10-kubeadm.conf, so change the two together. |
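Because the range and the `--cluster-dns` address have to be changed together, a quick sanity check can catch a mismatch before running kubeadm. The sketch below tests whether an IPv4 address falls inside a CIDR range using shell integer arithmetic; the function names `ip_to_int` and `ip_in_cidr` are hypothetical, and it assumes well-formed dotted-quad input without leading zeros.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() (
    IFS=.
    # Split the address on dots into $1..$4.
    set -- $1
    echo "$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))"
)

# Succeed if <ip> lies inside <network>/<prefix>.
# Usage: ip_in_cidr <ip> <network/prefix>
ip_in_cidr() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Build the netmask for the given prefix length.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# Example, using the values from this article:
#   ip_in_cidr 10.96.0.10 10.96.0.0/12 && echo "cluster-dns is inside the range"
```

With the values used in this article, 10.96.0.10 falls inside 10.96.0.0/12, so the check succeeds; if you pick a different range, rerun it with your own numbers.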

After running the command, wait a while for it to finish, and the Master node is ready.

    kubeadm init --kubernetes-version=v1.6.2 --pod-network-cidr=10.96.0.0/12
    [kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
    [init] Using Kubernetes version: v1.6.2
    [init] Using Authorization mode: RBAC
    [preflight] Running pre-flight checks
    [preflight] Starting the kubelet service
    [certificates] Generated CA certificate and key.
    [certificates] Generated API server certificate and key.
    [certificates] API Server serving cert is signed for DNS names [node0 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.61.41]
    [certificates] Generated API server kubelet client certificate and key.
    [certificates] Generated service account token signing key and public key.
    [certificates] Generated front-proxy CA certificate and key.
    [certificates] Generated front-proxy client certificate and key.
    [certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
    [apiclient] Created API client, waiting for the control plane to become ready
    [apiclient] All control plane components are healthy after 14.583864 seconds
    [apiclient] Waiting for at least one node to register
    [apiclient] First node has registered after 6.008990 seconds
    [token] Using token: e7986d.e440de5882342711
    [apiconfig] Created RBAC rules
    [addons] Created essential addon: kube-proxy
    [addons] Created essential addon: kube-dns

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run (as a regular user):

      sudo cp /etc/kubernetes/admin.conf $HOME/
      sudo chown $(id -u):$(id -g) $HOME/admin.conf
      export KUBECONFIG=$HOME/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      http://kubernetes.io/docs/admin/addons/

    You can now join any number of machines by running the following on each node
    as root:

      kubeadm join --token 1111.1111111111111 *.*.*.*:6443

Once the installation is complete, be sure to look through the output and copy the line that resembles the following. It is needed later to initialize the worker nodes.

kubeadm join --token 11111.11111111111111 *.*.*.*:6443
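If you would rather not copy the line by hand, you could save the kubeadm output to a file and extract the join command from it. This is a sketch under that assumption; the function name `extract_join_cmd` and the log file name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Read kubeadm init output on stdin and print the last "kubeadm join" command found.
extract_join_cmd() {
    sed -n 's/.*\(kubeadm join --token [^ ]* [^ ]*\).*/\1/p' | tail -n 1
}

# Example (hypothetical log file):
#   kubeadm init --kubernetes-version=v1.6.2 --pod-network-cidr=10.96.0.0/12 | tee kubeadm-init.log
#   extract_join_cmd < kubeadm-init.log
```

The token grants the right to join the cluster, so treat the saved log with the same care as a password.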

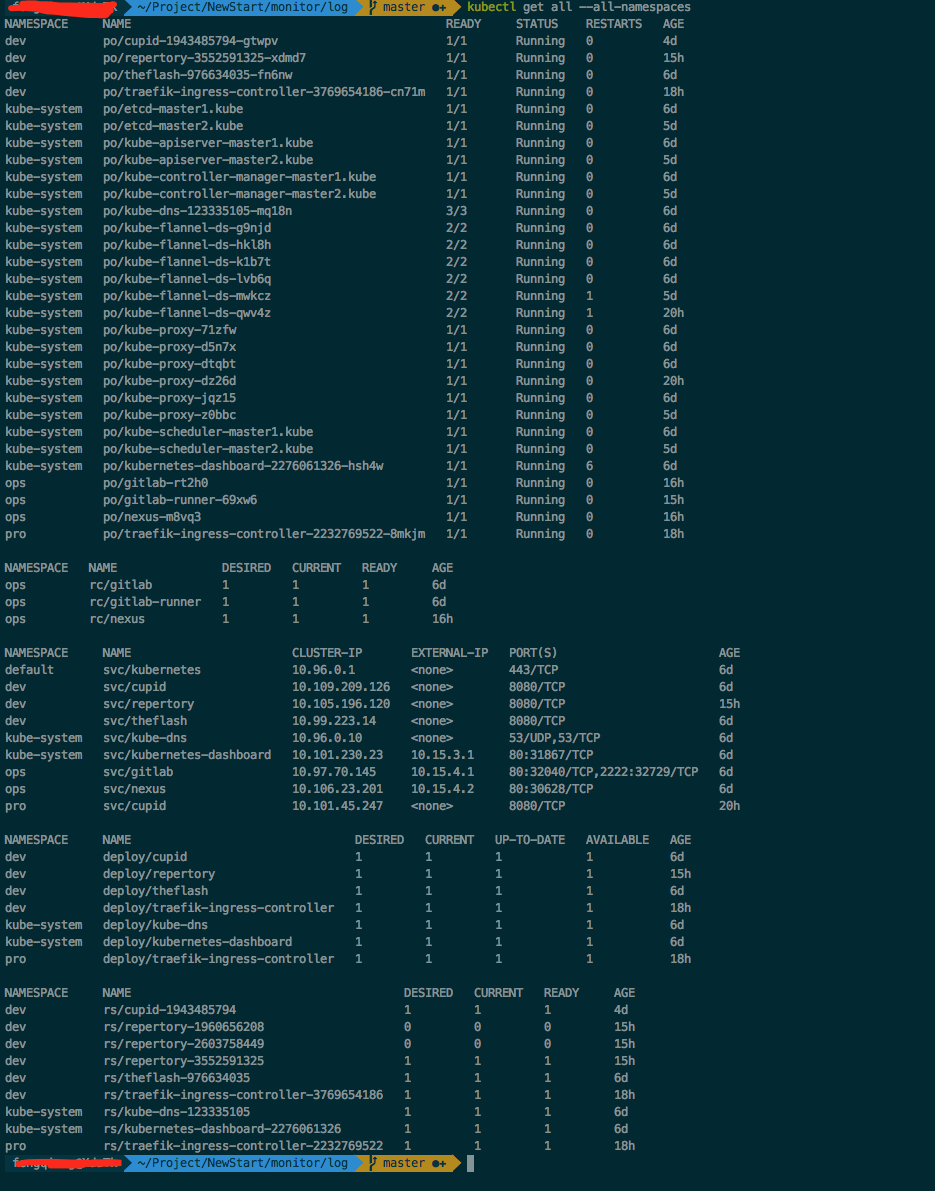

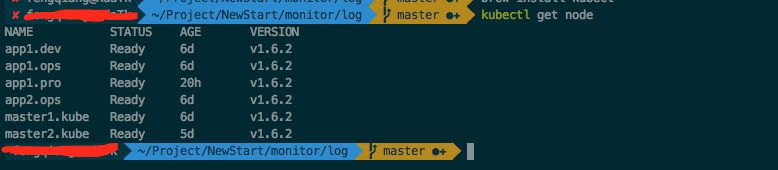

Here we can take a quick look at the status of K8S. I'm using a Mac here.

Install kubectl:

    brew install kubectl

Then copy the /etc/kubernetes/admin.conf file on the Master node to ~/.kube/config on your machine.

Then run kubectl get node. I have already finished the installation here, so I can see every node; if you see the node information, it worked.
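To spot problem nodes at a glance, the output can be filtered. The sketch below assumes the usual tabular output with a header row and the node name and STATUS in the first two columns; the function name `not_ready_nodes` is hypothetical.

```shell
#!/bin/sh
# Read `kubectl get node` output on stdin and print the names of nodes
# whose STATUS column is anything other than "Ready".
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get node | not_ready_nodes
```

An empty result means every listed node reports Ready. Right after kubeadm init, before a network component is installed, nodes may not report Ready yet.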

Install Network Components

Next we'll install the network component; I'm using flannel here. Create two files.

kube-flannel-rbac.yml

    # Create the clusterrole and clusterrolebinding:
    # $ kubectl create -f kube-flannel-rbac.yml
    # Create the pod using the same namespace used by the flannel serviceaccount:
    # $ kubectl create --namespace kube-system -f kube-flannel.yml
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    rules:
      - apiGroups:
          - ""
        resources:
          - pods
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes/status
        verbs:
          - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system

kube-flannel-ds.yaml

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "type": "flannel",
          "delegate": {
            "isDefaultGateway": true
          }
        }
      net-conf.json: |
        {
          "Network": "10.96.0.0/12",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: amd64
          tolerations:
          - key: node-role.kubernetes.io/master
            operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          containers:
          - name: kube-flannel
            image: registry.cn-beijing.aliyuncs.com/bbt_k8s/flannel:v0.7.0-amd64
            command: [ "/opt/bin/flanneld", "--ip-masq", "--kube-subnet-mgr" ]
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          - name: install-cni
            image: registry.cn-beijing.aliyuncs.com/bbt_k8s/flannel:v0.7.0-amd64
            command: [ "/bin/sh", "-c", "set -e -x; cp -f /etc/kube-flannel/cni-conf.json /etc/cni/net.d/10-flannel.conf; while true; do sleep 3600; done" ]
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg

Then apply both files:

    kubectl create -f kube-flannel-rbac.yml
    kubectl create -f kube-flannel-ds.yaml

Now our cluster network is initialized.

Start NODE

There is not much to say about the two worker nodes; just run the following commands on each of them.

    export KUBE_REPO_PREFIX="registry-vpc.cn-beijing.aliyuncs.com/bbt_k8s"
    export KUBE_ETCD_IMAGE="registry-vpc.cn-beijing.aliyuncs.com/bbt_k8s/etcd-amd64:3.0.17"
    kubeadm join --token 1111.111111111111 *.*.*.*:6443

The kubeadm join command is the one you copied in the Start Master section.

OK, so all our nodes are initialized.

Other

In principle, the installation is finished at this point and K8S is ready to use. What follows is purely optional reference material; pick what suits your own situation.

Install the DashBoard tool

Create file kubernetes-dashboard.yaml

    # Copyright 2015 Google Inc. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # Configuration to deploy release version of the Dashboard UI compatible with
    # Kubernetes 1.6 (RBAC enabled).
    #
    # Example usage: kubectl create -f <this_file>

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
      labels:
        app: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kube-system
    ---
    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      labels:
        app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: kubernetes-dashboard
      template:
        metadata:
          labels:
            app: kubernetes-dashboard
        spec:
          containers:
          - name: kubernetes-dashboard
            image: registry.cn-beijing.aliyuncs.com/bbt_k8s/kubernetes-dashboard-amd64:v1.6.0
            imagePullPolicy: Always
            ports:
            - containerPort: 9090
              protocol: TCP
            args:
              # Uncomment the following line to manually specify Kubernetes API server Host
              # If not specified, Dashboard will attempt to auto discover the API server and connect
              # to it. Uncomment only if the default does not work.
              # - --apiserver-host=http://my-address:port
            livenessProbe:
              httpGet:
                path: /
                port: 9090
              initialDelaySeconds: 30
              timeoutSeconds: 30
          serviceAccountName: kubernetes-dashboard
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 9090
      selector:
        app: kubernetes-dashboard

Create the file dashboard-rbac.yaml

    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dashboard-admin
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: default
      namespace: kube-system

Then run:

    kubectl create -f dashboard-rbac.yaml
    kubectl create -f kubernetes-dashboard.yaml

OK, so the installation is complete.

Then use the following command to find the assigned port number. The line to look for is NodePort: <unset> 31867/TCP. You can then open the dashboard at http://NodeIp:NodePort, where NodeIp is the IP of the Master or any worker node and NodePort is the port shown on that line.

kubectl describe --namespace kube-system service kubernetes-dashboard
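To pull just the port number out of that output, a small filter like the following could be used. It is a sketch that assumes the `NodePort: <unset> 31867/TCP` line format quoted above; the function name `parse_nodeport` is hypothetical.

```shell
#!/bin/sh
# Read `kubectl describe service` output on stdin and print the NodePort number.
parse_nodeport() {
    # Match the line whose first field is "NodePort:", take its last field
    # (for example 31867/TCP) and strip the "/TCP" protocol suffix.
    awk '$1 == "NodePort:" { sub(/\/.*/, "", $NF); print $NF; exit }'
}

# Example:
#   port=$(kubectl describe --namespace kube-system service kubernetes-dashboard | parse_nodeport)
#   echo "http://<NodeIp>:$port"
```

The `Type: NodePort` line in the same output is ignored because its first field is `Type:`, not `NodePort:`.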

Finally, a post-installation screenshot is provided: