HashMap for JDK Source Reading

Introduction to HashMap

Implementation of Map interface based on hash table. This implementation provides all optional mapping operations and allows null values and null keys to be used. (The HashMap class is roughly the same as Hashtable except for asynchronism and null allowed.) This kind of mapping does not guarantee the order of mapping, especially that it does not guarantee that the order will remain unchanged.

Emphasis: Implementing the map interface, allowing null values and null keys (why null values are allowed? Because null value invocation methods report null pointer exceptions, there is no guarantee of order (why not guarantee order?)

This implementation assumes that hash functions distribute elements appropriately among buckets, providing stable performance for basic operations (get and put). The time required to iterate the collection view is proportional to the "capacity" (number of buckets) of the HashMap instance and its size (number of key-value mapping relationships). So, if iteration performance is important, don't set the initial capacity too high (or set the load factor too low).

HashMap The example has two parameters that affect its performance: initial capacity and load factor. Capacity is the number of buckets in the hash table, and the initial capacity is only the capacity of the hash table at the time of creation. Loading factor is a measure of how full a hash table can be before its capacity increases automatically. When the number of entries in a hash table exceeds the product of the load factor and the current capacity, the hash table is to be processed. rehash Operations (that is, rebuilding the internal data structure), so that the hash table will have about twice the number of buckets.

Usually, the default load factor (.75) seeks a compromise between time and space costs. The high load factor reduces the space overhead, but also increases the query cost (which is reflected in most HashMap class operations, including get and put operations). When setting the initial capacity, the number of entries needed in the mapping and their loading factors should be taken into account in order to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, the rehash operation will not occur.

These three paragraphs are all about rehash, an important method of HashMap, and the variables associated with it, default load factor, initial capacity, and current capacity (number of entries in the hash table)

In addition, HashMap is not thread synchronization. If you want a thread synchronization map, you'd better do this at creation time to prevent unexpected asynchronous access to the map, as follows:

Map m = Collections.synchronizedMap(new HashMap(...));

Iterators also use fail-fast mode.

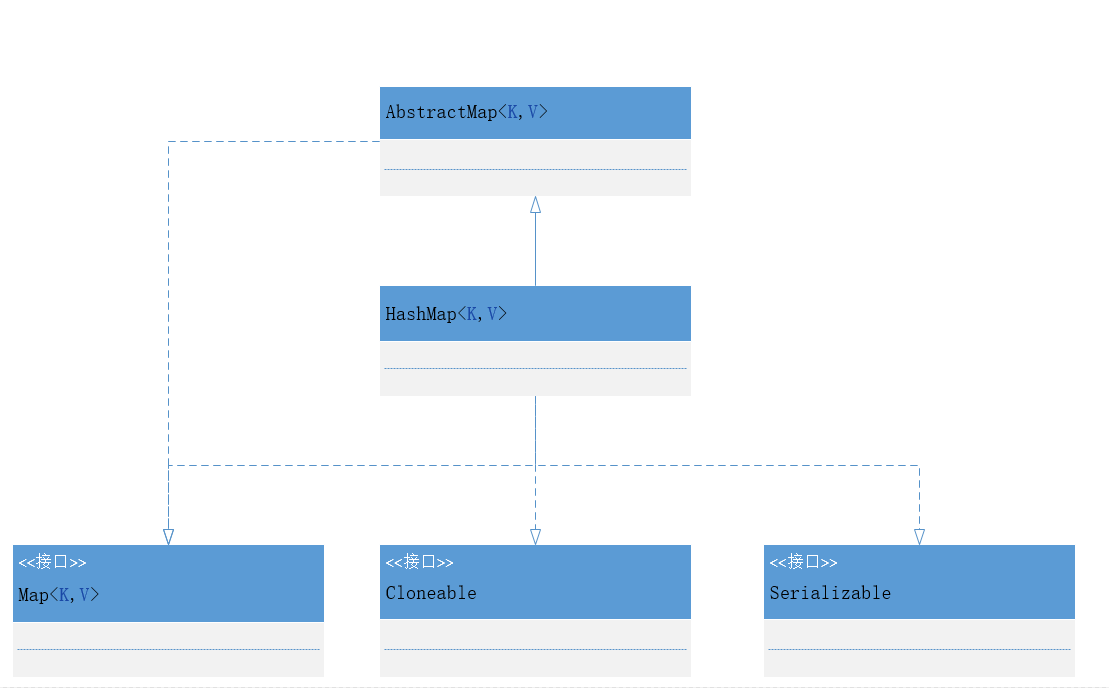

HashMap class diagram

Important Method of HashMap

Method variables

// Default initialization capacity static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16 // Maximum capacity static final int MAXIMUM_CAPACITY = 1 << 30; // Default load factor static final float DEFAULT_LOAD_FACTOR = 0.75f; // Threshold from Link List to Tree static final int TREEIFY_THRESHOLD = 8; //Threshold from tree to linked list static final int UNTREEIFY_THRESHOLD = 6; // Minimum hash table capacity when bin in bucket is tree static final int MIN_TREEIFY_CAPACITY = 64;

Construction method

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity);

}

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted

}

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false);

}

The purpose of all constructions is to determine only two variables, loadFactor and threshold.

Essence method

putVal

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// The table is uninitialized or has a length of 0.

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// (n - 1) & hash determines which bucket the element is stored in, the bucket is empty, and the newly generated node is placed in the bucket (in this case, the node is placed in the array)

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

// Elements already exist in barrels

else {

Node<K,V> e; K k;

// Compare the hash value of the first element in the bucket (the node in the array) to be equal, and the key to be equal

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

// Assign the first element to e and record with e

e = p;

// hash value is not equal, that is, key is not equal; it is a red-black tree node.

else if (p instanceof TreeNode)

// In the tree

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

// For linked list nodes

else {

// Insert a node at the end of the list

for (int binCount = 0; ; ++binCount) {

// Arrive at the end of the list

if ((e = p.next) == null) {

// Insert a new node at the tail

p.next = newNode(hash, key, value, null);

// The number of nodes reaches the threshold and is transformed into a red-black tree.

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

// Break

break;

}

// Determine whether the key value of the node in the list is equal to the key value of the inserted element

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

// Equality, out of the loop

break;

// Used to traverse the list in the bucket, combined with the previous e = p.next, you can traverse the list

p = e;

}

}

// Represents finding a node whose key, hash, and insertion elements are equal in the bucket

if (e != null) {

// Record the value of e

V oldValue = e.value;

// onlyIfAbsent is false or the old value is null

if (!onlyIfAbsent || oldValue == null)

//Replace old values with new ones

e.value = value;

// Post-visit callback

afterNodeAccess(e);

// Return old value

return oldValue;

}

}

// Structural modification

++modCount;

// When the actual size is larger than the threshold, the capacity will be expanded.

if (++size > threshold)

resize();

// Callback after insertion

afterNodeInsertion(evict);

return null;

}

resize

final Node<K,V>[] resize() {

// Current table save

Node<K,V>[] oldTab = table;

// Save table size

int oldCap = (oldTab == null) ? 0 : oldTab.length;

// Save the current threshold

int oldThr = threshold;

int newCap, newThr = 0;

// Former table size greater than 0

if (oldCap > 0) {

// Former table s were larger than maximum capacity

if (oldCap >= MAXIMUM_CAPACITY) {

// Maximum shaping threshold

threshold = Integer.MAX_VALUE;

return oldTab;

}

// Double capacity, use left shift, more efficient

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

// Double threshold

newThr = oldThr << 1; // double threshold

}

// Previous thresholds greater than 0

else if (oldThr > 0)

newCap = oldThr;

// oldCap = 0 and oldThr = 0, using default values (such as using HashMap() constructor, and then inserting an element calls resize function, which goes into this step)

else {

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

// The new threshold is 0.

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

// Initialize table

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

// The previous table has been initialized

if (oldTab != null) {

// Duplicate the elements and re-hash

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

// The elements in the same barrel are divided into two different linked lists according to whether they are 0 (e. hash & oldCap), and rehash is completed.

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

Important method

getNode

final Node<K,V> getNode(int hash, Object key) {

Node<K,V>[] tab; Node<K,V> first, e; int n; K k;

// The table has been initialized and is longer than 0. Finding items in the table based on hash is not empty.

if ((tab = table) != null && (n = tab.length) > 0 &&

(first = tab[(n - 1) & hash]) != null) {

// The first item in the bucket (array element) is equal

if (first.hash == hash && // always check first node

((k = first.key) == key || (key != null && key.equals(k))))

return first;

// More than one node in a bucket

if ((e = first.next) != null) {

// It's a red-black tree node.

if (first instanceof TreeNode)

// Search in the red and black trees

return ((TreeNode<K,V>)first).getTreeNode(hash, key);

// Otherwise, look in the linked list

do {

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

} while ((e = e.next) != null);

}

}

return null;

}

putMapEntries

final void putMapEntries(Map<? extends K, ? extends V> m, boolean evict) {

int s = m.size();

if (s > 0) {

// Determine whether the table has been initialized

if (table == null) { // pre-size

// Uninitialized, the actual number of elements whose s is m

float ft = ((float)s / loadFactor) + 1.0F;

int t = ((ft < (float)MAXIMUM_CAPACITY) ?

(int)ft : MAXIMUM_CAPACITY);

// If the calculated t is greater than the threshold value, the initialization threshold is obtained.

if (t > threshold)

threshold = tableSizeFor(t);

}

// Initialized, and the number of m elements is greater than the threshold, the expansion process is carried out.

else if (s > threshold)

resize();

// Add all elements in m to HashMap

for (Map.Entry<? extends K, ? extends V> e : m.entrySet()) {

K key = e.getKey();

V value = e.getValue();

putVal(hash(key), key, value, false, evict);

}

}

}

clone

public Object clone() {

HashMap<K,V> result;

try {

result = (HashMap<K,V>)super.clone();

} catch (CloneNotSupportedException e) {

// this shouldn't happen, since we are Cloneable

throw new InternalError(e);

}

result.reinitialize();

result.putMapEntries(this, false);

return result;

}

It can be seen from the source code that although the clone method generates new HashMap objects and the table array in the new HashMap is also newly generated, the elements in the array still refer to the elements in the previous HashMap. This results in the modification of elements in HashMap, that is to say, the modification of elements in the array will result in the change of both the original object and the clone object, but the addition or deletion will not affect the other party, because this is equivalent to the change of the array, and the clone object will be a new array.

HashMap changes

hashMap before jdk1.8:

HashMap's data structure is a linked list hash that converts stored key s and value s into Entry classes

Encapsulated as an entry object by key and value, the hash value of the entry is calculated by the value of the key, and the position of the entry in the array is calculated by the hash value of the entry and the length length of the array. If there are other elements in the position, the element is stored by a linked list.

After jdk1.8

hashmap The data structure is an array+linked list+red-black tree

HashMap Reading Feelings

1) Know that hashMap was a linked list hash data structure before JDK 1.8, and after JDK 1.8 was an array plus linked list plus red-black tree data structure.

2) By learning the source code, hashMap is a collection of value s that can be quickly obtained by key, because the hash search method is used internally.

Explain

This article is written by myself. I would be honored if this article could give you some harvest or insight. If you have different opinions or find errors in this article, please leave a message to correct or contact me: zlh8013gsf@126.com