1. Environment preparation

You need a Linux machine, either a virtual machine running locally under VMware or a physical Linux machine.

If it is a locally installed virtual machine, configure the following in advance:

- Configure a static IP address for the machine (so the IP does not change on restart)

- Set the host name (this makes later configuration easier)

- Turn off the firewall (so the required ports are not blocked)
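The host name and firewall points above can be checked before going further. The following is a minimal read-only sketch, assuming a systemd-based distribution whose firewall service is firewalld (for example CentOS 7); neither is stated in this guide. The host name hadoop101 is taken from the shell prompts shown later. Static IP configuration depends on the distribution's network tooling and is not shown.

```shell
#!/bin/sh
# Read-only pre-flight check: reports the current host name and firewall state.
# Assumption: systemd is present and the firewall service is named firewalld.

host_name=$(uname -n)
echo "Host name: $host_name"

if command -v systemctl >/dev/null 2>&1; then
    # is-active prints a state such as "active" or "inactive"
    fw_state=$(systemctl is-active firewalld 2>/dev/null || true)
else
    fw_state=""
fi
echo "firewalld state: ${fw_state:-unknown}"

# To change these settings as root (commented out because they modify the system):
#   hostnamectl set-hostname hadoop101
#   systemctl stop firewalld
#   systemctl disable firewalld
```

The commented commands change system state, so they are left for you to run by hand once the check shows what needs to change.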

2. Prepare the installation packages

- JDK installation package. Download address: https://www.oracle.com/java/technologies/javase-jdk8-downloads.html Recommended version: JDK 8 (this guide uses 8u144)

- Hadoop installation package. Download address: http://archive.apache.org/dist/hadoop/core/ Recommended version: 2.7.2
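As a sketch of fetching the Hadoop package from the command line, the snippet below builds the download URL from the archive address above. The sub-path layout (a hadoop-2.7.2 directory containing hadoop-2.7.2.tar.gz) and the helper name download_hadoop are assumptions, so check them against the archive listing before relying on them. The Oracle JDK is typically downloaded through a browser and is not covered here.

```shell
#!/bin/sh
# Build the download URL for the recommended Hadoop release.
HADOOP_VERSION="2.7.2"
ARCHIVE_BASE="http://archive.apache.org/dist/hadoop/core"

# Assumed layout: <base>/hadoop-<version>/hadoop-<version>.tar.gz
HADOOP_TARBALL="hadoop-${HADOOP_VERSION}.tar.gz"
HADOOP_URL="${ARCHIVE_BASE}/hadoop-${HADOOP_VERSION}/${HADOOP_TARBALL}"
echo "$HADOOP_URL"

# Hypothetical helper: download the tarball into the directory given as $1.
# Defined but not called here, because it needs network access.
download_hadoop() {
    wget -P "$1" "$HADOOP_URL"
}
```

Calling download_hadoop with a target directory would place the tarball there, provided wget is installed and the machine can reach the archive.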

3. Perform installation

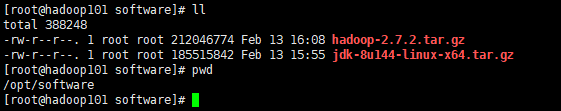

1. Upload files

- Create two new directories on the Linux machine: /opt/software and /opt/module

- Upload the JDK and Hadoop installation packages to /opt/software
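The two steps above might look like the following sketch. The helper name prepare_dirs is hypothetical, and the scp line assumes SSH access as root to a host reachable as hadoop101, which this guide does not state.

```shell
#!/bin/sh
# Hypothetical helper: create the software/ and module/ directories under $1.
# -p creates missing parents and does not fail if the directories already exist.
prepare_dirs() {
    mkdir -p "$1/software" "$1/module"
}

# On the Linux machine, as root:
#   prepare_dirs /opt
#
# From the local machine, copy both packages (assumes SSH access as root@hadoop101):
#   scp jdk-8u144-linux-x64.tar.gz hadoop-2.7.2.tar.gz root@hadoop101:/opt/software/
```

Any other upload method (a VMware shared folder, an SFTP client) works equally well as long as the packages end up in /opt/software.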

2. Install the JDK

- Extract the JDK package to the /opt/module directory

[root@hadoop101 software]# tar -zxvf jdk-8u144-linux-x64.tar.gz -C /opt/module/

- Configure the JDK environment variables

First obtain the path where the JDK was extracted. In this example it is /opt/module/jdk1.8.0_144. Then open the /etc/profile file with the command vim /etc/profile and add the following at the end of the file:

    #Java home configuration
    export JAVA_HOME=/opt/module/jdk1.8.0_144
    export PATH=$PATH:$JAVA_HOME/bin

Save the file and exit.
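The same lines can also be added without an editor. The sketch below is one possible way, not the method this guide uses: a hypothetical helper, append_once, adds a line to a file only if that exact line is not already there, so running it twice does not duplicate the exports.

```shell
#!/bin/sh
# Hypothetical helper: append line $2 to file $1 unless that exact line already exists.
append_once() {
    # -F: treat the pattern as a fixed string, -x: match the whole line, -q: quiet
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Usage as root (single quotes keep $PATH and $JAVA_HOME unexpanded in the file):
#   append_once /etc/profile 'export JAVA_HOME=/opt/module/jdk1.8.0_144'
#   append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```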

3. Make the environment variable configuration take effect

Run the command: source /etc/profile

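4. Verify that the installation succeeded

Run the command java -version. Output like the following means a JDK is available on the PATH. Note that this sample output reports OpenJDK 1.8.0_222-ea rather than the 1.8.0_144 package extracted above, which suggests that a preinstalled OpenJDK earlier in PATH is being picked up.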

    [root@hadoop101 software]# java -version
    openjdk version "1.8.0_222-ea"
    OpenJDK Runtime Environment (build 1.8.0_222-ea-b03)
    OpenJDK 64-Bit Server VM (build 25.222-b03, mixed mode)
    [root@hadoop101 software]#
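Because $JAVA_HOME/bin is appended to the end of PATH, any java found earlier in PATH takes precedence over the one under JAVA_HOME. A minimal sketch for checking which one is actually used follows; the helper name check_java_path is hypothetical, and it compares path strings only, without resolving symbolic links.

```shell
#!/bin/sh
# Hypothetical helper: report whether the java found on PATH is $JAVA_HOME/bin/java.
check_java_path() {
    [ -n "${JAVA_HOME:-}" ] || { echo "JAVA_HOME is not set"; return 1; }
    # command -v prints the path that the shell would execute for "java"
    resolved=$(command -v java) || { echo "java not found on PATH"; return 1; }
    if [ "$resolved" = "$JAVA_HOME/bin/java" ]; then
        echo "OK: $resolved"
    else
        echo "MISMATCH: PATH resolves java to $resolved"
        return 2
    fi
}
```

If the check reports a mismatch, one option is to put the JDK first in the profile entry, for example export PATH=$JAVA_HOME/bin:$PATH, and then run source /etc/profile again.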

3. Install Hadoop

- Extract the installation package to the /opt/module directory

[root@hadoop101 software]# tar -zxvf hadoop-2.7.2.tar.gz -C /opt/module/

- Configure the Hadoop environment variables

First obtain the path where Hadoop was extracted. In this example it is /opt/module/hadoop-2.7.2. Then open the /etc/profile file with the command vim /etc/profile and add the following at the end of the file:

    #Hadoop home configuration
    export HADOOP_HOME=/opt/module/hadoop-2.7.2
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin

Save the file and exit.
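The extraction path used for HADOOP_HOME and JAVA_HOME can be read from the archive itself rather than guessed. The sketch below uses a hypothetical helper, archive_top_dir, and assumes the archive stores everything under a single top-level directory, as the extraction paths in this guide suggest.

```shell
#!/bin/sh
# Hypothetical helper: print the distinct top-level entries stored in a .tar.gz archive.
archive_top_dir() {
    # -t lists entries without extracting; cut keeps the first path component
    tar -tzf "$1" | cut -d/ -f1 | sort -u
}

# Usage, run from /opt/software:
#   echo "/opt/module/$(archive_top_dir hadoop-2.7.2.tar.gz)"
```

If the helper prints more than one line, the archive does not have a single top-level directory and the path has to be determined by inspecting /opt/module after extraction.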

3. Make the environment variable configuration take effect

Run the command: source /etc/profile

4. Verify that the installation succeeded

Run the command hadoop version. Output like the following means Hadoop was installed successfully:

    [root@hadoop101 software]# hadoop version
    Hadoop 2.7.2
    Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r b165c4fe8a74265c792ce23f546c64604acf0e41
    Compiled by jenkins on 2016-01-26T00:08Z
    Compiled with protoc 2.5.0
    From source with checksum d0fda26633fa762bff87ec759ebe689c
    This command was run using /opt/module/hadoop-2.7.2/share/hadoop/common/hadoop-common-2.7.2.jar
    [root@hadoop101 software]#

4. Hadoop directory structure

The structure of the Hadoop directory after installation is as follows:

    [root@hadoop101 hadoop-2.7.2]# pwd
    /opt/module/hadoop-2.7.2
    [root@hadoop101 hadoop-2.7.2]# ll
    total 32
    drwxr-xr-x. 2 10011 10011   194 Jan 26  2016 bin
    drwxr-xr-x. 3 10011 10011    20 Jan 26  2016 etc
    drwxr-xr-x. 2 10011 10011   106 Jan 26  2016 include
    drwxr-xr-x. 3 10011 10011    20 Jan 26  2016 lib
    drwxr-xr-x. 2 10011 10011   239 Jan 26  2016 libexec
    -rw-r--r--. 1 10011 10011 15429 Jan 26  2016 LICENSE.txt
    -rw-r--r--. 1 10011 10011   101 Jan 26  2016 NOTICE.txt
    -rw-r--r--. 1 10011 10011  1366 Jan 26  2016 README.txt
    drwxr-xr-x. 2 10011 10011  4096 Jan 26  2016 sbin
    drwxr-xr-x. 4 10011 10011    31 Jan 26  2016 share
    [root@hadoop101 hadoop-2.7.2]#

- bin directory: stores scripts for operating Hadoop-related services (HDFS, YARN)

- etc directory: stores Hadoop's configuration files

- lib directory: stores Hadoop's native libraries (used for data compression and decompression)

- sbin directory: stores scripts that start or stop Hadoop-related services

- share directory: stores Hadoop's dependency jar packages, documentation, and official examples

This completes the Hadoop installation, which is quite simple. Next, you can modify the configuration files to get Hadoop running.