Hadoop cluster build-03 compile and install Hadoop

Hadoop Cluster Build-02 Installation Configuration Zookeeper

Preparations for Hadoop Cluster Building-01

HDFS is a distributed file system used with Hadoop, which is divided into two parts.

namenode: nn1.hadoop nn2.hadoop

datanode: s1.hadoop s2.hadoop s3.hadoop

(If you can't understand the five virtual machines, please see the preparation of the first 01.)

Unzip the configuration file

[hadoop@nn1 hadoop_base_op]$ ./ssh_all.sh mv /usr/local/hadoop/etc/hadoop /usr/local/hadoop/etc/hadoop_back [hadoop@nn1 hadoop_base_op]$ ./scp_all.sh ../up/hadoop.tar.gz /tmp/ [hadoop@nn1 hadoop_base_op]$ #Unzip the custom configuration compression package into / usr/local/hadoop/etc in batches/ #Batch Check Configuration for Correct Decompression [hadoop@nn1 hadoop_base_op]$ ./ssh_all.sh head /usr/local/hadoop/etc/hadoop/hadoop-env.sh

[hadoop@nn1 hadoop_base_op]$ ./ssh_root.sh chmown -R hadoop:hadoop /usr/local/hadoop/etc/hadoop [hadoop@nn1 hadoop_base_op]$ ./ssh_root.sh chmod -R 770 /usr/local/hadoop/etc/hadoop

Initialize HDFS

Technological process:

- Start zookeeper

- Start journalnode

- Start the zookeeper client and initialize the zookeeper information of HA

- Format namenode on nn1

- Start the namenode on nn1

- Start synchronous namenode on nn2

- Start namenode on nn2

- Start ZKFC

- Start dataname

1. View zookeeper status

[hadoop@nn1 zk_op]$ ./zk_ssh_all.sh /usr/local/zookeeper/bin/zkServer.sh status ssh hadoop@"nn1.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status" ZooKeeper JMX enabled by default Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg Mode: follower OK! ssh hadoop@"nn2.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status" ZooKeeper JMX enabled by default Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg Mode: leader OK! ssh hadoop@"s1.hadoop" "/usr/local/zookeeper/bin/zkServer.sh status" ZooKeeper JMX enabled by default Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg Mode: follower OK!

See two follower s and one leader indicating that they are working properly. If not, start with the following command

[hadoop@nn1 zk_op]$ ./zk_ssh_all.sh /usr/local/zookeeper/bin/zkServer.sh start

2. Start journalnode

This is the namenode synchronizer.

#Start journalnode on nn1 [hadoop@nn1 zk_op]$ hadoop-daemon.sh start journalnode #Start journalnode on nn2 [hadoop@nn1 zk_op]$ hadoop-daemon.sh start journalnode #You can open the log separately to see the startup status [hadoop@nn1 zk_op]$ tail /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-nn1.hadoop.log 2019-07-22 17:15:54,164 INFO org.apache.hadoop.ipc.Server: Starting Socket Reader #1 for port 8485 2019-07-22 17:15:54,190 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting 2019-07-22 17:15:54,191 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 8485: starting #It was found that IPC communication had been established and the journalnode process was in 8485.

3. Initialize HA information (first run only, not later)

[hadoop@nn1 zk_op]$ hdfs zkfc -formatZK [hadoop@nn1 zk_op]$ /usr/local/zookeeper/bin/zkCli.sh [zk: localhost:2181(CONNECTED) 0] ls / [zookeeper, hadoop-ha] [zk: localhost:2181(CONNECTED) 1] quit Quitting...

4. Format the namenode on nn1 (only for the first time, not later)

[hadoop@nn1 zk_op]$ hadoop namenode -format #Successful initialization appears in the following instructions #19/07/22 17:23:09 INFO common.Storage: Storage directory /data/dfsname has been successfully formatted.

5. Start nn1's namenode

[hadoop@nn1 zk_op]$ hadoop-daemon.sh start namenode [hadoop@nn1 zk_op]$ tail /usr/local/hadoop/logs/hadoop-hadoop-namenode-nn1.hadoop.log # #2019-07-22 17:24:57,321 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting #2019-07-22 17:24:57,322 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 9000: starting #2019-07-22 17:24:57,385 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: NameNode RPC up at: nn1.hadoop/192.168.10.6:9000 #2019-07-22 17:24:57,385 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Starting services required for standby state #2019-07-22 17:24:57,388 INFO org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer: Will roll logs on active node at nn2.hadoop/192.168.10.7:9000 every 120 seconds. #2019-07-22 17:24:57,394 INFO org.apache.hadoop.hdfs.server.namenode.ha.StandbyCheckpointer: Starting standby checkpoint thread... #Checkpointing active NN at http://nn2.hadoop:50070 #Serving checkpoints at http://nn1.hadoop:50070

6. Synchronize the namenode status of nn1 on the nn2 machine (only the first run, not later)

Let's go to the console of nn2!

###########Be sure to be there nn2 Run this on the machine!!!!############ [hadoop@nn2 ~]$ hadoop namenode -bootstrapStandby ===================================================== About to bootstrap Standby ID nn2 from: Nameservice ID: ns1 Other Namenode ID: nn1 Other NN's HTTP address: http://nn1.hadoop:50070 Other NN's IPC address: nn1.hadoop/192.168.10.6:9000 Namespace ID: 1728347664 Block pool ID: BP-581543280-192.168.10.6-1563787389190 Cluster ID: CID-42d2124d-9f54-4902-aa31-948fb0233943 Layout version: -63 isUpgradeFinalized: true ===================================================== 19/07/22 17:30:24 INFO common.Storage: Storage directory /data/dfsname has been successfully formatted.

7. Start nn2's namenode

Or run in the nn2 console!!

[hadoop@nn2 ~]$ hadoop-daemon.sh start namenode #Check the log to see if it started successfully. [hadoop@nn2 ~]$ tail /usr/local/hadoop-2.7.3/logs/hadoop-hadoop-namenode-nn2.hadoop.log

8. Start ZKFC

At this time, ZKFC is started in nn1 and nn2 respectively. At this time, the namenode of the two machines becomes active and standby!! ZKFC realizes HA high availability automatic switching!!

#############stay nn1 Function################# [hadoop@nn1 zk_op]$ hadoop-daemon.sh start zkfc

#############stay nn2 Function#################### [hadoop@nn2 zk_op]$ hadoop-daemon.sh start zkfc

At this time, access the hadoop interface of the two machines at the browser input address

http://192.168.10.6:50070/dfshealth.html#tab-overview

http://192.168.10.7:50070/dfshealth.html#tab-overview

These two have an active and one is a standby state.

9. Starting dataname is the last three machines to start

########First, determine who is the datanode that needs to be configured in the slaves file [hadoop@nn1 hadoop]$ cat slaves s1.hadoop s2.hadoop s3.hadoop ###########In the display as active Running on a machine############## [hadoop@nn1 zk_op]$ hadoop-daemons.sh start datanode

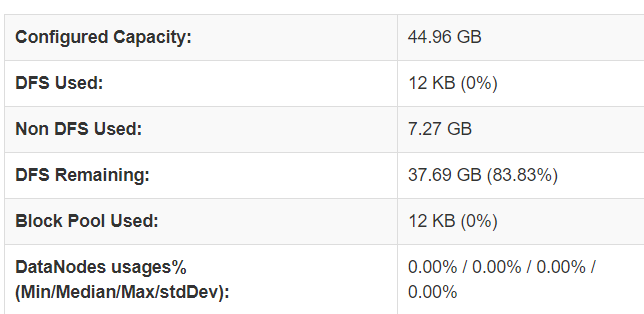

10. View Hard Disk Capacity

Open the hadoop page just now to see if the hard disk of hdfs is well formatted.

Here is the HDFS system, which reserves 2G by default for each physical machine's hard disk (which can be changed in the configuration file hdfs-site.xml), and then actually uses 15G for each machine to make hdfs, so the total number of three machines is 45G.

The HDFS is successfully configured as shown in the figure.

The previous articles are here:

Hadoop cluster build-03 compile and install Hadoop

Hadoop Cluster Build-02 Installation Configuration Zookeeper