gRPC Service Discovery & Load Balancing

To build highly available, high-performance communication services, systems usually rely on service registration and discovery, load balancing, and fault tolerance. Depending on where load balancing is implemented, there are generally three solutions:

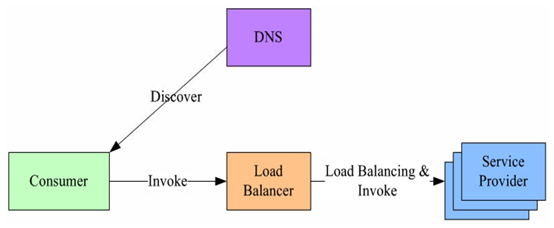

1. Centralized LB (Proxy Model)

An independent LB sits between service consumers and service providers. It is usually based on specialized hardware such as F5, or on software such as LVS or HAProxy. The LB holds an address mapping table for all services, usually registered through operations configuration. When a service consumer invokes a target service, it sends the request to the LB, which forwards it to a target service instance according to some load balancing strategy, such as round-robin. The LB generally performs health checks and can automatically remove unhealthy service instances. The main problems of this scheme are:

Single point of failure: all service calls flow through the LB. When the number of services and the volume of calls are large, the LB easily becomes a bottleneck, and if the LB fails, the whole system is affected.

Extra hop: the LB adds a layer between service consumers and providers, which incurs some performance overhead.
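The forwarding behaviour described above (round-robin selection plus removal of unhealthy instances) can be sketched as follows. This is only an illustration: the `Backend` and `RoundRobinLB` types and their methods are hypothetical names invented for this example, and a real LB such as LVS or HAProxy works quite differently internally.

```go
package main

import (
	"errors"
	"sync"
)

// Backend is a hypothetical entry in the LB's address mapping table.
type Backend struct {
	Addr    string
	Healthy bool
}

// RoundRobinLB cycles through its backends and skips unhealthy ones.
type RoundRobinLB struct {
	mu       sync.Mutex
	backends []*Backend
	next     int // index of the backend to try first on the next Pick
}

// NewRoundRobinLB builds an LB whose backends all start out healthy.
func NewRoundRobinLB(addrs ...string) *RoundRobinLB {
	lb := &RoundRobinLB{}
	for _, a := range addrs {
		lb.backends = append(lb.backends, &Backend{Addr: a, Healthy: true})
	}
	return lb
}

// SetHealthy records the result of a health check for addr.
func (lb *RoundRobinLB) SetHealthy(addr string, healthy bool) {
	lb.mu.Lock()
	defer lb.mu.Unlock()
	for _, b := range lb.backends {
		if b.Addr == addr {
			b.Healthy = healthy
		}
	}
}

// Pick returns the next healthy backend address in round-robin order.
// It tries each backend at most once and fails if none is healthy.
func (lb *RoundRobinLB) Pick() (string, error) {
	lb.mu.Lock()
	defer lb.mu.Unlock()
	for i := 0; i < len(lb.backends); i++ {
		b := lb.backends[lb.next]
		lb.next = (lb.next + 1) % len(lb.backends)
		if b.Healthy {
			return b.Addr, nil
		}
	}
	return "", errors.New("no healthy backend available")
}
```

Because every request passes through one `Pick` call guarded by one lock, the sketch also hints at why a centralized LB can become a bottleneck.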

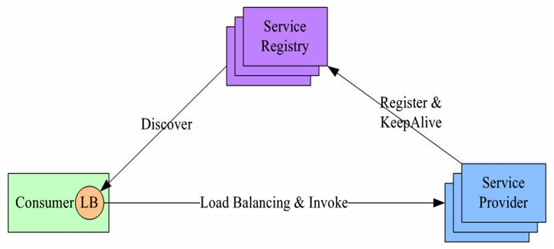

2. In-process LB (Balancing-aware Client)

To address the shortcomings of the first scheme, this scheme integrates the LB function into the service consumer's process. It is also known as soft load balancing or client-side load balancing. When a service provider starts, it first registers its address with the service registry and then periodically sends heartbeats to the registry to show that it is still alive, which is equivalent to a health check. When a service consumer wants to access a service, its built-in LB component queries the service registry, caches and periodically refreshes the list of target service addresses, selects one target address according to some load balancing policy, and finally sends the request to that address. LB and service discovery capabilities are distributed across the processes of the service consumers, and consumers call providers directly, so there is no additional hop and performance is better. The main problems of this scheme are:

Development cost: this scheme embeds the LB in the service caller's process as a client library. If several language stacks are in use, a client has to be developed for each of them, which carries development and maintenance costs.

Upgrade cost: in production, upgrading the client library later requires every service caller to modify its code and be released again, which makes upgrades more complex.

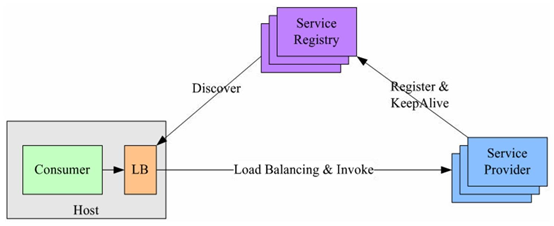

3. External Load Balancing Service

This scheme is a compromise that addresses the shortcomings of the second scheme. Its principle is basically the same as that of the second scheme.

The difference is that the LB and service discovery functions are moved out of the service caller's process into a separate process on the same host. When one or more services on a host want to access a target service, they perform service discovery and load balancing through the independent LB process on that host. This scheme is also distributed and has no single point of failure: if an LB process fails, only the service callers on that host are affected. Calls between the service caller and the LB stay on the local host, so performance is good. The scheme also simplifies the service caller, since no client library has to be developed for each language, and upgrading the LB does not require changes to the service caller's code.

The main problems of this scheme are complex deployment, more moving parts, and inconvenient debugging and troubleshooting.

gRPC Service Discovery and Load Balancing Implementation

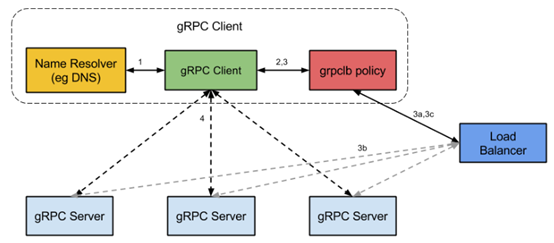

The open source gRPC components do not directly provide service registration and discovery. However, the gRPC design documents describe how they can be implemented, and the gRPC APIs for the different languages expose name resolution and load balancing interfaces for extension.

The basic principle is as follows:

After starting, the gRPC client sends a name resolution request to the name server. The name is resolved into one or more IP addresses. Each address is marked as either a server address or a load balancer address, and the result also indicates which client load balancing policy or service configuration to use.

The client instantiates the load balancing policy. If a resolved address is a load balancer address, the client uses the grpclb policy; otherwise it uses the load balancing policy requested by the service configuration.

The load balancing policy creates a subchannel for each server address.

For each RPC request, the load balancing policy decides which subchannel (that is, which gRPC server) receives the request. When no server is available, the client's request blocks.
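The policy selection rule in the steps above can be written down as a tiny decision function. This is a sketch for illustration only: `ResolvedAddr`, `ChoosePolicy`, and `ServerAddrs` are hypothetical names, not part of the gRPC API, and the fallback to `pick_first` when the service configuration requests no policy is an assumption of this example.

```go
package main

// ResolvedAddr is a hypothetical entry returned by name resolution.
type ResolvedAddr struct {
	Addr       string
	IsBalancer bool // true if Addr is a load balancer, false if it is a server
}

// ChoosePolicy returns the name of the load balancing policy the client
// would instantiate for the resolved addresses.
func ChoosePolicy(addrs []ResolvedAddr, serviceConfigPolicy string) string {
	for _, a := range addrs {
		if a.IsBalancer {
			// a balancer address takes precedence over the service config
			return "grpclb"
		}
	}
	if serviceConfigPolicy == "" {
		return "pick_first" // assumed default for this sketch
	}
	return serviceConfigPolicy
}

// ServerAddrs returns the server addresses, i.e. the addresses for which
// the chosen policy would create one subchannel each.
func ServerAddrs(addrs []ResolvedAddr) []string {
	var servers []string
	for _, a := range addrs {
		if !a.IsBalancer {
			servers = append(servers, a.Addr)
		}
	}
	return servers
}
```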

Following the design ideas officially provided by gRPC, a feasible solution for gRPC service discovery and load balancing can be built on the in-process LB scheme (scheme 2; Dubbo, Alibaba's open source service framework, uses a similar mechanism) combined with a distributed consistency component such as Zookeeper, Consul, or Etcd. The key code of an Etcd3-based implementation is briefly introduced below, using Go as the example language.

1) Name resolution implementation: resolver.go

```go
package etcdv3

import (
	"errors"
	"fmt"
	"strings"

	etcd3 "github.com/coreos/etcd/clientv3"
	"google.golang.org/grpc/naming"
)

// resolver is the implementation of grpc.naming.Resolver
type resolver struct {
	serviceName string // service name to resolve
}

// NewResolver returns a resolver for the given service name
func NewResolver(serviceName string) *resolver {
	return &resolver{serviceName: serviceName}
}

// Resolve resolves the service from etcd; target is the dial address of etcd
// target example: "http://127.0.0.1:2379,http://127.0.0.1:12379,http://127.0.0.1:22379"
func (re *resolver) Resolve(target string) (naming.Watcher, error) {
	if re.serviceName == "" {
		return nil, errors.New("grpclb: no service name provided")
	}

	// generate etcd client
	client, err := etcd3.New(etcd3.Config{
		Endpoints: strings.Split(target, ","),
	})
	if err != nil {
		return nil, fmt.Errorf("grpclb: create etcd3 client failed: %s", err.Error())
	}

	// return watcher
	return &watcher{re: re, client: *client}, nil
}
```

2) Service discovery implementation: watcher.go

```go
package etcdv3

import (
	"fmt"
	"strings"

	etcd3 "github.com/coreos/etcd/clientv3"
	"github.com/coreos/etcd/mvcc/mvccpb"
	"golang.org/x/net/context"
	"google.golang.org/grpc/naming"
)

// watcher is the implementation of grpc.naming.Watcher
type watcher struct {
	re            *resolver // re: Etcd Resolver
	client        etcd3.Client
	isInitialized bool
}

// Close does nothing
func (w *watcher) Close() {
}

// Next returns the updates
func (w *watcher) Next() ([]*naming.Update, error) {
	// prefix is the etcd key prefix to watch
	prefix := fmt.Sprintf("/%s/%s/", Prefix, w.re.serviceName)

	// on the first call, return the addresses that are already registered
	if !w.isInitialized {
		// query addresses from etcd
		resp, err := w.client.Get(context.Background(), prefix, etcd3.WithPrefix())
		w.isInitialized = true
		if err == nil {
			addrs := extractAddrs(resp)
			// if not empty, return the updates; otherwise fall through to the watch
			if l := len(addrs); l != 0 {
				updates := make([]*naming.Update, l)
				for i := range addrs {
					updates[i] = &naming.Update{Op: naming.Add, Addr: addrs[i]}
				}
				return updates, nil
			}
		}
	}

	// generate etcd Watcher
	rch := w.client.Watch(context.Background(), prefix, etcd3.WithPrefix())
	for wresp := range rch {
		for _, ev := range wresp.Events {
			switch ev.Type {
			case mvccpb.PUT:
				return []*naming.Update{{Op: naming.Add, Addr: string(ev.Kv.Value)}}, nil
			case mvccpb.DELETE:
				// a delete event carries no value, so recover the address
				// from the key, which has the form <prefix><host:port>
				addr := strings.TrimPrefix(string(ev.Kv.Key), prefix)
				return []*naming.Update{{Op: naming.Delete, Addr: addr}}, nil
			}
		}
	}
	return nil, nil
}

// extractAddrs collects the non-nil values of a Get response as addresses
func extractAddrs(resp *etcd3.GetResponse) []string {
	addrs := []string{}
	if resp == nil || resp.Kvs == nil {
		return addrs
	}
	for i := range resp.Kvs {
		if v := resp.Kvs[i].Value; v != nil {
			addrs = append(addrs, string(v))
		}
	}
	return addrs
}
```
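The watcher reports changes as a stream of `naming.Update` values carrying `Add` and `Delete` operations. As a rough illustration of how a consumer of those updates might maintain its address list, here is a minimal, self-contained sketch. The `Op`, `Update`, and `Apply` names are simplified stand-ins defined for this example; they are not the gRPC types, and this is not how the gRPC balancer is implemented.

```go
package main

// Op is a simplified stand-in for the operation carried by an update.
type Op int

const (
	Add Op = iota
	Delete
)

// Update is a simplified stand-in for one address change.
type Update struct {
	Op   Op
	Addr string
}

// Apply returns the address list obtained by applying updates in order.
// Adding an address that is already present and deleting an unknown
// address are both ignored. The input slice is not modified.
func Apply(addrs []string, updates []Update) []string {
	out := append([]string(nil), addrs...)
	for _, u := range updates {
		// find the current position of the address, if any
		idx := -1
		for i, a := range out {
			if a == u.Addr {
				idx = i
				break
			}
		}
		switch u.Op {
		case Add:
			if idx < 0 {
				out = append(out, u.Addr)
			}
		case Delete:
			if idx >= 0 {
				out = append(out[:idx], out[idx+1:]...)
			}
		}
	}
	return out
}
```

Ignoring duplicate adds matters in this design, because a registration that is refreshed by writing the same key again produces a new put event for an address the client already knows.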

3) Service registration implementation: register.go

```go
package etcdv3

import (
	"fmt"
	"log"
	"strings"
	"time"

	etcd3 "github.com/coreos/etcd/clientv3"
	"golang.org/x/net/context"
)

// Prefix should start and end with no slash
var Prefix = "etcd3_naming"

var client *etcd3.Client
var serviceKey string
var stopSignal = make(chan bool, 1)

// Register registers host:port under the service name in etcd and refreshes
// the registration every interval with a lease of ttl seconds.
// target is a comma-separated list of etcd endpoints.
func Register(name string, host string, port int, target string, interval time.Duration, ttl int) error {
	serviceValue := fmt.Sprintf("%s:%d", host, port)
	serviceKey = fmt.Sprintf("/%s/%s/%s", Prefix, name, serviceValue)

	// assign to the package-level client (no :=) so that UnRegister can use it
	var err error
	client, err = etcd3.New(etcd3.Config{
		Endpoints: strings.Split(target, ","),
	})
	if err != nil {
		return fmt.Errorf("grpclb: create etcd3 client failed: %v", err)
	}

	go func() {
		// invoke self-register with ticker
		ticker := time.NewTicker(interval)
		defer ticker.Stop()
		for {
			// grant a lease of ttl seconds; the key expires if it is not refreshed
			resp, err := client.Grant(context.TODO(), int64(ttl))
			if err != nil {
				log.Printf("grpclb: service '%s' connect to etcd3 failed: %s", name, err.Error())
			} else if _, err := client.Put(context.TODO(), serviceKey, serviceValue, etcd3.WithLease(resp.ID)); err != nil {
				// Put creates the key if it does not exist and refreshes it otherwise
				log.Printf("grpclb: set service '%s' with ttl to etcd3 failed: %s", name, err.Error())
			}

			select {
			case <-stopSignal:
				return
			case <-ticker.C:
			}
		}
	}()

	return nil
}

// UnRegister deletes the registered service from etcd
func UnRegister() error {
	stopSignal <- true
	stopSignal = make(chan bool, 1) // just a hack to avoid multi UnRegister deadlock

	_, err := client.Delete(context.Background(), serviceKey)
	if err != nil {
		log.Printf("grpclb: deregister '%s' failed: %s", serviceKey, err.Error())
	} else {
		log.Printf("grpclb: deregister '%s' ok.", serviceKey)
	}
	return err
}
```
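The registration code stores each instance under the key `/<Prefix>/<name>/<host:port>` with the address as its value, and the watcher watches the `/<Prefix>/<name>/` prefix. The following sketch makes that key layout explicit. The helper names `ServiceKey`, `ServicePrefix`, and `AddrFromKey` are hypothetical and exist only for this illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// ServicePrefix returns the etcd key prefix shared by all instances of a service.
func ServicePrefix(prefix, name string) string {
	return fmt.Sprintf("/%s/%s/", prefix, name)
}

// ServiceKey returns the etcd key under which one instance is registered.
func ServiceKey(prefix, name, host string, port int) string {
	return fmt.Sprintf("%s%s:%d", ServicePrefix(prefix, name), host, port)
}

// AddrFromKey recovers the host:port part of a key that lies under
// servicePrefix. It reports false if the key does not belong to the
// service or carries no address.
func AddrFromKey(key, servicePrefix string) (string, bool) {
	if !strings.HasPrefix(key, servicePrefix) {
		return "", false
	}
	addr := strings.TrimPrefix(key, servicePrefix)
	if addr == "" {
		return "", false
	}
	return addr, true
}
```

Keeping the address in the key as well as in the value means it can still be recovered from an event that carries only the key.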

4) Interface description file: helloworld.proto

```protobuf
syntax = "proto3";

option java_multiple_files = true;
option java_package = "com.midea.jr.test.grpc";
option java_outer_classname = "HelloWorldProto";
option objc_class_prefix = "HLW";

package helloworld;

// The greeting service definition.
service Greeter {
  // Sends a greeting
  rpc SayHello (HelloRequest) returns (HelloReply) {
  }
}

// The request message containing the user's name.
message HelloRequest {
  string name = 1;
}

// The response message containing the greetings
message HelloReply {
  string message = 1;
}
```

5) Implementing the Server Interface: helloworldserver.go

```go
package main

import (
	"flag"
	"fmt"
	"log"
	"net"
	"os"
	"os/signal"
	"syscall"
	"time"

	"golang.org/x/net/context"
	"google.golang.org/grpc"

	"com.midea/jr/grpclb/example/pb"
	grpclb "com.midea/jr/grpclb/naming/etcd/v3"
)

var (
	serv = flag.String("service", "hello_service", "service name")
	port = flag.Int("port", 50001, "listening port")
	reg  = flag.String("reg", "http://127.0.0.1:2379", "register etcd address")
)

func main() {
	flag.Parse()

	lis, err := net.Listen("tcp", fmt.Sprintf("0.0.0.0:%d", *port))
	if err != nil {
		panic(err)
	}

	// register this instance in etcd: refresh every 10s with a 15s TTL
	err = grpclb.Register(*serv, "127.0.0.1", *port, *reg, time.Second*10, 15)
	if err != nil {
		panic(err)
	}

	// deregister on shutdown signals (SIGKILL cannot be caught, so it is not listed)
	ch := make(chan os.Signal, 1)
	signal.Notify(ch, syscall.SIGTERM, syscall.SIGINT, syscall.SIGHUP, syscall.SIGQUIT)
	go func() {
		s := <-ch
		log.Printf("receive signal '%v'", s)
		grpclb.UnRegister()
		os.Exit(1)
	}()

	log.Printf("starting hello service at %d", *port)
	s := grpc.NewServer()
	pb.RegisterGreeterServer(s, &server{})
	s.Serve(lis)
}

// server is used to implement helloworld.GreeterServer.
type server struct{}

// SayHello implements helloworld.GreeterServer
func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	fmt.Printf("%v: Receive is %s\n", time.Now(), in.Name)
	return &pb.HelloReply{Message: "Hello " + in.Name}, nil
}
```

6) Implementing client interface: helloworldclient.go

```go
package main

import (
	"flag"
	"fmt"
	"strconv"
	"time"

	"golang.org/x/net/context"
	"google.golang.org/grpc"

	"com.midea/jr/grpclb/example/pb"
	grpclb "com.midea/jr/grpclb/naming/etcd/v3"
)

var (
	serv = flag.String("service", "hello_service", "service name")
	reg  = flag.String("reg", "http://127.0.0.1:2379", "register etcd address")
)

func main() {
	flag.Parse()

	// resolve the service name through etcd and balance with round-robin
	r := grpclb.NewResolver(*serv)
	b := grpc.RoundRobin(r)

	ctx, cancel := context.WithTimeout(context.Background(), 10*time.Second)
	defer cancel()
	conn, err := grpc.DialContext(ctx, *reg, grpc.WithInsecure(), grpc.WithBalancer(b))
	if err != nil {
		panic(err)
	}

	// send one request per second
	ticker := time.NewTicker(1 * time.Second)
	for t := range ticker.C {
		client := pb.NewGreeterClient(conn)
		resp, err := client.SayHello(context.Background(), &pb.HelloRequest{Name: "world " + strconv.Itoa(t.Second())})
		if err == nil {
			fmt.Printf("%v: Reply is %s\n", t, resp.Message)
		}
	}
}
```

7) Running test


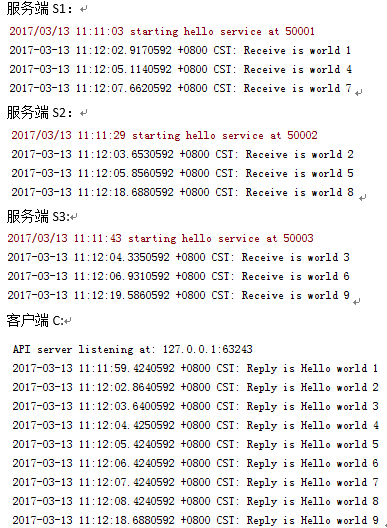

Run three servers (S1, S2, S3) and one client (C), and check whether each server receives an equal number of requests.


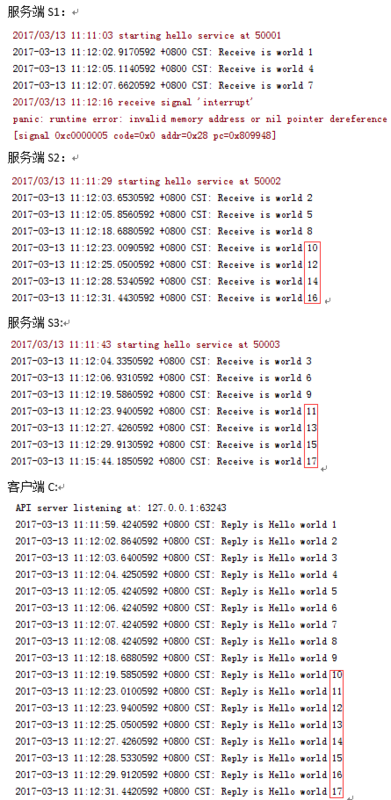

Shut down server S1 and check whether its requests are transferred to the other two servers.


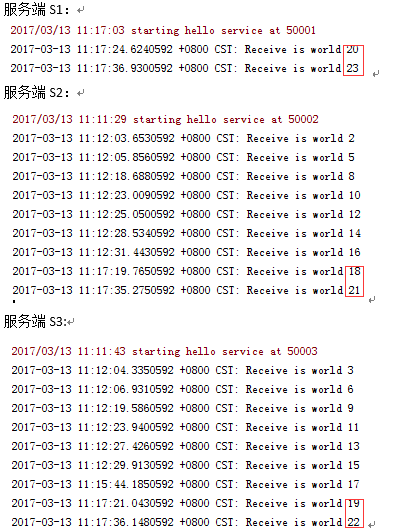

Restart server S1 and check whether requests are once again distributed evenly across all three servers.

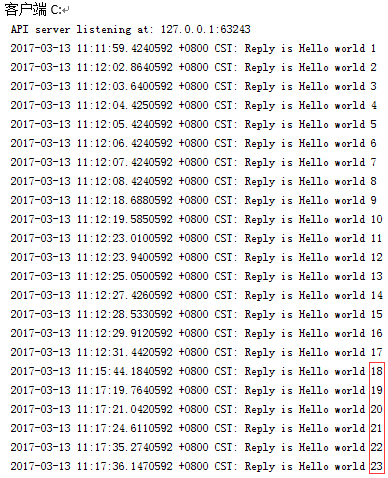

Shut down the Etcd3 server and observe whether communication between the client and the servers remains normal.

Communication remains normal after Etcd3 is shut down, but new servers cannot register and offline servers cannot be removed. After the Etcd3 server is restarted, everything automatically returns to normal.

Shut down all servers, and client requests will block.

References:

- http://www.grpc.io/docs/
- https://github.com/grpc/grpc/blob/master/doc/load-balancing.md