Context & Log Items

context

The general way to cancel a goroutine, without context: signal it through a channel

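    package main

    import (
        "fmt"
        "sync"
        "time"
    )

    var wg sync.WaitGroup

    // exit is only used by the commented-out flag-based alternative below.
    var exit bool

    // worker prints once per second until it receives a value on exitChan.
    func worker(exitChan chan struct{}) {
    LOOP:
        for {
            fmt.Printf("work\n")
            time.Sleep(time.Second)
            /* Alternative: poll a global flag instead of a channel.
            if exit {
                break
            }
            */
            select {
            case <-exitChan: // exit signal received
                break LOOP
            default: // no signal yet, keep working
            }
        }
        wg.Done()
    }

    func main() {
        exitChan := make(chan struct{}, 1)
        wg.Add(1)
        go worker(exitChan)
        time.Sleep(time.Second * 3)
        exitChan <- struct{}{} // tell the worker to exit
        //exit = true
        wg.Wait()
    }

- Scenario: cancelling a goroutine with context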

- context.WithCancel returns a cancel function; calling it closes the channel returned by ctx.Done(), which lets the goroutine exit gracefully

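    package main

    import (
        "context"
        "fmt"
        "sync"
        "time"
    )

    var wg sync.WaitGroup

    // worker prints once per second until ctx is cancelled.
    func worker(ctx context.Context) {
    LOOP:
        for {
            fmt.Printf("work\n")
            time.Sleep(time.Second)
            select {
            case <-ctx.Done(): // closed once cancel() has been called
                break LOOP
            default:
            }
        }
        wg.Done()
    }

    func main() {
        ctx := context.Background()
        ctx, cancel := context.WithCancel(ctx)
        wg.Add(1)
        go worker(ctx)
        time.Sleep(time.Second * 3)
        cancel() // cancel the goroutine
        wg.Wait()
    }

- Trace example: passing a trace code through the context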

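    package main

    import (
        "context"
        "fmt"
        "sync"
        "time"
    )

    // traceKey is a dedicated key type; the context documentation advises
    // against using plain strings as keys, to avoid collisions.
    type traceKey string

    const traceCodeKey traceKey = "TRACE_CODE"

    var wg sync.WaitGroup

    func worker(ctx context.Context) {
        // Read the trace code stored in the context, if any.
        traceCode, ok := ctx.Value(traceCodeKey).(string)
        if ok {
            fmt.Printf("traceCode=%s\n", traceCode)
        }
    LOOP:
        for {
            fmt.Printf("worker\n")
            time.Sleep(time.Millisecond)
            select {
            case <-ctx.Done(): // closed when the 50ms timeout expires
                break LOOP
            default:
            }
        }
        fmt.Printf("worker Done,trace_Code:%s\n", traceCode)
        wg.Done()
    }

    func main() {
        ctx := context.Background()
        ctx, cancel := context.WithTimeout(ctx, time.Millisecond*50)
        ctx = context.WithValue(ctx, traceCodeKey, "212334121")
        wg.Add(1)
        go worker(ctx)
        time.Sleep(time.Second * 3)
        cancel() // release the context's resources
        wg.Wait()
    }

Log Items (1)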

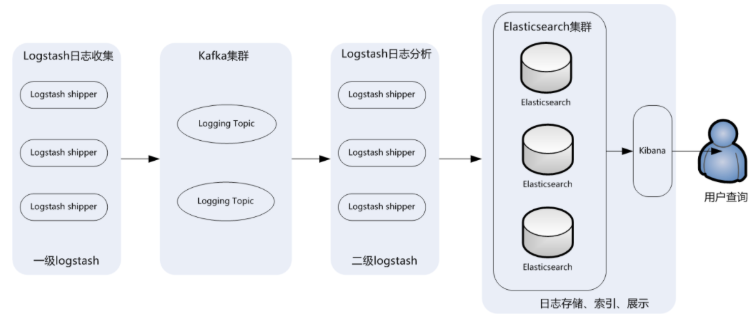

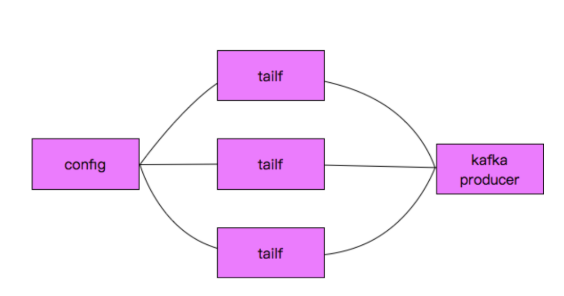

Design of Log Collection System

Project Background

- Every system produces logs, which are used to troubleshoot problems when they occur

- When the system is relatively small, logging on to the server and reading the logs there is good enough

- When the system is very large, logging on to the machines one by one to read logs is practically impossible

Solution

- Collect the logs on every machine in real time and store them in one centralized system

- Index these logs so that the relevant entries can be found by searching

- Provide log search through a friendly web interface

Challenges

- The log volume is very large: billions of entries per day, arriving in real time

- Log collection must be near real time, with the delay kept at the minute level

- The system must be able to scale horizontally

- Industry solution: ELK

- Problems with ELK:

- Operations and maintenance costs are high: every additional log source has to be configured manually

- Monitoring is lacking, so the status of Logstash cannot be obtained accurately

- Custom development and maintenance are not possible

Design of Log Collection System

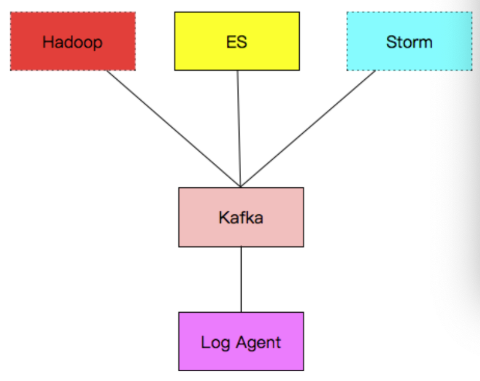

- Component introduction

- Log Agent: the log collection client, which collects the logs on each server

- Kafka: a high-throughput distributed message queue, originally developed at LinkedIn and now a top-level Apache open-source project

- ES (Elasticsearch): an open-source search engine that exposes an HTTP RESTful web interface

- Hadoop: a distributed computing framework

Application scenarios for kafka

Highly available kafka cluster deployment: https://blog.51cto.com/navyaijm/2429959?source=drh

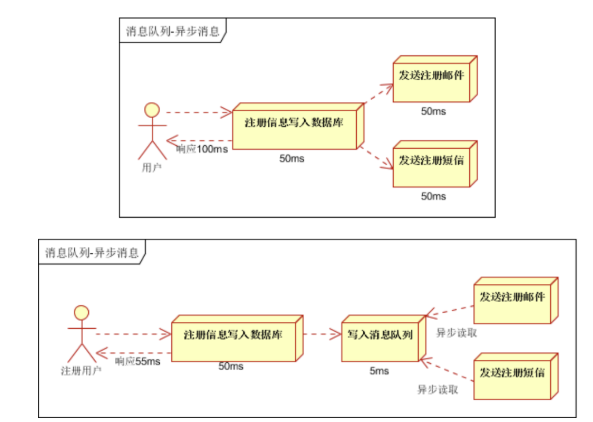

- Asynchronous processing: make non-critical steps asynchronous to improve system response time and robustness

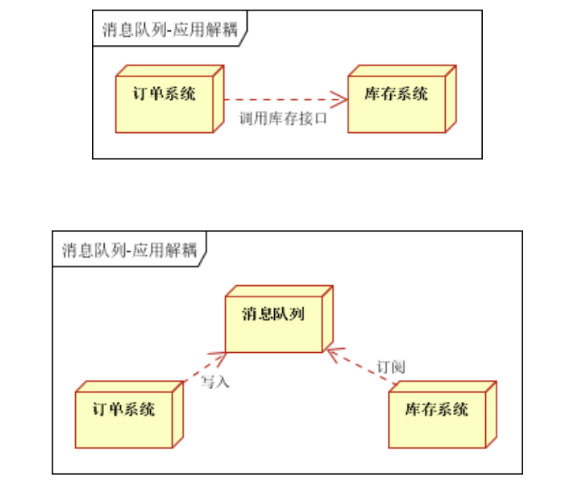

- Application decoupling

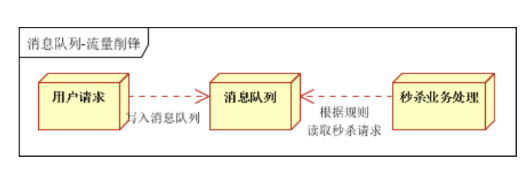

- Traffic peak shaving: absorbing bursts in high-concurrency scenarios

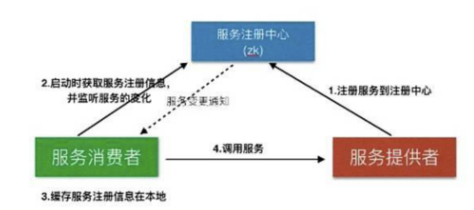
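The three scenarios above can be illustrated in-process, without a Kafka cluster. The sketch below is only an analogy: a buffered Go channel stands in for the message queue, and the names `handleRequest` and `runConsumer`, the event text, and the queue size are assumptions made for illustration, not part of Kafka or of this project. The request handler returns as soon as the event is queued (asynchronous processing), it knows nothing about who consumes the event (decoupling), and the buffer absorbs a burst while the consumer drains it at its own pace (traffic peaks).

```go
package main

import (
	"fmt"
	"sync"
)

// handleRequest does the critical work and hands the non-critical part
// (here: an event string) to the queue, returning without waiting for it.
// It reports false if the queue is full and the event had to be dropped.
func handleRequest(id int, queue chan<- string) bool {
	event := fmt.Sprintf("request %d handled", id)
	select {
	case queue <- event:
		return true
	default: // queue full: shed load instead of blocking the caller
		return false
	}
}

// runConsumer drains the queue at its own pace until the queue is closed
// and returns everything it processed.
func runConsumer(queue <-chan string) []string {
	var processed []string
	for event := range queue {
		processed = append(processed, event)
	}
	return processed
}

func main() {
	queue := make(chan string, 100) // the buffer absorbs bursts

	var wg sync.WaitGroup
	var processed []string
	wg.Add(1)
	go func() {
		defer wg.Done()
		processed = runConsumer(queue)
	}()

	// A burst of 50 requests; each call returns immediately.
	accepted := 0
	for i := 0; i < 50; i++ {
		if handleRequest(i, queue) {
			accepted++
		}
	}
	close(queue) // no more events
	wg.Wait()

	fmt.Printf("accepted=%d processed=%d\n", accepted, len(processed))
}
```

Dropping events when the buffer is full is just one possible policy chosen to keep the sketch short; a durable queue such as Kafka persists messages instead, which is part of why it suits this role.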

zookeeper application scenarios

- Service Registration and Discovery

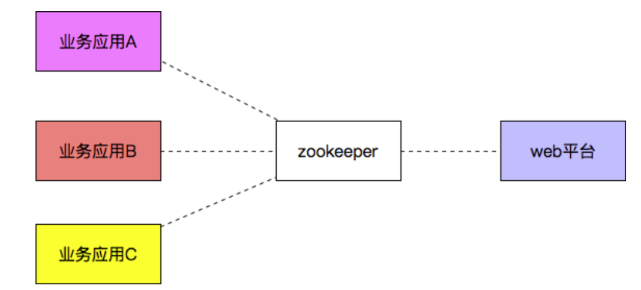

- Configuration Center

- Distributed Lock

- ZooKeeper is strongly consistent

- When multiple clients try to create the same znode on ZooKeeper at the same time, only one of them succeeds
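The "only one client succeeds" property is what makes a znode usable as a lock. The sketch below imitates it in memory so that it runs without a ZooKeeper server: `fakeZK`, its `Create` method, and `race` are hypothetical stand-ins, not the API of any ZooKeeper client library, and the path `/locks/job` is made up. Ten goroutines race to create the same node, and the one that succeeds holds the lock.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

var errNodeExists = errors.New("node already exists")

// fakeZK is an in-memory stand-in for a ZooKeeper ensemble (hypothetical).
type fakeZK struct {
	mu    sync.Mutex
	nodes map[string]string // path -> owner
}

func newFakeZK() *fakeZK {
	return &fakeZK{nodes: make(map[string]string)}
}

// Create atomically creates path for owner, or fails if it already exists.
func (z *fakeZK) Create(path, owner string) error {
	z.mu.Lock()
	defer z.mu.Unlock()
	if _, ok := z.nodes[path]; ok {
		return errNodeExists
	}
	z.nodes[path] = owner
	return nil
}

// race lets n clients try to create the same path concurrently and
// returns how many of them succeeded.
func race(z *fakeZK, path string, n int) int {
	var wg sync.WaitGroup
	var mu sync.Mutex
	winners := 0
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			if err := z.Create(path, fmt.Sprintf("client-%d", id)); err == nil {
				mu.Lock()
				winners++
				mu.Unlock()
			}
		}(i)
	}
	wg.Wait()
	return winners
}

func main() {
	z := newFakeZK()
	fmt.Println("clients that acquired the lock:", race(z, "/locks/job", 10))
}
```

Which client wins varies from run to run, but the count of winners is always one.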

Log Client Development

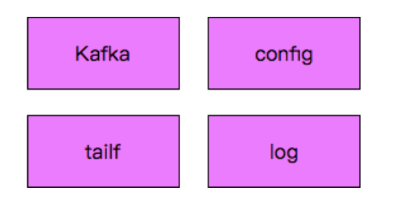

logAgent design

- logAgent workflow

Use of kafka

- Install the library: go get github.com/Shopify/sarama

- Example code

    package main

    import (
        "fmt"
        "time"

        "github.com/Shopify/sarama"
    )

    func main() {
        // Producer configuration
        config := sarama.NewConfig()
        config.Producer.RequiredAcks = sarama.WaitForAll          // wait for all in-sync replicas to acknowledge
        config.Producer.Partitioner = sarama.NewRandomPartitioner // pick a random partition for each message
        config.Producer.Return.Successes = true                   // required by the sync producer

        // Message to send
        msg := &sarama.ProducerMessage{}
        msg.Topic = "nginx_log"
        msg.Value = sarama.StringEncoder("this is a test, message transfer ok")

        // Connect to the Kafka broker
        client, err := sarama.NewSyncProducer([]string{"192.168.56.11:9092"}, config)
        if err != nil {
            fmt.Printf("create producer failed, error:%v\n", err)
            return
        }
        defer client.Close()

        // Send the same message once per second
        for {
            // pid is the partition the message was written to
            pid, offset, err := client.SendMessage(msg)
            if err != nil {
                fmt.Printf("send message failed, err:%v\n", err)
                return
            }
            fmt.Printf("pid:%v,offset:%v\n", pid, offset)
            time.Sleep(time.Second)
        }
    }

Use of tailf components

- Install the library: go get github.com/hpcloud/tail

- Example code

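    package main

    import (
        "fmt"
        "time"

        "github.com/hpcloud/tail"
    )

    func main() {
        filename := "./my.log"
        tails, err := tail.TailFile(filename, tail.Config{
            Location: &tail.SeekInfo{
                Offset: 0,
                Whence: 2, // 2 = relative to the end of the file (io.SeekEnd)
            },
            ReOpen:    true,  // reopen the file after it is rotated
            MustExist: false, // do not fail if the file does not exist yet
            Poll:      true,  // poll for changes instead of using inotify
            Follow:    true,  // keep reading as new lines are appended
        })
        if err != nil {
            fmt.Printf("tail file error:%v\n", err)
            return
        }
        for {
            msg, ok := <-tails.Lines
            if !ok {
                // The Lines channel was closed; wait briefly and try again.
                fmt.Printf("tail file close reopen,filename:%s\n", filename)
                time.Sleep(100 * time.Millisecond)
                continue
            }
            fmt.Printf("msg=%s\n", msg.Text)
        }
    }

- Reference: consuming the log topic from the Kafka command line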

    [root@centos7-node1 ~]# cd /opt/application/kafka/bin/
    [root@centos7-node1 bin]# ./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic nginx_log --from-beginning