In the previous post, Introduction to Time and Window in Flink, we saw that Flink stream processing involves three notions of time: Event Time, Ingestion Time and Processing Time.

If these three notions of time are still unclear, I suggest reading the previous post first. That post also showed that EventTime is the most meaningful time for business logic. Below, we analyze how EventTime behaves with a non-parallel Source and with a parallel Source.

The input is a log in the format below; we use it to compute the order quantity of each customer.

Log format (event timestamp in epoch milliseconds, customer name, order quantity):

1581490623000,James,5

1581490624150,John,2

...
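Both the timestamp extractor and the map step in the jobs below split each line on commas. As a standalone illustration of that parsing, here is a minimal plain-Java sketch; the class `OrderLog` and its fields are hypothetical names, not part of the original code.

```java
// Hypothetical helper (not in the original job): parses one log line of the
// form "timestamp,name,count" into its three fields.
final class OrderLog {
    final long timestamp; // event time, epoch milliseconds
    final String name;    // customer name
    final int count;      // order quantity

    OrderLog(long timestamp, String name, int count) {
        this.timestamp = timestamp;
        this.name = name;
        this.count = count;
    }

    static OrderLog parse(String line) {
        String[] fields = line.trim().split(",");
        if (fields.length != 3) {
            throw new IllegalArgumentException("Expected 3 comma-separated fields: " + line);
        }
        return new OrderLog(
                Long.parseLong(fields[0].trim()),
                fields[1].trim(),
                Integer.parseInt(fields[2].trim()));
    }

    public static void main(String[] args) {
        OrderLog log = OrderLog.parse("1581490623000,James,5");
        System.out.println(log.timestamp + " " + log.name + " " + log.count); // 1581490623000 James 5
    }
}
```

Failing loudly on a malformed line is a design choice for this sketch; in the Flink jobs below, a malformed line would likewise throw inside `extractTimestamp` or `map`.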

1. EventTime processing of real-time data

We process real-time data with EventTime in two setups: a non-parallel Source and a parallel Source.

1. Non-parallel Source

We use socketTextStream as the example. It is a non-parallel source, meaning it always runs with parallelism 1.

1.1 code

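```java
import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.datastream.WindowedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

/**
 * Non-parallel Source (socketTextStream) with EventTime
 *
 * @author liuzebiao
 * @Date 2020-2-12 15:25
 */
public class EventTimeDemo {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Use EventTime as the time characteristic
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

        // Read lines such as "1581490623000,Mary,3"; the first field is the EventTime.
        // Time.seconds(0): no out-of-orderness allowed, so watermark = largest timestamp seen.
        SingleOutputStreamOperator<String> source = env.socketTextStream("localhost", 8888)
                .assignTimestampsAndWatermarks(
                        new BoundedOutOfOrdernessTimestampExtractor<String>(Time.seconds(0)) {
                            @Override
                            public long extractTimestamp(String line) {
                                String[] split = line.split(",");
                                return Long.parseLong(split[0]);
                            }
                        });

        // Map each line to (name, count)
        SingleOutputStreamOperator<Tuple2<String, Integer>> mapOperator = source
                .map(line -> {
                    String[] split = line.split(",");
                    return Tuple2.of(split[1], Integer.parseInt(split[2]));
                })
                .returns(Types.TUPLE(Types.STRING, Types.INT));

        // Key by customer name (tuple field 0)
        KeyedStream<Tuple2<String, Integer>, Tuple> keyedStream = mapOperator.keyBy(0);

        // 5-second tumbling EventTime window
        WindowedStream<Tuple2<String, Integer>, Tuple, TimeWindow> windowedStream =
                keyedStream.window(TumblingEventTimeWindows.of(Time.seconds(5)));

        // Sum the order counts (tuple field 1) per customer and window
        SingleOutputStreamOperator<Tuple2<String, Integer>> sum = windowedStream.sum(1);
        sum.print();

        env.execute("EventTimeDemo");
    }
}
```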

1.2 test results

1.3 result analysis

Note: 1581490623000 corresponds to 2020-02-12 14:57:03 and 1581490624000 to 2020-02-12 14:57:04 (Beijing time, UTC+8).
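Such conversions can be checked with `java.time`. This sketch assumes the article's wall-clock times are Beijing time (zone `Asia/Shanghai`, UTC+8), which is what the values above correspond to; the class name `EpochToLocal` is hypothetical.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;

// Hypothetical helper: converts an epoch-millisecond timestamp to local wall-clock time.
final class EpochToLocal {
    static LocalDateTime toLocal(long epochMillis, ZoneId zone) {
        return LocalDateTime.ofInstant(Instant.ofEpochMilli(epochMillis), zone);
    }

    public static void main(String[] args) {
        ZoneId beijing = ZoneId.of("Asia/Shanghai"); // assumed zone (UTC+8)
        System.out.println(toLocal(1581490623000L, beijing)); // 2020-02-12T14:57:03
        System.out.println(toLocal(1581490624000L, beijing)); // 2020-02-12T14:57:04
        System.out.println(toLocal(1581490630800L, beijing)); // 2020-02-12T14:57:10.800
    }
}
```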

We send the following data to the socket:

1581490623000,Mary,2

1581490624000,John,3

1581490624500,Clerk,1

1581490624998,Maria,4

1581490624999,Mary,3

1581490626000,Mary,3

1581490630800,Steve,3 (2020-02-12 14:57:10.800)

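Windows include their start and exclude their end: a 5 s window covers the half-open interval [start, start + 5 s). The first window here is [14:57:00, 14:57:05), written [0,5) in seconds after 14:57:00.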
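Which window a record falls into follows from simple modulo arithmetic. The sketch below assumes windows aligned to the epoch (no offset) and non-negative timestamps; Flink's tumbling assigners compute the start with a similar modulo expression that also supports an offset. `TumblingWindowMath` is a hypothetical name.

```java
// Hypothetical sketch: tumbling window bounds for a timestamp, windows aligned to the epoch.
// Assumes non-negative timestamps; each window is the half-open interval [start, end).
final class TumblingWindowMath {
    static long windowStart(long timestamp, long sizeMillis) {
        return timestamp - (timestamp % sizeMillis);
    }

    static long windowEnd(long timestamp, long sizeMillis) {
        return windowStart(timestamp, sizeMillis) + sizeMillis; // exclusive end
    }

    public static void main(String[] args) {
        long size = 5_000L; // 5-second window
        for (long ts : new long[] {1581490623000L, 1581490624999L, 1581490625000L}) {
            System.out.println(ts + " -> [" + windowStart(ts, size) + ", " + windowEnd(ts, size) + ")");
        }
    }
}
```

Both 1581490623000 and 1581490624999 map to [1581490620000, 1581490625000), while 1581490625000 opens the next window, which is exactly the "start included, end excluded" rule.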

Analysis (record times below are milliseconds after 14:57:00; window bounds are in seconds): the tumbling window is 5 s long, and because the extractor allows 0 s of out-of-orderness, the watermark equals the largest timestamp seen so far. A window fires once the watermark reaches its last millisecond, i.e. its end minus 1 ms. After 4998 there is no output, because the watermark is still below 4999. When 4999 arrives, window [0,5) fires, and its output includes the 4999 record itself (Mary: 2 + 3 = 5). The record at 6000 belongs to the next window [5,10); a watermark of 6000 is below 9999, so nothing is output. The record at 10800 (2020-02-12 14:57:10.800) pushes the watermark past 9999, so [5,10) fires and outputs only (Mary,3); Steve's record belongs to the following window [10,15), which has not fired yet.

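Another observation: the record at 4999 belongs to Mary, yet the results for John, Clerk and Maria are output at the same moment. At first glance this looks wrong, but it is expected. A watermark is tracked per stream (per parallel subtask), not per key, so once any record pushes the watermark to 4999, the windows ending at 5 s fire for every key. Because socketTextStream is a non-parallel data stream, a single watermark drives the whole job. Next, we use the parallel KafkaSource to see how this changes.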
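The trigger behaviour analysed above can be reproduced with a small single-threaded simulation. It is a hypothetical sketch, not Flink code, and it simplifies: zero out-of-orderness (watermark = largest timestamp seen), the watermark is updated after every record (Flink emits it periodically instead), a window [start, end) fires when the watermark reaches end - 1, and late records are dropped. The point it models is that there is one watermark for the whole stream, not one per key. `SingleStreamWindowSim` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified simulation (not Flink code) of a keyed 5 s tumbling event-time window
// fed by ONE input stream with zero out-of-orderness.
final class SingleStreamWindowSim {
    static final long SIZE = 5_000L; // window size in milliseconds

    static List<String> run(String[] lines) {
        List<String> fired = new ArrayList<>();
        // window start -> (customer -> running sum); TreeMap keeps windows ordered by start
        TreeMap<Long, Map<String, Integer>> windows = new TreeMap<>();
        long watermark = Long.MIN_VALUE; // one watermark for the whole stream, not per key
        for (String line : lines) {
            String[] f = line.split(",");
            long ts = Long.parseLong(f[0]);
            long start = ts - ts % SIZE;
            if (start + SIZE - 1 <= watermark) {
                continue; // late record: its window has already fired, so drop it
            }
            windows.computeIfAbsent(start, s -> new LinkedHashMap<>())
                   .merge(f[1], Integer.parseInt(f[2]), Integer::sum);
            watermark = Math.max(watermark, ts); // assumption: updated after every record
            // Fire every window whose last millisecond (end - 1) the watermark has reached.
            while (!windows.isEmpty() && watermark >= windows.firstKey() + SIZE - 1) {
                for (Map.Entry<String, Integer> e : windows.pollFirstEntry().getValue().entrySet()) {
                    fired.add("(" + e.getKey() + "," + e.getValue() + ")");
                }
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        String[] input = {
            "1581490623000,Mary,2", "1581490624000,John,3", "1581490624500,Clerk,1",
            "1581490624998,Maria,4", "1581490624999,Mary,3", "1581490626000,Mary,3",
            "1581490630800,Steve,3"
        };
        run(input).forEach(System.out::println);
    }
}
```

Fed the seven socket records from section 1.3, `run` yields (Mary,5), (John,3), (Clerk,1) and (Maria,4) when 1581490624999 arrives, then (Mary,3) when 1581490630800 arrives. Within one firing the sketch lists keys in insertion order; Flink's print order may differ.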

2. Parallel Source

Here we use KafkaSource (FlinkKafkaConsumer), a parallel source, as the example of processing real-time data with EventTime.

2.1 code

The parallel KafkaSource EventTime example, which reads messages from the topic window_demo:

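```java
import java.util.Properties;

import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.datastream.WindowedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;
import org.apache.kafka.clients.consumer.ConsumerConfig;

/**
 * Parallel KafkaSource EventTime example (reads messages from topic window_demo)
 *
 * @author liuzebiao
 * @Date 2020-2-12 15:25
 */
public class KafkaEventTimeDemo {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // Use EventTime as the time characteristic
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

        // Kafka consumer properties
        Properties properties = new Properties();
        // Kafka broker addresses
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,
                "192.168.204.210:9092,192.168.204.211:9092,192.168.204.212:9092");
        // Consumer group ID
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "flinkDemoGroup");
        // If no offset has been committed, start consuming from the beginning
        properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        FlinkKafkaConsumer<String> kafkaSource =
                new FlinkKafkaConsumer<>("window_demo", new SimpleStringSchema(), properties);

        // Create the Kafka DataStream with addSource(); the first field of
        // "1581490623000,Mary,3" is the EventTime. Each parallel subtask assigns
        // its own timestamps and watermarks.
        SingleOutputStreamOperator<String> source = env.addSource(kafkaSource)
                .assignTimestampsAndWatermarks(
                        new BoundedOutOfOrdernessTimestampExtractor<String>(Time.seconds(0)) {
                            @Override
                            public long extractTimestamp(String line) {
                                String[] split = line.split(",");
                                return Long.parseLong(split[0]);
                            }
                        });

        // Map each line to (name, count)
        SingleOutputStreamOperator<Tuple2<String, Integer>> mapOperator = source
                .map(line -> {
                    String[] split = line.split(",");
                    return Tuple2.of(split[1], Integer.parseInt(split[2]));
                })
                .returns(Types.TUPLE(Types.STRING, Types.INT));

        // Key by customer name (tuple field 0)
        KeyedStream<Tuple2<String, Integer>, Tuple> keyedStream = mapOperator.keyBy(0);

        // 5-second tumbling EventTime window
        WindowedStream<Tuple2<String, Integer>, Tuple, TimeWindow> windowedStream =
                keyedStream.window(TumblingEventTimeWindows.of(Time.seconds(5)));

        // Sum the order counts (tuple field 1) per customer and window
        SingleOutputStreamOperator<Tuple2<String, Integer>> sum = windowedStream.sum(1);
        sum.print();

        env.execute("EventTimeDemo");
    }
}
```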

2.2 test results

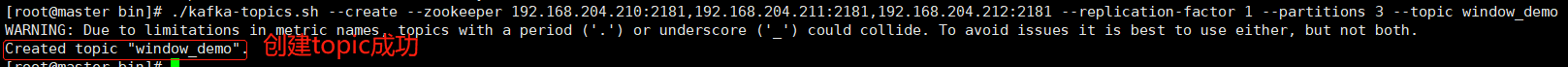

2.2.1 Create the topic with the following command:

bin/kafka-topics.sh --create --zookeeper 192.168.204.210:2181,192.168.204.211:2181,192.168.204.212:2181 --replication-factor 1 --partitions 3 --topic window_demo

(Note in particular that the topic is created with three partitions.)

2.2.2 Screenshot of the successfully created topic:

2.2.3 Write data to Kafka with the console producer:

bin/kafka-console-producer.sh --broker-list 192.168.204.210:9092 --topic window_demo

Send the following records:

1581490623000,Mary,2

1581490624000,John,3

1581490624500,Clerk,1

1581490624998,Maria,4

1581490624999,Mary,3

2.2.4 Test results:

2.3 result analysis

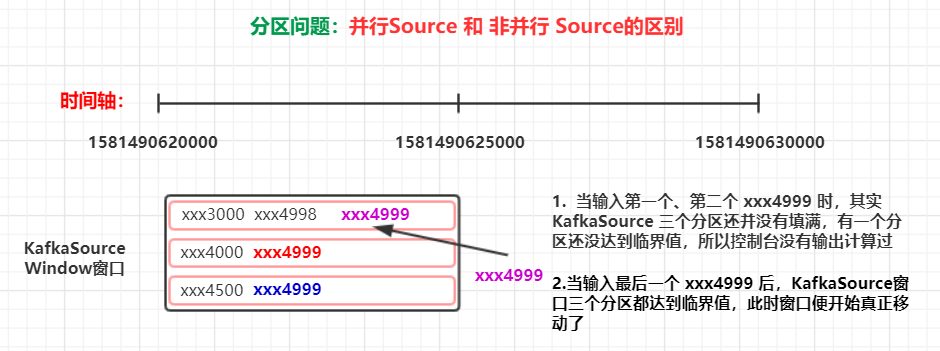

In example 1 (non-parallel Source), as soon as we entered 1581490624999,Mary,3, the console printed the window results.

With KafkaSource, however, the results were printed only after we had entered 1581490624999,Mary,3 three times in a row.

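Why? The difference comes from the parallel Source. The window_demo topic has three partitions (as shown in the figure), and each parallel source subtask assigns timestamps and watermarks based only on the records it reads. A downstream operator such as the window uses the minimum of the watermarks arriving from all of its inputs, so window [0,5) fires only after every partition has delivered a record with a timestamp of at least 1581490624999. Assuming one source subtask per partition, entering the record three times presumably placed one copy in each partition, which raised every partition's watermark and therefore the minimum.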
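The minimum-watermark rule can be modelled in a few lines. This is a hypothetical plain-Java sketch, not Flink's implementation: it assumes one source subtask per Kafka partition and zero out-of-orderness, so each partition's watermark is the largest timestamp it has seen, and the window operator's watermark is the smallest of those. Which partition each record lands in is also an assumption, since the console producer chooses partitions itself. `MinWatermarkSim` is a hypothetical name.

```java
import java.util.Arrays;

// Simplified model (not Flink code): one watermark per partition / source subtask,
// and the window operator's event time is the minimum over all of its inputs.
final class MinWatermarkSim {
    private final long[] partitionWatermarks;

    MinWatermarkSim(int partitions) {
        partitionWatermarks = new long[partitions];
        Arrays.fill(partitionWatermarks, Long.MIN_VALUE); // no data seen yet
    }

    // A record with event time ts arrives on the given partition (zero out-of-orderness).
    void onRecord(int partition, long ts) {
        partitionWatermarks[partition] = Math.max(partitionWatermarks[partition], ts);
    }

    // The window operator can only advance as far as its slowest input.
    long combinedWatermark() {
        return Arrays.stream(partitionWatermarks).min().getAsLong();
    }

    // A window [start, endExclusive) fires once the combined watermark reaches endExclusive - 1.
    boolean windowFired(long endExclusive) {
        return combinedWatermark() >= endExclusive - 1;
    }

    public static void main(String[] args) {
        MinWatermarkSim sim = new MinWatermarkSim(3);
        long end = 1581490625000L; // end of window [14:57:00, 14:57:05), Beijing time
        sim.onRecord(0, 1581490624999L); // first copy of "1581490624999,Mary,3"
        System.out.println("fired = " + sim.windowFired(end)); // fired = false
        sim.onRecord(1, 1581490624999L); // assumed to land in partition 1
        sim.onRecord(2, 1581490624999L); // assumed to land in partition 2
        System.out.println("fired = " + sim.windowFired(end)); // fired = true
    }
}
```

After one copy, the combined watermark is still `Long.MIN_VALUE` because two partitions have seen nothing; only when all three partitions have reached 1581490624999 does the window fire. By the same rule, a partition that never receives data would keep the window from firing at all.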

That concludes this look at how Flink uses EventTime to process real-time data with non-parallel and parallel Sources.
