Setting up a private image registry

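- Pull the registry image and edit the daemon configuration file

[root@docker ~]# docker pull registry

[root@docker ~]# vim /etc/docker/daemon.json

{

"insecure-registries": ["192.168.100.21:5000"],

"registry-mirrors": ["https://xxxxx.mirror.aliyuncs.com"]

}

#insecure-registries is the added line: the local host IP and the registry port. registry-mirrors is your own Alibaba Cloud image accelerator address. JSON does not allow comments, so keep these notes out of the file itself.

[root@docker ~]# systemctl restart docker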

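- Set the same options on the Docker engine host. The address must match the one used in the tag, push and pull commands (192.168.112.100:5000 in the rest of this walkthrough).

[root@docker ~]# vim /etc/docker/daemon.json

{

"insecure-registries": ["192.168.112.100:5000"],

"registry-mirrors": ["https://483txl0m.mirror.aliyuncs.com"]

}

[root@docker ~]# systemctl restart docker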

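- Start the registry container. The host directory /data/registry is created automatically and mounted at /tmp/registry in the container. Note that registry 2.x keeps its image data under /var/lib/registry, so mount that path instead if the pushed images must persist on the host.

[root@docker ~]# docker run -d -p 5000:5000 -v /data/registry:/tmp/registry registry

2792c5e1151a50f6a1131ac3b687f5f4314fe85d9f6f7ba2c82f96a964135dc4

#Start the registry and mount the directory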

[root@docker ~]# docker tag nginx:v1 192.168.112.100:5000/nginx

#Tag the local image with the private registry address

[root@docker ~]# docker push 192.168.112.100:5000/nginx

#Push the image to the private registry

Using default tag: latest

The push refers to repository [192.168.112.100:5000/nginx]

[root@docker ~]# curl -XGET http://192.168.112.100:5000/v2/_catalog

#List the repositories stored in the registry

{"repositories":["nginx"]}
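The catalog response can also be processed in a script. The following is a minimal sketch, not part of the original walkthrough: `catalog_repos` and `tags_url` are hypothetical helper names, the parsing is plain text processing that only handles the simple single-line response shown above, and `/v2/<name>/tags/list` is the tag listing endpoint of the Registry HTTP API V2.

```shell
#!/bin/sh
# Hypothetical helper: print one repository name per line from a
# /v2/_catalog response read on stdin. Assumes the whole response is on
# a single line, as in the curl output above.
catalog_repos() {
    sed -e 's/.*"repositories": *\[\([^]]*\)].*/\1/' |
        tr ',' '\n' |
        tr -d '" ' |
        sed '/^$/d'
}

# Hypothetical helper: build the tag-list URL for one repository.
tags_url() {
    printf 'http://%s/v2/%s/tags/list\n' "$1" "$2"
}

# Offline demonstration with the response shown above.
echo '{"repositories":["nginx"]}' | catalog_repos
tags_url 192.168.112.100:5000 nginx
```

Against a live registry, the same helper could be fed from `curl -s http://192.168.112.100:5000/v2/_catalog`, and the printed URL could be queried with curl to see which tags exist for each repository.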

[root@docker ~]# docker rmi 192.168.112.100:5000/nginx:latest

#Delete the local copy of the image to test pulling it back

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx v1 8ac85260ea00 2 hours ago 205MB

centos 7 8652b9f0cb4c 9 months ago 204MB

[root@docker ~]# docker pull 192.168.112.100:5000/nginx:latest

#Pull the image from the private registry

latest: Pulling from nginx

Digest: sha256:ccb90b53ff0e11fe0ab9f5347c4442d252de59f900c7252660ff90b6891c3be4

Status: Downloaded newer image for 192.168.112.100:5000/nginx:latest

192.168.112.100:5000/nginx:latest

[root@docker ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.112.100:5000/nginx latest 8ac85260ea00 2 hours ago 205MB

nginx v1 8ac85260ea00 2 hours ago 205MB

centos 7 8652b9f0cb4c 9 months ago 204MB

cgroup resource allocation

Docker uses cgroups to control the resource quotas of containers, including CPU, memory and disk, which covers the common cases of resource quota and usage control.

Cgroup is short for Control Groups. It is a mechanism provided by the Linux kernel that can limit, account for and isolate the physical resources (such as CPU, memory and disk IO) used by groups of processes.

These resource management functions are called cgroup subsystems. The following subsystems are available:

blkio: sets input/output limits for each block device, such as disks, optical discs and USB devices

cpu: uses the scheduler to give cgroup tasks access to the CPU

cpuacct: generates CPU resource reports for cgroup tasks

cpuset: on multi-core systems, assigns individual CPUs and memory nodes to cgroup tasks

devices: allows or denies cgroup tasks access to devices

freezer: suspends and resumes cgroup tasks

memory: sets the memory limit of each cgroup and generates memory resource reports

net_cls: tags network packets so that they can be associated with a cgroup

ns: namespace subsystem
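To see which subsystems the running kernel actually provides, the table in `/proc/cgroups` can be filtered. This is a small sketch under the assumption that the file has the usual four columns (subsystem name, hierarchy, number of cgroups, enabled); `list_subsystems` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read a /proc/cgroups style table on stdin and
# print the names of the subsystems whose "enabled" column is 1.
list_subsystems() {
    awk '!/^#/ && $4 == 1 { print $1 }'
}

# Only read the real file when it exists (Linux hosts).
if [ -r /proc/cgroups ]; then
    list_subsystems < /proc/cgroups
fi
```

The set of names printed depends on the kernel configuration, so it will not necessarily match the list above exactly.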

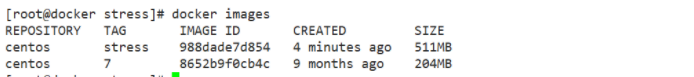

- Use a Dockerfile to create a CentOS-based image that contains the stress tool.

mkdir /opt/stress

vim /opt/stress/Dockerfile

FROM centos:7

RUN yum install -y wget

RUN wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

RUN yum install -y stress

cd /opt/stress

docker build -t centos:stress .

- Create a container with the following command. The --cpu-shares value does not guarantee the container one vCPU or any particular number of GHz of CPU; it is only a relative, elastic weight.

docker run -itd --cpu-shares 100 centos:stress

Cgroups enforce the share weights only when CPU resources are scarce, that is, when containers actually compete for the CPU. The amount of CPU a container receives therefore cannot be determined from its --cpu-shares value alone. It depends on the shares of the other containers running at the same time and on what the processes in the container are doing.

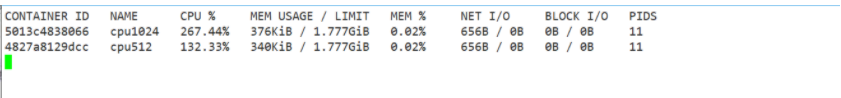

You can set the relative CPU priority of containers with --cpu-shares. For example, start two containers that both load the CPU and compare the percentage of CPU each one uses.

docker run -tid --name cpu512 --cpu-shares 512 centos:stress stress -c 10

#The container spawns 10 CPU worker processes

#Start a second container for comparison

docker run -tid --name cpu1024 --cpu-shares 1024 centos:stress stress -c 10

docker stats

#View resource usage
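As a rough check of what docker stats should show, the expected split can be computed from the two weights. This sketch assumes the two containers are the only CPU consumers and that every core is saturated; `share_percent` is a hypothetical helper, not a Docker command.

```shell
#!/bin/sh
# Hypothetical helper: percentage of contended CPU time a container can
# expect, given its own --cpu-shares value and the sum of the shares of
# all containers competing for the CPU.
share_percent() {
    LC_ALL=C awk -v mine="$1" -v total="$2" \
        'BEGIN { printf "%.1f\n", 100 * mine / total }'
}

total=$((512 + 1024))
echo "cpu512:  $(share_percent 512 "$total")%"
echo "cpu1024: $(share_percent 1024 "$total")%"
```

The 1:2 ratio is the point here, not the absolute numbers: on a host with idle cores neither container is throttled and the observed percentages can be much closer together.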

- CPU period limit

Docker provides two parameters, --cpu-period and --cpu-quota, to control the CPU time that can be allocated to a container. --cpu-period specifies how often (in microseconds) the container's CPU allocation is renewed, and --cpu-quota specifies the maximum CPU time the container may use within each period.

cat /sys/fs/cgroup/cpu/docker/<container ID>/cpu.cfs_quota_us

How the host provides resources to, and controls, the applications in a Docker container:

Physical CPU --> vCPU --> appears as processes in the workstation environment (the Docker environment) --> Docker presents these as containers --> the vCPU treats each container as a process --> applications in a container need the support of service processes --> the CPU of the host kernel can be managed by cgroup (by allocating resources) --> cgroup in the Linux kernel can therefore control and manage the applications in Docker containers

- Limiting the CPU of a container

[root@docker cpu]# docker run -itd --name centos_quota --cpu-period 100000 --cpu-quota 200000 centos:stress

[root@docker cpu]# docker inspect centos_quota
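The effective ceiling is the ratio of quota to period. The sketch below works that out for the values used above; `cpu_limit` is a hypothetical helper, and treating a quota of zero or less as "no limit" is an assumption based on how Docker leaves the quota unset by default.

```shell
#!/bin/sh
# Hypothetical helper: number of CPUs' worth of time a container may use,
# computed as --cpu-quota divided by --cpu-period (both in microseconds).
cpu_limit() {
    period=$1
    quota=$2
    if [ "$quota" -le 0 ]; then
        # Assumption: a quota of 0 or -1 means no CFS limit is applied.
        echo "unlimited"
    else
        LC_ALL=C awk -v q="$quota" -v p="$period" \
            'BEGIN { printf "%.2f\n", q / p }'
    fi
}

cpu_limit 100000 200000   # the centos_quota example: two full CPUs
cpu_limit 100000 50000    # half of one CPU
cpu_limit 100000 -1
```

With a period of 100000 and a quota of 200000, the container may consume up to 200 ms of CPU time in every 100 ms window, which is only reachable on a host with at least two cores.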

- CPU core control

On servers with multi-core CPUs, Docker can also control which CPU cores a container may use through the --cpuset-cpus parameter. This is particularly useful for tuning containers that run high-performance computing workloads.

docker run -itd --name cpu1 --cpuset-cpus 0-1 centos:stress

Running the above command requires a host with at least two cores. The container it creates can use only cores 0 and 1. The CPU core configuration in the resulting cgroup is as follows:

cat /sys/fs/cgroup/cpuset/docker/1600f3d735a849583d22043507e999e69a5b240f26b02273322e4b229c9081a7/cpuset.cpus

0-1

The following command shows which CPU cores the processes in the container are bound to.

[root@localhost stress]# docker exec <container ID> taskset -c -p 1

#Shows the CPU affinity of the first process in the container (PID 1)
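The value in cpuset.cpus uses the same list syntax as --cpuset-cpus: comma-separated core numbers and ranges. The following sketch expands such a list into individual core numbers; `expand_cpuset` is a hypothetical helper and does no validation beyond skipping empty entries.

```shell
#!/bin/sh
# Hypothetical helper: expand a cpuset list such as "0-1,3" into one
# core number per line.
expand_cpuset() {
    echo "$1" | tr ',' '\n' | while IFS=- read -r first last; do
        [ -n "$first" ] || continue      # skip empty entries
        [ -n "$last" ] || last=$first    # single core, not a range
        i=$first
        while [ "$i" -le "$last" ]; do
            echo "$i"
            i=$((i + 1))
        done
    done
}

expand_cpuset 0-1
```

For the cpu1 container this prints 0 and 1, the two cores its processes are allowed to run on.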

Summary:

How containers restrict resources:

1. Specify resource limits directly with parameters when creating the container

2. After creating the container, adjust its resource allocation by modifying the container's resource control files under /sys/fs/cgroup/ on the host
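The second approach can be sketched as follows. The path layout is an assumption: it follows the /sys/fs/cgroup/<subsystem>/docker/<container ID>/ pattern used elsewhere in this article (cgroup v1), and hosts configured differently may place the files elsewhere. `cgroup_file` is a hypothetical helper, and the sketch only prints the write it would perform rather than changing anything.

```shell
#!/bin/sh
# Hypothetical helper: path of a container's cgroup control file, assuming
# the /sys/fs/cgroup/<subsystem>/docker/<container ID>/ layout.
cgroup_file() {
    printf '/sys/fs/cgroup/%s/docker/%s/%s\n' "$1" "$2" "$3"
}

# Placeholder: substitute the full ID reported by docker inspect.
CONTAINER_ID="<full container ID>"

quota_file=$(cgroup_file cpu "$CONTAINER_ID" cpu.cfs_quota_us)

# Dry run: with the default period of 100000 us, a quota of 50000 would
# cap the running container at half of one CPU.
echo "would run: echo 50000 > $quota_file"
```

Writing to the file requires root on the host, and a change made this way applies only to the running container.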

Combining the CPU quota control parameters

Use the --cpuset-cpus parameter to specify that container A uses only CPU core 0 and container B uses only CPU core 1.

If these two containers are the only ones on the host using those cores, each one gets all the resources of its core and --cpu-shares has no visible effect.

--cpuset-cpus, --cpuset-mems

These parameters are only effective on servers with multiple cores and multiple memory nodes, and they must match the actual physical configuration; otherwise the resource control has no effect.

On a system with multiple CPU cores, pin the containers to the same core with --cpuset-cpus so that the effect of --cpu-shares is easy to test.

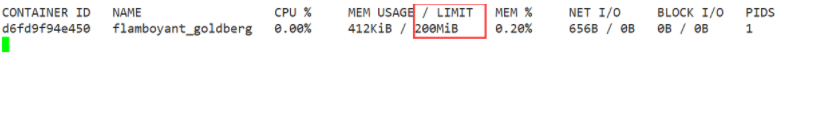

- Memory limit

Like an operating system, the memory available to a container consists of two parts: physical memory and swap.

Docker controls the memory usage of the container through the following two parameters.

-m or --memory: sets the limit on memory usage, for example 100M or 1024M.

--memory-swap: sets the limit on memory + swap combined.

The following command allows the container to use up to 200M of memory and up to 300M of memory and swap combined, that is, at most 100M of swap.

docker run -itd --name memory1 -m 200M --memory-swap=300M centos:stress

#By default, a container can use all free memory on the host.

#As with the CPU cgroup configuration, Docker automatically creates the corresponding cgroup configuration files under /sys/fs/cgroup/memory/docker/<full container ID>/

docker stats
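Because --memory-swap is the total of memory and swap, the swap a container may actually use is the difference between the two values. A minimal sketch of that arithmetic follows; `to_mib` and `swap_allowance` are hypothetical helpers that understand only the M and G suffixes.

```shell
#!/bin/sh
# Hypothetical helper: convert a size such as 200M or 1G to MiB.
to_mib() {
    case $1 in
        *[gG]) echo $(( ${1%?} * 1024 )) ;;
        *[mM]) echo "${1%?}" ;;
        *) echo "unsupported size: $1" >&2; return 1 ;;
    esac
}

# Hypothetical helper: swap available to a container, given the values
# passed to -m and --memory-swap (the latter is memory + swap).
swap_allowance() {
    mem=$(to_mib "$1") || return 1
    total=$(to_mib "$2") || return 1
    echo "$(( total - mem ))M"
}

swap_allowance 200M 300M   # the memory1 example
```

For the memory1 container this gives 100M of swap on top of the 200M of memory.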

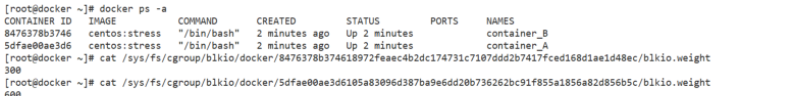

- Block IO limits

By default, all containers have equal priority when reading from and writing to disk. You can change a container's block IO priority with the --blkio-weight parameter. Like --cpu-shares, --blkio-weight sets a relative weight; the default is 500.

In the following example, container A gets twice the disk read/write bandwidth of container B.

docker run -it --name container_A --blkio-weight 600 centos:stress

docker run -it --name container_B --blkio-weight 300 centos:stress

- bps and iops limits

bps is bytes per second, the amount of data read or written per second.

iops is IO operations per second, the number of IO operations performed per second. The bps and iops of a container can be controlled with the following parameters:

--device-read-bps: limits the rate (bps) of reading from a device

--device-write-bps: limits the rate (bps) of writing to a device

--device-read-iops: limits the iops of reading from a device

--device-write-iops: limits the iops of writing to a device

The following example limits the rate at which the container writes to /dev/sda to 5 MB/s.

Test the write speed to disk in the container with the dd command. Because the container's file system is on the host's /dev/sda, writing to a file in the container is equivalent to writing to /dev/sda on the host. In addition, oflag=direct makes dd write the file in direct IO mode, which is required for --device-write-bps to take effect.

The test was run with a limit of 5 MB/s, with a limit of 10 MB/s, and with no limit. The results are as follows:
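The exact commands behind this test are not shown here. The sketch below prints one plausible pair, labeled as an assumption, and estimates how long the dd run should take under each limit. `expected_seconds` is a hypothetical helper, and the file name and the 100 MB size are illustrative choices.

```shell
#!/bin/sh
# Hypothetical helper: seconds needed to write size_mb megabytes at a
# sustained rate of rate_mb megabytes per second.
expected_seconds() {
    LC_ALL=C awk -v size="$1" -v rate="$2" \
        'BEGIN { printf "%.1f\n", size / rate }'
}

# Assumed commands (dry run, printed only): start a container with a
# 5 MB/s write limit on /dev/sda, then write 100 MB in direct IO mode.
echo "docker run -it --device-write-bps /dev/sda:5MB centos:stress"
echo "dd if=/dev/zero of=test.out bs=1M count=100 oflag=direct"

echo "expected at 5 MB/s:  $(expected_seconds 100 5) s"
echo "expected at 10 MB/s: $(expected_seconds 100 10) s"
```

If the limit is in effect, the throughput that dd reports should sit close to the configured rate, and without a limit the write should finish far sooner than either estimate.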

- Specify resource limits when building images

--build-arg=[]: set variables at image build time

--cpu-shares: set the CPU usage weight

--cpu-period: limit the CPU CFS period

--cpu-quota: limit the CPU CFS quota

--cpuset-cpus: specify the CPU IDs to use

--cpuset-mems: specify the memory node IDs to use

--disable-content-trust: skip verification; enabled by default

-f: specify the path of the Dockerfile to use

--force-rm: always remove intermediate containers during the build

--isolation: container isolation technology to use

--label=[]: set metadata for the image

-m: set the maximum memory

--memory-swap: set the maximum for memory + swap; "-1" means unlimited swap

--no-cache: do not use the cache when building the image

--pull: always try to pull a newer version of the image

--quiet, -q: quiet mode; print only the image ID on success

--rm: remove intermediate containers after a successful build

--shm-size: set the size of /dev/shm; the default is 64M

--ulimit: ulimit options

--squash: squash all the layers produced by the Dockerfile into a single layer

--tag, -t: name and tag of the image, usually in name:tag or name format; multiple tags can be set for one image in a single build

--network: default is "default"; set the network mode for RUN instructions during the build