Deployment architecture:

I. Installing Docker

Only Docker 18.06 is supported at this time.

1.1. Add the repository:

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.2. List the available package versions:

# yum list docker-ce.x86_64 --showduplicates | sort -r

1.3. Install Docker:

# yum -y install docker-ce-18.06.0.ce-3.el7

This version of Docker must be installed on all three servers.
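Since only Docker 18.06 is supported here, it can help to check the installed version on each server before continuing. The following is a minimal sketch; the function name `docker_version_ok` is made up for illustration, and it only compares the prefix of the version string.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given Docker version string
# belongs to the 18.06 series required by this guide.
docker_version_ok() {
    case "$1" in
        18.06.*) return 0 ;;
        *)       return 1 ;;
    esac
}

# Usage on a server where Docker is installed and running:
#   docker_version_ok "$(docker version --format '{{.Server.Version}}')" \
#       || echo "unsupported Docker version" >&2
```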

II. Installing kubelet, kubeadm, and kubectl

kubelet runs on every node in the cluster and is responsible for starting pods and containers.

kubeadm is used to initialize the cluster.

kubectl is the command-line tool used to manage the cluster.

2.1. Add the Aliyun repository (the official repositories hosted abroad may be unreachable):

# vim /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
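If the same file has to be created on all three servers, writing it from a script avoids editing it by hand each time. This is a sketch only: `write_kubernetes_repo` is a hypothetical name, and the content is the repository definition from step 2.1.

```shell
#!/bin/sh
# Write the repository definition from step 2.1 to the given path.
# The default path is the one used in this guide; pass another path to test.
write_kubernetes_repo() {
    target="${1:-/etc/yum.repos.d/kubernetes.repo}"
    cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
EOF
}

# Usage (as root): write_kubernetes_repo
```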

2.2. Install kubelet, kubeadm, and kubectl:

# yum install -y kubelet kubeadm kubectl

Enable kubelet:

# systemctl enable kubelet.service

Disable swap:

# swapoff -a
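Note that swapoff -a only disables swap until the next reboot. To keep it off, the swap entries in /etc/fstab can be commented out as well. The sketch below assumes a conventional fstab layout in which swap entries contain the word "swap" as a separate field; `comment_out_swap` is a hypothetical helper name.

```shell
#!/bin/sh
# Comment out every active swap entry in an fstab-style file so that swap
# stays off after a reboot. A backup is kept next to the file as <file>.bak.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root): swapoff -a && comment_out_swap
```

Review the resulting file before rebooting; the .bak copy allows the change to be reverted.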

2.3. Create a cluster using kubeadm

2.3.1. Initialize master

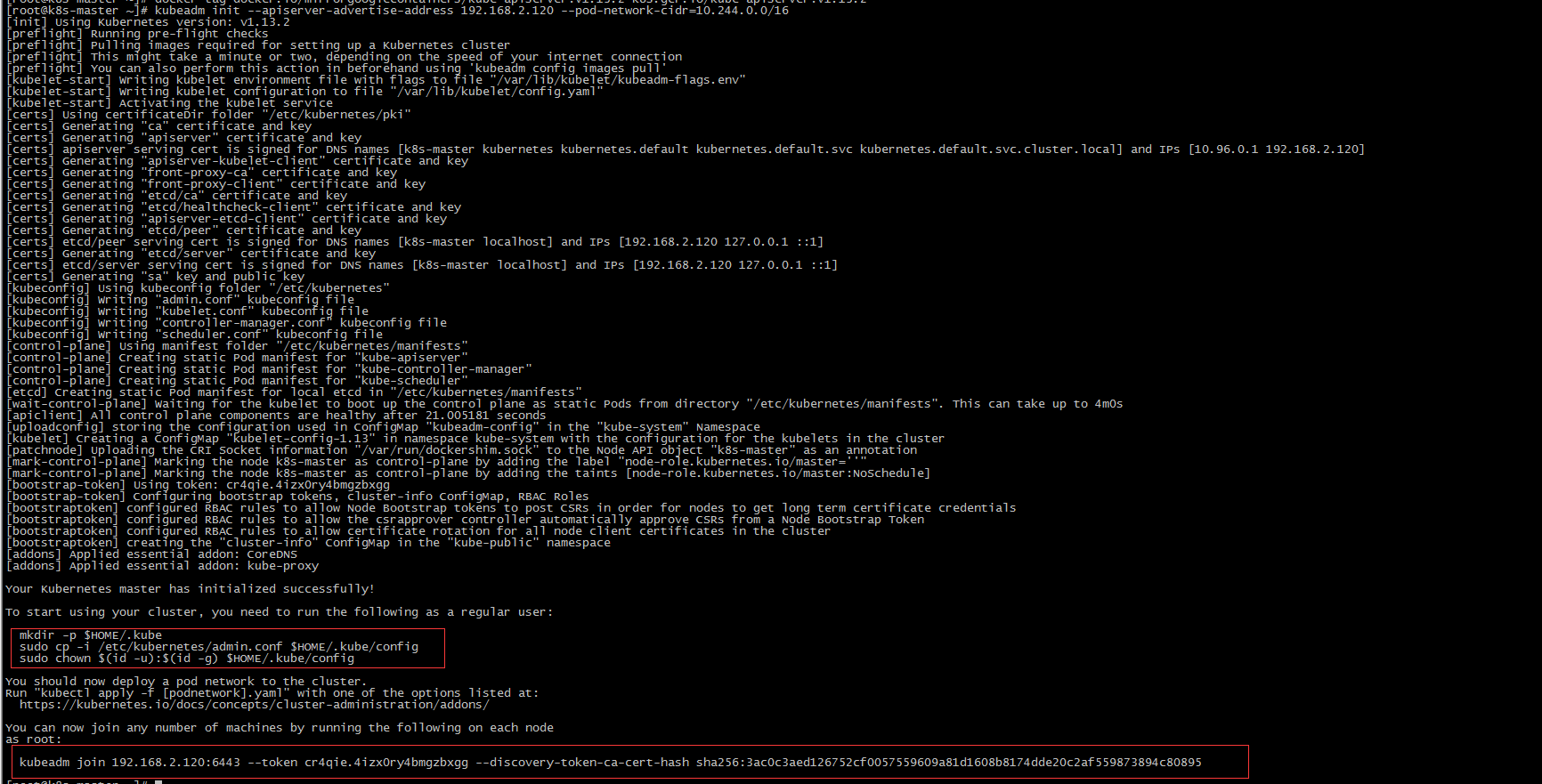

kubeadm init --apiserver-advertise-address 192.168.2.120 --pod-network-cidr=10.244.0.0/16

Parameter description:

--apiserver-advertise-address string
The IP address the API Server will advertise it's listening on. Specify '0.0.0.0' to use the address of the default network interface.

--pod-network-cidr string
Specify range of IP addresses for the pod network. If set, the control plane will automatically allocate CIDRs for every node.
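To make the per-node CIDR allocation concrete, the arithmetic can be sketched as follows. It assumes each node receives a /24 subnet, which is the usual default of the controller manager's --node-cidr-mask-size option and is not stated in this guide; the function names are made up for illustration.

```shell
#!/bin/sh
# Number of per-node subnets that fit in a pod network.
# $1 = prefix length of the pod network (16 for 10.244.0.0/16)
# $2 = prefix length of each node subnet (assumed default: 24)
node_subnet_count() {
    echo $(( 1 << (${2:-24} - $1) ))
}

# Number of addresses inside one node subnet of the given prefix length.
addresses_per_subnet() {
    echo $(( 1 << (32 - ${1:-24}) ))
}

node_subnet_count 16 24      # prints 256
addresses_per_subnet 24      # prints 256
```

Under that assumption, 10.244.0.0/16 leaves room for 256 node subnets of 256 addresses each, far more than the three servers used here.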

The command reports the following errors:

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address 192.168.2.120 --pod-network-cidr=10.244.0.0/16
[init] Using Kubernetes version: v1.13.2
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.13.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.13.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.13.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.13.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.2.24: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.2.6: output: Error response from daemon: Get https://k8s.gcr.io/v2/: dial tcp 64.233.188.82:443: connect: connection timed out, error: exit status 1
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
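When the preflight output is long, the list of images that could not be pulled can be extracted from a saved copy of it. This is a rough sketch that relies on the "failed to pull image" wording shown in the output; `failed_images` and the log file name in the usage line are hypothetical.

```shell
#!/bin/sh
# Read kubeadm preflight output on stdin and print each image that
# failed to pull, one per line.
failed_images() {
    grep -o 'failed to pull image [^ ]*' | awk '{ print $5 }' | sed 's/:$//'
}

# Usage, assuming the output was saved to a file named kubeadm-init.log:
#   failed_images < kubeadm-init.log
```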

Solution:

Docker Hub (docker.io) hosts mirrors of Google's container images. Pull the required images with the following commands:

docker pull mirrorgooglecontainers/kube-apiserver:v1.13.2
docker pull mirrorgooglecontainers/kube-controller-manager:v1.13.2
docker pull mirrorgooglecontainers/kube-scheduler:v1.13.2
docker pull mirrorgooglecontainers/kube-proxy:v1.13.2
docker pull mirrorgooglecontainers/pause:3.1
docker pull mirrorgooglecontainers/etcd:3.2.24
docker pull coredns/coredns:1.2.6

Tag the images with the k8s.gcr.io names that kubeadm expects:

docker tag docker.io/mirrorgooglecontainers/kube-apiserver:v1.13.2 k8s.gcr.io/kube-apiserver:v1.13.2
docker tag docker.io/mirrorgooglecontainers/kube-controller-manager:v1.13.2 k8s.gcr.io/kube-controller-manager:v1.13.2
docker tag docker.io/mirrorgooglecontainers/kube-scheduler:v1.13.2 k8s.gcr.io/kube-scheduler:v1.13.2
docker tag docker.io/mirrorgooglecontainers/kube-proxy:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2
docker tag docker.io/mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
docker tag docker.io/mirrorgooglecontainers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24
docker tag docker.io/coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
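The pull and tag commands follow one pattern, so they can also be generated in a loop. The sketch below only prints the commands so they can be reviewed first; `print_mirror_commands` and `K8S_VERSION` are names chosen for illustration, and the image versions are the ones listed above.

```shell
#!/bin/sh
# Print (not run) the pull and tag commands for the images this guide needs.
K8S_VERSION="v1.13.2"

print_mirror_commands() {
    for image in \
        "kube-apiserver:${K8S_VERSION}" \
        "kube-controller-manager:${K8S_VERSION}" \
        "kube-scheduler:${K8S_VERSION}" \
        "kube-proxy:${K8S_VERSION}" \
        "pause:3.1" \
        "etcd:3.2.24"
    do
        echo "docker pull mirrorgooglecontainers/${image}"
        echo "docker tag docker.io/mirrorgooglecontainers/${image} k8s.gcr.io/${image}"
    done
    # CoreDNS is pulled from its own Docker Hub organisation.
    echo "docker pull coredns/coredns:1.2.6"
    echo "docker tag docker.io/coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6"
}

# Usage: review the output, then execute it with
#   print_mirror_commands | sh
```

Printing first and piping to sh afterwards keeps the helper safe to run on a machine without Docker.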

Reinitialize by running the kubeadm init command from 2.3.1 again.

2.4. Configure kubectl

2.4.1. On the master, switch to the user ckl and run:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
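A quick way to confirm that these commands had the intended effect is to check that the copied file exists, is not empty, and belongs to the current user. This is a minimal sketch; `kubeconfig_ready` is a hypothetical helper, not part of kubectl.

```shell
#!/bin/sh
# Succeed if the kubeconfig exists, is non-empty, and is owned by the
# current user, which is what the copy and chown steps are meant to achieve.
kubeconfig_ready() {
    config="${1:-$HOME/.kube/config}"
    [ -s "$config" ] && [ -O "$config" ]
}

# Usage: kubeconfig_ready && echo "kubeconfig is in place"
```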

2.4.2. Add flannel network

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

2.5. Join the worker nodes to the cluster (execute on both node1 and node2)

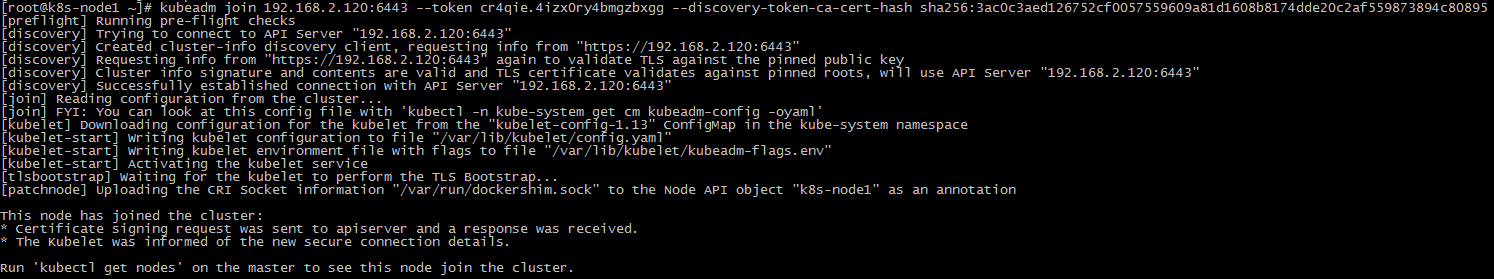

2.5.1. Execute on node1:

# kubeadm join 192.168.2.120:6443 --token cr4qie.4izx0ry4bmgzbxgg --discovery-token-ca-cert-hash sha256:3ac0c3aed126752cf0057559609a81d1608b8174dde20c2af559873894c80895

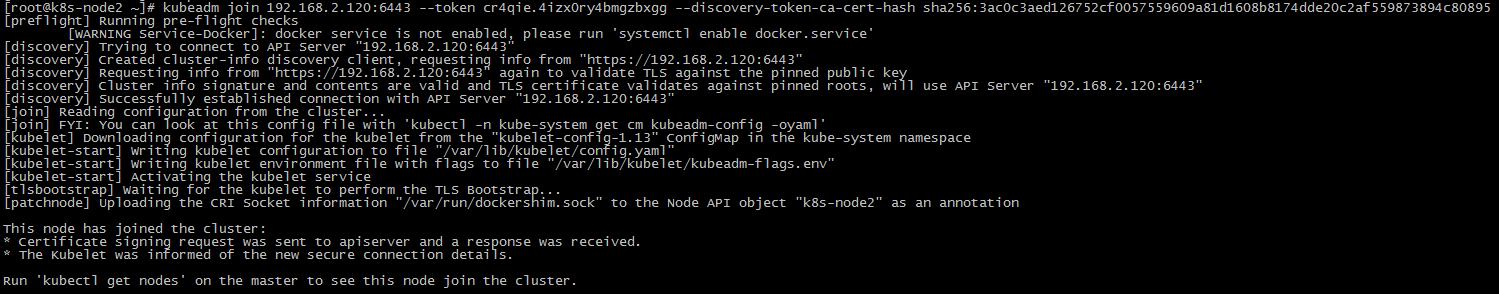

2.5.2. Execute on node2:

# kubeadm join 192.168.2.120:6443 --token cr4qie.4izx0ry4bmgzbxgg --discovery-token-ca-cert-hash sha256:3ac0c3aed126752cf0057559609a81d1608b8174dde20c2af559873894c80895

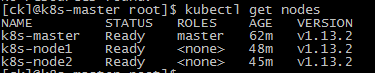

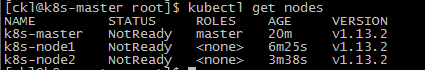
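Both nodes use the same join command, which is built from the API server endpoint, a bootstrap token, and the CA certificate hash. The sketch below assembles such a command and rejects tokens that do not have the six-characters, dot, sixteen-characters shape; the function names are made up for illustration.

```shell
#!/bin/sh
# Succeed if the argument looks like a kubeadm bootstrap token:
# six characters, a dot, then sixteen characters, all in [a-z0-9].
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Print the join command for an API server endpoint, token, and CA hash.
print_join_command() {
    valid_token "$2" || { echo "malformed token: $2" >&2; return 1; }
    echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
}

# Usage:
#   print_join_command 192.168.2.120:6443 cr4qie.4izx0ry4bmgzbxgg sha256:<hash>
```

Bootstrap tokens expire (after 24 hours by default). If the token from kubeadm init is no longer valid, running kubeadm token create --print-join-command on the master prints a fresh join command.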

2.6. View the node status on the master:

Add command completion:

# yum install -y bash-completion
# find / -name "bash_completion"
/usr/share/bash-completion/bash_completion
# source /usr/share/bash-completion/bash_completion
# source <(kubectl completion bash)
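Completion enabled with source lasts only for the current shell session. One way to make it permanent is to add the line to ~/.bashrc, taking care not to add it twice. A minimal sketch, with `append_once` as a hypothetical helper name:

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
append_once() {
    line="$1"
    file="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage: make kubectl completion load in every new bash session.
#   append_once 'source <(kubectl completion bash)' "$HOME/.bashrc"
```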

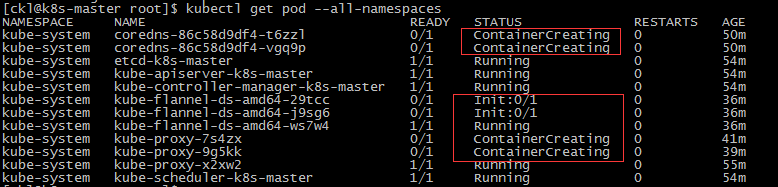

All three nodes are NotReady because several required components have not started yet. These components run as pods. List the pods:

$ kubectl get pod --all-namespaces

Wait for Kubernetes to download the images, then check again. Make sure the image addresses are reachable so that the images can actually be downloaded.
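While waiting, it is convenient to filter the pod list down to the pods that are not running yet. The sketch below assumes the default table layout of kubectl get pod --all-namespaces (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE); `pods_not_running` is a hypothetical helper, and it also lists pods in states such as Completed.

```shell
#!/bin/sh
# Read `kubectl get pod --all-namespaces` output on stdin and print
# "namespace/name status" for every pod whose STATUS is not Running.
# Column positions assume the default table layout:
# NAMESPACE NAME READY STATUS RESTARTS AGE
pods_not_running() {
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}

# Usage: kubectl get pod --all-namespaces | pods_not_running
```

An empty result means every listed pod has reached the Running state.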

Wait for a while:

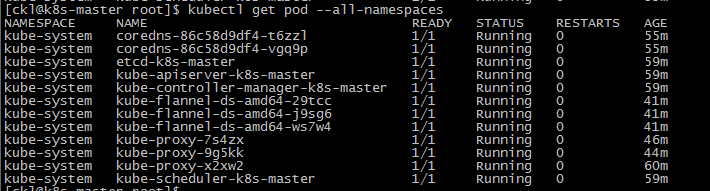

Check the node status again: