1 What is a Docker image

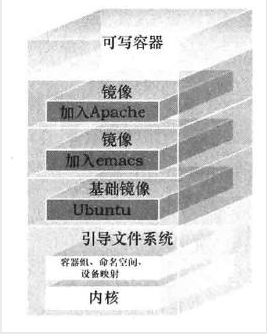

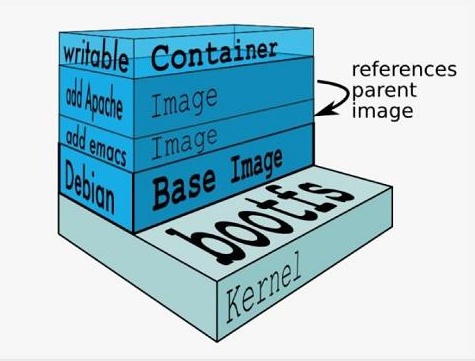

A Docker image is a stack of overlaid file system layers. At the bottom is the boot file system, bootfs, which Docker users never interact with directly. The second layer is the root file system, rootfs, which usually holds one or more operating system distributions, such as Ubuntu. In Docker, the root file system always stays read-only: rather than being modified in place, each change is copied into a new layer on top, and the stack of layers together forms the final file system. Docker calls such a read-only stack an image. One image can be stacked on top of another: the image below is called the parent image, and the image at the very bottom is called the base image. Finally, when a container is started from an image, Docker mounts a read-write file system on top of it, and that top layer is the container.

This mechanism is what we call copy-on-write (CoW).

When a container is deleted, its writable layer is deleted along with it.
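To make the layering and copy-on-write behaviour concrete, here is a minimal sketch that imitates a union mount with ordinary directories. It is only a toy model: the layer names (base, app, container) and the helper functions union_path, union_read and union_append are hypothetical, there is no real mount, and deletions (whiteouts) are not modelled. Reads search from the top layer down; the first write to a file copies it up into the writable layer, and the read-only layers are never changed.

```shell
#!/usr/bin/env bash
# Toy model of a union mount with copy-on-write, built from plain directories.
# Simplifications: no real mount, no whiteouts; only $UPPER is "writable".
set -euo pipefail

WORK=$(mktemp -d)
LOWER1="$WORK/base"      # hypothetical base image layer (read-only)
LOWER2="$WORK/app"       # hypothetical image layer stacked on the base
UPPER="$WORK/container"  # hypothetical writable container layer
mkdir -p "$LOWER1" "$LOWER2" "$UPPER"

echo "base config" > "$LOWER1/app.conf"
echo "hello"       > "$LOWER2/index.html"

# Find the topmost layer that contains a file, searching from the top down.
union_path() {
  local name=$1 layer
  for layer in "$UPPER" "$LOWER2" "$LOWER1"; do
    if [[ -e "$layer/$name" ]]; then
      printf '%s\n' "$layer/$name"
      return 0
    fi
  done
  return 1
}

union_read() { cat "$(union_path "$1")"; }

# Copy-on-write: on the first change, copy the file up into the writable
# layer, then modify only that copy. Assumes the file exists in some layer.
union_append() {
  local name=$1 text=$2 src
  if [[ ! -e "$UPPER/$name" ]]; then
    src=$(union_path "$name")
    cp "$src" "$UPPER/$name"
  fi
  printf '%s\n' "$text" >> "$UPPER/$name"
}

union_append app.conf "debug=on"
after_write=$(union_read app.conf)
printf '%s\n' "$after_write"   # "base config" then "debug=on"
cat "$LOWER1/app.conf"         # the image layer still holds only "base config"

# Deleting the container deletes only its writable layer.
rm -rf "$UPPER"
union_read app.conf            # "base config" again
```

Real drivers do the copy-up inside the kernel (OverlayFS) or per block (device mapper), but the visible effect is the same: the image layers stay untouched, and removing the container's layer discards its changes.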

2 Contents of a Docker image

With that overview of what a Docker image is, let's look at what it contains.

- The Docker image represents the file system content of a container;

- Union file system: images are managed layer by layer, and each image layer contributes part of the content of the container's file system;

- Containers are a dynamic environment, while the files in each image layer are static content. However, Dockerfile instructions such as ENV, VOLUME, and CMD ultimately have to take effect in the container's runtime environment, and they cannot be stored directly in the file system content of any layer. For this reason, each Docker image also includes a JSON configuration file that records this runtime information for the container.

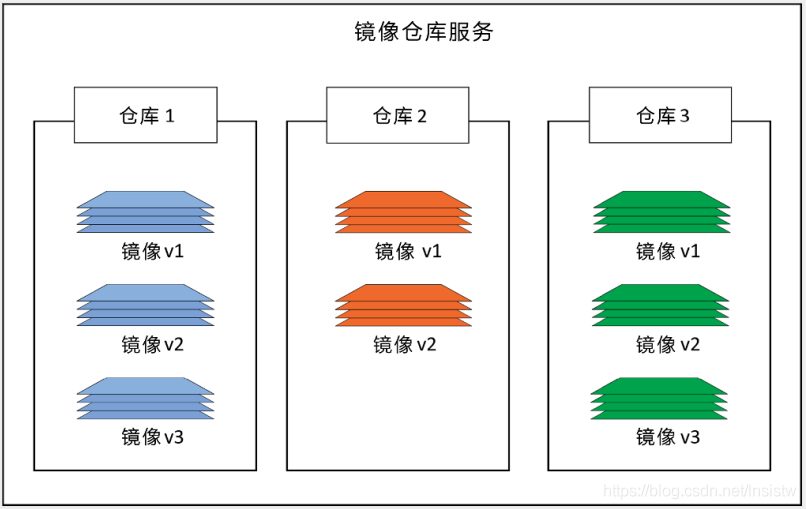

3 Image Registry Service

Docker images are stored in an image registry.

The registry service used by the Docker client is configurable; Docker Hub is the default.

A registry service hosts multiple image repositories, and each repository in turn can hold multiple images.

The following diagram shows a registry service with three repositories, each of which contains one or more images.

4 Docker storage drivers

Docker provides several storage drivers that store images in different ways. Here are some common ones:

- AUFS

- OverlayFS

- Devicemapper

- Btrfs

- VFS

4.1 AUFS

AUFS (AnotherUnionFS) is a union file system and a file-level storage driver. It transparently layers one or more existing file systems on top of each other and presents the layers as a single file system. Put simply, it can mount several different directories into one virtual file system. Modified files are overlaid layer by layer, but however many read-only layers lie below, only the topmost layer is writable. When a file needs to be modified, AUFS uses copy-on-write (CoW): it copies the file from the read-only layer up to the writable layer, modifies it there, and keeps the result in the writable layer. In Docker, the read-only layers below are the image and the writable layer is the container. Older Docker releases on Ubuntu used AUFS by default.

4.2 OverlayFS

OverlayFS has been supported since Linux kernel 3.18 and is also a union file system. Unlike AUFS, the original overlay driver has only two layers: a lower file system and an upper file system, which correspond to Docker's image layer and container layer respectively. When a file needs to be modified, CoW copies it from the read-only lower layer to the writable upper layer, where it is modified and the result is saved. In Docker, the read-only lower layer is the image and the writable upper layer is the container. The newer version of the driver, overlay2, natively supports multiple lower layers and is the one recommended today.

AUFS and overlay are both union file systems, but AUFS can have many layers while overlay has only two. As a result, AUFS can be slower on the first write to a large file that lives in a lower layer, because it may have to search through many layers before copying the file up. OverlayFS has also been merged into the mainline Linux kernel, which AUFS never was. AUFS has now largely been phased out.

4.3 DeviceMapper (Device Mapper)

Device mapper has been supported since Linux kernel 2.6.9. It provides a framework for mapping logical devices onto physical devices, on top of which users can easily define storage management policies to suit their needs. AUFS and OverlayFS are file-level storage, whereas device mapper is block-level storage: every operation acts directly on blocks, not on files. The devicemapper driver first creates a storage pool on a block device, then creates a base device with a file system in that pool. Every image is a snapshot of this base device, and every container is a snapshot of an image. So the file system you see inside a container is a snapshot of the base device's file system in the pool, and no space is allocated to the container up front. When a new file is written, new blocks are allocated to it in the container's snapshot and the data is written there; this is called allocate-on-demand. When an existing file is modified, CoW allocates block space in the container snapshot and copies the affected data into the new blocks before modifying it.

OverlayFS is file-level storage, while device mapper is block-level storage. When a file is very large but only a small part of it is modified, overlay copies the entire file no matter how little of it changes, and copying a large file obviously takes longer than copying a small one. Block-level storage copies only the blocks that need to change, not the whole file, so in this scenario device mapper is clearly faster. Because block-level storage accesses the logical disk directly, it suits IO-intensive workloads, while overlay is relatively better for workloads with complex application logic and high concurrency but little IO.
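The difference is easy to see with some rough arithmetic. The sketch below compares how many bytes the first write to a large file would copy under file-level CoW and under block-level CoW. All of the numbers are illustrative assumptions (a 1 GiB file, a 4 KiB edit, and a 64 KiB block size), not measurements of any real driver.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope comparison of how much data the first write to a
# large file copies. All sizes below are illustrative assumptions.
set -euo pipefail

file_size=$((1024 * 1024 * 1024))    # a 1 GiB file in a lower (image) layer
edit_offset=$((300 * 1024 * 1024))   # the edit starts 300 MiB into the file
edit_len=$((4 * 1024))               # 4 KiB of data is changed
block=$((64 * 1024))                 # assumed 64 KiB block size

# File-level CoW (AUFS/OverlayFS): the whole file is copied up first.
file_level=$file_size

# Block-level CoW (device mapper): only the blocks the edit touches are copied.
first_block=$((edit_offset / block))
last_block=$(((edit_offset + edit_len - 1) / block))
block_level=$(((last_block - first_block + 1) * block))

echo "file-level copy:  $file_level bytes"    # 1073741824 bytes
echo "block-level copy: $block_level bytes"   # 65536 bytes
echo "ratio: $((file_level / block_level))x"  # 16384x
```

An edit that straddles a block boundary would touch two blocks instead of one, but the order of magnitude stays the same.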

4.4 Btrfs

Btrfs (often pronounced "Butter FS") is a copy-on-write (CoW) file system announced by Oracle in 2007 and still under development. Its goal was to replace Linux's ext3 file system and remove ext3's limitations, especially on single-file size and total file system size, while adding file checksumming. It also adds features that ext3/4 lack, such as writable snapshots, snapshots of snapshots, built-in RAID support, and subvolumes, and it allows file systems to be resized online.

4.5 VFS

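VFS (Virtual File System) lets programs use standard Unix system calls to read and write different file systems on different physical media, providing a unified operating interface and programming interface for all of them. VFS is a glue layer that lets system calls such as open(), read(), and write() work without caring about the underlying storage medium or file system type. Note that Docker's vfs storage driver is not a union file system and does not use copy-on-write: each layer is a directory holding a full copy of the layer below, so it is slower and uses more disk space than the other drivers, but it works in any environment.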

5 Docker registry

When a container is started, the Docker daemon first tries to find the required image locally; if the image does not exist locally, it is downloaded from a registry and saved locally.

A registry stores Docker images, including their layer hierarchy and metadata. Users can run their own registry or use the official Docker Hub.

Types of Docker registry

- Sponsor Registry: a third-party registry for use by customers and the Docker community

- Mirror Registry: a third-party registry for customer use only

- Vendor Registry: a registry provided by a vendor that publishes Docker images

- Private Registry: a registry run by a private entity behind a firewall, with additional security layers

Components of a Docker registry

- Repository
  - An image repository consisting of all the iterated versions of a particular Docker image
  - A registry can contain multiple repositories
  - Repositories are divided into top-level repositories and user repositories
  - User repository names have the format user/repository-name
  - Each repository can contain multiple tags, each pointing to an image

- Index
  - Maintains information about user accounts, image checksums, and public namespaces
  - Effectively gives the registry a lookup interface that also handles functions such as user authentication

6 Docker Hub

Docker Hub is a cloud-based registry service that lets you link to code repositories, build and test your images, store manually pushed images, and link to Docker Cloud so you can deploy images to your hosts.

It provides centralized resources for container image discovery, distribution and change management, user and team collaboration, and workflow automation throughout the development pipeline.

Docker Hub provides the following main features:

- Image repositories
  - Find and pull images from community and official libraries, and manage, push, and pull images in private repositories you have access to.

- Automated builds
  - Automatically build a new image when you change the source code repository.

- Webhooks
  - A webhook is a feature of automated builds that lets you trigger actions after a successful push to a repository.

- Organization management
  - Create work groups to manage access to image repositories.

- GitHub and Bitbucket integration
  - Add Docker Hub and Docker images to your existing workflows.

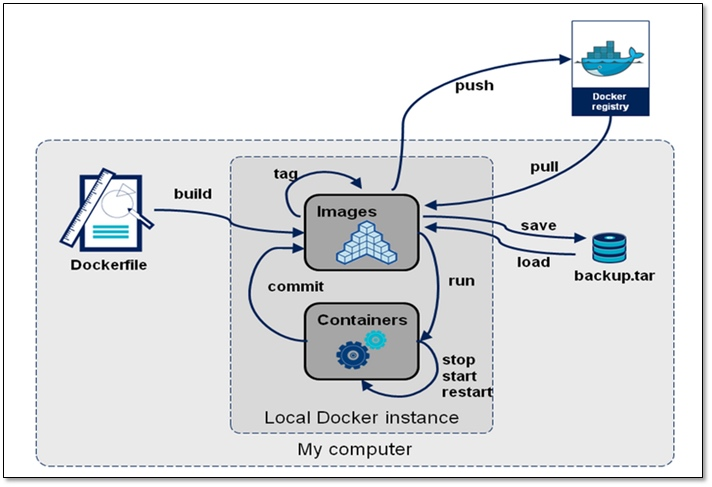

7 Docker Image Creation

In most cases, we build images on top of an existing image made by someone else, which we call a base image, for example a clean, minimal version of CentOS, Ubuntu, or Debian.

7.1 Image Generation

Ways to generate an image:

- Dockerfile

- Building from a container (docker commit)

- Docker Hub automated builds

7.2 Building an image from a container

Create a new image from the changes made in a container.

Syntax: docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

| Option | Default | Description |
|---|---|---|
| -a, --author string | | Author of the image |
| -c, --change list | | Apply Dockerfile instructions to the created image |
| -m, --message string | | Commit message |
| -p, --pause | true | Pause the container during the commit |

7.2.1 Getting a Docker image

To get a Docker image from a remote registry (such as your own private registry) and add it to your local system, use the docker pull command. Here I pull an image from Docker Hub.

Syntax: docker pull [<registry>[:<port>]/][<namespace>/]<name>[:<tag>]

```
[root@Docker ~]# docker pull centos
Using default tag: latest
latest: Pulling from library/centos
a1d0c7532777: Pull complete
Digest: sha256:a27fd8080b517143cbbbab9dfb7c8571c40d67d534bbdee55bd6c473f432b177
Status: Downloaded newer image for centos:latest
docker.io/library/centos:latest
```
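The last line of that output shows how the short name centos was expanded: the registry defaults to docker.io, single-name images on Docker Hub live in the library namespace, and the tag defaults to latest. The sketch below approximates that expansion in bash. parse_ref is a hypothetical helper, not part of Docker, and it is simplified: it treats the first path component as a registry only if it contains "." or ":" or is localhost, and it ignores digests (@sha256:...) and name validation.

```shell
#!/usr/bin/env bash
# Simplified sketch of how an image reference is split into
# registry, repository (namespace/name) and tag. Digests are not handled.
set -euo pipefail

parse_ref() {
  local ref=$1 registry name tag first
  # Tag: the text after a ':' in the last path component; default "latest".
  local last=${ref##*/}
  if [[ $last == *:* ]]; then
    tag=${last##*:}
    ref=${ref%:*}
  else
    tag=latest
  fi
  # Registry: the first component, but only if it looks like a host name.
  first=${ref%%/*}
  if [[ $ref == */* && ( $first == *.* || $first == *:* || $first == localhost ) ]]; then
    registry=$first
    name=${ref#*/}
  else
    registry=docker.io
    name=$ref
  fi
  # Official images on Docker Hub live in the "library" namespace.
  if [[ $registry == docker.io && $name != */* ]]; then
    name=library/$name
  fi
  printf '%s %s %s\n' "$registry" "$name" "$tag"
}

parse_ref centos                    # prints "docker.io library/centos latest"
parse_ref zhaojie10/nginx:v1.20.2   # prints "docker.io zhaojie10/nginx v1.20.2"
```

The same rules explain why the image built later in this article, zhaojie10/nginx, is pushed to the zhaojie10 namespace on Docker Hub without naming a registry explicitly.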

7.2.2 Create and run a container

```
# Run a container from the centos image: --name sets the container name,
# -i keeps STDIN open, -t allocates a pseudo-TTY, -d runs it in the background,
# and /bin/bash is the command the container runs
[root@Docker ~]# docker run --name nginx -itd 5d0da3dc9764 /bin/bash
95f278ee0a241d28280f967c3878ee45d40b56f50d2a7bab3d2f25e511ed8b39
```

7.2.3 Enter the container, then compile and install nginx

First use docker cp to copy the nginx source package into the container.

```
[root@Docker ~]# docker cp /usr/src/nginx-1.20.2.tar.gz 95f278ee0a24:/usr/src/
[root@Docker ~]# docker exec -it 95f278ee0a24 /bin/bash
[root@95f278ee0a24 /]# ls /usr/src/
debug  kernels  nginx-1.20.2.tar.gz

# Create the system user nginx
[root@95f278ee0a24 /]# useradd -r -m -s /sbin/nologin nginx
[root@95f278ee0a24 /]# id nginx
uid=998(nginx) gid=996(nginx) groups=996(nginx)

# Install dependency packages and build tools
[root@95f278ee0a24 /]# yum -y install pcre-devel openssl openssl-devel gd-devel gcc gcc-c++ make
[root@95f278ee0a24 /]# yum -y groups mark install 'Development Tools'

# Create the log directory
[root@95f278ee0a24 /]# mkdir -p /var/log/nginx
[root@95f278ee0a24 /]# chown -R nginx:nginx /var/log/nginx/

# Compile and install nginx
[root@95f278ee0a24 ~]# cd /usr/src/
[root@95f278ee0a24 src]# ls
debug  kernels  nginx-1.20.2.tar.gz
[root@95f278ee0a24 src]# tar xf nginx-1.20.2.tar.gz
[root@95f278ee0a24 src]# cd nginx-1.20.2
[root@95f278ee0a24 nginx-1.20.2]# ./configure \
    --prefix=/usr/local/nginx \
    --user=nginx \
    --group=nginx \
    --with-debug \
    --with-http_ssl_module \
    --with-http_realip_module \
    --with-http_image_filter_module \
    --with-http_gunzip_module \
    --with-http_gzip_static_module \
    --with-http_stub_status_module \
    --http-log-path=/var/log/nginx/access.log \
    --error-log-path=/var/log/nginx/error.log
[root@95f278ee0a24 nginx-1.20.2]# make && make install
[root@95f278ee0a24 ~]# ls /usr/local/
bin  etc  games  include  lib  lib64  libexec  nginx  sbin  share  src

# Add nginx to PATH
[root@95f278ee0a24 ~]# echo 'export PATH=/usr/local/nginx/sbin:$PATH' > /etc/profile.d/nginx.sh
[root@95f278ee0a24 ~]# source /etc/profile.d/nginx.sh

# Start nginx
[root@95f278ee0a24 ~]# nginx
[root@95f278ee0a24 ~]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*

# Create a test web page
[root@6476d875f2b4 ~]# echo Nginx to work > /usr/local/nginx/html/index.html
[root@6476d875f2b4 ~]# cat /usr/local/nginx/html/index.html
Nginx to work

# Access it from the host
[root@Docker ~]# curl 172.17.0.2
Nginx to work
```

The Docker host can reach nginx through the container's bridge IP (172.17.0.2), but a browser on another machine cannot, because none of the container's ports have been mapped to the host yet.

7.2.4 Creating an nginx image

In this walkthrough the container is kept running while the image is created, so open a second terminal on the host and run docker commit there (docker commit can also commit a stopped container).

A container started from this image would run a shell (/bin/bash) by default, but we want it to run nginx. So when creating the image, we use -c to set the image's default command (CMD) to nginx running in the foreground (-g "daemon off;"), and -p to pause the container during the commit. The new image then lets us start a simple nginx service quickly.

```
[root@Docker ~]# docker ps
CONTAINER ID   IMAGE          COMMAND       CREATED          STATUS          PORTS     NAMES
9408c7af4b18   286644a4d832   "/bin/bash"   13 seconds ago   Up 12 seconds             nginx
[root@Docker ~]# docker images
REPOSITORY   TAG       IMAGE ID       CREATED        SIZE
centos       latest    5d0da3dc9764   2 months ago   231MB
[root@Docker ~]# docker commit -a '172.168.25.147@qq.com' -c 'CMD ["/usr/local/nginx/sbin/nginx","-g","daemon off;"]' -p 9408c7af4b18 zhaojie10/nginx:v1.20.2
sha256:6f5b02e61ad49adeae10eabab8aa49082c8721eeb731288d0d24d7aa96a5d823
[root@Docker ~]# docker images
REPOSITORY        TAG       IMAGE ID       CREATED         SIZE
zhaojie10/nginx   v1.20.2   6f5b02e61ad4   6 seconds ago   549MB
centos            latest    5d0da3dc9764   2 months ago    231MB
```

7.2.5 Create a new container from the image you just created and set up port mapping

```
[root@Docker ~]# docker images
REPOSITORY        TAG       IMAGE ID       CREATED              SIZE
zhaojie10/nginx   v1.20.2   6f5b02e61ad4   About a minute ago   549MB
centos            latest    5d0da3dc9764   2 months ago         231MB
[root@Docker ~]# docker run -dit --name nginx -p 80:80 6f5b02e61ad4
d9526158bdc16fb347d6c5a86a10f119ca138aa65c72798c77ac8d0984f36057
[root@Docker ~]# docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED         STATUS         PORTS                               NAMES
d9526158bdc1   6f5b02e61ad4   "/usr/local/nginx/sb..."   4 seconds ago   Up 2 seconds   0.0.0.0:80->80/tcp, :::80->80/tcp   nginx
[root@Docker ~]# docker exec -it d9526158bdc1 /bin/bash
[root@d9526158bdc1 /]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
# nginx is now running inside the container
```

Now you can access it from a browser.

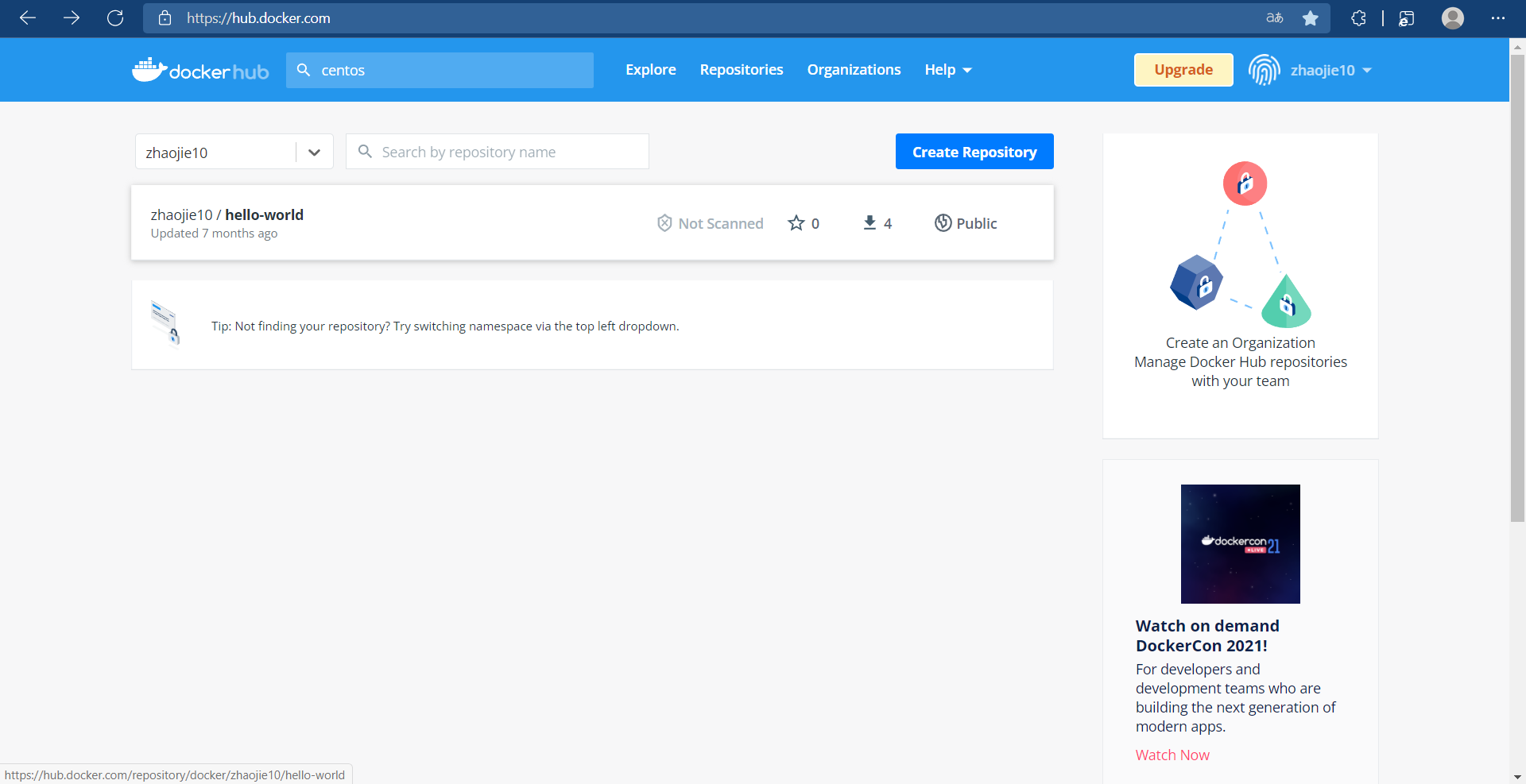

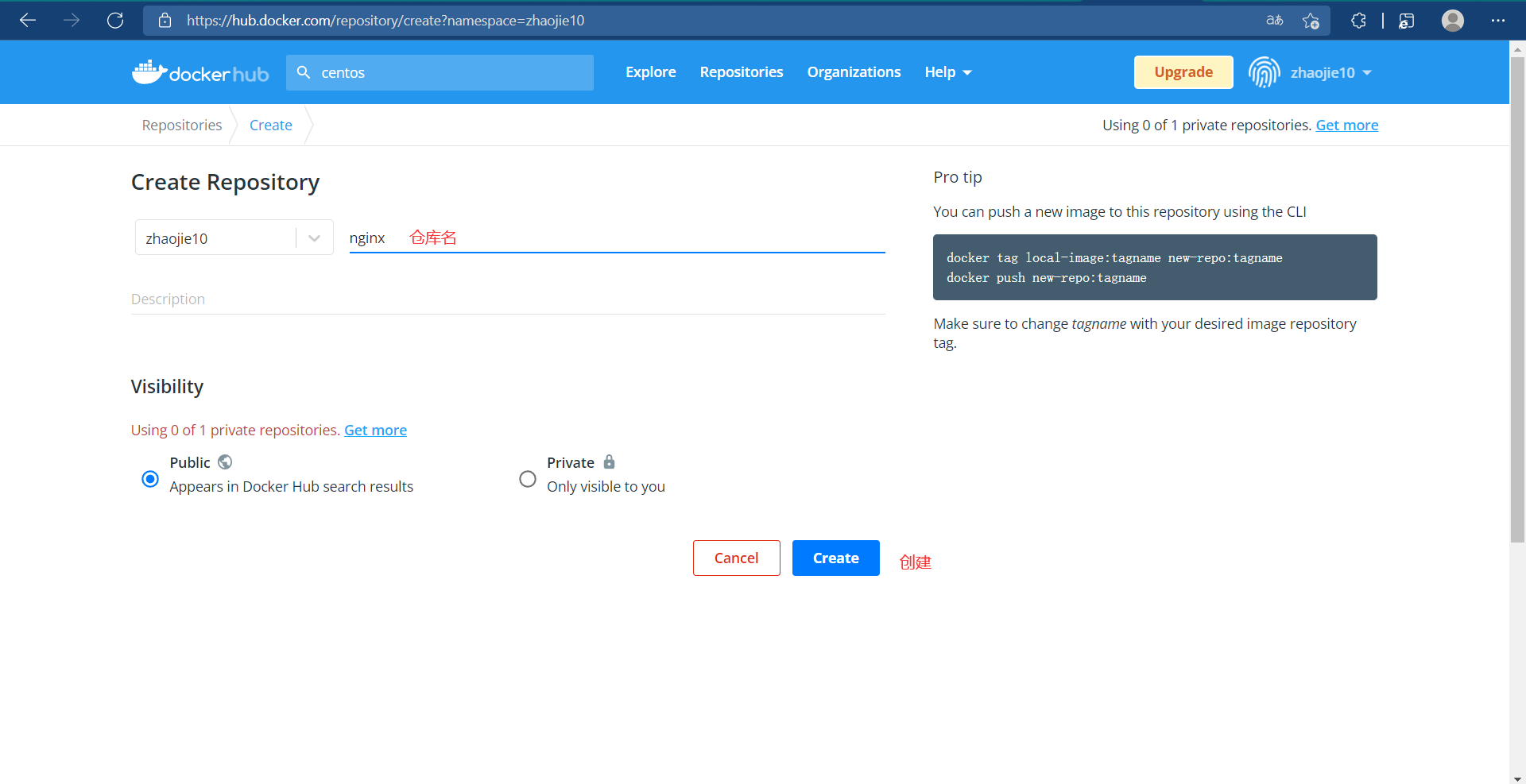

7.3 Upload to docker hub

Note at this point that our mirror name is zhaojie10/nginx, so we'll create a warehouse named zhaojie10/nginx on Docker Hub, and then push our mirror up

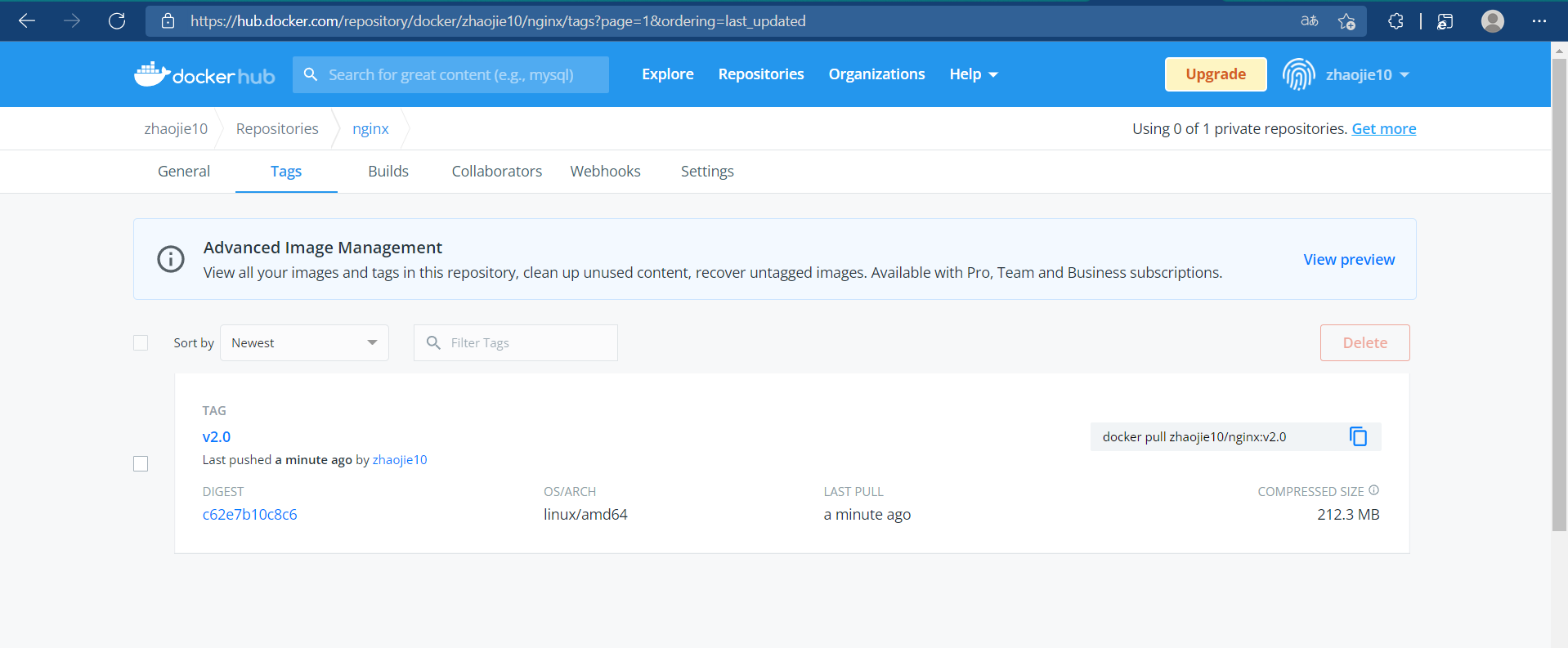

//Log in to docker hub to upload a mirror [root@Docker ~]# docker login Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one. Username: zhaojie10 Password: WARNING! Your password will be stored unencrypted in /root/.docker/config.json. Configure a credential helper to remove this warning. See https://docs.docker.com/engine/reference/commandline/login/#credentials-store Login Succeeded [root@Docker ~]# docker push zhaojie10/nginx:v2.0 The push refers to repository [docker.io/zhaojie10/nginx] 54bfe1169208: Pushed 27c919eb23ea: Pushed 74ddd0ec08fa: Mounted from library/centos v2.0: digest: sha256:c62e7b10c8c6fcefa9d7248d466b278abc70cc3be9dc9a1a730400d2702f06b6 size: 950

//Pull the container you just uploaded locally to verify that the operation was successful [root@Docker ~]# docker images REPOSITORY TAG IMAGE ID CREATED SIZE zhaojie10/nginx v1.0 81a4a6c1034d About an hour ago 549MB centos latest 5d0da3dc9764 2 months ago 231MB

8 Import and Export of Images

We could push the image to a registry from host 1 and then pull it down on host 2, but that is a bit of a hassle. If I'm only testing and want to run an image built on one host on another host, there is no need to push it to a registry and pull it back.

Instead, we can package an existing image into an archive file, copy it to the other host, and import it there. This is what image import and export are for.

In Docker, we export with docker save and import with docker load.

On the host where the image was built, export it with docker save. Note that docker save writes a plain, uncompressed tar archive, so the .gz extension used below is only part of the file name; docker load accepts both plain and gzip-compressed archives.

```
[root@Docker ~]# docker images
REPOSITORY        TAG       IMAGE ID       CREATED         SIZE
zhaojie10/nginx   v1.20.2   6f5b02e61ad4   7 minutes ago   549MB
centos            latest    5d0da3dc9764   2 months ago    231MB
[root@Docker ~]# docker save -o nginximages.gz zhaojie10/nginx:v1.20.2
[root@Docker ~]# ls
anaconda-ks.cfg  nginximages.gz
```

On another host that does not have the image yet, import it with docker load.

```
[root@Docker ~]# ls
anaconda-ks.cfg  nginximages.gz
[root@Docker ~]# docker load -i nginximages.gz
Loaded image: zhaojie10/nginx:v1.20.2
[root@Docker ~]# docker images
REPOSITORY        TAG       IMAGE ID       CREATED          SIZE
zhaojie10/nginx   v1.20.2   6f5b02e61ad4   11 minutes ago   549MB
```
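Because docker save produces a plain tar archive even when the output file name ends in .gz, it can be handy to check what an archive really is before copying it between hosts. The sketch below does this by looking at magic bytes: gzip files start with the bytes 1f 8b, and tar archives carry the string "ustar" at offset 257. archive_kind is a hypothetical helper, and the self-check uses throwaway files rather than a real docker save archive.

```shell
#!/usr/bin/env bash
# Report whether a file is gzip-compressed, a plain tar archive, or neither,
# by inspecting magic bytes (gzip: 1f 8b at offset 0; tar: "ustar" at offset 257).
set -euo pipefail

archive_kind() {
  local f=$1
  if [[ $(head -c 2 "$f" | od -An -tx1 | tr -d ' \n') == 1f8b ]]; then
    echo gzip
  elif [[ $(dd if="$f" bs=1 skip=257 count=5 2>/dev/null) == ustar ]]; then
    echo tar
  else
    echo unknown
  fi
}

# Self-check on throwaway files; demo.tar stands in for a docker save archive.
demo=$(mktemp -d)
echo hello > "$demo/layer.txt"
tar -cf "$demo/demo.tar" -C "$demo" layer.txt
gzip -c "$demo/demo.tar" > "$demo/demo.tar.gz"
archive_kind "$demo/demo.tar"      # prints "tar"
archive_kind "$demo/demo.tar.gz"   # prints "gzip"
```

Run against the nginximages.gz file produced above, it should report tar. For a genuinely compressed archive, pipe the export through gzip instead, for example docker save zhaojie10/nginx:v1.20.2 | gzip > nginximages.tar.gz, and load it the same way with docker load -i.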