1, Install Docker

1. Download the offline package

Download the docker-18.06.3-ce.tgz archive from the Docker download site, under "Index of linux/static/stable/x86_64/".

2. Extract the archive

tar -xzvf docker-18.06.3-ce.tgz

(The "ce" suffix stands for Community Edition, the free version. For details, look up the difference between Docker releases with and without "ce".)

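3. Copy the extracted binaries to the /usr/bin directory

The archive extracts into a folder named docker. The service file in the next step starts /usr/bin/dockerd, so the binaries have to be copied to /usr/bin:

cp docker/* /usr/bin/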

4. Register docker as a system service

Create the service file with vim /usr/lib/systemd/system/docker.service, copy the following contents into it, then save and exit.

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
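A service file only works if the binary named in ExecStart actually exists at that path. The following is a minimal sketch of a check for that; the function names unit_exec_path and check_unit_binary are hypothetical helpers, not part of Docker or systemd, and the parsing assumes a plain ExecStart= line without systemd prefix characters.

```shell
#!/bin/sh
# Print the binary that a systemd unit file starts
# (the first word after "ExecStart=").
unit_exec_path() {
    sed -n 's/^ExecStart=\([^ ]*\).*/\1/p' "$1" | head -n 1
}

# Report whether that binary exists and is executable.
# Returns non-zero when it is missing.
check_unit_binary() {
    bin=$(unit_exec_path "$1")
    if [ -n "$bin" ] && [ -x "$bin" ]; then
        echo "ok: $bin"
    else
        echo "missing: $bin"
        return 1
    fi
}

# Example call (not executed here):
#   check_unit_binary /usr/lib/systemd/system/docker.service
```

After creating or changing a unit file, running systemctl daemon-reload makes systemd re-read it before the service is started.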

5. Start the Docker service

systemctl start docker

6. Check the status of the Docker service

systemctl status docker

7. Enable Docker to start on boot

systemctl enable docker.service

Reference article: "Offline deployment of Docker", from the CSDN blog "The journey of a thousand miles begins with one step".

2, Deploy Hadoop with Docker

Hadoop requires a JDK and SSH. Install both packages yourself before you begin.

1. Download the Hadoop package

Note: choose the version that suits your environment.

2. Extract the offline package

tar -xzvf hadoop-2.7.2.tar.gz

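3. Copy the extracted folder to /usr/local/hadoop

The -r flag is needed to copy a directory, and the target name matches the /usr/local/hadoop path used in the next step.

cp -r hadoop-2.7.2 /usr/local/hadoop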

4. Configure the JAVA_HOME variable

Go to the Hadoop configuration directory /usr/local/hadoop/etc/hadoop, edit hadoop-env.sh, and set the JAVA_HOME environment variable. Replace the placeholder with the root directory of your JDK. The shell does not accept spaces around the equals sign.

export JAVA_HOME="jdk root directory"

Save and exit.
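If the JDK root directory is not known, it can usually be derived from the location of the java binary. This is a minimal sketch under the assumption that java sits in a bin directory directly below the JDK root; java_home_from_bin is a hypothetical helper name, and readlink -f assumes GNU coreutils as found on most Linux systems. On some JDK 8 layouts the result points at the jre subdirectory, so check it before using it.

```shell
#!/bin/sh
# Strip the trailing "/bin/java" from a resolved java path.
java_home_from_bin() {
    dirname "$(dirname "$1")"
}

# If java is on the PATH, resolve symlinks and print a candidate
# line for hadoop-env.sh. Nothing is written to any file.
if command -v java >/dev/null 2>&1; then
    java_bin=$(readlink -f "$(command -v java)")
    printf 'export JAVA_HOME="%s"\n' "$(java_home_from_bin "$java_bin")"
fi
```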

5. Hadoop has three operation modes (standalone, pseudo-distributed, fully distributed)

5.1 Standalone mode (default configuration)

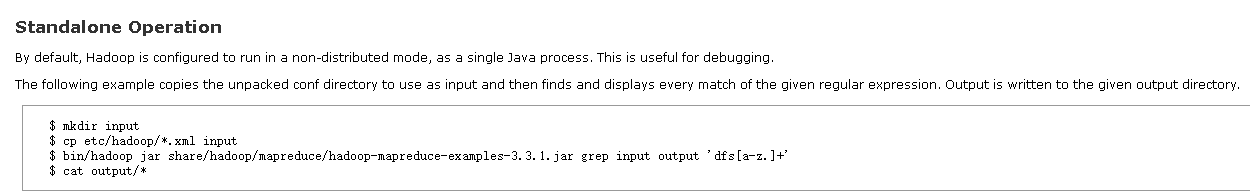

By default, Hadoop is configured to run in non-distributed mode as a single Java process, which is helpful for development and debugging.

In this mode, there is no need to modify any configuration file. You can run the MapReduce example bundled with Hadoop directly. The jar file name contains the Hadoop version (3.3.1 here); adjust it to match the release you installed.

$ mkdir input
$ cp etc/hadoop/*.xml input
$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar grep input output 'dfs[a-z.]+'
$ cat output/*
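To get a feel for what the grep example computes, the same count can be approximated locally with standard tools. This is only a sketch for comparison, not how Hadoop implements the job; local_grep_count is a hypothetical helper, and it assumes the matches contain no whitespace.

```shell
#!/bin/sh
# Count every match of an extended regular expression across the files
# in a directory and print "count<TAB>match", highest count first.
# $1 = input directory, $2 = regular expression
local_grep_count() {
    grep -ohE -e "$2" "$1"/* \
        | sort \
        | uniq -c \
        | sort -rn \
        | awk '{print $1 "\t" $2}'
}

# Example call (not executed here):
#   local_grep_count input 'dfs[a-z.]+'
```

The result should list the same matched strings as the files under output, which makes it a quick cross-check on a small input.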

5.2 Pseudo-distributed mode

Hadoop can also run on a single node in pseudo-distributed mode, where each Hadoop daemon runs in a separate Java process.

Modify the configuration files

Hadoop/etc/hadoop/core-site.xml:

Link: core-site.xml detailed configuration parameter description

fs.defaultFS: the address of the Hadoop file system

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

Hadoop/etc/hadoop/hdfs-site.xml:

Link: hdfs-site.xml detailed configuration parameter description

dfs.replication: the number of replicas kept for each data block, set to 1 here

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>

Set up passwordless SSH login

Use the following command to check whether passwordless login is already configured:

$ ssh localhost

If it prompts for a password, configure key-based login with the following commands:

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
$ chmod 0600 ~/.ssh/authorized_keys
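Running the cat command more than once appends the same public key again each time. A minimal sketch of an idempotent variant follows; append_key_once is a hypothetical helper name and it assumes the public key file holds a single line.

```shell
#!/bin/sh
# Append a public key to an authorized_keys file only if the exact
# line is not already present, then tighten the file permissions.
# $1 = public key file, $2 = authorized_keys file
append_key_once() {
    touch "$2"
    if ! grep -qxF -f "$1" "$2"; then
        cat "$1" >> "$2"
    fi
    chmod 0600 "$2"
}

# Example call (not executed here):
#   append_key_once ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

To test the login without risking an interactive password prompt, ssh -o BatchMode=yes localhost true exits with a non-zero status when key-based login is not working.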

Start Hadoop

1. Format the file system

$ bin/hdfs namenode -format

2. Start the NameNode and DataNode daemons

$ sbin/start-dfs.sh

By default, Hadoop writes its logs to the Hadoop/logs folder.
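Before looking through the logs, it helps to know which daemon failed to come up. The jps tool shipped with the JDK lists running Java processes, and the sketch below compares its output against the daemons expected after start-dfs.sh. The function name missing_daemons is a hypothetical helper, and the expected list (NameNode, DataNode, SecondaryNameNode) assumes the default single-node setup.

```shell
#!/bin/sh
# Read `jps` output on stdin ("<pid> <name>" per line) and print the
# name of every expected daemon that does not appear in it.
# Arguments: the expected daemon names.
missing_daemons() {
    out=$(cat)
    for d in "$@"; do
        printf '%s\n' "$out" \
            | awk -v d="$d" '$2 == d {found = 1} END {exit !found}' \
            || echo "$d"
    done
}

# Example call (not executed here):
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

An empty result means all expected daemons are running; any name printed points to the log file to read first.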

3. Access the NameNode through a web browser. The default address is http://localhost:9870 on Hadoop 3.x (Hadoop 2.x uses port 50070).

4. Create the HDFS directories (Hadoop file system directories) required to run MapReduce jobs

$ bin/hdfs dfs -mkdir /user
$ bin/hdfs dfs -mkdir /user/<username>

5. Copy the input files to HDFS

$ bin/hdfs dfs -mkdir input
$ bin/hdfs dfs -put etc/hadoop/*.xml input
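The relative path input works because HDFS resolves relative paths against the user's home directory, /user/<username>, which is why that directory is created first. The rule can be sketched as follows; hdfs_resolve is a hypothetical helper for illustration only and does not talk to HDFS.

```shell
#!/bin/sh
# Show how an HDFS path resolves for a given user:
# absolute paths stay as they are, relative paths are placed
# under /user/<username>.
# $1 = user name, $2 = HDFS path
hdfs_resolve() {
    case "$2" in
        /*) printf '%s\n' "$2" ;;
        *)  printf '/user/%s/%s\n' "$1" "$2" ;;
    esac
}

# Example call (not executed here):
#   hdfs_resolve "$(whoami)" input
```

If the home directory is missing, commands that use relative paths such as input fail with a "No such file or directory" error.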

6. Run Hadoop examples

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar grep input output 'dfs[a-z.]+'

7. Check the output files: copy the output directory from HDFS to the local file system and inspect it

$ bin/hdfs dfs -get output output
$ cat output/*

Or view it directly on HDFS

$ bin/hdfs dfs -cat output/*

8. Stop the Hadoop processes

$ sbin/stop-dfs.sh

Running YARN (resource coordinator) on a single node

MapReduce jobs can also run on YARN (the resource coordinator) in pseudo-distributed mode. This requires a few configuration parameters, and the ResourceManager and NodeManager daemons have to be started.

1. Modify the configuration files

Hadoop/etc/hadoop/mapred-site.xml:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>

Hadoop/etc/hadoop/yarn-site.xml:

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_HOME,PATH,LANG,TZ,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>

2. Start the ResourceManager and NodeManager processes

$ sbin/start-yarn.sh

3. Access the ResourceManager through a web browser; the default address is: http://localhost:8088/

4. Run a MapReduce job

Use the same MapReduce job commands as in the pseudo-distributed section above.
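Because mapreduce.framework.name defaults to local, a job still runs without YARN if the setting was not picked up, so it can be worth confirming the value before submitting. The sketch below reads a property from a site file; hadoop_prop is a hypothetical helper, and it assumes each <name> and <value> element sits on its own line, as in the files shown earlier.

```shell
#!/bin/sh
# Print the value of a named property from a Hadoop site XML file.
# Prints nothing if the property is not present.
# $1 = site XML file, $2 = property name
hadoop_prop() {
    awk -v want="$2" '
        /<name>/ {
            name = $0
            sub(/.*<name>/, "", name)
            sub(/<\/name>.*/, "", name)
        }
        /<value>/ && name == want {
            val = $0
            sub(/.*<value>/, "", val)
            sub(/<\/value>.*/, "", val)
            print val
            exit
        }
    ' "$1"
}

# Example call (not executed here):
#   hadoop_prop etc/hadoop/mapred-site.xml mapreduce.framework.name
```

A result of yarn indicates that jobs will be submitted to the ResourceManager, where they also show up in the web interface.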

5. Stop the ResourceManager and NodeManager processes

$ sbin/stop-yarn.sh