1. Introduction to Docker Swarm

Like Docker Compose, Docker Swarm is an official Docker container orchestration tool. The difference is that Docker Compose creates multiple containers on a single server or host, while Docker Swarm creates clustered container services across multiple servers or hosts. This makes Docker Swarm the better fit for deploying microservices.

Docker Swarm abstracts several Docker hosts into a single whole and manages all kinds of Docker resources on those hosts through a single entry point. Swarm is similar to Kubernetes, but it is lighter and has fewer features.

Since Docker 1.12.0, Swarm mode has been built into the Docker Engine (the docker swarm commands) and includes built-in service discovery. There is no longer any need to set up Etcd or Consul for service discovery as before.

There are three key concepts in Docker Swarm:

- Manager node: orchestrates containers and manages the cluster, keeping the swarm in its desired state. A swarm can have multiple manager nodes. They automatically elect a leader (using the Raft consensus algorithm) to carry out orchestration tasks. A swarm cannot run without at least one manager node;

- Worker node: accepts and executes the tasks assigned by manager nodes. By default, a manager node is also a worker node. You can instead make it a manager-only node that handles only orchestration and management by setting its availability to drain (see section 7);

- Service: defines the tasks (the image and the commands) to run on the worker nodes;

Note: in a Docker Swarm cluster, every Docker host can be a manager, but not every host can be a worker. In other words, the cluster cannot exist without a manager (leader). In addition, the host names of all nodes participating in the cluster must be unique.
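
Managers elect their leader by majority vote, so the number of managers decides how many manager failures the swarm can survive. The Docker administration guide states that a swarm with N managers tolerates the loss of at most (N-1)/2 of them. The POSIX sh functions below sketch that arithmetic. The names quorum and fault_tolerance are illustrative helpers, not Docker commands.

```shell
# Raft arithmetic for Swarm managers, following the "(N-1)/2" rule from the
# Docker administration guide. Function names are illustrative only.

# quorum N: managers that must be reachable for the swarm to accept changes
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: managers that may fail without losing quorum
fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

# Print a small table for common manager counts
for n in 1 3 4 5 7; do
    echo "managers=$n quorum=$(quorum "$n") tolerates=$(fault_tolerance "$n")"
done
```

Four managers tolerate no more failures than three. This is why odd numbers of managers are usually recommended.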

2. Environment preparation

Things to note:

- Make sure the clocks of all hosts are synchronized;

- Turn off the firewall and SELinux (lab environment only);

- Give each host a unique host name;

- Add entries to the /etc/hosts file on every host so that host names resolve;
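
The hosts-file step can be scripted so that it is safe to run more than once. The sketch below assumes the three addresses used in this article. NODES, add_hosts_entries and check_unique_hostnames are hypothetical names. The script appends a line only when the host name is not already mapped. It also refuses a node list that contains duplicate host names, which a swarm does not allow.

```shell
# "IP hostname" pairs used in this article (hypothetical; adjust for your network)
NODES="192.168.1.1 node01
192.168.1.2 node02
192.168.1.3 node03"

# check_unique_hostnames LIST: fail if a host name occurs more than once
check_unique_hostnames() {
    dup=$(printf '%s\n' "$1" | awk 'NF >= 2 {print $2}' | sort | uniq -d)
    if [ -n "$dup" ]; then
        echo "duplicate host name(s): $dup" >&2
        return 1
    fi
}

# add_hosts_entries FILE LIST: append each pair unless FILE already maps that name
add_hosts_entries() {
    file=$1
    check_unique_hostnames "$2" || return 1
    printf '%s\n' "$2" | while read -r ip name; do
        [ -n "$name" ] || continue
        # awk exits 0 if a non-comment line already lists this host name
        if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
            printf '%s %s\n' "$ip" "$name" >> "$file"
        fi
    done
}
```

On each node, run add_hosts_entries /etc/hosts "$NODES" as root.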

3. Initialize the Swarm cluster

    [root@node01 ~]# tail -3 /etc/hosts
    192.168.1.1 node01
    192.168.1.2 node02
    192.168.1.3 node03
    # All three hosts need these hosts-file entries so that host names resolve
    [root@node01 ~]# docker swarm init --advertise-addr 192.168.1.1
    # --advertise-addr: the address this node advertises to the other nodes for cluster communication

The command returns the output shown in the figure:

① The command for joining the swarm cluster as a worker;

② The instruction for joining the swarm cluster as a manager;

This output shows that initialization succeeded. Note that a Swarm join token has no fixed expiry. It stays valid until it is rotated with docker swarm join-token --rotate.
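
You can also ask the engine directly whether the swarm is active and whether this node can act as a manager, instead of reading the output by eye. The fields Swarm.LocalNodeState and Swarm.ControlAvailable come from docker info. The DOCKER variable and the function name are hypothetical hooks for this sketch. They let the check run against a stub instead of the real CLI.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# swarm_manager_ready: succeed only if this engine belongs to an active swarm
# and can act as a manager (as node01 should right after "docker swarm init")
swarm_manager_ready() {
    state=$("$DOCKER" info --format '{{.Swarm.LocalNodeState}} {{.Swarm.ControlAvailable}}') || return 1
    [ "$state" = "active true" ]
}
```

On node01: swarm_manager_ready && echo "swarm initialized".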

4. Join node02 and node03 to the swarm cluster and remove them

    ################### On node02 ###################
    [root@node02 ~]# docker swarm join --token SWMTKN-1-4pc1gjwjrp9h4dny52j58m0lclq88ngovis0w3rinjd05lklu5-ay18vjhwu7w8gsqvct84fv8ic 192.168.1.1:2377
    ################### On node03 ###################
    [root@node03 ~]# docker swarm join --token SWMTKN-1-4pc1gjwjrp9h4dny52j58m0lclq88ngovis0w3rinjd05lklu5-ay18vjhwu7w8gsqvct84fv8ic 192.168.1.1:2377
    # node02 and node03 join as workers, because they used the worker token
    ################### On node01 ###################
    [root@node01 ~]# docker node ls        # view node details (only works on a manager)
    ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    mc3xn4az2r6set3al79nqss7x *   node01     Ready    Active         Leader           18.09.0
    olxd9qi9vs5dzes9iicl170ob     node02     Ready    Active                          18.09.0
    i1uee68sxt2puzd5dx3qnm9ck     node03     Ready    Active                          18.09.0
    # node01, node02 and node03 are all Ready and Active
    ################### On node02 ###################
    [root@node02 ~]# docker swarm leave
    ################### On node03 ###################
    [root@node03 ~]# docker swarm leave
    # node02 and node03 leave the cluster
    ################### On node01 ###################
    [root@node01 ~]# docker node ls
    ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    mc3xn4az2r6set3al79nqss7x *   node01     Ready    Active         Leader           18.09.0
    olxd9qi9vs5dzes9iicl170ob     node02     Down     Active                          18.09.0
    i1uee68sxt2puzd5dx3qnm9ck     node03     Down     Active                          18.09.0
    # The STATUS of node02 and node03 is now Down
    [root@node01 ~]# docker node rm node02
    [root@node01 ~]# docker node rm node03
    # node01 removes node02 and node03 from the cluster's node list
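
The cleanup at the end of this step removes nodes that have left and now show STATUS Down, and it can be automated. The sketch below filters docker node ls --format '{{.ID}} {{.Hostname}} {{.Status}}' with awk. It removes nodes by ID rather than host name, in case an old entry shares a name with a node that has rejoined. down_node_ids, remove_down_nodes and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# down_node_ids: read "ID HOSTNAME STATUS" lines on stdin, print IDs of nodes that are Down
down_node_ids() {
    awk '$3 == "Down" { print $1 }'
}

# remove_down_nodes: run "docker node rm" for every node the manager reports as Down
remove_down_nodes() {
    "$DOCKER" node ls --format '{{.ID}} {{.Hostname}} {{.Status}}' | down_node_ids |
        while read -r id; do
            "$DOCKER" node rm "$id"
        done
}
```

Run remove_down_nodes on a manager such as node01.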

The commands above add nodes to the cluster and remove them, but the nodes join with the worker role. To have a node join the cluster as a manager, use the following commands:

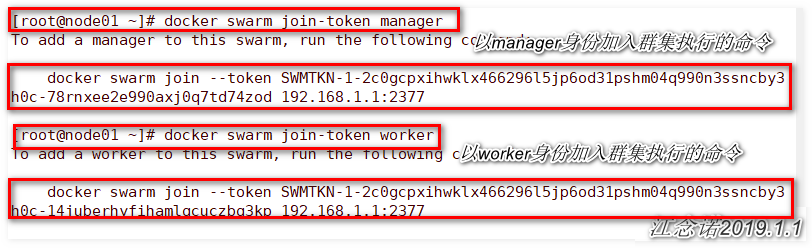

    [root@node01 ~]# docker swarm join-token manager    # print the command for joining the cluster as a manager
    [root@node01 ~]# docker swarm join-token worker     # print the command for joining the cluster as a worker
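
When you add many nodes, it helps to print the complete join command for a role. docker swarm join-token -q prints only the token. The sketch assumes the manager listens on the default management port 2377, as in the commands in this article. join_command and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# join_command ROLE MANAGER_IP: print the "docker swarm join" line for ROLE
join_command() {
    role=$1
    addr=$2
    case $role in
        worker|manager) ;;
        *) echo "role must be worker or manager" >&2; return 2 ;;
    esac
    token=$("$DOCKER" swarm join-token -q "$role") || return 1
    echo "docker swarm join --token $token $addr:2377"
}
```

On node01, join_command manager 192.168.1.1 prints a line of the same form as the one run on node02 and node03. If a token leaks, docker swarm join-token --rotate manager replaces it. Nodes that have already joined are not affected.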

As shown in the picture:

    ################### On node02 ###################
    [root@node02 ~]# docker swarm join --token SWMTKN-1-2c0gcpxihwklx466296l5jp6od31pshm04q990n3ssncby3h0c-78rnxee2e990axj0q7td74zod 192.168.1.1:2377
    ################### On node03 ###################
    [root@node03 ~]# docker swarm join --token SWMTKN-1-2c0gcpxihwklx466296l5jp6od31pshm04q990n3ssncby3h0c-78rnxee2e990axj0q7td74zod 192.168.1.1:2377
    # node02 and node03 join the cluster as managers
    ################### On node01 ###################
    [root@node01 ~]# docker node ls        # view node details
    ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    exr8uoww0eih43iujqz5cbv6q *   node01     Ready    Active         Leader           18.09.0
    r35f48huyw5hvnkuzatrftj1r     node02     Ready    Active         Reachable        18.09.0
    gsg1irl1bywgdsmfawi9rna7p     node03     Ready    Active         Reachable        18.09.0
    # The MANAGER STATUS column shows that node02 and node03 are now managers (Reachable)
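
A script can also check the MANAGER STATUS column, for example to confirm that a majority of managers is reachable. This sketch reads docker node ls --format '{{.Hostname}} {{.ManagerStatus}}' output. Workers print an empty status, so only lines with two fields count as managers. The function names are illustrative.

```shell
# reachable_managers: count lines whose manager status is Leader or Reachable
reachable_managers() {
    awk '$2 == "Leader" || $2 == "Reachable" { n++ } END { print n + 0 }'
}

# has_quorum: succeed if a majority of the listed managers is Leader or Reachable
# (managers marked Unreachable count toward the total but not toward the majority)
has_quorum() {
    awk '
        NF >= 2 { total++ }
        $2 == "Leader" || $2 == "Reachable" { up++ }
        END { exit !(total > 0 && up >= int(total / 2) + 1) }'
}
```

Usage on a manager: docker node ls --format '{{.Hostname}} {{.ManagerStatus}}' | has_quorum && echo "quorum OK".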

You can choose the manager or worker role when a node joins the cluster, but you can also change a node's role later with the following commands:

    [root@node01 ~]# docker node demote node02
    [root@node01 ~]# docker node demote node03
    # Demote node02 and node03 to workers
    [root@node01 ~]# docker node promote node02
    [root@node01 ~]# docker node promote node03
    # Promote node02 and node03 back to managers
    # Verify the result yourself with docker node ls
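
The verification step can be scripted. The sketch reads a node's configured role from docker node inspect (the .Spec.Role field, which is "manager" or "worker") and promotes or demotes the node only when needed. node_role, ensure_role and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# node_role NODE: print the node's configured role ("manager" or "worker")
node_role() {
    "$DOCKER" node inspect --format '{{.Spec.Role}}' "$1"
}

# ensure_role NODE ROLE: promote or demote NODE so its role matches ROLE; no-op if it already does
ensure_role() {
    node=$1
    want=$2
    current=$(node_role "$node") || return 1
    if [ "$current" = "$want" ]; then
        return 0
    fi
    case $want in
        manager) "$DOCKER" node promote "$node" ;;
        worker)  "$DOCKER" node demote "$node" ;;
        *) echo "unknown role: $want" >&2; return 2 ;;
    esac
}
```

For example, ensure_role node02 worker on node01 demotes node02 only if it is still a manager.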

5. Deploy a graphical UI

The graphical UI is deployed on node01:

    [root@node01 ~]# docker run -d -p 8080:8080 -e HOST=192.168.1.1 -e PORT=8080 -v /var/run/docker.sock:/var/run/docker.sock --name visualizer dockersamples/visualizer

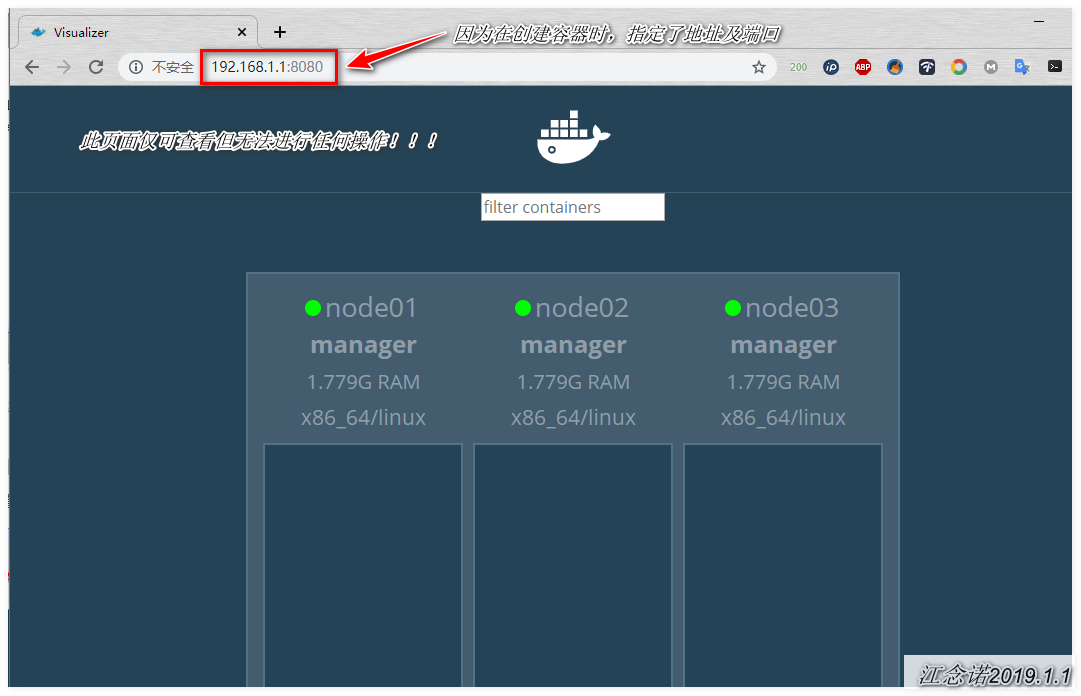

Open http://192.168.1.1:8080 in a browser:

If the page loads normally, the graphical UI has been deployed successfully.
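
The same check can be run from a shell, which is handy right after docker run while the container is still starting. The sketch assumes curl is installed. wait_for_url is a hypothetical helper that retries once per second until the URL answers with a non-error HTTP status.

```shell
# wait_for_url URL [TRIES]: succeed once URL answers with a non-error HTTP status,
# retrying once per second; fail after TRIES attempts (default 30)
wait_for_url() {
    url=$1
    tries=${2:-30}
    i=1
    while [ "$i" -le "$tries" ]; do
        # -f: treat HTTP errors as failures, -sS: quiet except for errors
        if curl -fsS -o /dev/null "$url"; then
            return 0
        fi
        if [ "$i" -lt "$tries" ]; then
            sleep 1
        fi
        i=$((i + 1))
    done
    echo "no response from $url after $tries attempts" >&2
    return 1
}
```

For this setup: wait_for_url http://192.168.1.1:8080 && echo "visualizer is up".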

6. Configure services in the Docker Swarm cluster

On node01 (a manager), create a service that runs six replicas. Services can only be created from a manager node, but their replicas are scheduled across all available nodes in the cluster. The command is as follows:

    [root@node01 ~]# docker service create --replicas 6 --name web -p 80:80 nginx
    # --replicas: the number of replicas; roughly speaking, each replica is one container

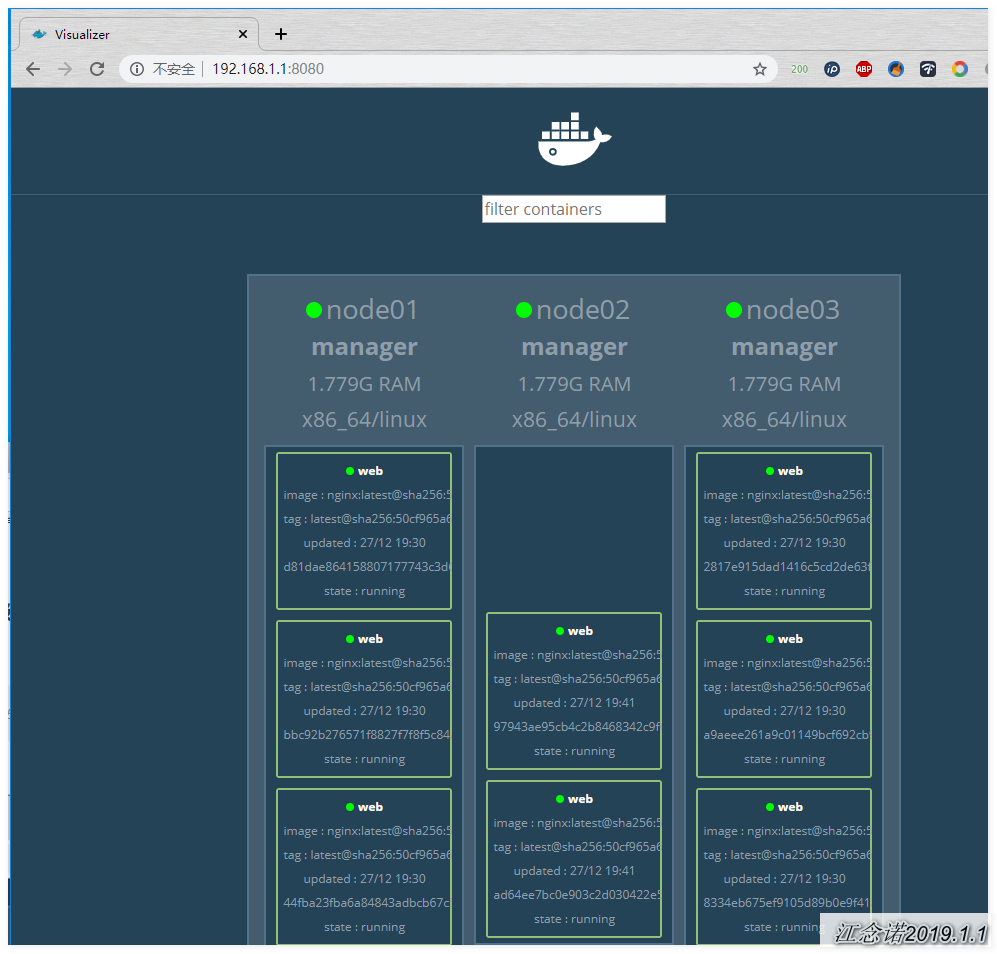

Once the containers are running, you can check them on the visualizer page, as shown in the figure:

Note: if the other two nodes do not have the image locally, by default they pull it from Docker Hub automatically.

    [root@node01 ~]# docker service ls        # list the created services
    ID             NAME   MODE         REPLICAS   IMAGE          PORTS
    nbfzxltrcbsk   web    replicated   6/6        nginx:latest   *:80->80/tcp
    [root@node01 ~]# docker service ps web    # show which nodes the service's containers run on
    ID             NAME    IMAGE          NODE     DESIRED STATE   CURRENT STATE           ERROR   PORTS
    v7pmu1waa2ua   web.1   nginx:latest   node01   Running         Running 6 minutes ago
    l112ggmp7lxn   web.2   nginx:latest   node02   Running         Running 5 minutes ago
    prw6hyizltmx   web.3   nginx:latest   node03   Running         Running 5 minutes ago
    vg38mso99cm1   web.4   nginx:latest   node01   Running         Running 6 minutes ago
    v1mb0mvtz55m   web.5   nginx:latest   node02   Running         Running 5 minutes ago
    80zq8f8252bj   web.6   nginx:latest   node03   Running         Running 5 minutes ago
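
When a service has many replicas, a per-node count is easier to read than the full docker service ps table. The sketch asks only for tasks whose desired state is running and prints one node name per task. It then counts tasks per node with sort and uniq -c. tasks_per_node, service_spread and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# tasks_per_node: read one node name per line on stdin, print "NODE COUNT" sorted by node
tasks_per_node() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# service_spread SERVICE: per-node count of SERVICE's tasks that should be running
service_spread() {
    "$DOCKER" service ps "$1" --filter desired-state=running --format '{{.Node}}' | tasks_per_node
}
```

For the output above, service_spread web would report two tasks on each of node01, node02 and node03.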

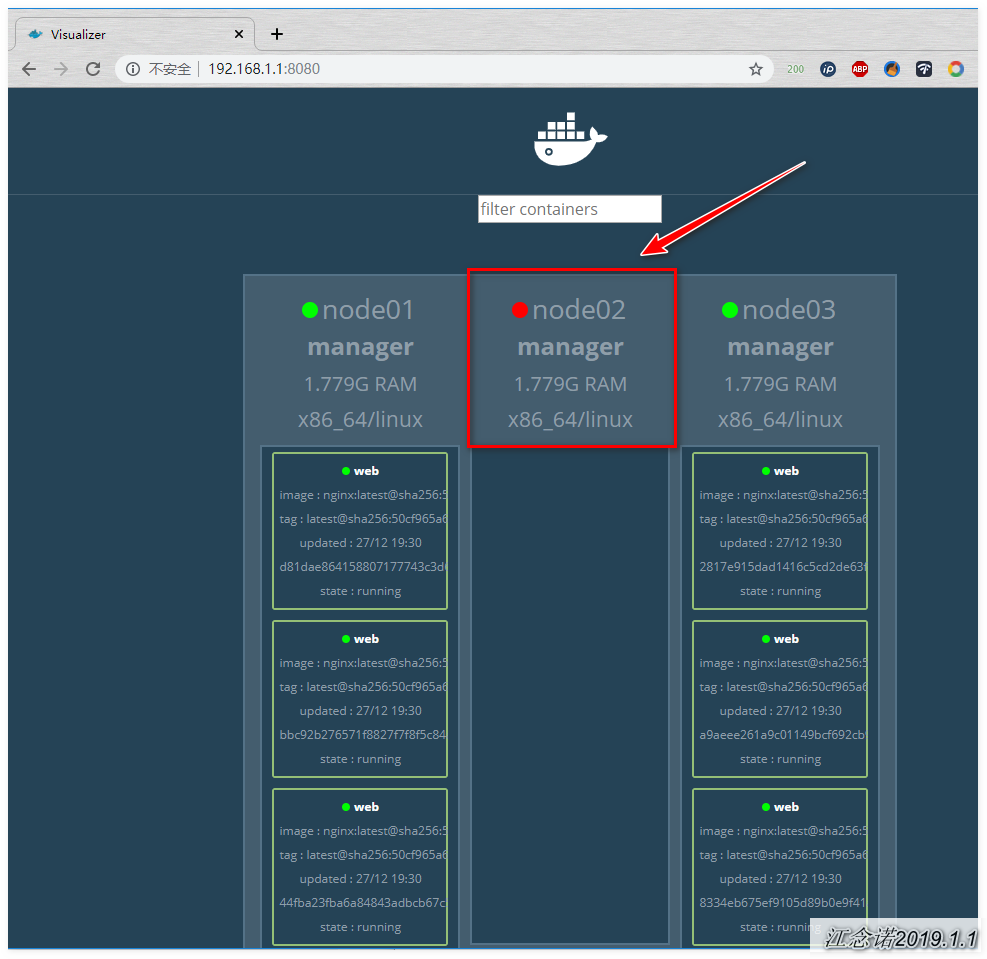

If node02 or node03 goes down, the service does not go down with it. Swarm automatically reschedules the affected containers onto the nodes that are still healthy.

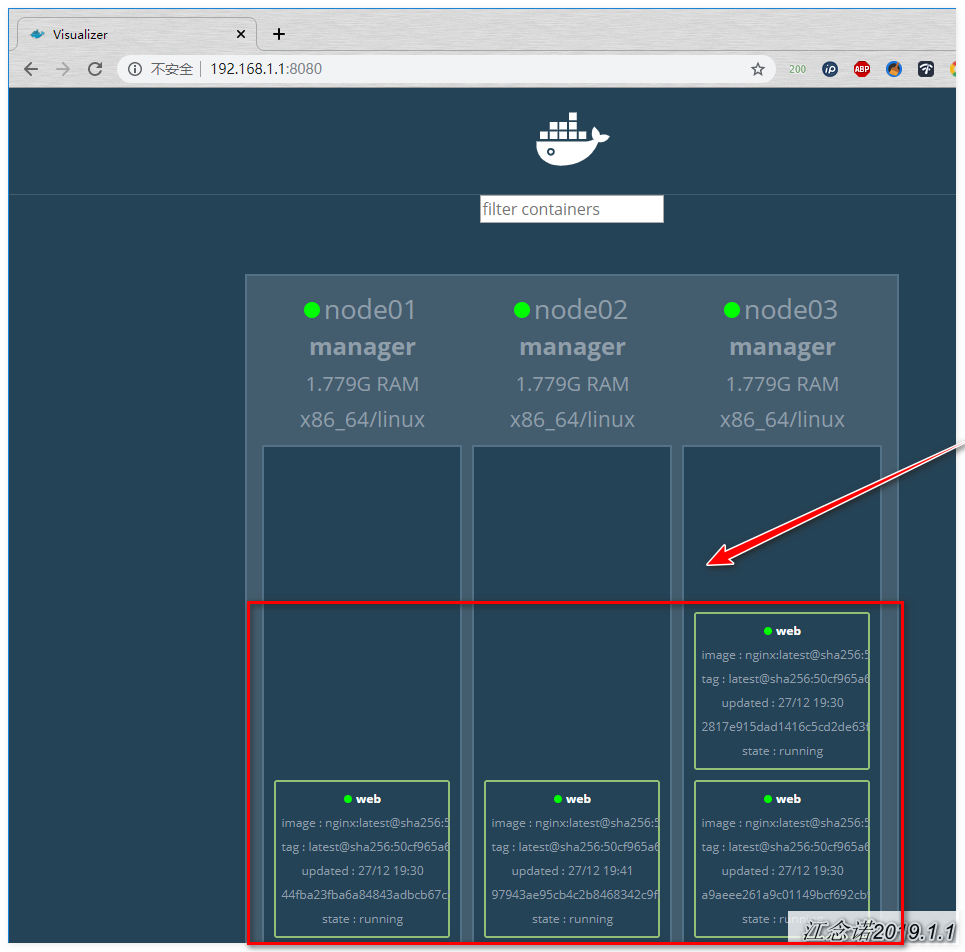

Simulate a node02 failure. The web page then looks like this:

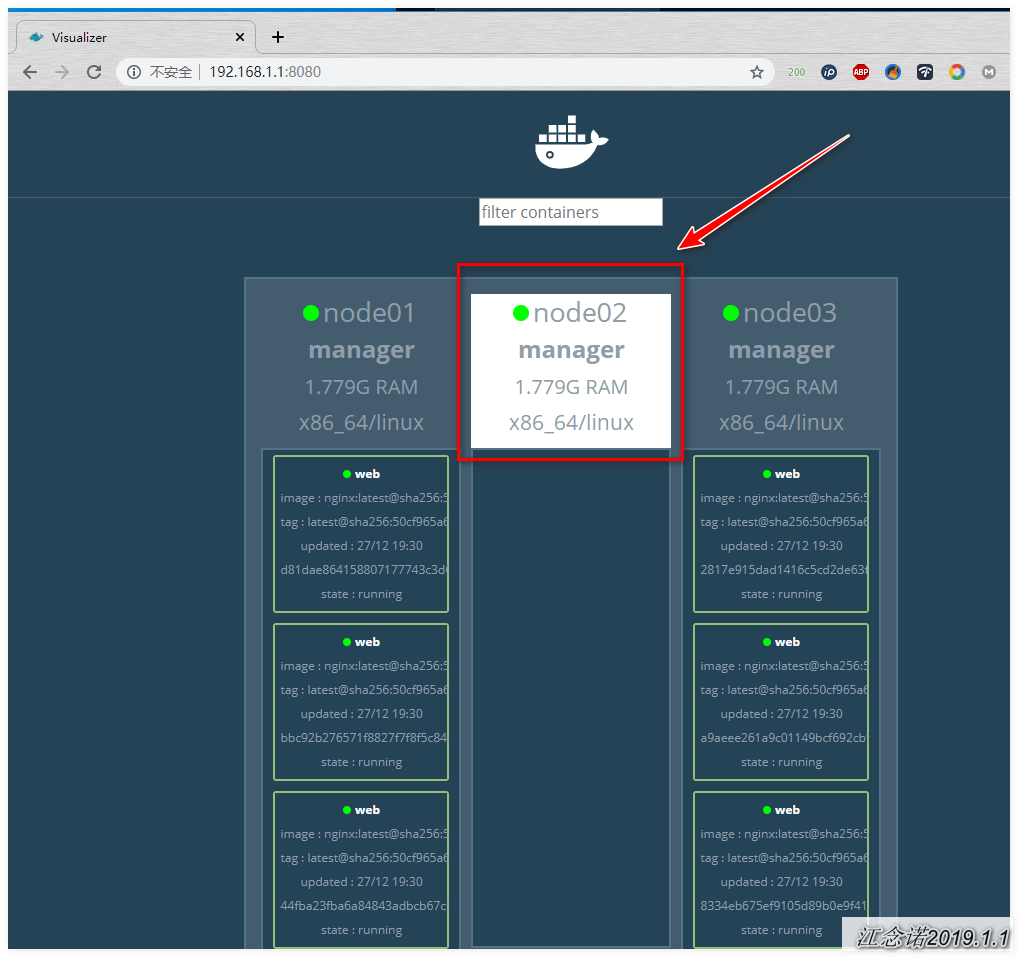

Restore node02. The web page then looks like this:

Even after node02 recovers, Swarm does not assign any of the service's tasks back to it.

Conclusion: if a node fails, its tasks are automatically moved to the available nodes. When the failed node recovers, Swarm by default does not move tasks back to it.
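
The Docker documentation describes docker service update --force as a way to make the scheduler redistribute a service's tasks. Be aware that this restarts the tasks. The sketch below only does that when some Ready and Active node runs none of the service's tasks. It is meaningful only when the service has at least as many replicas as nodes. idle_nodes, rebalance_if_idle and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# idle_nodes "NODES" "TASK_NODES": print every node in NODES that appears nowhere
# in TASK_NODES (both arguments are whitespace-separated lists)
idle_nodes() {
    for node in $1; do
        printf '%s\n' $2 | grep -qxF "$node" || echo "$node"
    done
}

# rebalance_if_idle SERVICE: if a Ready/Active node runs none of SERVICE's tasks,
# force a rolling update so the scheduler redistributes them (tasks are restarted)
rebalance_if_idle() {
    service=$1
    nodes=$("$DOCKER" node ls --format '{{.Hostname}} {{.Status}} {{.Availability}}' |
        awk '$2 == "Ready" && $3 == "Active" { print $1 }')
    tasks=$("$DOCKER" service ps "$service" --filter desired-state=running --format '{{.Node}}')
    idle=$(idle_nodes "$nodes" "$tasks")
    [ -n "$idle" ] || return 0
    echo "idle node(s): $idle; forcing an update of $service" >&2
    "$DOCKER" service update --force "$service"
}
```

After node02 comes back, running rebalance_if_idle web on node01 would detect node02 as idle and trigger the forced update.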

7. Scale services out and in

Scaling out: adding replicas to a service;

Scaling in: removing replicas from a service;

The following steps scale the service from the environment above out and in.

(1) Scaling out

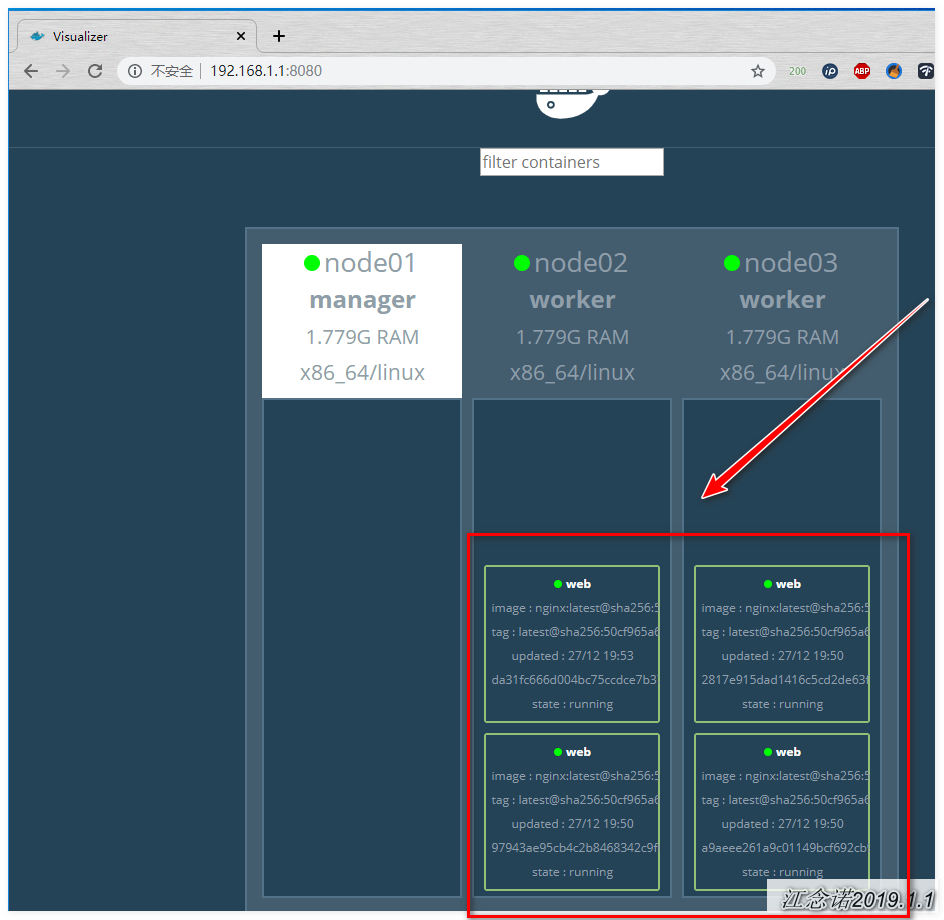

    [root@node01 ~]# docker service scale web=8    # the service had 6 replicas; now it has 8

The web page is as follows:

The Swarm scheduler decides which nodes receive the new replicas. By default, it tries to spread tasks evenly across the available nodes.

(2) Scaling in
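
As a rough mental model of the default spread behaviour, each new task goes to the eligible node that currently runs the fewest tasks. The awk sketch below simulates only that rule, breaking ties in favour of the node listed first. The real scheduler also considers constraints, resource reservations and node availability, so actual placements can differ. spread is a hypothetical helper name.

```shell
# spread REPLICAS NODE...: place REPLICAS tasks one at a time on the node with the
# fewest tasks so far (ties go to the node listed first), then print "NODE COUNT"
spread() {
    replicas=$1
    shift
    printf '%s\n' "$@" | awk -v r="$replicas" '
        { name[NR] = $1; count[NR] = 0; n = NR }
        END {
            for (t = 1; t <= r; t++) {
                best = 1
                for (i = 2; i <= n; i++) if (count[i] < count[best]) best = i
                count[best]++
            }
            for (i = 1; i <= n; i++) print name[i], count[i]
        }'
}
```

For example, spread 8 node01 node02 node03 places 3, 3 and 2 tasks on the three nodes.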

    [root@node01 ~]# docker service scale web=4    # the service had 8 replicas; now it has 4

The web page is as follows:

(3) Prevent a node from running service tasks

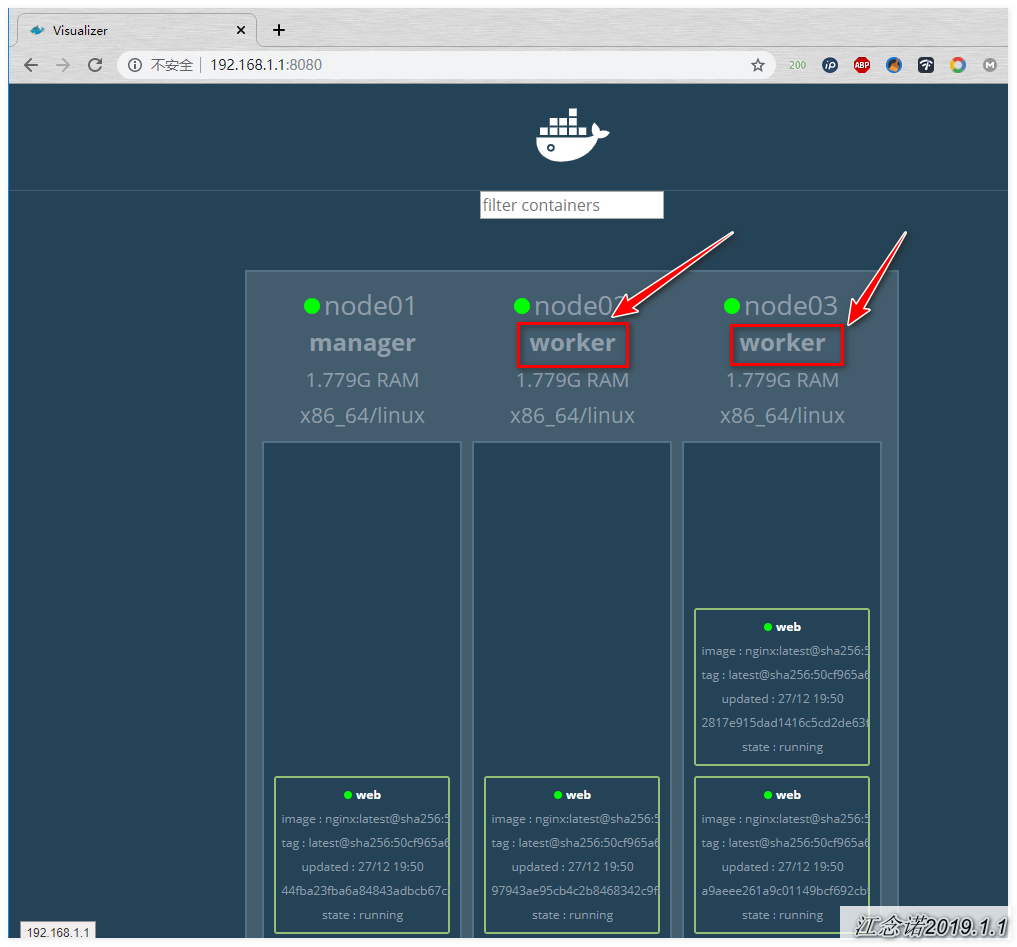

In the current environment, all three nodes are managers. By default, managers run tasks just like workers, so whether the cluster has three managers or one manager and two workers, every node runs tasks. First, demote node02 and node03 to workers:

    [root@node01 ~]# docker node demote node02
    [root@node01 ~]# docker node demote node03

As shown in the picture:

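You can then prevent a node from running service tasks as follows:

    [root@node01 ~]# docker node update --availability drain node01
    # node01 accepts no new tasks; the service tasks already running on it are stopped and
    # rescheduled onto other active nodes (standalone containers started with docker run,
    # such as the visualizer, keep running)
    # --availability accepts three values:
    #   active: the node accepts new tasks (normal state)
    #   pause:  the node accepts no new tasks, but its running tasks keep running
    #   drain:  the node accepts no new tasks, and its running tasks are moved to other nodes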
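
The availability switch can be wrapped so that typos are rejected and the script waits until the drain has taken effect. The sketch polls docker node ps with a desired-state=running filter until no task on the node is still meant to run. set_availability, wait_drained and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# set_availability NODE MODE: MODE must be active, pause or drain
set_availability() {
    case $2 in
        active|pause|drain) "$DOCKER" node update --availability "$2" "$1" ;;
        *) echo "availability must be active, pause or drain" >&2; return 2 ;;
    esac
}

# wait_drained NODE [TRIES]: poll once per second until no task on NODE is still
# desired to be running; give up after TRIES attempts (default 30)
wait_drained() {
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        if [ -z "$("$DOCKER" node ps "$1" --filter desired-state=running -q)" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    echo "$1 still has tasks desired to run" >&2
    return 1
}
```

Typical use on a manager: set_availability node01 drain && wait_drained node01. Later, set_availability node01 active lets the node receive tasks again.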

The web page is as follows:

    [root@node01 ~]# docker node update --availability drain node02
    # node02 is drained as well: it receives no new tasks, and its service tasks move to the remaining active node

As shown in the picture:

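Note that a node's availability can only be changed from a manager node, but draining applies to managers and workers alike. node02, for example, is a worker at this point.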

8. Common Docker Swarm cluster commands

    [root@node02 ~]# docker swarm leave    # run on the node that wants to leave the swarm (like resigning); a manager should be demoted first
    [root@node01 ~]# docker node rm <node name>    # a manager removes a node that has left from the node list (like dismissal)
    [root@node01 ~]# docker node promote <node name>    # promote a node to manager
    [root@node01 ~]# docker node demote <node name>    # demote a manager to worker
    [root@node01 ~]# docker node ls    # list the nodes in the swarm (only on a manager)
    [root@node01 ~]# docker node update --availability drain <node name>    # stop running tasks on a node
    [root@node01 ~]# docker swarm join-token worker    # print the join command and token (use worker or manager)
    [root@node01 ~]# docker service scale web=4    # more replicas than now scales out, fewer scales in
    [root@node01 ~]# docker service ls    # list the created services
    [root@node01 ~]# docker service ps <service name>    # show which nodes the service's containers run on
    [root@node01 ~]# docker service create --replicas 6 --name web -p 80:80 nginx    # create a service with the given number of replicas
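
docker swarm leave warns on a manager node, because a manager leaving can cost the swarm its quorum. The safer order is to demote the node from another manager first. The sketch refuses to leave while docker info reports Swarm.ControlAvailable as true. leave_swarm and the DOCKER override are hypothetical names.

```shell
# DOCKER can be overridden (for example with a stub) for dry runs; defaults to the real CLI
DOCKER=${DOCKER:-docker}

# leave_swarm: leave the swarm from this node, but refuse on a manager
leave_swarm() {
    if [ "$("$DOCKER" info --format '{{.Swarm.ControlAvailable}}')" = "true" ]; then
        echo "this node is a manager: run 'docker node demote <name>' on another manager first" >&2
        return 1
    fi
    "$DOCKER" swarm leave
}
```

The only manager of a swarm cannot be demoted. Dissolving a single-manager swarm therefore still requires docker swarm leave --force on that node.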

9. Docker Swarm summary

- The host names of the nodes in the cluster must be unique, and every node must be able to resolve the others' host names;

- Every node in the cluster can be a manager, but not every node can be a worker: at least one manager is required;

- When a service specifies an image that a node does not have locally, the node pulls it automatically;

- While the cluster is healthy, if a Docker host running containers goes down, all of its containers are moved to other healthy nodes. Even after the failed host recovers, those containers are not moved back to it;

————————————Thank you for reading——————————