1. Overview

HashMap Source Code Detailed Analysis (JDK 1.8): https://segmentfault.com/a/1190000012926722

In Java 7, ConcurrentHashMap is essentially an array of Segments. Each Segment extends ReentrantLock, and every operation that needs a lock locks only the Segment it touches. Because each Segment is thread-safe, the map as a whole is thread-safe, which is why this design is often described as segmented locking.

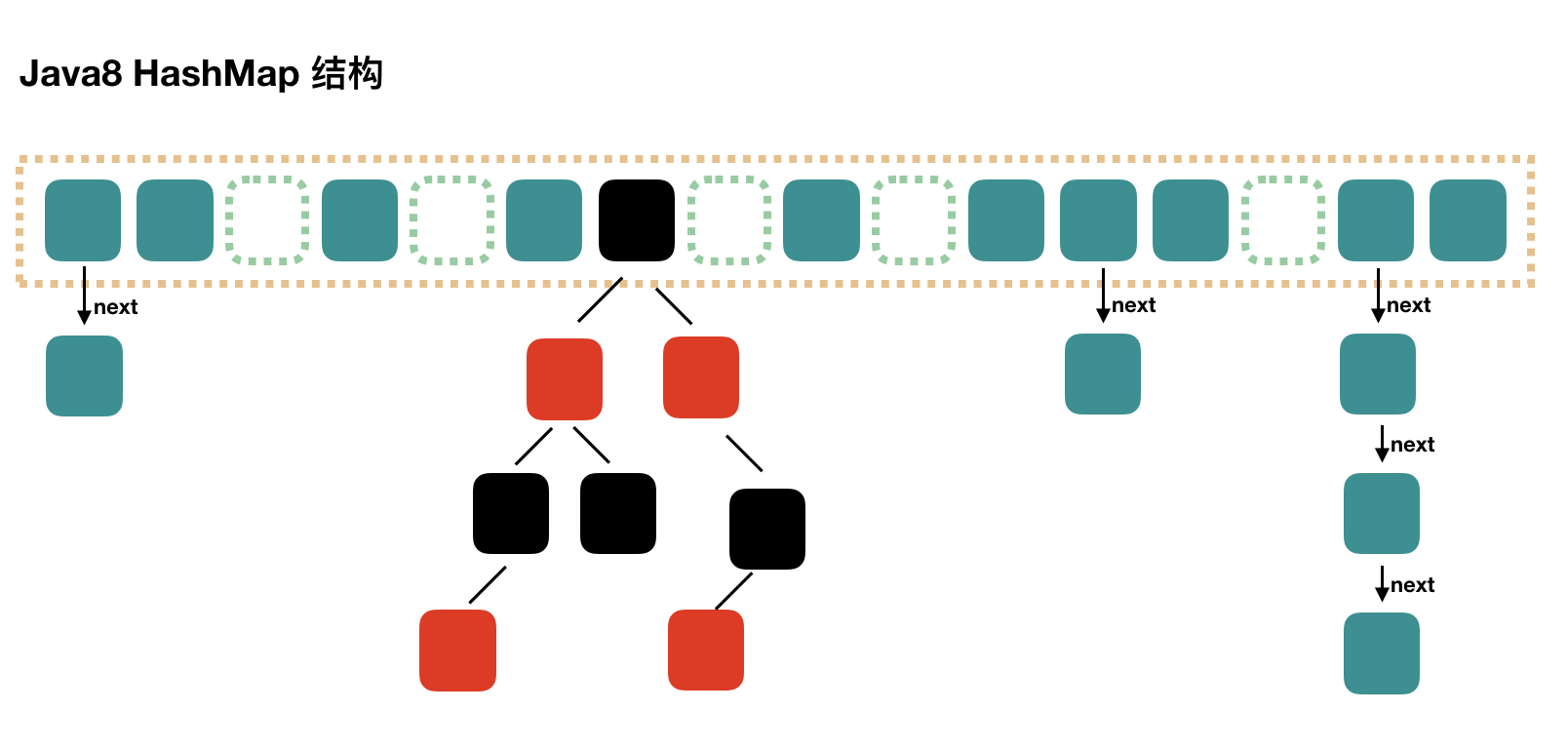

Java 8 changed ConcurrentHashMap substantially. Structurally it is basically the same as Java 8's HashMap (an array of buckets holding linked lists or red-black trees), but because it must be thread-safe, its source code is somewhat more complex.

2. Source code analysis

ConcurrentHashMap makes heavy use of bit operations:

- The signed right shift num >> 1 is equivalent to num / 2 for non-negative num.

- The unsigned right shift >>> ignores the sign bit and fills the vacated high bits with 0.

- The left shift num << 1 is equivalent to num * 2 (as long as it does not overflow).
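The three shift operators are easy to check directly. The sketch below is a small standalone demo (the class and method names are made up for illustration). Note that >> 1 matches division by 2 only for non-negative numbers: >> rounds toward negative infinity, while / truncates toward zero.

```java
public class ShiftDemo {
    static int signedHalf(int x)   { return x >> 1; }  // arithmetic shift: the sign bit is copied in
    static int unsignedHalf(int x) { return x >>> 1; } // logical shift: 0 is shifted in
    static int doubled(int x)      { return x << 1; }  // may overflow for large x

    public static void main(String[] args) {
        System.out.println(signedHalf(20));    // 10
        System.out.println(signedHalf(-7));    // -4 (rounds toward negative infinity)
        System.out.println(-7 / 2);            // -3 (integer division truncates toward zero)
        System.out.println(unsignedHalf(-20)); // 2147483638 (the sign bit is now 0)
        System.out.println(doubled(20));       // 40
    }
}
```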

3.1 Construction Method

3.1.1 Constructor Analysis

// The no-arg constructor does nothing

public ConcurrentHashMap() {

}

// Constructor taking an initial capacity

public ConcurrentHashMap(int initialCapacity) {

if (initialCapacity < 0)

throw new IllegalArgumentException();

int cap = ((initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ?

MAXIMUM_CAPACITY :

tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1));

this.sizeCtl = cap;

}

This constructor is somewhat interesting. From the given initial capacity it computes sizeCtl = 1.5 * initialCapacity + 1, rounded up to the nearest power of 2. If initialCapacity is 10, sizeCtl is 16; if initialCapacity is 11, sizeCtl is 32.
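To see where 16 and 32 come from, here is a standalone sketch of the capacity computation. tableSizeFor below is written to match the JDK 8 helper of the same name as we understand it, and initialSizeCtl is a hypothetical wrapper around the constructor's arithmetic; neither is the JDK class itself.

```java
public class CapacityDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30; // JDK 8 value

    // Smallest power of two >= c, by smearing the highest 1 bit of (c - 1) downward
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Hypothetical helper: the arithmetic of the ConcurrentHashMap(int) constructor above
    static int initialSizeCtl(int initialCapacity) {
        if (initialCapacity < 0) throw new IllegalArgumentException();
        return (initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY
                : tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1);
    }

    public static void main(String[] args) {
        System.out.println(initialSizeCtl(10)); // 10 + 5 + 1 = 16, already a power of 2: 16
        System.out.println(initialSizeCtl(11)); // 11 + 5 + 1 = 17, rounded up: 32
        System.out.println(initialSizeCtl(0));  // 0 + 0 + 1 = 1: 1
    }
}
```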

HashMap uses tableSizeFor(initialCapacity) directly. It is not clear to me why ConcurrentHashMap uses 1.5 * initialCapacity instead. The + 1 is presumably there to handle initialCapacity = 0.

sizeCtl is used in several scenarios. The first is ConcurrentHashMap initialization: sizeCtl = 0 (that is, the no-arg constructor was used) means the default initial size is used; otherwise sizeCtl holds the custom capacity.

3.1.2 put process analysis

public V put(K key, V value) {

return putVal(key, value, false);

}

/** Implementation for put and putIfAbsent */

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

// 1. Compute the hash: (h ^ (h >>> 16)) & HASH_BITS

int hash = spread(key.hashCode());

int binCount = 0;

// 2. Spin (retry) until the new element has been added to the map

for (Node<K,V>[] tab = table;;) {

Node<K,V> f; int n, i, fh;

// 2.1 If the table is empty, initialize it (key question: how is initialization kept thread-safe?)

if (tab == null || (n = tab.length) == 0)

tab = initTable();

// 2.2 The slot (bucket) for this hash is empty: put the new node in directly.

// The table field is volatile, but that does not make its elements volatile, so tabAt reads them with U.getObjectVolatile

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

// CAS the new node into the slot; if that succeeds, we are done.

// If the CAS fails, another thread got there first; loop again and take branch 2.3 or 2.4.

if (casTabAt(tab, i, null,

new Node<K,V>(hash, key, value, null)))

break; // no lock when adding to empty bin

}

// 2.3 f.hash == MOVED means the table is being resized: help with the transfer first, then add the value.

else if ((fh = f.hash) == MOVED)

tab = helpTransfer(tab, f);

// 2.4 Lock the head node of the bin; the rest is similar to HashMap.

else {

V oldVal = null;

// The following operations are thread-safe

synchronized (f) {

// Once f is locked, operations on f are thread-safe, but tab itself is not locked:

// tab[i] may have changed in the meantime, so check that it is still f.

if (tabAt(tab, i) == f) {

// 2.4.1 A head-node hash >= 0 means the bin is a linked list

if (fh >= 0) {

binCount = 1;

for (Node<K,V> e = f;; ++binCount) {

K ek;

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

oldVal = e.val;

if (!onlyIfAbsent)

e.val = value;

break;

}

Node<K,V> pred = e;

if ((e = e.next) == null) {

pred.next = new Node<K,V>(hash, key, value, null);

break;

}

}

}

// 2.4.2 The bin is a red-black tree (TreeBin)

else if (f instanceof TreeBin) {

Node<K,V> p;

binCount = 2;

if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key, value)) != null) {

oldVal = p.val;

if (!onlyIfAbsent)

p.val = value;

}

}

}

}

// For a list, binCount is the number of nodes walked past; for a tree it is fixed at 2, so treeifyBin is never triggered.

if (binCount != 0) {

// Decide whether to convert the list to a red-black tree; the threshold is 8, as in HashMap.

if (binCount >= TREEIFY_THRESHOLD)

// Unlike HashMap, this does not necessarily convert to a red-black tree:

// if the table length is below 64, the table is resized instead.

treeifyBin(tab, i);

if (oldVal != null)

return oldVal;

break;

}

}

}

// 3. Increment the element count and check whether a resize is needed (how is this kept thread-safe?)

addCount(1L, binCount);

return null;

}

That completes the main put flow, but at least three questions remain: initialization, resizing, and helping with data migration. We cover them below.
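The tail of putVal together with treeifyBin boils down to a small decision, sketched below. afterInsert and the Action enum are hypothetical names; the thresholds 8 and 64 are the JDK 8 values of TREEIFY_THRESHOLD and MIN_TREEIFY_CAPACITY. Here binCount has putVal's meaning for a list bin: the number of nodes walked past before the new node was appended.

```java
public class TreeifyDecision {
    // JDK 8 values of the ConcurrentHashMap constants
    static final int TREEIFY_THRESHOLD = 8;
    static final int MIN_TREEIFY_CAPACITY = 64;

    enum Action { NONE, RESIZE, TREEIFY }

    // Hypothetical helper: what happens after putVal adds to a list bin
    static Action afterInsert(int binCount, int tableLength) {
        if (binCount < TREEIFY_THRESHOLD)
            return Action.NONE;     // list is still short: nothing to do
        if (tableLength < MIN_TREEIFY_CAPACITY)
            return Action.RESIZE;   // treeifyBin calls tryPresize(n << 1) instead
        return Action.TREEIFY;      // treeifyBin replaces the list with a TreeBin
    }

    public static void main(String[] args) {
        System.out.println(afterInsert(3, 16)); // NONE
        System.out.println(afterInsert(8, 16)); // RESIZE
        System.out.println(afterInsert(8, 64)); // TREEIFY
    }
}
```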

// In short, the goal is to spread nodes more evenly across the table

static final int spread(int h) {

return (h ^ (h >>> 16)) & HASH_BITS;

}

h is the key's hashCode. A node's slot is hash % length, but when the table length is a power of two (2^n), the cheaper bit operation hash & (length - 1) gives the same result for non-negative hashes. To spread hashes more uniformly, the high 16 bits are XORed into the low 16 bits, so the low bits that select the slot are less likely to repeat. Note that a hashCode may be negative (more on this: "hashcode may be negative"), and negative hash values have a special meaning in ConcurrentHashMap (for example, MOVED = -1 marks a ForwardingNode and TREEBIN = -2 marks a tree bin). To guarantee that the computed hash is non-negative, the sign bit is cleared with & HASH_BITS; on its own, clearing the sign bit looks like this:

hash = key.hashCode() & Integer.MAX_VALUE;
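A standalone sketch of spread and the slot computation (SpreadDemo and indexFor are illustrative names; HASH_BITS = 0x7fffffff as in JDK 8). The two sample hash codes differ only in their high 16 bits, so without spreading they land in the same slot of a 16-slot table.

```java
public class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // JDK 8 value

    // Same formula as spread() above: fold the high 16 bits into the low 16, then clear the sign bit
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // Slot index for a table whose length n is a power of two
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int h1 = 0x00010001, h2 = 0x00020001; // differ only in the high 16 bits
        System.out.println(indexFor(h1, 16) + " " + indexFor(h2, 16));                 // 1 1: collision
        System.out.println(indexFor(spread(h1), 16) + " " + indexFor(spread(h2), 16)); // 0 3
        System.out.println(spread(-123) >= 0);                                         // true
        System.out.println(indexFor(1000, 64) == 1000 % 64);                           // true
    }
}
```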

3.1.2 Array initialization initTable

The concurrency problem in the initialization method is controlled by a CAS operation on sizeCtl.

private final Node<K,V>[] initTable() {

Node<K,V>[] tab; int sc;

while ((tab = table) == null || tab.length == 0) {

if ((sc = sizeCtl) < 0)

Thread.yield(); // lost initialization race; just spin

// CAS sizeCtl to -1 to claim initialization; sizeCtl = -1 means the table is being initialized

else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if ((tab = table) == null || tab.length == 0) {

// sc == 0 (no-arg constructor) means the default size; otherwise use the custom capacity

int n = (sc > 0) ? sc : DEFAULT_CAPACITY;

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = tab = nt;

// n - n/4, i.e. 0.75 * n: the same threshold as HashMap, computed with bit operations

sc = n - (n >>> 2);

}

} finally {

sizeCtl = sc;

}

break;

}

}

return tab;

}

The sizeCtl field came up earlier, in the constructor. initTable shows more of its uses; taken together:

- First use of sizeCtl, before the table is initialized: sizeCtl = 0 (the no-arg constructor) means the default initial size; otherwise it holds the custom initial capacity.

- Second use, during initialization: sizeCtl = -1 means the table is being initialized.

- Third use, after initialization: sizeCtl > 0 is the resize threshold.
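The three sizeCtl states and the CAS-based initialization can be reproduced outside the JDK. The sketch below is hypothetical: it replaces Unsafe with java.util.concurrent.atomic.AtomicInteger and stores plain Objects, but follows the same steps as initTable: spin while sizeCtl < 0, claim initialization by CASing sizeCtl to -1, re-check the table, then publish the threshold.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical class: mirrors initTable's use of sizeCtl, with AtomicInteger instead of Unsafe.
public class LazyTable {
    static final int DEFAULT_CAPACITY = 16;

    private volatile Object[] table;
    // Before init: 0 = default size, > 0 = custom capacity; -1 = initializing; after init: threshold
    private final AtomicInteger sizeCtl;

    LazyTable(int initialCapacity) {
        sizeCtl = new AtomicInteger(initialCapacity);
    }

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null || tab.length == 0) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                              // another thread is initializing: spin
            } else if (sizeCtl.compareAndSet(sc, -1)) {      // claim the right to initialize
                try {
                    if ((tab = table) == null || tab.length == 0) { // re-check after winning the CAS
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        table = tab = new Object[n];
                        sc = n - (n >>> 2);                  // threshold = 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                         // publish the threshold
                }
                break;
            }
        }
        return tab;
    }

    int getSizeCtl() {
        return sizeCtl.get();
    }

    public static void main(String[] args) throws InterruptedException {
        LazyTable t = new LazyTable(0);
        Object[][] seen = new Object[8][];
        Thread[] threads = new Thread[seen.length];
        for (int k = 0; k < threads.length; k++) {
            final int id = k;
            threads[k] = new Thread(() -> seen[id] = t.initTable());
            threads[k].start();
        }
        for (Thread th : threads) th.join();
        boolean shared = true;
        for (Object[] s : seen) shared &= (s == seen[0]);
        System.out.println(shared);         // true: every thread got the same table
        System.out.println(t.getSizeCtl()); // 12 = 16 - 16/4
    }
}
```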

3.1.3 Converting a Linked List to a Red-Black Tree: treeifyBin

As mentioned in the put analysis, treeifyBin does not necessarily convert the bin to a red-black tree; it may only resize the table. Let's look at the source.

// index is the index of the bin that may be converted to a red-black tree

private final void treeifyBin(Node<K,V>[] tab, int index) {

Node<K,V> b; int n, sc;

if (tab != null) {

// MIN_TREEIFY_CAPACITY = 64: when the table length is below 64, resize instead of treeifying

if ((n = tab.length) < MIN_TREEIFY_CAPACITY)

// Later we will analyze this method in detail.

tryPresize(n << 1);

// Lock the head node and convert the list into a red-black tree, as in HashMap

else if ((b = tabAt(tab, index)) != null && b.hash >= 0) {

synchronized (b) {

if (tabAt(tab, index) == b) {

TreeNode<K,V> hd = null, tl = null;

for (Node<K,V> e = b; e != null; e = e.next) {

TreeNode<K,V> p =

new TreeNode<K,V>(e.hash, e.key, e.val, null, null);

if ((p.prev = tl) == null)

hd = p;

else

tl.next = p;

tl = p;

}

setTabAt(tab, index, new TreeBin<K,V>(hd));

}

}

}

}

}

3.1.4 Resizing: tryPresize

If anything in the Java 8 ConcurrentHashMap source is hard, it is resizing and data migration. Note in advance that the actual data migration after the table grows is done by the transfer method. Each resize doubles the capacity: the new table is twice as long as the old one.

// tryPresize is called from putAll (size = number of incoming mappings) and from treeifyBin (size = n << 1)

private final void tryPresize(int size) {

// c: 1.5 * size + 1, rounded up to the nearest power of 2

int c = (size >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY :

tableSizeFor(size + (size >>> 1) + 1);

int sc;

while ((sc = sizeCtl) >= 0) {

Node<K,V>[] tab = table; int n;

// 1. The table has not been initialized yet: initialize it, much like initTable

// This can happen when putAll is called before the table has been created

if (tab == null || (n = tab.length) == 0) {

n = (sc > c) ? sc : c;

if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if (table == tab) {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = nt;

sc = n - (n >>> 2);

}

} finally {

sizeCtl = sc;

}

}

}

// 2. The threshold already covers the target size (c <= sc), or the table is at maximum capacity: no resize needed

else if (c <= sc || n >= MAXIMUM_CAPACITY)

break;

// 3. Actually start the resize

else if (tab == table) {

// Each resize produces a stamp (a kind of check mark) that distinguishes one resize from the next.

// It depends only on the current table length n; in binary it is 0000 0000 0000 0000 1000 0000 000x xxxx:

// the high 16 bits are 0, the highest of the low 16 bits is 1, and the lowest bits hold numberOfLeadingZeros(n)

int rs = resizeStamp(n);

// sc < 0 means another thread is resizing, so help it (sc = -1, initialization, was handled in branch 1)

// During a resize, the high 16 bits of sizeCtl hold the resize stamp and the low 16 bits hold (number of resizing threads + 1)

if (sc < 0) {

Node<K,V>[] nt;

// 3.1 The high 16 bits of sc differ from rs: sizeCtl has changed, so this resize is over

// 3.2 sc == rs + 1: the resize is over and no resizing threads are left

// The first thread sets sc = (rs << RESIZE_STAMP_SHIFT) + 2

// Each helper thread sets sc = sc + 1

// Each thread that finishes its part sets sc = sc - 1

// After the last thread finishes, sc == (rs << RESIZE_STAMP_SHIFT) + 1, so why compare with rs + 1 here?

// 3.3 sc == rs + MAX_RESIZERS: the maximum number of helper threads has been reached (same question as 3.2)

// (Both comparisons look like a JDK 8 bug; later JDKs shift rs left by RESIZE_STAMP_SHIFT before making them.)

// 3.4 nextTable == null: the resize has finished or has not started yet

// 3.5 transferIndex <= 0: every migration task has already been claimed

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||

transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))

transfer(tab, nt);

}

// Start the resize: the low 16 bits start at 2, i.e. one resizing thread + 1 (sizeCtl = -1 already means initialization)

else if (U.compareAndSwapInt(this, SIZECTL, sc, (rs << RESIZE_STAMP_SHIFT) + 2))

transfer(tab, null);

}

}

}

First, note the method name: tryPresize. The "try" signals that the resize may not actually happen. Indeed, the loop only runs while sizeCtl >= 0, that is, only when no other thread is initializing or resizing the table.

The actual resize is done by transfer, whose second parameter is the new, larger table. The thread that starts a resize passes null; a thread that joins a resize already in progress passes the existing nextTable.

sizeCtl

How sizeCtl changes before, during and after table initialization was covered above. Here we focus on its role during a resize, where sizeCtl is split into two parts: the high 16 bits hold the resize stamp (a check mark), and the low 16 bits hold the number of threads taking part in the resize, plus 1.

High 16 bits: the resize stamp (check mark)

static final int resizeStamp(int n) {

// Integer.numberOfLeadingZeros(n) is the number of 0 bits above the highest 1 bit of n in binary; it is at most 32.

// 1 << (RESIZE_STAMP_BITS - 1) is 0x8000 (RESIZE_STAMP_BITS is 16), i.e. it sets the highest of the low 16 bits to 1.

// The result has the form 0000 0000 0000 0000 1000 0000 000x xxxx

return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));

}

The value depends only on the length of the current table, and its format is always 0000 0000 0000 0000 1000 0000 000x xxxx. After rs << RESIZE_STAMP_SHIFT (16), the highest bit is 1, which means sizeCtl is negative during a resize.
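A quick check of the stamp layout, assuming the JDK 8 values RESIZE_STAMP_BITS = 16 and RESIZE_STAMP_SHIFT = 16 (the demo class itself is hypothetical). For n = 16, numberOfLeadingZeros(16) is 27, binary 11011.

```java
public class ResizeStampDemo {
    // JDK 8 values of these constants
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Same formula as resizeStamp() above
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    public static void main(String[] args) {
        int rs = resizeStamp(16);
        System.out.println(Integer.toBinaryString(rs));           // 1000000000011011
        int sc = (rs << RESIZE_STAMP_SHIFT) + 2;                  // written by the thread that starts the resize
        System.out.println(sc < 0);                               // true: the top bit is set
        System.out.println((sc >>> RESIZE_STAMP_SHIFT) == rs);    // true: the high 16 bits give back the stamp
        System.out.println(sc & 0xFFFF);                          // 2: resizing threads + 1
        System.out.println(Integer.toHexString(resizeStamp(32))); // 801a: another length, another stamp
    }
}
```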

Low 16 bits: number of resizing threads + 1

The thread that starts a resize sets the low bits to 2 directly. Each additional thread that joins adds 1, each thread that finishes its share subtracts 1, and when the last thread finishes, the low bits are back to 1.
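The counting rule in the low 16 bits can be simulated with an AtomicInteger. This is a hypothetical sketch, not JDK code: join mirrors the sc + 1 CAS in tryPresize and helpTransfer, and leave mirrors the sc - 1 CAS and the (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT test that transfer uses to detect the last thread.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical simulation of the low 16 bits of sizeCtl during a resize (not JDK code).
public class ResizerCount {
    static final int RESIZE_STAMP_SHIFT = 16; // JDK 8 value

    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << 15); // 1 << (RESIZE_STAMP_BITS - 1)
    }

    private final int rs;
    private final AtomicInteger sizeCtl;

    // The initiating thread sets sizeCtl to (rs << 16) + 2: one resizing thread, plus 1
    ResizerCount(int n) {
        rs = resizeStamp(n);
        sizeCtl = new AtomicInteger((rs << RESIZE_STAMP_SHIFT) + 2);
    }

    // A helper thread joins: sc + 1
    void join() {
        int sc;
        do {
            sc = sizeCtl.get();
        } while (!sizeCtl.compareAndSet(sc, sc + 1));
    }

    // A thread finishes its share: sc - 1; returns true for the last thread
    boolean leave() {
        int sc;
        do {
            sc = sizeCtl.get();
        } while (!sizeCtl.compareAndSet(sc, sc - 1));
        return (sc - 2) == rs << RESIZE_STAMP_SHIFT; // sc is the value before the decrement
    }

    int activeResizers() {
        return (sizeCtl.get() & 0xFFFF) - 1;         // low 16 bits minus the +1 offset
    }

    public static void main(String[] args) {
        ResizerCount c = new ResizerCount(16);
        c.join();                               // a second thread starts helping
        System.out.println(c.activeResizers()); // 2
        System.out.println(c.leave());          // false: another thread is still migrating
        System.out.println(c.leave());          // true: the last thread commits the new table
        System.out.println(c.activeResizers()); // 0
    }
}
```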

3.1.5 Helping with Data Migration: helpTransfer

When putVal finds a bin whose head node has f.hash == MOVED, another thread is resizing the table, so it calls helpTransfer: the current thread first helps with the resize and then adds its element.

// Similar to tryPresize; the most complicated part is the if condition

final Node<K,V>[] helpTransfer(Node<K,V>[] tab, Node<K,V> f) {

Node<K,V>[] nextTab; int sc;

if (tab != null && (f instanceof ForwardingNode) &&

(nextTab = ((ForwardingNode<K,V>)f).nextTable) != null) {

int rs = resizeStamp(tab.length);

while (nextTab == nextTable && table == tab &&

(sc = sizeCtl) < 0) {

// The node has already been replaced by a ForwardingNode, so the new table has been created;

// that is why this condition has one check fewer than tryPresize (no nextTable == null)

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1)) {

transfer(tab, nextTab);

break;

}

}

return nextTab;

}

return table;

}

3.1.6 Data Migration: transfer

Before reading the source, it helps to understand how the work is shared between threads. If the original table has length n, there are n migration tasks, one per bin. The simplest scheme is to let each thread take a small batch of tasks at a time and, once it is done, check whether unfinished tasks remain that it can help with. Doug Lea uses a stride (step size): each thread migrates one stride of bins at a time, for example 16 bins. A global scheduler is therefore needed to decide which thread handles which tasks, and that is the role of the transferIndex field.

The first thread that starts the migration sets transferIndex to the end of the original table. The stride bins just below that position, walking from back to front, belong to the first thread; transferIndex then moves down by stride, and the next stride bins belong to the second thread, and so on. The "second thread" need not be a different thread: the same thread may claim several batches. In effect, one large migration is split into task packages.
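The task-package scheme can be simulated with a few threads. In this hypothetical sketch, transferIndex is an AtomicInteger, each worker claims the range [nextBound, nextIndex) by CAS in the same way as step 4.3 of the transfer source, and "migrating" a bin only increments a counter, so we can check that every bin is handled exactly once. computeStride reproduces the stride formula from comment 1 of transfer, with the CPU count passed in rather than read from the runtime.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

// Hypothetical simulation of how transfer() hands out bins through transferIndex.
public class StrideClaim {
    static final int MIN_TRANSFER_STRIDE = 16; // JDK 8 value

    static int computeStride(int n, int ncpu) {
        int stride = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return Math.max(stride, MIN_TRANSFER_STRIDE);
    }

    private final int n, stride;
    private final AtomicInteger transferIndex;
    private final AtomicIntegerArray visits;    // how often each bin was "migrated"

    StrideClaim(int n, int stride) {
        this.n = n;
        this.stride = stride;
        this.transferIndex = new AtomicInteger(n); // starts at the end of the old table
        this.visits = new AtomicIntegerArray(n);
    }

    // One worker: claim [nextBound, nextIndex) by CAS, process it back to front, repeat
    void work() {
        while (true) {
            int nextIndex = transferIndex.get();
            if (nextIndex <= 0) return;            // nothing left to claim
            int nextBound = nextIndex > stride ? nextIndex - stride : 0;
            if (transferIndex.compareAndSet(nextIndex, nextBound)) {
                for (int i = nextIndex - 1; i >= nextBound; i--)
                    visits.incrementAndGet(i);     // stands in for migrating bin i
            }                                      // CAS lost: another thread took it, retry
        }
    }

    // Runs several workers; true if every bin was handled exactly once
    static boolean simulate(int n, int stride, int threadCount) {
        StrideClaim s = new StrideClaim(n, stride);
        Thread[] threads = new Thread[threadCount];
        for (int k = 0; k < threadCount; k++) {
            threads[k] = new Thread(s::work);
            threads[k].start();
        }
        try {
            for (Thread t : threads) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        for (int i = 0; i < n; i++)
            if (s.visits.get(i) != 1) return false;
        return s.transferIndex.get() == 0;
    }

    public static void main(String[] args) {
        System.out.println(computeStride(1024, 4)); // (1024 >>> 3) / 4 = 32
        System.out.println(computeStride(64, 8));   // 8 / 8 = 1, raised to 16
        System.out.println(simulate(1024, computeStride(1024, 4), 4)); // true
    }
}
```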

private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {

int n = tab.length, stride;

// 1. stride can be understood as the "step size". There are n bins to migrate;

// they are divided into task packages of stride bins each.

// stride equals n on a single core and (n >>> 3) / NCPU on multiple cores, with a minimum of 16.

if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)

stride = MIN_TRANSFER_STRIDE; // subdivide range

// 2. If nextTab is null, allocate it once here. Why is this thread-safe?

// As described earlier, the callers guarantee that only the thread that initiates the migration (the one whose CAS set sizeCtl to (rs << RESIZE_STAMP_SHIFT) + 2) passes nextTab == null;

// threads that join the migration pass nextTab != null.

if (nextTab == null) { // initiating

try {

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

nextTable = nextTab;

transferIndex = n;

}

int nextn = nextTab.length;

// 3. ForwardingNode is a placeholder marking a bin as already processed

// Its constructor creates a Node with null key, value and next; the important part is that its hash is MOVED.

// As we will see below, once bin i of the original table has been migrated,

// slot i is set to this ForwardingNode to tell other threads that the bin has been processed

ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);

// advance == true means the current bin is done and we can move on to the next one

boolean advance = true;

boolean finishing = false; // to ensure sweep before committing nextTab

// i is the current index and bound the lower bound of the current task; note that the scan runs from back to front.

// When i < bound, claim another task; repeat until all tasks are done.

for (int i = 0, bound = 0;;) {

Node<K,V> f; int fh;

// 4. advance == true means we can move on to the next bin.

// First pass through the while loop: the thread claims a task in the third branch (4.3),

// leaving i = nextIndex - 1 and bound = nextIndex - stride (or 0), where nextIndex is the transferIndex read before the CAS

// Then each bin is processed in turn via the first branch (4.1), until the claimed task is finished.

// Then the third branch claims the next stride of bins, and so on until transferIndex <= 0

while (advance) {

int nextIndex, nextBound;

// 4.1 --i moves on to the next bin of the current task

if (--i >= bound || finishing)

advance = false;

// 4.2 transferIndex drops by stride each time a task is claimed;

// its initial value is table.length, and <= 0 means no tasks are left

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

// 4.3 Try to claim the next stride of bins via CAS

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

// nextBound is the lower bound of this task (remember: back to front)

bound = nextBound;

// i is the upper boundary of the migration task

i = nextIndex - 1;

advance = false;

}

}

// 5. i < 0: per 4.2 there are no more tasks to claim, so this thread's part of the resize is over

// i >= tab.length: (I don't know why this is checked)

// i + tab.length >= nextTable.length: (I don't know why this is checked either)

if (i < 0 || i >= n || i + n >= nextn) {

int sc;

// 5.1 Complete expansion

if (finishing) { // Complete capacity expansion

nextTable = null;

table = nextTab; // Update table

sizeCtl = (n << 1) - (n >>> 1); // New threshold: 2n - n/2 = 0.75 * (2n)

return;

}

// 5.2 Before the migration starts, sizeCtl is set to (rs << RESIZE_STAMP_SHIFT) + 2,

// and each thread that joins the migration adds 1 to it.

// Here a CAS subtracts 1 from sizeCtl, meaning this thread has finished its own tasks.

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

// Not equal: other threads are still migrating, so the resize is not over and this thread simply returns.

// Note that sc is the value before the - 1.

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

return;

// Equal: no other thread is still migrating, so this is the last one; set finishing and re-scan the table before committing

finishing = advance = true;

i = n; // recheck before commit

}

}

// 6. Bin i is empty: put the ForwardingNode there

else if ((f = tabAt(tab, i)) == null)

advance = casTabAt(tab, i, null, fwd);

// 7. Bin i already holds a ForwardingNode, so it has already been migrated

else if ((fh = f.hash) == MOVED)

advance = true; // already processed

// 8. Migrate the bin, much like HashMap

else {

// Lock the node at the location of the array and start processing migration at that location of the array

synchronized (f) {

...

}

}

}

}

As you can see from the code above, the migration of a single bin (the "..." part) works much like HashMap's and can be skimmed. It is reproduced below for completeness.
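The core idea of that bin migration is the low/high split: when the table doubles from n to 2n, the bit hash & n decides whether a node stays at index i or moves to i + n, and lastRun marks the tail of the list whose nodes all share that bit, so the tail can be reused instead of copied. The hypothetical sketch below works on bare hash values instead of Node objects and ignores node order.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: hash values stand in for nodes; node order is not modeled.
public class LowHighSplit {
    // (hash & n) == 0: stays at index i in the new table; otherwise moves to i + n
    static List<Integer> low(int[] hashes, int n)  { return filter(hashes, n, false); }
    static List<Integer> high(int[] hashes, int n) { return filter(hashes, n, true); }

    private static List<Integer> filter(int[] hashes, int n, boolean highBit) {
        List<Integer> out = new ArrayList<>();
        for (int h : hashes)
            if (((h & n) != 0) == highBit) out.add(h);
        return out;
    }

    // Same scan as the lastRun loop: index where the final run of equal (hash & n) bits starts
    static int lastRunStart(int[] hashes, int n) {
        int runBit = hashes[0] & n, lastRun = 0;
        for (int k = 1; k < hashes.length; k++) {
            int b = hashes[k] & n;
            if (b != runBit) { runBit = b; lastRun = k; }
        }
        return lastRun;
    }

    public static void main(String[] args) {
        int n = 16;                                // old table length
        int[] bin5 = {5, 21, 37, 53, 85};          // all satisfy hash & 15 == 5
        System.out.println(low(bin5, n));          // [5, 37]: new index 5
        System.out.println(high(bin5, n));         // [21, 53, 85]: new index 21
        System.out.println(lastRunStart(bin5, n)); // 3: nodes 53 and 85 can be reused as-is
    }
}
```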

synchronized (f) {

if (tabAt(tab, i) == f) {

Node<K,V> ln, hn;

// 8.1 hash >= 0 means a linked list

if (fh >= 0) {

int runBit = fh & n; // n is a power of 2, i.e. binary 1000...0

Node<K,V> lastRun = f;

for (Node<K,V> p = f.next; p != null; p = p.next) {

int b = p.hash & n;

if (b != runBit) {

runBit = b;

lastRun = p;

}

}

if (runBit == 0) { // 0: stays at index i (low)

ln = lastRun;

hn = null;

}

else { // 1: moves to index i + n (high)

hn = lastRun;

ln = null;

}

for (Node<K,V> p = f; p != lastRun; p = p.next) {

int ph = p.hash; K pk = p.key; V pv = p.val;

if ((ph & n) == 0)

ln = new Node<K,V>(ph, pk, pv, ln);

else

hn = new Node<K,V>(ph, pk, pv, hn);

}

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

else if (f instanceof TreeBin) {

TreeBin<K,V> t = (TreeBin<K,V>)f;

TreeNode<K,V> lo = null, loTail = null;

TreeNode<K,V> hi = null, hiTail = null;

int lc = 0, hc = 0;

for (Node<K,V> e = t.first; e != null; e = e.next) {

int h = e.hash;

TreeNode<K,V> p = new TreeNode<K,V>

(h, e.key, e.val, null, null);

if ((h & n) == 0) {

if ((p.prev = loTail) == null)

lo = p;

else

loTail.next = p;

loTail = p;

++lc;

}

else {

if ((p.prev = hiTail) == null)

hi = p;

else

hiTail.next = p;

hiTail = p;

++hc;

}

}

ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :

(hc != 0) ? new TreeBin<K,V>(lo) : t;

hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :

(lc != 0) ? new TreeBin<K,V>(hi) : t;

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

}

}

3.1.7 Counter: addCount

Whenever an element is added to or removed from ConcurrentHashMap, the element count changes and addCount has to be called. Before looking at that method, let's see how ConcurrentHashMap keeps track of the number of elements.

public int size() {

long n = sumCount();

return ((n < 0L) ? 0 :

(n > (long)Integer.MAX_VALUE) ? Integer.MAX_VALUE : (int)n);

}

// sumCount is used to count the current number of elements

final long sumCount() {

CounterCell[] as = counterCells; CounterCell a;

long sum = baseCount;

if (as != null) {

for (int i = 0; i < as.length; ++i) {

if ((a = as[i]) != null)

sum += a.value;

}

}

return sum;

}

The element count is made up of two parts: baseCount, plus the sum of the values in the counterCells array. Why so complicated? Wouldn't a single incrementing int do, or an AtomicInteger in a multithreaded environment?
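The idea behind baseCount plus counterCells can be sketched with standard atomics. This is a hypothetical, simplified class: it tries a single CAS on a base counter and, if that CAS fails because of contention, adds to a randomly chosen cell instead. The real ConcurrentHashMap picks cells with a per-thread probe and grows the cell array on demand, which this sketch does not do.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical, simplified version of the baseCount + counterCells idea (fixed number of cells).
public class StripedCounter {
    private final AtomicLong base = new AtomicLong(); // plays the role of baseCount
    private final AtomicLongArray cells;              // plays the role of counterCells

    StripedCounter(int cellCount) {
        cells = new AtomicLongArray(cellCount);
    }

    void add(long x) {
        long b = base.get();
        if (base.compareAndSet(b, b + x)) return;     // no contention: one CAS on base
        // The CAS failed because another thread changed base: use a random cell instead
        int idx = ThreadLocalRandom.current().nextInt(cells.length());
        cells.addAndGet(idx, x);
    }

    // Like sumCount(): base plus all cells; not an atomic snapshot while updates are running
    long sum() {
        long s = base.get();
        for (int i = 0; i < cells.length(); i++) s += cells.get(i);
        return s;
    }

    static long countWith(int threadCount, int perThread) {
        StripedCounter c = new StripedCounter(8);
        Thread[] threads = new Thread[threadCount];
        for (int k = 0; k < threadCount; k++) {
            threads[k] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) c.add(1);
            });
            threads[k].start();
        }
        try {
            for (Thread t : threads) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return c.sum();
    }

    public static void main(String[] args) {
        System.out.println(countWith(4, 100_000)); // 400000
    }
}
```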

Disadvantages of AtomicLong

AtomicLong updates its value by spinning on CAS until it succeeds. Under high concurrency, CAS failures become more likely, so more threads retry, which makes failures more likely still: a vicious circle that lowers throughput.

LongAdder source analysis: https://www.jianshu.com/p/d9d4be67aa56
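The JDK ships this idea as java.util.concurrent.atomic.LongAdder (since Java 8). The sketch below (the class name is hypothetical) increments an AtomicLong and a LongAdder from several threads. Both end with the same total, but LongAdder spreads contended updates over cells, which is the same trade-off counterCells makes.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.LongAdder;

public class AdderVsAtomic {
    // Returns {AtomicLong total, LongAdder total} after threadCount * perThread increments each
    static long[] count(int threadCount, int perThread) {
        AtomicLong atomic = new AtomicLong();
        LongAdder adder = new LongAdder();
        ExecutorService pool = Executors.newFixedThreadPool(threadCount);
        for (int t = 0; t < threadCount; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perThread; i++) {
                    atomic.incrementAndGet(); // every thread contends on one shared variable
                    adder.increment();        // contended updates are spread over cells
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(1, TimeUnit.MINUTES))
                throw new IllegalStateException("workers did not finish");
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new long[] { atomic.get(), adder.sum() };
    }

    public static void main(String[] args) {
        long[] totals = count(4, 100_000);
        System.out.println(totals[0] + " " + totals[1]); // 400000 400000
    }
}
```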

How to guarantee