Kafka -- cluster building

Service discovery: Kafka brokers discover each other through ZooKeeper, so a ZooKeeper cluster must be built first. For how to build one, see the blog's article: Building Zookeeper cluster.

Service relationship: each broker's Kafka configuration file then specifies the ZooKeeper addresses and the broker's own node properties.

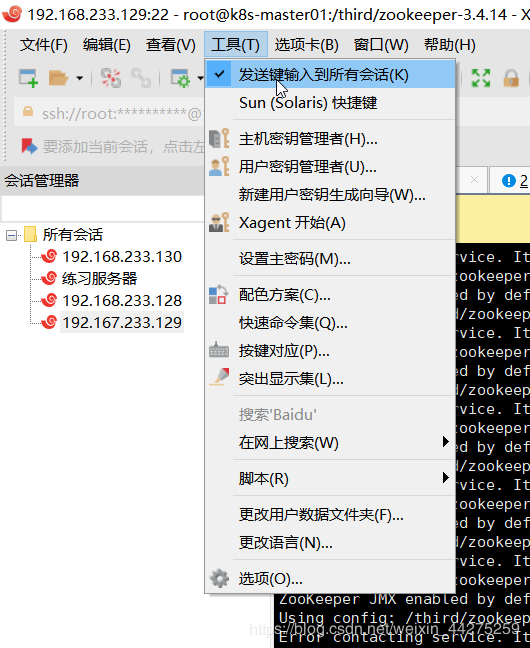

With this operation (shown above), the same command can be sent to several terminal windows at once.

Kafka -- download / unzip:

wget http://mirrors.hust.edu.cn/apache/kafka/2.3.0/kafka_2.11-2.3.0.tgz

(If the link returns 404, browse http://mirrors.hust.edu.cn/apache/kafka to find the correct version and download it.)

tar -zxvf kafka_2.11-2.3.0.tgz

mv kafka_2.11-2.3.0 kafka

Kafka -- create the base directory:

mkdir -p /app/third

mv /opt/kafka /app/third/

(This assumes the archive was downloaded and unpacked in /opt.)

cd /app/third/kafka/

Kafka -- configuration modification:

Only two settings need to change: broker.id must be different on each broker in the cluster;

and zookeeper.connect must point to your own ZooKeeper cluster.

vim /app/third/kafka/config/server.properties

PS: a visual editor is recommended for making these changes.

```properties
##------------------------- External identification -------------------------
## Unique identifier of each broker in the cluster; must be a non-negative integer.
## If a broker's IP address changes but its broker.id does not, consumers are not affected.
broker.id=1
## Where Kafka stores its data; separate multiple directories with commas,
## e.g. /tmp/kafka-logs-1,/tmp/kafka-logs-2
log.dirs=/tmp/kafka-logs
## Port on which the broker serves clients
## (deprecated in newer releases in favor of listeners, e.g. listeners=PLAINTEXT://:6667)
port=6667
##------------------------- ZooKeeper -------------------------
## Addresses of the ZooKeeper cluster, comma-separated: hostname1:port1,hostname2:port2,hostname3:port3
## Use the ZooKeeper client port (2181 by default), not 2888, which ZooKeeper servers use among themselves
zookeeper.connect=192.168.233.129:2181,192.168.233.130:2181,192.168.233.131:2181
## ZooKeeper session timeout
zookeeper.session.timeout.ms=6000
## ZooKeeper connection timeout
zookeeper.connection.timeout.ms=6000
## How far a ZooKeeper follower may lag behind the leader
zookeeper.sync.time.ms=2000
##------------------------- Topics -------------------------
## Whether a topic that does not exist is created automatically; if false, topics can only be created by command
auto.create.topics.enable=true
## Default number of replicas per partition; must not be greater than the number of brokers in the cluster
default.replication.factor=1
## Default number of partitions per topic
num.partitions=1
```
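Since only broker.id differs between brokers while zookeeper.connect is shared, the per-broker lines can be generated rather than edited by hand on each machine. A minimal Python sketch; the helper name render_broker_properties and the assumption that every ZooKeeper node listens on client port 2181 are illustrative, not part of Kafka:

```python
from typing import List


def render_broker_properties(broker_id: int, zk_hosts: List[str],
                             log_dir: str = "/tmp/kafka-logs",
                             port: int = 6667) -> str:
    """Return the per-broker server.properties lines (hypothetical helper)."""
    if broker_id < 0:
        raise ValueError("broker.id must be a non-negative integer")
    # Assumption: every ZooKeeper node uses the default client port 2181 (not 2888).
    zk_connect = ",".join(f"{host}:2181" for host in zk_hosts)
    lines = [
        f"broker.id={broker_id}",
        f"log.dirs={log_dir}",
        f"port={port}",
        f"zookeeper.connect={zk_connect}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    zk = ["192.168.233.129", "192.168.233.130", "192.168.233.131"]
    # Three brokers: identical settings except for broker.id.
    for broker_id in (1, 2, 3):
        print(f"# broker {broker_id}")
        print(render_broker_properties(broker_id, zk), end="")
```

Each generated block can then be pasted over the matching lines of that broker's config/server.properties.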

Kafka -- start the service:

Directory: cd /app/third/kafka/

Start: bin/kafka-server-start.sh -daemon config/server.properties

Stop: bin/kafka-server-stop.sh

Kafka -- test the service:

Kafka -- create a topic:

/app/third/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.2.152:2181 --replication-factor 3 --partitions 1 --topic order

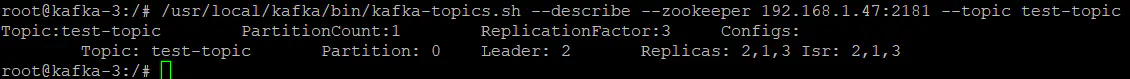

Kafka -- list topics:

/app/third/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.2.152:2181

PS:

- --zookeeper gives one ZooKeeper address as the starting point of the connection; it can be set in the CLI configuration file or passed on the command line

- --replication-factor 3: keep three replicas of each partition (the leader plus two copies)

- --partitions 1: create one partition

- --topic order: the topic is named order
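The notes above imply a simple check: a replication factor of 3 needs at least three brokers, and the cluster ends up hosting partitions × replication factor replicas. A hypothetical Python helper that checks this before running kafka-topics.sh; the broker count is an input you supply:

```python
def check_topic_plan(partitions: int, replication_factor: int, brokers: int) -> int:
    """Return the total number of partition replicas the cluster will host."""
    if partitions < 1 or replication_factor < 1:
        raise ValueError("partitions and replication factor must be >= 1")
    if replication_factor > brokers:
        # Kafka refuses to place more replicas of a partition than there are brokers.
        raise ValueError(
            f"replication factor {replication_factor} exceeds {brokers} brokers")
    # Each partition has one leader plus (replication_factor - 1) follower copies.
    return partitions * replication_factor


if __name__ == "__main__":
    # --partitions 1 --replication-factor 3 on a three-broker cluster
    print(check_topic_plan(1, 3, 3))  # 3 replicas: 1 leader + 2 copies
```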

Kafka -- Broker configuration

Broker configuration - basic configuration

The basic settings (broker.id, log.dirs, port, the ZooKeeper connection, and the topic defaults) are the same as in the server.properties excerpt shown in the configuration modification step above.

Broker configuration - tuning related

```properties
##------------------------- Thread efficiency -------------------------
## Maximum size of a message body, in bytes; can be overridden per topic (max.message.bytes)
message.max.bytes=1000000
## Number of threads the broker uses to process network requests; usually no need to change
num.network.threads=3
## Number of threads the broker uses for disk I/O; should be greater than the number of disks
num.io.threads=8
## Number of threads for background tasks such as deleting expired log files; usually no need to change
background.threads=4
## Maximum number of requests waiting for the I/O threads; beyond it the broker stops accepting new requests (self-protection)
queued.max.requests=500
##------------------------- Socket -------------------------
## Socket send buffer (SO_SNDBUF), 100 KiB
socket.send.buffer.bytes=102400
## Socket receive buffer (SO_RCVBUF), 100 KiB
socket.receive.buffer.bytes=102400
## Maximum size of a socket request, to protect the server from OOM; message.max.bytes must be smaller than this (100 MiB)
socket.request.max.bytes=104857600
```
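Two details in this section are easy to get wrong: values in server.properties must be plain numbers (Kafka's config parser does not evaluate expressions such as 100*1024), and message.max.bytes must stay below socket.request.max.bytes. A minimal Python sketch that checks both; the simplified parser is an assumption (it only understands key=value lines and # or ! comments, not the full Java properties syntax):

```python
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Very small key=value parser; skips blank lines and # or ! comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_sizes(props: Dict[str, str]) -> None:
    # int() raises ValueError for expressions such as "100*1024".
    message_max = int(props["message.max.bytes"])
    request_max = int(props["socket.request.max.bytes"])
    if message_max >= request_max:
        raise ValueError("message.max.bytes must be < socket.request.max.bytes")


if __name__ == "__main__":
    sample = """
    ## thread / socket settings
    message.max.bytes=1000000
    socket.request.max.bytes=104857600
    """
    check_sizes(parse_properties(sample))
    print("sizes OK")
```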

Broker configuration - log related

```properties
##------------------------- Log cleanup -------------------------
## Log cleanup policy: delete or compact; decides how expired data is handled once a retention limit is reached
log.cleanup.policy=delete
## How often logs are checked to see whether they can be deleted (1 minute by default)
log.cleanup.interval.mins=1
## How often segments are checked against the retention limits to decide whether log.cleanup.policy applies (5 minutes)
log.retention.check.interval.ms=300000
## Whether to enable log compaction
log.cleaner.enable=false
## Number of threads that run log compaction
log.cleaner.threads=1
## Maximum I/O throughput of log compaction; unlimited by default, so it is left unset here
#log.cleaner.io.max.bytes.per.second=
## Memory used for deduplication during compaction; the larger, the better (500 MiB)
log.cleaner.dedupe.buffer.size=524288000
## I/O buffer size used during log cleaning; usually no need to change (512 KiB)
log.cleaner.io.buffer.size=524288
## Load factor of the hash table used during log cleaning; usually no need to change
log.cleaner.io.buffer.load.factor=0.9
## How long the cleaner waits before checking again when there is no log to clean
log.cleaner.backoff.ms=15000
## Minimum share of uncleaned data before a log is cleaned: a higher ratio means less frequent, more efficient cleaning
## but more wasted space; can be overridden per topic
log.cleaner.min.cleanable.ratio=0.5
## For compacted logs, how long delete markers are kept, which is also the longest time a client has to consume them.
## log.retention.minutes controls uncompacted data, this setting controls compacted data; can be overridden per topic (1 day)
log.cleaner.delete.retention.ms=86400000
##------------------------- Log storage -------------------------
## A partition is stored as a series of segment files; this is the size of each segment; can be overridden per topic (1 GiB)
log.segment.bytes=1073741824
## Even if a segment has not reached log.segment.bytes, a new segment is rolled after this time (7 days)
log.roll.hours=168
## Size limit of the index file of each segment (10 MiB)
log.index.size.max.bytes=10485760
## Interval in bytes at which an entry is added to the offset index; a fetch scans forward from the nearest entry.
## A smaller value lets reads jump closer to the exact position but makes the index larger; usually no need to change
log.index.interval.bytes=4096
##------------------------- Log retention thresholds -------------------------
## Maximum time data is kept; after it, data is handled according to log.cleanup.policy, i.e. how long consumers have to consume it.
## log.retention.bytes and log.retention.minutes: data is deleted as soon as either limit is reached; both can be overridden per topic (7 days)
log.retention.minutes=10080
## Size limit per partition; the limit of a whole topic = number of partitions * log.retention.bytes. -1 means no size limit
log.retention.bytes=-1
##------------------------- Log reliability (flushing) -------------------------
## Number of messages accumulated on a partition before they are flushed to disk; left unset here (no count-based flush by default)
#log.flush.interval.messages=
## How often (ms) the flusher checks whether any log needs to be flushed
log.flush.scheduler.interval.ms=3000
## Controlling flushes by message count alone is not enough: this is the fsync time interval. If the message count never reaches
## the threshold but this much time has passed since the last flush, a flush is triggered anyway; left unset here
#log.flush.interval.ms=
## How often the record of the last flush (the recovery point) is saved, to help data recovery; usually no need to change
log.flush.offset.checkpoint.interval.ms=60000
## How long a file is kept after it has been removed from the index before it is deleted; usually no need to change
log.delete.delay.ms=60000
```
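The retention rules above reduce to simple arithmetic: a week is 7 × 24 × 60 = 10080 minutes, five minutes is 300000 ms, and a topic's size cap is the number of partitions times log.retention.bytes, with -1 meaning no cap. A Python sketch of that arithmetic; the function names are hypothetical, and the cap is approximate because Kafka deletes whole segments at a time:

```python
from typing import Optional


def topic_size_limit(partitions: int, retention_bytes: int) -> Optional[int]:
    """Approximate upper bound on a topic's retained data, or None when unlimited."""
    if retention_bytes == -1:
        return None  # -1: no size limit
    return partitions * retention_bytes  # log.retention.bytes applies per partition


def minutes_to_ms(minutes: int) -> int:
    # Properties files need plain numbers, so "5minutes" or "7days" must be written out.
    return minutes * 60 * 1000


if __name__ == "__main__":
    print(7 * 24 * 60)                      # 10080, for log.retention.minutes
    print(minutes_to_ms(5))                 # 300000, for log.retention.check.interval.ms
    print(topic_size_limit(3, 1024 ** 3))   # 3221225472: 3 partitions x 1 GiB
    print(topic_size_limit(3, -1))          # None: no size limit
```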

Broker configuration - replication related

```properties
##------------------------- Replication (leader, replicas) -------------------------
## Socket timeout for controller-to-broker channels
controller.socket.timeout.ms=30000
## Size of the message queue for controller-to-broker channels
controller.message.queue.size=10
## Longest time a follower may fail to catch up with the leader; after that it is removed from the ISR (in-sync replicas)
## and treated as dead
replica.lag.time.max.ms=10000
## PS: a follower (partition replica) that lags too far behind the leader is considered failed. Network delay or a broken link
## inevitably makes replication lag, so these thresholds decide when a lagging replica is dropped from the ISR.
## Maximum number of messages a follower may lag behind; increase it when there are few brokers or the network is slow
## (removed in newer releases, which rely only on replica.lag.time.max.ms)
replica.lag.max.messages=4000
## Socket timeout between follower and leader
replica.socket.timeout.ms=30000
## Socket receive buffer used when replicating from the leader (64 KiB)
replica.socket.receive.buffer.bytes=65536
## Maximum amount of data a replica fetches per request (1 MiB)
replica.fetch.max.bytes=1048576
## Maximum time a replica's fetch request waits at the leader; on failure it is retried
replica.fetch.wait.max.ms=500
## Minimum amount of data for a fetch; if the leader has less unsynchronized data, the request blocks until this is met
replica.fetch.min.bytes=1
## Number of threads used to replicate from leaders; more threads increase follower I/O parallelism
num.replica.fetchers=1
## Maximum size of the metadata a client can store with an offset (no value given here)
#offset.metadata.max.bytes=
## How often each replica saves its high watermark to disk
replica.high.watermark.checkpoint.interval.ms=5000
##------------------------- Controller -------------------------
## Whether to allow controlled shutdown of a broker; if true, all leaders on the broker are moved to other brokers before it stops
controlled.shutdown.enable=false
## Number of controlled-shutdown attempts
controlled.shutdown.max.retries=3
## Interval between shutdown attempts
controlled.shutdown.retry.backoff.ms=5000
##------------------------- Leader balancing -------------------------
## Whether to automatically balance partition leadership across brokers
auto.leader.rebalance.enable=false
## If a broker's leader imbalance exceeds this percentage, partitions are rebalanced
leader.imbalance.per.broker.percentage=10
## Interval between checks for leader imbalance
leader.imbalance.check.interval.seconds=300
```
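The replica.lag.time.max.ms rule can be modelled in a few lines: a follower stays in the ISR only while it has caught up with the leader within the last replica.lag.time.max.ms milliseconds. This Python sketch is a simplified illustration (the function name and the per-broker "last caught up" timestamps are assumptions), not the broker's actual implementation:

```python
from typing import Dict, Set


def in_sync_replicas(last_caught_up_ms: Dict[int, int], now_ms: int,
                     lag_time_max_ms: int = 10000) -> Set[int]:
    """Return ids of brokers whose replica is still considered in sync."""
    return {
        broker_id
        for broker_id, caught_up in last_caught_up_ms.items()
        # A replica that has not caught up for longer than the limit drops out.
        if now_ms - caught_up <= lag_time_max_ms
    }


if __name__ == "__main__":
    # Broker 1 is the leader; broker 3 last caught up 15 s ago.
    state = {1: 100_000, 2: 95_000, 3: 85_000}
    print(sorted(in_sync_replicas(state, now_ms=100_000)))  # [1, 2]
```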

Kafka -- basic CLI configuration

The CLI is mainly used to create consumers and producers from a Linux shell, so these settings rarely need to be configured by hand. In practice they are set in Spring Boot, so a general understanding of them is enough.

Consumer configuration

```properties
##------------------------- External configuration -------------------------
## Group the consumer belongs to. The broker uses group.id to decide between queue mode and publish-subscribe mode,
## so it is very important
group.id=
## Consumer ID; generated automatically if not set
consumer.id=
## ID used for tracing and troubleshooting; preferably the same as group.id
client.id=
##------------------------- ZooKeeper -------------------------
## ZooKeeper cluster, possibly several hosts: hostname1:port1,hostname2:port2,hostname3:port3;
## must be the same ZooKeeper configuration the brokers use
zookeeper.connect=localhost:2182
## ZooKeeper heartbeat timeout; after this the consumer is considered dead
zookeeper.session.timeout.ms=6000
## Time to wait when connecting to ZooKeeper
zookeeper.connection.timeout.ms=6000
## How far a ZooKeeper follower may lag behind the leader
zookeeper.sync.time.ms=2000
## What to do when ZooKeeper has no initial offset: smallest = reset to the earliest offset,
## largest = reset to the latest offset, anything else = throw an exception
auto.offset.reset=largest
##------------------------- Socket -------------------------
## Socket timeout; the actual timeout is fetch.wait.max.ms + socket.timeout.ms
socket.timeout.ms=30000
## Socket receive buffer size (64 KiB)
socket.receive.buffer.bytes=65536
## Maximum size of messages fetched from each partition (1 MiB)
fetch.message.max.bytes=1048576
##------------------------- High availability -------------------------
## Whether to commit offsets to ZooKeeper after consuming messages, so that the latest offset can be read back
## from ZooKeeper if the consumer fails
auto.commit.enable=true
## Interval between automatic commits (1 minute)
auto.commit.interval.ms=60000
## Number of message chunks buffered for consumption; each chunk can hold up to fetch.message.max.bytes
queued.max.message.chunks=10
## Number of retries when registering nodes during a rebalance
rebalance.max.retries=4
## Backoff between rebalance attempts
rebalance.backoff.ms=2000
## Backoff before looking up the new leader of a partition that has lost its leader (no value given here)
#refresh.leader.backoff.ms=
## Minimum data the server returns to the consumer; below it, the request waits until the amount is reached
fetch.min.bytes=1
## Maximum time the server waits when fetch.min.bytes is not yet satisfied
fetch.wait.max.ms=100
## Throw an exception if no message arrives within this time (-1 = wait forever); usually no need to change
consumer.timeout.ms=-1
## PS: when a new consumer joins the group, a rebalance is triggered and some partitions move to the new consumer.
## A consumer that obtains the right to consume a partition registers a "Partition Owner registry" node in ZooKeeper.
## However, the old consumer may not have released this node yet, which is why registration is retried (rebalance.max.retries).
```
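The group.id comment is the key to queue versus publish-subscribe behaviour: each group receives every message, but within a group only one member gets it. A toy Python model, assuming round-robin delivery inside each group for simplicity (real Kafka assigns whole partitions to group members, so the actual distribution differs); the function name deliver is hypothetical:

```python
from collections import defaultdict
from itertools import cycle
from typing import Dict, List


def deliver(messages: List[str], consumers: Dict[str, str]) -> Dict[str, List[str]]:
    """consumers maps consumer name -> group.id; returns the messages each consumer gets."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for name, group_id in consumers.items():
        groups[group_id].append(name)
    received: Dict[str, List[str]] = {name: [] for name in consumers}
    for members in groups.values():
        picker = cycle(members)          # round-robin within one group (assumption)
        for msg in messages:
            received[next(picker)].append(msg)
    return received


if __name__ == "__main__":
    msgs = ["m1", "m2", "m3", "m4"]
    # Queue mode: c1 and c2 share group g1, so they split the messages.
    print(deliver(msgs, {"c1": "g1", "c2": "g1"}))
    # Publish-subscribe: separate groups, so each consumer gets every message.
    print(deliver(msgs, {"c1": "g1", "c2": "g2"}))
```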

Producer configuration

```properties
## Brokers the producer gets topic, partition and replica metadata from, in the form host1:port1,host2:port2;
## a VIP in front of the brokers can also be used (no value given here)
#metadata.broker.list=
## Acknowledgement mode:
##  0: no acknowledgement; lowest latency, but messages can be lost when a server fails (a bit like UDP)
##  1: wait for the leader to acknowledge receipt; reasonably reliable
## -1: wait for the leader's acknowledgement and for replication to finish before returning; most reliable
request.required.acks=0
## Maximum time to wait for an acknowledgement
request.timeout.ms=10000
## Socket send buffer size (100 KiB)
send.buffer.bytes=102400
## Serializer for keys; if not set, serializer.class is used (no value given here)
#key.serializer.class=
## Partitioning strategy; by default the hash of the key modulo the number of partitions
partitioner.class=kafka.producer.DefaultPartitioner
## Message compression codec: none by default; gzip and snappy are available
compression.codec=none
## Compress only the listed topics; if unset (the default), the codec applies to all topics
#compressed.topics=
## Number of retries after a send fails
message.send.max.retries=3
## Backoff after each failure
retry.backoff.ms=100
## Interval for refreshing topic metadata (10 minutes); if set to 0, metadata is refreshed after every message sent
topic.metadata.refresh.interval.ms=600000
## Arbitrary user-chosen ID that should not be reused; mainly used to trace and log messages
client.id=
##------------------------- Message mode -------------------------
## Producer type: async sends messages asynchronously, sync sends them synchronously
producer.type=sync
## In async mode, how long messages are buffered before being sent in one batch
queue.buffering.max.ms=5000
## In async mode, maximum number of messages that can be buffered
queue.buffering.max.messages=10000
## In async mode, how long to wait to enqueue when the queue is full: 0 = enqueue immediately or drop the message, -1 = block
queue.enqueue.timeout.ms=-1
## In async mode, number of messages sent per batch when queue.buffering.max.messages or queue.buffering.max.ms is reached
batch.num.messages=200
## Serializer class that turns the message body into bytes for transmission
serializer.class=kafka.serializer.DefaultEncoder
```
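partitioner.class above defaults to taking the key's hash modulo the number of partitions, which is why messages with the same key always land in the same partition. A Python sketch of the idea; because Python's built-in str hash is randomized per process, it uses a Java-style String.hashCode for stable results. The function names are hypothetical and the output is not guaranteed to match Kafka's own partitioner:

```python
def java_string_hash(s: str) -> int:
    """32-bit signed hash computed like Java's String.hashCode (BMP characters only)."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF   # keep 32 bits, like Java int overflow
    return h - (1 << 32) if h & 0x80000000 else h


def choose_partition(key: str, num_partitions: int) -> int:
    """Hash of the key modulo the partition count (illustrative)."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be >= 1")
    # Clear the sign bit so the modulus is taken on a non-negative number.
    return (java_string_hash(key) & 0x7FFFFFFF) % num_partitions


if __name__ == "__main__":
    for key in ["order-1001", "order-1002", "order-1001"]:
        # The same key always maps to the same partition.
        print(key, choose_partition(key, 3))
```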

Kafka -- common operations and maintenance

Dynamic configuration

Restarting Kafka every time a setting changes is impractical, so Kafka's command-line tools can be used to change configuration dynamically. These dynamic settings can also be managed more centrally.

Kafka -- add configuration

bin/kafka-topics.sh --zookeeper localhost:2181 --create --topic my-topic --partitions 1 --replication-factor 1 --config max.message.bytes=64000 --config flush.messages=1

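Kafka -- modify configuration

bin/kafka-topics.sh --zookeeper localhost:2181 --alter --topic my-topic --config max.message.bytes=128000

(In Kafka 2.x, changing topic configuration through kafka-topics.sh prints a deprecation warning; the replacement is bin/kafka-configs.sh --zookeeper localhost:2181 --entity-type topics --entity-name my-topic --alter --add-config max.message.bytes=128000.)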

Kafka -- delete configuration

bin/kafka-topics.sh --zookeeper localhost:2181 --alter --topic my-topic --delete-config max.message.bytes
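When these dynamic changes are scripted, it helps to build the command as an argument list instead of a hand-concatenated string. A Python sketch; the helper topic_config_command is hypothetical, and it only emits the flags used in the commands above (--zookeeper, --alter, --topic, --config, --delete-config):

```python
import shlex
from typing import Dict, List, Optional


def topic_config_command(topic: str, zookeeper: str = "localhost:2181",
                         set_configs: Optional[Dict[str, str]] = None,
                         delete_configs: Optional[List[str]] = None) -> List[str]:
    """Build a kafka-topics.sh --alter command for one topic (hypothetical helper)."""
    cmd = ["bin/kafka-topics.sh", "--zookeeper", zookeeper,
           "--alter", "--topic", topic]
    for key, value in (set_configs or {}).items():
        cmd += ["--config", f"{key}={value}"]   # add or change an override
    for key in delete_configs or []:
        cmd += ["--delete-config", key]         # remove an override
    return cmd


if __name__ == "__main__":
    change = topic_config_command("my-topic", set_configs={"max.message.bytes": "128000"})
    remove = topic_config_command("my-topic", delete_configs=["max.message.bytes"])
    # Print shell-safe strings; to run them, pass the lists to
    # subprocess.run(cmd, check=True) from the Kafka installation directory.
    print(shlex.join(change))
    print(shlex.join(remove))
```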

Viewing logs

If Kafka fails to start, the logs usually show why: check the .out files under the logs directory of the Kafka installation (for example logs/kafkaServer.out, written when the broker is started with -daemon).
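On a busy broker the .out file can be long, so filtering for error lines first saves time. A Python sketch; the log path below assumes the /app/third/kafka layout used in this article, and matching ERROR or FATAL as whole words is a heuristic, not a Kafka feature:

```python
import re
from pathlib import Path
from typing import Iterable, List

# ERROR or FATAL as a whole word anywhere in the line, which covers the level field
# of lines such as "[2019-07-01 10:00:00,000] ERROR ...".
LEVEL_PATTERN = re.compile(r"\b(ERROR|FATAL)\b")


def problem_lines(lines: Iterable[str]) -> List[str]:
    """Return the lines that mention an ERROR or FATAL level."""
    return [line.rstrip("\n") for line in lines if LEVEL_PATTERN.search(line)]


if __name__ == "__main__":
    # Assumed path for the install layout used in this article.
    log_file = Path("/app/third/kafka/logs/kafkaServer.out")
    if log_file.exists():
        with log_file.open(encoding="utf-8", errors="replace") as f:
            for line in problem_lines(f):
                print(line)
    else:
        print(f"{log_file} not found")
```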