Because genome data is too large, I want to use R language to deal with the system memory shortage, so I want to split the file by chromosome, and find that python, awk, R language can be implemented very simply and quickly. Is there a gap in speed? So before running several large 50G files, I use 244MB data to test the scripts and speed them. Contrast.

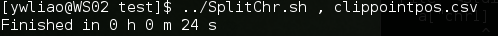

First of all, awk processing, awk processing is line-by-line, with its own grammar, has great flexibility, a line of code to solve, using 24S,

1 #!/usr/bin/sh 2 function main() 3 { 4 start_tm=date 5 start_h=`$start_tm +%H` 6 start_m=`$start_tm +%M` 7 start_s=`$start_tm +%S` 8 awk -F $sep '{print $1","$2","$3 >> "'"$inputfile"'""_"$1}' $inputfile 9 end_tm=date 10 end_h=`$end_tm +%H` 11 end_m=`$end_tm +%M` 12 end_s=`$end_tm +%S` 13 use_tm=`echo $end_h $start_h $end_m $start_m $end_s $start_s | awk '{ print ($1 - $2),"h",($3-$4),"m",($5-$6),"s"}'` 14 echo "Finished in "$use_tm 15 } 16 17 18 if [ $# == 2 ]; then 19 sep=$1 20 inputfile=$2 21 main 22 else 23 echo "usage: SplitChr.sh sep inputfile" 24 echo "eg: SplitChr.sh , test.csv" 25 fi

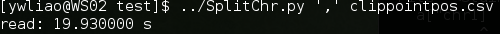

Next, we use python, which is simple and easy to write. So the program was quickly implemented, and the same line-by-line processing was done, adding a little more detail than awk, and only the chromosomes needed were picked out. It took 19.9 seconds.

1 #!/usr/bin/python 2 import sys 3 import time 4 def main(): 5 if len(sys.argv)!=3: 6 print "usage : SplitChr sep inputfile eg: SplitChr ',' test.txt" 7 exit() 8 sep=sys.argv[1] 9 filename=sys.argv[2] 10 f=open(filename,'r') 11 header=f.readline() 12 if len(header.split(sep))<2: 13 print "The sep can't be recongnized !" 14 exit() 15 chrLst=range(1,23) 16 chrLst.extend(["X","Y"]) 17 chrLst=["chr"+str(i) for i in chrLst] 18 outputdic={} 19 for chrI in chrLst: 20 output=filename+"_"+chrI 21 outputdic[chrI]=open(output,'w') 22 outputdic[chrI].write(header) 23 for eachline in f: 24 tmpLst=eachline.strip().split(sep) 25 tmpChr=tmpLst[0] 26 if tmpChr in chrLst: 27 outputdic[tmpChr].write(eachline) 28 end=time.clock() 29 print "read: %f s" % (end - start) 30 31 32 33 if __name__=='__main__': 34 start=time.clock() 35 main()

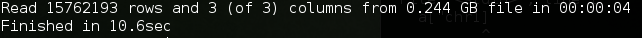

Finally, the R language data.table package is used for processing. data.table is a high-level version of data.frame. It has made great improvements in speed. But is it superior to awk and python?

1 #!/usr/bin/Rscript 2 library(data.table) 3 main <- function(filename,sep){ 4 started.at <- proc.time() 5 arg <- commandArgs(T) 6 sep <- arg[1] 7 inputfile <- arg[2] 8 dt <- fread(filename,sep=sep,header=T) 9 chrLst <- lapply(c(1:22,"X","Y"),function(x)paste("chr",x,sep="")) 10 for (chrI in chrLst){ 11 outputfile <- paste(filename,"_",chrI,sep="") 12 fwrite(dt[.(chrI),,on=.(chr)],file=outputfile,sep=sep) 13 } 14 cat ("Finished in",timetaken(started.at),"\n") 15 } 16 17 arg <- commandArgs(T) 18 if (length(arg)==2){ 19 sep <- arg[1] 20 filename <- arg[2] 21 main(filename,sep) 22 }else{ 23 cat("usage: SplitChr.R sep inputfile eg: SplitChr.R '\\t' test.csv","\n") 24 }

It takes 10.6 seconds and finds that the data has just been read, and the processing and writing are finished immediately. The processing and writing time is very short, so the overall use time is relatively short.

summary

Although all of them are line-by-line processing, it is speculated from the above results that the internal operation of awk is not as fast as python, but awk writes a line of code, which is faster than data. As for python, it is speculated that the reason is that R. data.table is written in C language, and uses multi-threading to write, hash to read and address to optimize the speed. Of course, the above results are for reference only.