DAY ONE - PM

There are two ways to modify a container's configuration file:

- Enter the container with docker exec and edit the configuration file there:

[root@controller0 ~]# docker exec -it keystone bash

()[root@controller0 /]# vi /etc/keystone/keystone.conf

- Log in to controller0 and edit the configuration that is mapped into the container. The following directory holds the mapped configuration files; each subdirectory corresponds to the name of a running container:

cd /var/lib/config-data/puppet-generated/
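For the second method, a rough sketch of how the host-side copy of a file can be located: join the mapping directory, the container name, and the path inside the container. The helper name host_config_path is made up for illustration, and the layout (one subdirectory per container name) is taken from the note above, so list the directory first to confirm it on a real node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a path inside a container to its host-side copy
# under the mapping directory from the notes.
CONFIG_ROOT=/var/lib/config-data/puppet-generated

host_config_path() {
    local container=$1    # container name, e.g. keystone
    local inner_path=$2   # absolute path as seen inside the container
    printf '%s/%s%s\n' "$CONFIG_ROOT" "$container" "$inner_path"
}

# Example: where keystone.conf is expected to live on controller0.
host_config_path keystone /etc/keystone/keystone.conf
```

After editing the host-side file, the container most likely has to be restarted (docker restart keystone) before the change is picked up, just as noted for horizon further down.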

Find the IP address of the dashboard (container: horizon)

(undercloud) [stack@director ~]$ cat overcloudrc

# Clear any old environment that may conflict.

for key in $( set | awk '{FS="="} /^OS_/ {print $1}' ); do unset $key ; done

export OS_NO_CACHE=True

export COMPUTE_API_VERSION=1.1

export OS_USERNAME=admin

export no_proxy=,172.25.250.50,172.25.249.50

export OS_USER_DOMAIN_NAME=Default

export OS_VOLUME_API_VERSION=3

export OS_CLOUDNAME=overcloud

export OS_AUTH_URL=http://172.25.250.50:5000//v3 # public address: the keystone and dashboard IP

export NOVA_VERSION=1.1

export OS_IMAGE_API_VERSION=2

export OS_PASSWORD=redhat

export OS_PROJECT_DOMAIN_NAME=Default

export OS_IDENTITY_API_VERSION=3

export OS_PROJECT_NAME=admin

export OS_AUTH_TYPE=password

export PYTHONWARNINGS="ignore:Certificate has no, ignore:A true SSLContext object is not available"

# Add OS_CLOUDNAME to PS1

if [ -z "${CLOUDPROMPT_ENABLED:-}" ]; then

export PS1=${PS1:-""}

export PS1=\${OS_CLOUDNAME:+"(\$OS_CLOUDNAME)"}\ $PS1

export CLOUDPROMPT_ENABLED=1

fi

During the exam you are not told the dashboard's IP address; take it from overcloudrc: export OS_AUTH_URL=http://172.25.250.50:5000//v3

User name: export OS_USERNAME=admin

Password: export OS_PASSWORD=redhat

- Look up the IP address in the overcloudrc file.

- The dashboard container is named horizon and runs on controller0.

- A problem most candidates hit during the exam is that the dashboard cannot be opened. Restart the horizon container to fix it. After modifying the horizon configuration, the container also needs to be restarted.

Command: docker restart horizon
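Reading the address, user name and password out of overcloudrc can be sketched with sed. The function names dashboard_ip and rc_value are hypothetical, and the sketch assumes the file uses plain export NAME=value lines and an OS_AUTH_URL of the form http://ip:port/..., as in the listing above.

```shell
#!/usr/bin/env bash
# Sketch: pull login details out of an overcloudrc-style file.

dashboard_ip() {
    local rcfile=$1
    # Keep only the host part between "://" and the next ":" or "/".
    sed -n 's|^export OS_AUTH_URL=[a-z]*://\([^:/]*\).*|\1|p' "$rcfile"
}

rc_value() {
    local rcfile=$1 name=$2
    # Print the value of "export NAME=value", stopping at the first space.
    sed -n "s|^export ${name}=\([^ ]*\).*|\1|p" "$rcfile"
}

# Demo on a temporary file holding the three relevant lines from the notes.
demo_rc=$(mktemp)
cat > "$demo_rc" <<'EOF'
export OS_USERNAME=admin
export OS_AUTH_URL=http://172.25.250.50:5000//v3
export OS_PASSWORD=redhat
EOF

echo "dashboard IP: $(dashboard_ip "$demo_rc")"
echo "user:         $(rc_value "$demo_rc" OS_USERNAME)"
echo "password:     $(rc_value "$demo_rc" OS_PASSWORD)"
rm -f "$demo_rc"
```

On the director node the same functions can be pointed at the real file, for example dashboard_ip ~/overcloudrc.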

Exercise: viewing the undercloud architecture

The special user heat-admin has passwordless sudo; sudo -i switches directly to root.

Note: every container except the swift containers should show a normal status.

Commands for viewing the network

    (undercloud) [stack@director ~]$ openstack subnet list
    +--------------------------------------+---------------------+--------------------------------------+-----------------+
    | ID                                   | Name                | Network                              | Subnet          |
    +--------------------------------------+---------------------+--------------------------------------+-----------------+
    | 1653cf28-1da7-4bb7-b060-872a0da6c0d1 | external_subnet     | 444ad6f9-7ad8-43d6-a825-37ff9cbc63c5 | 172.25.250.0/24 |
    | 243a4564-e344-4d80-9eeb-972287a4b8ae | management_subnet   | 37a81453-9f5e-415d-90e5-14bdb1858806 | 172.24.5.0/24   |
    | 30e75947-64c2-4961-9b49-67b066e54fe8 | internal_api_subnet | 60c574f1-cb7d-4f37-8dd6-4f76a2d0218c | 172.24.1.0/24   |
    | 45dce459-6e9d-40dc-a4d5-ef2e91de6ec7 | ctlplane-subnet     | 2c9cee9a-e797-462e-ba76-efaa564b7b7f | 172.25.249.0/24 |
    | be6d8ef9-ea6a-436f-a1f7-2d085336667c | storage_mgmt_subnet | 7029b988-a1a2-405d-9809-d051c8a726d8 | 172.24.4.0/24   |
    | d551f63e-d144-4c0a-8a1b-8892aa40ae78 | tenant_subnet       | d1cc495b-dda5-4c0e-812a-bd79708716d4 | 172.24.2.0/24   |
    | f8b997e4-f5f5-46ac-92a2-079340aa0dde | storage_subnet      | 352efe55-3af2-4e26-abf6-6f2d388c6a1a | 172.24.3.0/24   |
    +--------------------------------------+---------------------+--------------------------------------+-----------------+
    (undercloud) [stack@director ~]$ openstack subnet show external_subnet
    +-------------------+--------------------------------------+
    | Field             | Value                                |
    +-------------------+--------------------------------------+
    | allocation_pools  | 172.25.250.60-172.25.250.99          |
    | cidr              | 172.25.250.0/24                      |
    | created_at        | 2018-10-23T13:55:27Z                 |
    | description       |                                      |
    | dns_nameservers   |                                      |
    | enable_dhcp       | False                                |
    | gateway_ip        | 172.25.250.254                       |
    | host_routes       |                                      |
    | id                | 1653cf28-1da7-4bb7-b060-872a0da6c0d1 |
    | ip_version        | 4                                    |
    | ipv6_address_mode | None                                 |
    | ipv6_ra_mode      | None                                 |
    | name              | external_subnet                      |
    | network_id        | 444ad6f9-7ad8-43d6-a825-37ff9cbc63c5 |
    | project_id        | f50fbd0341134b97a5a735cca5d6255c     |
    | revision_number   | 0                                    |
    | segment_id        | None                                 |
    | service_types     |                                      |
    | subnetpool_id     | None                                 |
    | tags              |                                      |
    | updated_at        | 2018-10-23T13:55:27Z                 |
    +-------------------+--------------------------------------+

Practice commands

- openstack subnet list -> view subnet information;

- openstack subnet show external_subnet -> view the details of one subnet;

- docker images -> list the images used to create the service containers;

- docker inspect -> inspect a container;

- docker logs -> check a container's log, for example the keystone startup log;

- docker exec -> run a command in a container, for example to check the status of the OpenStack service in the keystone container: docker exec -it keystone /openstack/healthcheck;

- docker stop -> stop a container.
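The docker commands in this list can be strung together into a quick per-container check. This is only a sketch: container_report is a made-up name, the health check path is the one quoted in the list, and the --tail value is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: basic status report for one service container on a controller.

container_report() {
    local name=$1
    # Running state as recorded by the docker daemon.
    docker inspect --format '{{.State.Status}}' "$name"
    # Last lines of the container log (startup messages, errors).
    docker logs --tail 20 "$name"
    # Health check script path taken from the notes.
    docker exec "$name" /openstack/healthcheck
}

# Usage on controller0 (as root):
#   container_report keystone
```

Nothing runs when the block is sourced; the function is meant to be called by hand on controller0.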

Describe OVERCLOUD

OpenStack core components

Private cloud management platform -> IaaS management platform

OpenStack core services on the controller node

| Component name | effect | describe |

|---|---|---|

| ★ keystone | Authentication | All components require authentication |

| ★ glance | Mirror service | Virtual machine image startup |

| ★ nova | Core computing services controller and compute | Role of control node: resource management and scheduling |

| heat | layout | Template, batch creation of host and Application |

| swift | Object storage | Default 3 copy |

| cinder | Block storage | Additional storage, formatting required |

| ★ neutron | network service | It is equivalent to public cloud VPC SDN, virtual network, subnet, router and firewall |

| mysql/mariadb | database | |

| RabbitMQ | Message queue | Messaging services provide internal communication between a variety of OpenStack services. |

| ceilmeter | charging | |

| manila | Shared file system service | |

| octavia | Load balancing service | |

| gnocchi | Indicator service | Provide intelligent analysis of cloud usage, billing, placement, refund and capacity planning. |

| mistral | Workflow services | |

| redis | Memory database | |

| memcached | Cache database | |

| pacemaker | Cluster software | Key services and components are clustered. |

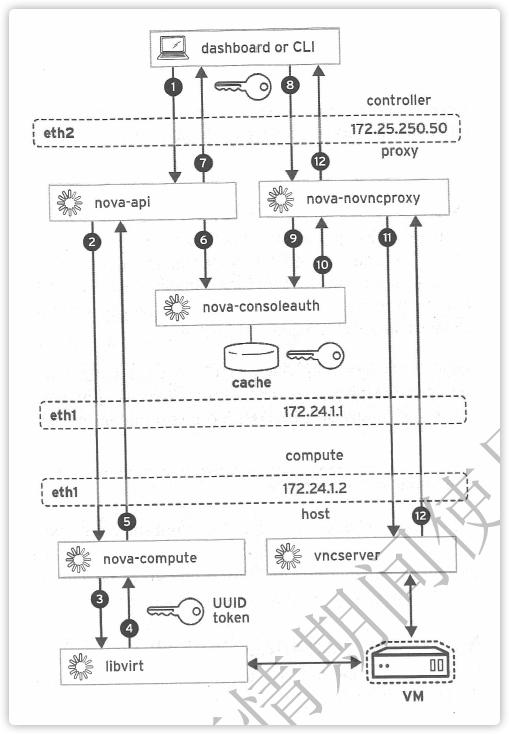

| noVNC | Terminal software console | Access complex graphical SPICE (equivalent to RDP) |

Operations on the OVERCLOUD

openstack user list -> each component has a corresponding keystone user.

Create a virtual machine

- Create a project.

- Allocate resources to the project.

- Create a user, assign it to the project, and select a role.

- Resources needed to create an instance: an image, a private network, a flavor (vCPU/memory/storage), a security group, a key pair (instances only accept key-based login), and a public network address (floating IP).

Procedure: as admin -> Projects -> Create Project -> Create User (at the top).

Log in as user1 -> Images -> Create Image -> (first, as admin -> Flavors) -> own network -> Create Router -> Add Interface -> Security Groups -> Add Rule -> Compute -> Key Pairs -> Instances -> Manage Floating IP Associations -> View Log -> ssh -i key1.pem cloud-user@172.25.250.X

Configure the overcloudrc file: cp overcloudrc overcloudrc-user1, set OS_PROJECT_NAME=project1 and OS_USERNAME=user1, then verify with openstack server list.
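The copy-and-edit step for the per-user rc file can be sketched with sed instead of editing by hand. make_user_rc is a hypothetical helper; it only rewrites the two variables named above. If user1's password differs from the admin password, OS_PASSWORD would presumably need changing too, which the notes do not cover.

```shell
#!/usr/bin/env bash
# Sketch: derive a per-user rc file from overcloudrc.

make_user_rc() {
    local src=$1 dst=$2 project=$3 user=$4
    # Rewrite only the project and user lines; everything else is copied as-is.
    sed -e "s|^export OS_PROJECT_NAME=.*|export OS_PROJECT_NAME=${project}|" \
        -e "s|^export OS_USERNAME=.*|export OS_USERNAME=${user}|" \
        "$src" > "$dst"
}

# Usage on the director node:
#   make_user_rc overcloudrc overcloudrc-user1 project1 user1
#   source overcloudrc-user1
#   openstack server list
```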

Image location: osp-small.qcow2 on materials.example.com. Download it to the current host:

wget http://materials.example.com/osp-small.qcow2

View image details

    [root@foundation9 ~]# qemu-img info osp-small.qcow2
    image: osp-small.qcow2
    file format: qcow2
    virtual size: 10G (10737418240 bytes)
    disk size: 1.5G
    cluster_size: 65536
    Format specific information:
        compat: 0.10
        refcount bits: 16

The private network does not have a gateway, and DHCP must be enabled. Don't forget to create a virtual router that connects the internal and external networks.

Change the key file's permissions to 600; otherwise ssh warns that the private key is unprotected and **refuses to log in**.

    [root@foundation9 Downloads]# ssh -i user1-key1.pem cloud-user@172.25.250.108
    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    @         WARNING: UNPROTECTED PRIVATE KEY FILE!          @
    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    Permissions 0644 for 'user1-key1.pem' are too open.
    It is required that your private key files are NOT accessible by others.
    This private key will be ignored.
    Load key "user1-key1.pem": bad permissions
    cloud-user@172.25.250.108: Permission denied (publickey,gssapi-keyex,gssapi-with-mic).
    [root@foundation9 Downloads]# chmod 600 user1-key1.pem
    [root@foundation9 Downloads]# ssh -i user1-key1.pem cloud-user@172.25.250.108
    [cloud-user@user1-instance1 ~]$
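To avoid the rejected-key error, the permission check can be done before calling ssh. A small sketch, assuming GNU stat (as on RHEL); ensure_private_key_mode is a made-up name.

```shell
#!/usr/bin/env bash
# Sketch: make sure a private key file has mode 600 before using it with ssh.

ensure_private_key_mode() {
    local key=$1 mode
    mode=$(stat -c '%a' "$key")   # GNU stat: octal permission bits
    if [ "$mode" != "600" ]; then
        chmod 600 "$key"
        echo "fixed $key: $mode -> 600"
    fi
}

# Usage:
#   ensure_private_key_mode user1-key1.pem
#   ssh -i user1-key1.pem cloud-user@172.25.250.108
```

The function prints nothing when the mode is already 600, so it is safe to run every time.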

Create a volume

Volumes -> Create Volume -> Manage Attachments -> format the disk inside the instance's OS

Attached storage: after the instance is deleted, the volume still exists and can be attached to a new instance.

Chapter 2: OpenStack control plane

The three elements of the keystone service

- user

- service

- endpoint

Common commands

openstack catalog list: lists the endpoints of each service

Each service has three endpoint URLs:

1. public (externally reachable address);

2. internal (internal network address);

3. admin (management network address)

    (overcloud) [stack@director ~]$ openstack catalog list
    +------------+----------------+------------------------------------------------------------------------------+
    | Name       | Type           | Endpoints                                                                    |
    +------------+----------------+------------------------------------------------------------------------------+
    | cinderv2   | volumev2       | regionOne                                                                    |
    |            |                |   internal: http://172.24.1.50:8776/v2/42eecbfbaf684f909abfe5304434fc77      |
    |            |                | regionOne                                                                    |
    |            |                |   admin: http://172.24.1.50:8776/v2/42eecbfbaf684f909abfe5304434fc77         |
    |            |                | regionOne                                                                    |
    |            |                |   public: http://172.25.250.50:8776/v2/42eecbfbaf684f909abfe5304434fc77      |
    |            |                |                                                                              |
    | octavia    | load-balancer  | regionOne                                                                    |
    |            |                |   public: http://172.25.250.50:9876                                          |
    |            |                | regionOne                                                                    |
    |            |                |   admin: http://172.24.1.50:9876                                             |
    |            |                | regionOne                                                                    |
    |            |                |   internal: http://172.24.1.50:9876                                          |
    |            |                |                                                                              |
    | cinderv3   | volumev3       | regionOne                                                                    |
    |            |                |   public: http://172.25.250.50:8776/v3/42eecbfbaf684f909abfe5304434fc77      |
    |            |                | regionOne                                                                    |
    |            |                |   internal: http://172.24.1.50:8776/v3/42eecbfbaf684f909abfe5304434fc77      |
    |            |                | regionOne                                                                    |
    |            |                |   admin: http://172.24.1.50:8776/v3/42eecbfbaf684f909abfe5304434fc77         |

Message queue RabbitMQ

Exam content: creating users and enabling tracing.

Concepts

- binding key: the filter parameter a queue uses when it is bound to an exchange.

- exchange: receives the messages published by applications and routes them to queues.

- routing key: message metadata (a keyword) specified by the publishing application.

Common exchange types

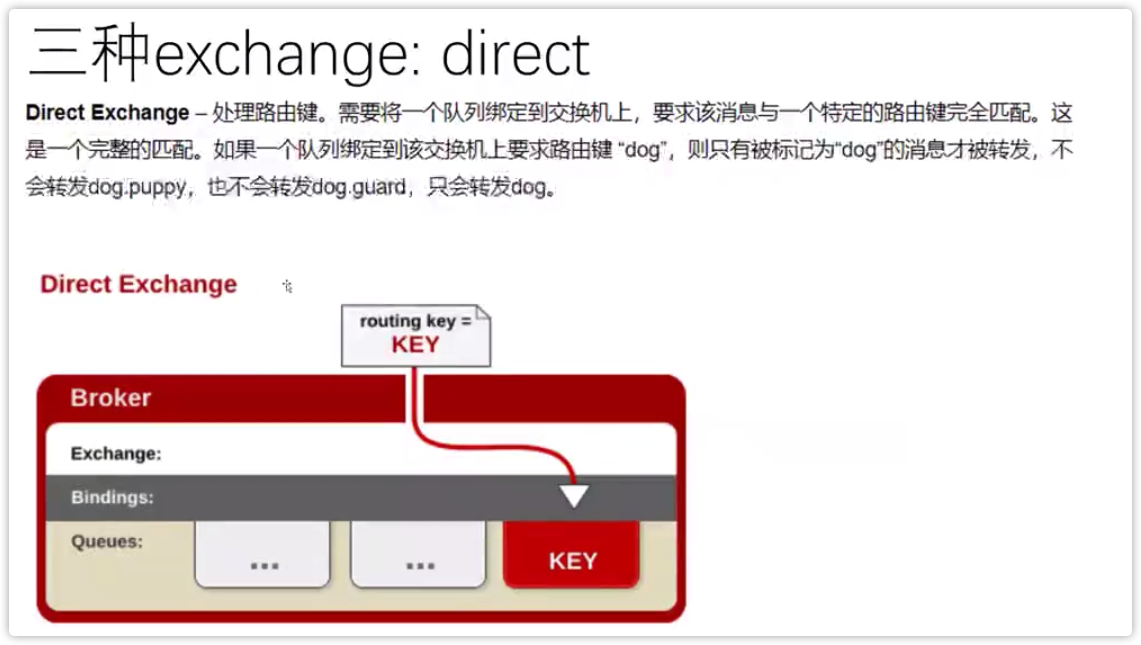

| Direct (default) | The user subscribes to a queue and associates it with a binding key. The server sets the routing key and binding key to associate with the queue subscribed by the user. (exact match) |

|---|---|

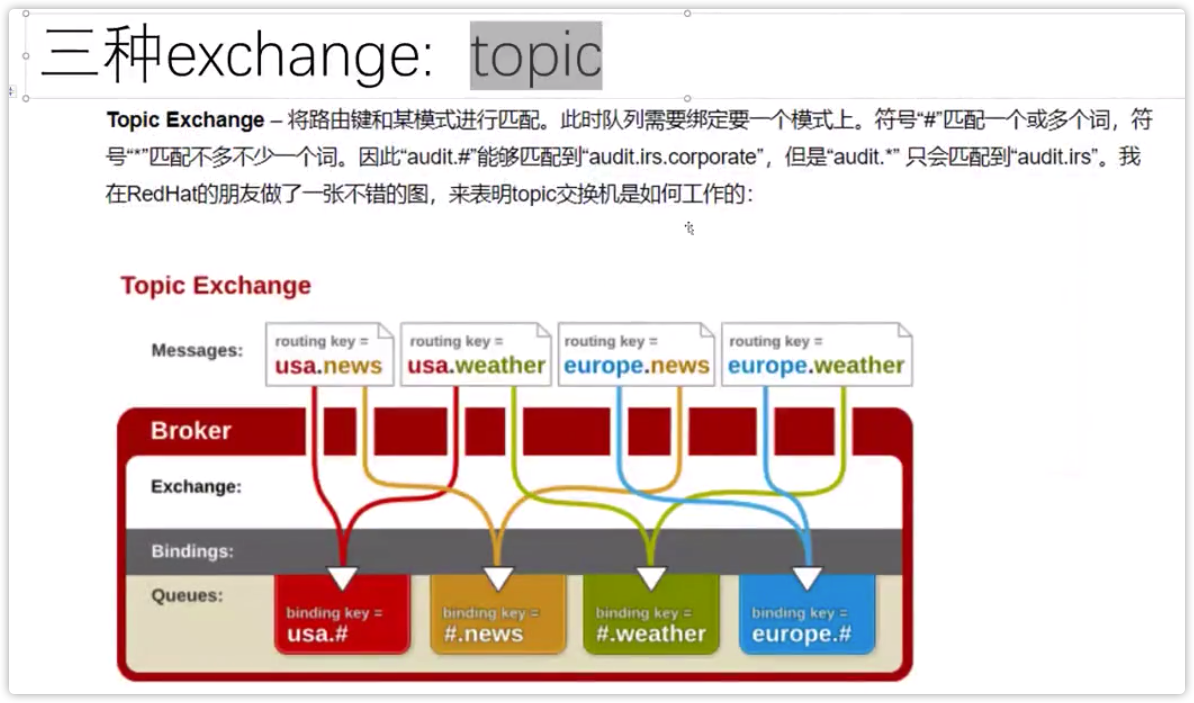

| Topic | The queue subscribed by the user has wildcards (generalized), and the server can send data to the corresponding queue. (partial matching) |

| Fanout | Message broadcast all subscribed queue s, regardless of whether the routing key and binding key match. There is no routing key. (broadcast) |

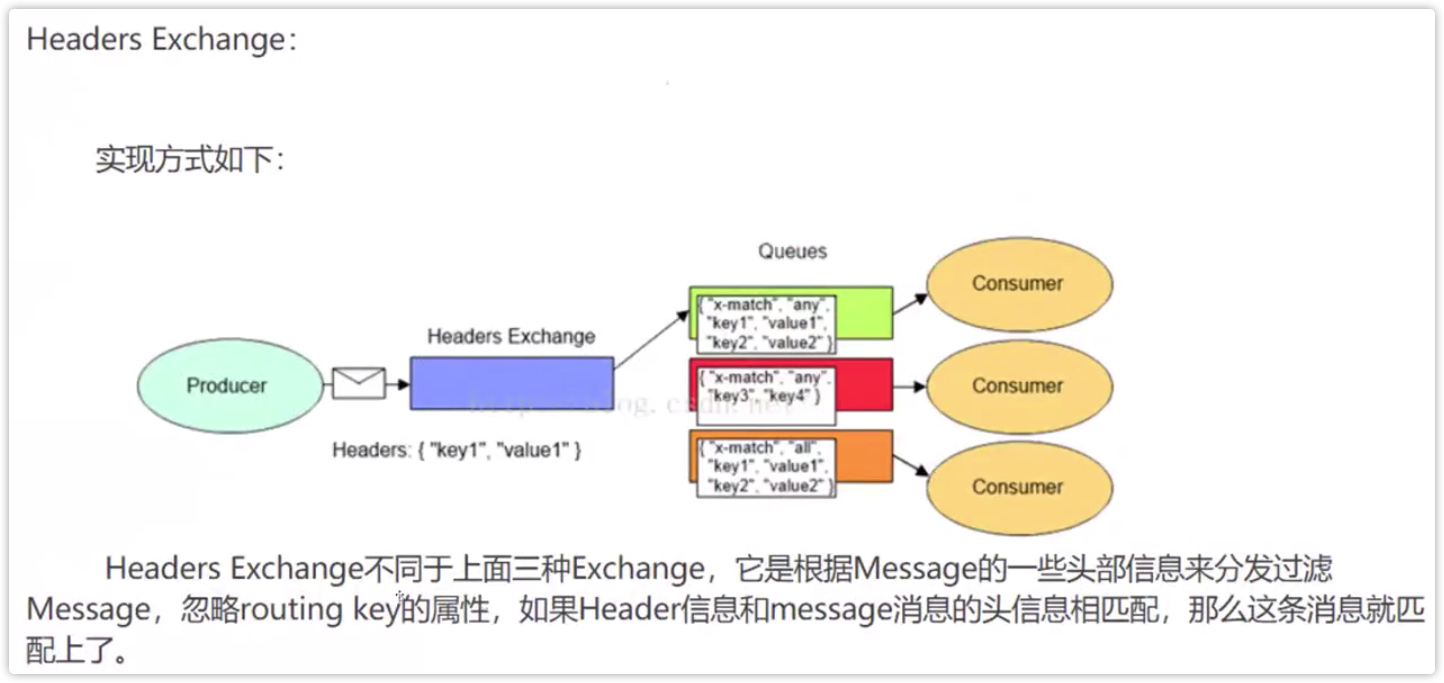

| Header | Use the header feature of the message to bind the queue. (message header information) |

How to manage RabbitMQ and create users

Log in to controller0 and run the rabbitmqctl commands inside the RabbitMQ container:

    [root@controller0 ~]# docker exec -it rabbitmq-bundle-docker-0 bash
    ()[root@controller0 /]# rabbitmqctl help
    ()[root@controller0 /]# rabbitmqctl list_users
    Listing users
    guest [administrator]

Exam point:

Tracing RabbitMQ messages: a built-in feature. When it is enabled, every message entering RabbitMQ is copied to the amq.rabbitmq.trace exchange, which makes it convenient to analyze messages. Enable it with rabbitmqctl trace_on.

P97 exercise in the book, exam example: create a rabbitmq user ash with a password of redhat. The user can create, operate and query RabbitMQ queues and exchanges.

Assign the administrator role, specify permissions, and start trace_on.

    [root@controller0 ~]# docker exec -it rabbitmq-bundle-docker-0 bash
    ()[root@controller0 /]# rabbitmqctl add_user ash redhat
    Creating user "ash"
    ()[root@controller0 /]# rabbitmqctl set_permissions ash ".*" ".*" ".*"
    Setting permissions for user "ash" in vhost "/"
    ()[root@controller0 /]# rabbitmqctl set_user_tags ash administrator
    Setting tags for user "ash" to [administrator]
    ()[root@controller0 /]# rabbitmqctl list_users
    Listing users
    ash []
    guest [administrator]
    ()[root@controller0 /]# rabbitmqctl trace_on
    Starting tracing for vhost "/"
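The same four rabbitmqctl steps can be run from the controller0 host without opening a shell in the container. This is a sketch only: the wrapper names rabbit and create_rabbit_admin are made up, and the container name is the one used in these notes. The three patterns passed to set_permissions are, in order, the configure, write and read permissions.

```shell
#!/usr/bin/env bash
# Sketch: create a RabbitMQ administrator and enable tracing from the host.
RABBIT_CONTAINER=rabbitmq-bundle-docker-0   # container name from the notes

# Run one rabbitmqctl subcommand inside the RabbitMQ container.
rabbit() {
    docker exec "$RABBIT_CONTAINER" rabbitmqctl "$@"
}

create_rabbit_admin() {
    local user=$1 password=$2
    rabbit add_user "$user" "$password" &&
    rabbit set_permissions "$user" '.*' '.*' '.*' &&   # configure, write, read
    rabbit set_user_tags "$user" administrator &&
    rabbit trace_on
}

# Usage on controller0 (as root):
#   create_rabbit_admin ash redhat
#   rabbit list_users
```

Chaining with && stops at the first failing step, so a typo in the user name does not leave tracing switched on by accident.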

Accessing VM flowchart using VNC

Shutting down OpenStack

- Stop all instances on the overcloud: openstack server list --all-projects, then openstack server stop ID

- Shut down the undercloud compute node: poweroff

- Shut down the undercloud control node: poweroff

- On controller0, set the Ceph storage control flags: ceph osd set noout, ceph osd set norecover, ceph osd set norebalance, ceph osd set nobackfill, ceph osd set nodown, ceph osd set pause

- Shut down ceph0

- Power off the storage node: poweroff

- Stop the cluster on controller0 and power off: pcs cluster stop --all, then poweroff

- Shut down director: poweroff
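The six ceph osd set commands in the shutdown list lend themselves to a loop. A minimal sketch, assuming it runs where the ceph CLI is available (controller0 in these notes); set_ceph_flags is a hypothetical name, and the unset direction for startup is my addition rather than something the notes spell out.

```shell
#!/usr/bin/env bash
# Sketch: set (or later unset) the Ceph OSD flags listed in the shutdown steps.
CEPH_FLAGS="noout norecover norebalance nobackfill nodown pause"

set_ceph_flags() {
    local action=$1 flag   # "set" before shutdown, "unset" after startup
    for flag in $CEPH_FLAGS; do
        ceph osd "$action" "$flag" || return 1
    done
}

# Usage:
#   set_ceph_flags set      # before powering off the storage node
#   set_ceph_flags unset    # after everything is back up
```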

Exam example: query the redis service password

ssh controller0

docker exec -it redis-bundle-docker-0 bash

cat /etc/redis.conf | grep -i pass
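The grep above also matches commented-out lines. A slightly stricter sketch picks out only the active requirepass directive; redis_password is a made-up helper, and it assumes the password is set with a plain "requirepass value" line in redis.conf.

```shell
#!/usr/bin/env bash
# Sketch: print the value of the active requirepass directive.
# Reads the file given as $1, or standard input when no file is given.

redis_password() {
    awk '$1 == "requirepass" { print $2 }' "${1:--}"
}

# Usage on controller0, reading the file from inside the container:
#   docker exec redis-bundle-docker-0 cat /etc/redis.conf | redis_password
```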