Deploying a Kubernetes 1.13.10 cluster from binaries on CentOS

1. Installation environment preparation:

Deployment node description

| IP address | Hostname | CPU | Memory | Disk |
|---|---|---|---|---|
| 192.168.250.10 | k8s-master01 | 4C | 4G | 50G |
| 192.168.250.20 | k8s-node01 | 4C | 4G | 50G |
| 192.168.250.30 | k8s-node02 | 4C | 4G | 50G |

Download link for the Kubernetes installation packages: https://pan.baidu.com/s/1fh8gi-GJdM6MsPuofYk6Dw (extraction code: 2333)

Deployment Network Description

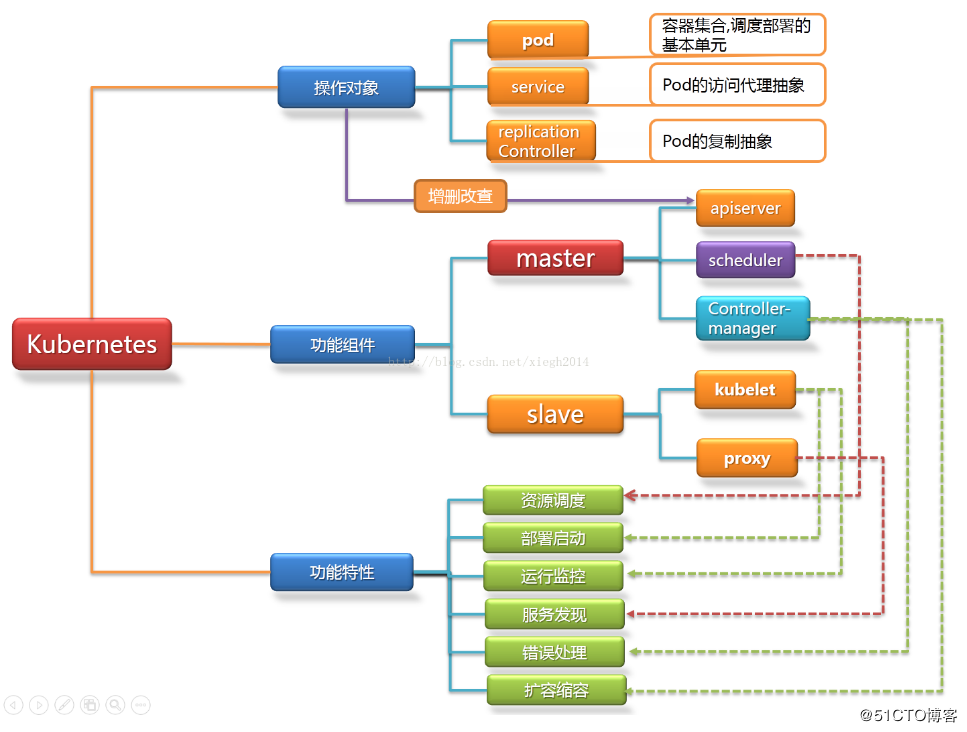

2. Architecture Diagram

Kubernetes Architecture Diagram

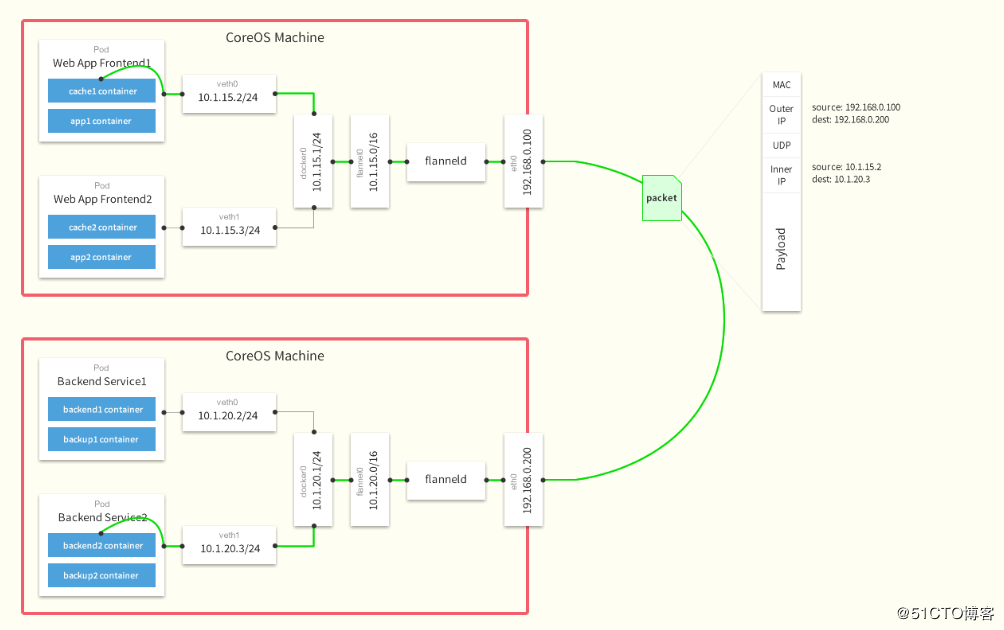

Flannel Network Architecture Diagram

After a packet leaves the source container, the host's docker0 bridge forwards it to the flannel0 virtual interface. flannel0 is a point-to-point virtual interface, and the flanneld service listens on its other end.

Flannel uses etcd to maintain a routing table between the nodes. This is covered in the configuration section later.

On the source host, flanneld encapsulates the original packet in UDP and uses its routing table to deliver it to the flanneld service on the destination node. There the packet is decapsulated and passed directly to the destination node's flannel0 interface.

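The packet is then forwarded to the destination host's docker0 bridge. Finally, docker0 routes it to the destination container, just as it does for traffic between local containers. Note that this walkthrough describes flannel's UDP backend. The vxlan backend configured in this guide uses a flannel.1 VXLAN device instead of flannel0 and performs the encapsulation in the kernel, but the overall path is the same.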
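The delivery step above depends on flannel knowing which node owns each Pod subnet. This POSIX shell sketch shows the lookup. It assumes each node leases a /24 out of the 172.18.0.0/16 Pod network configured later, which is flannel's default subnet length. The lease table and the `next_hop_for` function are hypothetical illustrations, not flanneld's code. flanneld keeps its real leases in etcd under /coreos.com/network/subnets/.

```shell
# Hypothetical lease table: "<node subnet> <node host IP>", one per line.
# Assumes every node leases a /24 out of 172.18.0.0/16.
LEASES='172.18.52.0/24 192.168.250.20
172.18.77.0/24 192.168.250.30'

# next_hop_for <container-ip>: print the host IP whose /24 lease contains
# the container IP; print nothing if no node owns it.
next_hop_for() {
  prefix=${1%.*}                      # 172.18.77.15 -> 172.18.77
  printf '%s\n' "$LEASES" | while read -r subnet host; do
    # 172.18.77.0/24 -> 172.18.77, then compare with the container prefix
    if [ "${subnet%.*/24}" = "$prefix" ]; then
      printf '%s\n' "$host"
    fi
  done
}

next_hop_for 172.18.77.15   # prints 192.168.250.30
```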

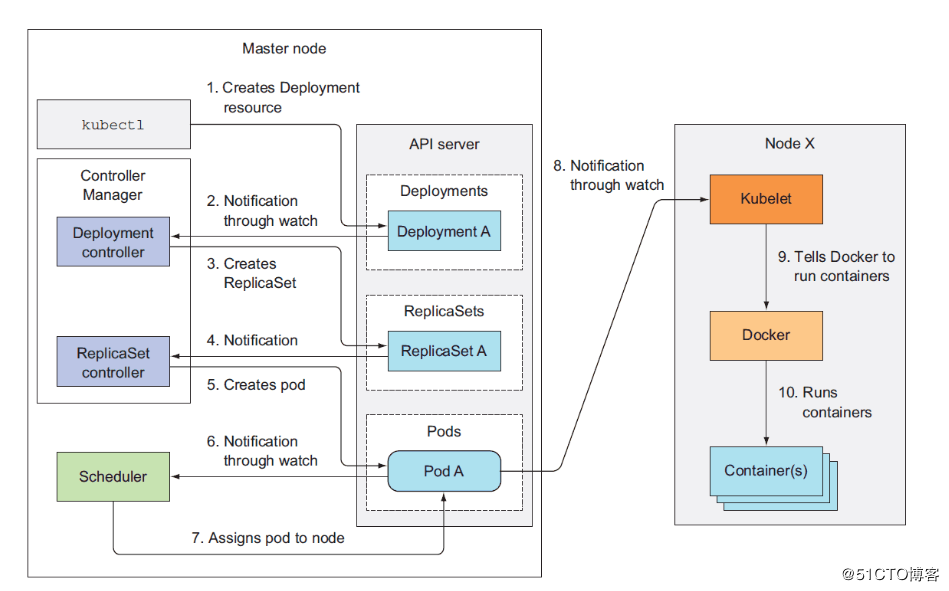

3. Kubernetes workflow

Functions of each cluster component:

Master node:

The master node consists of four modules: APIServer, scheduler, controller-manager and etcd.

APIServer: the APIServer exposes the RESTful Kubernetes API to the outside world and is the single entry point for all management commands. Every addition, change or deletion of a resource goes through the APIServer, which then persists it to etcd. As shown in the figure, kubectl connects directly to the APIServer and interacts with it. kubectl is the client tool provided by Kubernetes, and it calls the Kubernetes API internally.

scheduler: the scheduler assigns Pods to suitable Nodes. Viewed as a black box, its input is a Pod and a list of Nodes, and its output is a binding of the Pod to a Node. Kubernetes provides built-in scheduling algorithms but also keeps an extension interface, so users can define their own scheduling algorithms as needed.

Controller manager: if the APIServer does the front-end work, the controller manager does the back-end work. Each resource type has a corresponding controller, and the controller manager is responsible for managing these controllers. For example, when we create a Pod through the APIServer, the APIServer's task is complete once the Pod has been created successfully.

etcd: etcd is a highly available key-value store. Kubernetes uses it to store the state of every resource, and the RESTful API is built on top of this stored state.

Worker node:

Each Node runs two Kubernetes modules, kubelet and kube-proxy, in addition to the container runtime (Docker in this guide):

kube-proxy: this module implements service discovery and reverse proxying in Kubernetes. It can forward both TCP and UDP connections. By default it uses a round-robin algorithm to spread client traffic across the set of back-end Pods behind a Service. For service discovery, kube-proxy watches the API server, which stores its data in etcd, for changes to Service and Endpoints objects. It maintains a mapping between each Service and its endpoints, so changes in back-end Pod IPs do not affect clients. kube-proxy also supports session affinity.

kubelet: kubelet is the master's agent on each Node and the most important module on the Node. It maintains and manages all containers on the Node, but it does not manage containers that were not created through Kubernetes. In essence, it keeps each Pod running as specified.

II. Installation and Configuration of Kubernetes

1. Initializing the environment

1.1. Disable the firewall and SELinux (master & node)

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

vi /etc/selinux/config

SELINUX=disabled

1.2. Disable swap (master & node)

swapoff -a && sysctl -w vm.swappiness=0

Comment out the swap entry in /etc/fstab:

vi /etc/fstab

#UUID=7bff6243-324c-4587-b550-55dc34018ebf swap swap defaults 0 0
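You can also comment out the swap entry with sed instead of editing /etc/fstab by hand. This is a minimal sketch. `disable_swap_in_fstab` is a hypothetical helper name, and it assumes each swap entry has `swap` as a whitespace-separated field. sed keeps a copy of the original file with a .bak suffix.

```shell
# disable_swap_in_fstab <fstab-path>: prefix every active (uncommented) line
# that has a whitespace-separated "swap" field with "#", keeping <path>.bak.
disable_swap_in_fstab() {
  sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage on a node (as root):
#   swapoff -a && disable_swap_in_fstab /etc/fstab
```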

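1.3. Setting kernel parameters for Docker

vi /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so load the module before applying the settings:

modprobe br_netfilter

sysctl -p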

1.4. Installing Docker

wget https://download.docker.com/linux/centos/docker-ce.repo

mv docker-ce.repo /etc/yum.repos.d/

yum list docker-ce --showduplicates | sort -r

yum install docker-ce -y

systemctl start docker && systemctl enable docker

1.5. Create installation directory

mkdir /k8s/etcd/{bin,cfg,ssl} -p

mkdir /k8s/kubernetes/{bin,cfg,ssl} -p

1.6. Installing and configuring CFSSL

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

1.7. Creating Certificates

Create ETCD certificates

cat << EOF | tee server-csr.json

{

"CN": "etcd",

"hosts": [

"192.168.250.10",

"192.168.250.20",

"192.168.250.30"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Jiangxi",

"ST": "Jiangxi"

}

]

}

EOF

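Generate the etcd CA certificate and private key, then sign the etcd server certificate.

Both commands need a ca-csr.json and a ca-config.json in the current directory. These files can follow the same structure as the Kubernetes CA files created in the next step, with a CN such as "etcd CA". Work in a separate directory from the Kubernetes certificates, because both CAs are written to ca.pem and ca-key.pem. The -profile value must match a profile defined in ca-config.json, which is kubernetes in the file shown below.

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server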

Creating Kubernetes CA Certificate

cat << EOF | tee ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat << EOF | tee ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shenzhen",

"ST": "Shenzhen",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

Generating API_SERVER Certificate

cat << EOF | tee server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.250.10",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Jiangxi",

"ST": "Jiangxi",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
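When you edit the hosts list in a JSON heredoc by hand, it is easy to drop a quote or a comma. The minimal sketch below generates the API server CSR from a list of arguments instead. `write_server_csr` is a hypothetical helper, and its names block reuses the values from the CSR above.

```shell
# write_server_csr <output-file> <host>...: write a cfssl CSR for the API
# server whose "hosts" list contains every remaining argument, quoted and
# comma-separated.
write_server_csr() {
  out=$1
  shift
  hosts=""
  for h in "$@"; do
    # Add ", " before every entry except the first
    hosts="${hosts:+$hosts, }\"$h\""
  done
  cat > "$out" <<EOF
{
  "CN": "kubernetes",
  "hosts": [ $hosts ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "Jiangxi", "ST": "Jiangxi", "O": "k8s", "OU": "System" }
  ]
}
EOF
}

# Usage (then run the cfssl gencert command above):
#   write_server_csr server-csr.json 10.0.0.1 127.0.0.1 192.168.250.10 \
#     kubernetes kubernetes.default kubernetes.default.svc \
#     kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local
```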

Creating Kubernetes Proxy Certificate

cat << EOF | tee kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Jiangxi",

"ST": "Jiangxi",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

1.8. SSH key authentication

# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:FQjjiRDp8IKGT+UDM+GbQLBzF3DqDJ+pKnMIcHGyO/o root@qas-k8s-master01

# ssh-copy-id 192.168.250.20

# ssh-copy-id 192.168.250.30

2. Deployment of ETCD

Extract the installation package

tar -xvf etcd-v3.3.14-linux-amd64.tar.gz -C /home/file/

cd /home/file/etcd-v3.3.14-linux-amd64/

cp etcd etcdctl /k8s/etcd/bin/

[root@k8s-master ~]# vi /k8s/etcd/cfg/etcd

#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.250.10:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.250.10:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.250.10:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.250.10:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.250.10:2380,etcd02=https://192.168.250.20:2380,etcd03=https://192.168.250.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Create etcd's systemd unit file

vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/k8s/etcd/cfg/etcd

ExecStart=/k8s/etcd/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/k8s/etcd/ssl/server.pem \
--key-file=/k8s/etcd/ssl/server-key.pem \
--peer-cert-file=/k8s/etcd/ssl/server.pem \
--peer-key-file=/k8s/etcd/ssl/server-key.pem \
--trusted-ca-file=/k8s/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/k8s/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

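Copy the certificate files. Keep the etcd certificates and the Kubernetes certificates apart: both sets contain files named ca.pem and server.pem, so copy the etcd ones from the directory where they were generated.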

cp ca*pem server*pem /k8s/etcd/ssl

Start ETCD service

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

Copy the etcd directory and the systemd unit file to node01 and node02

cd /k8s/

scp -r etcd root@192.168.250.20:/k8s/

scp -r etcd root@192.168.250.30:/k8s/

scp /usr/lib/systemd/system/etcd.service root@192.168.250.20:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.250.30:/usr/lib/systemd/system/

On node01 and node02, edit the copied configuration so that the member name and addresses match the local node.

node01 (192.168.250.20):

vi /k8s/etcd/cfg/etcd

#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.250.20:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.250.20:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.250.20:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.250.20:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.250.10:2380,etcd02=https://192.168.250.20:2380,etcd03=https://192.168.250.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

node02 (192.168.250.30):

vi /k8s/etcd/cfg/etcd

#[Member]
ETCD_NAME="etcd03"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.250.30:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.250.30:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.250.30:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.250.30:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.250.10:2380,etcd02=https://192.168.250.20:2380,etcd03=https://192.168.250.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
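The three etcd configuration files differ only in the member name and IP address, so you can generate them instead of editing each one by hand. This is a minimal sketch. `write_etcd_env` is a hypothetical helper, and the cluster list is the one used throughout this guide.

```shell
# Member list shared by all three nodes in this guide
ETCD_CLUSTER="etcd01=https://192.168.250.10:2380,etcd02=https://192.168.250.20:2380,etcd03=https://192.168.250.30:2380"

# write_etcd_env <file> <member-name> <member-ip>: render the etcd
# EnvironmentFile for one member of the cluster.
write_etcd_env() {
  cat > "$1" <<EOF
#[Member]
ETCD_NAME="$2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$3:2380"
ETCD_LISTEN_CLIENT_URLS="https://$3:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$3:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$3:2379"
ETCD_INITIAL_CLUSTER="$ETCD_CLUSTER"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage on node01:
#   write_etcd_env /k8s/etcd/cfg/etcd etcd02 192.168.250.20
```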

Verify that the cluster is functioning properly

cd /k8s/etcd/bin

./etcdctl --ca-file=/k8s/etcd/ssl/ca.pem --cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem --endpoints="https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379" cluster-health

member 6445455ff5c1ee8c is healthy: got healthy result from https://192.168.250.30:2379

member 81271f3a4d058dd5 is healthy: got healthy result from https://192.168.250.20:2379

member a2f20cd0d3f67b4f is healthy: got healthy result from https://192.168.250.10:2379

cluster is healthy

Note:

Start at least two etcd members at about the same time. A member started on its own cannot form the cluster: it waits for its peers, so systemctl start appears to hang and can time out.
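The same three client URLs appear in etcdctl --endpoints, kube-apiserver --etcd-servers and flanneld --etcd-endpoints. A small helper can build this list from the member IPs, which avoids stray quotes and typos in long URL strings. `etcd_endpoints` is a hypothetical name, and port 2379 is the client port configured above.

```shell
# etcd_endpoints <ip>...: print the comma-separated list of HTTPS client
# URLs (port 2379) for the given etcd member IPs.
etcd_endpoints() {
  list=""
  for ip in "$@"; do
    list="${list:+$list,}https://$ip:2379"
  done
  printf '%s\n' "$list"
}

# Usage:
#   ENDPOINTS=$(etcd_endpoints 192.168.250.10 192.168.250.20 192.168.250.30)
#   /k8s/etcd/bin/etcdctl --ca-file=/k8s/etcd/ssl/ca.pem \
#     --cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem \
#     --endpoints="$ENDPOINTS" cluster-health
```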

3. Deployment of Flannel Network

cd /k8s/etcd/ssl/

/k8s/etcd/bin/etcdctl \
--ca-file=ca.pem --cert-file=server.pem \
--key-file=server-key.pem \
--endpoints=https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379 \
set /coreos.com/network/config '{ "Network": "172.18.0.0/16", "Backend": {"Type": "vxlan"}}'

- The flanneld release installed below (v0.9.1) does not support the etcd v3 API, and neither does v0.10.0. The etcd v2 API is therefore used to write the configuration key and the subnet data.

- The Pod network written here (172.18.0.0/16) must be a /16 range and must be consistent with the --cluster-cidr value of kube-controller-manager (when that flag is set);
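You can check the /16 rule above before writing the key. In this minimal sketch, `flannel_net_config` is a hypothetical helper. It rejects a Pod network that is not a /16 and prints the JSON value in the same form as the etcdctl set command above.

```shell
# flannel_net_config <pod-cidr>: print the value for /coreos.com/network/config,
# or fail if the Pod network is not a /16.
flannel_net_config() {
  case "$1" in
    */16) ;;
    *) echo "Pod network must be a /16, got: $1" >&2; return 1 ;;
  esac
  printf '{ "Network": "%s", "Backend": {"Type": "vxlan"}}\n' "$1"
}

# Usage (from /k8s/etcd/ssl, same flags as above):
#   /k8s/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem \
#     --key-file=server-key.pem \
#     --endpoints=https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379 \
#     set /coreos.com/network/config "$(flannel_net_config 172.18.0.0/16)"
```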

Extract and install

tar -xvf flannel-v0.9.1-linux-amd64.tar.gz -C /home/file

mv /home/file/flanneld /home/file/mk-docker-opts.sh /k8s/kubernetes/bin/

Configure flannel (node)

vi /k8s/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379 -etcd-cafile=/k8s/etcd/ssl/ca.pem -etcd-certfile=/k8s/etcd/ssl/server.pem -etcd-keyfile=/k8s/etcd/ssl/server-key.pem"

Create flanneld's systemd unit file

vi /usr/lib/systemd/system/flanneld.service

[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/k8s/kubernetes/cfg/flanneld
ExecStart=/k8s/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/k8s/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target

The mk-docker-opts.sh script takes the Pod subnet allocated to flanneld and writes it as DOCKER_NETWORK_OPTIONS into /run/flannel/subnet.env, the file given with -d above. Docker reads this file at start-up to configure the docker0 bridge.

flanneld communicates with the other nodes through the interface that holds the system's default route. On nodes with several network interfaces (for example an internal and a public network), use the -iface parameter to choose the interface, for example -iface=eth0.

flanneld must run with root privileges.
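The sketch below shows roughly what mk-docker-opts.sh derives. It is a simplified, hypothetical re-implementation (`docker_opts_from_env`), not the script itself. It assumes subnet.env contains the FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ variables that flanneld writes. It also assumes Docker's own masquerading is switched off when flanneld runs with --ip-masq, as it does in the unit file above.

```shell
# docker_opts_from_env <subnet.env>: build Docker bridge options from the
# variables flanneld writes. Simplified sketch, not mk-docker-opts.sh itself.
docker_opts_from_env() {
  # Source the file in a subshell so its variables do not leak
  (
    . "$1"
    opts="--bip=$FLANNEL_SUBNET --mtu=$FLANNEL_MTU"
    # flanneld already masquerades Pod traffic, so Docker should not
    if [ "$FLANNEL_IPMASQ" = "true" ]; then
      opts="$opts --ip-masq=false"
    fi
    printf 'DOCKER_NETWORK_OPTIONS="%s"\n' "$opts"
  )
}
```

For example, FLANNEL_SUBNET=172.18.52.1/24 gives docker0 the address 172.18.52.1/24, and FLANNEL_MTU=1450 matches the MTU of the flannel.1 interface.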

Configure Docker to start with the flannel-assigned subnet (node)

vi /usr/lib/systemd/system/docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

Copy the flanneld configuration and the systemd unit files to all nodes

cd /k8s/

scp -r kubernetes 192.168.250.30:/k8s/

scp /k8s/kubernetes/cfg/flanneld 192.168.250.30:/k8s/kubernetes/cfg/flanneld

scp /usr/lib/systemd/system/docker.service 192.168.250.30:/usr/lib/systemd/system/docker.service

scp /usr/lib/systemd/system/flanneld.service 192.168.250.30:/usr/lib/systemd/system/flanneld.service

Start the services:

systemctl daemon-reload

systemctl start flanneld

systemctl enable flanneld

systemctl restart docker

Check to see if it works

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:3a:60:b0 brd ff:ff:ff:ff:ff:ff

inet 192.168.250.20/24 brd 192.168.250.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::3823:a9d:a68:50fd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:d3:11:a3:83 brd ff:ff:ff:ff:ff:ff

inet 172.18.52.1/24 brd 172.18.52.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 72:05:56:90:bf:ba brd ff:ff:ff:ff:ff:ff

inet 172.18.52.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::7005:56ff:fe90:bfba/64 scope link

valid_lft forever preferred_lft forever

4. Deployment of master nodes

The kubernetes master node runs the following components:

•kube-apiserver

•kube-scheduler

•kube-controller-manager

kube-scheduler and kube-controller-manager can run in cluster mode. Leader election selects one working instance, and the other instances stay blocked.

Unzip and copy binary files to master node

tar -xvf kubernetes-server-linux-amd64.tar.gz -C /home/file

cd /home/file/kubernetes/server/bin/

cp kube-scheduler kube-apiserver kube-controller-manager kubectl /k8s/kubernetes/bin/

Copy the certificates

cp *pem /k8s/kubernetes/ssl/

Deploy the kube-apiserver component

Create TLS Bootstrapping Token

# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

75e5e51ee1c43417e1a161ead8801ce3

vi /k8s/kubernetes/cfg/token.csv

75e5e51ee1c43417e1a161ead8801ce3,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
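You can produce the token and the token.csv line together, so the same value ends up in token.csv and later in BOOTSTRAP_TOKEN. This minimal sketch uses the pipeline above. The function names are hypothetical, and the user, UID and group are the values used in this guide.

```shell
# new_bootstrap_token: 16 random bytes printed as 32 hex characters.
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# token_csv_line <token>: one kube-apiserver --token-auth-file entry in the
# form token,user,uid,"group".
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Usage:
#   BOOTSTRAP_TOKEN=$(new_bootstrap_token)
#   token_csv_line "$BOOTSTRAP_TOKEN" > /k8s/kubernetes/cfg/token.csv
```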

Create apiserver configuration file

vi /k8s/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379 \
--bind-address=192.168.250.10 \
--secure-port=6443 \
--advertise-address=192.168.250.10 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/k8s/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/k8s/kubernetes/ssl/server.pem \
--tls-private-key-file=/k8s/kubernetes/ssl/server-key.pem \
--client-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/k8s/etcd/ssl/ca.pem \
--etcd-certfile=/k8s/etcd/ssl/server.pem \
--etcd-keyfile=/k8s/etcd/ssl/server-key.pem"

Create the kube-apiserver systemd unit file

vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-apiserver
ExecStart=/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Startup service

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

Check whether apiserver is running

ps -ef |grep kube-apiserver

root 13226 1 3 02:12 ? 00:05:18 /k8s/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.250.10:2379,https://192.168.250.20:2379,https://192.168.250.30:2379 --bind-address=192.168.250.10 --secure-port=6443 --advertise-address=192.168.250.10 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --enable-bootstrap-token-auth --token-auth-file=/k8s/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/k8s/kubernetes/ssl/server.pem --tls-private-key-file=/k8s/kubernetes/ssl/server-key.pem --client-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem --etcd-cafile=/k8s/etcd/ssl/ca.pem --etcd-certfile=/k8s/etcd/ssl/server.pem --etcd-keyfile=/k8s/etcd/ssl/server-key.pem

root 14479 13193 1 04:26 pts/0 00:00:00 grep --color=auto kube-apiserver

Deployment of kube-scheduler

Create the kube-scheduler configuration file

vi /k8s/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"

- --address: kube-scheduler serves HTTP /metrics requests on port 10251 (for example 127.0.0.1:10251); kube-scheduler does not currently accept HTTPS requests there;

- --kubeconfig: path of the kubeconfig file that kube-scheduler uses to connect to and authenticate with kube-apiserver (this guide uses --master=127.0.0.1:8080 instead);

- --leader-elect=true: cluster mode with leader election enabled; the instance elected as leader does the work, and the others stay blocked;

Create the kube-scheduler systemd unit file

vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-scheduler
ExecStart=/k8s/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Startup service

systemctl daemon-reload

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service

Check whether kube-scheduler is running

ps -ef |grep kube-scheduler

root 14391 1 1 03:49 ? 00:00:32 /k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

root 14484 13193 0 04:30 pts/0 00:00:00 grep --color=auto kube-scheduler

Deployment of kube-controller-manager

Create the kube-controller-manager configuration file

vi /k8s/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--root-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/k8s/kubernetes/ssl/ca-key.pem"

Create the kube-controller-manager systemd unit file

vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-controller-manager
ExecStart=/k8s/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Startup service

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

Check whether kube-controller-manager is running

ps -ef |grep kube-controller-manager

root 14397 1 2 03:49 ? 00:00:57 /k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem --cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem --root-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-private-key-file=/k8s/kubernetes/ssl/ca-key.pem

root 14489 13193 0 04:32 pts/0 00:00:00 grep --color=auto kube-controller-manager

Add the executable directory /k8s/kubernetes/bin to the PATH variable

vi /etc/profile

PATH=/k8s/kubernetes/bin:$PATH:$HOME/bin

source /etc/profile

View master cluster status

kubectl get cs,nodes

NAME STATUS MESSAGE ERROR

componentstatus/etcd-2 Healthy {"health":"true"}

componentstatus/etcd-1 Healthy {"health":"true"}

componentstatus/etcd-0 Healthy {"health":"true"}

componentstatus/controller-manager Healthy ok

componentstatus/scheduler Healthy ok

NAME STATUS ROLES AGE VERSION

node/192.168.250.10 NotReady <none> 6h26m v1.13.10

node/192.168.250.20 Ready <none> 144m v1.13.10

node/192.168.250.30 Ready <none> 144m v1.13.10

5. Deploying the worker nodes

The Kubernetes worker nodes run the following components:

- docker (already deployed above)

- kubelet

- kube-proxy

Deployment of kubelet components

- kubelet runs on every worker node. It receives requests sent by kube-apiserver, manages Pod containers, and executes interactive commands such as exec, run and logs.

- At start-up, kubelet automatically registers the node with kube-apiserver, and its built-in cAdvisor collects and monitors the node's resource usage.

- For security, this document only opens the secure port that accepts HTTPS requests. Requests to it are authenticated and authorized, and unauthorized access (for example from apiserver or heapster) is denied.

Copy the kubelet and kube-proxy binaries to the worker nodes

cp kubelet kube-proxy /k8s/kubernetes/bin/

scp kubelet kube-proxy 192.168.250.20:/k8s/kubernetes/bin/

scp kubelet kube-proxy 192.168.250.30:/k8s/kubernetes/bin/

Create the kubelet bootstrap kubeconfig file

vi environment.sh

# Create kubelet bootstrapping kubeconfig

BOOTSTRAP_TOKEN=75e5e51ee1c43417e1a161ead8801ce3

KUBE_APISERVER="https://192.168.250.10:6443"

# Setting cluster parameters

kubectl config set-cluster kubernetes \
--certificate-authority=./ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

# Setting Client Authentication Parameters

kubectl config set-credentials kubelet-bootstrap \
--token=${BOOTSTRAP_TOKEN} \
--kubeconfig=bootstrap.kubeconfig

# Setting context parameters

kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

# Setting default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Create a kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \
--certificate-authority=./ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=./kube-proxy.pem \
--client-key=./kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

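Run the script (bash environment.sh) in the directory that holds ca.pem, kube-proxy.pem and kube-proxy-key.pem. Then copy bootstrap.kubeconfig and kube-proxy.kubeconfig to all nodes.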

cp bootstrap.kubeconfig kube-proxy.kubeconfig /k8s/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.250.20:/k8s/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.250.30:/k8s/kubernetes/cfg/

Create a kubelet parameter profile and copy it to all nodes

Create a kubelet parameter configuration template file:

vi /k8s/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.250.20  # IP of the current node

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS: ["10.0.0.2"]

clusterDomain: cluster.local.

failSwapOn: false

authentication:

  anonymous:

    enabled: true
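kubelet.config differs between nodes only in the address field, so you can render it for each node. This is a minimal sketch. `write_kubelet_config` is a hypothetical helper, and all other values are copied from the file above.

```shell
# write_kubelet_config <file> <node-ip>: render kubelet.config for one node,
# keeping this guide's values and varying only the listen address.
write_kubelet_config() {
  cat > "$1" <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: $2
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
EOF
}

# Usage on node02:
#   write_kubelet_config /k8s/kubernetes/cfg/kubelet.config 192.168.250.30
```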

Create a kubelet configuration file

vi /k8s/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.250.20 \
--kubeconfig=/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/k8s/kubernetes/cfg/kubelet.config \
--cert-dir=/k8s/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

- --hostname-override: the IP of the current node

Create the kubelet systemd unit file

vi /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kubelet
ExecStart=/k8s/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role (master)

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

Startup service

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

Approve the kubelet CSR requests

CSR requests can be approved manually or automatically. The automatic approach is recommended, because starting with v1.8 the certificates issued after a CSR is approved can be rotated automatically.

Manual approve CSR request

View the CSR list:

# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs 39m kubelet-bootstrap Pending

node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s 5m5s kubelet-bootstrap Pending

# kubectl certificate approve node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs

certificatesigningrequest.certificates.k8s.io/node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs approved

# kubectl certificate approve node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s

certificatesigningrequest.certificates.k8s.io/node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s approved

# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-An1VRgJ7FEMMF_uyy6iPjyF5ahuLx6tJMbk2SMthwLs 41m kubelet-bootstrap Approved,Issued

node-csr-dWPIyP_vD1w5gBS4iTZ6V5SJwbrdMx05YyybmbW3U5s 7m32s kubelet-bootstrap Approved,Issued

- Requesting User: the user that submitted the CSR; kube-apiserver authenticates and authorizes it;

- Subject: the certificate information whose signature is requested;

- The certificate CN has the form system:node:<node name>, for example system:node:kube-node2, and the Organization is system:nodes. kube-apiserver's Node authorization mode grants the corresponding permissions to this certificate;
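You can script the manual approval shown above by picking the Pending requests out of the kubectl get csr output. This is still manual approval, not the automatic approval recommended above. `pending_csrs` is a hypothetical helper, and it assumes the column layout shown above (NAME AGE REQUESTOR CONDITION). It only selects requests from kubelet-bootstrap, so unrelated CSRs are not approved blindly.

```shell
# pending_csrs: read `kubectl get csr` output on stdin and print the names
# of Pending requests submitted by the kubelet-bootstrap user.
pending_csrs() {
  awk 'NR > 1 && $3 == "kubelet-bootstrap" && $NF == "Pending" { print $1 }'
}

# Usage on the master:
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```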

View cluster status

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.250.10 NotReady <none> 6h44m v1.13.10

192.168.250.20 Ready <none> 162m v1.13.10

192.168.250.30 Ready <none> 161m v1.13.10

Deployment of kube-proxy components

kube-proxy runs on every worker node. It watches the API server for changes to Services and Endpoints and creates forwarding rules that load-balance traffic across each Service's back ends.

Create the kube-proxy configuration file

vi /k8s/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.250.20 \
--cluster-cidr=172.18.0.0/16 \
--kubeconfig=/k8s/kubernetes/cfg/kube-proxy.kubeconfig"

- bindAddress: the listen address;

- clientConnection.kubeconfig (--kubeconfig): the kubeconfig file used to connect to apiserver;

- clusterCIDR (--cluster-cidr): kube-proxy uses it to tell cluster-internal traffic from external traffic. Only when --cluster-cidr or --masquerade-all is specified does kube-proxy SNAT requests to Service IPs. Set it to the Pod network written to etcd for flannel (172.18.0.0/16), not to the Service range 10.0.0.0/24;

- hostnameOverride (--hostname-override): must have the same value as in kubelet. Otherwise kube-proxy cannot find its Node after starting and does not create any forwarding rules;

- mode: the proxy mode, for example ipvs. The options above do not set it, so kube-proxy uses its default mode.
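The internal/external decision that --cluster-cidr enables can be modelled as a CIDR membership test on the source address. This simplified sketch assumes the Pod network 172.18.0.0/16 and 64-bit shell arithmetic. The helper names are hypothetical, and kube-proxy itself implements this as iptables or ipvs rules rather than shell code.

```shell
# ip_to_int <a.b.c.d>: print an IPv4 address as a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the dotted quad into four octets
  IFS=$old_ifs
  echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# in_cidr <ip> <network/prefix>: succeed if ip lies inside the CIDR block.
in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  ip_n=$(ip_to_int "$1")
  net_n=$(ip_to_int "$net")
  # Netmask with the top $bits bits set, truncated to 32 bits
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( $ip_n & $mask )) -eq $(( $net_n & $mask )) ]
}

POD_CIDR=172.18.0.0/16   # assumed value of kube-proxy --cluster-cidr

# service_snat_decision <source-ip>: traffic to a Service IP from outside the
# Pod network is marked for masquerading (SNAT); Pod traffic is left alone.
service_snat_decision() {
  if in_cidr "$1" "$POD_CIDR"; then
    echo "no-snat"
  else
    echo "masquerade"
  fi
}
```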

Create the kube-proxy systemd unit file

vi /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-proxy
ExecStart=/k8s/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Startup service

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

Cluster state

Label the master and worker nodes

kubectl label node 172.16.8.100 node-role.kubernetes.io/master='master'

kubectl label node 192.168.250.20 node-role.kubernetes.io/node='node'

kubectl label node 192.168.250.30 node-role.kubernetes.io/node='node'

# kubectl get node

NAME STATUS ROLES AGE VERSION

172.16.8.100 Ready master 137m v1.13.0

192.168.250.20 Ready node 167m v1.13.10

192.168.250.30 Ready node 167m v1.13.10