Building a k8s cluster on CentOS 7

Environment:

| IP | Hostname | Role |
|---|---|---|
| 192.168.25.133 | k8s01 | master |
| 192.168.25.134 | k8s02 | slave |
| 192.168.25.135 | k8s03 | slave |

Install the necessary software

yum install -y net-tools.x86_64 wget yum-utils

Configure hosts

cat >> /etc/hosts << EOF
192.168.25.133 k8s01
192.168.25.134 k8s02
192.168.25.135 k8s03
EOF
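The command above appends unconditionally, so running it a second time duplicates the entries. A minimal sketch of an idempotent variant follows; the function name add_host_entry and its optional third argument (the file to edit, defaulting to /etc/hosts) are illustrative assumptions, not part of the original steps.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only when NAME is not
# already present as a whole field on a non-comment line.
# Usage (as root): add_host_entry 192.168.25.133 k8s01
add_host_entry() {
  hosts_file="${3:-/etc/hosts}"
  if ! grep -Eq "^[^#]*[[:space:]]$2([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$1" "$2" >> "$hosts_file"
  fi
}
```

Calling it once per row of the environment table gives the same result as the heredoc on a fresh machine and changes nothing on a repeat run.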

Turn off firewall

systemctl disable firewalld
systemctl stop firewalld

Disable selinux so that the container can read the host file system smoothly

setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

Disable swap. Swap is the disk-backed virtual memory that the operating system falls back on when physical memory is tight. According to the official Kubernetes website, swap affects the performance of Kubernetes and is not recommended

swapoff -a       # temporary: swap stays off until the next reboot
vi /etc/fstab    # permanent: delete or comment out the swap line

Either the temporary or the permanent method can be used
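The manual fstab edit can also be scripted. The sketch below is one possible way to do it with sed; the function name comment_out_swap is a hypothetical choice, and the pattern assumes the swap entry contains the word swap as a whitespace-delimited field, as in a default CentOS 7 fstab.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# The original file is kept next to it with a .bak suffix.
# Usage (as root): comment_out_swap /etc/fstab
comment_out_swap() {
  fstab_file="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix '#' to lines with a swap field.
  sed -E -i.bak '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}
```

Running swapoff -a afterwards turns swap off for the current boot as well, so no reboot is needed.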

If you choose not to disable swap, edit /etc/sysconfig/kubelet (vi /etc/sysconfig/kubelet) and set:

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

Pass bridged IPv4 traffic to the iptables chains:

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

Apply the settings:

sysctl --system
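The net.bridge.* keys exist only while the br_netfilter kernel module is loaded, so it can be worth confirming that the setting actually took effect. A minimal sketch, assuming a hypothetical helper name bridge_nf_enabled and an optional root-directory argument that exists only to make the check testable:

```shell
# Hypothetical helper: succeed only if bridge-nf-call-iptables reads as 1.
# The optional argument replaces /proc/sys, which is useful for dry runs.
bridge_nf_enabled() {
  sys_root="${1:-/proc/sys}"
  [ "$(cat "$sys_root/net/bridge/bridge-nf-call-iptables" 2>/dev/null)" = "1" ]
}
```

If the check fails because the file is missing, loading the module with modprobe br_netfilter and rerunning sysctl --system is the usual remedy.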

Run the following on each of the three machines so that each one generates its own SSH public/private key pair

ssh-keygen -t rsa -f /root/.ssh/id_rsa -P ""

On each of the three machines, set up passwordless SSH access to the other machines

ssh-copy-id k8s01
ssh-copy-id k8s02
ssh-copy-id k8s03

Download the docker-ce.repo file to the /etc/yum.repos.d/ directory

wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Create the Kubernetes repository file

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

Restart chronyd to synchronize the system time (so that the clocks on all servers stay consistent)

systemctl restart chronyd

Configure the Docker repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker, start it, and enable it at boot

yum install -y docker-ce
systemctl enable docker && systemctl start docker

Configure a registry mirror to speed up image pulls

cat > /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://3******.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

Reload and restart docker

systemctl daemon-reload && systemctl restart docker

Install kubelet, kubeadm and kubectl, then start kubelet and enable it at boot

yum install -y kubelet kubeadm kubectl
systemctl enable kubelet && systemctl start kubelet

Run kubeadm version to check the version number of the installed kubeadm

Initialize the cluster on the master node. Initialization is slow, so wait patiently. The following command installs the latest version of Kubernetes directly. To install a specific version, add the --kubernetes-version parameter, for example: --kubernetes-version=v1.17.4

kubeadm init --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16 --apiserver-advertise-address=192.168.25.133 --image-repository registry.aliyuncs.com/google_containers

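After the master node is initialized, kubeadm prints a kubeadm join command that contains a token. Save this command so the token is not lost, because the slave nodes need it to join the cluster.

Join the k8s cluster on the two slave nodes. Use the full join command printed by kubeadm init, which also carries a --discovery-token-ca-cert-hash argument that is not shown here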

kubeadm join 192.168.25.133:6443 --token 8000y1.xh9227rgmyfy0ljx
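A mistyped token is a common reason for a failed join. kubeadm bootstrap tokens follow the pattern [a-z0-9]{6}.[a-z0-9]{16}, so a quick shape check before running the join can save a round trip. The sketch below is only an illustration; the function name is_bootstrap_token is hypothetical.

```shell
# Hypothetical helper: succeed if the argument has the shape of a kubeadm
# bootstrap token (6 lowercase alphanumerics, a dot, 16 lowercase alphanumerics).
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

If the token has been lost or has expired, running kubeadm token create --print-join-command on the master prints a fresh join command.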

After a slave node successfully joins the cluster, the message "This node has joined the cluster" is displayed

Running kubectl get nodes on the master at this point reports an error: The connection to the server localhost:8080 was refused - did you specify the right host or port?

Solution: execute export KUBECONFIG=/etc/kubernetes/admin.conf on the master

Run kubectl get nodes again and it succeeds

After copying admin.conf to the other nodes, you can also run kubectl commands on those nodes
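The export only lasts for the current shell session. A more permanent option, similar to the steps that kubeadm init prints, is to copy admin.conf to the per-user default location that kubectl reads. The sketch below assumes a hypothetical helper name install_kubeconfig; both arguments are optional and default to the standard paths.

```shell
# Hypothetical helper: copy a kubeconfig to a per-user location and make it
# readable by its owner only.
# Usage on the master: install_kubeconfig
install_kubeconfig() {
  kc_src="${1:-/etc/kubernetes/admin.conf}"
  kc_dest="${2:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$kc_dest")" &&
    cp "$kc_src" "$kc_dest" &&
    chmod 600 "$kc_dest"
}
```

The same function works on a slave node once admin.conf has been copied there, by passing the copied file as the first argument.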

Install the network plug-in on the master node. Here I chose flannel

The official flannel installation method downloads the manifest from raw.githubusercontent.com, which may not be reachable without a proxy. The full contents of kube-flannel.yml are provided below

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The flannel version should be compatible with the Kubernetes version

The author installed kubernetes version v1.22.4, so the installed flannel version is v0.15.1

kube-flannel.yml v0.15.1

cat <<EOF > kube-flannel.yml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
EOF
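The Network value in net-conf.json has to match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 in this walkthrough), otherwise pods will not get working routes. The following sketch reads the value back out of the generated file so the two can be compared; the function name flannel_network is a hypothetical choice.

```shell
# Hypothetical helper: print the "Network" CIDR found in a flannel manifest.
# Usage: [ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && echo match
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}
```

If the cluster was initialized with a different pod CIDR, the Network field in kube-flannel.yml is the one to edit before applying the manifest.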

After generating the kube-flannel.yml file, pull the flannel image and apply the manifest

docker pull quay.io/coreos/flannel:v0.15.1
kubectl apply -f kube-flannel.yml

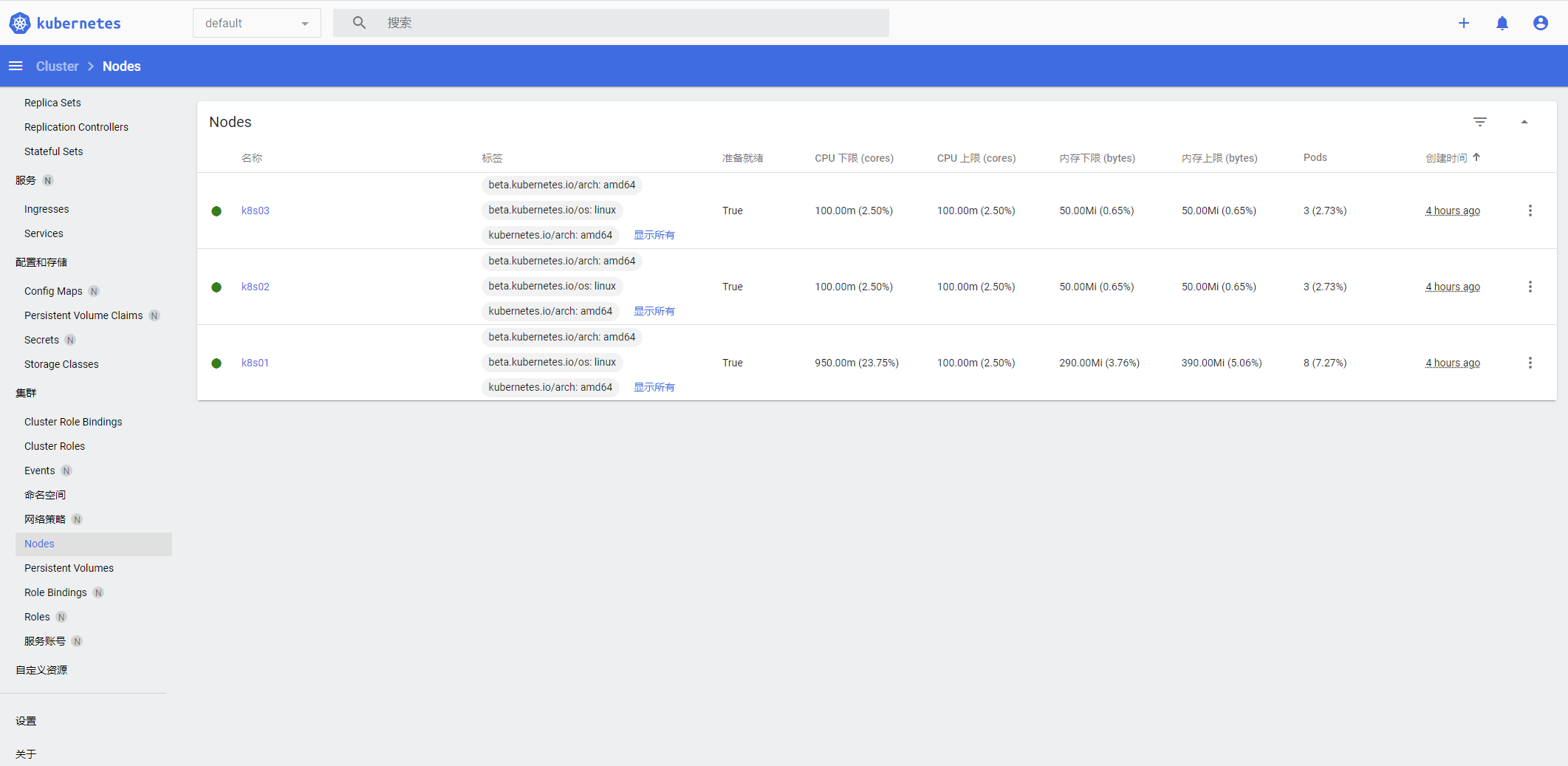

[root@k8s01 ~]# kubectl get pods -n kube-system
NAME                            READY   STATUS    RESTARTS   AGE
coredns-7f6cbbb7b8-fccvh        1/1     Running   0          4h17m
coredns-7f6cbbb7b8-nb8b9        1/1     Running   0          4h17m
etcd-k8s01                      1/1     Running   0          4h17m
kube-apiserver-k8s01            1/1     Running   0          4h17m
kube-controller-manager-k8s01   1/1     Running   0          4h17m
kube-flannel-ds-2gfsw           1/1     Running   0          127m
kube-flannel-ds-9847s           1/1     Running   0          127m
kube-flannel-ds-xxgmn           1/1     Running   0          127m
kube-proxy-46gvj                1/1     Running   0          4h14m
kube-proxy-5cgj4                1/1     Running   0          4h17m
kube-proxy-wd67q                1/1     Running   0          4h14m
kube-scheduler-k8s01            1/1     Running   0          4h17m
[root@k8s01 ~]# docker images
REPOSITORY                                                        TAG       IMAGE ID       CREATED        SIZE
registry.aliyuncs.com/google_containers/kube-apiserver            v1.22.4   8a5cc299272d   9 days ago     128MB
registry.aliyuncs.com/google_containers/kube-scheduler            v1.22.4   721ba97f54a6   9 days ago     52.7MB
registry.aliyuncs.com/google_containers/kube-controller-manager   v1.22.4   0ce02f92d3e4   9 days ago     122MB
registry.aliyuncs.com/google_containers/kube-proxy                v1.22.4   edeff87e4802   9 days ago     104MB
quay.io/coreos/flannel                                            v0.15.1   e6ea68648f0c   2 weeks ago    69.5MB
rancher/mirrored-flannelcni-flannel-cni-plugin                    v1.0.0    cd5235cd7dc2   4 weeks ago    9.03MB
registry.aliyuncs.com/google_containers/etcd                      3.5.0-0   004811815584   5 months ago   295MB
registry.aliyuncs.com/google_containers/coredns                   v1.8.4    8d147537fb7d   6 months ago   47.6MB
registry.aliyuncs.com/google_containers/pause                     3.5       ed210e3e4a5b   8 months ago   683kB
[root@k8s01 ~]# kubectl get nodes
NAME    STATUS   ROLES                  AGE     VERSION
k8s01   Ready    control-plane,master   4h19m   v1.22.4
k8s02   Ready    <none>                 4h17m   v1.22.4
k8s03   Ready    <none>                 4h17m   v1.22.4
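Reading the STATUS column by eye works for three nodes; for scripted checks the same output can be parsed. The sketch below is one way to do that with awk, assuming the default kubectl get nodes column layout shown above; the function name all_nodes_ready is hypothetical.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed
# only if at least one node is listed and every STATUS value is exactly Ready.
# Usage: kubectl get nodes | all_nodes_ready && echo "cluster is ready"
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad = 1 }
       END { exit (n == 0 || bad) ? 1 : 0 }'
}
```

A node that is cordoned shows up as Ready,SchedulingDisabled and is deliberately treated as not ready by this check.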

Install kubernetes dashboard

Download the manifest

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

Contents of recommended.yaml:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

Change the dashboard Service to type NodePort and expose it on port 32000 (allowed range: 30000-32767). This port must not be the same as a NodePort that is already in use

vi recommended.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # NodePort makes the dashboard reachable from outside the cluster
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32000   # port 32000 is opened on every node
  selector:
    k8s-app: kubernetes-dashboard
---
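A nodePort outside the allowed range is rejected when the Service is created. A small guard like the one below can catch a slip such as 3200 instead of 32000 before the manifest is applied. It assumes the default range of 30000-32767 quoted in this article, and the function name is_valid_node_port is hypothetical.

```shell
# Hypothetical helper: succeed if the argument is an integer within the
# default NodePort range 30000-32767.
is_valid_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

Clusters whose API server was started with a custom service node port range would need the two bounds adjusted accordingly.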

Create the dashboard resources from the edited file

[root@k8s01 ~]# kubectl create -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created

# Check whether the kubernetes dashboard pods are running
[root@k8s01 ~]# kubectl get pods --all-namespaces
NAMESPACE              NAME                                        READY   STATUS    RESTARTS   AGE
......
kubernetes-dashboard   dashboard-metrics-scraper-c45b7869d-x9rc7   1/1     Running   0          111m
kubernetes-dashboard   kubernetes-dashboard-576cb95f94-f68wh       1/1     Running   0          111m

# Check whether the Service type and port were changed successfully
[root@k8s01 ~]# kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.1.143.142   <none>        8000/TCP        114m
kubernetes-dashboard        NodePort    10.1.59.150    <none>        443:32000/TCP   114m

Generate authentication token for Dashboard

[root@k8s01 ~]# kubectl create serviceaccount dashboard-admin -n kube-system
serviceaccount/dashboard-admin created
[root@k8s01 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created
[root@k8s01 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
Name:         dashboard-admin-token-4fkng
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin
              kubernetes.io/service-account.uid: ef0ace5f-a043-419a-8754-985ae57630a9

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1099 bytes
namespace:  11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkdrUnh1dDAxU0lfZXd5ZTJfeVd1U0prckRnZTE5UWZZaVA3MzVXWkJ6RkEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNGZrbmciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZWYwYWNlNWYtYTA0My00MTlhLTg3NTQtOTg1YWU1NzYzMGE5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.p4A6zTnUC45FMbLadeE9goCd4d-YIswSkyDiD7X8E-zb6oBn5ANQvrbEq4MKCXLESCQF7b2H8EbqtwYRfRAWArU796MWWN3O1L5MohqWwH37x9fo2zYiiH9GUKCf62tHiAU6BR1WlRVURjdDfz2GnTpkmebSsPoFVmyNZ6WvRRUsmz3FKJZywDqWTKoso8Zl_nnDBNFWaCF08Z8YdCKqE67UtwIRSyHX1TN7BwQtmQHu7XLXv7fqI2WRLQVMGTu5ohCcGwbo2-OsnTbyZhmqL3OabyTaE-STJFYLh1k80L3nTTbDaRst7dxbSpl-ZmHQ-5zHLc-gd25NaTUzL2F1LQ
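Copying the token by hand out of the describe output is error-prone because of its length. A minimal sketch that filters just the token value from that output is shown below; the function name extract_token is hypothetical, and it relies on the token: label appearing as the first field of its line, as in the output above.

```shell
# Hypothetical helper: read `kubectl describe secrets` output on stdin and
# print only the value that follows the "token:" label.
# Usage: kubectl describe secrets -n kube-system <secret-name> | extract_token
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

The placeholder <secret-name> stands for the dashboard-admin token secret found by the command above.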

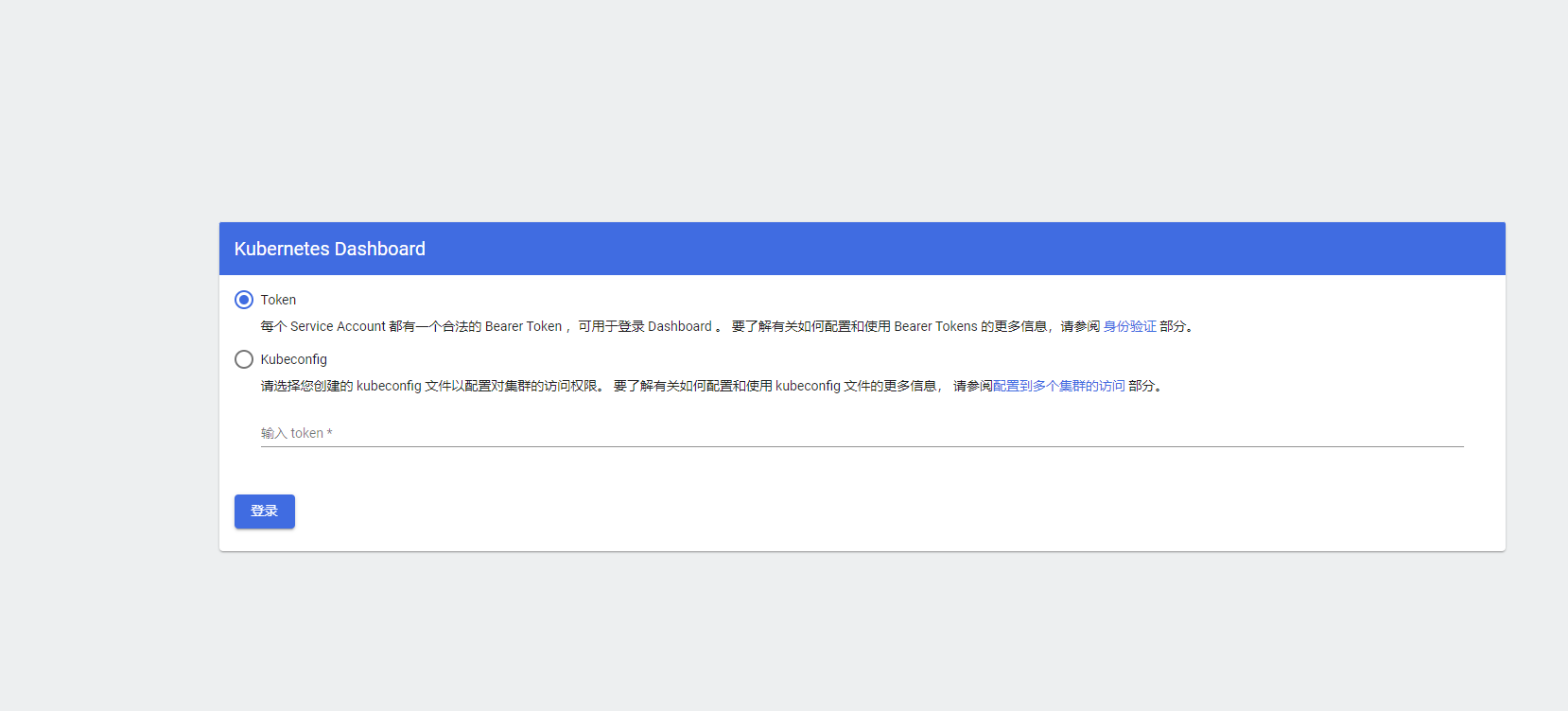

Save the generated token, then open https://192.168.25.133:32000/ in a browser, choose the Token authentication method, and paste the token to log in
