1. Kubernetes cluster deployment

1.1 Kubernetes installation overview

To learn Kubernetes you first need a Kubernetes cluster. The community provides several installation methods to suit different scenarios. The common ones are:

- Minikube: installs a single-node Kubernetes cluster inside a local virtualization tool (see the Minikube installation documentation).

- Binary installation: install from the compiled binaries. Every parameter has to be set by hand, so this method is highly customizable but difficult to install.

- kubeadm: an automated installation tool that deploys the components as container images. It is easy to use, but the images are hosted in Google's registry, so downloads often fail.

For a learning environment, Katacoda provides an online Minikube environment that you can use directly from its browser console, or you can install Minikube locally. For a production environment, binary installation or kubeadm is recommended. Current versions of kubeadm deploy the Kubernetes control-plane components as pods inside the cluster. Whichever method you use, most image downloads are blocked by the GFW and you will have to work around this yourself. This article installs and deploys the cluster offline, from pre-downloaded images.

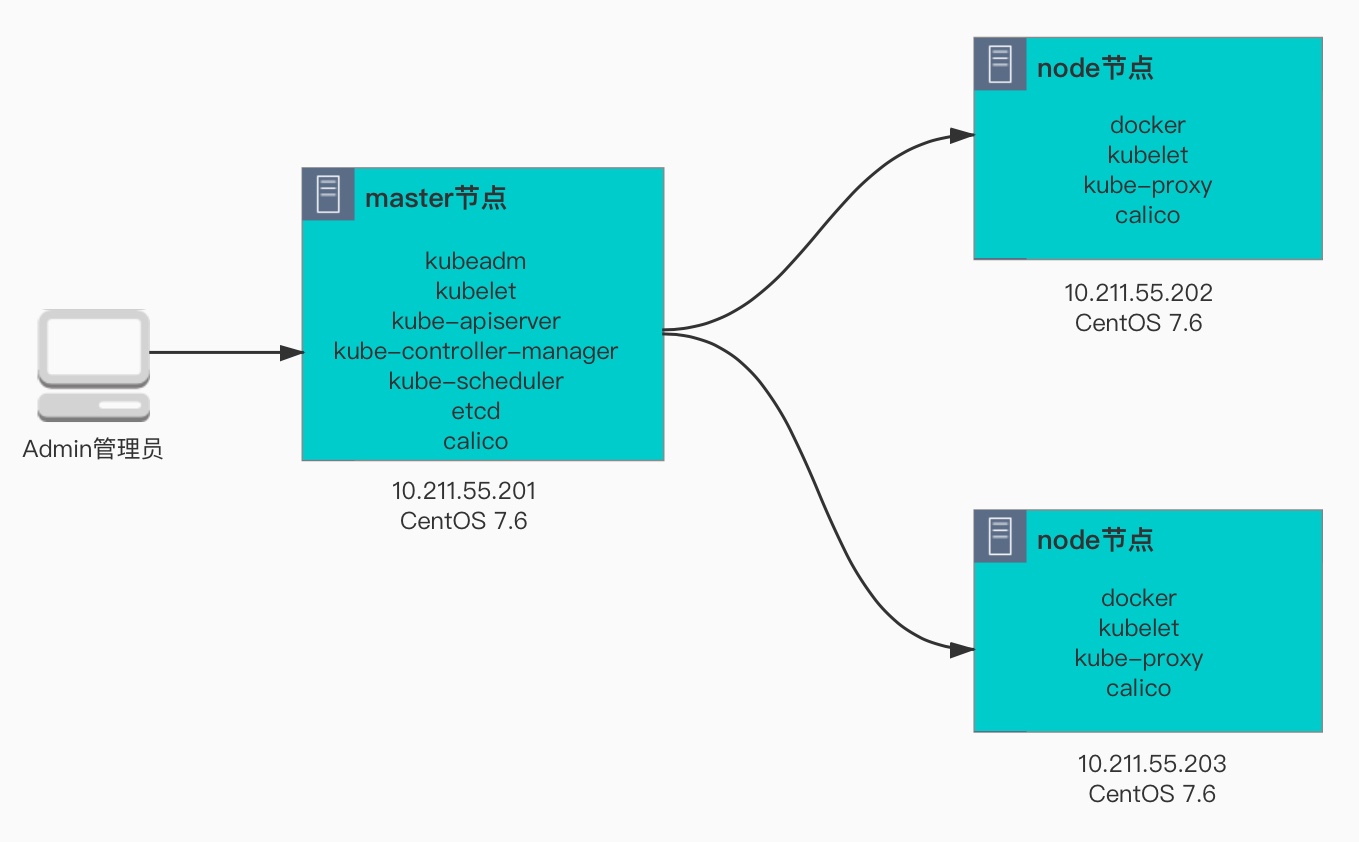

1.2 Kubernetes environment description

[Environment preparation]

1. Set the host name. Configure the other two nodes the same way.

```
[root@VM_100_101_centos ~]# hostnamectl set-hostname node-1
[root@VM_100_101_centos ~]# hostname node-1
```

2. Edit the hosts file, and give the other two nodes the same content.

```
[root@node-1 ~]# vim /etc/hosts
127.0.0.1 localhost localhost.localdomain
10.211.55.201 node-1
10.211.55.202 node-2
10.211.55.203 node-3
```
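Editing /etc/hosts by hand on three machines is easy to get wrong. The following is a minimal bash sketch, not part of the original procedure, that appends the three entries above only when the host name is not already listed, so it can be rerun safely. The function name add_hosts_entries and its optional file argument are hypothetical; the IP addresses are the ones shown above and should be adjusted to your own network.

```shell
#!/usr/bin/env bash
# Hypothetical helper: idempotently add the cluster's host entries.
# The IP/name pairs are the ones used in this article; adjust them as needed.
CLUSTER_HOSTS='10.211.55.201 node-1
10.211.55.202 node-2
10.211.55.203 node-3'

# add_hosts_entries [hosts_file]  (defaults to /etc/hosts, which needs root)
add_hosts_entries() {
  local hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # Skip the entry if any field after the address already equals the name.
    if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts_file" 2>/dev/null; then
      continue
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done
}
```

Source the file on each node and run add_hosts_entries as root; running it a second time changes nothing.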

3. Set up password-free SSH login: generate a key pair, then copy the public key to the peer nodes with ssh-copy-id.

```
#Generate a key pair
[root@node-1 .ssh]# ssh-keygen -P ''
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:zultDMEL8bZmpbUjQahVjthVAcEkN929w5EkUmPkOrU root@node-1
The key's randomart image is:
+---[RSA 2048]----+
| .=O=+=o..       |
| o+o..+.o+       |
| .oo=. o. o      |
| . . * oo .+     |
| oSOo.E .        |
| oO.o.           |
| o++ .           |
| . .o            |
| ...             |
+----[SHA256]-----+

#Copy the public key to node-2 and node-3
[root@node-1 .ssh]# ssh-copy-id -i /root/.ssh/id_rsa.pub node-2
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'node-1 (10.254.100.101)' can't be established.
ECDSA key fingerprint is SHA256:jLUH0exgyJdsy0frw9R+FiWy+0o54LgB6dgVdfc6SEE.
ECDSA key fingerprint is MD5:f4:86:a8:0e:a6:03:fc:a6:04:df:91:d8:7a:a7:0d:9e.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@node-1's password:

Number of key(s) added: 1

Now try logging into the machine, with:   "ssh 'node-2'"
and check to make sure that only the key(s) you wanted were added.
```

1.3 Installing Docker

1. Download the Docker CE yum repository file, then install Docker from it

```
# wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# yum install -y docker-ce
```

2. Set the cgroup driver to systemd, the driver recommended for Docker and kubelet on systemd hosts

```
[root@node-1 ~]# mkdir -p /etc/docker
[root@node-1 ~]# cat > /etc/docker/daemon.json <<EOF
> {
>   "exec-opts": ["native.cgroupdriver=systemd"],
>   "log-driver": "json-file",
>   "log-opts": {
>     "max-size": "100m"
>   },
>   "storage-driver": "overlay2",
>   "storage-opts": [
>     "overlay2.override_kernel_check=true"
>   ]
> }
> EOF
```

3. Start the Docker service and enable it at boot. You can check the installed Docker version with docker info.

```
[root@node-1 ~]# systemctl restart docker
[root@node-1 ~]# systemctl enable docker
```
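The daemon.json step and the check hinted at in step 3 can be wrapped in two small bash functions. This is a sketch that assumes a systemd host with Docker CE installed from the repository above; both function names are hypothetical. `docker info --format '{{.CgroupDriver}}'` prints the cgroup driver the running daemon uses, which should read systemd after the restart.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for section 1.3.

# write_docker_daemon_json [dir]  (defaults to /etc/docker, which needs root)
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"   # the directory may not exist before Docker first starts
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF
}

# Succeeds only if the running Docker daemon reports the systemd cgroup driver.
docker_uses_systemd_cgroups() {
  [ "$(docker info --format '{{.CgroupDriver}}')" = "systemd" ]
}

# Usage on each node, as root:
#   write_docker_daemon_json
#   systemctl restart docker && systemctl enable docker
#   docker_uses_systemd_cgroups && echo "cgroup driver: systemd"
```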

1.4 Installing the kubeadm components

1. Add the Kubernetes yum repository. In China, the Alibaba Cloud mirror is faster.

```
[root@node-1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

2. Install kubeadm, kubelet and kubectl. Several important dependencies are installed automatically, including socat, cri-tools and kubernetes-cni.

```
[root@node-1 ~]# yum install kubeadm kubectl kubelet --disableexcludes=kubernetes -y
```

3. Set the iptables bridge parameters

```
[root@node-1 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf
> net.bridge.bridge-nf-call-ip6tables = 1
> net.bridge.bridge-nf-call-iptables = 1
> EOF
[root@node-1 ~]# sysctl --system
```

Then check that the settings took effect with sysctl -a | grep bridge-nf-call.
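The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so on a fresh CentOS host sysctl --system may be unable to set them. A minimal sketch that also loads the module at every boot through /etc/modules-load.d (the approach used in the Kubernetes container-runtime documentation) could look like this. The function names and the optional directory arguments are hypothetical, added so the file-writing part can be tried without root.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for step 3 of section 1.4.

# write_bridge_netfilter_conf [sysctl_dir] [modules_dir]
write_bridge_netfilter_conf() {
  local sysctl_dir="${1:-/etc/sysctl.d}" modules_dir="${2:-/etc/modules-load.d}"
  mkdir -p "$sysctl_dir" "$modules_dir"
  # Load br_netfilter at every boot; the net.bridge.* keys depend on it.
  printf 'br_netfilter\n' > "$modules_dir/k8s.conf"
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Needs root: load the module now, apply all sysctl.d files, print both values.
apply_bridge_netfilter() {
  modprobe br_netfilter
  sysctl --system > /dev/null
  sysctl -n net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables
}
```

Run write_bridge_netfilter_conf and then apply_bridge_netfilter as root on every node; both printed values should be 1.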

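4. Start the kubelet service and enable it at boot

```
[root@node-1 ~]# systemctl restart kubelet
[root@node-1 ~]# systemctl enable kubelet
```

Until kubeadm init or kubeadm join writes its configuration, kubelet restarts every few seconds; this is expected at this stage.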

1.5 Importing the Kubernetes images

1. Download the Kubernetes installation images from COS, then import them into the local environment with the docker image load command. Repeat this on every node, because the worker nodes also run the pause, kube-proxy and flannel containers.

```
[root@node-1 v1.14.1]# docker image load -i etcd:3.3.10.tar
[root@node-1 v1.14.1]# docker image load -i pause:3.1.tar
[root@node-1 v1.14.1]# docker image load -i coredns:1.3.1.tar
[root@node-1 v1.14.1]# docker image load -i flannel:v0.11.0-amd64.tar
[root@node-1 v1.14.1]# docker image load -i kube-apiserver:v1.14.1.tar
[root@node-1 v1.14.1]# docker image load -i kube-controller-manager:v1.14.1.tar
[root@node-1 v1.14.1]# docker image load -i kube-scheduler:v1.14.1.tar
[root@node-1 v1.14.1]# docker image load -i kube-proxy:v1.14.1.tar
```

2. Check the image list

```
[root@node-1 v1.14.1]# docker image list
REPOSITORY                           TAG             IMAGE ID       CREATED         SIZE
k8s.gcr.io/kube-proxy                v1.14.1         20a2d7035165   3 months ago    82.1MB
k8s.gcr.io/kube-apiserver            v1.14.1         cfaa4ad74c37   3 months ago    210MB
k8s.gcr.io/kube-scheduler            v1.14.1         8931473d5bdb   3 months ago    81.6MB
k8s.gcr.io/kube-controller-manager   v1.14.1         efb3887b411d   3 months ago    158MB
quay.io/coreos/flannel               v0.11.0-amd64   ff281650a721   6 months ago    52.6MB
k8s.gcr.io/coredns                   1.3.1           eb516548c180   6 months ago    40.3MB
k8s.gcr.io/etcd                      3.3.10          2c4adeb21b4f   8 months ago    258MB
k8s.gcr.io/pause                     3.1             da86e6ba6ca1   19 months ago   742kB
```
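Typing eight docker image load commands on every node is tedious, and a missing tarball only shows up later as a failed pull. A minimal bash sketch, assuming all the tarballs sit in one directory such as the v1.14.1 directory above: load_image_tarballs loads every *.tar file, and missing_images compares the list of images kubeadm needs with the list Docker has. Both function names and the DOCKER_BIN override (meant only for dry runs) are hypothetical. kubeadm config images list --kubernetes-version v1.14.1 prints the images kubeadm expects for that version; flannel is not part of that list.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for section 1.5.

# load_image_tarballs [dir]: run "docker image load -i" on every .tar in dir.
# Set DOCKER_BIN=echo to print the commands instead of running them.
load_image_tarballs() {
  local dir="${1:-.}" docker_bin="${DOCKER_BIN:-docker}" tarball
  for tarball in "$dir"/*.tar; do
    # An unmatched glob stays literal, so check that the file really exists.
    [ -e "$tarball" ] || { echo "no .tar files in $dir" >&2; return 1; }
    "$docker_bin" image load -i "$tarball" || return 1
  done
}

# missing_images REQUIRED PRESENT: print the repository:tag lines that appear
# in file REQUIRED but not in file PRESENT (one image per line in each file).
missing_images() {
  # Lines from PRESENT (the first file read) are recorded; lines from
  # REQUIRED are printed only if they were not recorded.
  awk 'FILENAME == ARGV[1] { have[$0] = 1; next } NF && !($0 in have)' "$2" "$1" | sort -u
}

# Usage on each node:
#   load_image_tarballs /path/to/v1.14.1
#   missing_images <(kubeadm config images list --kubernetes-version v1.14.1) \
#                  <(docker image list --format '{{.Repository}}:{{.Tag}}')
```

No output from missing_images means every image kubeadm needs is already present locally.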

1.6 Initializing the cluster with kubeadm

1. Initializing the cluster requires a few parameters:

- --pod-network-cidr specifies the network segment used by pods. The value depends on the network plugin; this article uses flannel as the example, so the value is 10.244.0.0/16.

- --cri-socket specifies the path of the container runtime's socket file.

- --apiserver-advertise-address specifies the master address when the host has several network cards. By default, the IP address of the interface that has the default route is used.

```
[root@node-1 ~]# kubeadm init --apiserver-advertise-address 10.254.100.101 --apiserver-bind-port 6443 --pod-network-cidr 10.244.0.0/16
[init] Using Kubernetes version: v1.14.1
[preflight] Running pre-flight checks
        [WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.03.1-ce. Latest validated version: 18.09
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'   #Download images
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Activating the kubelet service
[certs] Using certificateDir folder "/etc/kubernetes/pki"   #Generate the CA and other certificates
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [node-1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.254.100.101]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [node-1 localhost] and IPs [10.254.100.101 127.0.0.1 ::1]
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [node-1 localhost] and IPs [10.254.100.101 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"   #Generate the static pod manifests for the master
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 18.012370 seconds
[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --experimental-upload-certs
[mark-control-plane] Marking the node node-1 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node node-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: r8n5f2.9mic7opmrwjakled
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles   #Configure RBAC authorization
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:   #Configure the kubectl environment

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.   #Install a network plugin
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:   #Command for adding nodes; record it now

kubeadm join 10.254.100.101:6443 --token r8n5f2.9mic7opmrwjakled \
    --discovery-token-ca-cert-hash sha256:16e383c8abff6233021331944080087f0514ddd15d96c65d19443b0af02d64ab
```

The output of kubeadm init --apiserver-advertise-address 10.254.100.101 --apiserver-bind-port 6443 --pod-network-cidr 10.244.0.0/16 shows the main steps kubeadm performs: pulling images, generating certificates, generating kubeconfig files and static pod manifests, configuring RBAC authorization, and finally printing guidance for configuring the kubectl environment, installing a network plugin and joining nodes. Because the images were imported beforehand, kubeadm finds them locally and does not need to reach k8s.gcr.io. Adding --kubernetes-version v1.14.1 pins the control-plane version to the imported images explicitly.
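The same flags can also be kept in a kubeadm configuration file, which is easier to review and reuse. The sketch below assumes the kubeadm.k8s.io/v1beta1 config API that kubeadm 1.14 reads (later releases moved to newer versions such as v1beta2), and writes the file through a hypothetical helper so the example stays in shell. kubeadm config print init-defaults shows every available field; setting kubernetesVersion explicitly also avoids kubeadm trying to resolve the stable release label over the network.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to the flags used in
# step 1 (v1beta1 is the config API version read by kubeadm 1.14).
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta1
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.254.100.101
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.14.1
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# Usage on node-1, as root:
#   write_kubeadm_config /root/kubeadm-config.yaml
#   kubeadm init --config /root/kubeadm-config.yaml
```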

2. Set up the kubectl configuration file

```
[root@node-1 ~]# mkdir /root/.kube
[root@node-1 ~]# cp -i /etc/kubernetes/admin.conf /root/.kube/config
[root@node-1 ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE     VERSION
node-1   NotReady   master   6m29s   v1.14.1
```

3. Add the worker nodes: run the join command recorded above on each of the other two nodes.

```
[root@node-3 ~]# kubeadm join 10.254.100.101:6443 --token r8n5f2.9mic7opmrwjakled \
>     --discovery-token-ca-cert-hash sha256:16e383c8abff6233021331944080087f0514ddd15d96c65d19443b0af02d64ab
[preflight] Running pre-flight checks
        [WARNING SystemVerification]: this Docker version is not on the list of validated versions: 18.03.1-ce. Latest validated version: 18.09
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```

Join node-2 in the same way, then verify with kubectl get nodes. Because no network plugin has been installed yet, every node shows NotReady:

```
[root@node-1 ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE     VERSION
node-1   NotReady   master   16m     v1.14.1
node-2   NotReady   <none>   4m34s   v1.14.1
node-3   NotReady   <none>   2m10s   v1.14.1
```
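The bootstrap token in the join command expires (by default after 24 hours), so nodes added later need a fresh one. On the master, kubeadm token create --print-join-command prints a complete new join command. If you only need the --discovery-token-ca-cert-hash value, the kubeadm documentation derives it from the cluster CA with openssl; that pipeline is wrapped below in a hypothetical function whose CA path can be overridden.

```shell
#!/usr/bin/env bash
# ca_cert_hash [ca_crt]: print the SHA-256 hash of the CA's public key
# (DER-encoded SubjectPublicKeyInfo), i.e. the hex part of
# --discovery-token-ca-cert-hash sha256:<hash>.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on node-1:
#   echo "sha256:$(ca_cert_hash)"
#   kubeadm token create --print-join-command   # full join command with a new token
```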

4. Install a network plugin. Kubernetes supports many network plugins, provided they implement CNI (the Container Network Interface). Kubernetes requires the pod network to provide:

- Connectivity between nodes

- Connectivity between pods

- Connectivity between nodes and pods

Different CNI plugins support different features. Popular open-source CNI plugins include flannel, calico, canal and weave. flannel is an overlay network: it builds VXLAN tunnels between the nodes to interconnect the pod network across the cluster. The installation looks like this:

```
[root@node-1 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml
podsecuritypolicy.extensions/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.extensions/kube-flannel-ds-amd64 created
daemonset.extensions/kube-flannel-ds-arm64 created
daemonset.extensions/kube-flannel-ds-arm created
daemonset.extensions/kube-flannel-ds-ppc64le created
daemonset.extensions/kube-flannel-ds-s390x created
```

5. The output above shows that deploying flannel creates a PodSecurityPolicy, RBAC objects (ClusterRole, ClusterRoleBinding, ServiceAccount), a ConfigMap and DaemonSets. There is one DaemonSet per CPU architecture, and each one only schedules pods on nodes of its own architecture, so most of them are installed needlessly; usually only amd64 is needed. You can download and edit the manifest from the URL above and keep only the kube-flannel-ds-amd64 DaemonSet, or delete the other DaemonSets after installation:

```
#View the DaemonSets installed by flannel
[root@node-1 ~]# kubectl get daemonsets -n kube-system
NAME                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                     AGE
kube-flannel-ds-amd64     3         3         3       3            3           beta.kubernetes.io/arch=amd64     2m34s
kube-flannel-ds-arm       0         0         0       0            0           beta.kubernetes.io/arch=arm       2m34s
kube-flannel-ds-arm64     0         0         0       0            0           beta.kubernetes.io/arch=arm64     2m34s
kube-flannel-ds-ppc64le   0         0         0       0            0           beta.kubernetes.io/arch=ppc64le   2m34s
kube-flannel-ds-s390x     0         0         0       0            0           beta.kubernetes.io/arch=s390x     2m34s
kube-proxy                3         3         3       3            3           <none>                            30m

#Delete the unneeded DaemonSets
[root@node-1 ~]# kubectl delete daemonsets kube-flannel-ds-arm kube-flannel-ds-arm64 kube-flannel-ds-ppc64le kube-flannel-ds-s390x -n kube-system
daemonset.extensions "kube-flannel-ds-arm" deleted
daemonset.extensions "kube-flannel-ds-arm64" deleted
daemonset.extensions "kube-flannel-ds-ppc64le" deleted
daemonset.extensions "kube-flannel-ds-s390x" deleted
```
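If you download kube-flannel.yml to trim the DaemonSets, it is also worth checking that the Network value in its embedded net-conf.json matches the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here), since flannel allocates pod subnets from that range. A small, hypothetical helper that extracts the value with grep and sed:

```shell
#!/usr/bin/env bash
# flannel_network MANIFEST: print the "Network" value from the net-conf.json
# embedded in a downloaded kube-flannel.yml (first match only).
flannel_network() {
  grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*: *"\([^"]*\)"$/\1/'
}

# Usage:
#   curl -fsSLO https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml
#   [ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && echo "CIDR matches"
```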

6. Verify the nodes again. All nodes are now in Ready status, and the installation is complete!

```
[root@node-1 ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node-1   Ready    master   29m   v1.14.1
node-2   Ready    <none>   17m   v1.14.1
node-3   Ready    <none>   15m   v1.14.1
```
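Nodes usually take a short while to turn Ready after the flannel pods start. Instead of rerunning kubectl get nodes by hand, you can poll. The sketch below uses hypothetical function names and an arbitrarily chosen 5-second interval, and treats the cluster as ready only when every line of kubectl get nodes --no-headers has exactly Ready in the STATUS column. kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative.

```shell
#!/usr/bin/env bash
# all_nodes_ready: read "kubectl get nodes --no-headers" output on stdin and
# succeed only if there is at least one node and every STATUS is "Ready".
# (A cordoned node shows "Ready,SchedulingDisabled" and counts as not ready.)
all_nodes_ready() {
  awk 'NF { total++; if ($2 == "Ready") ready++ }
       END { exit !(total > 0 && ready == total) }'
}

# wait_for_nodes_ready [timeout_seconds]: poll every 5 seconds until all nodes
# are Ready; return 1 if the timeout (default 300) expires first.
wait_for_nodes_ready() {
  local timeout="${1:-300}" waited=0
  until kubectl get nodes --no-headers | all_nodes_ready; do
    [ "$waited" -ge "$timeout" ] && return 1
    sleep 5
    waited=$((waited + 5))
  done
}
```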

1.7 Verifying the Kubernetes components

1. Check the node status. The output lists every node in the cluster with its status, role, age and version:

```
[root@node-1 ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
node-1   Ready    master   46m   v1.14.1
node-2   Ready    <none>   34m   v1.14.1
node-3   Ready    <none>   32m   v1.14.1
```

2. Check the status of the Kubernetes control-plane components, including the scheduler, controller-manager and etcd:

```
[root@node-1 ~]# kubectl get componentstatuses
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
```

3. Check the pods. The master's components, kube-apiserver, kube-scheduler, kube-controller-manager and etcd, as well as CoreDNS, are all deployed in the cluster as pods, and kube-proxy runs as a pod on every node, worker nodes included. These pods are managed in different ways: the control-plane pods are static pods that kubelet starts from /etc/kubernetes/manifests, kube-proxy is managed by a DaemonSet, and CoreDNS by a Deployment.

```
[root@node-1 ~]# kubectl get pods -n kube-system
NAME                             READY   STATUS    RESTARTS   AGE
coredns-fb8b8dccf-hrqm8          1/1     Running   0          50m
coredns-fb8b8dccf-qwwks          1/1     Running   0          50m
etcd-node-1                      1/1     Running   0          48m
kube-apiserver-node-1            1/1     Running   0          49m
kube-controller-manager-node-1   1/1     Running   0          49m
kube-proxy-lfckv                 1/1     Running   0          38m
kube-proxy-x5t6r                 1/1     Running   0          50m
kube-proxy-x8zqh                 1/1     Running   0          36m
kube-scheduler-node-1            1/1     Running   0          49m
```
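As the kubeadm init output showed, the control-plane pods are static pods defined by manifest files in /etc/kubernetes/manifests; kubelet runs one pod for each manifest in that directory. A quick, hypothetical check that all four manifests are in place on the master, useful when a control-plane pod is missing from kubectl get pods:

```shell
#!/usr/bin/env bash
# check_static_pod_manifests [dir]: report each of the four control-plane
# manifests written by kubeadm that is missing; return 1 if any is absent.
check_static_pod_manifests() {
  local dir="${1:-/etc/kubernetes/manifests}" name missing=0
  for name in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    if [ ! -f "$dir/$name.yaml" ]; then
      echo "missing: $dir/$name.yaml" >&2
      missing=1
    fi
  done
  return "$missing"
}
```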

1.8 Configuring kubectl command completion

kubectl accepts both full and abbreviated resource names; for example, kubectl get nodes and kubectl get no have the same effect. To work faster, you can also enable command-line completion.

1. Generate the kubectl bash completion script and source it from .bashrc. The generated script relies on the bash-completion package, so install it first if it is missing.

```
[root@node-1 ~]# kubectl completion bash >/etc/kubernetes/kubectl.sh
[root@node-1 ~]# echo "source /etc/kubernetes/kubectl.sh" >>/root/.bashrc
[root@node-1 ~]# cat /root/.bashrc
# .bashrc

# User specific aliases and functions

alias rm='rm -i'
alias cp='cp -i'
alias mv='mv -i'

# Source global definitions
if [ -f /etc/bashrc ]; then
        . /etc/bashrc
fi
source /etc/kubernetes/kubectl.sh    #Added completion script
```

2. Load the completion script into the current shell so that the configuration takes effect

```
[root@node-1 ~]# source /etc/kubernetes/kubectl.sh
```

3. Verify command-line completion: type kubectl get co and press TAB to see the completion candidates

```
[root@node-1 ~]# kubectl get co
componentstatuses         configmaps                controllerrevisions.apps
[root@node-1 ~]# kubectl get componentstatuses
```
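Rerunning the echo ... >> /root/.bashrc line adds a duplicate source line each time. A small, hypothetical append_once helper makes that step idempotent. The usage comments also show the optional alias k suggested in the kubectl documentation, with completion registered for it; __start_kubectl is the function defined by the generated completion script.

```shell
#!/usr/bin/env bash
# append_once LINE FILE: append LINE to FILE unless an identical line exists.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on node-1, as root:
#   kubectl completion bash > /etc/kubernetes/kubectl.sh
#   append_once 'source /etc/kubernetes/kubectl.sh' /root/.bashrc
#   append_once 'alias k=kubectl' /root/.bashrc
#   append_once 'complete -o default -F __start_kubectl k' /root/.bashrc
```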

Besides completion, kubectl supports short names for resources. Some common lookups are listed below; run kubectl api-resources to see the rest, where the SHORTNAMES column gives the short form accepted by subcommands.

- kubectl get componentstatuses, short form kubectl get cs

- kubectl get nodes, short form kubectl get no

- kubectl get services, short form kubectl get svc

- kubectl get deployments, short form kubectl get deploy, lists the deployments

- kubectl get statefulsets, short form kubectl get sts

Reference documents

- Container Runtime installation document: https://kubernetes.io/docs/setup/production-environment/container-runtimes/

- kubeadm installation: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

- Initialize the kubeadm cluster: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#pod-network