In the previous section we looked at Linux network namespaces: we created a veth pair and used it to connect two network namespaces so that they could reach each other. Containers created by Docker behave in a similar way. From inside a container you can reach the external network, and containers can reach each other.

1. Accessing the Internet from a container

Create a new container, open a shell in it, and ping www.baidu.com:

    [root@vol ~]# docker run -d --name test1 busybox /bin/sh -c "while true; do sleep 3600;done"
    dfe2c0f67d68db7d2b8498ab4ff9a787cde8da9c87f705b0bd685d33b0fab9e5
    [root@vol ~]# docker ps
    CONTAINER ID   IMAGE     COMMAND                    CREATED          STATUS          PORTS   NAMES
    dfe2c0f67d68   busybox   "/bin/sh -c 'while t..."   35 seconds ago   Up 33 seconds           test1
    [root@vol ~]# docker exec -it dfe2c0f67d68 /bin/sh
    / # ping www.baidu.com
    PING www.baidu.com (14.215.177.38): 56 data bytes
    64 bytes from 14.215.177.38: seq=0 ttl=53 time=5.337 ms
    64 bytes from 14.215.177.38: seq=1 ttl=53 time=9.697 ms
    64 bytes from 14.215.177.38: seq=2 ttl=53 time=5.318 ms
    ^C
    --- www.baidu.com ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 5.318/6.784/9.697 ms
    / #

2. Create a second container and verify that containers can reach each other

Create a second container, test2, with the same busybox image and command used for test1.

Checking each container's IP address shows that test1 has 172.17.0.2 and test2 has 172.17.0.3:

    [root@vol ~]# docker exec test1 ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
    [root@vol ~]# docker exec test2 ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
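Python's standard `ipaddress` module can check these two addresses. The sketch below confirms that both containers are in the same 172.17.0.0/16 subnet, whose broadcast address is 172.17.255.255 (the `brd` value above). Being on one subnet is why they can reach each other directly.

It also checks a pattern visible in the output: each MAC address is `02:42` followed by the four bytes of the IP address in hex (`ac:11:00:02` is 172.17.0.2). The helper `mac_from_ipv4` is hypothetical. Treat the MAC pattern as an observation about the Docker version used here, not a guaranteed rule, since other Docker releases may assign MAC addresses differently.

```python
import ipaddress

# IP/MAC pairs copied from the `ip addr` output of test1 and test2 above.
containers = {
    "test1": ("172.17.0.2/16", "02:42:ac:11:00:02"),
    "test2": ("172.17.0.3/16", "02:42:ac:11:00:03"),
}


def mac_from_ipv4(ip: ipaddress.IPv4Address) -> str:
    """Hypothetical helper: 02:42 followed by the four IPv4 bytes in hex."""
    return ":".join(f"{b:02x}" for b in bytes([0x02, 0x42]) + ip.packed)


networks = set()
for name, (cidr, mac) in containers.items():
    iface = ipaddress.ip_interface(cidr)  # address plus prefix length
    networks.add(iface.network)
    print(f"{name}: network={iface.network} "
          f"broadcast={iface.network.broadcast_address} "
          f"mac_matches_ip={mac_from_ipv4(iface.ip) == mac}")

# A single shared subnet: the containers can reach each other without a router.
print("same subnet:", len(networks) == 1)
```

Running it prints `network=172.17.0.0/16 broadcast=172.17.255.255 mac_matches_ip=True` for both containers, followed by `same subnet: True`.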

From inside test1, ping test2's IP address. The ping succeeds:

    [root@vol ~]# docker exec -it test1 /bin/sh
    / # ping 172.17.0.3
    PING 172.17.0.3 (172.17.0.3): 56 data bytes
    64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.187 ms
    64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.118 ms
    64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.133 ms
    ^C
    --- 172.17.0.3 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.118/0.146/0.187 ms
    / # exit

Pinging test1's IP address from inside test2 also works, which shows that the two containers can communicate in both directions.

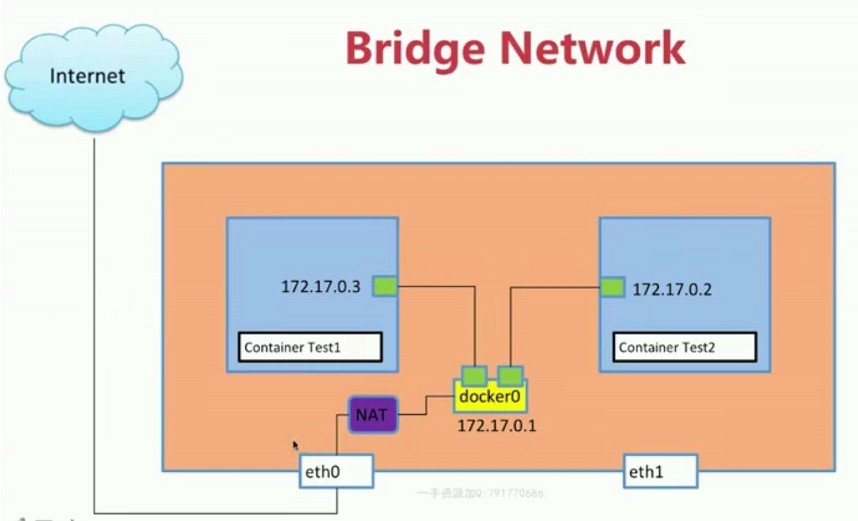

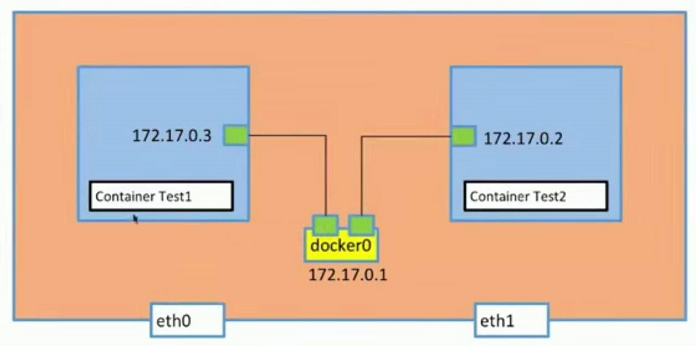

Principle

The principle is similar to the one in the previous section: Docker creates a new veth pair for each container to connect the container's network to the host.

1. A container can reach the Internet because of a veth pair. One end is inside the container, and the other end is attached to docker0 on the host, so the container's traffic can leave through the host's network. When the container accesses the Internet, its source address is translated by NAT, which is implemented with iptables. The details are not covered here.

2. Each new container gets its own veth pair, with one end inside the container and the other end attached to docker0. Because both containers are attached to docker0, they can communicate with each other. You can think of each container as a computer at home and docker0 as the home router. To put two computers on the same LAN, you connect each of them to the same router with a network cable, and the two computers can then talk to each other. (More precisely, docker0 is a Linux bridge, so it behaves like the switch ports on such a router.)
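To make the router analogy more concrete, here is a toy model of what a bridge such as docker0 does with the frames it receives:

- It learns which port each source MAC address is behind.
- It forwards frames for a known MAC address to that single port.
- It floods frames with an unknown or broadcast destination to all other ports.

This is a conceptual sketch of a MAC-learning bridge, not the Linux kernel's implementation. The classes `Frame` and `ToyBridge` and the port names `veth-test1` and `veth-test2` are hypothetical stand-ins for the host ends of the two veth pairs. The MAC addresses are the container MACs shown earlier.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    src_mac: str
    dst_mac: str
    payload: str


@dataclass
class ToyBridge:
    """Conceptual MAC-learning bridge (a model, not the kernel's bridge code)."""
    name: str
    ports: list[str] = field(default_factory=list)
    fdb: dict[str, str] = field(default_factory=dict)  # forwarding table: MAC -> port

    def attach(self, port: str) -> None:
        """Plug a port (e.g. the host end of a veth pair) into the bridge."""
        self.ports.append(port)

    def receive(self, in_port: str, frame: Frame) -> list[str]:
        """Return the list of ports the frame is sent out of."""
        self.fdb[frame.src_mac] = in_port  # learn which port the sender is behind
        if frame.dst_mac in self.fdb:
            out = self.fdb[frame.dst_mac]
            # Known destination: send to that one port (drop if it came from there).
            return [] if out == in_port else [out]
        # Unknown or broadcast destination: flood to every other port.
        return [p for p in self.ports if p != in_port]


docker0 = ToyBridge("docker0")
docker0.attach("veth-test1")  # hypothetical name for the host end of test1's veth
docker0.attach("veth-test2")  # hypothetical name for the host end of test2's veth

# test1 broadcasts an ARP request for 172.17.0.3: flooded to test2's port.
print(docker0.receive("veth-test1", Frame("02:42:ac:11:00:02", "ff:ff:ff:ff:ff:ff", "who-has 172.17.0.3")))
# test2 replies to test1's MAC, which the bridge has already learned.
print(docker0.receive("veth-test2", Frame("02:42:ac:11:00:03", "02:42:ac:11:00:02", "172.17.0.3 is-at 02:42:ac:11:00:03")))
```

The first call prints `['veth-test2']` (flooded) and the second prints `['veth-test1']` (forwarded using the learned entry). After that exchange, traffic between the two containers goes only to the port that needs it.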

As shown in the figure:

Verification

1. Install brctl tool

2. Trace the network links

Install brctl, which is provided by the bridge-utils package:

    [root@vol ~]# yum install bridge-utils
    Loaded plugins: fastestmirror
    Determining fastest mirrors
    docker-ce-stable          | 3.5 kB  00:00
    epel                      | 5.3 kB  00:00
    extras                    | 2.9 kB  00:00
    kubernetes/signature      |  454 B  00:00
    kubernetes/signature      | 1.4 kB  00:00 !!!
    ... (output omitted) ...

View the host's bridge and Docker's networks

There are two running containers. Running ip addr on the host shows two veth interfaces in addition to lo, ens160 and docker0. Running brctl show lists two interfaces attached to the docker0 bridge, and they are exactly these two host-side veths. So where is the other end of each veth connected?

    [root@vol ~]# docker ps
    CONTAINER ID   IMAGE     COMMAND                    CREATED          STATUS          PORTS   NAMES
    da0dd80d5418   busybox   "/bin/sh -c 'while t..."   17 minutes ago   Up 17 minutes           test2
    dfe2c0f67d68   busybox   "/bin/sh -c 'while t..."   4 hours ago      Up 4 hours              test1
    [root@vol ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:50:56:87:bd:a7 brd ff:ff:ff:ff:ff:ff
        inet 172.31.17.54/16 brd 172.31.255.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::33db:6382:9c3a:12e8/64 scope link tentative dadfailed
           valid_lft forever preferred_lft forever
        inet6 fe80::a780:a19:68f2:9347/64 scope link tentative dadfailed
           valid_lft forever preferred_lft forever
        inet6 fe80::a62f:dd94:b9a2:3027/64 scope link tentative dadfailed
           valid_lft forever preferred_lft forever
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:9d:e3:47:69 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:9dff:fee3:4769/64 scope link
           valid_lft forever preferred_lft forever
    5: veth9d0b56c@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether 52:9f:51:35:0c:b8 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet6 fe80::509f:51ff:fe35:cb8/64 scope link
           valid_lft forever preferred_lft forever
    7: vethe9de44d@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether f2:b4:4a:9c:41:0a brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet6 fe80::f0b4:4aff:fe9c:410a/64 scope link
           valid_lft forever preferred_lft forever
    [root@vol ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.02429de34769       no              veth9d0b56c
                                                            vethe9de44d

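Comparing this with the ip addr output, veth9d0b56c is the host's interface 5: veth9d0b56c@if4, and vethe9de44d is the host's interface 7: vethe9de44d@if6. So where is the other end of each pair connected? The @ifN suffix gives the index of the peer interface. Interface 5 pairs with interface 4, which is 4: eth0@if5 inside test1. Interface 7 pairs with interface 6, which is 6: eth0@if7 inside test2.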
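The matching by interface index can be automated. In `ip addr` output, a veth is printed as `index: name@ifPEER`, where PEER is the interface index of the other end. This sketch pairs each host-side veth with a container by matching those indexes, using header lines copied (trimmed) from the outputs above.

The helpers `parse_link` and `pair_veths` are hypothetical. Interface indexes are only guaranteed unique within a single network namespace, so a more careful tool would also take the `link-netnsid` shown on the host side into account. In this example the indexes happen to be distinct, so a plain index match is enough.

```python
import re

# Interface header lines copied (trimmed) from the `ip addr` outputs above.
container_links = {
    "test1": "4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue",
    "test2": "6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue",
}
host_links = [
    "5: veth9d0b56c@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0",
    "7: vethe9de44d@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0",
]

# Matches "<ifindex>: <name>@if<peer ifindex>:" at the start of a header line.
LINK_RE = re.compile(r"^(\d+): ([^@:\s]+)@if(\d+):")


def parse_link(line: str) -> tuple[int, str, int]:
    """Return (ifindex, name, peer_ifindex) for a veth header line."""
    m = LINK_RE.match(line)
    if m is None:
        raise ValueError(f"not a name@ifN header line: {line!r}")
    return int(m.group(1)), m.group(2), int(m.group(3))


def pair_veths(host: list[str], containers: dict[str, str]) -> dict[str, str]:
    """Map each host-side veth to the container whose eth0 is its peer."""
    by_index = {}
    for cname, line in containers.items():
        idx, _, peer = parse_link(line)
        by_index[idx] = (cname, peer)
    pairs = {}
    for line in host:
        idx, name, peer = parse_link(line)
        cname, back = by_index[peer]
        if back != idx:  # both ends of a veth pair must name each other
            raise ValueError(f"{name} and {cname} do not point at each other")
        pairs[name] = cname
    return pairs


print(pair_veths(host_links, container_links))
# {'veth9d0b56c': 'test1', 'vethe9de44d': 'test2'}
```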

Running docker inspect bridge shows the details of the bridge network. Its Containers field lists test1 and test2, confirming that both containers are attached to the bridge network. If you create another container, it will also appear here, attached to the same bridge.

    [root@vol ~]# docker network list
    NETWORK ID     NAME      DRIVER    SCOPE
    0ee165ccab6f   bridge    bridge    local
    baa1cdd2d1e4   host      host      local
    2cb2a0e5dad5   none      null      local
    [root@vol ~]# docker inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "0ee165ccab6fa3c171708329bd3ab692376fc46ea4eb04fce93f2e0b3269d640",
            "Created": "2020-01-19T16:23:36.392297087+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "da0dd80d5418f7138d1d41fea43e06006d9d3dfe175e68502ba8ba6a809d1f83": {
                    "Name": "test2",
                    "EndpointID": "187509eca88c77ba6b1a7bb63f485d6a9e610038142983817d353031b63afba6",
                    "MacAddress": "02:42:ac:11:00:03",
                    "IPv4Address": "172.17.0.3/16",
                    "IPv6Address": ""
                },
                "dfe2c0f67d68db7d2b8498ab4ff9a787cde8da9c87f705b0bd685d33b0fab9e5": {
                    "Name": "test1",
                    "EndpointID": "bc27d2081aa69cebf0ee609aa42f00aa4a3cd73e72ff8b6c13eefb4b0b2fab67",
                    "MacAddress": "02:42:ac:11:00:02",
                    "IPv4Address": "172.17.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "com.docker.network.bridge.default_bridge": "true",
                "com.docker.network.bridge.enable_icc": "true",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
                "com.docker.network.bridge.name": "docker0",
                "com.docker.network.driver.mtu": "1500"
            },
            "Labels": {}
        }
    ]
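The same facts can be read programmatically. This sketch parses a trimmed copy of the JSON above with Python's `json` and `ipaddress` modules. It checks that every attached container's address lies inside the bridge subnet.

Several fields tie back to earlier parts of this section:

- The gateway 172.17.0.1 is docker0's address in the host's ip addr output.
- `enable_icc` allows containers on this bridge to talk to each other.
- `enable_ip_masquerade` turns on the NAT mentioned in the Principle section for outbound traffic.

On a real host you would feed it the actual output of `docker inspect bridge` instead of the embedded string. That wiring is an assumption left out of the sketch.

```python
import ipaddress
import json

# Trimmed copy of the `docker inspect bridge` output above: only the fields used
# below are kept, and the container IDs are shortened to 12 characters.
INSPECT_OUTPUT = """
[
  {
    "Name": "bridge",
    "IPAM": {"Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]},
    "Containers": {
      "da0dd80d5418": {"Name": "test2", "IPv4Address": "172.17.0.3/16"},
      "dfe2c0f67d68": {"Name": "test1", "IPv4Address": "172.17.0.2/16"}
    },
    "Options": {
      "com.docker.network.bridge.enable_icc": "true",
      "com.docker.network.bridge.enable_ip_masquerade": "true",
      "com.docker.network.bridge.name": "docker0"
    }
  }
]
"""

network = json.loads(INSPECT_OUTPUT)[0]  # `docker inspect` prints a JSON array
config = network["IPAM"]["Config"][0]
subnet = ipaddress.ip_network(config["Subnet"])
gateway = ipaddress.ip_address(config["Gateway"])
opts = network["Options"]

attached = {}
for info in network["Containers"].values():
    ip = ipaddress.ip_interface(info["IPv4Address"]).ip  # drop the /16 prefix
    if ip not in subnet:
        raise ValueError(f"{info['Name']} ({ip}) is outside {subnet}")
    attached[info["Name"]] = str(ip)

print("bridge device:", opts["com.docker.network.bridge.name"])
print("gateway in subnet:", gateway in subnet)
print("containers:", dict(sorted(attached.items())))
print("inter-container traffic:", opts["com.docker.network.bridge.enable_icc"] == "true")
print("outbound NAT (masquerade):", opts["com.docker.network.bridge.enable_ip_masquerade"] == "true")
```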