Importance and Methods of Point Cloud Filtering

1. Why do point clouds need filtering

In order

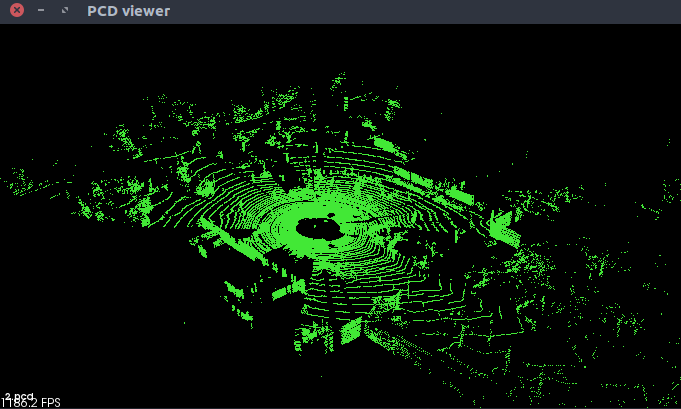

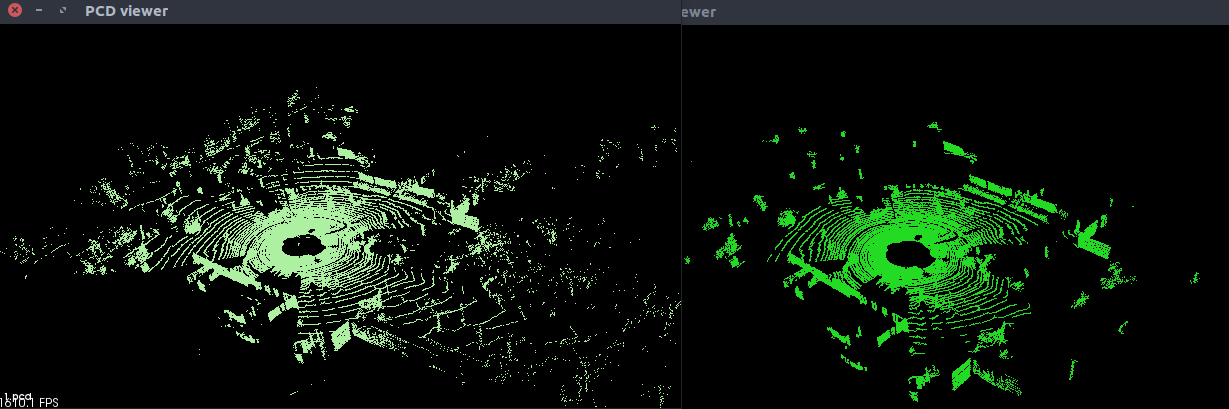

We can clearly see many discrete points in the lower right of the figure. These noise points contribute nothing to our subsequent processing.

1.1 Which situations need filtering

- Point cloud density is uneven

- Occlusion and similar problems produce outliers that need to be removed

- The data volume is large and needs to be downsampled

- Noise needs to be removed

2. Existing filtering methods and their application fields

2.1 Pass-Through Filtering

Some point clouds have distinctive spatial distributions. For example, a cloud collected by line-structured-light scanning is spread widely along the z direction but confined to a limited range in x and y. In this case a pass-through filter can be used to specify the valid range of the cloud along x or y and quickly cut off the outliers outside it, as a first, coarse processing step. (The same approach can remove redundant points along the z axis.)
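The idea fits in a few lines of standard C++. This is a minimal from-scratch sketch, not PCL's `pcl::PassThrough` code; the `Point` struct, the `Axis` enum and the `passThrough` function are hypothetical names chosen for illustration. It keeps only the points whose coordinate along one chosen axis lies in the closed interval [min_val, max_val].

```cpp
#include <cassert>
#include <vector>

// Minimal point type for this sketch (hypothetical stand-in for pcl::PointXYZ).
struct Point {
    double x, y, z;
};

// Axis along which the pass-through range is applied.
enum class Axis { X, Y, Z };

// Returns the coordinate of p along the chosen axis.
double coord(const Point& p, Axis axis) {
    switch (axis) {
        case Axis::X: return p.x;
        case Axis::Y: return p.y;
        default:      return p.z;
    }
}

// Keeps only the points whose coordinate along `axis` lies in [min_val, max_val];
// everything outside the range is cut off in a single linear pass.
std::vector<Point> passThrough(const std::vector<Point>& cloud, Axis axis,
                               double min_val, double max_val) {
    assert(min_val <= max_val);
    std::vector<Point> kept;
    for (const Point& p : cloud) {
        const double v = coord(p, axis);
        if (v >= min_val && v <= max_val) {
            kept.push_back(p);
        }
    }
    return kept;
}
```

Because each point is tested independently, the cost is linear in the number of points, which is why this works well as a fast first pass. In PCL itself the same step is configured on `pcl::PassThrough` with `setFilterFieldName("z")` and `setFilterLimits(min, max)`.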

2.2 Voxel Filtering

A voxel is the 3D counterpart of a pixel. The axis-aligned bounding box (AABB) of the point cloud is divided into a grid of voxels; the smaller the voxels, the more local detail is preserved. Noise points and outliers can be removed through the voxel grid. In addition, point clouds captured with high-resolution cameras and similar equipment tend to be very dense, and an excessive number of points makes subsequent segmentation difficult. A voxel filter downsamples the cloud without destroying its geometric structure.
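A minimal sketch of voxel-grid downsampling, assuming cubic voxels of edge length `leaf` indexed from the minimum corner of the cloud's AABB, and replacing all points in each occupied voxel with their centroid. PCL's `pcl::VoxelGrid` (configured with `setLeafSize`) also approximates each voxel by the centroid of its points, but this is a from-scratch illustration, not its implementation; `voxelDownsample` and `VoxelAcc` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <map>
#include <tuple>
#include <vector>

// Minimal point type for this sketch (hypothetical stand-in for pcl::PointXYZ).
struct Point {
    double x, y, z;
};

// Per-voxel accumulator: coordinate sums and point count.
struct VoxelAcc {
    double sx = 0.0, sy = 0.0, sz = 0.0;
    std::size_t n = 0;
};

// Replaces the points in each cubic voxel of edge length `leaf` with their centroid.
std::vector<Point> voxelDownsample(const std::vector<Point>& cloud, double leaf) {
    assert(leaf > 0.0);
    if (cloud.empty()) return {};

    // Minimum corner of the axis-aligned bounding box (AABB).
    Point lo = cloud.front();
    for (const Point& p : cloud) {
        lo.x = std::min(lo.x, p.x);
        lo.y = std::min(lo.y, p.y);
        lo.z = std::min(lo.z, p.z);
    }

    // Integer voxel index (i, j, k) of every point, relative to the AABB corner.
    using Key = std::tuple<std::int64_t, std::int64_t, std::int64_t>;
    std::map<Key, VoxelAcc> voxels;
    for (const Point& p : cloud) {
        const Key key{static_cast<std::int64_t>(std::floor((p.x - lo.x) / leaf)),
                      static_cast<std::int64_t>(std::floor((p.y - lo.y) / leaf)),
                      static_cast<std::int64_t>(std::floor((p.z - lo.z) / leaf))};
        VoxelAcc& acc = voxels[key];
        acc.sx += p.x;
        acc.sy += p.y;
        acc.sz += p.z;
        ++acc.n;
    }

    // One output point (the centroid) per occupied voxel; std::map visits
    // voxels in lexicographic index order.
    std::vector<Point> out;
    out.reserve(voxels.size());
    for (const auto& entry : voxels) {
        const VoxelAcc& acc = entry.second;
        const double n = static_cast<double>(acc.n);
        out.push_back(Point{acc.sx / n, acc.sy / n, acc.sz / n});
    }
    return out;
}
```

Using the centroid rather than the voxel center keeps the output close to the original surface, which is how downsampling can preserve the geometric structure. A larger `leaf` gives fewer output points and less local detail.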

2.3 Statistical Filtering

Given the characteristics of outliers, we can define a point as invalid when the point density around it falls below a certain level. For each point, compute the average distance to its k nearest neighbors. Across the whole cloud these average distances should approximately follow a Gaussian distribution; given its mean μ and standard deviation σ, points whose average distance lies more than 3σ from the mean can be excluded.
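The procedure can be sketched in standard C++ as follows. Assumptions: neighbors are found by brute force (O(n²)) instead of a spatial index such as a k-d tree, σ is the population standard deviation, and only points whose mean distance is too large are rejected (threshold μ + std_mul·σ). `std_mul` plays the role of the multiplier in the text (3 for the 3σ rule) and of `setStddevMulThresh` in the PCL program below. `statisticalFilter` and `dist` are hypothetical names; this is not PCL's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal point type for this sketch (hypothetical stand-in for pcl::PointXYZ).
struct Point {
    double x, y, z;
};

// Euclidean distance between two points.
double dist(const Point& a, const Point& b) {
    const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// 1. For each point, compute the mean distance to its k nearest neighbors.
// 2. Compute the mean mu and standard deviation sigma of those values.
// 3. Keep points whose mean neighbor distance is <= mu + std_mul * sigma.
std::vector<Point> statisticalFilter(const std::vector<Point>& cloud,
                                     std::size_t k, double std_mul) {
    assert(k > 0 && k < cloud.size());
    const std::size_t n = cloud.size();

    std::vector<double> mean_dist(n);
    std::vector<double> d;
    for (std::size_t i = 0; i < n; ++i) {
        d.clear();
        for (std::size_t j = 0; j < n; ++j) {
            if (j != i) d.push_back(dist(cloud[i], cloud[j]));
        }
        // Move the k smallest distances to the front, in sorted order.
        std::partial_sort(d.begin(), d.begin() + static_cast<std::ptrdiff_t>(k), d.end());
        double sum = 0.0;
        for (std::size_t m = 0; m < k; ++m) sum += d[m];
        mean_dist[i] = sum / static_cast<double>(k);
    }

    // Global statistics of the per-point mean distances.
    double mu = 0.0;
    for (double v : mean_dist) mu += v;
    mu /= static_cast<double>(n);
    double var = 0.0;
    for (double v : mean_dist) var += (v - mu) * (v - mu);
    const double sigma = std::sqrt(var / static_cast<double>(n));

    const double threshold = mu + std_mul * sigma;
    std::vector<Point> kept;
    for (std::size_t i = 0; i < n; ++i) {
        if (mean_dist[i] <= threshold) kept.push_back(cloud[i]);
    }
    return kept;
}
```

A lower `std_mul` rejects more points. With a tight cluster and one distant point, the distant point's mean neighbor distance sits far above the threshold and is removed.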

2.4 Conditional Filtering

A conditional filter removes points according to user-defined conditions, somewhat like a piecewise function: points that fall within the specified range are kept, and points outside it are discarded.
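As a rough illustration, a conditional filter can be modeled as a list of predicates that a point must all satisfy (logical AND). This is a hypothetical from-scratch sketch; `Condition` and `conditionalFilter` are names invented here. In PCL the corresponding class is `pcl::ConditionalRemoval`, combined with condition objects such as `pcl::ConditionAnd`.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Minimal point type for this sketch (hypothetical stand-in for pcl::PointXYZ).
struct Point {
    double x, y, z;
};

// A condition decides whether a single point may be kept.
using Condition = std::function<bool(const Point&)>;

// Keeps a point only if it satisfies every condition (logical AND).
std::vector<Point> conditionalFilter(const std::vector<Point>& cloud,
                                     const std::vector<Condition>& conditions) {
    std::vector<Point> kept;
    for (const Point& p : cloud) {
        bool ok = true;
        for (const Condition& cond : conditions) {
            assert(cond && "empty condition");  // calling an empty std::function throws
            if (!cond(p)) {
                ok = false;
                break;
            }
        }
        if (ok) kept.push_back(p);
    }
    return kept;
}
```

For example, the conditions `p.z > 0.0 && p.z < 1.0` and `p.x < 1.0` together keep only points inside that slab; any point violating either one is discarded.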

2.5 Radius Filtering

The radius filter is simpler and cruder than the statistical filter. Draw a circle (a sphere in 3D) of a given radius around each point and count the points that fall inside it. If the count reaches a given threshold, the point is kept; otherwise it is removed. The algorithm runs fast, and after iterating over all points the ones that remain lie in the densest regions, but the radius and the required number of points inside it must be specified manually.
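A brute-force sketch of radius filtering in standard C++, assuming neighbors are counted against the original cloud in a single pass and the point itself is not counted. `radiusFilter` is a hypothetical name; in PCL the two manual parameters correspond to `setRadiusSearch` and `setMinNeighborsInRadius` on `pcl::RadiusOutlierRemoval`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal point type for this sketch (hypothetical stand-in for pcl::PointXYZ).
struct Point {
    double x, y, z;
};

// Keeps a point only if at least `min_neighbors` other points lie within
// distance `radius` of it (brute force, O(n^2)).
std::vector<Point> radiusFilter(const std::vector<Point>& cloud,
                                double radius, std::size_t min_neighbors) {
    assert(radius > 0.0);
    const double r2 = radius * radius;  // compare squared distances, no sqrt needed
    std::vector<Point> kept;
    for (std::size_t i = 0; i < cloud.size(); ++i) {
        std::size_t count = 0;
        for (std::size_t j = 0; j < cloud.size(); ++j) {
            if (i == j) continue;
            const double dx = cloud[i].x - cloud[j].x;
            const double dy = cloud[i].y - cloud[j].y;
            const double dz = cloud[i].z - cloud[j].z;
            if (dx * dx + dy * dy + dz * dz <= r2) ++count;
        }
        if (count >= min_neighbors) kept.push_back(cloud[i]);
    }
    return kept;
}
```

The two parameters interact: a larger radius or a smaller neighbor threshold keeps more points, so both usually need tuning to the density of the scan.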

3. Implementing Statistical Filtering

Each of the five filtering methods listed in Section 2 has a corresponding ready-made filter class in the PCL library, which can be called directly to filter PCD files.

The following is the implementation of statistical filtering (C++ source file statisticalOutlierRemoval.cpp):

    #include <iostream>
    #include <pcl/io/pcd_io.h>
    #include <pcl/point_types.h>
    #include <pcl/filters/statistical_outlier_removal.h> // Statistical filter header file

    // Statistical filter
    int main(int argc, char **argv)
    {
        if (argc < 2)
        {
            std::cerr << "Usage: " << argv[0] << " <input.pcd>" << std::endl;
            return (-1);
        }

        pcl::PointCloud<pcl::PointXYZ>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZ>);
        pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_filtered(new pcl::PointCloud<pcl::PointXYZ>);

        // Define the reader object
        pcl::PCDReader reader;
        // Read the point cloud file (a negative return value means failure)
        if (reader.read<pcl::PointXYZ>(argv[1], *cloud) < 0)
        {
            std::cerr << "Failed to read " << argv[1] << std::endl;
            return (-1);
        }
        std::cerr << "Cloud before filtering: " << std::endl;
        std::cerr << *cloud << std::endl;

        // Create the filter: use the 50 nearest neighbors of each point and a standard
        // deviation multiplier of 1. A point whose mean neighbor distance exceeds the
        // global mean distance by more than one standard deviation is marked as an
        // outlier and removed.
        pcl::StatisticalOutlierRemoval<pcl::PointXYZ> sor; // Create the filter object
        sor.setInputCloud(cloud);                          // Set the point cloud to be filtered
        sor.setMeanK(50);                                  // Number of nearest neighbors used for the statistics
        sor.setStddevMulThresh(1.0);                       // Standard deviation multiplier for the outlier threshold
        sor.filter(*cloud_filtered);                       // Store the result in cloud_filtered

        std::cerr << "Cloud after filtering: " << std::endl;
        std::cerr << *cloud_filtered << std::endl;

        // Save the filtered point cloud (ASCII format)
        pcl::PCDWriter writer;
        writer.write<pcl::PointXYZ>("after_filter.pcd", *cloud_filtered, false);

        // To save the removed outliers instead, invert the filter:
        // sor.setNegative(true);
        // sor.filter(*cloud_filtered);
        // writer.write<pcl::PointXYZ>("1_outliers.pcd", *cloud_filtered, false);

        return (0);
    }

My build environment is Ubuntu 16.04 + ROS Kinetic (which ships with PCL 1.7) + RoboWare (IDE), so I only need to link the library in CMakeLists.txt to use PCL. I strongly recommend RoboWare: it generates the corresponding CMakeLists files automatically, which makes managing libraries convenient and saves a lot of development time. For generality, the version below is for a system where only the PCL library is installed.

The CMakeLists.txt file:

    cmake_minimum_required(VERSION 2.8)
    project(statisticalOutlierRemoval)

    set(CMAKE_BUILD_TYPE "Release")

    find_package(PCL 1.7 REQUIRED)

    include_directories(${PCL_INCLUDE_DIRS})
    link_directories(${PCL_LIBRARY_DIRS})
    add_definitions(${PCL_DEFINITIONS})

    add_executable(statisticalOutlierRemoval statisticalOutlierRemoval.cpp)
    target_link_libraries(statisticalOutlierRemoval ${PCL_LIBRARIES})

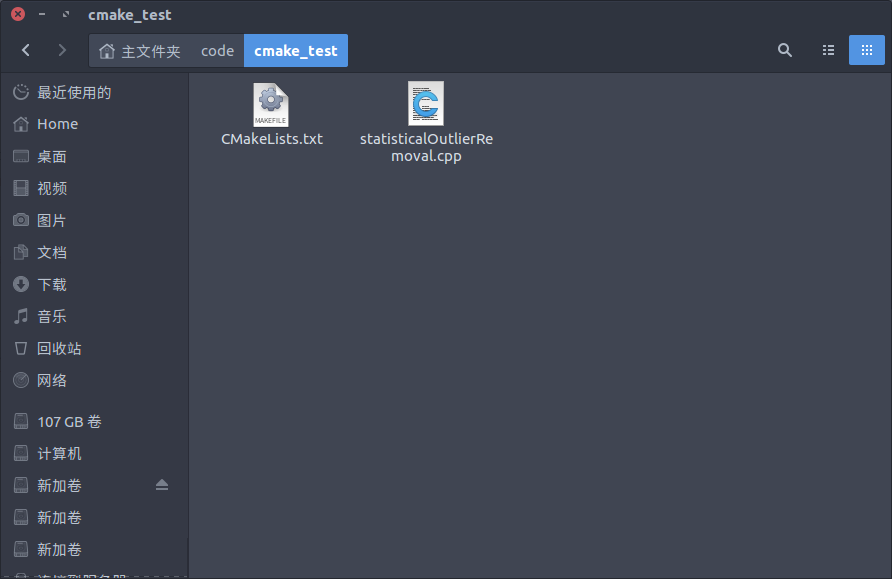

Put the two files (the .cpp source file and CMakeLists.txt) in the same folder.

Then perform the following operations:

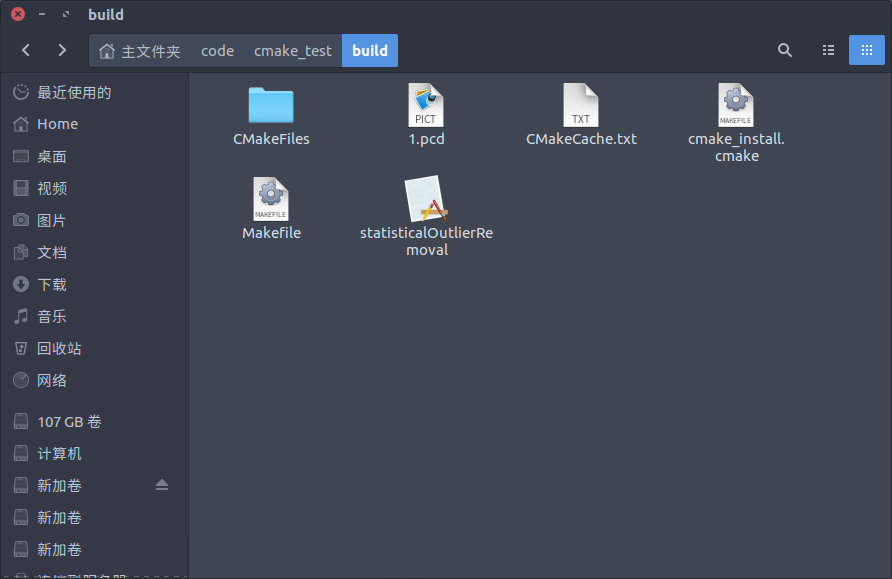

mkdir build

cd build

cmake ..

make

This generates the corresponding executable.

Open a terminal in this folder and run it:

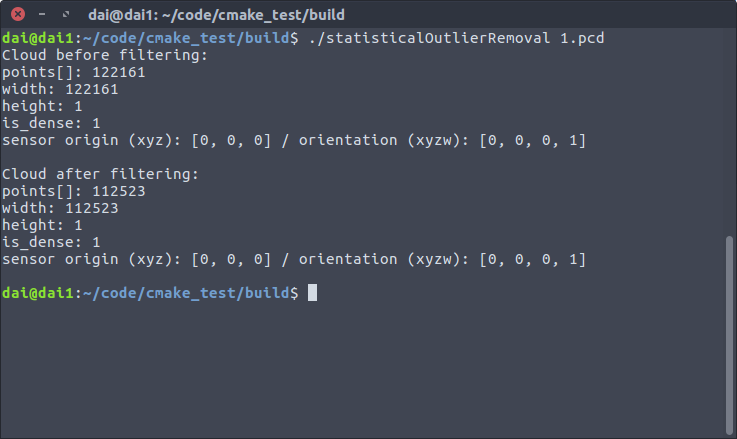

./statisticalOutlierRemoval 1.pcd

The terminal output shows that nearly 10,000 points have been filtered out. Finally, we can visualize the clouds with the following commands (from the same terminal path):

pcl_viewer 1.pcd

pcl_viewer after_filter.pcd

Comparing the two, we can see that statistical filtering removed a large number of discrete noise points from the original cloud. Although the remaining cloud still contains parts we do not need, removing these noise points still helps subsequent processing.

If this article helps you, please give it a like. If you find any mistakes, please point them out so they can be corrected.