- Disable the firewall

systemctl status firewalld
systemctl disable firewalld
systemctl stop firewalld

systemctl is the systemd command-line tool. It is mainly responsible for controlling the systemd system and service manager.

systemd is a collection of system management daemons, tools, and libraries that replaces the System V init process. Its purpose is to centrally manage and configure Linux systems.

In the Linux ecosystem, systemd is deployed in most mainstream distributions; only a few have not adopted it. systemd is usually, but not always, the parent of all other daemons.

- Install etcd and kubernetes

yum install etcd kubernetes

etcd is an open-source, distributed key-value store that provides shared configuration, service registration, and service discovery.

Problem:

The installation reports an error:

Transaction check error:
file /usr/bin/kubectl from install of kubernetes-client-1.5.2-0.7.git269f928.el7.x86_64 conflicts with file from package kubectl-1.1
file /usr/bin/kubelet from install of kubernetes-node-1.5.2-0.7.git269f928.el7.x86_64 conflicts with file from package kubelet-1.10.

Cause: the new packages conflict with kubectl and kubelet packages that are already installed.

Solution: use yum remove to uninstall the conflicting locally installed packages, then run the installation again.
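The names of the conflicting packages can be read straight out of the yum error text. The following is a minimal sketch, not a definitive tool: the helper name `conflicting_packages` is hypothetical, and it assumes each conflict is reported on its own line in the form shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a yum "Transaction check error" message on stdin
# and print the already-installed packages it names, one per line.
conflicting_packages() {
    # Each conflict line ends with "conflicts with file from package <name>".
    sed -n 's/.*conflicts with file from package \([^ ]*\).*/\1/p' | sort -u
}
```

For example, `yum install etcd kubernetes 2>&1 | conflicting_packages` would print the candidates to review before passing them to `yum remove`.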

- Start the services

systemctl start etcd
systemctl start docker
systemctl start kube-apiserver
systemctl start kube-controller-manager
systemctl start kube-scheduler
systemctl start kubelet
systemctl start kube-proxy
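The same start sequence can be wrapped in a small loop that stops at the first failure. This is only a sketch: the function name `start_all` and the `DRY_RUN` switch are assumptions added for illustration, and the service list simply repeats the order used above.

```shell
#!/usr/bin/env bash
# Services in the order used above: etcd and docker first,
# then the control plane, then the node agents.
SERVICES="etcd docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy"

start_all() {
    # With DRY_RUN=1 the commands are only printed (hypothetical preview switch).
    for svc in $SERVICES; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "systemctl start $svc"
        else
            systemctl start "$svc" || { echo "failed to start $svc" >&2; return 1; }
        fi
    done
}
```

Running `DRY_RUN=1 start_all` previews the commands; running `start_all` as root executes them.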

- Create an RC to start the mysql pod

kubectl create -f mysql-rc.yaml

#RC template mysql-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"

Problem:

Kubernetes creates the new RC successfully, but the pods are not generated automatically.

Cause: a permissions issue in the API server's admission control.

Solution: edit /etc/kubernetes/apiserver, remove SecurityContextDeny and ServiceAccount from KUBE_ADMISSION_CONTROL, and restart kube-apiserver.service.
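The edit described above can also be done with a filter instead of a text editor. This is a hedged sketch: the function name `strip_admission_plugins` is hypothetical, and it assumes the KUBE_ADMISSION_CONTROL line holds a comma-separated plugin list inside double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an apiserver config on stdin and write it to stdout
# with SecurityContextDeny and ServiceAccount removed from KUBE_ADMISSION_CONTROL.
strip_admission_plugins() {
    # First expression: plugin followed by a comma (anywhere but the last position).
    # Second expression: plugin in the last position, just before the closing quote.
    sed -E \
        -e '/^KUBE_ADMISSION_CONTROL=/s/(SecurityContextDeny|ServiceAccount),//g' \
        -e '/^KUBE_ADMISSION_CONTROL=/s/,(SecurityContextDeny|ServiceAccount)"/"/'
}
```

One possible way to apply it is to copy /etc/kubernetes/apiserver to a backup file, run `strip_admission_plugins` with the backup as input and the original path as output, and then run `systemctl restart kube-apiserver`.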

After the RC is created successfully, check the status of the RC and the pods:

[root@localhost k8s]# kubectl get rc
NAME      DESIRED   CURRENT   READY     AGE
mysql     1         1         0         22s

[root@localhost k8s]# kubectl get pods
NAME          READY     STATUS              RESTARTS   AGE
mysql-10gsx   0/1       ContainerCreating   0          26s

# The pod is stuck in this state, so inspect its details

[root@localhost k8s]# kubectl describe pod mysql-10gsx
Name:           mysql-10gsx
Namespace:      default
Node:           127.0.0.1/127.0.0.1
Start Time:     Sat, 20 Oct 2018 01:31:50 -0700
Labels:         app=mysql
Status:         Pending
IP:
Controllers:    ReplicationController/mysql
Containers:
  mysql:
    Container ID:
    Image:              docker.io/mysql
    Image ID:
    Port:               3306/TCP
    State:              Waiting
      Reason:           ContainerCreating
    Ready:              False
    Restart Count:      0
    Volume Mounts:      <none>
    Environment Variables:
      MYSQL_ROOT_PASSWORD:      123456
Conditions:
  Type          Status
  Initialized   True
  Ready         False
  PodScheduled  True
No volumes.
QoS Class:      BestEffort
Tolerations:    <none>
Events:
  FirstSeen  LastSeen  Count  From                 SubObjectPath  Type     Reason      Message
  ---------  --------  -----  ----                 -------------  -------- ------      -------
  1d         1m        265    {kubelet 127.0.0.1}                 Warning  FailedSync  Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"
  1d         9s        5786   {kubelet 127.0.0.1}                 Warning  FailedSync  Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

The key detail is: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)

Solution:

/etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt (the path shown in the error above) is a symbolic link, but the target it points to under /etc/rhsm does not exist, so the rhsm packages need to be installed with yum:

yum install *rhsm*

After the installation is complete, execute docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

If the error persists, try the following:

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm
rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem

These two commands generate the /etc/rhsm/ca/redhat-uep.pem file.
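Whether the certificate link now resolves can be checked before retrying the pull. The sketch below uses only standard shell file tests; the function name `check_link` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a path exists, is a symlink whose
# target is missing, or does not exist at all.
check_link() {
    local path="$1"
    if [ -e "$path" ]; then
        echo "ok"          # -e follows symlinks, so the target exists
    elif [ -L "$path" ]; then
        echo "dangling"    # the symlink exists but its target does not
    else
        echo "missing"
    fi
}
```

For instance, `check_link /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt` printing `dangling` would correspond to the "no such file or directory" error described above.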

If this succeeds, the pull produces the following output:

[root@localhost]# docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest
Trying to pull repository registry.access.redhat.com/rhel7/pod-infrastructure ...
latest: Pulling from registry.access.redhat.com/rhel7/pod-infrastructure
26e5ed6899db: Pull complete
66dbe984a319: Pull complete
9138e7863e08: Pull complete
Digest: sha256:92d43c37297da3ab187fc2b9e9ebfb243c1110d446c783ae1b989088495db931
Status: Downloaded newer image for registry.access.redhat.com/rhel7/pod-infrastructure:latest

# Look at the status again
[root@localhost k8s]# kubectl get pods
NAME          READY     STATUS    RESTARTS   AGE
mysql-10gsx   1/1       Running   0          1d
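When there are many pods, the STATUS column can be filtered instead of read by eye. A minimal sketch, assuming the five-column `kubectl get pods` layout shown above; the function name `not_running` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin and print
# the name and status of every pod that is not in the Running state.
not_running() {
    # NR > 1 skips the header row; column 3 is STATUS.
    awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}
```

Typical use would be `kubectl get pods | not_running`; empty output means every listed pod is Running.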

Delete operation

[root@cent7-2 docker]# kubectl delete -f mysql-rc.yaml
replicationcontroller "mysql" deleted
[root@cent7-2 docker]# kubectl get rc
No resources found.
[root@cent7-2 docker]# kubectl get pods
NAME          READY     STATUS        RESTARTS   AGE
mysql-hgkwr   0/1       Terminating   0          17m
[root@cent7-2 docker]# kubectl delete po mysql-hgkwr
pod "mysql-hgkwr" deleted

# Force-delete pods
kubectl delete pods --all --grace-period=0 --force

- Create the svc

#svc template mysql-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql

[root@localhost k8s]# kubectl create -f mysql-svc.yaml
service "mysql" created
[root@localhost k8s]# kubectl get svc
NAME         CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
kubernetes   10.254.0.1       <none>        443/TCP    1d
mysql        10.254.248.175   <none>        3306/TCP   42s

Notice that the mysql service has been assigned a Cluster IP of 10.254.248.175, which is a virtual address. Pods created afterwards in the Kubernetes cluster can reach the service through this Cluster IP and port 3306.
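The Cluster IP can be picked out of the `kubectl get svc` listing by service name. A small sketch, assuming the column layout shown above (NAME first, CLUSTER-IP second); the function name `cluster_ip` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get svc` output on stdin and print
# the CLUSTER-IP column for the service named in $1.
cluster_ip() {
    awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}
```

For example, `kubectl get svc | cluster_ip mysql` would print the address that other pods use together with port 3306.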

- Create the web containers

#docker search tomcat-app

#docker images docker.io/kubeguide/tomcat-app

#docker pull docker.io/kubeguide/tomcat-app:v1

vi myweb-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 5
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: kubeguide/tomcat-app:v1
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: 'mysql'
        - name: MYSQL_SERVICE_PORT
          value: '3306'

#Note that the Tomcat container in the RC above references MYSQL_SERVICE_HOST=mysql,

#and "mysql" is exactly the name of the MySQL service created earlier.

Result

[root@localhost k8s]# kubectl create -f myweb-rc.yaml
replicationcontroller "myweb" created
[root@localhost k8s]# kubectl get pods
NAME          READY     STATUS    RESTARTS   AGE
mysql-10gsx   1/1       Running   0          1d
myweb-1t5h1   1/1       Running   0          33s
myweb-7wfx9   1/1       Running   0          33s
myweb-96bw7   1/1       Running   0          33s
myweb-mr3cg   1/1       Running   0          33s
myweb-v0sp8   1/1       Running   0          33s
[root@localhost k8s]# kubectl get rc
NAME      DESIRED   CURRENT   READY     AGE
mysql     1         1         1         1d
myweb     5         5         5         1m

Create the corresponding service

[root@localhost k8s]# vi myweb-svc.yaml

[root@localhost k8s]# cat myweb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30001
  selector:
    app: myweb

The two attributes type: NodePort and nodePort: 30001 indicate that this service is exposed to the external network in NodePort mode.

A Service's virtual IP belongs to an internal network virtualized by Kubernetes and cannot be addressed from outside. Some services, such as a web front end, must be reachable externally, so a layer of forwarding from the external network to the internal network is needed. Kubernetes provides NodePort, LoadBalancer, and Ingress for this.

NodePort: the earlier Guestbook example already demonstrated its use. With NodePort, Kubernetes exposes a port (the nodePort) on every Node, and the external network can reach the back-end Service through [NodeIP]:[NodePort] on any Node.
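In `kubectl get svc` output, a NodePort service shows its ports as `<port>:<nodePort>/<protocol>`. The sketch below extracts the node port from such a value; the function name `node_port` is hypothetical, and the format is assumed from the listing in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a PORT(S) value such as "8080:30001/TCP",
# print the node port ("30001"). Returns non-zero if there is no node port.
node_port() {
    case "$1" in
        *:*/*)
            local rest="${1#*:}"    # drop "<port>:"
            echo "${rest%%/*}"      # drop "/<protocol>"
            ;;
        *)
            return 1                # e.g. "3306/TCP" has no node port
            ;;
    esac
}
```

The result can then be combined with any Node's IP, for example `curl -I "http://127.0.0.1:$(node_port 8080:30001/TCP)/"`.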

[root@localhost k8s]# kubectl create -f myweb-svc.yaml
service "myweb" created
[root@localhost k8s]# kubectl get svc
NAME         CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
kubernetes   10.254.0.1       <none>        443/TCP          1d
mysql        10.254.248.175   <none>        3306/TCP         12h
myweb        10.254.183.138   <nodes>       8080:30001/TCP   34s

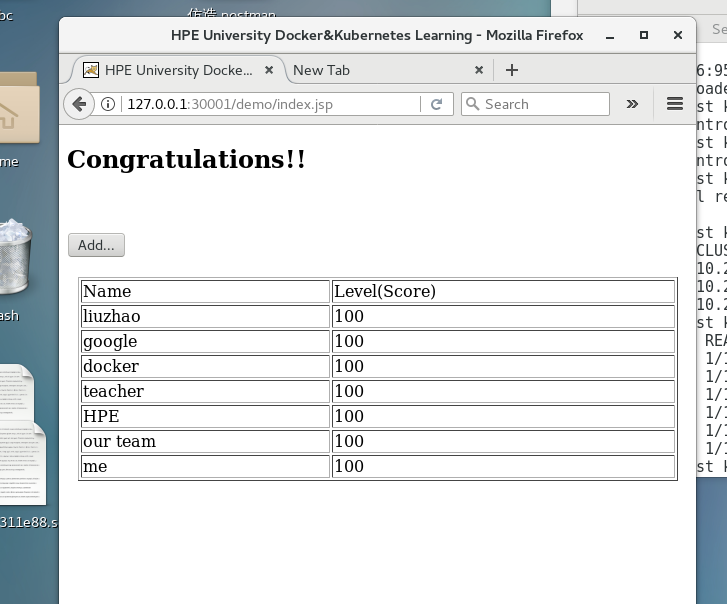

The Tomcat page can be opened on port 30001 of the machine's own IP, or at 127.0.0.1:30001.

However, opening 127.0.0.1:30001/demo shows a JDBC database connection error.

[root@localhost ~]# kubectl get ep
NAME         ENDPOINTS                                                     AGE
kubernetes   192.168.80.128:6443                                           9h
mysql        172.17.0.7:3306                                               9h
myweb        172.17.0.2:8080,172.17.0.3:8080,172.17.0.4:8080 + 2 more...   9h
[root@localhost ~]# kubectl exec -ti myweb-qrjsd -- /bin/bash
root@myweb-qrjsd:/usr/local/tomcat# echo $MYSQL_SERVICE_HOST
mysql
root@myweb-qrjsd:/usr/local/tomcat# echo "172.17.0.7 mysql" >> /etc/hosts
root@myweb-qrjsd:/usr/local/tomcat#

The /etc/hosts entry above is only a workaround inside a single pod. To solve the problem properly, the cluster needs a DNS server. Please refer to.
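Until a DNS server is available, the hosts line used in the workaround can be derived from the `kubectl get ep` listing rather than typed by hand. This is a sketch only: the function name `hosts_entry` is hypothetical, it uses just the first endpoint of the service, and the pod IP it prints can change whenever the pod is recreated.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get ep` output on stdin and print an
# /etc/hosts line ("<ip> <name>") for the service named in $1.
hosts_entry() {
    awk -v name="$1" 'NR > 1 && $1 == name {
        split($2, eps, ",")          # ENDPOINTS may list several ip:port pairs
        sub(/:[0-9]+$/, "", eps[1])  # strip the port from the first one
        print eps[1], name
    }'
}
```

For example, `kubectl get ep | hosts_entry mysql` prints a line in the same form as the one appended to /etc/hosts above.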

Success. That's a wrap, time to finish work and go home for dinner.