Principle

If anything here is unfamiliar, look it up online (for example on Baidu).

- YOLO comes from the Darknet object detection framework

- The model is trained on the COCO dataset and can detect 80 object categories

- This example uses YOLOv3

https://pjreddie.com/darknet/yolo/

Input data corresponding to each network model

The OpenCV samples ship a file that lists, for each model, the binary weights file name, the network description (config) file name, the mean-subtraction values, the scale factor, the input size, the class label file name, the RGB channel order, the typical application scenario, and other information.

Link address: https://github.com/opencv/opencv/blob/master/samples/dnn/models.yml

    ################################################################################
    # Object detection models.
    ################################################################################
    . . .
    # YOLO object detection family from Darknet (https://pjreddie.com/darknet/yolo/)
    # Might be used for all YOLOv2, TinyYolov2 and YOLOv3
    yolo:
      model: "yolov3.weights"
      config: "yolov3.cfg"
      mean: [0, 0, 0]
      scale: 0.00392
      width: 416
      height: 416
      rgb: true
      classes: "object_detection_classes_yolov3.txt"
      sample: "object_detection"

    tiny-yolo-voc:
      model: "tiny-yolo-voc.weights"
      config: "tiny-yolo-voc.cfg"
      mean: [0, 0, 0]
      scale: 0.00392
      width: 416
      height: 416
      rgb: true
      classes: "object_detection_classes_pascal_voc.txt"
      sample: "object_detection"
    . . .
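To make the `mean`, `scale`, and `rgb` fields concrete, here is a minimal sketch in plain C++ of the per-pixel arithmetic they describe: swap BGR to RGB, subtract the mean, multiply by the scale, and store the result channel by channel. The `PreprocessParams` struct and the `toBlob` function are hypothetical names invented for this illustration; they are not part of OpenCV. Resizing to 416x416 is left out. In the real program `blobFromImage` does this work, and this sketch only approximates the idea.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for the preprocessing fields of one models.yml entry.
struct PreprocessParams {
    std::array<float, 3> mean;  // per-channel mean, in output channel order
    float scale;                // multiplier applied after mean subtraction
    bool swap_rb;               // true when the model expects RGB input
};

// Convert an interleaved 8-bit BGR image (H x W x 3) into a planar float
// blob laid out as 1 x 3 x H x W. Resizing is intentionally left out.
std::vector<float> toBlob(const std::vector<std::uint8_t>& bgr,
                          std::size_t height, std::size_t width,
                          const PreprocessParams& p)
{
    assert(bgr.size() == height * width * 3);
    std::vector<float> blob(3 * height * width);
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            for (std::size_t c = 0; c < 3; ++c) {
                // With swap_rb, output channel 0 reads input channel 2 (R).
                std::size_t src_c = p.swap_rb ? 2 - c : c;
                float v = static_cast<float>(bgr[(y * width + x) * 3 + src_c]);
                blob[(c * height + y) * width + x] = (v - p.mean[c]) * p.scale;
            }
        }
    }
    return blob;
}
```

With `mean: [0, 0, 0]` and a scale of `1 / 255` (which `0.00392` approximates), every pixel value is mapped into the range 0 to 1, and `rgb: true` corresponds to `swap_rb` here.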

Network input and output

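- Input layer: [N x 3 x H x W], channel order RGB, mean 0, scale factor 1 / 255

- Multiple output layers; each output row has the structure [center_x, center_y, width, height, confidence, class scores...], with the box values normalized to the range 0 to 1

- Duplicate boxes are removed with NMS (non-maximum suppression): because there are multiple output layers, the same object may be detected more than once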
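The output structure above can be sketched as a small decoding routine. This is a plain C++ illustration under the assumption that each row holds normalized `[center_x, center_y, width, height, confidence, class scores...]` values; `Detection` and `decodeRow` are hypothetical names introduced here, not OpenCV API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical result type: a pixel-space box plus the winning class.
struct Detection {
    int left, top, width, height;
    int class_id;
    float score;
};

// Decode one output row: [cx, cy, w, h, confidence, class scores...].
// Returns true and fills `out` when the best class score exceeds `threshold`.
bool decodeRow(const std::vector<float>& row, int img_w, int img_h,
               float threshold, Detection& out)
{
    assert(row.size() > 5);
    // The class scores start at column 5; pick the highest one.
    auto best = std::max_element(row.begin() + 5, row.end());
    if (*best <= threshold) return false;

    // Box values are normalized, so scale them by the image size.
    int cx = static_cast<int>(row[0] * img_w);
    int cy = static_cast<int>(row[1] * img_h);
    int w = static_cast<int>(row[2] * img_w);
    int h = static_cast<int>(row[3] * img_h);

    // Convert from center-based to top-left-based coordinates.
    out = {cx - w / 2, cy - h / 2, w, h,
           static_cast<int>(std::distance(row.begin() + 5, best)), *best};
    return true;
}
```

For a 640x480 image, a row starting with `0.5, 0.5, 0.25, 0.5` describes a 160x240 box whose top-left corner is at (240, 120).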

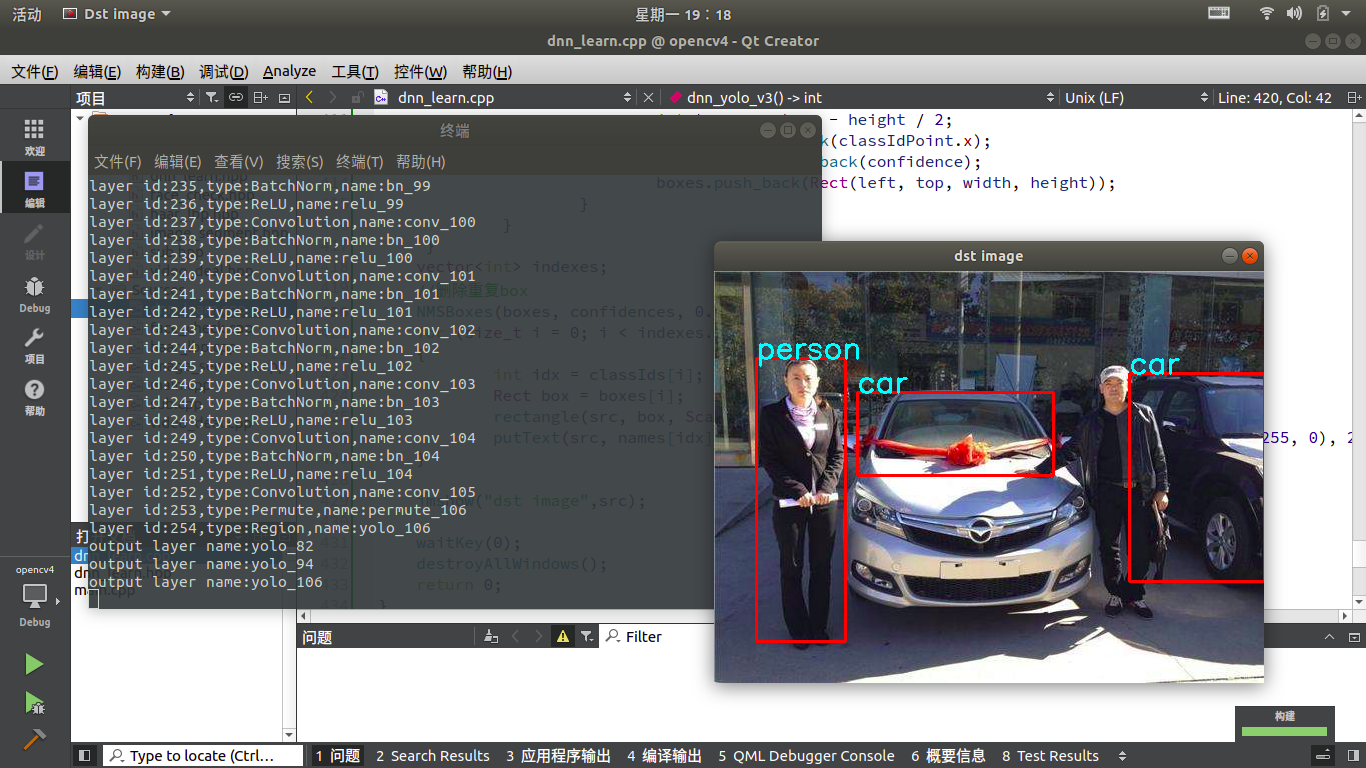

Code

    #include <opencv2/opencv.hpp>
    #include <opencv2/dnn.hpp>
    #include <cstdio>
    #include <cstdlib>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>

    using namespace std;
    using namespace cv;
    using namespace cv::dnn;

    #define PIC_PATH "/work/opencv_pic/"
    #define PIC_NAME "pedestrian.png"

    // Network input size, as listed in models.yml
    const int input_width = 416;
    const int input_height = 416;

    string weight_file = "/work/opencv_dnn/yolov3/yolov3.weights";
    string cfg_file = "/work/opencv_dnn/yolov3/yolov3.cfg";
    string label_map = "/work/opencv_dnn/yolov3/object_detection_classes_yolov3.txt";

    vector<string> readLabelMaps(void);

    int main(void)
    {
        string pic = string(PIC_PATH) + string(PIC_NAME);
        Mat src = imread(pic);
        if (src.empty()) {
            printf("pic read err\n");
            return -1;
        }

        // Create and load the neural network
        Net net = readNetFromDarknet(cfg_file, weight_file);
        if (net.empty()) {
            printf("read darknet model data err\n");
            return -1;
        }

        // Set the computation backend and target
        net.setPreferableBackend(DNN_BACKEND_OPENCV);
        net.setPreferableTarget(DNN_TARGET_CPU);

        // Print information about each layer
        vector<string> layers_names = net.getLayerNames();
        for (size_t i = 0; i < layers_names.size(); i++) {
            int id = net.getLayerId(layers_names[i]);
            auto layer = net.getLayer(id);
            printf("layer id:%d,type:%s,name:%s\n", id, layer->type.c_str(), layer->name.c_str());
        }

        // Get the names of all output layers
        vector<string> outNames = net.getUnconnectedOutLayersNames();
        for (size_t i = 0; i < outNames.size(); i++) {
            printf("output layer name:%s\n", outNames[i].c_str());
        }

        // Convert the image to a blob: scale by 1/255, resize, swap BGR to RGB, no crop
        Mat blobimage = blobFromImage(src, 0.00392, Size(input_width, input_height), Scalar(), true, false);

        // Feed the blob to the network
        net.setInput(blobimage);

        // YOLO has multiple output layers; run a forward pass on all of them
        vector<Mat> outs;
        net.forward(outs, outNames);

        // Box, class index, and confidence of each candidate detection
        vector<Rect> boxes;
        vector<int> classIds;
        vector<float> confidences;

        // Load the class names
        vector<string> names = readLabelMaps();

        // Parse the results
        for (size_t i = 0; i < outs.size(); i++) {
            // Start parsing each output blob
            float* data = (float*)outs[i].data;
            // Parse each row of this output layer
            for (int j = 0; j < outs[i].rows; j++, data += outs[i].cols) {
                // Columns 0-4 hold the box and confidence; the class scores start at column 5
                Mat scores = outs[i].row(j).colRange(5, outs[i].cols);
                Point classIdPoint;  // Position of the highest score
                double confidence;   // Value of the highest score
                minMaxLoc(scores, 0, &confidence, 0, &classIdPoint);
                if (confidence > 0.5) {
                    int centerx = (int)(data[0] * src.cols);
                    int centery = (int)(data[1] * src.rows);
                    int width = (int)(data[2] * src.cols);
                    int height = (int)(data[3] * src.rows);
                    int left = centerx - width / 2;
                    int top = centery - height / 2;
                    classIds.push_back(classIdPoint.x);
                    confidences.push_back((float)confidence);
                    boxes.push_back(Rect(left, top, width, height));
                }
            }
        }

        // Remove duplicate boxes; indexes receives the positions of the boxes to keep
        vector<int> indexes;
        NMSBoxes(boxes, confidences, 0.5, 0.5, indexes);
        for (size_t i = 0; i < indexes.size(); i++) {
            int idx = indexes[i];  // Look up through the NMS result, not the loop counter
            Rect box = boxes[idx];
            rectangle(src, box, Scalar(0, 0, 255), 2, 8);
            putText(src, names[classIds[idx]].c_str(), box.tl(), FONT_HERSHEY_SIMPLEX, 1, Scalar(255, 255, 0), 2, 8);
        }

        imshow("dst image", src);
        waitKey(0);
        destroyAllWindows();
        return 0;
    }

    // Read the class names, one per line, skipping empty lines
    vector<string> readLabelMaps()
    {
        vector<string> labelNames;
        std::ifstream fp(label_map);
        if (!fp.is_open()) {
            printf("could not open file...\n");
            exit(-1);
        }
        string one_line;
        while (std::getline(fp, one_line)) {
            if (one_line.length()) {
                labelNames.push_back(one_line);
            }
        }
        fp.close();
        return labelNames;
    }
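The `NMSBoxes` call in the listing hides the suppression logic, so here is a minimal sketch of greedy non-maximum suppression in plain C++. The names `Box`, `iou`, and `greedyNms` are hypothetical and exist only for this illustration. The sketch shows the general technique (sort by score, keep a box unless it overlaps an already kept box too much) and is not a copy of OpenCV's implementation, which may differ in details.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical box type: top-left corner plus size.
struct Box { float left, top, width, height; };

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b)
{
    float x1 = std::max(a.left, b.left);
    float y1 = std::max(a.top, b.top);
    float x2 = std::min(a.left + a.width, b.left + b.width);
    float y2 = std::min(a.top + a.height, b.top + b.height);
    float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    float uni = a.width * a.height + b.width * b.height - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Return the indices of the boxes that survive suppression.
std::vector<int> greedyNms(const std::vector<Box>& boxes,
                           const std::vector<float>& scores,
                           float score_thr, float iou_thr)
{
    assert(boxes.size() == scores.size());
    // Visit boxes from highest to lowest score.
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> keep;
    for (int idx : order) {
        if (scores[idx] < score_thr) continue;  // too weak to consider
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[idx], boxes[k]) > iou_thr) {
                suppressed = true;  // overlaps a stronger kept box
                break;
            }
        }
        if (!suppressed) keep.push_back(idx);
    }
    return keep;
}
```

The returned values are indices into the original `boxes` vector, not the boxes themselves. The same holds for the `indexes` vector filled by `NMSBoxes`, so a drawing loop should read `boxes[indexes[i]]` and `classIds[indexes[i]]` rather than `boxes[i]` and `classIds[i]`.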

Result